-

PDF

- Split View

-

Views

-

Cite

Cite

Bo Chen, Ziwei Xie, Jiezhong Qiu, Zhaofeng Ye, Jinbo Xu, Jie Tang, Improved the heterodimer protein complex prediction with protein language models, Briefings in Bioinformatics, Volume 24, Issue 4, July 2023, bbad221, https://doi.org/10.1093/bib/bbad221

Close - Share Icon Share

Abstract

AlphaFold-Multimer has greatly improved the protein complex structure prediction, but its accuracy also depends on the quality of the multiple sequence alignment (MSA) formed by the interacting homologs (i.e. interologs) of the complex under prediction. Here we propose a novel method, ESMPair, that can identify interologs of a complex using protein language models. We show that ESMPair can generate better interologs than the default MSA generation method in AlphaFold-Multimer. Our method results in better complex structure prediction than AlphaFold-Multimer by a large margin (+10.7% in terms of the Top-5 best DockQ), especially when the predicted complex structures have low confidence. We further show that by combining several MSA generation methods, we may yield even better complex structure prediction accuracy than Alphafold-Multimer (+22% in terms of the Top-5 best DockQ). By systematically analyzing the impact factors of our algorithm we find that the diversity of MSA of interologs significantly affects the prediction accuracy. Moreover, we show that ESMPair performs particularly well on complexes in eucaryotes.

INTRODUCTION

Most proteins function in the form of protein complexes [1–5]. Consequently, obtaining accurate protein complex structures is vital to understanding how a protein functions at the atom level. Experimental methods, such as X-ray crystallography and cryo-electron microscopy, are costly and low-throughput, and require intensive efforts to prepare samples for structure determination. The computational methods, termed as protein complex prediction (PCP) or protein–protein docking, is an attractive alternative for solving complex structures. PCP takes sequences and/or the unbound structures of individual protein chains as inputs and then predicts the bound complex structures. Traditional computational methods often rely on the global search paradigms, such as fast-Fourier transform-based methods like ClusPro [6], PIPER [7] and ZDOCK [8] and Monte Carlo sampling-based methods like RosettaDock [9], which have been widely used in practice. These methods exhaustively search the conformation space of a complex, and optimize score functions to obtain the final structures. Since the conformation space is large, these methods have to make restrictive constraints on the search space in order to obtain results within a reasonable amount of time. Typical constraints include reducing the search resolutions, making the input monomers rigid bodies and using score functions that can be quickly evaluated [7, 8]. As a result, global search methods have relatively low prediction accuracy and are used with more computationally intensive local refinement methods to obtain higher resolution predictions [10]. To date, PCP is still a fundamental and long-standing challenge in computational structural biology [11, 12]. Various methods have been proposed for PCP, but with limited accuracy [6, 7, 13–15]. When only sequences are given as inputs, PCP is even harder because the unbound structures of individual chains and auxiliary information on the complex interfaces are unavailable.

In the last decades, deep learning has enabled substantial progress in quite a few computational structural biology tasks, such as protein contact [16–18], tertiary structure prediction [19–21] and cryo-electron microscopy structure determination [22, 23]. Among these, co-evolution-analysis-based contact prediction [21, 24, 25] and structure prediction [26, 27] have made substantial progress and demonstrated state-of-the-act accuracy for monomers. These methods utilize the co-evolutionary information hidden in MSA to infer inter-residue interactions or three-dimensional structures of the targets. AlphaFold2 is the representative method, which has showed unparalleled accuracy in CASP14 [19]. Recently, AlphaFold-Multimer [28], a derived version of AlphaFold2 for multimers, significantly outperforms prior PCP systems [6, 26, 27]. However, compared with the accuracy of AlphaFold2 [19] on folding monomers, the accuracy of AlphaFold-Multimer on predicting the protein complex structures is far from satisfactory. Its success rate is around 70% and the mean DockQ score is around 0.6 (medium quality judged by DockQ) [27]. The most important input feature to AlphaFold-Multimer is the multiple sequence alignment (MSA) [26, 27]. Compared with AlphaFold2 [19], which takes the MSA of a single protein as the input, AlphaFold-Multimer needs to build an MSA of interologs for the protein complex structure prediction. However, how to construct such an MSA is still an open problem for heteromers. It requires the identification of interacting homologs in the MSAs of constituent single chains, which may be challenging since one species may have multiple sequences similar to the target sequence (paralogs). Several algorithms have been proposed to identify putative interologs from genome data, such as profiling co-evolved genes [29], and comparing phylogenetic trees[30]. Genome co-localization and species information are two commonly used heuristics to form interologs for co-evolution-based complex contact and structure prediction [28, 31]. Genome co-localization is based on the observation that, in bacteria, many interacting genes are coded in operons [32, 33] and are co-transcribed to perform their functions. However, this rule does not perform well for complexes in eukaryotes with a large number of paralogs, since it becomes more difficult to disambiguate correct interologs [31, 34]. The other phylogeny-based method for identifying interologs is first proposed in ComplexContact [31] and later similar ideas are adopted by AlphaFold-Multimer. This method first identifies groups of paralogs (sequences of the same species) from the MSA of each chain, then ranks the paralogs based on their sequence similarity to their corresponding primary chain and finally pairs sequences of the same species and with the same rank together. However, they are all hand-crafted approaches which merely take effects on the specific domains. In this paper, we instead investigate general and automatic algorithms for constructing MSAs of interologs for heterodimers effectively.

Representation learning via pre-training techniques has been used in different applications [37–40]. Inspired by this, protein language models [41–43] (PLMs) have surged as the main regime for protein representation learning built on a large amount of protein sequences, which benefits downstream tasks, such as contact prediction [18, 42], remote homology detection [44, 45] and mutation effect prediction [46]. PLMs can comprehensively capture the biological constraints and co-evolutionary information encoded in the sequence, which is a plausible interpretation for their impressive performance on various downstream tasks than canonical methods relying on dedicated hand-crafted traits. To this, a natural question arises: Can we leverage the co-evolutionary information featured by PLMs to build effective interologs?

In this paper, we mainly focus on ab initio protein complex structure prediction, i.e. predicting the complex structure without prior information on the binding interfaces of the target complex. To the best of our knowledge, we are the first to propose a simple yet effective MSA pairing algorithm, ESMPair, that uses the immediate output from PLMs to form joint MSAs, i.e. MSA of interologs; in particular, we leverage column-wise attention scores from ESM-MSA-1b [42] to identify and pair homologs from MSAs of constituent single chains. We conduct extensive experiments on three test sets, i.e. pConf70, pConf80 and DockQ49. Compared with previous methods, ESMPair achieves state-of-the-art structure prediction accuracy on heterodimers (+10.7%, +7.3%, and +3.7% in terms of the Top-5 best DockQ score over AlphaFold-Multimer on three test sets, respectively). Moreover, we find out that the ensemble strategies, which combine ESMPair with other MSA pairing methods, significantly improve the structure prediction accuracy over the standard single strategy. We further analyze the performance of complexes from eukaryotes, bacteria and archaea, and find that ESMPair performs the best on eukaryotes for which identifying interologs is quite difficult [31, 34]. Most strikingly, on a few targets where one of the constituent chains is from eukaryotes and the other is from bacteria, ESMPair considerably outperforms other baselines (+25% in overall performance over AlphaFold-Multimer), which strongly demonstrates that the PLM-enhanced MSA pairing method is robust for targets from different superkingdoms. Then we exposit that the diversity of interologs has a significant positive correlation with the prediction accuracy. Lastly, we explore other approaches that utilize the output of ESM-MSA-1b. For example, we take the cosine-similarity score between the sequence embeddings as the metric to build interologs, which performs on par with the default protocol used in Alphafold-Multimer. Generally, ESMPair is the first simple yet effective algorithm that incorporates the strength of PLMs into tackling the issues of identifying MSA of interologs. We believe ESMPair will facilitate the fields of protein structure prediction which highly resorts to the co-evolution information hidden in MSA.

RESULTS

In this section, we first briefly outline the framework of ESMPair for PCP. Then, we discuss how our proposed method has a better complex prediction accuracy than previous MSA pairing methods. We find that the ensemble strategy showcases better performance than the default single strategy. We further quantitatively analyze several key factors and hyperparameters that may impact the performance of our method, and also explore the capability of different measurements to distinguish the acceptable predictions from the unacceptable ones. Finally, we compare the performance between ESMPair and AlphaFold-Multimer on CASP15 heteromer.

ESMPair overview

The overall framework of ESMPair is illustrated in Figure 1 with the details in Methods. In complex structure prediction, predictors such as AlphaFold-Multimer uses inter-chain co-evolutionary signals by pairing sequences between MSA of constituent single chains of the query complex. Formally, given a query heterodimer, we obtain individual MSAs of its two constituent chains, denoted as |$M_{1} \in \mathcal{A}^{N_{1} \times C_{1}}$| and |$\ M_{2} \in \mathcal{A}^{N_{2} \times C_{2}}$|, where |$\mathcal{A}$| is the alphabet used by PLM, |$N_{1}$| and |$N_{2}$| are the number of the sequences in MSAs |$M_{1}$| and |$M_{2}$| and |$C_{1}$| and |$C_{2}$| are the sequence length. The MSA pairing pipeline aims at designing a matching or an injection |$\pi : [N_{1}] \to [N_{2}]$| between MSAs from each chain to build the MSA of interologs, dubbed as |$M_{\pi } \in \mathcal{A}^{N \times (C_{1} + C_{2})}$|, where |$N$| is the number of the sequence in the joint MSA. In practice, the MSA of interologs |$M_{\pi }$| is a collection of the concatenated sequence |$\{\text{concat}(M_{1}[i], M_{2}[\pi (i)]): i \in \mathcal{P}\}$|, where |$\mathcal{P}$| is the indices of the sequences from |$M_{1}$| that can be paired with any sequences from |$M_{2}$| according to the matching pattern |$\pi $|. Then MSA of interologs is taken by predictors as input to predict the structure of the query heterodimer. Our aim is to leverage the superiority of PLMs to explore an effective matching strategy |$\pi $| that facilities the protein complex structure prediction.

![Schematic illustration of ESMPair that builds interologs as the input to AlphaFold-Multimer. Given a pair of query sequences as input: (1) we first search the UniProt database [35] with JackHMMER [36] to generate the MSA for each query sequence, (2) sequences of the same taxonomy rank are grouped into the same cluster, (3) ESM-MSA-1b is applied to estimate the column attention score (ColAttn_score) between each sequence homolog of MSA with the query sequence. (4) One interolog is obtained by directly concatenating two matched sequence homologs. We match two sequence homologs of the same taxonomy group with similar attention scores from the two query sequences (5) AlphaFold-Multimer takes the interolog MSA as input to predict the complex structure.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/bib/24/4/10.1093_bib_bbad221/1/m_bbad221f1.jpeg?Expires=1749128854&Signature=C0Ba0pmewzgEATM65wmK2ZomCbzgvSzZ9aPJ1gZbVUOcwhEXhhKpPjbXrhQ2PzLyzfOrnFVuT3pdnIHZIf--TT7uvOLXYQ1R0bgylb1z2Ly41mR8TtgjRtmarpYhohIFlJPcIteXQId9vGQmflk1tO5xcQxINPG~y8FqDVMyH0HmFzic6N~PwtzauqpoWEYdNyJQEIG2nQpN856IbZYm5X-Qi6Ebk8nmPtFd1tHCQQyVF7RHQldHUtcftVAgEl~cENqFKtA7YKOsBw0OZ9ORddWy5jjvZ55Z0kLIBL2wTx~oWvbfAxhTzboXFOSPlNjS-FU0l7pLG4nMJivzsha5CA__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Schematic illustration of ESMPair that builds interologs as the input to AlphaFold-Multimer. Given a pair of query sequences as input: (1) we first search the UniProt database [35] with JackHMMER [36] to generate the MSA for each query sequence, (2) sequences of the same taxonomy rank are grouped into the same cluster, (3) ESM-MSA-1b is applied to estimate the column attention score (ColAttn_score) between each sequence homolog of MSA with the query sequence. (4) One interolog is obtained by directly concatenating two matched sequence homologs. We match two sequence homologs of the same taxonomy group with similar attention scores from the two query sequences (5) AlphaFold-Multimer takes the interolog MSA as input to predict the complex structure.

ESMPair outperforms other MSA pairing methods on heterodimer predictions

Overall evaluation. For each test target we predict five 3D structures using Alphafold-Multimer’s five models and then report the average of Top-|$k$| (k=1, 5) Best DockQ score of the predicted structures and the corresponding success rate (SR) in Table 1. Our method outperforms the other methods. To be specific, our method outperforms the AF-Multimer’s default MSA pairing strategy on all three test sets (0.259 versus 0.234 on pConf70, 0.423 versus 0.406 on pConf80 and 0.265 versus 0.242 on DockQ49, in term of Top-5 DockQ score). Our experimental results confirm that our proposed column-wise-attention-based MSA pairing method, ESMPair, is better than (1) the sequence similarity-based method used in AF-Multimer and (2) the cosine similarity-based method based on the mixed noisy residue embedding, i.e. ESMPair(InterLocalCos), ESMPair(InterGlobalCos) and ESMPair(IntraCos). Hereinafter, we abbreviate them as IntraLocalCos, InterGlobalCos and InterCos.

DockQ scores and success rate of PLM-enhanced pairing methods and baselines. We report the average of Top-5 Best DockQ score, Top-1 Best DockQ score and Success Rate (DockQ|$\geq $|0.23) (%) on pConf70, DockQ49and pConf80 test sets. For one test target, we predicted five different structures using the five AlphaFold-Multimer models. Subscript in red represents the performance gain of our method over the default MSA pairing strategy in Alphafold-Multimer (%). PV is short for the P-values from paired single-tail Student’s t-test between ESMPair and the compared baselines based on the Top-1 Best DockQ score.

| Methods . | pConf70 . | DockQ49 . | pConf80 . | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Top5 . | Top1 . | SR . | PV . | Top5 . | Top1 . | SR . | PV . | Top5 . | Top1 . | SR . | PV . |

| Non-Pairing Methods | ||||||||||||

| Block | 0.199 | 0.179 | 30.4 | 0.039 | 0.212 | 0.194 | 49.0 | 0.002 | 0.351 | 0.319 | 51.2 | 0.001 |

| Baseline Pairing Methods | ||||||||||||

| Genome | 0.215 | 0.182 | 33.7 | 0.042 | 0.219 | 0.195 | 49.0 | 0.002 | 0.377 | 0.346 | 54.7 | 0.030 |

| AF-Multimer | 0.234 | 0.203 | 42.4 | 0.048 | 0.247 | 0.219 | 58.0 | 0.003 | 0.408 | 0.369 | 62.5 | 0.101 |

| PLM-enhanced Pairing Methods | ||||||||||||

| InterLocalCos | 0.218 | 0.180 | 33.7 | 0.007 | 0.236 | 0.210 | 52.3 | 0.002 | 0.389 | 0.353 | 56.5 | 0.001 |

| InterGlobalCos | 0.224 | 0.182 | 35.9 | 0.018 | 0.229 | 0.206 | 52.9 | 0.001 | 0.391 | 0.350 | 57.1 | 0.004 |

| IntraCos | 0.235 | 0.199 | 37.0 | 0.014 | 0.251 | 0.219 | 54.8 | 0.001 | 0.400 | 0.362 | 58.3 | 0.003 |

| ESMPair | 0.259 (+10.7) | 0.214 (+5.4) | 42.4 (+0.0) | - | 0.265 (+7.3) | 0.235 (+7.3) | 58.7 (+1.2) | - | 0.423 (+3.7) | 0.378 (+2.4) | 63.1 (+1.0) | - |

| Methods . | pConf70 . | DockQ49 . | pConf80 . | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Top5 . | Top1 . | SR . | PV . | Top5 . | Top1 . | SR . | PV . | Top5 . | Top1 . | SR . | PV . |

| Non-Pairing Methods | ||||||||||||

| Block | 0.199 | 0.179 | 30.4 | 0.039 | 0.212 | 0.194 | 49.0 | 0.002 | 0.351 | 0.319 | 51.2 | 0.001 |

| Baseline Pairing Methods | ||||||||||||

| Genome | 0.215 | 0.182 | 33.7 | 0.042 | 0.219 | 0.195 | 49.0 | 0.002 | 0.377 | 0.346 | 54.7 | 0.030 |

| AF-Multimer | 0.234 | 0.203 | 42.4 | 0.048 | 0.247 | 0.219 | 58.0 | 0.003 | 0.408 | 0.369 | 62.5 | 0.101 |

| PLM-enhanced Pairing Methods | ||||||||||||

| InterLocalCos | 0.218 | 0.180 | 33.7 | 0.007 | 0.236 | 0.210 | 52.3 | 0.002 | 0.389 | 0.353 | 56.5 | 0.001 |

| InterGlobalCos | 0.224 | 0.182 | 35.9 | 0.018 | 0.229 | 0.206 | 52.9 | 0.001 | 0.391 | 0.350 | 57.1 | 0.004 |

| IntraCos | 0.235 | 0.199 | 37.0 | 0.014 | 0.251 | 0.219 | 54.8 | 0.001 | 0.400 | 0.362 | 58.3 | 0.003 |

| ESMPair | 0.259 (+10.7) | 0.214 (+5.4) | 42.4 (+0.0) | - | 0.265 (+7.3) | 0.235 (+7.3) | 58.7 (+1.2) | - | 0.423 (+3.7) | 0.378 (+2.4) | 63.1 (+1.0) | - |

DockQ scores and success rate of PLM-enhanced pairing methods and baselines. We report the average of Top-5 Best DockQ score, Top-1 Best DockQ score and Success Rate (DockQ|$\geq $|0.23) (%) on pConf70, DockQ49and pConf80 test sets. For one test target, we predicted five different structures using the five AlphaFold-Multimer models. Subscript in red represents the performance gain of our method over the default MSA pairing strategy in Alphafold-Multimer (%). PV is short for the P-values from paired single-tail Student’s t-test between ESMPair and the compared baselines based on the Top-1 Best DockQ score.

| Methods . | pConf70 . | DockQ49 . | pConf80 . | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Top5 . | Top1 . | SR . | PV . | Top5 . | Top1 . | SR . | PV . | Top5 . | Top1 . | SR . | PV . |

| Non-Pairing Methods | ||||||||||||

| Block | 0.199 | 0.179 | 30.4 | 0.039 | 0.212 | 0.194 | 49.0 | 0.002 | 0.351 | 0.319 | 51.2 | 0.001 |

| Baseline Pairing Methods | ||||||||||||

| Genome | 0.215 | 0.182 | 33.7 | 0.042 | 0.219 | 0.195 | 49.0 | 0.002 | 0.377 | 0.346 | 54.7 | 0.030 |

| AF-Multimer | 0.234 | 0.203 | 42.4 | 0.048 | 0.247 | 0.219 | 58.0 | 0.003 | 0.408 | 0.369 | 62.5 | 0.101 |

| PLM-enhanced Pairing Methods | ||||||||||||

| InterLocalCos | 0.218 | 0.180 | 33.7 | 0.007 | 0.236 | 0.210 | 52.3 | 0.002 | 0.389 | 0.353 | 56.5 | 0.001 |

| InterGlobalCos | 0.224 | 0.182 | 35.9 | 0.018 | 0.229 | 0.206 | 52.9 | 0.001 | 0.391 | 0.350 | 57.1 | 0.004 |

| IntraCos | 0.235 | 0.199 | 37.0 | 0.014 | 0.251 | 0.219 | 54.8 | 0.001 | 0.400 | 0.362 | 58.3 | 0.003 |

| ESMPair | 0.259 (+10.7) | 0.214 (+5.4) | 42.4 (+0.0) | - | 0.265 (+7.3) | 0.235 (+7.3) | 58.7 (+1.2) | - | 0.423 (+3.7) | 0.378 (+2.4) | 63.1 (+1.0) | - |

| Methods . | pConf70 . | DockQ49 . | pConf80 . | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Top5 . | Top1 . | SR . | PV . | Top5 . | Top1 . | SR . | PV . | Top5 . | Top1 . | SR . | PV . |

| Non-Pairing Methods | ||||||||||||

| Block | 0.199 | 0.179 | 30.4 | 0.039 | 0.212 | 0.194 | 49.0 | 0.002 | 0.351 | 0.319 | 51.2 | 0.001 |

| Baseline Pairing Methods | ||||||||||||

| Genome | 0.215 | 0.182 | 33.7 | 0.042 | 0.219 | 0.195 | 49.0 | 0.002 | 0.377 | 0.346 | 54.7 | 0.030 |

| AF-Multimer | 0.234 | 0.203 | 42.4 | 0.048 | 0.247 | 0.219 | 58.0 | 0.003 | 0.408 | 0.369 | 62.5 | 0.101 |

| PLM-enhanced Pairing Methods | ||||||||||||

| InterLocalCos | 0.218 | 0.180 | 33.7 | 0.007 | 0.236 | 0.210 | 52.3 | 0.002 | 0.389 | 0.353 | 56.5 | 0.001 |

| InterGlobalCos | 0.224 | 0.182 | 35.9 | 0.018 | 0.229 | 0.206 | 52.9 | 0.001 | 0.391 | 0.350 | 57.1 | 0.004 |

| IntraCos | 0.235 | 0.199 | 37.0 | 0.014 | 0.251 | 0.219 | 54.8 | 0.001 | 0.400 | 0.362 | 58.3 | 0.003 |

| ESMPair | 0.259 (+10.7) | 0.214 (+5.4) | 42.4 (+0.0) | - | 0.265 (+7.3) | 0.235 (+7.3) | 58.7 (+1.2) | - | 0.423 (+3.7) | 0.378 (+2.4) | 63.1 (+1.0) | - |

Among all the MSA pairing methods, block diagonalization performs the worst (-30% compared with ESMPair in terms of the average of Top-5 best DockQ). The result indicates that the inter-chain co-evolutionary information helps with complex structure prediction. Among MSA pairing baselines, AF-Muiltmer surpasses genetic co-localization by a large margin (+12.8% Top-5 DockQ). All the proposed PLM-enhanced pairing methods substantially outperform the block diagonalization and the genetic-based methods. Even though AF-Multimer may have overly optimistic performance using the default pairing method since the training MSAs are built using it, IntraCos MSA pairing method performs on a par with AF-Multimer, and ESMPair further exceeds it by a large margin (+4.2|$\sim $|10.7% Top-5 DockQ score over three test sets). Please refer to Supplementary Tables 10–12 for more comprehensive measurements to qualify the performance comparison between ESMPair and AF-Multimer.

Intra-ranking methods are superior to inter-ranking ones both in effectiveness and scalability. From Table 1, we can also see that the inter-ranking methods like InterLocalCos and InterGlobalCos underperform the intra-ranking ones, i.e. IntraCos and ESMPair. We speculate that as ESM-MSA-1b pre-trains in the monomer data, it fails to directly capture the underlying correlations across the constituent chains in the complex. Besides heterodimers, when it comes to predict the structure of multimer with more than two chains, the intra-ranking strategies are the self-contained methods that only need to rank the MSAs in each single chain, and then match MSA of the same rank with other chains to build effective interologs with time complexity of |$O(N)$|, where |$N$| is the depth of MSA. In contrast, the inter-pairing strategies suffer from the exponential growth of combinations with increasing interacting chains with the time complexity |$O(N^{r})$|, where |$r$| is the number of chains in the multimer. Thus, the intra-ranking methods are more time-efficient and scalable than the inter-ranking ones.

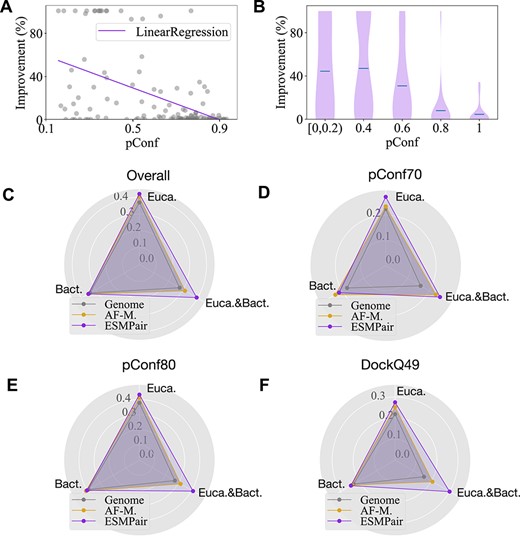

ESMPair performs better on low pConf targets. As shown in Table 1, the performance gap between ESMPair and AF-Multimer becomes narrower on pConf80 than on pConf70, with improvement ratio from 3.7% to 10.7%. For an in-depth analysis, we quantitatively analyze the correlations between the predicted confidence score (pConf) estimated by AF-Multimer and the performance gap of the average of Top-5 Best DockQ score between ESMPair and AF-Multimer on DockQ49, as illustrated in Figure 2(a-b). The relative improvement is negatively correlated (Pearson Correlation Coefficient is -0.49) with the predicted confidence score. When pConf is less than 0.2, the relative improvements even achieve 100%, while when pConf is more than 0.8, ESMPair performs nearly on par with AF-Muiltimer. This is because AF-Multimer can do well on a relatively easier target; it is very challenging to further improve it.

Comparison of the prediction performance on different pConf score regions or domains. a-b, The negative correlations between the relative improvement between ESMPair and AF-Multimer in different pConf score regions. c–f, We compare the DockQ score among ESMPair, AF-Multimer and Genome on Eucaryote, Bacteria and Eucaryote&Bacteria domains. The Euca.&Bact. is a special domain where the two constituent chains in the heterodimer belong to the two domains, respectively. Specifically, the heterodimers of our dataset are from Eucaryotes, Bacteria, Viruses, Archaea, Eucaryotes;Bacteria, respectively. We group the data from Bateria, Viruses and Archaea as the Bateria domain. In all test sets, ESMPair significantly outperforms the other two baselines on the Eucaryote targets.

To further quantify the performance comparisons between ESMPair and AF-Multimer on natural targets with all range of pConf scores, we follow the same data processing pipeline to generate the dataset without applying any filtering based on pConf scores. Subsequently, we randomly select 300 targets, and use ESMPair and the default pairing strategy of AF-Multimer to predict their structures. Of these 300 targets, 256 have pConf scores greater than or equal to 0.7 while 44 have pConf scores lower than 0.7. For convenience, we use model 1 to generate predictions for each target, and make only one prediction per target. We report the average of DockQ score, TMscore, Interface Contact Score (ICS) and Interface Path Score (IPS) as the evaluation metrics, shown in Table 2. The results suggest ESMPair outperforms AF-M on targets with low pConf (pConf < 0.7) scores, whereas it performs comparably with AF-M on those with high pConf (pConf |$\geq $| 0.7) scores.

Comparisons between ESMPair and AF-Multimer on targets from all range pConf scores. We report the average of DockQ score, TMscore, ICS and IPS as the evaluation metrics (Larger values mean better performance).

| Methods . | DockQ . | TMscore . | ICS . | IPS . | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . |

| AF-M | 0.225 | 0.757 | 0.687 | 0.788 | 0.901 | 0.886 | 0.234 | 0.771 | 0.700 | 0.445 | 0.766 | 0.724 |

| ESMPair | 0.299 | 0.753 | 0.695 | 0.799 | 0.900 | 0.886 | 0.326 | 0.766 | 0.708 | 0.481 | 0.762 | 0.726 |

| Methods . | DockQ . | TMscore . | ICS . | IPS . | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . |

| AF-M | 0.225 | 0.757 | 0.687 | 0.788 | 0.901 | 0.886 | 0.234 | 0.771 | 0.700 | 0.445 | 0.766 | 0.724 |

| ESMPair | 0.299 | 0.753 | 0.695 | 0.799 | 0.900 | 0.886 | 0.326 | 0.766 | 0.708 | 0.481 | 0.762 | 0.726 |

Comparisons between ESMPair and AF-Multimer on targets from all range pConf scores. We report the average of DockQ score, TMscore, ICS and IPS as the evaluation metrics (Larger values mean better performance).

| Methods . | DockQ . | TMscore . | ICS . | IPS . | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . |

| AF-M | 0.225 | 0.757 | 0.687 | 0.788 | 0.901 | 0.886 | 0.234 | 0.771 | 0.700 | 0.445 | 0.766 | 0.724 |

| ESMPair | 0.299 | 0.753 | 0.695 | 0.799 | 0.900 | 0.886 | 0.326 | 0.766 | 0.708 | 0.481 | 0.762 | 0.726 |

| Methods . | DockQ . | TMscore . | ICS . | IPS . | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . | < 0.7 . | |$\geq $| 0.7 . | ALL . |

| AF-M | 0.225 | 0.757 | 0.687 | 0.788 | 0.901 | 0.886 | 0.234 | 0.771 | 0.700 | 0.445 | 0.766 | 0.724 |

| ESMPair | 0.299 | 0.753 | 0.695 | 0.799 | 0.900 | 0.886 | 0.326 | 0.766 | 0.708 | 0.481 | 0.762 | 0.726 |

ESMPair has a higher prediction accuracy on eucaryote targets. We further compare the DockQ distribution of ESMPair, AF-Multimer and Genome on three kingdoms, i.e. Eucaryote, Bacteria and Eucaryote&Bacteria, which is a special domain where the two constituent chains in the heterodimer belong to the two domains, respectively. To be specific, all the heterodimers from pConf70, DockQ49 and pConf80 are divided via the domains of Eucaryotes, Bacteria, Viruses, Archaea, Eucaryotes;Bacteria, respectively. Note that we group the data from Bateria, Viruses and Archaea as the Bacteria domain. The detailed statistic of each domain is shown in Supplementary Table 6. Figure 2(c–f) demonstrates that ESMPair performs better than the other two MSA pairing methods on the Eucaryotes data by a large margin (0.420 for ESMPair, 0.402 for AF-Multimer and 0.369 for Genome on the overall data). As it is notoriously difficult to identify homologous protein sequences for the Eucaryotes data, ESMPair has a desirable property to build effective interologs on the Eucaryotes. While in the Bacteria data, three strategies have similar performance (around 0.35 on the whole data). Most strikingly, we find ESMPair has an extraordinary performance on the Euca.&Bact. data over the other two methods (0.394 for ESMPair, 0.314 for AF-Multimer and 0.277 for Genome on the overall data). We further check the performance gap for each target from the Euca.&Bact. data. ESMPair performs significantly better on the three out of six targets, 0.443 (ESMPair) versus 0.013 (AF-Multimer) on 5D6J, 0.289 versus 0.201 on 6B03 and 0.864 versus 0.854 on 7AYE. Besides, ESMPair performs on par with AF-Multimer on the other three targets. These results shed light on the robustness of PLMs. As PLMs are pre-trained on billions of protein data [41–43], it can break the bottleneck of other hand-crafted MSA pairing methods, such as genetic-based methods, phylogeny-based methods, etc, which merely take effect in the specific domain. Our proposed PLMs-enhanced methods can identify the co-evolutionary signals effectively to build MSA of interologs across different superkingdoms. However, as it is notoriously difficult to find sufficient such cases that meet our selection criteria for more comprehensive analysis, our observations are based on the six Euca.&Bact. targets. If possible, we will continue to find more such cases to justify our conclusions. We leave this to a future study.

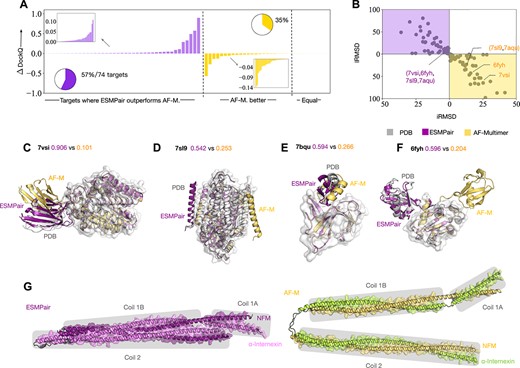

ESMPair outperforms AF-Multimer on most of the newly released targets. We further select 74 targets that AF-Multimer does not train on [28], i.e. the targets whose release date is later than 30 April 2018from the test dataset. Then we compare the performance of predicted structures on these targets between ESMPair and AF-Multimer in Figure 3. From Figure 3(a), ESMPair outperforms AF-Multimer on most of the targets (57%) with a relative larger performance gap, while AF-Multimer outperforms ESMPair on fewer targets (35%) with a relative lower gap. We further plot the distributions between interface RMSD and ligand RMSD of predicted structures via ESMPair and AF-Multimer, in Figure 3(b). The holistic distributions predicted by ESMPair are closer to the origin of coordinates than that predicted by AF-Multimer, which strongly proved that ESMPair is superior to AF-Multimer on the predictions of newly released targets.

Comparing ESMPair and AF-Multimer predictions on newly release targets (a–f) and unresolved cases (g). a–f, The comparisons are made on the 74 targets whose data were released later on 30 April 2018. a, The bar charts show the relative performance gap between ESMPair and AF-Multimer on three categories: ESMPair outperforms AF-M.; AF-M. outperforms ESMPair; Equal performance. b, The interface and ligand RMSD distributions of predicted stuctures via ESMPair (Purple) and AF-M (Yellow). c–f, Four cases are further visualized. Among this, the ligand orientation are wrongly-predicted via AF-M. on 7VSI and 7AQU, while the binding site are wrongly-predicted by AF-M. on 7SL9 and 6FYH. g, The intermediate filament protein NFM-INA heterodimer structure predicted via ESMPair shows a four-helix bundle. The gray boxes show the interacting motifs of coil 1A, coil 1B and coil 2 of the two proteins.

Furthermore, we show why ESMPair performs better than AF-Multimer by analyzing four PDB targets, 7VSI, 7AQU, 6FYH and 7VSI, in Figure 3(c–f). Among these, 7VSI and 6FYH have a larger predicted iRMSD and lRMSD variance by AF-Multimer, because AF-Multimer predicts the wrong binding sites. While AF-Multimer predicts the right binding sites on 7SL9 and 7AQU that have a smaller predicted iRMSD and lRMSD variance, it unfortunately predicts the wrong ligand orientations. In contrast, our proposed ESMPair correctly predicts the binding sites on the receptor and also places the ligand in the approximately correct relative orientation.

To better illustrate the usage of ESMPair in predicting the protein complexes without known resolved 3D structures, we inspected the intermediate filament heterodimer formed between the neurofilament medium polypeptide (NFM, UniProt ID P08553) and |$\alpha $|-internexin (UniProt ID P46660), which is known to form an anti-parallel four-helix bundle [47, 48]. As shown in Figure 3(g), both ESMPair and AF-Multimer correctly predict the three binding interfaces between the coil 1A, coil 1B and coil 2 motifs from NFM and |$\alpha $|-internexin. However, ESMPair predicted the two coiled coils to pack as a four-helix bundle, which is consistent with the experimental pieces of evidence, while the AF-multimer predicted the two coiled coils to be separated. This case demonstrates the potential to apply ESMPair to model unresolved protein complexes.

ESMPair is more robust than AF-Multimer on different sequence lengths. We split the targets from pConf70, pConf80 and DockQ49 datasets via the sequence length into two groups: one is the targets with |$\geq $| 100 residues and the other one owns the targets with < 100 residues. Note that we use the shorter protein between the two chains as the length of targets. We provide the average Top-1 Best DockQ comparison between the targets as shown in Table 3. The results demonstrate that ESMPair performs consistently better than AF-M in different length. Moreover, ESMPair is robust for complex with variable lengths.

The Top-1 Best DockQ performance of two groups with different sequence length ( |$\geq $| 100 & < 100). The GAP value is the subtraction between the DockQ score of the two different length groups.

| Methods . | # Targets . | DockQ (<100) . | DockQ (|$\geq $|100) . | GAP . |

|---|---|---|---|---|

| AF-M | 81 | 0.328 | 0.355 | -0.027 |

| ESMPair | 152 | 0.359 | 0.366 | -0.007 |

| Methods . | # Targets . | DockQ (<100) . | DockQ (|$\geq $|100) . | GAP . |

|---|---|---|---|---|

| AF-M | 81 | 0.328 | 0.355 | -0.027 |

| ESMPair | 152 | 0.359 | 0.366 | -0.007 |

The Top-1 Best DockQ performance of two groups with different sequence length ( |$\geq $| 100 & < 100). The GAP value is the subtraction between the DockQ score of the two different length groups.

| Methods . | # Targets . | DockQ (<100) . | DockQ (|$\geq $|100) . | GAP . |

|---|---|---|---|---|

| AF-M | 81 | 0.328 | 0.355 | -0.027 |

| ESMPair | 152 | 0.359 | 0.366 | -0.007 |

| Methods . | # Targets . | DockQ (<100) . | DockQ (|$\geq $|100) . | GAP . |

|---|---|---|---|---|

| AF-M | 81 | 0.328 | 0.355 | -0.027 |

| ESMPair | 152 | 0.359 | 0.366 | -0.007 |

ESMPair outperforms AF-Multimer with or without the full features. We use the pConf70 set for comparing the performance between ESMPair and AF-Multimer on the full feature settings, i.e. adding the template information and AMBER force-field. For each target, we run each of the five models once, as shown in Table 4. We can conclude that (1) the full feature setting indeed significantly improves the performance of ESMPair; (2) ESMPair rivals AF-Multimer in all settings.

| Methods . | DockQ . | TMscore . | ICS . | IPS . |

|---|---|---|---|---|

| Without full AF-M features | ||||

| AF-M | 0.20 | 0.69 | 0.26 | 0.41 |

| ESMPair | 0.21 | 0.69 | 0.28 | 0.41 |

| With full AF-M features | ||||

| AF-M | 0.29 | 0.72 | 0.35 | 0.44 |

| ESMPair | 0.32 | 0.71 | 0.39 | 0.45 |

| Methods . | DockQ . | TMscore . | ICS . | IPS . |

|---|---|---|---|---|

| Without full AF-M features | ||||

| AF-M | 0.20 | 0.69 | 0.26 | 0.41 |

| ESMPair | 0.21 | 0.69 | 0.28 | 0.41 |

| With full AF-M features | ||||

| AF-M | 0.29 | 0.72 | 0.35 | 0.44 |

| ESMPair | 0.32 | 0.71 | 0.39 | 0.45 |

| Methods . | DockQ . | TMscore . | ICS . | IPS . |

|---|---|---|---|---|

| Without full AF-M features | ||||

| AF-M | 0.20 | 0.69 | 0.26 | 0.41 |

| ESMPair | 0.21 | 0.69 | 0.28 | 0.41 |

| With full AF-M features | ||||

| AF-M | 0.29 | 0.72 | 0.35 | 0.44 |

| ESMPair | 0.32 | 0.71 | 0.39 | 0.45 |

| Methods . | DockQ . | TMscore . | ICS . | IPS . |

|---|---|---|---|---|

| Without full AF-M features | ||||

| AF-M | 0.20 | 0.69 | 0.26 | 0.41 |

| ESMPair | 0.21 | 0.69 | 0.28 | 0.41 |

| With full AF-M features | ||||

| AF-M | 0.29 | 0.72 | 0.35 | 0.44 |

| ESMPair | 0.32 | 0.71 | 0.39 | 0.45 |

ESMPair performs better when only pairing the top-ranked sequence. We construct interologs via merely pairing the top-1 rank sequences on each species of the two MSAs, and report the average of Top-1 Best DockQ score on the pConf70 datasets, as illustrated in Table 5. The results confirm that the sequence which is most similar to the query sequence may be more informative, and contributes more to the accuracy of structural predictions. Analogously, [49] also concluded that the top-ranked sequences within each specific species in the two MSAs perform better than those that pair all the sequences.

The Top-1 Best DockQ via only pairing the top-ranked sequence or the default pairing setting

| Methods . | DockQ . | Methods . | DockQ . |

|---|---|---|---|

| ESMPair | 0.214 | IntraCos | 0.199 |

| ESMPair (Top-1) | 0.221 | IntraCos (Top-1) | 0.210 |

| Methods . | DockQ . | Methods . | DockQ . |

|---|---|---|---|

| ESMPair | 0.214 | IntraCos | 0.199 |

| ESMPair (Top-1) | 0.221 | IntraCos (Top-1) | 0.210 |

The Top-1 Best DockQ via only pairing the top-ranked sequence or the default pairing setting

| Methods . | DockQ . | Methods . | DockQ . |

|---|---|---|---|

| ESMPair | 0.214 | IntraCos | 0.199 |

| ESMPair (Top-1) | 0.221 | IntraCos (Top-1) | 0.210 |

| Methods . | DockQ . | Methods . | DockQ . |

|---|---|---|---|

| ESMPair | 0.214 | IntraCos | 0.199 |

| ESMPair (Top-1) | 0.221 | IntraCos (Top-1) | 0.210 |

Ensemble improves the prediction accuracy

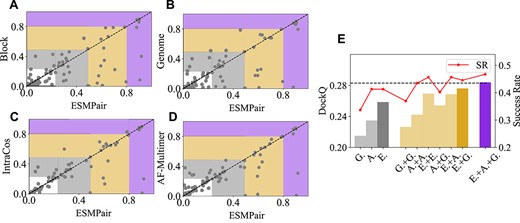

From Figure 4 (a–d), we found that different MSA pairing methods have their own advantages; even block diagonalization performs slightly better than ESMPair on about 30% targets, which implies that they can complement each other. To verify that, we combine 10 models predicted by any two of the MSA paring methods, then we report the average of Top-5 Best DockQ score, as shown in Figure 4 (e). The corresponding Top-1 Best DockQ score is also presented in the Supplementary Table 13. The ensemble strategies, i.e. the yellow and purple bars, significantly outperform the corresponding single strategy, i.e. the gray bars. Specifically, the performance of intra-ensemble strategies surpasses the corresponding single strategy, for example, the DockQ score of ESMPair + ESMPair is 0.269 versus 0.259 of ESMPair, which demonstrates that simply increasing the number of predictions of each model also benefits the structure prediction accuracy of each target. Among the inter-ensemble strategies, ESMPair in addition to any one of the single strategy always have a better performance than the one without ESMPair, for example, the SR of ESMPair + Genome is 44.6% versus 40.4% of AF-Multimer + Genome. Finally, the ensemble of all three strategies, i.e. the purple bar, reaches the best performance with 0.285 DockQ score and 46.8% Success Rate, which motivates us that instead of merely using a single strategy to build interologs, the ensemble MSA pairing strategy may be the silver bullet to identify more effective interologs.

The comparisons between ESMPair and four alternative MSA pairing approaches (a–d), and various ensemble strategy (e) on the targets from pConf70. (a–d) The coordinates of each point demonstrate the reported DockQ score of the target between ESMPair (x-axis) and other methods (y-axis). A point under the diagonal dash line implies ESMPair performs better than the compared method on this target. The highlighted regions represent the incorrect (white), acceptable (gray), medium (yellow) and high-quality (purple) predicted models according to DockQ score. (e) The gray bars represent the performance of single strategies, where G. stands for Genome, A. is for AF-Multimer and E. is for ESMPair. ESMPair is the best with 0.259 DockQ score and 42.4% Success Rate. The yellow bars show the ensemble performance of the two strategies. Among these, ESMPair + Genome performs the best with 0.277 DockQ score with 44.6% Success Rate. The purple bar implies the best performance about the ensemble of all the three strategies with 0.285 DockQ score with 46.8% Success Rate.

More analytic studies of ESMPair: key factors, hyperparameters and measurements to identify high-quality predictions

In this part, we analytically and empirically investigate the inherent properties of ESMPair. Generally, we find that the diversity of the formed MSA of interologs has a strong correlation with the performance of ESMPair. Then, we study the effect of different layers of ESM-MSA-1b [42] on identifying homologs. Finally, we demonstrate that the predicted confidence score output by AlphaFold-Multimer is a rational measurement to differentiate the correct predictions from the incorrect ones.

The diversity about MSA of interologs affects the predicted structure accuracy by ESMPair. We investigate the connections between the performance of ESMPair and some key factors of the formed MSA of interologs, such as the column-wise attention score (i.e. ColAttn_score), the number of effective sequences within MSA measured by Meff (i.e. #Meff), the number of species (i.e. #Species) and the depth of MSA (i.e. Msa:Depth). To be specific, we predict 1689 heterodimers sampled from PDB without filtering and divide them into different regions according to the value of each factor. Notably, for ColAttn_score, we average the score of each single chain in interolog, then re-scaling it in the logarithm form, and then averaging ColAttn_score of all interologs from the paired MSA as the final score of the target. For #Meff, #Species and Msa:Depth, we directly calculate the corresponding statistics based on the interologs.

The correlations between DockQ score and each of the above factors are illustrated in Figure 5. #Meff, #Species and Msa:Depth have a similar trend where the predicted structure accuracy improves with the increasing of these factors. It implies that MSA with more diversity represents the more co-evolutional information that benefits structure predictions of AF-Multimer, which also meets with previous insights [42]. Moreover, the increasing ColAttn_score results in the decreasing structure prediction accuracy. Considering the self-attention mechanism in the PLM, given a sequence as the query, the self-attention mechanism aims at identifying the sequence with high homology affinity, i.e. the sequence with a high similarity score [18]. Therefore, a large ColAttn_score indicates the MSA with a low #Meff, which potentially results in an inaccurate structure prediction. To justify our speculation, we explicitly characterize the dependency between ColAttn_score and #Meff, as shown in Figure 5 (e). ColAttn_score has shown a negative correlation to the #Meff, with the Pearson correlation coefficient of -0.70, which elucidates that a higher ColAttn_score reflects MSA with lower sequence diversity.

Different factors affect the performance of structure prediction. The correlations between the average of Top-5 Best DockQ score (Y-axis) and (a) the column attention score (log-scale) predicted by ESM-MSA-1b, (b) the number of effective sequences measured by Meff, (c) the number of species and (d) the depth of matched MSA (log-scale). (e) The distribution of column attention score (X-axis) and the number of effective interologs in the paired MSA (Y-axis). The red curve is the visualization of the fitted linear regression model. The Pearson correlation coefficient is about -0.70, which strongly indicates that an increasing column attention score results in the decreasing number of effective interologs.

ESMPair built on the last few transformer Layers has a better performance. As ESMPair leverages the column-wise attention output by ESM-MSA-1b[42] to rank and match interologs, how do the column-wise attention weight matrices by different transformer layers affect the efficacy of ESMPair? To answer this, we use the DockQ score of predicted structures as the metric to measure the quality of the input interologs built by ESMPair, as shown in Supplement Figure 7. ESMPair, which is based on the attention output of layer 6 (0.258 DockQ score and 40.2% Success Rate), layer 7 (0.249 and 43.0%) and SUM (0.262 and 42.2%), performs better than other layers. Overall, the SUM aggregation of all the layers is relatively superior to others, thus we use SUM as the default setting of ESMPair.

What is more, ESMPair which is built on the last few layers (6–12th) identifies homologous sequences more precisely than the former layers (1–5th). The phenomenon is consistent with the empirical insights about how to effectively fine-tune the pre-trained language models in the downstream tasks: the last few layers are the most task-specific, while the former layers encode the general knowledge of the training data[50–52], thus only aggregating latter layers may exploiting more homologous information form MSAs.

Moreover, we also probe the effect of different heads output. As we directly use the DockQ score between the ground truth structure and the predicted structure via AF-M as the indicator to evaluate the effects of different heads, it is too heavy for us to study the different heads in all range layers. Thus, we study the effects of 12 different heads by summing the head output of all 12 layers. The results are shown in Figure 8. We can see that different heads have no obvious trend. Maybe we should probe more detailed comparisons, such as the detailed studies of different heads in specific layers. We leave this to future studies.

Predicted confidence score as an indicator to distinguish acceptable models. Practically, besides the substantial improved DockQ performance through ESMPair, it is also vital to figure out how to identify the correct models (DockQ|$\geq $|0.23) from the incorrect ones [27]. To achieve this, we also predict all the 1689 heterodimers via ESMPair, then we apply: (1) the predicted Confidence Score (pConf), (2) Interface pTM (ipTM), (3) predicted TM-score (pTM) and (4) the number of contacts between residues from two chains (the distance of |$C_{\beta }$| atoms in the residues from different chains within 8 |$\overset{\circ}{\text A}$|) (Contacts) as the metric to rank models, as shown in Supplement Figure 6. From Figure 6(a), we find both pConf and ipTM are capable of distinguishing the acceptable models from the unacceptable ones with AUC of 0.97. pTM has a worse performance with AUC of 0.85; as pTM is used as the pessimistic predictor to measure the predicted structure accuracy of each single chain, it ignores the interactions between chains. Contacts merely count the number of interacting residues from different chains, which hardly indicates the accuracy of the predicted structure. pConf and iPTM both consider the structure in both the single chain and interfaces, which are considerate indicators to validate the quality of the predicted structure. We further quantify the interplays between pConf and DockQ score of the predicted structure, as shown in Figure 6(b), which further confirms the strong correlations between pConf and the structure prediction accuracy.

Comparisons between ESMPair and AF-Multimer on CASP15 heteromers

We adopted the ESMPair into the RaptorX-Multimer algorithm to predict some of the heteromer targets from CASP15. The result shows that RaptorX-Multimer is slightly worse than the standard version AF-Multimer (Z-scores are 12.29 and 12.07 for NBIS-AF2-multimer and RaptorX-Multimer, respectively). We speculate the following factors may degrade the performance: (1) Using different sequence databases, for instance, we did not use BFD but used other metagenome databases, (2) Relaxation issues, due to resource constraints, for certain targets, we did not perform relaxation to refine our final predictions and (3). Technical issues with our server during the competition.

To further compare the performance of ESMPair and AF-M in predicting the structures of heterodimer CASP15 targets with native structures, we exclude targets with more than 1200 residues (H1129 and H1157) and apply both pairing strategies to generate MSAs. We then use all input features provided in the official AF-M repository, including MSAs generated from Uniclust30, BFD, Mgnify, Uniref90, UniProt databases, and templates generated from PDB. To ensure accuracy, we ran all five models five times, resulting in 25 predictions per target. We evaluate the performance of our models using various metrics based on Top-5/-1 predictions ranked by their predicted confidences, including DockQ, IRMS, TMscore, ICS and IPS, oligomer-LDDT and QS-global. We also report the highest DockQ score in all model predictions. To combine the predictions of ESMPair and AF-M, we use an ensemble strategy. The results are shown in Supplementary Table 7–9. The results show that the ensemble strategy outperforms AF-M in the Top-5 predictions in terms of average DockQ. However, ESMPair performs poorly in the Top-5 and Top-1 predictions but outperforms AF-M in the best predictions. This suggests that the pConf score obtained from the default pairing strategy of AF-M may not be suitable for ESMPair to select the best models. Therefore, the performance of ESMPair could be greatly improved with a more sensitive confidence predictor.

However, we also note biases in the CASP15 heterodimer test sets, including the fact that five out of eight heterodimeric targets (H1140-44) have the same receptor sequence (subunit 1) and are nanobody-antigen complexes. As such, we believe that our constructed test sets with around 200 diverse heterodimeric targets are more fair to comparing MSA pairing algorithms.

METHODS

In this part, we introduce the framework of our proposed PLMs-enhanced MSA pairing method, i.e. ESMPair. Besides, we explore other promising alternative pairing methods built on PLMs, such as InterGlobalCos, InterGlobalCos and IntraCos. The overall framework of ESMPair is illustrated in Figure 1.

The PLM-enhanced MSA pairing pipeline

Previous efforts [41–43] have confirmed that PLMs can characterize the co-evolutionary signals and biological structure constraints encoded in the protein sequence. Moreover, the MSA-based PLMs [18, 42] further explicitly capture the co-evolutionary information hidden in MSAs via axial attention mechanisms [53, 54]. In light of this, we adopt the state-of-the-art MSA-based PLM, i.e. ESM-MSA-1b [42], as the basis to explore how to utilize them to build rational MSA of interologs to improve the PCP based on Alphafold-Mutimer [28].

Column attention (ESMPair). The column attention weight matrix, which is calculated via each column of MSA via ESM-MSA-1b, can be treated as the metric to measure pairwise similarities between aligned residues in each column. Formally, for each chain, we have the MSA |$M \in \mathcal{A}^{N \times C}$|, where |$\mathcal{A}$| denotes the set of alphabetic symbols used to represent the amino acids, |$N$| represents the number of sequences and |$C$| represents the length of the sequence, i.e. the number of residues. The collections of column attention matrices are denoted as |$\{A_{lhc}\in \mathbb{R}^{N\times N}: l \in [L], h \in [H], c \in [C]\}$|, where |$L$| is the number of layers in PLM, |$H$| is the number of attention heads of each layer. We first symmetrize each column attention matrix, and then aggregate the symmetrized matrices along the dimension of |$L$|, |$H$| and |$C$| to obtain the pairwise similarity matrix among the sequences of MSA, denoted as |$S \in \mathbb{R}^{N\times N}$| (Eq.(1)). |$S$| is symmetric and its first row |$S_{1} \in \mathbb{R}^{1\times N}$| can be viewed as measuring similarity scores between the query sequence and other sequences in the MSA,

where |$\top $| represents the transpose operation and |$\text{AGG}$| is an entry-wise aggregation operator such as entry-wise mean operation |$\text{MEAN}(\cdot )$|, sum operator |$\text{SUM}(\cdot )$|, etc. Unless otherwise specified, |$\text{AGG}$| is specified as |$\text{SUM}(\cdot )$| in this paper.

The MSA pairing strategy is specified as follows: for a query heterodimer, we first obtain |$S_{1}$| of individual MSAs of constituent single chains. Then we group sequences from the MSA by their species, and rank sequences according to their similarity score of |$S_{1}$| in each MSA, respectively. Finally, the sequences of each MSA with the same rank in the same species group are concatenated as interologs. The overall workflow is shown in Supplementary Figure 9.

Cosine similarity. The cosine similarity measurement has been thoroughly explored by pre-train language models [55, 56]. Intuitively, as PLMs generate residue-level embeddings for each sequence in the MSA, the sequence embedding can be directly obtained by aggregating all the residue embeddings in the sequence. Thus we can calculate the cosine similarity matrix between each sequence to measure their pairwise similarities.

To be more specific, we specify two MSA pairing strategies, i.e. Intra-ranking (IntraCos) and Inter-pairing, based on the cosine similarity measurement between sequence embeddings as follows:

Intra-ranking (IntraCos). Firstly, for all sequences from a given MSA |$M \in \mathcal{A}^{N\times C}$|, we obtain a collection of residue-level embedding |$\{E_{ln} \in \mathbb{R}^{C\times d}: l \in [L], n \in [N] \}$|, where |$d$| is the embedding dimension. For sequence |$n \in [N]$|, we can obtain its sequence-level embeddings |$E_{n} = \text{AGG}_{l \in [L], c \in [C]} (E_{lnc})$| by aggregating over all layers |$L$| and all residues |$C$|, where |$E_{n} \in \mathbb{R}^{d}$|. Then we compute cosine similarities between the query sequence embedding, |$E_{1}$|, and other sequence embeddings, {|$E_{n}$|, where |$n\neq 1$|}, in the MSA to obtain the pairwise similarity score matrix (IntraCosScore) |$S_{1} \in \mathbb{R}^{1\times N}$|. After that, we build interologs like ESMPair does.

Inter-ranking. Instead of ranking sequences in each MSA and matching sequences of the same rank, here we directly compute the similarity score matrix between sequences from different MSAs. Formally, given two MSAs |$M_{1} \in \mathcal{A}^{N_{1}\times C_{1}}$| and |$M_{2} \in \mathcal{A}^{N_{2} \times C_{2}}$|, we obtain two individual collections of sequence embeddings |$\{E^{(1)}_{n}: n \in [N_{1}]\}$| and |$\{E^{(2)}_{n}: n \in [N_{2}]\}$|. The inter-chain cosine similarity matrix is denoted by |$B\in \mathbb{R}^{N_{1}\times N_{2}}$|, where |$B_{ij} = \cos (E_{1}[i], E_{2}[j])$|. Without loss of generality, we assume |$N_{i}\leq N_{j}$|, we propose two algorithms to build interologs as follows:

(i) Global maximization optimization (InterGlobalCos). We formalize the pairing problem as a maximum-weighted bipartite matching problem. The weighted bipartite |$G = (V, E)$| is constructed as follows: sequences from individual MSAs of two chains form the set of vertices in |$G$|, i.e. |$V^{(1)} = \{M_{i}^{(1)} \in \mathcal{A}^{C_{1}}: i \in [N_{1}]\}$|, |$V^{(2)} = \{M_{j}^{(2)} \in \mathcal{A}^{C_{2}}: j \in [N_{2}]\}$|, and |$V = V^{(1)} \cup V^{(2)}$|. There are no edges among sequences from the same chain MSA, thus |$V^{(1)}$| and |$V^{(2)}$| are two independent sets. There is an edge |$e_{ij}$| between |$M_{i}^{(1)}$| and |$M_{j}^{(2)}$| if these two sequences are from the same species; the weight associated with |$e_{ij}$| is |$B_{ij}$|. An optimal MSA matching pattern can be obtained by Kuhn–Munkres (KM) algorithm[57] in the polynomial time.

(ii) Local maximization optimization (InterLocalCos). KM algorithm finds a global optimal solution. However, as suggested by [49], in each species, the sequence that is most similar to the query sequence may be more informative, while other sequences that are less similar may add noises. Thus we propose a greedy algorithm that focuses on pairs that have high similarity scores with the query sequence. We iteratively select a pair of sequences |$(i,j)$| that have the largest score in |$B$| among sequences that have not been selected before until reaching a pre-defined maximal number of pairs.

Complex structure prediction of heteromers with more than two different chains. The proposed methods, such as ESMPair and IntraCos, can be easily extended to build MSA of interologs for heteromers with more than two different chains. In practice, we can rank the MSAs in each query sequence by the similarity matrix obtained by the corresponding metric, then match them with the same rank in each species to build effective interologs.

Settings

Evaluation metric. We evaluate the accuracy of predicted complex structures using DockQ [58], a widely-used metric in the computational structural biology community. Specifically, for each protein complex target, we calculate the highest DockQ score among its top-|$N$| predicted models selected by their predicted confidences from Alphafold-Multimer. We refer to this metric as the best DockQ among top-|$N$| predictions. We also report other metrics in some experiments like IRMS, TMscore, ICS and IPS, oligomer-LDDT and QS-global.

Datasets. In order to investigate how improving pairing MSAs can improve the performance of AlphaFold-Multimer, we construct a test set satisfying the following criteria:

(i) There are at least 100 sequences that can be paired given the species constraints.

(ii) The two constituent chains of a heterodimeric target share < 90% sequence identity.

We select heterodimers consisting of chains with 20–1024 residues (due to the constraint of ESM-MSA-1b and also ignore peptide-protein complex), and the overall number of residues in a dimer is less than 1600 (due to GPU memory constraint) from Protein Data Bank (PDB), as accessed on 3 March 2022. We use the default AlphaFold-Multimer MSA search setting to search the UniProt database [35] with JackHMMER [36], which is used for MSA pairing. We also search the Uniclust30 database [59] with HHblits [60], which is used for monomers, i.e. block diagonal pairing. We further select those heterodimers with at least 100 sequences that can be paired by AlphaFold-Multimer’s default pairing strategy. We define two dimers as at most |$x$|% similar, if the maximum sequence identity between their constituent monomers is no more than |$x$|%. Overall, we select 801 heterodimeric targets from PDB that are at most 40% similar to any other targets in the dataset, and satisfy the aforementioned two criteria. Then we use AlphaFold-Multimer (using the default MSA matching algorithm) to predict their complex structures. Based on their predicted confidence scores (pConf) or DockQ scores, 92 targets with their pConf less than 0.7 are denoted as the pConf70 test set. We select 0.7 as the low confidence cutoff based on our fitted logistic regression models over 7000 DockQ and pConf pairs, because the conditional probability of the model having medium or better quality given pConf equals 0.7 is slightly greater than 0.5 (around 0.6), while the probability is less than 0.5 if pConf equals 0.6. For more comparisons, we also select 0.8 as the cutoff, which results in the pConf80 test set of 168 targets, and 155 targets with their predicted DockQ scores less than 0.49 are denoted as the DockQ49 test set.

Baselines. Several heuristic MSA pairing strategies have been developed for protein complex contact and 3D structure prediction [20, 26].

Phylogeny-based method. The strategy is first proposed in ComplexContact [31] for complex contact prediction and is widely adopted by the community. AlphaFold-Multimer employed a similar strategy. This strategy first groups sequences in an MSA by their species and then ranks sequences of the same species by their similarity to the query sequence. When there is more than one sequence in a species group, it joins two sequences of the same rank within the same species group to form an interolog. AlphaFold-Multimer uses this strategy and shows state-of-the-art accuracy in complex structure prediction [28]. Practically, we run the implementation code of Alphafold-Multimer following the default setting of official repertory (https://github.com/deepmind/alphafold) . Notably, we only evaluate the unrelaxed model without the template information for the time efficiency[19].

Genetic distances. In bacteria, interacting genes sometimes are co-located in operons and co-transcribed to form protein complexes [61]. Consequently, we can detect interologs by the genetic distance of two genes. This strategy pairs sequences of the same species based on the distances of their positions in the contigs, which are retrieved from ENA. In our implementations, given a sequence from the first chain, we pair it with the sequence from the second chain that is closest to it in terms of genetic distance. If there are more than one closest sequence, we select the one that has the lowest e-value to the query sequence of the second chain; the e-value is calculated by the MSA search algorithm used to construct the chain MSA.

Block diagonalization. This strategy pads each chain sequence with gaps to the full length of the complex [26]. Therefore, each sequence in the constructed joint MSA, except for the query sequence, will include non-gap tokens in exactly one chain and gap tokens in other chains. By sorting sequences in the joint MSA, we can make non-gap tokens to appear only in the diagonal blocks, thus this strategy is termed as block diagonalization. In our implementations, given a sequence from the first (second) chain, we append (prepend) non-gap tokens to it until the number of non-gap tokens equals the length of the second (first) chain.

Running environment. We conduct the experiments on an Enterprise Linux Server with 56 Intel(R) Xeon(R) Gold 5120 CPU @ 2.20GHz, and a single NVIDIA Tesla V100 SXM2 with 32GB memory size.

Time/Memory requirement analysis. As ESMPair adopts column-wise attention score from ESM-MSA-1b as the metric to construct MSA of interologs, the major running time and memory requirement comes from ESM-MSA-1b. Practically, a single V100 GPU with 32G memory size can run a batch in the shape of 512 sequences with the max length of each sequence as 1,024 within a few seconds. While other baseline methods like Block diagonalization, Genome, or the default strategy of AF-M require no other machine learning models to supplement additional information. Thus, these methods are free of memory requirements.

Generally, the major running time and memory requirement of end-to-end complex structure predictions lies in the process of the MSA searching and the final AF-M prediction. Taking the target with the PDBID ’4rca’ as the example, it contains two subchains with each having about 300 residues. Statistically, the running time of searching MSA of each subchain in the Uniprot database with jackhmmer consumes about half an hour, which results in about 100K MSA sequences for each subchain. After that, ESMPair is applied to construct MSA of interologs within a few minutes resulting in 20K MSA of interologs. As a follow-up, AF-M takes more than 20 minutes to use 5 models for predicting the structure of the target based on obtained MSA of interologs with each model predicting once without accessing the template and amber relaxation.

In summary, the running time cost of sequence linking methods can be totally ignored in the end-to-end complex prediction pipeline with AF-M.

CONCLUSION AND DISCUSSION

This paper explores a series of simple yet effective MSA pairing algorithms based on pre-trained PLMs for constructing effective interologs. To the best of our knowledge, this is the first time that PLMs are used to construct joint MSAs. Experimental results have confirmed that the proposed ESMPair significantly outperforms the state-of-the-art phylogeny-based protocol adopted by AlphaFold-Multimer. What is more, ESMPair performs significantly better on targets from eukaryotes, which are hard to be predicted accurately by AF-Multimer. We further confirm that, instead of using the conventional single strategy to build interologs, the ensemble MSA pairing strategy can largely improve the structure prediction accuracy. Generally, ESMPair has a profound impact on biological applications depending on the high-quilty MSA. In the future, we will continue to explore more potential ways to leverage the advantages of PLM in building and choosing MSA. We also looking forward to applying our proposed methods to improve current MSA-based applications.

Limitations. In this paper, we merely consider how to build effective interologs for heterodimers, which broadly benefits biological applications depending on the high-quality MSA, such as the complex contact prediction [62, 63], complex structure prediction discussed in this paper, etc. However, there also is a large proportion of homodimers in biological assemblies. As it is trivial to build interologs for them, how to select high-quality MSA for homodimers is a more challenging yet important question. Previous work [42, 49] has an empirical insight that instead of using the full MSA searched from the protein sequence database, we can select a few high-quality MSA following some promisings, such as using the MSA maximizing the sequence diversity [42], or choosing the MSA owning the largest sequence similarity with the primary sequence [49]. To date, few efforts have systematically investigated the MSA-selection problem. We leave this for future work.

As we propose a series of MSA paring methods built on the output of PLMs, the representation ability of the PLMs directly affects the performance of our proposed methods. In this paper, we choose the state-of-the-art PLM so far, i.e. ESM-MSA-1b [42], to support our algorithms. However, it is always worth exploiting the potential correlations between different PLM configurations and the performance of our proposed PLM-enhanced methods to identify effective interologs.

Although ESMPair has advantages over the default strategy adopted by AF-Multimer in identifying MSA of interologs, their success rate is similar. After a deep analysis, we observe that ESMPair outperforms AF-Multimer mostly in the acceptable cases (DockQ |$\geq $| 0.23); however, it is notoriously difficult for ESMPair to improve DockQ score of unacceptable cases to be acceptable (Only 3% targets). As we follow the pipeline of the complex structure prediction via AF-Multimer (Figure 1), the limited ability of AF-Mulitmer becomes the bottleneck in the performance of ESMPair. Nevertheless, the above extensive experimental results have proved that ESMPair consistently outperforms AF-Multimer despite AF-Multimer having an inductive training bias toward its default MSA pairing strategy. From the training process of AF-Multimer, we know that the performance of structure prediction highly depends on the quality of the input MSA. In light of this, we assume that if AF-Muiltimer can fine-tune, or totally train from scratch based on ESMPair’s MSA pairing method, the accuracy of structure predictions may be further improved. Moreover, compared with the conventional MSA pairing method, which only uses a single strategy to identify interologs, the ensemble strategy has shown superior performance both in DockQ score and Success Rate without fine-tuning AF-Multimer. We are of the view that the ensemble strategy proposes a new perspective on how to comprehensively exploit the co-evolutionary patterns among MSA, thus further having a wide impact on the biological algorithms resorting to the input MSA.

Our method leverages the deep representation learned by PLMs for paired MSA generation, making a paradigm shift from feature-engineering to feature learning on MSA pairing.

Tested on the heterodimers where AlphaFold-Multimer fails, our method greatly outperforms the state-of-the-art methods.

A careful ensemble of our PLM-based method and existing methods yields even better PCP accuracy, showing that the deep representation learned by PLMs is complementary to traditional genome-based or phylogeny-based MSA features.

In this work, we not only provide a state-of-the-art solution for PCP, but also demonstrate that the representation learned by pre-trained PLMs can be utilized to improve the modeling of related biological problems, where a large amount of unannotated data can provide valuable information of the underlying biological principles. We believe this study provides a good example of introducing PLMs to study the complex interplay of interacting systems.

AUTHOR CONTRIBUTIONS

B.C. proposed the main idea, conducted the main experiments and wrote initial manuscript. Z.W.X., J.Z.Q. and Z.F.Y. collected the experimental data, designed experiments and wrote the initial manuscript. J.B.X. and J.T. gave the detailed instructions and refined the manuscript.

FUNDING

This work was supported by Technology and Innovation Major Project of the Ministry of Science and Technology of China under Grant 2020AAA0108400 and 2020AAA0108402, NSFC for Distinguished Young Scholar (61825602), Zhipu.AI, and Zhejiang Lab (2022PE0AC04).

DATA AVAILABILITY

Data that involved in this work can be obtained from Github: https://github.com/allanchen95/ESMPair.

CODE AVAILABILITY

The code of this study can be obtained from GitHub: https://github.com/allanchen95/ESMPair.

Author Biographies

Bo Chen is a PhD student at Knowledge Engineering Group (KEG), Department of Computer Science and Technology of Tsinghua University, under the surpervision of Prof. Jie Tang. His research interests include name disambiguation, geometric deep learning, and AI for science.

Ziwei Xie is a PhD student at the Toyota Technological Institute at Chicago. His research interests lie in computational protein structure prediction and protein design. He has previously published articles on topics such as protein contact prediction.

Jiezhong Qiu is a principal investigator of Research Center for Intelligent Computing Platforms, Zhejiang Lab. He got his PhD degree from Tsinghua University in 2022. His research interests include algorithm design for large-scale information networks and representation learning for graph-structured data.

Zhaofeng Ye got his PhD from Tsinghua University, School of Medicine. His research interests are forcused on developing computational methods for drug discovery and molecular modeling. He is now a researcher at Tencent Quantum Lab.

Jinbo Xu is the Professor at the Toyota Technological Institute at Chicago. His research lies in the interface of machine learning and AI, computer algorithms and computational biology, i.e., studying AI and computer algorithms to analyze and interpret high volumes of biological data (especially proteomics data) and based upon which building predictive models. He is known for a protein structure prediction program RaptorX, which has been ranked very top in CASPs (Critical Assessment of Structure Prediction) and more importantly, widely used by the broader community. His other work includes machine learning methods, protein de novo sequencing, transcript assembly, protein homology search, protein-protein interaction prediction and functional prediction of variants.

Jie Tang is a WeBank Chair Professor of the Department of Computer Science at Tsinghua University. His interests include artificial general intelligence, data mining, social networks, and machine learning. He is leading several major efforts on building large language models, e.g., GLM-130B, ChatGLM, CogView/CogVideo, CodeGeeX, with the open-sourced versions downloaded by more than 2,000,000 times all over the world. He also invented AMiner.cn, which has attracted 30,000,000 users from 220 countries/regions in the world. He has published 400+ articles in major computer science conferences and journals, and got the SIGKDD Test-of-Time Award.

REFERENCES

Author notes

Bo Chen and Ziwei Xie contributed equally to this work.