-

PDF

- Split View

-

Views

-

Cite

Cite

Sandipan Paul Arnab, Md Ruhul Amin, Michael DeGiorgio, Uncovering Footprints of Natural Selection Through Spectral Analysis of Genomic Summary Statistics, Molecular Biology and Evolution, Volume 40, Issue 7, July 2023, msad157, https://doi.org/10.1093/molbev/msad157

Close - Share Icon Share

Abstract

Natural selection leaves a spatial pattern along the genome, with a haplotype distribution distortion near the selected locus that fades with distance. Evaluating the spatial signal of a population-genetic summary statistic across the genome allows for patterns of natural selection to be distinguished from neutrality. Considering the genomic spatial distribution of multiple summary statistics is expected to aid in uncovering subtle signatures of selection. In recent years, numerous methods have been devised that consider genomic spatial distributions across summary statistics, utilizing both classical machine learning and deep learning architectures. However, better predictions may be attainable by improving the way in which features are extracted from these summary statistics. We apply wavelet transform, multitaper spectral analysis, and S-transform to summary statistic arrays to achieve this goal. Each analysis method converts one-dimensional summary statistic arrays to two-dimensional images of spectral analysis, allowing simultaneous temporal and spectral assessment. We feed these images into convolutional neural networks and consider combining models using ensemble stacking. Our modeling framework achieves high accuracy and power across a diverse set of evolutionary settings, including population size changes and test sets of varying sweep strength, softness, and timing. A scan of central European whole-genome sequences recapitulated well-established sweep candidates and predicted novel cancer-associated genes as sweeps with high support. Given that this modeling framework is also robust to missing genomic segments, we believe that it will represent a welcome addition to the population-genomic toolkit for learning about adaptive processes from genomic data.

Introduction

A number of phenomena shape genomic diversity, including nonadaptive processes, such as mutation, recombination, genetic drift, and migration as well as adaptive processes, such as positive, negative, and balancing selection (Gillespie 2004). Many of these events leave local footprints of altered haplotypic variation across individuals in populations, restructuring the landscape of diversity across the genome (Fay et al. 2001; Prezeworski et al. 2005; Charlesworth 2006; Schlamp et al. 2016). To learn about such processes, myriad summary statistics have been developed over decades, providing tools for testing whether patterns in genetic variation match expectations, either from theoretical models or from mean patterns observed from simulations (e.g., Tajima 1983; Garud et al. 2015). One of the most extensively studied population-genetic phenomena that has received substantial attention in terms of method development over the past few decades is natural selection.

Natural selection is a process that acts on traits of individuals within an environment, leading to differential fitness among individuals that may result in changes in the frequencies of alleles that code for such traits within a population (Gillespie 2004). Genomic studies of a wide range of populations and species have been analyzed using a variety of summary statistic methodologies to search for signatures of natural selection (e.g., Glinka et al. 2003; Lucas et al. 2019; Xue et al. 2021). Summary statistics developed throughout the past several years rely heavily on the haplotype frequency spectrum (e.g., Garud et al. 2015), whereas more classical summaries focused more on the site frequency spectrum (e.g., Tajima 1983). These varied approaches interrogate different aspects of genomic variation, and lend greater ability to detect specific forms of adaptation (Vitti et al. 2013).

However, such summary statistics typically make simplifying assumptions about expected patterns of variation, and can be both underpowered and nonrobust to confounding factors when applied individually. To overcome the pitfalls associated with using a single summary statistic to uncover signals of evolutionary processes, combining the knowledge garnered from a plethora of summary statistics has become an emerging trend (Schrider and Kern 2018). Specifically, the recent expansion of modeling frameworks that combine sets of measured values to discriminate among diverse evolutionary scenarios is owed to the advancement of computational technologies and resurgence of statistical machine learning and artificial intelligence.

The goal of supervised machine learning is to provide algorithms with a dataset of known input (feature) and output (response) values, with the goal to learn the relationship (or function) that maps measured features to a given response (Hastie et al. 2009). This learned function is the model, and the shape of the function is estimated (trained) from the dataset of input and output examples, termed the training set. This model can then be deployed to make predictions on new input data. The taxonomy of supervised learning algorithms can be further split into regression and classification tasks, which depend on whether the response is a quantitative (regression) or qualitative (classification) value (Hastie et al. 2009). Different machine learning algorithms make varying assumptions regarding the form of this function, which ultimately influences the predictive accuracy of the trained models. Commonly employed supervised machine learning methods include linear regression (Weisberg 2005), logistic regression (Kleinbaum et al. 2002), decision trees (Safavian and Landgrebe 1991), random forests (Breiman 2001), support vector machines (Hearst et al. 1998), and neural networks (Müller et al. 1995).

The predictive models based on the application of supervised machine learning to problems in evolutionary genomics have been shown to typically offer greater detection power and accuracy, while also combating the drawbacks of individual hand-engineered summary statistics (e.g., Lin et al. 2011; Schrider and Kern 2016; Sheehan and Song 2016; Kern and Schrider 2018; Sugden et al. 2018; Mughal and DeGiorgio 2019; Mughal et al. 2020). These machine learning techniques employ diverse modeling paradigms, and have differing performances and robustness to confounding factors depending on how the data are modeled as well as the types of summary statistics that are used as input to the models. Thus, all methods show room for improvement in prediction performance.

To glean more information from input summary statistics, many of these models (e.g., Lin et al. 2011; Schrider and Kern 2016; Sheehan and Song 2016) construct feature sets so that they capture the expected spatial autocorrelation of variation in a local genomic region. That is, the input summary statistics are calculated over a number of contiguous or overlapping genomic windows with the hope that the machine learning models will discover relationships among various statistics calculated across different windows to aid in prediction. However, explicitly modeling these autocorrelations may have the potential for improving prediction performance. As an example, Mughal et al. (2020) developed a method for learning about positive natural selection by utilizing multiple summary statistics computed in overlapping genomic windows as input, and then modeled the autocorrelation across these windows by estimating the underlying continuous functional form of each summary statistic. Specifically, Mughal et al. (2020) employed a spectral analysis technique termed the discrete wavelet transform, which decomposed the summary statistic vectors in the form of multilevel details of constituent low- and high-frequency regions, enabling additional meaningful information to be extracted from the summary statistics.

Spectral analysis of signals has been extensively applied in various domains, including biomedical sciences (O’Brien et al. 2019), power systems (Khan and Pierre 2018), and seismography (Puryear et al. 2012), to extract information about the source (or process) responsible for the generation of the examined signals from their oscillatory characteristics. One way to extract information from the signal is to divide the signal into time-localized components and examine each part of the signal independently though spectra. Different spectral analysis methods focus on different characteristics of a signal (Xiang and Hu 2012), and thus, images of the characteristics identified by different spectral analysis methods can be used as input to established modeling frameworks that are able to extract meaningful information and make accurate predictions. One mechanism for attempting to learn such features is with supervised machine learning models known as convolutional neural networks (CNNs, LeCun et al. 1998).

Neural networks are a class of machine learning architectures that are inspired by the structure and function of the human brain. They consist of layers of interconnected nodes termed neurons, which process information in a way that is similar to how neurons in the brain process information. Such models can be used for a wide range of predictive modeling tasks that involve large amounts of data and complex relationships between the measured features and a predicted response. CNNs are a subclass of neural networks architectures that are effective for applications requiring image recognition and processing.

Multilayered CNNs process data in a hierarchical fashion through a network of nodes. When the input is an image, the first layer can identify simple features, such as edges and corners of objects in the image, whereas successive layers may identify more complicated features, such as shapes or higher-order objects, by building upon features learned from previous layers (LeCun et al. 1998). The final layer of the CNN makes a prediction using the identified features from the input image. To learn features from input images, CNNs rely on convolutions, which involve sliding a filter of a given size over the image and computing the dot product between the filter and each matching patch of pixels in the image (LeCun et al. 1998). Through this process, the network is able to identify invariant local patterns and features. Other layers, including pooling layers and activation layers, are also used in CNNs. Downsampling the output of the convolutional layers with pooling layers makes feature maps more precise, invariant to object orientation, and robust to noise, as well as makes the network more accurate. Networks learn more complicated relationships between features and the response through activation layers, which introduce nonlinearity to the network (LeCun et al. 1998). In the field of image recognition, CNNs have proven to be highly effective, often outperforming human experts on a variety of classification tasks (De Man et al. 2019).

CNNs offer a framework for extracting features from inputs that can be one-dimensional vectors, two-dimensional matrices (or grayscale images), and three-dimensional tensors (or color images) (LeCun et al. 1998). Several studies have shown the effectiveness of CNNs for detecting evolutionary events for both one- and two-dimensional signals (Schrider and Kern 2016; Flagel et al. 2019; Torada et al. 2019; Gower et al. 2021). Indeed, CNNs have been applied in the context of learning about evolutionary processes from image representations of haplotype variation, and have been demonstrated to often have greater power and accuracy compared to the current state-of-the-art summary statistic-based methods (Flagel et al. 2019; Isildak et al. 2021). A hybrid application of using two-dimensional spectra generated through signal decomposition to train CNNs has the potential to empower the CNNs to make more effective predictive models. To employ this modeling strategy, one-dimensional summary statistic signals need to be converted into two-dimensional spectra (Cohen 1995; Sejdi et al. 2009), which provide information about the spectral estimates of the underlying source (or process) that generates genomic variation.

Therefore, we seek to improve evolutionary process classifiers, by adding a layer of spectral inference of the underlying process generating the genetic variation. To that end, we use the detection of positive natural selection as a test case, as this setting is where the majority of population-genetic machine learning development has focused, and thus represents a test case for illustrating the performance gains by modeling input data differently. Positive natural selection increases the frequencies of alleles in a population that code for beneficial traits, potentially leading to fixation within the population and ultimately reducing diversity at the selected locus (Gillespie 2004). As this beneficial allele increases in frequency, alleles on the same haplotype at nearby neutral loci also increase in frequency through a process known as genetic hitchhiking (Smith and Haigh 1974). The resulting loss of haplotypic diversity around the selected locus is known as a selective sweep (Przeworski 2002; Hermisson and Pennings 2005), and is a footprint that is often used to uncover signals of past positive selection. Depending on the number of distinct haplotypes that have risen to high frequency, selective sweeps can be categorized as either soft or hard, with hard sweeps typically easier to detect due to their more conspicuous genomic pattern (Przeworski 2002; Hermisson and Pennings 2005; Garud et al. 2015).

In this article, we examine the utility of applying three signal decomposition methods on arrays of summary statistics computed across overlapping windows to generate spectra (Thomson 1982; Daubechies 1992; Stockwell et al. 1996), and develop machine learning methods trained with these images. We additionally employ ensemble-based stacking procedures (Hastie et al. 2009) that aggregate the results of individual classifiers with the goal of further improving power and accuracy to detect sweeps from genome variation. With this in mind, we introduce an approach termed SISSSCO (Spectral Inference of Summary Statistic Signals using COnvolutional neural networks) with open-source implementation available at https://www.github.com/sandipanpaul06/SISSSCO. As an empirical test case, we then apply our trained SISSSCO models to whole-genome data of the well-studied central European human individuals sequenced by the 1000 Genomes Project (The 1000 Genomes Project Consortium 2015). SISSSCO identifies multiple genes, including LCT, ABCA12, SLC45A2, HLA-DRB6, and HCG9, which have been identified as sweep candidates from previous studies. SISSSCO also identified several novel sweep candidates, including PDPN, WASF2, LRIG2, and SDAD1.

Results

In this section, we begin by highlighting power and accuracy to detect selective sweeps using various strategies that combine different spectral decompositions of summary statistic signals as well as stacking of trained CNN architectures. We also compare the performance of these approaches with other contemporary machine learning methods that take summary statistics as input to detect sweeps. We then investigate how confounding factors, like changing population sizes over time, the existence of missing genomic segments, and background selection, influence predictive accuracy, power, and robustness. Finally, as a proof of concept, we test our new approaches using a genomic dataset from a human population that has been extensively studied.

Modeling Description

To train and test our models, we simulated neutral and sweep replicate observations using the coalescent simulator discoal (Kern and Schrider 2016) under either an equilibrium constant-size demographic history of 10,000 diploid individuals (Takahata 1993) or under a nonequilibrium history inferred from central European human genomes (Terhorst et al. 2017) that includes a recent severe population bottleneck. Per-site per-generation mutation () and recombination rates (exponential distribution with mean and truncated at ) were chosen to reflect expectations from human genomes and previous studies (Payseur and Nachman 2000; Scally and Durbin 2012; Schrider and Kern 2016). For each simulated replicate, we sampled 198 haplotypes of length 1.1 megabase (Mb) to match the number of sampled haplotypes in our empirical experiments.

At the center of simulated sequences for sweep observations, we introduced a beneficial mutation that became selected for at a frequency of (drawn uniformly at random on a logarithmic scale) with per-generation selection coefficient (drawn uniformly at random on a logarithmic scale) and became fixed in the population t generations prior to sampling. For each of the two demographic scenarios, we generated two datasets: one with the sweep completing at time of sampling ( generations) and a setting that should be more difficult to distinguish from neutrality, with [0, 1,200] generations drawn uniformly at random, permitting the processes of mutation, recombination, and genetic drift to erode genomic footprints of the selective sweep after fixation. We denote these four datasets as Equilibrium_fixed, Equilibrium_variable, Nonequilibrium_fixed, and Nonequilibrium_variable, where the demographic history is given by either equilibrium (constant-size) or nonequilibrium (European human bottleneck), and the time of sampling after sweep completion is given by either as a fixed () or variable ([0, 1,200]) number of generations.

For each class (neutral or sweep), we generated 11,000 independent simulated replicate observations, with 9,000, 1,000, and 1,000 observations reserved for training, validation, and testing. For each replicate, we computed summary statistics across the simulated sequence to obtain nine one-dimensional signals to use as features for downstream modeling identical to the ones used in Mughal et al. (2020) (see Methods for summary statistic computation on simulated data). The initial summary statistic that we explored in our model training is the mean pairwise sequence difference (; Tajima 1983) estimated across sampled haplotypes. The dataset containing instances of computed as a one-dimensional signal of length 128 across a genomic sequence of neutral and selective sweep regions was used to test the efficacy of each of the three spectral analysis methods. These summary statistic signals of length 128 are based on short overlapping windows with a fixed number of single nucleotide polymorphisms (SNPs) per window, and a fixed SNP stride between windows (see Methods section). We calculated in overlapping windows with a goal to capture local patterns along a chromosome (see Methods section for details).

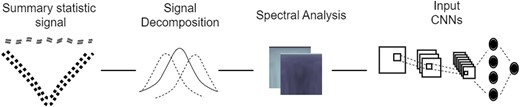

The two-dimensional images that we obtain by performing spectral analysis on a one-dimensional signal (e.g., ) are then fed into a CNN (LeCun et al. 1998), which is depicted in figure 1. The CNN has an input size of containing N training observations of c different summary statistic signals decomposed as images through spectral analysis. Here we have , , and . As we are currently only considering a single signal based on the statistic, we are using a channel input for our CNN. The CNN has two convolution layers with 32 filters, kernels of size (Agrawal and Mittal 2020), and a stride of two (Kong and Lucey 2017) with zero padding (Hashemi 2019). Each convolution layer is then followed by an activation layer using a rectified linear unit (ReLU), as well as a batch normalizing layer (Goodfellow et al. 2016). The convolution layers are followed by a dense layer containing 128 nodes, which is the same as the input signal length n. The dense layer also contains an elastic-net style regularization penalty (Zou and Hastie 2005), whereby network weights shrink in magnitude together toward zero through an -norm penalty while simultaneously performing feature selection by setting some weights to zero through an -norm penalty (Hastie et al. 2009). The fraction of regularization deriving from the -norm penalty is controlled by hyperparameter and the amount of total regularization is controlled by hyperparameter . The model also utilizes a dropout layer with dropout rate hyperparameter to further prevent model overfitting by reaching a saturation point (Srivastava et al. 2014; Goodfellow et al. 2016). The model is trained with each hyperparameter triple, with a batch size of 50 for 30 iterations, and the best model is chosen as the one with the smallest validation loss, where we employ the categorical cross-entropy loss measurement. We deployed the keras Python library (Chollet et al. 2015) with a TensorFlow (Abadi et al. 2015) back-end for training of CNNs and making downstream predictions from the learned models.

Depiction of a channel convolutional neural network (CNN) architecture. A summary statistic signal of length is used as input to a spectral analysis method (either wavelet decomposition, multitaper analysis, or S-transform) to decompose the signal into a matrix of dimensions , with , which is then standardized at each element based on the mean and standard deviation across all training observations, and is then used as input to a CNN. The CNN has two convolution layers (three layers for the S-transform), followed by a dense layer with n nodes containing both elastic-net and dropout regularization. The output layer of the CNN is a softmax that computes the probability of a sweep.

The first of three spectral analysis methods that we consider is wavelet decomposition. Specifically, we assume that each sequence of length represents a sample from a continuous wavelet containing n data points. This signal is then decomposed by a level m wavelet analysis method, with the Morlet wavelet (Bernardino and Santos-Victor 2005) selected as the mother wavelet. Level is chosen for the scalograms generated to match the size of the spectral images that result from the other two spectral analysis methods that we subsequently introduce. Every decomposed signal generates an dimensional scalogram matrix. A more detailed treatment of the wavelet decomposition for spectral analysis is provided in the Methods section, and we employed the PyWavelets Python package (Lee et al. 2019) to construct scalogram images.

Next, for the multitaper spectral analysis approach, to derive the periodogram of the estimate of the true power spectral density from a signal of size using the multitaper spectral analysis method, we used a window length of n. We calculated discrete prolate spheroidal sequence (DPSS) tapers over time half-bandwidth parameter () values in and a DPSS window size of , which results in a matrix of tapering windows of size and a vector of eigenvalues of length m. Here, is the bandwidth of the most dominant frequencies in the frequency domain such that . Using this matrix and vector, a periodogram of size is generated, which is the same as the dimension of the scalogram that we considered with the wavelet analysis method. See the Methods section for a complete detailed description of multitaper analysis. We utilized the spectrum Python package (Cokelaer and Hasch 2017) to generate multitaper periodogram images.

Finally, for spectral analysis using the Stockwell transform (also known as the S-transform) we used the same datasets as the previous two spectral analysis approaches. The S-transform returns a spectrogram matrix estimate of the true power spectral density that has size , where and where the length of the signal is . The spectrogram has the same image size as the previous two methods. See the Methods section for further details on the S-transform. We used the stockwell Python package (Satriano 2017) to estimate S-transform spectral images. The images are then fed into a CNN with identical architecture to that of the previous two methods with the addition of a third convolution layer, which we included as we found that adding this extra convolution layer substantially increased performance under the S-transform image inputs.

Application of Signal Decomposition

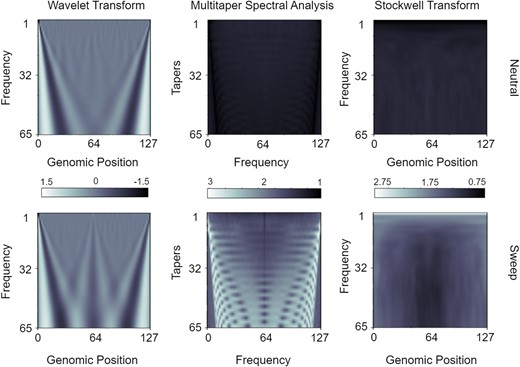

Supplementary figure S1, Supplementary Material online presents heatmaps of the raw spectral images, averaged across simulated replicates, for neutral and selective sweep regions using three signal decomposition methods. However, based on these raw images, it is difficult to visually distinguish between sweeps and neutrality for each of the spectral analysis methods. To better explore the visual differences within these matrices, we scaled each element of each spectral analysis matrix to have unit standard deviation across the neutral and sweep replicates. The mean scaled matrices depicted in figure 2 show the emergence of more-readily distinguishable patterns between sweeps and neutrality. The wavelet decomposition results display a clear distinction between the two classes, with a triangular bulge in the mid-segment of the sweep scalogram that is not present within the neutral scalogram. This pattern indicates that the selective sweep signals have information in the middle windows between windows 45 and 85 that is not present in neutral signals. Similarly, the mean sweep spectrogram generated by S-transform shows a T-shaped construct in the midportion of the image, again indicating a difference of power between the classes of some low- to mid-frequency components in the central windows. The mean spectra generated by multitaper analysis depict a rib-cage like structure in the mean sweep periodogram. Each ‘rib’ represents a Fourier transformation of a signal tapered by a single taper. The frequency of the taper increases as we descend the rows of the image, whereas the amplitude of the central window of the taper decreases. Hence, a signal tapered by higher frequency tapers generate a distorted representation of the signal. As the frequencies of the tapers increase, more low- and high-frequency components in the sweep signal are lost, resulting in a narrower spectral density. These characteristics of the tapers lead to the the rib-cage structure depicted in the mean sweep image.

Mean spectral analysis input matrices for windows of the mean pairwise sequence differences across the neutral and sweep replicates under the Equilibrium_fixed dataset containing an equilibrium constant-size demographic history and a sweep that completed generations before sampling. Top row are neutral simulations and bottom row are sweep simulations. Spectral methods are depicted from left to right columns for the wavelet decomposition, multitaper analysis, and the S-transform, respectively. Elements of each matrix have been scaled to have a standard deviation of one across all N simulated replicates for a given spectral analysis method.

The standardized (combined centering and scaling) images in supplementary figure S2, Supplementary Materialonline that are ultimately used as input to CNNs show that the classes can be easily visually differentiated as the images show exactly opposite patterns for the two classes, with the images for neutral regions having lower values for the majority of the area in the images. These opposite patterns are due to centering. A peach pit shape is present in the center of both mean sweep and neutral spectrograms generated by the S-transform, albeit represented by two distinctly different shades corresponding to positive and negative values, respectively. Several mid- and low-frequency components are present in the central windows of the sweep samples, which results in the bright core of the peach pit in the mean sweep image. The rib-cage structure is also present in mean spectra of both classes in the images created by multitaper analysis, with different shades for the two classes corresponding mostly to positive and negative values.

Figure 2, supplementary figures S1 and S2, Supplementary Material online highlight the qualitative patterns in images derived from neutral and sweep settings that result from three different spectral analysis methods applied to a sequence of values calculated across overlapping genomic windows. Given that these images show qualitative differences between sweeps and neutrality, our goal is to evaluate the predictive ability of discriminating between sweeps and neutrality from such input images. These mean images suggest that there exists useful information within the spectral images that may help distinguish between the two classes. Nevertheless, it may be difficult to spot anomalies by looking at the individual spectral analysis images, especially if it is important to distinguish between classes while remaining resistant to artifacts. Therefore, we used the CNN architecture described above in the Modeling description subsection. We fed the images derived from application of the three spectral analysis methods to a sequence of values to evaluate classification rates and accuracies. Supplementary figure S3, Supplementary Material online shows that the models trained on wavelet analysis scalogram and S-transform spectrogram images have an imbalance in their classification rates, with skews toward detecting neutral regions more accurately than the sweep regions. In contrast, the model trained on multitaper analysis periodogram images with a time half-bandwidth parameter of 2.5 displays greater accuracy for correctly estimating sweeps compared to neutral regions, whereas changing the time half-bandwidth parameter to 2.0 or lower results in classification rates more skewed toward correctly detecting neutrality. Because we want to avoid false discoveries of sweeps, higher time half-bandwidth parameter values are more expensive computationally, and time half-bandwidth parameters higher than 2.5 did not change performance significantly in our preliminary tests, we selected 2.0 for future multitaper experiments.

Stacking Models to Enhance Sweep Detection

We have three models trained with three signal decomposition methods that have yielded comparable but slightly differing results (supplementary fig. S3, Supplementary Material online). We now discuss architectures to increase the learning capacity of our models when trained to jointly consider all three spectra. Our previous experiments explored a single summary statistic signal () to decompose and train the models with spectra. Following Mughal et al. (2020), we next compute nine one-dimensional summary statistic signals (, , , and frequencies of the 5 most common haplotypes) per simulated replicate and generate 9 spectra for each of the 3 spectral analysis methods, resulting in 27 different images.

The first joint modeling approach taken was to train three separate models using three signal decomposition methods with nine images per replicate provided as input to a CNN, with one image for each of the channels of the CNN (supplementary fig. S4, Supplementary Material online). These models were then concatenated and trained in three different ways. The first of these three strategies is to train each of the three nine-channel CNNs, fix the weights of the trained CNNs, and concatenate their output layers (sweep probability values) into a three-element vector of sweep probabilities. The linear combination of these sweep probabilities is then used as input to a new softmax function to predict the probability of a sweep from evidence of the three pretrained CNNs. The final weights of the linear combination leading to the new softmax function are trained, and we denote this method by SISSSCO[3CO] (three-input CNNs and concatenation of the output layer). The weights of the three individually trained CNNs are not retrained in the final model. A depiction of this SISSSCO[3CO] architecture is given in supplementary figure S5, Supplementary Material online. In the next strategy, we instead concatenated the dense layers of the three nine-channel CNNs, leading to a vector of elements that we send to a new softmax layer as in the SISSSCO[3CO] method. As with SISSSCO[3CO], we trained the weights of the linear combination leading from the concatenation of the dense layers to the new softmax function, but did not retrain the weights of the three individually trained CNNs, and we denote this method by SISSSCO[3CD] (three-input CNNs and concatenation of the dense layer). A depiction of the SISSSCO[3CD] architecture is given in supplementary figure S6, Supplementary Material online. The third and final strategy, has an identical architecture of the SISSSCO[3CD] model, with one key difference—the weights of the entire concatenated model are jointly trained. We denote this method by SISSSCO[3MD] (three-input CNNs and merging of the dense layer prior to training). A depiction of the SISSSCO[3MD] architecture is given in supplementary figure S7, Supplementary Material online.

The second joint modeling approach is more complex than the first. Specifically, we construct 3 CNNs per summary statistic based on the 3 signal decomposition methods, resulting in 27 distinct CNNs each with channel (fig. 1). Similar to the previous concatenation strategies, the concatenation and training were accomplished in an identical fashion by pretraining individual CNNs and concatenating output layers (model denoted by SISSSCO[27CO]), pretraining individual CNNs and concatenating dense layers (model denoted by SISSSCO[27CD]), and concatenating dense layers of individual CNNs with all weights in the subsequent merged model trained (model denoted by SISSSCO[27MD]). Both SISSSCO[27CD] and SISSSCO[27MD] methods result in the most complex final models, with the dense layer containing nodes. Though SISSSCO[27CD] and SISSSCO[27MD] have the same number of concatenated dense layer nodes, the node weights are not set prior to concatenation for SISSSCO[27MD], making SISSSCO[27MD] the most computationally expensive method among all the six models. To further elaborate, SISSSCO[27CD] and SISSSCO[27MD] each have a total of 83,98,818 parameters, of which are trainable postconcatenation for SISSSCO[27CD], whereas SISSSCO[27CO] has 83,98,589 parameters of which 27 are trainable postconcatenation. The architectures of the SISSSCO[27CO], SISSSCO[27CD], and SISSSCO[27MD] models are depicted in supplementary figure S8, Supplementary Material online, figure 3, and supplementary figure S9, Supplementary Material online, respectively. In the next subsection, we evaluate the accuracies and powers of the six SISSSCO models on idealistic constant-size demographic history datasets.

![Depiction of the SISSSCO[27CD] model. Each summary statistic signal (π^, H1, H12, H2/H1 and frequencies of the first five most common haplotypes respectively denoted by P1 to P5) of length n=128 is used as input to each of the three spectral analysis method (wavelet decomposition, multitaper analysis, and S-transform) to decompose the signal into three matrices of dimension m×n, with m=65, which are then each standardized at each element based on the mean and standard deviation across all N=18,000 training observations. These 27 images (9 statistics across 3 spectral analysis methods) each used as input to train 27 independent convolutional neural networks (CNNs). The CNNs have two convolution layers (three layers for the S-transform), followed by a dense layer with n nodes containing both elastic-net and dropout regularization. The output layer of the CNN is a softmax that computes the probability of a sweep. After training, the model parameters are fixed, and the dense layers of the 27 CNNs are concatenated and these 27n=3,456 nodes are used as input to a new output layer, which computes the probability of a sweep as a softmax.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/mbe/40/7/10.1093_molbev_msad157/1/m_msad157f3.jpeg?Expires=1749000785&Signature=JncWqfXGK3~bRqF1TEP4CZ5Xh303rQkZH~7Y8YaCjefKVP3r7hOWu4C3XRqy2GyYLCr5xhPsWU~kj57FiNDmcs-YsAUfZ~nudYiLIyyre8b5RAXwjZGDIBdQ8XiyOq8yxn89tGckzAPYf~P3NT3Kr6ZuG6UbW--rPcNE~wrf6awad9WgT4E2owTFL4YJiHP5XmwZk8moK3AuZY~nZ1CcJGMxeEAn1oCk40cT65q8KrYrK-BbaNG7vLuxXnpRu4TJk~IlKOtH~vHyi34utEB1DInwtKrujsHb72Rl6Xb7bfpWXEriwgEYLPnlMzxIwlL6QzQ8I5OPJs2VE9FsozEH5Q__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Depiction of the SISSSCO[27CD] model. Each summary statistic signal (, , , and frequencies of the first five most common haplotypes respectively denoted by to ) of length is used as input to each of the three spectral analysis method (wavelet decomposition, multitaper analysis, and S-transform) to decompose the signal into three matrices of dimension , with , which are then each standardized at each element based on the mean and standard deviation across all training observations. These 27 images (9 statistics across 3 spectral analysis methods) each used as input to train 27 independent convolutional neural networks (CNNs). The CNNs have two convolution layers (three layers for the S-transform), followed by a dense layer with n nodes containing both elastic-net and dropout regularization. The output layer of the CNN is a softmax that computes the probability of a sweep. After training, the model parameters are fixed, and the dense layers of the 27 CNNs are concatenated and these nodes are used as input to a new output layer, which computes the probability of a sweep as a softmax.

Power and Accuracy to Detect Sweeps

All of our six SISSSCO models have high classification accuracies and powers on the two constant-size demographic history datasets (supplementary figs. S10–S13, Supplementary Material online). Of these, SISSSCO[27CD] exhibited uniformly highest accuracy to discriminate sweeps from neutrality, reaching 99.75% and 99.80% accuracy on the Equilibrium_fixed and Equilibrium_variable datasets, respectively (supplementary figs. S10 and S12, Supplementary Material online). However, even the worst performing SISSSCO model had high accuracy on each dataset, with SISSSCO[3CD] achieving an accuracy of 96.50% and 95.45% on the Equilibrium_fixed and Equilibrium_variable datasets, respectively (supplementary figs. S10 and S12, Supplementary Material online). This lower classification accuracy of SISSSCO[3CD] compared to the other SISSSCO models appears to be primarily driven by a skew in misclassifying neutral regions as sweeps (supplementary figs. S10 and S12, Supplementary Material online).

The accuracy results are also reflected in the high powers of the SISSSCO models to detect sweeps based on receiver operating characteristic (ROC) curves (supplementary figs. S11 and S13, Supplementary Material online). ROC curves are graphical representations that display the tradeoff between the true positive rate and the false positive rate of a binary classifier as the discrimination threshold changes. Specifically, SISSSCO[27CD] achieves an area under the ROC curve of close to one for both datasets (supplementary figs. S11 and S13, Supplementary Material online), suggesting that it has perfect power to detect sweeps for even small false positive rates. Moreover, consistent with SISSSCO[3CD] having the lowest accuracy among the six SISSSCO models, the ROC curves show that SISSSCO[3CD] reaches high power for low false positive rates, but plateaus at this level until high false positive rates (supplementary figs. S11 and S13, Supplementary Material online), reducing the overall area under the ROC curve compared to the other SISSSCO models. The results show that, though all SISSSCO models have high powers and accuracies for sweep detection, the most parameter rich (yet not most computationally expensive) SISSSCO[27CD] model outperforms all others developed here on the constant-size demographic history datasets (supplementary figs. S10–S13, Supplementary Material online).

ROC curves are helpful for determining the optimal threshold and assessing the overall performance of a classifier. In contrast, confusion matrices display classification performance for only one possible choice for the threshold. Specifically, the confusion matrices presented here employ a sweep probability threshold of 0.5, such that predicted probabilities greater than 0.5 are classified as a sweep, and otherwise are classified as neutral. Adjusting this default threshold of 0.5 would modulate method accuracy and robustness to false discoveries. For the confusion matrices, we have assigned the class label (neutral or sweep) that has the larger probability conditional on the input data—that is, we choose label such that is maximal for input X.

Performance Relative to Comparable Methods

We tested the classification performance of our models against three state-of-the-art methods that employ summary statistics as input: SURFDAWave (Mughal et al. 2020), diploS/HIC (Schrider and Kern 2016), and evolBoosting (Lin et al. 2011). SURFDAWave is a wavelet-based classification method that takes as input nine summary statistic arrays, exactly the ones that we have used for our study, and learns the functional form of the spatial distribution of each summary statistic using a wavelet basis expansion to represent the autocorrelation within a summary statistic across the genome. The method then uses estimated wavelet coefficients as input to elastic-net logistic regression models for classifying selective sweeps and predicting adaptive parameters.

On the other hand, to detect selective sweeps, diploS/HIC takes a complementary deep learning approach to extract additional information from arrays of different features of population-genetic variation. In particular, the deep CNN classifier used in diploS/HIC takes images of a set of multidimensional summary statistic vectors calculated in 11 windows, with the central window denoted as the target. The set of summary statistics considered is different from SURFDWave, instead employing a set of summary statistics that assesses nucleotide and multilocus genotype variation without the need for phased haplotypes.

Furthermore, evolBoosting also uses arrays of different summary statistics as input and applies boosting to detect selective sweeps from neutrality. The purpose of the boosting (Schapire 1999) ensemble technique is to create an optimum combination of simple classification rules obtained from the base classifiers (Hastie et al. 2009), which are themselves quite simple and not particularly accurate. This strategy is inspired by the observation that, in most cases, an ensemble of basic rules can outperform classifiers individually (Schapire 1999). Boosting involves fitting data instances to a model, and training the model in a series. Incorrect predictions are used to train a subsequent model. Each newly added base model improves prediction error by accounting for error that was not captured by the set of prior base models. At each iteration, the less reliable rules of each base classifier are aggregated into a single, more reliable rule.

These three methods consider both linear and nonlinear classification strategies, with SURFDAWave employing a linear model and diploS/HIC and evolBoosting nonlinear approaches. We applied these three methods using their default settings, such as window lengths, window sizes, sets of features, and summary statistic generation and usage. It is important to note that diploS/HIC was originally developed to discriminate among five classes: soft sweeps, hard sweeps, linked soft sweeps, linked hard sweeps, and neutrality. As in Mughal et al. (2020), we retooled the method as a binary classifier to distinguish selective sweeps from neutrality given input summary statistics.

On both the Equilibrium_fixed and Equilibrium_variable datasets, SURFDAWave, diploS/HIC, and evolBoosting achieved relatively high accuracy to discriminate sweeps from neutrality, with the lowest of them (evolBoosting) achieving an accuracy of 97% and 95% on the Equilibrium_fixed and Equilibrium_variable datasets, respectively (supplementary figs. S10 and S12, Supplementary Material online). SURFDAWave had highest accuracy among the three methods on each dataset, achieving an accuracy of 97.95% and 97.60% on the Equilibrium_fixed and Equilibrium_variable datasets, respectively (supplementary figs. S10 and S12, Supplementary Material online). The marginally lower accuracies of evolBoosting and diploS/HIC compared to SURFDAWave appears to be due to an imbalance in their predictions, with extremely high accuracy at correctly classifying neutrality coupled with elevated misclassification rates of sweeps as neutral (supplementary figs. S10 and S12, Supplementary Material online). However, this skew toward misclassifying sweeps as neutral is conservative, and is substantially more desirable than a skew toward falsely discovering neutral regions as sweeps. Moreover, as expected, each method had a decrease in accuracy on the more challenging Equilibrium_variable dataset (supplementary fig. S12, Supplementary Material online) relative to the Equilibrium_fixed dataset (supplementary fig. S10, Supplementary Material online). In comparison with SISSSCO, four of the SISSSCO models had higher accuracy than the competing methods on the Equilibrium_fixed dataset (supplementary fig. S10, Supplementary Material online), whereas three of them showed higher accuracy on the Equilibrium_variable dataset (supplementary fig. S12, Supplementary Material online).

In terms of method power, SURFDAWave, evolBoosting, and diploS/HIC tended to exhibit marginally lower power than the SISSSCO models, yet generally still achieved similarly high levels of the area under the ROC curves as SISSSCO models on both datasets (supplementary figs. S11 and S13, Supplementary Material online). An exception is evolBoosting, which displayed substantially lower area under the ROC curve compared to other methods, achieving a power (true positive rate) close to one for false positive rates close to 0.2, whereas all other methods attained power close to one for false positive rates less than 0.05. These results suggest that under the constant-size demographic history and selection setting explored here, several SISSSCO models had higher classification accuracies and powers compared to other leading machine learning methods that use as input summary statistics for detecting sweeps. Moreover, the SISSSCO[27CD] model achieves near perfect classification accuracy and power.

Robustness to Background Selection

A ubiquitous force affecting genetic variation across chromosomes is background selection (McVicker et al. 2009; Comeron 2014), which results from the purging of deleterious genetic variants by negative selection (Charlesworth et al. 1993; Hudson and Kaplan 1995; Charlesworth 2012). Importantly, background selection has historically been a confounding factor when searching for sweep footprints from allelic variation, as it can lead to distortions in the distribution of allele frequencies that masquerade as positive selection (Charlesworth et al. 1993, 1995, 1997; Keinan and Reich 2010; Seger et al. 2010; Nicolaisen and Desai 2013; Huber et al. 2016). However, though background selection is unlikely to leave prominent signatures of low haplotypic variation (Charlesworth et al. 1993; Charlesworth 2012; Enard et al. 2014; Fagny et al. 2014; Schrider 2020), it is nevertheless important to explore whether SISSSCO is robust to this common selective force.

To investigate the effect of background selection on model performance, we generated 1,000 test replicates that matched the demographic history and genetic parameters of the Equilibrium_variable dataset using the forward-time simulator SLiM (Haller and Messer 2019), and evolved the simulated population for 120,000 generations (12 times the diploid size), which included a 100,000 generation burn-in period (10 times the diploid size) with 20,000 generations of evolution afterward. Following Cheng et al. (2017), we simulated background selection where recessive () deleterious mutations, with selection coefficients (s) drawn from a gamma distribution with mean of and shape parameter of , are distributed across a protein-coding gene of length 55 kilobases located at the center of the simulated 1.1 Mb region. This simulated gene consists of 50 exons each of length 100 bases, 49 introns each of length 1,000 bases, an upstream untranslated region (UTR) of length 200 bases, and a downstream UTR of length 800 bases, with the lengths of these elements approximately matching mean human values (Mignone et al. 2002; Sakharkar et al. 2004). Within this gene, 75% of mutations in exons are deleterious, 10% in introns are deleterious, and 50% in and UTRs are deleterious. We then computed summary statistics and corresponding spectral analysis images from the 198 haplotypes sampled from each simulated replicate in an identical manner to those used to train SISSSCO, and then fed sets of spectral images as input to the SISSSCO models trained on the Equilibrium_variable dataset. As expected, we find that all SISSSCO models are robust to background selection, with the proportion of false sweep signals due to background selection mirroring closely the false positive rate from neutral simulations, and all methods classifying over 96% of background selection replicates as neutral (supplementary fig. S14, Supplementary Material online).

Influence of Population Size Changes

Our prior experiments have highlighted the excellent classification accuracies and powers for the SISSSCO models. However, such test settings were idealistic, in which there has been no demographic changes over time—in contrast to the expectation for real populations. We therefore trained and tested our models on a demographic history estimated from the well-studied human central European population (CEU) from the 1000 Genomes Project dataset (The 1000 Genomes Project Consortium 2015), for which there is extensive evidence of severe population size changes in recent history (Terhorst et al. 2017).

As with the idealistic constant-size demographic histories, we trained our methods on the Nonequilibrium_fixed and Nonequilibrium_variable datasets, which differ by whether the time that the sweep completed was fixed at generations before sampling or variable and drawn from a distribution [0, 1,200] generations in the past, respectively. The latter dataset represents a setting that should be more difficult, as it leads to blurring of the boundaries between the sweep and neutral classes. Moreover, we deployed the six SISSSCO models as well as the comparison methods (SURFDAWAave, diploS/HIC, and evolBoosting) with identical architectures, training paradigms, and quantity of train, test, and validation data as for the constant population size experiments.

Similarly to the constant-size setting, SISSSCO[27CD] displayed near perfect accuracy of 99.9% and 99.5% to discriminate sweeps from neutrality on the Nonequilibrium_fixed and Nonequilibrium_variable datasets, respectively (fig. 4 and supplementary fig. S15, Supplementary Material online). SISSSCO[27CD] also had uniformly highest accuracy across all tested SISSSCO and non-SISSSCO methods (fig. 4 and supplementary fig. S15, Supplementary Material online). Of the non-SISSSCO methods, highest accuracy was achieved by SURFDAWave (98.65%), and lowest by evolBoosting (94.50%) on the Nonequilibrium_fixed dataset (supplementary fig. S15, Supplementary Material online). On the Nonequilibrium_variable dataset we see the same pattern among the non-SISSSCO methods, with SURFDAWave achieving the highest accuracy (96.55%), and evolBoosting the lowest (93.00%) (fig. 4).

![Classification rates and accuracies as depicted by confusion matrices to differentiate sweeps from neutrality on the Nonequilibrium_variable dataset for the six SISSSCO architectures compared to SURFDAWave, diploS/HIC, and evolBoosting. The Nonequilibrium_variable dataset is based on the nonequilibrium recent strong bottleneck demographic history of central European humans (CEU population in the 1000 Genomes Project) and a sweep that completed t∈[0, 1,200] generations before sampling.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/mbe/40/7/10.1093_molbev_msad157/1/m_msad157f4.jpeg?Expires=1749000785&Signature=RvLvDIPS8FRmbjgfnw2oPQCRK-m6Vvy~3Vw60cbxXSRoLFjf96QFNv1tA6bOfIPbviNvVUN50SEmlxbSyL9X6WH4GStZruTVYDZWKlD0xyiouzCNnL1JdU2~EGPUksI0ZYgsNO1sLj3456ZyP--SVX8if9i6fav5ckCnt3DeP-z-zWwFyLrQQrAUnvKl1rbs0wqsQs8lGiUqoTNbelA4bSZjaGrEsZt46DdBfpkdJhaOrxSgGXt6YU801hBFDhEh4diVIY5vrWBsQGcLgYNKy8Jn0WCnUUlUC3YRkxiDSt5HV4n-O~CTlycF5KlVLv5WcV8VsTpL1LLMgoDJw5GIMQ__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Classification rates and accuracies as depicted by confusion matrices to differentiate sweeps from neutrality on the Nonequilibrium_variable dataset for the six SISSSCO architectures compared to SURFDAWave, diploS/HIC, and evolBoosting. The Nonequilibrium_variable dataset is based on the nonequilibrium recent strong bottleneck demographic history of central European humans (CEU population in the 1000 Genomes Project) and a sweep that completed [0, 1,200] generations before sampling.

The high classification accuracies on these datasets are echoed by their high powers to detect sweeps, with all methods aside from evolBoosting achieving areas under the ROC curves that are close to one on the Nonequilibrium_fixed dataset (supplementary fig. S16, Supplementary Material online). However, the Nonequilibrium_variable dataset was more challenging, with SISSSCO[27CD] the only method achieving near perfect area under the ROC curve, though SISSSCO[27MD] is close (right panel of fig. 5). For small false positive rates of less than 0.05, evolBoosting has the lowest power, followed by diploS/HIC and SURFDAWave having comparable powers, which have lower powers than the three-input SISSSCO models (SISSSCO[3CO], SISSSCO[3CD], and SISSSCO[3MD]), with the 27-input SISSSCO models (SISSSCO[27CO], SISSSCO[27CD], and SISSSCO[27MD]) harboring the highest overall powers (right panel of fig. 5). The decreased powers of some of the methods are reflected in the imbalance in classification rates demonstrated in figure 4, for which some methods have a skew toward misclassifying sweeps as neutral. However, as discussed for the constant-size demographic history results, such classification is conservative, as we wish to avoid the alternative skew toward false discovery of sweeps. Overall, our experiments point to SISSSCO[27CD] having near perfect accuracy and power on the two selection regimes simulated under the nonequilibrium recent strong population bottleneck demographic history.

![Power to detect sweeps as depicted by ROC curves on the Nonequilibrium_variable dataset for the six SISSSCO architectures compared to SURFDAWave, diploS/HIC, and evolBoosting. The Nonequilibrium_variable dataset is based on the nonequilibrium recent strong bottleneck demographic history of central European humans (CEU population in the 1000 Genomes Project) and a sweep that completed t∈[0, 1,200] generations before sampling. The right panel is a zoom in on the upper left-hand corners of the left panel.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/mbe/40/7/10.1093_molbev_msad157/1/m_msad157f5.jpeg?Expires=1749000785&Signature=luJoQ6MXsEIlczH3imiQXzDk7XncdhlRZlOjWZBVpfDl2~XTnu7cPT-9DoPIDOfwTIU2YL02tOgjmP~dUvzv1jYWSfmItYRjVqjjOveKEuJMhBfPHhMr8jAihx0f6l-9~w6zdnZbcaN2lEm8y63hzWg1CWmGQowuLF7upS9kXqQ6D4Zg~am4sEhIi9hCirfv0iNWw-O2GxCEj6lgVKIDX5QRHxwWEq60H5i-eDXnhTzl8xptdTyJbE47p-kNZTrtsWbLp7nw1jE4HNFMRTbHPpzh6Me5WIm9nR~tCgLLwO8SVuCPfkcYtjm7Y6o0GTg69Ns1jc2JPPiHX0mLpTiMEw__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Power to detect sweeps as depicted by ROC curves on the Nonequilibrium_variable dataset for the six SISSSCO architectures compared to SURFDAWave, diploS/HIC, and evolBoosting. The Nonequilibrium_variable dataset is based on the nonequilibrium recent strong bottleneck demographic history of central European humans (CEU population in the 1000 Genomes Project) and a sweep that completed [0, 1,200] generations before sampling. The right panel is a zoom in on the upper left-hand corners of the left panel.

Comparison to Summary- and Likelihood-based Sweep Detectors

To showcase the power to detect traces of selective sweeps by using spectral images, we compared SISSSCO against three state-of-the-art machine learning models that are also geared toward detecting adaptation from vectors of multiple summary statistics. To evaluate how SISSSCO fares against more traditional nonmachine learning sweep detectors, we compared our most consistently performing method (SISSSCO[27CD]) to the summary statistics (Garud et al. 2015) and Fay and Wu’s H (Fay and Wu 2003), as well as to the likelihood method SweepFinder2 (DeGiorgio et al. 2016) across all four datasets. We computed and H for different window sizes, considering windows of 25, 50, or 100 SNPs, and chose 50 SNP windows for comparison as they gave and H their highest powers. displayed higher power to detect sweeps compared to H and SweepFinder2 on three of the four datasets (supplementary fig. S17, Supplementary Material online), with H showing generally low power on all tested scenarios and SweepFinder2 having highest power among the three methods on the Equilibrium_variable dataset. The overall superior performance of , especially compared to SweepFinder2 is unsurprising. The reasoning is that our test datasets consider sweeps of differing degrees of softness and hardness, and was developed to detect hard and soft sweeps with similar efficiency, whereas SweepFinder2 employs a model of a recent hard sweep and has limited power on soft sweeps. Even with the general superior performance of compared to H and SweepFinder2, SISSSCO[27CD] has substantially higher power to detect sweeps compared to these three traditional methods on all four datasets.

Robustness to Missing Genomic Segments

The presence of missing genomic segments results from technical artifacts, and can lead to reductions in haplotypic diversity due to unobserved polymorphism. As such losses of local genomic variation can masquerade as selective sweep footprints, missing genomic segments may mislead methods that detect sweeps to falsely classify neutral genomic regions harboring missing segments as having undergone positive selection. Hence, our goal is to examine whether missing genomic segments within neutrally evolving test regions lead SISSSCO and non-SISSSCO methods to falsely identify them as selective sweeps, and whether such missing genomic segments hampers the ability of the methods to discriminate between sweeps and neutrality. We therefore simulated an independent set of discoal (Kern and Schrider 2016) replicates for neutral and sweep regions, and generated missing genomic segments from these new simulations. Specifically, we first followed the protocol of Mughal et al. (2020) by excluding approximately 30% of the SNPs in each simulated replicate, distributed evenly across 10 nonoverlapping genomic blocks of equal size containing approximately 3% of the SNPs in the replicate. The locations of these blocks are chosen uniformly at random, with a new location chosen for a block if it intersects with locations of previously placed blocks. To ensure disruption of genomic diversity near the locations that beneficial alleles are introduced in sweep replicates, we also made sure that at least one of these blocks overlaps with either the 200 SNPs to the left or 200 SNPs to the right of the center of the simulated sequences for each neutral and sweep test replicate. This simulation protocol allows us to evaluate how a sparse distribution of missing polymorphic sites that are spread across simulated genomic regions affects the ability to distinguish sweeps from neutrality.

We then computed summary statistics using the remaining 70% of SNPs in each replicate, with these statistics measured identically as for the training set using overlapping windows with a window length of 10 SNPs and a stride of three SNPs calculated over the 400 central SNP sites (200 to the left of the sequence center, and 200 to the right). These one-dimensional summary statistic arrays are then used to generate spectra through the three signal decomposition methods to produce the test dataset consisting of sweep and neutral regions with missing genomic segments.

Because the Nonequilibrium_variable dataset is the most complex and features a realistic demographic history, we sought to evaluate robustness to missing genomic segments on this dataset. We employ models from previous analyses that are trained without missing genomic segments (figs. 4 and 5) to these test datasets that contain missing genomic segments. As would be expected, the inclusion of missing genomic segments in the test dataset leads to a reduction in classification accuracy across all methods (fig. 6) compared to no missing segments (fig. 4). Most notably, diploS/HIC, SISSSCO[3MD], and evolBoosting experienced moderate to large reductions in accuracy to discriminate sweeps from neutrality, with reductions of 3.85%, 4.40%, and 5.00%, respectively (compare figs. 4 and 6). This reduction in accuracy appears to be primarily driven by an increase in misclassifying neutral regions as sweeps (fig. 6), for which evolBoosting displays a 23% misclassification rate of falsely detecting neutral regions as sweeps. Of the nine methods compared, SISSSCO[27MD] has the highest and near perfect accuracy on missing genomic segments of 99.95%, exceeding the classification performance of the SISSSCO[27CD] model that achieved accuracy of 99.50% without missing genomic segments but has only 97.90% with missing segments. Even on this challenging dataset, SISSSCO[27CD] and SISSSCO[27MD] have near perfect powers as evidenced by their near perfect areas under the ROC curves (supplementary fig. S18, Supplementary Material online). Therefore, the SISSSCO[27CD] and SISSSCO[27MD] models perform comparably well on missing genomic segments in terms of power, with SISSSCO[27MD] edging out SISSSCO[27CD] in terms of accuracy even though both methods exhibit high accuracy.

![Classification rates and accuracies as depicted by confusion matrices to differentiate sweeps from neutrality on the Nonequilibrium_variable dataset when test data contain missing genomic segments for the six SISSSCO architectures compared to SURFDAWave, diploS/HIC, and evolBoosting. The Nonequilibrium_variable dataset is based on the nonequilibrium recent strong bottleneck demographic history of central European humans (CEU population in the 1000 Genomes Project) and a sweep that completed t∈[0, 1,200] generations before sampling. Trained models are identical to those in figure 4 and fitted to training observations without missing data, but the test observations derive from sequences containing approximately 30% missing SNPs distributed evenly across 10 nonoverlapping segments.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/mbe/40/7/10.1093_molbev_msad157/1/m_msad157f6.jpeg?Expires=1749000785&Signature=0MF7akxxc4hi2movS319r5mOU~76MBhVbs~xXuCuOamyVS9xOZLM63-XiJe3V8U9o-VIh2E0gpc3Ocl3WfIolteX7rmWWPLaGEaVnmbiuHfd3-aoDwXxWMaGwxc4Dgcl1itHqxCR6tIZ5wo3dXMmQ0OQWnfmLBtkkjiaCHP6oE2V2DzdvOfn5Gg3GraVt6wLS2dL~KzGMIo7XHfwMfivkgBQNoUIvMDC4tSfDtl3qce0kzaqUHnN2xEpyIy~9yRA3VWapDCuiwXVqu8FpFeMFb2xny5wtpQ34cT7l5uzn4cXmIb7Bningi~-YB-T8firJaj3w4DDqX~2W1KXTrG1RA__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Classification rates and accuracies as depicted by confusion matrices to differentiate sweeps from neutrality on the Nonequilibrium_variable dataset when test data contain missing genomic segments for the six SISSSCO architectures compared to SURFDAWave, diploS/HIC, and evolBoosting. The Nonequilibrium_variable dataset is based on the nonequilibrium recent strong bottleneck demographic history of central European humans (CEU population in the 1000 Genomes Project) and a sweep that completed [0, 1,200] generations before sampling. Trained models are identical to those in figure 4 and fitted to training observations without missing data, but the test observations derive from sequences containing approximately 30% missing SNPs distributed evenly across 10 nonoverlapping segments.

As an alternate approach, we generated missing segments to mimic an empirical distribution of missing segments as in our empirical application to humans (see Processing empirical data subsection of the Methods section), where we define a missing segment as a 100 kb region of mean CRG (Centre for Genomic Regulation) mappability and alignability score lower than 0.9 (Talkowski et al. 2011). To generate missing data blocks in the simulated neutral and sweep test replicates, we first randomly selected one of the 22 human autosomes, with probability of selecting a given autosome weighted by its length from the hg19 human reference build. For the selected chromosome, we chose a starting genomic position for a 1.1 Mb segment uniformly at random, and scaled the genomic positions to begin at zero and end at one to match the format of the sequences simulated by discoal. If a random 1.1 Mb segment did not have at least one region of low mean CRG score, then a new segment was randomly drawn until one containing a region with low mean CRG score was found. We then removed SNPs at positions from a given simulated replicate that intersected with genomic stretches of low mean CRG scores. Removal of SNPs in this manner ensures that missing data blocks match the distribution of regions of low mean CRG scores in the human reference genome. We repeated this process for each simulated neutral and sweep test replicate. This distribution of missing genomic segments is substantially different from our prior missing segment distribution, with similar levels of mean missing SNPs across test replicates (on average 32.518% of SNPs discarded), but each 1.1 Mb segment typically only a few (and typically one) long blocks of missing SNPs in contrast to 10 short blocks.

We applied each of the six SISSSCO models and the three other competing methods to these test replicates with missing segments inspired by an empirical distribution. Supplementary figures S19 and S20, Supplementary Material online show that all methods suffer significantly from this distribution of missing segments. Among the SISSSCO models, SISSSCO[27CD], SISSSCO[27CO], and SISSSCO[3CO] had the highest classification accuracies and powers to detect sweeps, with these SISSSCO models still achieving high accuracies of 92.5%, 91.0%, and 91.0%, respectively. Moreover, SURFDAWave performed similarly to the high performing SISSSCO methods, with an accuracy of 91.5%. In contrast, the performances of evolBoosting and diploS/HIC were impacted most drastically, leading to generally low classification accuracies of 64.0% and 83.5%, respectively, and with evolBoosting demonstrating low power to detect sweeps. We attribute the reduced performances of diploS/HIC and evolBoosting on the settings of missing genomic segments to the fact that they operate on summary statistics that have been computed across physical-based genomic, as opposed to the SNP-based windows utilized by the SISSSCO models and SURFDAWave.

Effect of Signal Decomposition

To study the benefits of adding the layer of spectral inference within SISSSCO, we evaluated the accuracy and power of CNN models that take as input nine raw summary statistic vectors instead of 27 spectra. Specifically, we adapted the SISSSCO model architectures to construct four one-dimensional CNN models: a single CNN with nine channels (1D-CNN[1CNN]), nine pretrained single-channel CNNs with the output layers concatenated (1D-CNN[9CO]), nine pretrained single-channel CNNs with the dense layer concatenated (1D-CNN[9CD]), and nine simultaneously trained single-channel CNNs with the dense layer concatenated (1D-CNN[9MD]). We find that all four 1D-CNN methods have substantially lower classification accuracy and power than SISSSCO[27CD] on the Nonequilibrium_variable dataset (compare supplementary fig. S21, Supplementary Material online to fig. 4). Among the four 1D-CNN models, we found 1D-CNN[9MD] to have highest accuracy, which is approximately 5% lower than SISSSCO[27CD]. The powers of the 1D-CNN methods evidenced by the ROC curves echo the relative accuracies of the methods, with the ranking from worst to best performance given by 1D-CNN[1CNN], 1D-CNN[9CO], 1D-CNN[9CD], and 1D-CNN[9MD]. The powers demonstrated by the 1D-CNN architectures are dwarfed by SISSSCO[27CD], which displays a near perfect area under the ROC curve (supplementary fig. S21, Supplementary Material online). Though the SISSSCO models require significantly more time and computational resources to train compared to the 1D-CNN models, the improvement in model performance is quite considerable. Therefore, adding the layer of spectral inference appears to provide additional performance gains to SISSSCO compared to operating on the raw summary statistics.

Interpretability of the SISSSCO Models

Thus far, we have focused on the predictive ability of the SISSSCO models. However, interpretability of the models is also important. A mechanism that can facilitate interpretation is through computation of saliency maps (Zhai and Shah 2006). When discussing visual processing, the term “saliency” refers to the ability to recognize and differentiate individual aspects of an image, such as its pixels and resolution. These elements highlight the most visually compelling parts of an image. Saliency maps are a topographical representation of these locations, and their purpose is to reflect the degree of importance of a pixel to the human visual system. Therefore, to enhance interpretability of SISSSCO we generated aggregated saliency maps for SISSSCO[27CD] and visualize them as heatmaps (fig. 7). We used the GradientTape function from TensorFlow (Abadi et al. 2015) to calculate the gradients of variables based on the loss function that we chose. We constructed these maps by averaging the saliency maps of the 27 pretrained CNNs using all 18,000 training samples (9,000 per class), where the weight of the saliency map of a given CNN in the average is taken from the dense layer node weights that lead to the concatenated dense layer of SISSSCO[27CD]. We constructed three such heatmaps, where each map aggregates saliency maps generated by the nine individual CNNs trained on spectral images from one of the three signal decomposition methods, giving one heatmap for the wavelet decomposition, one for the multitaper analysis, and one for the S-transform. The saliency maps for the wavelet decomposition and the S-transform place emphasis on low-frequency oscillations to explain the underlying summary statistic signals, with the wavelet decomposition demonstrating a notable localization near the central window of the summary statistics, which is expected to be close to the selected locus. In contrast, the saliency map for the multitaper analysis exhibits a different pattern, placing most emphasis on the edges of the ribs in the rib-cage structure (recall the mean multitaper images in fig. 2).

![Saliency maps of the pretrained component CNNs of SISSSCO[27CD] aggregated on the basis of dense layer node weights post concatenation across 9,000 training observations per class. The top left, top right, and bottom images are aggregated using saliency maps generated by nine component single-channel CNNs trained using spectral images generated by wavelet decomposition, S-transform, and multitaper analysis, respectively.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/mbe/40/7/10.1093_molbev_msad157/1/m_msad157f7.jpeg?Expires=1749000785&Signature=1ZKYPWRl3~e0fFL5kyVqdz9tjBOOx4kiPclNN7VzWRj0qLz~hNYsMvWkzj~3Pp~ygAAVo49qsDd~5owtV-9K1xCE0jZ1SaNBtcEmQN9wV0Fnm2bbreFCLMMyxmXuE0yS6oojQhfSSXcvRDW0BbTgBpkc8k1HGzIy~5IN-qlK8QCOcA-i~LDBe-UhfUETqTdx2nHRv8L-bBhVG31mGDSFUi67Xonxk36Uid2d93qZe3MFPeUSgEcSQ1zghCyyfEyDYE9n8yaTaNEsbuff5IsyAdfYlv2v8DY9LNfrW1zPduveoT6eKwiYbO2ID1KrmfEB-cnKipGxQyuTDNe-9Syz9A__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Saliency maps of the pretrained component CNNs of SISSSCO[27CD] aggregated on the basis of dense layer node weights post concatenation across 9,000 training observations per class. The top left, top right, and bottom images are aggregated using saliency maps generated by nine component single-channel CNNs trained using spectral images generated by wavelet decomposition, S-transform, and multitaper analysis, respectively.

Roles of Summary and Spectral Methods in SISSSCO Predictions

Using saliency maps, we were able to learn which pixels of input spectral analysis images SISSSCO tends to place greater emphasis when making predictions. However, a related effort is to decipher the role that different summary statistics and spectral analysis methods play in making prediction within SISSSCO. That is, we wish to investigate whether certain summary statistics or spectral analysis approaches are more important in the SISSSCO model than others. To accomplish this, for each of the 18,000 training observations (9,000 per class) for the Nonequilibrium_variable dataset, we fed the 27 spectral images to their corresponding pretrained individuals CNNs and obtained the values for the 128 nodes within the dense layer of the CNN. For each observation, we then merged the 27 vectors of dense layer values into a single vector of length . We processed all observations in the same fashion, and created an input matrix with 18,000 rows, corresponding to the training observations, and 3,456 columns, corresponding to the values of the 27 component CNN dense layers. We then grouped features from these 3,456 columns of the input matrix, either by summary statistic giving 9 groups, by spectral analysis method giving 3 groups, or by each pair of summary statistic and spectral analysis method giving 27 groups. Given one of these groupings, we applied group lasso (Yuan and Lin 2006) to fit a logistic regression model to discriminate sweeps from neutrality while performing both regularization as well as group selection. This computationally efficient approach helps identify groups of features less important for classification, whether due to irrelevance for predicting the response or due to correlation with other groups of features, by setting weights of every feature in a group to zero.

We first considered grouping with 27 groups defined by distinct summary statistic and spectral analysis pairs, and find that group lasso removes 13 groups (sets coefficients to zero for all features in the groups), with all combinations of , , and with the 3 spectral analysis methods removed. Additionally, seven groups utilizing multitaper analysis were also removed. We next evaluated groupings with three groups defined by distinct spectral analysis methods, and find that group lasso removes the group defined by multitaper analysis images. Finally, we explored grouping with nine groups defined by distinct summary statistics, and find that group lasso removes four groups defined by images of , , , and .

Based on the results from these group lasso experiments, we trained two new stacked CNN architectures in an identical manner to that of SISSSCO[27CD], which we denote by SISSSCO[18CD] and SISSSCO[15CD]. The SISSSCO[18CD] architecture is trained with 18 spectral analysis images per observation using all nine summary statistics decomposed by wavelet decomposition and S-transform (i.e., multitaper spectral analysis images are removed), whereas SISSSCO[15CD] is trained with 15 spectral analysis images per observation using the five summary statistics , , , , and decomposed by all three spectral analysis techniques (i.e., , , , and images are removed). We find that both new SISSSCO models have lower power and accuracy to detect sweeps than SISSSCO[27CD] (supplementary fig. S22, Supplementary Material online). Moreover, based on the superior performance of SISSSCO[18CD] over SISSSCO[15CD], we conclude that removing summary statistics had a more deleterious effect on classification performance than eliminating the multitaper images.

Application to Unphased Genotypes

The SISSSCO models were trained with phased haplotypic data. However, phased data are difficult or impossible to reliably generate for many study systems—notably most nonmodel organisms. Hence, for our models to be versatile, it is imperative that they can also accommodate unphased data (e.g., similarly to diploS/HIC of Kern and Schrider 2018). Fortunately, the phased haplotype summary statistics used by SISSSCO have natural analogs for unphased multilocus genotype data. Specifically, we could replace , , and with their respective unphased analogs , , and (Harris et al. 2018) and exchange the frequencies of the five most common haplotypes with the five most common unphased multilocus genotypes. Given the relatively strong concordance with results from haplotype-based methods (Harris et al. 2018; Harris and DeGiorgio 2020a, 2020b; DeGiorgio and Szpiech 2022) and power to detect sweeps in prior studies using unphased multilocus genotypes (Kern and Schrider 2018; Mughal and DeGiorgio 2019; Gower et al. 2021), we expect that SISSSCO would retain excellent classification accuracy and power when applied to unphased data.

To test this hypotheses, we calculated , , , , and the five most common unphased multilocus genotypes from the 18,000 training, 2,000 test, and 2,000 validation observations (respectively 9,000, 1,000, and 1,000 per class) from the Nonequilibrium_variable dataset. We obtained these summary statistics from unphased multilocus genotype data in an identical manner as with phased haplotype data by computing 128 windows of size 10 SNPs with a stride of three SNPs across 400 SNPs of each replicate, with these SNPs selected as 200 SNPs immediately to the left and 200 SNPs immediately to the right of the center of the simulated sequence. We also generated spectral images in an identical manner to when we employed the original nine summary statistics computed from haplotype data. Using these spectral images, we trained a classifier with an identical architecture to the haplotype-based SISSSCO[27CD] (denoted SISSSCO_MLG[27CD]) that achieves an overall accuracy of 95.60% (supplementary fig. S23, Supplementary Material online), which is only marginally higher than diploS/HIC (fig. 4), which was developed for unphased data. However, diploS/HIC correctly classifies neutral regions with a slightly higher accuracy compared to SISSSCO_MLG[27CD].

Effect of Sweep Strength and Softness