-

PDF

- Split View

-

Views

-

Cite

Cite

N. I. Fisher, A Comprehensive Approach to Problems of Performance Measurement, Journal of the Royal Statistical Society Series A: Statistics in Society, Volume 182, Issue 3, June 2019, Pages 755–803, https://doi.org/10.1111/rssa.12424

Close - Share Icon Share

Summary

The paper describes a comprehensive approach to problems of performance measurement that can be used to tackle a wide range of situations, including designing monthly board and leadership reports in enterprises, assessing research quality and monitoring the efficiency and effectiveness of government programmes. It provides a review of various methods for tackling these problems and outlines some current areas of research. Although technical statistical issues are buried somewhat below the surface, statistical thinking is very much part of the main line of argument, meaning that performance measurement should be an area attracting serious attention from statisticians.

1. Introduction

1.1. Purpose

Performance indicators permeate most aspects of everyday life in government, business and industry. Whatever is being done—exercising due diligence as a company board member, evaluating the efficiency and effectiveness of a government programme, monitoring a patient's health, selecting players for a professional sporting team, assessing a research grant, tackling safety issues in a workplace, … —there is ever-increasing reliance on ‘metrics’ to benchmark, to set priorities and to monitor progress.

However, this increasing need has not been matched by increasing research activity into systematic ways to develop performance indicators for a specific application. Rather, solutions tend to be ad hoc, with little general learning being transferred from one area of application to another. It is a situation that would appear to call for increased attention by statisticians.

Of the various approaches that are described, this paper advocates the use of an organizing principle—alignment—as a systematic way of tackling problems of performance measurement, coupled with two key imperatives—the need to establish a link to higher level business drivers, and to identify priorities that are likely to have the biggest effect on the business. An important consequence of adopting this approach is the delineation, at different scales, of chains of metrics in-process →output→outcome (impact), the first two links typically being objective in character and the last subjective. It is the last link in the chain—and arguably the most important from a decision-making perspective—that has received the least attention. In contrast with most of the published literature, this paper focuses more on this aspect, and less on the rather better-studied early links.

1.2. Historical developments

Statisticians have been studying the use of performance indicators to monitor, control and improve industrial processes and systems for a long time, the first notable contribution probably being the classic 1939 work on statistical process control by Walter Shewhart (e.g. Shewhart (1986)). However, performance indicators are also in widespread use in many areas that have been largely unstudied by statisticians. There has certainly been critical and scholarly focus on specific topics. For example, in the UK ‘league tables’ for educational institutions have received much attention (e.g. Goldstein and Leckie (2008), Foley and Goldstein (2012) and references cited therein). In academia, so-called ‘bibliometrics’ are in widespread use for ranking academic departments, schools and institutions, for awarding research grants, and for assessing cases for promotion; see for example Adler et al. (2009) for a critique of their use. Performance indicators in the area of healthcare have been studied extensively by Spiegelhalter and co-workers (see for example Spiegelhalter et al. (2012) and references therein) and Ash et al. (2012), and in numerous articles by Ronald Does and his colleagues in the context of implementing Six Sigma programmes (see http://www.uva.nl/profiel/d/o/r.j.m.m.does/r.j.m.m.does.html). Battisti et al. (2010) have studied how to measure innovation, in response to the innovation charity Nesta (nesta.org.uk) and two UK Government departments becoming interested in the development of an innovation index in an attempt to rank firms and governments in terms of their innovation performance.

However, no sets of overarching principles or frameworks have emerged that provide general guidance about how to approach problems of performance measurement in these and other such settings. One consequence of this has been the production of lists of performance indicators that have been generated with little or no attention paid to the adequacy or suitability of the list, or to how the indicators can be used for monitoring, control and improvement (see for example Parmenter (2010), with its long list of performance measures on pages 217–293, yet essentially no mention of processes and, on page 8, dismissal of the need to distinguish lead from lag indicators!).

That said, some insight about how to start to develop a suitable approach can be gained from elsewhere in the management and marketing literature.

In a manual that formed the basis of a course on industrial management for Japanese industrialists (Sarasohn and Protzman, 1948, 1998), Homer Sarasohn began by posing the question ‘Why does any company exist?’. In rejecting the possible response that its purpose is to make a profit, he observed that such a response

(Sarasohn and Protzman (1948), page 1). One immediate implication of this passage—if one accepts its argument—is that the issue of performance measurement as it relates to an enterprise is really multivariate: we not only need metrics relating to the interests of the owners of the company, but also to several other interested parties (or stakeholders) in order to assess the viability of the enterprise.‘… is entirely selfish and one-sided. It ignores entirely the sociologic aspects that should be a part of a company's thinking. The business enterprise must be founded upon a sense of responsibility to the public and to its employees. Service to its customers, the wellbeing of its employees, good citizenship in the communities in which it operates—these are cardinal principles fundamental to any business. They provide the platform upon which a profitable company is built’

The next insight comes from marketing and derives from a business paradox that confronted AT&T in 1986. At that time, AT&T was a very large company, with about 300000 employees in 19 business units that operated in 32 countries and competed in 67 market sectors. They were surveying 60000 customers world wide each month and achieving 95% customer satisfaction. Yet, at the same time, they lost 6% market share, where 1% corresponded to $600 million. The then chairman, Bob Allen, put together a team from across the business units and told them to find out why there was no connection between customer satisfaction and business performance and to fix it. Ray Kordupleski, who led the team, recounts the following story (Kordupleski, 2003, 2018).

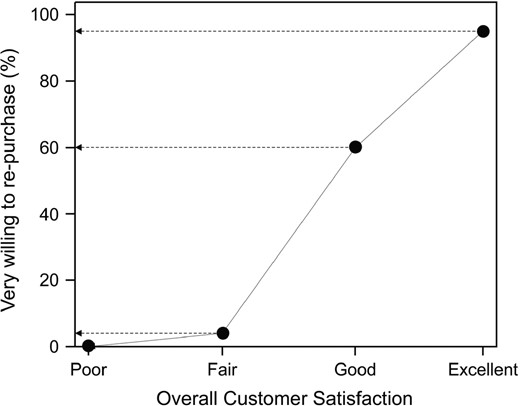

Many of AT&T's problems are captured in a graph similar to Fig. 1, which shows loyalty (as measured by the percentage of customers very willing to repurchase) plotted against overall customer satisfaction.

Relationship between overall customer satisfaction and loyalty for AT&T in 1986: 95% of respondents rating AT&T excellent were very willing to repurchase, compared with 60% of those who rated them good and 4% for those rating them fair (after Kordupleski (2003))

Kordupleski summarized the main problems as follows.

- (a)

Responses of good or excellent were pooled and categorized as satisfactory. However, there is no such thing as a satisfied customer, only degrees of satisfaction, and evidently good is very different from excellent in terms of loyalty.

- (b)

Improvements were focused on the 5% of people rating AT&T poor or fair, rather than on the largest group (good), 40% of whom were probably looking elsewhere for a supplier.

- (c)

The results compared one part of AT&T with another part of AT&T, rather than AT&T against its competitors.

- (d)

The survey ignored issues to do with price, just focusing on quality.

However, buried deep in the survey there was a question about value, which assesses people's perception of the quality of what they receive, balanced against their perception of the price paid. It was when AT&T redesigned their survey to focus on value and relative value (or customer value added) that they obtained lead indicators of high level business drivers such as market share, revenue share and return on investment (e.g. Kordupleski (2018), chapter 1; see also Section 2.3 below). In fact, AT&T's leadership could manage all customer-related aspects of their business with just three key ratios: relative satisfaction with value, relative satisfaction with quality and relative satisfaction with price.

This is a simple but profound outcome. Firstly, these top level metrics are all measures of perception, rather than hard numbers (profit, market share …). Further, it raises the possibility of applying similar logic to relationships with other important stakeholders, and so points to a plausible approach to developing a performance measurement system for an enterprise by establishing a concept of value for each group of stakeholders. In devising a generic approach for this and for other performance measurement issues, we shall use as exemplars three different situations, two of which present wide-open areas for further research.

- (a)

Scenario 1: you have just been appointed to the board of an enterprise—perhaps a publicly listed company, perhaps a private company or maybe a quasi-autonomous government body—and are about to attend your first board meeting. Although you have not taken a company director's course, you have a sufficient understanding of governance matters to be aware that board members have individual and collective accountability for the wellbeing of the enterprise. The board papers have been circulated a few days in advance, and you open them and start to browse through the numbers being reported. What sorts of numbers would enable you to exercise your Director's duties with due diligence?

- (b)

Scenario 2: you have just been appointed Deputy Vice-Chancellor (Research) of a university that has not been performing well in the last few years of competing for research grants and so is sliding down the university rankings’ scale. The national assessment criteria have resulted in an edict from your Vice-Chancellor that all research must be submitted to ‘tier 1’ journals. As a consequence, your researchers are abandoning the pursuit of research projects of considerable prospective benefit to the community (an important part of your university's charter) in favour of smaller theoretical research activities that provide an opportunity for publication in ‘authorized’ journals. How can you turn things around?

- (c)

Scenario 3: you have just had a meeting with the head of the Department of the Environment. The best climate forecasts that are available suggest that the critical shortage of water is likely to continue for years. The government has decided on a major national project to encourage every household to make use of ‘grey’ water by offering subsidies for installation of dual reticulation systems. This will have the side benefit of providing an injection of funds into the economy and a boost to employment. However, the government wants to make sure that its money is being spent efficiently as well as effectively. What will you do to ensure this?

Although these three scenarios are fictitious as described, they are synthesized from actual situations. And, in each case, there is a critical underlying issue relating to performance measurement: what sorts of performance indicators need to be in place to carry out these tasks well? This in turn leads to consideration of questions such as these.

- (i)

What really needs to be measured?: impacts?: outputs?; inputs?; something else?

- (ii)

What is the purpose of the measures?: benchmarking?; monitoring?; control?; improvement?

- (iii)

How do the measures relate to business processes?

And so, more basically,

- (a)

where do we start?;

- (b)

what sorts of metrics will help?;

- (c)

how do we know whether what we produce is useful, let alone sufficient for what is really needed?

1.3. Layout of the paper

Section 2 discusses performance measurement for an enterprise: an appropriate context for scenario 1. Section 2.1 provides a brief overview of earlier work, and Sections 2.2 and 2.3 look in more detail at a generic methodology that will be applied throughout the paper. In particular, the concept of alignment emerges as an organizing principle that provides a means of tackling a range of other performance measurement issues including those in scenarios 2 and 3.

Section 3 considers extending these concepts to issues arising in research environments, such as assessing research quality (scenario 2); Section 4 provides some comments about measuring the deployment of government programmes (scenario 3). Section 5 contains a concluding discussion.

Throughout the paper an asterisk indicates that a definition or description for the marked term is available in Appendix A, and the code (S) means see the on-line supplementary material.

Although technical statistical issues are buried somewhat below the surface of many performance measurement problems, statistical thinking is very much part of the main line of argument, meaning that performance measurement as a general topic should be an area attracting serious attention from statisticians.

2. Performance measurement for an enterprise

2.1. Earlier work

A review of previous approaches to performance measurement for an enterprise can be found in Fisher and Nair (2008), and Fisher (2013), pages xxi–xxiii, so the discussion here is confined to mentioning just two of these.

One simple approach

‘… is to look for a very small set of generic metrics that can be used throughout an organization or, equivalently, at all scales of measurement from micro to macro …’ (Fisher (2013), page xxi).

Fisher and Nair (2008) commented that

‘The power of this concept is that it could be used to focus communication at all levels of the organisation: in the words used by a senior industry figure to one of the authors, “I want to be able to drill down through management layers with a single metric, to find out what's causing a problem”’.

It is a matter of historical interest that the Six Sigma movement originated from just such a starting point at Motorola, Inc. (personal communication from Debby King-Rowley, former global Director of Executive Education at Motorola, September 13th, 2008):

‘There was a very early focus on cycle time reduction across the board. That was introduced from the C-suite down in 1986. At that time, Motorola was working on quality through the 3 leading guru schools of thought at the time—Deming, Phil Cosby, and Juran. No single approach was being promoted from corporate. When cycle time was introduced, it was introduced as part of a 3-legged stool—cycle time, quality, cost. All three had to be in balance within the business units. Cycle time was the only one being driven (in goal of 50% reduction and process) from headquarters. Once cycle time focus was in place, an eye was turned to quality to standardise the approach on a company-wide basis. A lot of work was being done with Deming's concepts, but an internal electrical engineer, Bill Smith, in our then “Communications Sector” created the concept of Six Sigma. He took the idea to Bob Galvin, who is quoted as telling Bill “I don’t fully understand it, but it seems to make sense. Come meet with me weekly til I understand it.” Bill did, Bob fully grasped it, then others (particularly statisticians like Mikel Harry) were brought in to support and advance Bill's Six Sigma. Then the rest is history.’

Robert Kaplan and David Norton tackled the problem from quite a different perspective; indeed, from four perspectives. In a series of publications (e.g. Kaplan and Norton (1992, 1996a, 1996b, 2006, 2010) they introduced and developed the so-called Balanced Scorecard as a means of measuring strategy. In their initial work, they defined four so-called perspectives—customer, internal, innovation and learning, and shareholder—from which they suggested that a set of measures be derived to give senior officers a fast but comprehensive overview of the business. Subsequently, they found it necessary to introduce other perspectives, e.g. an environmental perspective.

We provide further comment on the Balanced Scorecard at the end of Section 2. Here, suffice it to say that, to the extent that it works, it is still not addressing the whole performance measurement problem. Fisher (2013) suggested that a performance measurement system should provide, inter alia,

- (a)

a concise overview of the health of an enterprise (where is it now, and where is it heading?),

- (b)

a quantitative basis for selecting improvement priorities, and

- (c)

alignment of the efforts of the people with the mission of the enterprise,

and suggested that such a system could usefully be modelled with two basic components:

- (i)

a framework for performance measures and

- (ii)

a general process for putting the measures in place (i.e. populating the framework) and using them to best effect, e.g. by identifying priorities for action to address the most pressing business issues—falling market share, high staff turnover, loss of community support, … .

Much of the published research on performance measurement relates to frameworks, not systems: little if anything is said about how to identify and use the performance measures. Neely et al. (2007) provided an overview of some of the frameworks that are available, particularly the Balanced Scorecard, and noted some of its deficiencies (see also Atkinson et al. (1997) in this regard). An Internet search of ‘performance measurement system’ identifies a number of other ‘systems’, none of which appears to be complete, either because of the limited scope of the framework or because the response to (ii) is inadequate.

As a final comment, a performance measurement system is still, at best, a crucial aid to decision making that allows boards to exercise—and demonstrate that it is exercising—due diligence in leading an enterprise. Thus, it can assist in managing overall risk rather than eliminating it. As W. Edwards Deming observed (e.g. Deming (1993)), ‘Management is prediction’. Statisticians understand better than most the import of this statement.

2.2. A performance measurement framework

A stakeholder-centred performance measurement framework was described in detail in Dransfield et al. (1999) (see also Fisher (2013), chapter 3) and will only be outlined here. It comprises a set of three principles* relating to alignment, process thinking and practicability; a paradigm for performance measures and a structure for performance measures.

The following paradigm was communicated by e-mail to the author by Myron Tribus around 1993.

- (a)

What products or services are produced and for whom?

- (b)

How will ‘quality’, or ‘excellence’, of the product or service be assessed and how can this be measured?

- (c)

Which processes produce these products and services?

- (d)

What has to be measured to forecast whether a satisfactory level of quality will be attained?

It seems so obvious that one wonders why it should even be put in writing. However, it is easy to find examples to demonstrate that some people do not abide by its logic. There is an extensive literature on the subject of ‘key performance indicators’ (KPIs). The term itself is often taken to be self-defining, although this is often contradicted by subsequent description and usage of specific KPIs. In many instances, for example, KPIs relate to the fourth step of the Tribus paradigm, without the first step having been carried out: in other words, the target has not been specified yet people are happy to start firing. (The author is indebted to Jim Rosenberger for introducing him to the concept of Texas target practice: firing a gun against a barn wall, and then drawing the target where the bullets actually hit.) A similar situation can be found in the literature relating to measuring research quality: indicators are selected without first defining what is meant by ‘research quality’. This issue will be revisited in Section 3. Evidently, the paradigm captures the essence of aligning activities with the ultimate goal.

The proposed structure, or hierarchy, for performance measures is motivated by the postulate that an enterprise can only be successful in the long term if it creates and adds value (in a sense to be defined) for five different groups of stakeholders: its owners, its customers, its people, its partners and the wider community (see Dransfield et al. (1999) for a detailed discussion). Each of these groups makes some form of investment in the enterprise, whether it be money, effort, support, …, and each has alternative possibilities for the investment: hence the need to create superior value for the stakeholder than they can acquire elsewhere. (The italicization reflects another point of confusion in the literature: some researchers use the term ‘customer value’ to refer to value created by the customer for the company, rather than the reverse as is intended here.)

So, the starting point for performance measurement in this stakeholder view of the world is to ask ‘What does value mean for each stakeholder group, and how can we measure it?’. This provides a basis for measuring success. Success measures constitute the ‘strategic zone of measurement’ and so fall within the remit of the board of the enterprise. However, success measures (equivalent to measures of outcomes) are not suitable for managing the enterprise: they are lag indicators, resulting from past efforts. (‘Managing on outcomes is like steering a car by looking in the rear view mirror’: an observation probably due to Myron Tribus. It is also a reason that the calculation of bonuses for leaders of some large enterprises is based on the results 5 years later; see for example the bonus bank approach of Stern Stewart; Bischof et al. (2010).) The Holy Grail of performance measurement is to find lead indicators of success, and it is these measures that will be termed KPIs in this paper. KPIs constitute the ‘tactical zone of measurement’ and are of interest to both the board and the leadership team.

The key to improving KPIs and hence success measures is, of course, to work on the various business processes in the enterprise, which calls for many more measures relating to monitoring, controlling and improving processes. These measures comprise the ‘operational zone of measurement’ and relate to accountabilities and responsibilities of numerous people in the enterprise. The three zones of measurement correspond to three zones of management as discussed, for example, by Sarasohn and Protzman (1948, 1998) and subsequently as reflected in systems such as Hoshin Kanji* to ensure alignment of operational activities with strategic intent.

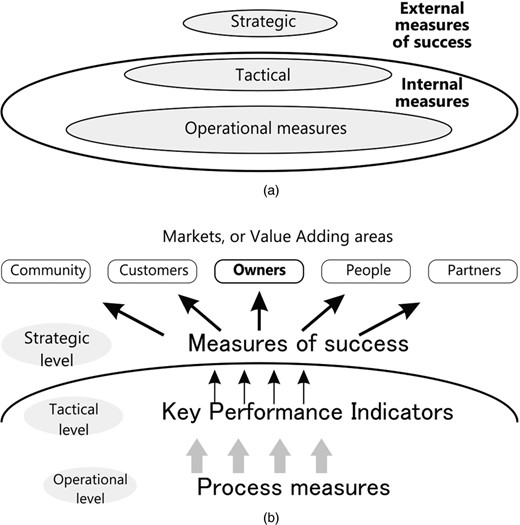

Fig. 2 depicts these three zones, and the purpose of the metrics in each zone.

The two figures show the performance measurement framework, with its focus on five stakeholder groups, and with three distinct zones of measurement delineated: in the strategic zone, measures of success are outcome or lag measures, a consequence of the past efforts of the enterprise; the tactical zone is of more interest to the leadership of the enterprise in terms of management, because the KPIs are lead indicators of future success; the operational zone comprises the usual metrics for monitoring, controlling and improving processes and so improving KPIs

The next issue to be considered is how to put appropriate measures in place and to use them to set priorities. An excellent market research process for just this purpose has been available for about three decades in the context of customers but is largely unknown in the statistical community. The key elements are sketched in Section 2.3, and then developed and adapted in Sections 2.5–2.8 to produce the complete system and so to provide the board and leadership reports. Section 2.4 contains a discussion of some technical statistical issues that are associated with the process.

2.3. Finding customer-related metrics: a process for managing customer value

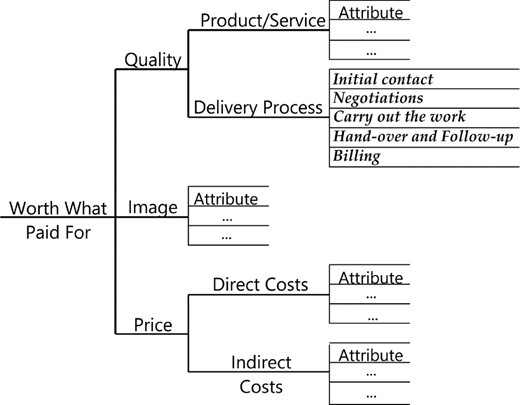

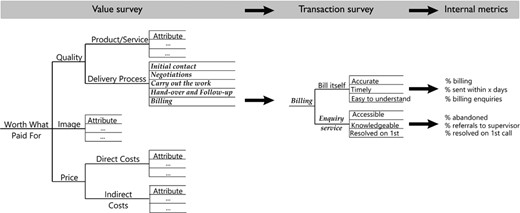

We return to the AT&T example that was introduced in Section 1. As described by Kordupleski (2003, 2018), the AT&T team devised a new style of survey instrument that structured the survey questions in a customer satisfaction survey according to a so-called ‘value tree’; Fig. 3.

Prototypical structure of a customer value tree: in this representation, overall value (described as worth what paid for) has three main ‘drivers’, quality, image and price; quality is represented as the product or service received, and the delivery process comprising the sequence of customer experiences in receiving this product or service; price has as its drivers both direct costs and indirect costs (or cost of doing business); the small sets of ‘attributes’ associated with each of the main branches are determined from market focus groups; a respondent assigns ratings on a 10-point scale, starting at the lowest level of the tree and working upwards, so yielding a tree-structured measurement

Data are obtained by asking respondents to rate the performance of their supplier on a 10-point scale, where 1 is poor and 10 is excellent, starting with the attributes of product or service, followed by an overall rating of product or service, and so on, until eventually an overall rating for value is assigned. This leads to a structured set of ratings that can be modelled and analysed in hierarchical fashion, by fitting product or service as a function of its attributes, delivery process as a function of its subprocesses, quality as a function of product or service and delivery process, …, and finally, value as a function of quality, image and price.

To make the results readily understandable and actionable by senior executives, Kordupleski recommended analysing the data on their original scale by fitting simple linear models (see Section 2.4 for more discussion). The goal is to arrive at a set of impact weights and mean ratings as shown in Table 1, which displays the competitive profile for the top level of the value tree. Two important actions derive from Table 1.

| . | Impact weight (%) . | Mean rating (se±0.05) . | Relative rating (%) . | |

|---|---|---|---|---|

| This company . | Competition . | |||

| QualityQ | 42 | 7.7 | 7.6 | 101 |

| Image I | 15 | 7.1 | 7.3 | 97 |

| Price P | 30 | 6.9 | 7.0 | 98 |

| Value V | 7.3 | 7.5 | 97 | |

| . | Impact weight (%) . | Mean rating (se±0.05) . | Relative rating (%) . | |

|---|---|---|---|---|

| This company . | Competition . | |||

| QualityQ | 42 | 7.7 | 7.6 | 101 |

| Image I | 15 | 7.1 | 7.3 | 97 |

| Price P | 30 | 6.9 | 7.0 | 98 |

| Value V | 7.3 | 7.5 | 97 | |

The overall value score is 7.3, compared with the competition which averages 7.5, so, for this enterprise, the customer value-added score is below par at 97%. (Par would be a customer value-added score in the range 98−102, allowing for the variability in the data.) The ratings are on a 10-point scale, where 1 ≡ poor and 10 ≡ excellent. The impact weights have been derived by fitting a simple linear regression model to V as a function of Q, I and P, and then normalizing the coefficients to add to the value of R2.

| . | Impact weight (%) . | Mean rating (se±0.05) . | Relative rating (%) . | |

|---|---|---|---|---|

| This company . | Competition . | |||

| QualityQ | 42 | 7.7 | 7.6 | 101 |

| Image I | 15 | 7.1 | 7.3 | 97 |

| Price P | 30 | 6.9 | 7.0 | 98 |

| Value V | 7.3 | 7.5 | 97 | |

| . | Impact weight (%) . | Mean rating (se±0.05) . | Relative rating (%) . | |

|---|---|---|---|---|

| This company . | Competition . | |||

| QualityQ | 42 | 7.7 | 7.6 | 101 |

| Image I | 15 | 7.1 | 7.3 | 97 |

| Price P | 30 | 6.9 | 7.0 | 98 |

| Value V | 7.3 | 7.5 | 97 | |

The overall value score is 7.3, compared with the competition which averages 7.5, so, for this enterprise, the customer value-added score is below par at 97%. (Par would be a customer value-added score in the range 98−102, allowing for the variability in the data.) The ratings are on a 10-point scale, where 1 ≡ poor and 10 ≡ excellent. The impact weights have been derived by fitting a simple linear regression model to V as a function of Q, I and P, and then normalizing the coefficients to add to the value of R2.

- (a)

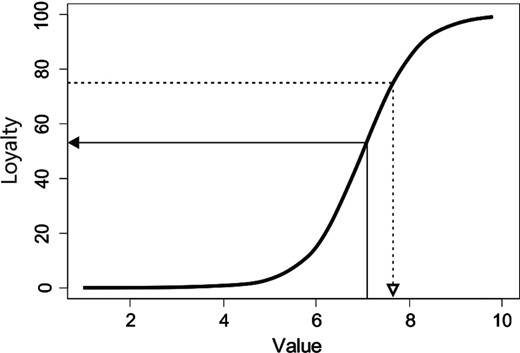

The overall value score needs to be linked to higher level business drivers, such as customer loyalty. A way to do this is shown in Fig. 4. It provides a basis for setting an overall (say 12-month) improvement goal for the rating of customer value, e.g. an increase of 0.5.

- (b)

Table 1 suggests where to focus improvements and allows prediction of the change in the value rating. To take a trivial example, suppose that three improvement teams are set up, one focusing on quality, one on image, and one on price, and they have been set the following targets: improve quality Q by 0.5 (7.7 → 8.2); improve image I by 0.5 (7.1 → 7.6); improve price P by 0.6 (6.9 → 7.5). Based on the impact weights in Table 1, the predicted improvement is 0.495, which is very close to the goal of 0.5.

The lower levels of the value tree can now be used to identify where to focus attention: identify attributes that have high impact weights and low relative ratings and bring the usual quality improvement tools to bear.

One final process step is needed to yield the desired performance measures. Whereas a value survey involves surveying the whole market—both one's own customers and those of one's competitors—if possible, the improvement activities will generally result in lower level transaction surveys of one's own customers. These help to identify low level quality attributes and, finally, (hard) in-process measures that can be used to monitor, control and improve business processes. The overall chain of actions is shown in Fig. 5.

A value–loyalty graph shows the typical relationship between value, on a 10-point scale, and the percentage of people who are very willing to show some specific form of loyalty, such as being very willing to recommend an enterprise to others, or very willing to repurchase: in this example, the specific score V = 7.1 corresponds (←) to about 63% of people being very willing to recommend (i.e. providing a rating of 8, 9 or 10 on a 10-point scale); if the 12-month target for loyalty is chosen as 75%, this means that the value score must be increased to about 7.65 (based on Fisher (2013), exhibit 4.1; after Kordupleski (2003))

Complete chain of actions for development of internal metrics: priorities identified in a value survey trigger a transaction survey for an important business process, leading to identification of operational quality attributes and internal metrics that can be tracked to monitor improvement (from Fisher (2013), exhibit 5.11; after Kordupleski (2003))

There are some important points to make about this overall process.

- (a)

It is consistent with survey process desiderata*.

- (b)

The overall measure of customer satisfaction can be linked to higher level business drivers (see Fig. 4). Making this connection is critical, because customer loyalty can be linked to bottom line financial performance and so form the basis for choosing between competing possibilities for improvement. In an initial customer value study of senior managers by the Property Division of a research agency, five business impact questions were asked. The loyalty results for each question corresponding to the overall rating of value were

- (i)

40% rating the Division as strongly aligned with corporate objectives,

- (ii)

7% rating the Division highly on optimizing ecological sustainability,

- (ii)

3% rating the Division highly on improving operating costs of facilities,

- (iv)

27% rating the Division highly on developing and maintaining valued research facilities and

- (v)

20% rating the division highly on optimizing utilization of properties.

The striking graphical results (S) corresponding to Fig. 4 led to specific initiatives being included in a 5-year strategic plan developed shortly thereafter.

- (i)

- (c)

It provides the leadership with a means of selecting their top two or three priorities for improvement: what needs to be fixed and in what order? Many satisfaction survey instruments identify a large number of issues to address, but with little guidance about which improvements are likely to make the biggest difference. What is actually needed to support good decision making is a short list of top priorities: more data will be available in a few months to identify other areas that require attention, and in which order. According to this approach, focus attention in areas leading to the greatest gain in customer value and so in customer loyalty (or whatever other higher level business metric is appropriate).

- (d)

It demonstrates an important hierarchical structure among performance measures, consistent with the performance measurement framework. Some measures are far more important than others. The customer value-added score is a success measure, the value score is a tactical measure and the attribute scores and in-process measures are operational measures.

- (e)

It reveals the interplay between lead and lag indicators. At the highest level, this is represented by the value score as a lead indicator for the customer value-added score. At the lowest level, the in-process measures are lead indicators for the satisfaction ratings of their corresponding quality attributes. A not-so-incidental point arises here: distinguishing between attributes and in-process measures that have desirable consequences and those that, although seemingly good for the business, may have undesirable consequences elsewhere in the system. Kordupleski (2003) illustrated this point compellingly with the example of a billing process. If the company's focus is on banking customers’ payments as fast as possible, it leads to billing process measures such as overdue payments. However, if the focus is on what is important to the customer about the whole payment process, this leads to quality attributes such as no surprises, easy to understand and easy to correct, and to in-process measures such as percentage of invoices generating enquiries and percentage of invoice enquiries resolved at first call. These are ‘customer friendly’ metrics while still enabling the business to operate more efficiently.

- (f)

The structured survey instrument provides a means of checking that no important satisfaction driver has been omitted. If, at some level, the model fit is unsatisfactory, something is missing, and the focus group work needs to be revisited.

- (g)

Overall value is linked to the business processes that are the means to improve it.

- (h)

An on-going dialogue with stakeholders is essential. The whole process of managing customer value (and, more generally, stakeholder value) is one of conducting an on-going dialogue with the stakeholder—asking what needs attention next, responding to the feedback, letting the stakeholder know about the improvements, and then asking ‘What next?’. (It goes without saying that this cannot be accomplished without an internal culture of continuous improvement.)

- (i)

Overall, the process helps to align the people in the enterprise with creating and adding value for customers.

- (j)

All the major branches in the value tree can be used for benchmarking purposes.

- (k)

The customer value tree provides a clear interpretation for the term ‘value proposition’. Thus, taking Table 1 as a starting point, a company may choose as its strategy to be at par with the competition on price and image, but to excel on quality, and that strategy would inform its improvement actions relating to lower level branches of the value tree. Kordupleski (2003), page 25 (see also Fisher (2013), page 48), has an interesting way of representing this in a so-called ‘value map’.

- (l)

The market's perception of what constitutes value does not stay fixed in time. At some point such a change will start to become evident through degradation in the quality of the model fits and early warning may also be gleaned from qualitative responses. One needs to sample the market at a frequency that is appropriate to the market to be able to guard against poor quality data; however, see the next point.

- (m)

People are becoming increasingly resistant to requests for feedback. In particular, this makes it increasingly difficult to obtain competitive data, and more reliance needs to be placed on internal benchmarking, for example by using the value–loyalty plot.

Before looking at how customer value management can be adapted to other stakeholder groups, we consider some statistical modelling issues.

2.4. Statistical modelling and analysis issues

2.4.1. The rating scheme

To provide context for these comments, we take as an example the National Student Survey 2017 (http://www.thestudentsurvey.com/content/NSS2017_Core_Questionnaire.pdf). A simple analysis of the National Student Survey instrument shows that it probably fails all of the desiderata* for such surveys. One critical weakness derives from its use of the Likert scale (Likert, 1932), with students being asked, in effect, ‘Please rate your agreement with …’ by using a seven-point scale ranging from strongly disagree to strongly agree. The problem with this approach is that it measures agreement, not performance level: students are being asked to indicate agreement or disagreement with a number of statements, rather than to rate the performance of the institution (via the instructor). Also, it can lead to difficulties of interpretation. Subjective judgements are built into the survey statements. For example, a student may disagree strongly with ‘I have received detailed comments on my work’ yet the comments may have been quite sufficient for the student's needs. A more egregious example is the overall statement ‘Overall, I am satisfied with the quality of the course’. A student may strongly agree that he or she is ‘satisfied’; however (see Section 1.2), there is no such thing as a satisfied customer, only that there are degrees of satisfaction, and the degree of satisfaction relative to the competition is what really counts. Such an approach to satisfaction surveys does not address this issue. See Kordupleski (2003) for a comparison of different approaches to posing survey questions.

2.4.2. Modelling value data

A critical consideration in modelling and analysing value data is the interpretability of the results by decision makers—typically non-statisticians—to inform the selection of a small number of improvement priorities. So, the goal is to provide the sort of results that are shown in Table 1.

The initial approach that was used at AT&T, where the customer value programme was developed and launched in response to AT&T's 1986 crisis, was due to Rich de Nicola and Ray Kordupleski (Kordupleski, 2003, 2018). It involved modelling the data set by using a sequence of hierarchical models as described in the previous section. At each level, they fitted simple linear models and then converted the regression coefficients to elasticities* to aid interpretation.

Subsequently, Kordupleski left AT&T, and after a while the customer value programme started to drift. Senior leadership in Lucent again became concerned about their customer data. An in-house statistical research group led by William Cleveland built a comprehensive hierarchical Bayes model for the entire available data, which extended over several survey periods. Clark et al. (1999) reported careful empirical validation of modelling assumptions (e.g. whether it is reasonable to model data measured on the 10-point scale as continuous variables), and that modelling appeared to be very effective in explaining the various components of variation in the data. However, this came at a significant cost: the model involved using a transformation to symmetrize the data and the results were reported on the transformed scale as trellis graphics rather than in the form that is shown in Table 1 that is better suited to direct interpretation and action by management.

The empirical evidence from studying a wide range of value data sets (e.g. Kordupleski, personal communication, and the author's own experience) suggests that, if interpretability is an overriding consideration, then the following simple modification of the de Nicola–Kordupleski approach suffices to give reliable and actionable results. Fit a least squares model. Occasionally collinearities can occur and can generally be handled satisfactorily by eliminating one of the attributes. Set negative coefficients to 0. Use coefficients as impact weights, normalized to add to multiple-correlation coefficient R2. Use R2 to describe the model fit. Poor model fits, particularly those exhibiting large negative coefficients, are indicative either of insufficient care being taken to identify all the important attributes of a particular driver or, if this occurs after a number of survey cycles, of significant change in what is important to the market.

Of course, the linear models are only approximations but (exemplifying George Box's observation about all models being wrong but some being useful) the approximations are very useful here, because the goal of the analysis is usually to identify the top two or three areas needing focus. Experience in applying this approach in a variety of settings, particularly for customers, confirms that, provided that the choice of the top level structure for the value tree and the initial identification of attributes are done carefully, statistically acceptable model fits can be obtained that are useful for prediction; and, further, selecting and implementing improvements based on on-going acquisition and analysis of value survey data leads to continuous improvements in business results.

2.4.3. Modelling value data collected over time

Most monitoring of markets is still done by static survey—monthly, quarterly or yearly. However, there may be something to be gained from acquiring and analysing customer satisfaction data continuously to enable a timely response to changes in customer satisfaction, and the same is true for other stakeholders.

In this situation, rather than collecting the entire data set in a period of 3–4 weeks each quarter, a small fraction is collected every week or two, resulting in a sequence of values (X1, t1), (X2, t2), … where Xi is the tree-structured observation from respondent i at time ti, i = 1, 2,…. The goals of the modelling and analysis are now slightly enhanced:

- (a)

obtain results similar to those in Table 1, where the weights convey a sense of the relative importance of the various factors in determining a customer's overall satisfaction with the superordinate attribute, and the summary ratings have associated estimates of precision, either in the form of standard errors or expressed as confidence intervals;

- (b)

report the results in the original scale;

- (c)

detect ‘interesting’ temporal changes, either in ratings or in weights;

- (d)

provide readily interpretable trend charts of what is going on.

Fisher et al. (2005) developed a method for modelling such data by using a Kalman filter, including a running R2 to normalize the regression coefficients as described earlier. An application of this method is given below in the context of people value surveys (Section 2.5).

2.4.4. Handling mistaken responses

Generally, in value surveys, respondents are asked to rate an enterprise on a set of statements where, the higher the rating, the greater the degree of satisfaction with how the enterprise is perceived to be performing. When the stakeholder group is the community, value is sometimes modelled as a trade-off between perceived benefits of a proposal, and perceived concerns. There is negligible prospect of misunderstanding (S) when respondents are asked to rate a list of prospective benefits. However, respondents can be confused about the meaning of a high versus a low rating when it comes to rating concerns, resulting in flipped ratings (11 – R instead of R). Fortunately, it is possible to handle such situations, by using the expectation–maximization algorithm to decide which responses should probably be reflected (R→ 11 – R), so that approach B can be adopted with some confidence. (See Fisher et al. (2008) and Fisher and Lee (2011) for a discussion of the project and of the expectation–maximization approach respectively.)

2.4.5. ‘Ordered text mining’

In addition to being asked to provide ratings, respondents are requested to provide reasons for their ratings of main branches and of overall perceived value. These data can provide valuable insight into what needs to be addressed, once an area of focus has been identified as a priority from the analysis of the quantitative data. With a large number of respondents, it is of interest to explore whether some automated procedure might suggest common themes among the reasons for low ratings.

There is a substantial literature relating to text mining and related methodologies (topic modelling, …) for studying unstructured text. Generally, these procedures have been applied to large blocks of text. The situation here is a little different: there are large amounts of small blocks of text (e.g. a maximum of about 250 characters), and there is a quantitative covariate (the rating) associated with each small block. There is some published work on this problem: see for example McAuliffe and Blei (2008). David Banks and his students Christine Chai and Min Jun Park have made a preliminary study of some value survey data by using topic modelling and obtained some encouraging results with the very limited data that were available (1158 words and 530 comments, after data cleaning); see Chai (2017). The small size of each comment, which is deliberately restricted to minimize the amount of time that is needed to complete a survey, presents a challenge to the technique.

2.5. Managing people value

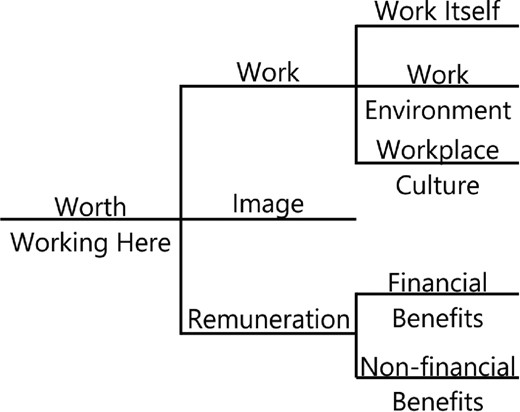

For people, a value tree that has proved helpful in practice (as judged by the quality of model fits and by the improvements in the business resulting from actions taken in response to each round of survey results) is shown in Fig. 6. The concept of value is described as worth working here, with three main drivers: work, image and remuneration.

A possible high level structure for a people value tree, as the basis of a staff survey: the concept of overall people value is described by worth working here

Staff surveys based on this instrument tend to be rather shorter than traditional staff surveys, while still having the important property that it is possible to check statistically to ensure that no important attribute has been omitted.

For many enterprises, typical survey practice is to conduct a huge staff survey annually, or perhaps every 2 years. However, such a low frequency is useless for managing for improvement. At best, an enterprise can only react to bad news some time after things have started to go downhill and people have become sufficiently disenchanted that some have left … which has a very deleterious effect on the business bottom line. According to a rule of thumb that is used in the human resources area, unplanned staff turnover is conservatively reckoned to cost an enterprise at least 150% of the annual salary package for senior officers, and 100% for less senior people, and that is without counting the lost knowledge and knowhow. (See for example Lucas (2013) and Turnage (2015) for more discussion. Whereas academic institutions tend to have very low staff turnover, it is the author's experience that for other enterprises, such as building and construction companies, turnover may be of the order of 15–20%, and at least one leading events management company runs at 45%. And, at some nursing homes in the USA, turnover exceeds 100% for some categories of staff.) Thus there are considerable savings to be made by effecting a few per cent reduction.

Here, continuous surveying has much to offer. Fisher (2013), section 6.5, described such a process applied to a small government agency of about 100 people, where an initial survey of all the staff provided the basis for identifying some key improvement activities. The value tree that was used was a precursor to that shown in Fig. 6. The initial survey results for work and its drivers are shown in Table 2.

Profile for part of the initial people value survey for a government agency†

| Driver . | Impact weight (%) . | Mean rating (precision ±0.20) . |

|---|---|---|

| Work itself | 50 | 5.5 |

| Work environment | 24 | 5.3 |

| Image | 26 | 5.9 |

| Work | 5.5 |

| Driver . | Impact weight (%) . | Mean rating (precision ±0.20) . |

|---|---|---|

| Work itself | 50 | 5.5 |

| Work environment | 24 | 5.3 |

| Image | 26 | 5.9 |

| Work | 5.5 |

Impact weights have been normalized to add to 100%; the actual unexplained variation was 14%; mean ratings have been adjusted by a constant for confidentiality (from Fisher (2013), exhibit 6.8).

Profile for part of the initial people value survey for a government agency†

| Driver . | Impact weight (%) . | Mean rating (precision ±0.20) . |

|---|---|---|

| Work itself | 50 | 5.5 |

| Work environment | 24 | 5.3 |

| Image | 26 | 5.9 |

| Work | 5.5 |

| Driver . | Impact weight (%) . | Mean rating (precision ±0.20) . |

|---|---|---|

| Work itself | 50 | 5.5 |

| Work environment | 24 | 5.3 |

| Image | 26 | 5.9 |

| Work | 5.5 |

Impact weights have been normalized to add to 100%; the actual unexplained variation was 14%; mean ratings have been adjusted by a constant for confidentiality (from Fisher (2013), exhibit 6.8).

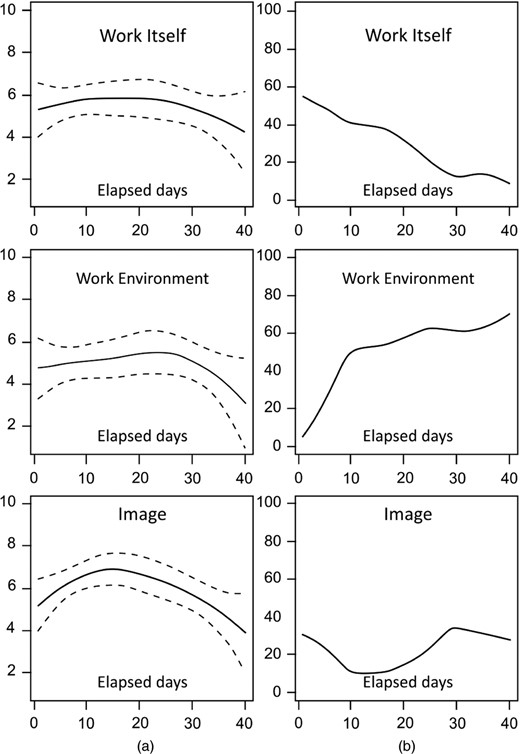

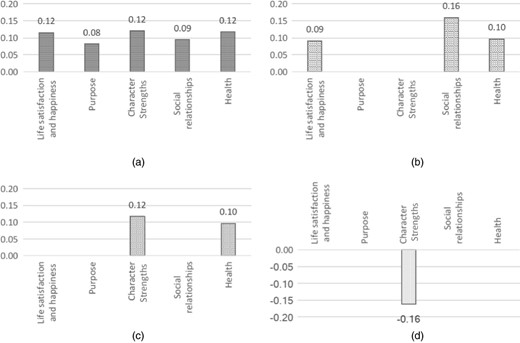

Shortly thereafter a continuous process was implemented involving sampling a few randomly selected respondents each week, to monitor the effect of improvement initiatives and to identify where next to focus attention. Fig. 7 shows the trends in the ratings and impact weights after a number of weeks of surveying. There are quite pronounced concave patterns in the ratings. Even more striking, however, are the trends in the relative impact weights. Whereas the initial impact of work itself was 50%, by the end of the period it had dropped to 0, whereas the relative importance of work environment had risen from 25% to 75%. During this period, the head of the agency had been summarily dismissed. People lost all interest in their jobs, and their overall feelings about their work were being driven largely by the poisonous work environment.

Results from a continuous monitoring people value survey, over a 3-month period (the sudden dismissal of the head of the agency has produced a pronounced effect in trends for both ratings and impact weights (from Fisher (2013), exhibit 6.9): (a) trends for ratings; (b) trends for per cent impact

The early availability of such information makes it possible for senior officers to act in a timely fashion to minimize the long-term damage, especially in terms of losing staff.

2.6. Managing partner value

Whereas the main structure of the value trees for customers and for people is reasonably generic in each case, the same is not so for partners or for the community.

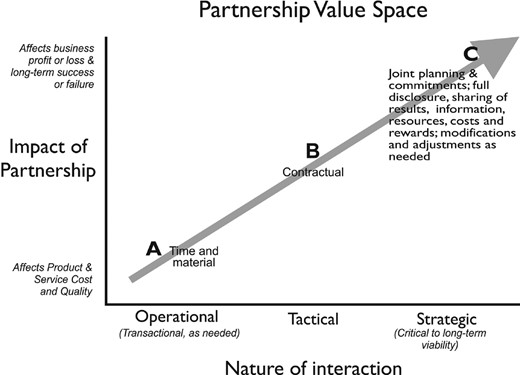

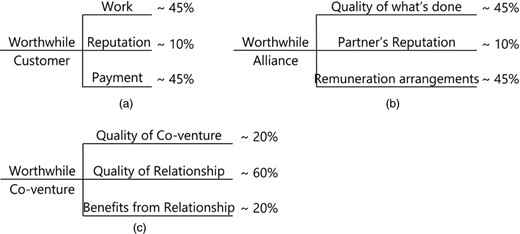

For partners, the nature of the partnership has a critical effect on the structure of the value tree. Fig. 8 displays the very different sorts of partnership that are possible, from a simple transactional, or customer–supplier, model (where, if needs be, one can simply change supplier at possibly negligible cost) to a strategic relationship in which, effectively, the enterprise and its co-venturers live or die together. Possible partnership value trees for the three positions A, B and C in Fig. 8 are shown in Fig. 9.

Partnerships can range from simple customer–supplier relationships to strategic relationships where there is complete mutual dependence: it can be helpful to distinguish three types of partnership, each with a characteristic concept of value (from Fisher (2013), exhibit 7.1)

(a) Operational model, suppliers, (b) tactical model, alliances, and (c) strategic model, co-ventures: value means something very different for the different types of partnership; just as importantly, the relative importance of the three value drivers in each model reflects the very different nature of each type (from Fisher (2013), exhibit 7.2)

Apart from operational relationships where the number of suppliers can be huge, only small amounts of data are collected in assessing partnership value (there being relatively few partners), so no formal statistical analysis is possible. Instead, the emphasis is on the process for summarizing and presenting the responses in such a way that a common understanding can be developed and priorities assessed (see Fisher (2013), chapter 7, and Fisher and Peacock (2017) for details and another example), so this is not pursued further here.

2.7. Managing community value

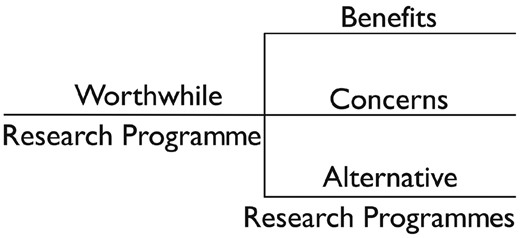

Failure to engage in meaningful dialogue with the community before making significant investment has been the downfall of many enterprises (Fisher et al., 2008). This is particularly so in relation to environmental issues such as attempts to introduce genetically modified crops or embarking on new mining ventures. The concept of value will vary according to the circumstance. For example, the Invasive Animals Cooperative Research Centre conducted a 3-year community national weekly survey in Australia to monitor community attitudes to management of invasive species of animals, to inform the communication activities (Fisher et al., 2008, 2012). In this context, one possible community value tree has the structure that is depicted in Fig. 10.

Example of community value and its drivers for an agency carrying out research into methods for managing pest animals: alternative research programmes refers to research by other agencies targeting different environmental issues; the concept of value is captured by the term worthwhile research programme

The study aimed to identify the critical factors determining the community's overall satisfaction with the research conducted by the Invasive Animals Cooperative Research Centre and then to track perceptions weekly with a view to identifying issues that needed to be addressed and to monitor the effect of communication initiatives. Again, the overall value score is of interest only in so far as it is possible to relate it to higher level business drivers (see Fig. 4) where, in this instance, the sorts of ‘loyalty’ questions of interest would take the form willingness to support widespread management of a specific pest (e.g. mice) by using a genetically modified virus to make females infertile, or willingness to support research into viral methods for control of other pests such as rabbits and foxes. This study used the same continuous survey modelling approach as described earlier.

2.8. Managing owner value

Who owns the enterprise?

Again, a variety of answers implies a variety of concepts of owner value. Here, we look at possibly the most complex of these: a publicly listed company whose owners are shareholders buying and selling their share of ownership on a stock exchange. This last point raises a new issue. For the most part, the owners will have only limited knowledge of the company that they own. They are not privy to detailed information about day-to-day operations, let alone to strategy and tactics about how the company seeks to gain and sustain competitive advantage. Rather, they have delegates collectively known as the board, who (supposedly) protect their interests in their investment and who do have privileged access to commercially sensitive information. So the term owner value for a publicly listed enterprise means, in practice, board value.

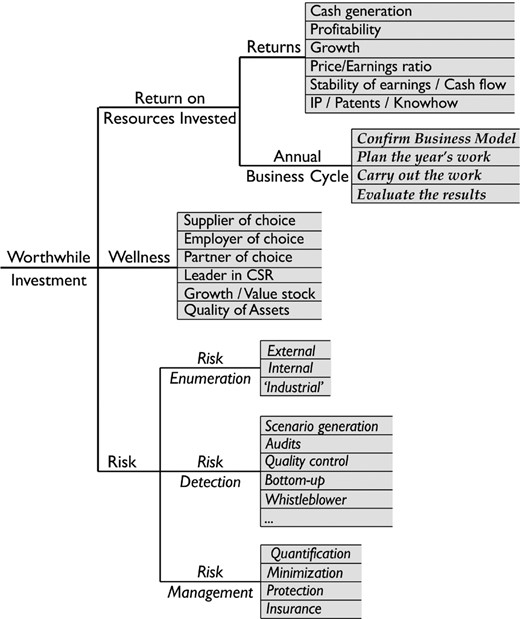

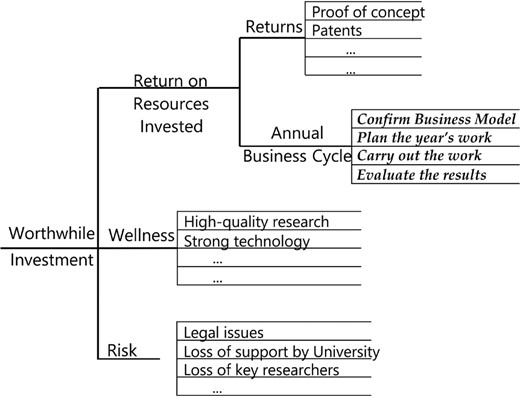

Fig. 11 provides a representation of value for such an enterprise. This was developed after extensive consultation with highly experienced business leaders (Board Chairs, Company Directors and Chief Executive Officers). It represents board value in terms of three principal drivers: return on resources invested, wellness and risk. (In this context, wellness refers to the health of an enterprise as characterized by the state of its relationships with its customers, its people, its partners and the community, the condition of its assets and the market view of the enterprise as an investment.) Return on resources invested is itself composed of returns (direct financial and other measures of wealth) and the annual business cycle (the business process that generates the returns). Risk has three processes as its drivers.

Example of a value tree for the board, as proxies for the owners: return is elaborated in terms of financial and non-financial performance quantities and assets, and the key business process which, for the board, is the annual planning cycle; risk is so important that it is elevated to the same status as return and wellness; items shown in italics are processes; the board value tree helps to identify the specific groups of lead indicators (shaded in the figure) that need to be monitored regularly (e.g. monthly or quarterly) by the leadership, to provide a concise overview of where the enterprise is headed (based on Fisher (2013), exhibits 9.2 and 10.1)

If this tree is accepted as a reasonable representation in terms of completeness of its coverage of important drivers and attributes of board value, it has two important implications. Firstly, the board value tree describes the scope of what regular board reports need to contain, to provide board members with the ability to exercise due diligence. And, secondly, the board value tree identifies items for which lead indicators are needed monthly or quarterly, namely those highlighted in Fig. 11.

In other words, an answer to the question of what should constitute the quantitative content in monthly or quarterly board reports flows logically from considerations of stakeholder value. This appears to be the first time that a logical basis for answering this question has been developed.

The actual format and content of the reports raise a host of presentation and statistical issues that go beyond the scope of this paper (see Fisher (2013), chapter 10, for a discussion) and provide plenty of opportunity for statisticians to be involved in devising lead indicators and cross-relating time series of lead and lag indicators.

Norbert Vogel, who has been involved in evaluation of enterprises against globally recognized business excellence award criteria such as the European Foundation for Quality Management and the Baldrige awards for over 30 years, has observed (personal communication) that the use of performance measurement to support decision making in enterprises is typically the area requiring greatest attention. It is arguable that this is attributable, at least in part, to the non-availability of a system for identifying and using appropriate performance measures. The system that is described here is itself consistent with the criteria and so would appear to offer a way forward for improved leadership and management of enterprises.

2.9. Other comments and comparative remarks

2.9.1. Objective and subjective measures

As will now be evident, the value and relative value metrics, which are the highest levels of metrics in the performance measurement system, are subjective: they are measures of perception.

In the context of so-called league tables when comparing institutional performance, Goldstein and Spiegelhalter (1996) commented that

‘In judging whether an institution has enhanced the welfare, health or performance of its members between their entry and exit, the term ‘value added’ has come to be used widely. This term is borrowed from economics, but it is difficult to justify in areas such as education or health.’

In fact, the term escaped its economics roots about 40 years ago: around 1980, it was being used by Richard Normann informally in the context of customers and, as we have seen, rather more formally a few years later by people at AT&T. The issue that arises here (and that recurs in the context of measuring research quality) is ‘Who is asking whether “the institution has enhanced the welfare, health or performance of its members”, and what is their concept of value?’. The answer to this can lead to a meaningful concept of value added.

2.9.2. Hierarchical models for value

Hierarchical models occur in many contexts in statistics, decision theory, operations research and elsewhere, and there are, in fact, similarities between the tree structures that are employed here and those in other settings. For example, the ‘analytic hierarchy process’ and ‘multiple-criteria decision analysis*’ are structured techniques for organizing and analysing complex decisions, based on identifying a number of supposedly relevant criteria. ‘Quality function deployment*’ also uses a tree-structured elaboration of customer requirements as a means of translating the customer requirements into engineering specifications and plans to meet those requirements, and it is thus relevant to understanding the attributes of the product branch in a value tree. Belton and Stewart (2002), page 66, explicitly described a concept of a value tree featuring the criteria and the competing options. Keeney (1992) devoted a whole book to ‘value-based thinking’. However, the goal of these methodologies is different from that of customer value management: they seek to make an ‘optimal’ choice from a number of options. In contrast, the purpose of customer value management is continuous improvement of value by on-going identification of process and system improvements (so that business processes appear in stakeholder value trees as a matter of necessity). With analytic hierarchy process or multiple-criteria decision analysis the number of decision makers is small. Typically, with customer value management, the number is large (the whole market). Further, customer value management provides assurance that no important factor has been omitted from consideration.

Although not explicitly tree structured, the ‘goal question metric strategies approach*’ offers a means of ensuring alignment of a specific set of metrics with higher level business goals and strategies. However, it does not lead in any obvious way to a complete performance measurement system satisfying any of the desiderata (a)–(c) in Section 2.1.

2.9.3. Balanced Scorecard

The Balanced Scorecard can now be positioned in a broader context. It reflects a philosophy of management that translates an organization's strategy into a set of objectives, measures, targets and initiatives and aligns the organization to them. As such, the Balanced Scorecard's vehicle for deployment throughout an organization is the organizational chart; consequently, an individual's performance metrics are aligned vertically through the chart to the objectives and strategies.

Unfortunately, as Myron Tribus has pointed out in many places (e.g. Tribus (1989, 1992)), work processes flow across an organization, not up and down, and so cut across internal organizational boundaries. For example, it is evident in Fig. 3 that the delivery process will involve, in turn, the marketing and sales, legal, service and accounts departments. People who are involved (should) have process ownership, accountability, responsibility and delegated authority relating to process flow, so the metrics that they (should) monitor, manage and report (should) relate to process outcomes and outputs and to in-process measures all of which, ideally, will be aligned with producing an excellent product and experience for the ultimate customer. Although it has a process perspective, the Balanced Scorecard says very little about process ownership, or about the differences between outcome, output or in-process measures, so appropriate alignment is likely to be due more to accident than to design.

There are several other issues, some closely related. The lack of distinction between outcome, output and in-process measures is a local manifestation of the failure to distinguish the three zones of measurement: strategic, tactical and operational. There is no explicit recognition of the need for relative measures that are benchmarked against what a company's competitors are doing, which is especially important at the strategic level. And there is no guarantee that there will be measures relating to all important stakeholders. If none of the strategies or objectives is concerned with partners, or the community, then there will be no Balanced Scorecard metrics for them. As a consequence, the board and senior leadership are not well placed to be duly diligent in managing all facets of risk.

Kaplan (2010) has argued vigorously against a stakeholder approach. However, the examples that are cited in Kaplan (2010) (pages 14–17) can equally well be regarded as issues of market segmentation and setting of priorities by leadership, and so do not fundamentally undermine the approach that is advocated here.

2.9.4. Six Sigma

In an interesting review of Six Sigma techniques, Montgomery and Woodall (2008) provided a neat encapsulation of the three ‘generations’ of Six Sigma described by Hahn et al. (2000):

‘Generation 1—mainly Motorola in a manufacturing setting—defect elimination, reduction of variability

Generation 2 (especially at GE)—relate these to projects and activities linked to improved business performance via improved product design and cost reduction

Generation 3—creating value throughout the organisation and for stakeholders’.

Thus our approach dovetails with third generation Six Sigma as a performance measurement front-end system.

2.9.5. Net promoter score

The net promoter score (Reichheld, 2003) is a now-ubiquitous stakeholder satisfaction metric for assessing customer satisfaction, supposedly rendering the whole customer value management process unnecessary. Fisher and Kordupleski (2019) explain why it is totally unsatisfactory.

2.9.6. Coda

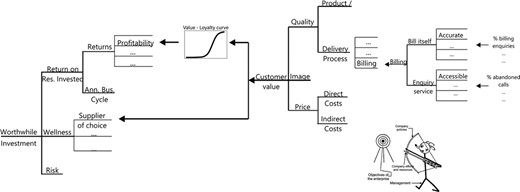

With all the models for value established, we can see the principle of alignment enacted, as shown in Fig. 12. It brings to mind Sarasohn's archer (inset in Fig. 12), a cartoon used 70 years ago (Sarasohn and Protzman 1948, 1998) to capture the same concept in the context of management.

The main diagram illustrates the implementation of the alignment principle, from the operational level of in-process metrics to the ultimate measure of impact, the owner's perception of the enterprise as a worthwhile investment: only the customer value tree is shown, but the value trees for people, partners and community provide similar alignment (the figure is derived from the profit tree diagram in Kordupleski (2003), page 82); the inset is an adaptation of the Sarasohn archer, a cartoon drawn by Homer Sarasohn that appeared in the training manual (Sarasohn and Protzman, 1948) used to teach management to top Japanese executives just after the Second World War; the accompanying caption read ‘The policies created by management must direct the efforts and resources of the company to a defined target—the fundamental objectives of the enterprise’

3. Applying a stakeholder value approach in research environments

Multifarious assessment processes are associated with academic and research institutions, relating to teaching and learning and to research, and ranging from evaluation of individual courses to worldwide ranking of universities. The outcomes of such processes have very significant consequences for the income and reputation of universities, the funding and reputation of researchers and research centres, and the learning experiences and subsequent careers for students. Evaluating these processes from the perspective of our general approach suggests that there is plenty of opportunity for improvement.

This section provides a brief review of some of the work that is associated with measuring the quality of research work and of academic institutions, and then looks at some specific problems using the methods of the previous section.

3.1. Current assessment methodologies

There is a widespread desire to be able to rank individuals in a given discipline, university departments in a given discipline, research centres, universities, and so on, for purposes such as promotion, recruitment of faculty and students, and for allocation of research funding and other resources. This leads to a host of issues, including

- (a)

a (bureaucratic?) desire to avoid subjective measures,

- (b)

a (bureaucratic?) desire to use data that are ‘objective’ and easy to collect,

- (c)

a (bureaucratic?) desire for repeatability,

- (d)

a (bureaucratic?) desire to encourage efficiency and productivity,

- (e)

the cost of carrying out rankings,

- (f)

whether to use rankings or ratings

and others that will be mentioned shortly.

Naturally, competing methodologies abound for tackling issues (a)–(f) in such a way as to address the desired assessment goal. These are listed in the on-line supplementary material, together with some comments on the issue of bibliometrics. For now, we look at how the concepts that were described above can be applied to some examples of assessment in academic institutions.

3.2. Some specific cases

3.2.1. Assessing the quality of graduate programmes

Applying the Tribus paradigm immediately raises the critical issue ‘Who is asking the question?’ … to which the answer is, potentially, many different sorts of people, including

- (a)

current and prospective students,

- (b)

current and prospective faculty members,

- (c)

current and prospective employers—academic, non-academic,

- (d)

other people in the university, not least Deans, Provosts or Vice-Chancellors and other senior officers,

- (e)

university financial officers,

- (f)

granting agencies

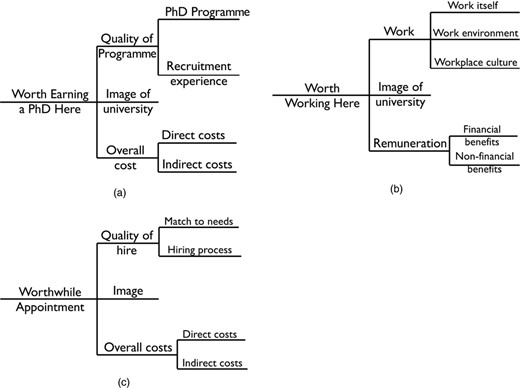

etc. And their conceptions of what constitutes quality vary considerably. Fig. 13 shows plausible top level quality trees for the first three groups. Clearly, they are very different. If that is so, how likely is it that a simple set of measures of the ‘quality’ of a graduate programme can make sense? Quality, like beauty, is in the eye of the beholder.

Plausible value trees—three examples of the differing types of people interested in the quality of a graduate programme: (a) a prospective student (quality of programme is a principal driver of value); (b) a prospective faculty member (quality of programme probably enters through work environment and image); (c) a prospective academic employer of graduates from the programme (quality of programme will be an important component of image); their views about what represents quality and metrics that might be useful in making a decision will differ markedly from situation to situation

There are several points to be made about the issue of how the various sorts of performance measures fit together, and in light of the discussion in Section 2.2.

- (a)

Not all performance measures are equal. For example, in the current context, there is a hierarchy of measures, leading to the measure of perceived quality (and, ultimately, of overall perceived value).

- (b)

There is a lead–lag interplay between hard and soft measures. In the transaction survey (Fig. 5), the internal measures are chosen as ‘hard’ (i.e. ‘objective’) measures that are likely to be good lead indicators of attribute ratings (perceptual measures of low level outcomes). Further, these hard measures are output process measures and so susceptible to management and improvement by applying standard quality improvement methods, leading to improvement in the perceptual measures.

- (c)

Good hard measures may be difficult to find. This point has already been noted in Section 2.3, point (e). It is also at the heart of the problem of measuring ‘quality’ of a department, school or university. Many obvious examples of bad hard measures come to mind immediately.

- (d)

Outcome measures are measures of perception (i.e. soft, or subjective, measures). Output measures may be hard or soft. Outcomes are rarely if ever one dimensional. However, viewed as measures of perception, they may be captured by a single measure once the key components (drivers) of the desired outcome have been identified.

Two further points flow from this: firstly, that the starting point for performance measurement must be to clarify the overall desired outcome and then to ensure alignment with this outcome; secondly that it is difficult to see how using a mixture of hard and soft measures to define the outcome makes sense.

3.2.2. Measuring the quality of applied research centres

The Australian Research Council's ‘Excellence in research in Australia’ (ERA) assessment process provides a potential basis for allocating research funding. An ERA assessment of a research group arrives at an overall summary in the form of a single number: 5 (‘Well above world standard’), 4 (‘Above world standard’), 3 (‘World standard’), 2 (‘Below world standard’) and 1 (‘Well below world standard’).

One of the ERA's stated objectives is to ‘identify excellence across the full spectrum of research performance’ (http://www.arc.gov.au/pdf/era12/ERA%20Factsheet_Nov%202012.pdf). However, it is evident that the process favours researchers publishing frequently in high quality peer-reviewed journals or authoring research monographs with leading publishing houses. Whereas some long-established Australian universities have a lengthy tradition of research that is amenable to such assessment, a number of younger universities have a charter with rather more emphasis on ‘doing research that benefits the community in this State’ (or words to that effect): in other words, useful applied research whose end customer is unlikely to be the reader of a peer-reviewed journal or book. Nonetheless, their researchers compete for Australian Research Council research funding.

One of these universities commissioned a research project to develop a way to assess the quality of applied research groups, with two goals:

- (a)

providing a defensible means of calibrating the work of the research group on the ERA five-point scale and

- (b)

identifying ways in which the university could work with the group to improve its ERA-type rating.

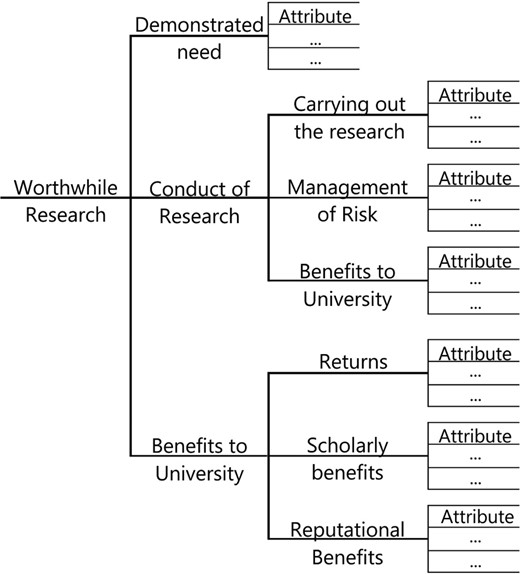

A process for doing this was developed and then carried out for one of the university's research groups. The university had already established three key criteria that they wanted to use, so an applied research group quality tree was developed with these as the main branches; Fig. 14. Each main branch will be examined in detail.

Applied research group quality tree as a basis for developing an overall ERA rating and for improvement

3.2.2.1. Demonstrated need

The purpose of demonstrated need is to provide evidence of beneficial impact of the university's research on the wider community. There are two distinct situations to be considered—

the principal beneficiaries are a few major investors in the research, for whom the impact is relatively soon after the conclusion of the research and

the impact is well removed in time and space, not infrequently a group in society who are completely unaware of the research being done

—and that calls for two quite different models.

For demonstrated need (a), overall investor value can be assessed by building a suitable version of Fig. 11, as shown in Fig. 15. Additionally, investors can be asked for business impact responses, such as willingness to reinvest with another project by this group or willingness to recommend this group to another investor for a different research-and-development project.

Possible model for investor value, for a small number of investors in a university research group

For demonstrated need (b), overall community value can be measured by building a suitable version of Fig. 10, as shown in Fig. 16. Selection of attributes may require discussion with large funding agencies.

3.2.2.2. Conduct of research

The purpose of this driver is to elicit evidence of how the Centre goes about identifying and responding to external needs. These processes will be the main focus of improvement activities. The attributes are identified by interviewing senior university officers.

3.2.2.3. Benefits to university

The purpose of this driver is to identify the range of benefits that are provided to the university by the Centre's work. Again, the attributes are identified by interviewing senior university officers. The research group is then requested to prepare a submission. This is not a lengthy document. For each major research programme, the group is asked to submit a brief report that provides

- (a)

information about the proportion of the Centre's resources involved,

- (b)

a summary of research outputs and outcomes—scholarly research, tactical research, research into dissemination and university-specific outcomes—and

- (c)

a two-page narrative that demonstrates the need for the research to be done, describes stages of the research, particularly how the research activities reach the end-users, and provides qualitative evidence of the impact of the work; and demonstrates that the university has gained benefit.