-

PDF

- Split View

-

Views

-

Cite

Cite

Adwaye M Rambojun, Hend Komber, Jennifer Rossdale, Jay Suntharalingam, Jonathan C L Rodrigues, Matthias J Ehrhardt, Audrey Repetti, Uncertainty quantification in computed tomography pulmonary angiography, PNAS Nexus, Volume 3, Issue 1, January 2024, pgad404, https://doi.org/10.1093/pnasnexus/pgad404

Close - Share Icon Share

Abstract

Computed tomography (CT) imaging of the thorax is widely used for the detection and monitoring of pulmonary embolism (PE). However, CT images can contain artifacts due to the acquisition or the processes involved in image reconstruction. Radiologists often have to distinguish between such artifacts and actual PEs. We provide a proof of concept in the form of a scalable hypothesis testing method for CT, to enable quantifying uncertainty of possible PEs. In particular, we introduce a Bayesian Framework to quantify the uncertainty of an observed compact structure that can be identified as a PE. We assess the ability of the method to operate under high-noise environments and with insufficient data.

Computed tomography (CT) imaging in medicine is widely used to visualize internal organs for diagnostic purposes. In the context of pulmonary embolism (PE) detection in the setting of acute chest pain, the PE can appear in CT scans as small structures with weak amplitude. So PE detection can be challenging in practice for clinicians, who have to decide whether the structures are PEs or not. This ambiguity can occur due to imperfect data acquisition (e.g. insufficient data, high-noise environment). In this work, we propose a computational tool to help clinicians to decide whether an observed structure is a PE or an artifact due to imperfect data. Our method quantifies the uncertainty of the structure, leveraging optimization and Bayesian theory.

Introduction

Pulmonary embolism detection with computed tomography angiography

Most medical image modalities such as computed tomography (CT), ultrasound, and magnetic resonance imaging are the result of an intricate image reconstruction process that uses noisy and incomplete captured data. In particular, CT is a popular imaging modality used to diagnose various types of pathologies, such as acute inflammatory conditions, strokes, and malignancy. X-rays are passed through the patient’s body from multiple angles and an attenuation coefficient is calculated depending on the densities of the different tissues the X-rays pass through. A reconstruction algorithm is used to create final 3D image. This algorithm is subject to creation of artifacts, i.e. structures not present in the ground truth image being captured (1). They can interfere with conclusions drawn by radiologists, who then have to infer if structures appearing in CT images are pathological or artifactual due to the inaccuracy of the data acquisition.

This is quite common when assessing CT scans for the presence of acute pulmonary embolism (PE), which is a major cause of mortality with approximately 30,000 deaths per year in the United Kingdom (2). Assessment and detection of PE and its cardiovascular complications is routinely performed with a CT pulmonary angiography (CTPA) (3). Chronic thromboembolic pulmonary hypertension is also a potential long-term disabling complication of acute PE, and CTPA is an important diagnostic tool as well as being useful to assess for operability (4). However, a variety of patient- and protocol-related factors can result in image artifacts that may impact the clinicoradiological confidence of image interpretation. If a false positive diagnosis is made, this can result in inappropriate patient treatment with anticoagulation, which is associated with an unnecessary increase in bleeding rates (5).

In this context, quantifying uncertainty of the PE-like structures observed in reconstructed CT thorax images would improve diagnosis accuracy. In this paper, we present an uncertainty quantification (UQ) framework to perform hypothesis tests on PE-like structures, and determine whether they are present in the patient thorax or are artifacts arising from inaccurate data acquisition.

Bayesian inference for imaging

Reconstruction of images from CT data can be formulated as an inverse problem. The objective is to find an estimate of an unknown image (i.e. patient’s thorax) from measurements acquired with a CT scanner (6, 7). Following a Bayesian framework (8), the image and the data are related through a statistical model. Then the estimate is inferred from according to its posterior distribution, which combines information from the likelihood, related to the observations , and the prior, used to introduce a priori information on the target image. The prior is used to regularize the model, to help to overcome ill-posedness and/or ill-conditionedness of the inverse problem. Common choices are to impose feasibility constraints, and to promote smoothness or sparsity of , possibly in some transformed domain such as wavelet, Fourier, or total variation (TV) (9).

Sampling methods, e.g. Markov chain Monte Carlo methods (MCMC), draw random samples according to the posterior distribution. These methods then allow us to form estimators [e.g. minimum mean square error estimator, posterior mean or maximum a posteriori (MAP) estimator], and to perform UQ through confidence intervals and hypothesis testing (10, 11). The main drawback of these methods is their high computational cost making them inefficient for high-dimensional problems, as encountered in imaging. Indeed, for CT imaging, the dimension of is often of the order of in the case of high-resolution lung scans (12). Although multiple works have emerged in the last years to help scaling sampling methods, e.g. (13–15), they usually remain prohibitive in such high dimensions.

Methods of choice for handling high-dimensional problems are proximal splitting optimization algorithms (16–18). These are known to be very efficient to form MAP estimates. Nevertheless, these methods only provide a point estimate, without quantifying the uncertainty on the delivered solution. To overcome this issue, recently a Bayesian Uncertainty Quantification by Optimization (BUQO) approach has been proposed in Refs. (19–21), to perform hypothesis testing on particular structures appearing on MAP estimates. The method determines whether the structures of interest are true, or are reconstruction artifacts due to acquisition inaccuracy. BUQO has the advantage of being scalable for high-dimensional problems, as the UQ problem is recast in an optimization framework, to leverage proximal splitting optimization algorithms.

UQ for PE

UQ is the main tool to assist doctors for accurate decision-making processes. Ill-posed and ill-conditioned inverse problems result in high uncertainty about the estimate. In this work, we focus on quantifying uncertainty of PE-like structures in CT thorax images. Specifically, we design a method based on BUQO to determine whether these structures are PEs, or if they are reconstruction artifacts.

Methods

In this section, we describe the steps of the proposed PE UQ technique. First, we form the CT image using an optimization algorithm (see below). Second, we identify PE-like structures in the image estimate, and postulate the null hypothesis that these structures are not present in the ground truth image, i.e. they are not in the patient’s thorax, but instead are reconstruction artifacts arising due to the ill-posedness of the problem. Third, we use our method to decide whether the null hypothesis can be rejected or not.

Bayesian inference and optimization for CT imaging

In general, the gantry of a CT scanner, which includes multiple X-ray sources and multiple detectors, will rotate around the patient’s chest. This generates an M-dimensional array of data, denoted by , consisting of attenuated X-ray intensities (6, 7). The pattern of attenuation is determined by the geometry of the area through which the beams are directed. The aim of CT reconstruction is to recover a voxel array of dimension N, denoted by , that represents the geometry of the organs inside the thorax given the observed noisy data . This can be reasonably approximated as a linear inverse problem of the form

where represents the CT measurement operator described above and is a realization of an additive independent and identically distributed (i.i.d.) random noise.

Using a Bayesian formulation, the posterior distribution of the problem, which combines information from the likelihood and the prior, can be expressed as

where f is assumed to be a log-concave likelihood associated with the statistical model of Eq. 1, and g is a log-concave prior distribution for . The usual approach to estimate is to use a MAP approach, that consists in defining as a minimizer of the negative logarithm of 2, i.e.

In this work, we assume that the exact noise distribution is unknown, but that it has a bounded energy, i.e. , where is the usual Euclidean norm, and . Then, a typical choice is to take to be the indicator function of the ball , centered in with radius . In addition, a common choice for the prior term is to promote sparsity of the image of interest in some basis (e.g. wavelet or TV). Then, Eq. 3 can be rewritten as

where the operator models a linear transform, chosen such that has only few nonzero coefficients. Eq. 4 can be solved efficiently using proximal splitting algorithms (16–18).

High-dimensional hypothesis testing

The method described in the previous section provides a point estimate of , without additional information regarding its uncertainty. In this work, we propose to perform a hypothesis test on structures that can be identified as PEs in the MAP estimate.

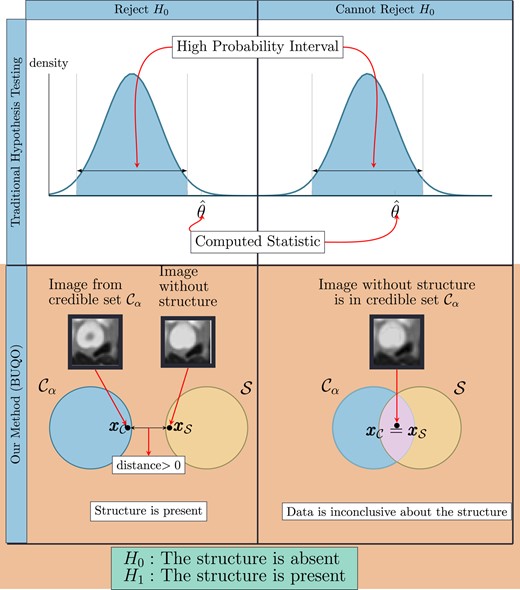

To illustrate our approach, we recall the basics of hypothesis testing. Typically, we postulate a null hypothesis, i.e. we make a claim about the distribution of observed data. We use the observed data to compute a statistic . We decide to reject or not the null hypothesis depending if lies in a high-probability interval (see Fig. 1).

Difference between the traditional hypothesis testing and our method. In traditional hypothesis testing, one computes the credible interval and the test statistic from data. The null hypothesis H0 is rejected if is not in the credible region. Similarly for our proposed method, we compute the high posterior density region and an image with the structure removed (similar to the test statistic). We reject the null hypothesis (which states that the structure is absent) if does not lie inside the credible region. This is determined by the distance between and , which are the two elements of and , respectively, that are closest to each other. If this distance is zero, we conclude that otherwise, .

This can be extended to computational imaging (20, 21), to quantify uncertainty on structures appearing on the MAP estimate , obtained by solving 4. In this context, we postulate the null hypothesis and the alternative hypothesis as follows:

: The structure is absent from the true image

: The structure is present in the true image

Formally, using Bayesian decision theory (10), we can conclude that is rejected in favor of if , where denotes the level of significance of the test. Such probability can be approximated by MCMC approaches (11); however, it becomes intractable for high-dimensional problems such as CT imaging. To overcome this difficulty, we introduce a subset of , associated with , containing all the possible images without the structure of interest.

To perform the hypothesis test, we will compare with a posterior credible set , corresponding to the set of possible solutions where most of the posterior probability mass of lies (19). Formally, satisfies . Again computing such probability in high dimension is intractable. Instead, Pereyra (19) introduced a conservative credible region , in the sense that , that does not require any additional computational cost other than building a MAP estimate , i.e. solving 4. Note that, by construction, we have , and consists of defining a feasibility set around .

The BUQO approach adopted in this work consists in determining if the intersection between and is empty. If it is empty, it means that , hence is rejected. To determine if Ø, we aim to find an image belonging to . If such image exists, it means that Ø, and it is possible to find (at least) one image supported by the data without the structure of interest, hence cannot be rejected. Otherwise Ø, and is rejected (see the second row of Fig. 1).

Hypothesis test for PE detection

In this section, we explain the proposed method to determine whether is empty or not. In addition, we give mathematical definitions of sets and , tailored for the PE UQ problem.

To find the closest image to the the MAP estimate , belonging to , one can project into . We denote this projected image. The first step is to verify if . If it is the case, then we have found an image in the intersection , and cannot be rejected, i.e. we are uncertain that the PE is present. If , it does not mean that is empty, and there might still be an image which belongs to both sets. To ascertain if the intersection is empty, we propose to equivalently compute the distance between and , denoted , and to verify if it is zero or positive. If , then we can conclude that Ø, so is rejected in favor of . Otherwise, if , there exists (at least) one image in the intersection, and hence cannot be rejected.

To evaluate , we need to minimize the distance between an element of and an element of , i.e. we want to

For our problem, the conservative credible set, associated with Eq. 4, is defined as , where . Given a candidate area that is to be assessed for the presence of a PE, we define the set as the set of images that do not contain PE-like structures at the candidate location. In particular, we want the pixel intensity profile within the structure’s area to be similar to the pixel intensity profile of a neighborhood of the structure. To this aim, we propose to define as the intersection of three sets, i.e. , given by

where is a linear operator selecting the pixels of the image corresponding to the PE area. The first set I is the positive orthant, to ensure images in are intensity images. The second set E controls the energy in the structure, ensuring that pixels inside the structure’s area are taking values around a predefined mean value , chosen according to its neighborhood. The third set S is a smoothness constraint, to control the pixel intensity variation in the structure’s area to be close to a mean value corresponding to the variations in its neighborhood. For both E and S, and are positive predefined constants to control the similarity between the structure’s area and its neighborhood.

Experiments

In this section, we present experimental results on synthetic CT data. We apply the BUQO method to real CT slices that contain a PE and assess the ability of the algorithm to detect the PEs under different noise levels and detector setups. We also apply the BUQO method to test for the presence of reconstruction artifacts that were created when simulating the forward problem. The selected reconstruction artifacts were deemed to be similar enough to PEs to be included in our study by trained radiologists. Hence, although we did not produce artifacts via the routinely encountered mechanisms such as beam hardening, their appearance had enough clinical significance for our purposes.

Experiment settings

Dataset description

CTPA was performed on multidetector array scanners (SOMATOMⓇ Drive and Definition Edge, Siemens Healthineers, Erlangen, Germany). The parameters were as follows: slice thickness, 1.2 pitch, 0.5 s rotation time, 145 kVp tube voltage, and 120 mAs with automatic dose modulation. Sixty milliliters of nonionic intravenous contrast medium (iohexol, 350 mg iodine/ml; Omnipaque 350, Amersham Health, England) were administered at 6 ml/s via an 18 G cannula. The acquisition was triggered by bolus tracking of the main pulmonary artery, with a threshold of 100 Hounsfield units (HU) and 4-s delay after triggering. The study received approval from the Research Ethics Committee and Health Research Authority (IRAS ID 284089). Informed written consent was not required.

Measurements

From these data, we consider two slices of reconstructed clinical images containing PEs. Using these slices, we simulate data to study the effect of CT acquisition quality on PE detection. To this end, we consider the model described in Eq. 1, with a forward operator modeling a parallel beam geometry with a fixed number of detectors and a variable number of acquisition angles . We generate in Eq. 1 as a realization of an i.i.d. Gaussian noise vector of size and variance . We then reconstruct the CT image by solving Eq. 4 to obtain the MAP estimate.

PE definition

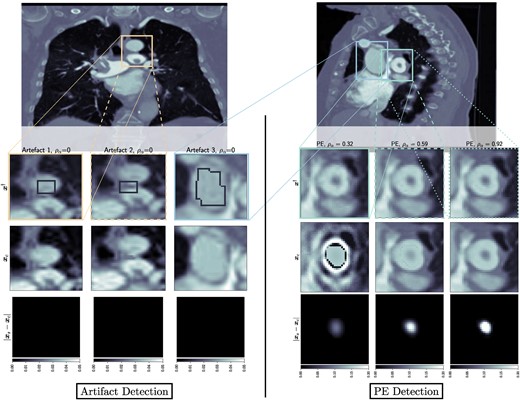

To create the masks related to the operator in Eqs. 7 and 8, we used MITK (22). Two types of masks were created by experienced clinical radiologists: masks identifying the location of real PEs appearing in the CTPA scans and masks identifying the location of PE-like artifacts appearing in the CTPA scans due to low quality of the acquired data. In Fig. 2 we show, for both slices, the PEs, and the artifacts of interest arising from the reconstruction process.

Left: Output of BUQO when used to quantify uncertainty of reconstruction artifacts. The forward problem parameters are chosen to be for all the artifacts. Right: Output of BUQO when used to quantify uncertainty of PEs, as the value of increases. The forward problem parameters are chosen to be (from the left to right column): , , and . First row: MAP estimates, zoomed on the structures of interest. Second row: Output image from BUQO. Third row: Difference images .

The set , as defined in Section 22.3, captures the pixel profile for an artery that does not have a PE. In the definition of , some parameters related to the energy and smoothness constraints must be chosen (see Eqs. 7 and 8, respectively). We propose to choose them automatically, by looking at histograms of pixel intensities and gradients in a neighborhood of the mask. Precisely, we sample pixels around the area of interest and compute the histogram of the intensities of the sampled pixel. Then, in Eq. 7, is set to be the median of this histogram, and is set to be the maximum of the difference between the upper 60th percentile and the median; and the difference between the median and the lower 60th percentile. The same is done to compute and in Eq. 8, but with the histogram of sampled gradients instead.

Result interpretation

To assess the effect of the acquisition quality (i.e. noise level σ and number of angles ) on the ability of our method to detect true structures, we introduce a structure confidence quantity

If , then and we can conclude that there exists an image without the observed structure that lies in the credible set . If , then , and the null hypothesis is rejected. The closer to one the value of is, the more certain we are that the null hypothesis should be rejected, and thus that the structure of interest is present in the true image. In practice, numerical errors must be taken into account, and the two above conditions should be relaxed as and , respectively, for some tolerance δ to be determined by the user.

Note that provides additional information than only an accept/reject hypothesis test. It can be interpreted as a percentage of the structure’s energy that is confirmed by the data. So when a selected PE-like structure is probed for UQ, provides a percentage of the structure’s energy that can be trusted.

In Fig. 1, we compare our method to traditional hypothesis testing in statistics. It is therefore natural to interpret as being equivalent to a P-value in hypothesis testing. However, accepting or rejecting the null hypothesis in our cases does not depend on some hard threshold on . There are two reasons for this. First, traditional hypothesis testing is a frequentist method, where one would typically take the output of models at face value. Our method is a Bayesian method, where one is more interested about prior and posterior distribution. As such, shows us the percentage of the structure that can be explained by the data. Setting a threshold on when to accept or reject the null hypothesis should be an application-specific matter. Second, the method we have proposed does not only generate but also generates and , whose qualitative contribution to the decision to accept or reject the null hypothesis is as important as the quantitative contribution of . Figure 2 shows the images and difference images , for different detector settings, and therefore different values of . It can be seen that nonnegative values of do not necessarily correspond to images that would be considered normal by a radiologist. However, very high values of (close to 1) tend to correspond to high fidelity images, which mimic real CT scans very well.

Results

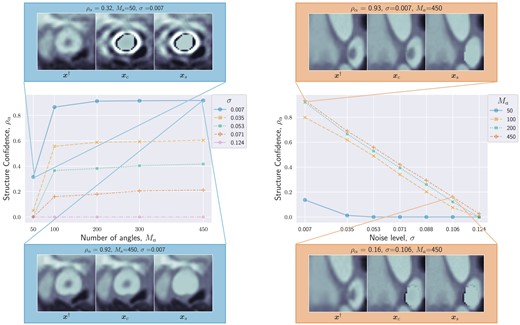

Confidence with respect to measurements

We show in Fig. 3 the behavior of for two assessed PE structures, with respect to the noise level σ for a fixed number of angles (left), and with respect to the number of angles for a fixed noise level (right). It can be observed that the ability of the algorithm to confirm the presence of PEs improves with decreasing noise levels and increasing number of angles.

Structure confidence as a function of number of angles (left) and noise level σ (right). High and low structure confidence are illustrated with qualitative examples of , and . Both plots show that as the data quality (i.e. number of angles and signal-to-noise ratio) increases, the structure confidence increases too, and we are more certain of the presence of the structure.

For the PE structure in Fig. 3(left), we provide additional results in Fig. 2(right). The images show the results of BUQO when considering , , and . In particular, the last row shows the differences (in absolute values) between and . This corresponds to the residual PE structure that is probed by BUQO. It can be seen as a 2D map version of quantity , giving the intensity value per pixel that is validated by the data. We can see that when the acquisition quality improves (i.e. σ decreases and/or increases), the intensity value per pixel that is validated by the data increases.

In Fig. 2(left), we show results of BUQO for three PE-like structures that are reconstruction artifacts. For these structures, the last row shows that the intensity value per pixel that is validated by the data is equal to 0 (i.e. ). Hence, our method cannot reject , and the data cannot support the existence of the structure.

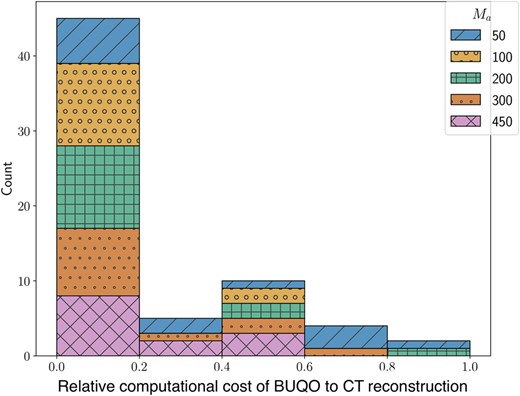

Complexity

In our experiments (see Fig. 4), we found that the numerical complexity of the proposed UQ is usually negligible compared with that of the reconstruction algorithm providing the MAP estimate. The computational bottleneck is usually the evaluation of the forward operator and its adjoint. The complexity is assessed in terms of the total number of iterations (i.e. number of evaluations of the forward operators and their adjoints) to reach convergence of the algorithms used to evaluate the MAP and for BUQO (primal-dual algorithms in both cases). Convergence is assumed to have occurred when all constrained are satisfied, and the estimates are stable, up to a fixed tolerance.

We measure the computational cost using the number of forward operator evaluations needed. The figure is a histogram of BUQO computational cost relative (as a ratio of) CT reconstruction computational cost across our experiments. In the majority of cases, the computation cost of BUQO amounts to 20% that of the image reconstruction. The overhead is not only small but also does not depend on the imaging setting.

Discussion

We have introduced an UQ method in CT imaging that can be used to assess PE-like structures observed in CT scans. We have simulated different acquisition environments by varying the number of measurements and the noise level in the forward problem and used the resulting MAP estimate to investigate the behavior of the proposed method to quantify uncertainty of PE-like structures. Our method demonstrates diminishing confidence with a decrease in data quality, while correctly identifying reconstruction artifacts produced in simulation using low-quality data. In this closing section, we go over the strengths and weaknesses of the proposed method.

Manual annotations

The proposed method requires three inputs, namely the MAP estimate, the mask that isolates the area under investigation, and the set , which represents our prior knowledge.

Currently, the mask is the result of a time-consuming manual segmentation exercise, done by experienced clinical radiologists which can be replaced by PE and artifact detection models that leverage artificial intelligence (23, 24).

The set is built making use of a constraint defined in the gradient domain of the image (which is unsuitable for artifacts appearing close to a boundary) and is done by manual sampling (which is time consuming). Instead, the set could be the result of a data-driven method such as generative Artificial Intelligence (25). Hence, a full pipeline would consist of data-driven methods for PE and artifact detection, a generative model to define the set and finally the BUQO method to quantify the uncertainty of observed features.

Clinical use

Acute PE carries a significant associated morbidity and mortality and thus improvement in the degree of radiologist certainty in the positive identification of acute PEs in clinical practice is paramount. It is also important to improve the degree of radiologist certainty in identifying artifacts as such rather than false positive PEs, in order to avoid inappropriate treatment with anticoagulation and unnecessary bleeding risks. Further work is needed to validate the described method in clinical practice. In particular, the artifact creation should be more realistic to reflect those ecnountered in routine clinical care. Those presented in this paper served the purpose of a proof-of-concept study only.

Note

Here, N is the product of the individual dimensions of the 3D voxel array.

Funding

M.J.E. acknowledges support from the EPSRC (EP/S026045/1, EP/T026693/1, EP/V026259/1) and the Leverhulme Trust (ECF-2019-478). A.R. acknowledges support from the Royal Society of Edinburgh. All authors were supported by the Research Capability Funding of the Royal United Hospital.

Author Contributions

A.M.R.—conceptualization, methodology, software, data curation, writing-original draft preparation, investigation, visualization. H.K.—data curation, resources, validation. J.R.—data curation, resources. J.S.—funding acquisition. J.C.L.R.—project administration, funding acquisition, resources, validation. M.J.E.—conceptualization, methodology, writing–reviewing and editing, supervision, project administration, funding acquisition. A.R.—conceptualization, methodology, software, writing–reviewing and editing, supervision, project administration, funding acquisition.

Preprints

This manuscript was posted on arxiv as a preprint arXiv.2301.02467.

Data Availability

The matlab code to reproduce the results along with links to the data can be found at https://github.com/adwaye/test˙ct.

References

Siemens somatom manual [accessed 2022 Aug 30]. https://www.manualslib.com/manual/524455/Siemens-Somatom.html.

Author notes

M.J.E. and A.R. are joint last authors.

Competing Interest: J.C.L.R. makes the following disclosures: speaker’s fees—Sanofi, consultancy fees—NHSX, physician services—HeartFlow, co-founder and share holder—Heart & Lung Imaging LTD, part-time employee and share holder—RadNet. J.S. has received speakers fees, consultancy fees, and travel grants from AstraZeneca, Chiesi, MSD, and Janssen Pharmaceuticals.