Georgios Lioutas, Andreas Bauswein, Theodoros Soultanis, Rüdiger Pakmor, Volker Springel, Friedrich K Röpke, General relativistic moving-mesh hydrodynamic simulations with arepo and applications to neutron star mergers, Monthly Notices of the Royal Astronomical Society, Volume 528, Issue 2, February 2024, Pages 1906–1929, https://doi.org/10.1093/mnras/stae057


ABSTRACT

We implement general relativistic hydrodynamics in the moving-mesh code arepo. We also couple a solver for the Einstein field equations employing the conformal flatness approximation. The implementation is validated by evolving isolated static neutron stars using a fixed metric or a dynamical space–time. In both tests, the frequencies of the radial oscillation mode match those of independent calculations. We run the first moving-mesh simulation of a neutron star merger. The simulation includes a scheme to adaptively refine or derefine cells, thereby adjusting the local resolution dynamically. The general dynamics are in agreement with independent smoothed particle hydrodynamics and static-mesh simulations of neutron star mergers. In a coarse comparison, we find that dynamical features like the post-merger double-core structure or the quasi-radial oscillation mode persist on longer time-scales, possibly reflecting a low numerical diffusivity of our method. Similarly, the post-merger gravitational wave emission shows the same features as observed in simulations with other codes. In particular, the main frequency of the post-merger phase is found to be in good agreement with independent results for the same binary system, while, in comparison, the amplitude of the post-merger gravitational wave signal falls off more slowly, i.e. the post-merger oscillations are less damped. The successful implementation of general relativistic hydrodynamics in the moving-mesh arepo code, including a dynamical space–time evolution, provides a fundamentally new tool to simulate general relativistic problems in astrophysics.

1 INTRODUCTION

Numerical simulations are an important tool to study astrophysical systems involving compact objects such as core-collapse supernovae and binary mergers (Faber & Rasio 2012; Janka 2012; Rosswog 2015b; Baiotti & Rezzolla 2017; Foglizzo 2017; Janka 2017; Kotake & Kuroda 2017; Martínez-Pinedo et al. 2017; Roberts & Reddy 2017; Baiotti 2019; Bauswein & Stergioulas 2019; Duez & Zlochower 2019; Shibata & Hotokezaka 2019; Bernuzzi 2020; Radice, Bernuzzi & Perego 2020; Dietrich, Hinderer & Samajdar 2021; Janka & Bauswein 2022). The interpretation of the observations and the extraction of physics from such astronomical measurements to a large extent rely on numerical modelling of these events. For instance, observing binary neutron star (BNS) mergers provides the opportunity to study properties of high-density matter and the formation of heavy elements among several other fascinating aspects like short gamma-ray bursts. The recent simultaneous measurement of the inspiral stage of the BNS merger GW170817 and its electromagnetic counterparts (Villar et al. 2017; Abbott et al. 2017a, b), and especially the conclusions drawn from it, highlight the importance of numerical studies of these systems. Simulating compact objects can be challenging because many scenarios require the concurrent resolution of disparate length and time-scales of a highly dynamical system in three dimensions and the inclusion of various physical effects.

The bulk dynamics of such systems is governed by relativistic hydrodynamics in combination with a dynamical space–time. There exist several approaches to numerically treat relativistic hydrodynamics: most prominent are Eulerian grid-based methods (including finite-difference, finite-volume, or discontinuous Galerkin schemes) and Lagrangian smoothed particle hydrodynamics (SPH; for reviews see e.g. Wilson & Mathews 2003; Alcubierre 2008; Font 2008; Baumgarte & Shapiro 2010; Rezzolla & Zanotti 2013; Martí & Müller 2015; Shibata 2015; Rosswog 2015a, and references therein). Font (2008), Baiotti & Rezzolla (2017), and Foucart et al. (2022) provide a survey of codes currently used to tackle general relativistic hydrodynamic (GRHD) problems mostly in the context of binary mergers; some recent relativistic SPH tools are presented in Liptai & Price (2019) (adopting a fixed metric) and in Rosswog & Diener (2021) and Diener, Rosswog & Torsello (2022) (including a dynamical evolution of the space–time and applications to neutron star mergers).

Both Eulerian grid-based methods and SPH have specific advantages and limitations (see the above references for more detailed discussions). Eulerian methods solve the GRHD equations on a static mesh, where many modern codes include (adaptive) mesh refinement techniques to resolve specific regions of interest. These methods accurately resolve shocks and fluid instabilities through the implementation of high-resolution shock-capturing methods. However, they may suffer from grid effects, require a special treatment of vacuum regions, and, in the case of compact object mergers, following small amounts of high-velocity ejecta at large distances can be challenging.

SPH solves the Lagrangian hydrodynamic equations (comoving with the fluid) on particles representing a certain amount of rest mass. Advection and rest mass conservation are treated with high accuracy and the scheme offers an inherently adaptive resolution. Vacuum regions do not require a special treatment and tracer particles for nucleosynthesis calculations are trivially implemented. Traditionally, SPH is considered to resolve shocks and fluid instabilities less accurately than high-resolution shock-capturing methods (but see e.g. Rosswog 2015a, and references therein for more modern techniques showing significant improvements). Notably, the Einstein equations cannot be solved in a particle-based discretization and thus require the inclusion of an additional computational grid and corresponding communication between both computational structures (similar problems may arise for treating other non-zero fields in the vacuum like magnetic fields).

Springel (2010) introduced the moving-mesh code arepo, which combines some of the advantages of Lagrangian SPH and Eulerian mesh-based hydrodynamics. arepo solves Newtonian hydrodynamics with a finite-volume approach on a moving unstructured mesh, which is constructed based on a set of mesh-generating points. The moving-mesh approach retains many of the advantages of mesh-based methods, while the mesh-generating points can move in an arbitrary way (see Springel 2010, for more details). In recent years, arepo has been employed for a wide range of astrophysical problems in cosmology, Type Ia supernovae, the common envelope phase in binary stars and various other systems (see e.g. Pakmor et al. 2013; Vogelsberger et al. 2014; Ohlmann et al. 2016; Weinberger et al. 2017; Koudmani et al. 2019; Schneider et al. 2019; Gronow et al. 2021; Pakmor et al. 2022). A number of other moving-mesh codes have subsequently been developed and applied to various astrophysical problems (Duffell & MacFadyen 2011, 2012; Gaburov, Johansen & Levin 2012; Duffell & MacFadyen 2013; Yalinewich, Steinberg & Sari 2015; Vandenbroucke & De Rijcke 2016; Chang, Wadsley & Quinn 2017; Ayache, van Eerten & Eardley 2022) with several works investigating high-order schemes on moving meshes (see e.g. Dumbser, Uuriintsetseg & Zanotti 2013; Dumbser et al. 2017; Gaburro et al. 2020, and references therein). All these applications have generally shown the usefulness and benefits of the moving-mesh approach as compared to more traditional schemes.

Moving-mesh codes can follow the fluid motion and allow resolution to be placed flexibly in physically interesting regions. Hence, they offer adaptive resolution that follows the matter motion, including the possibility to split or merge cells and thereby adaptively increase or decrease the resolving power. The quasi-Lagrangian nature of the scheme reduces numerical advection errors. These elements make moving-mesh codes particularly interesting for simulating compact objects and in particular BNS systems. In recent years, some moving-mesh codes have been extended to include GRHD (Ryan & MacFadyen 2017; Chang & Etienne 2020). However, all these implementations currently employ a fixed space–time, and to date no moving-mesh code evolves the space–time dynamically, as would be required, for instance, to simulate neutron star mergers.

In this work, we extend arepo to simulate general relativistic systems (based on the upgraded implementation described in Pakmor et al. 2016). We implement GRHD into the code employing the Valencia formulation (Banyuls et al. 1997) and couple to it a solver for a dynamical space–time. The Einstein equations are solved on an independent overlaid grid adopting the conformal flatness approximation (Isenberg & Nester 1980; Wilson, Mathews & Marronetti 1996). We also include some additional modules to simulate neutron stars such as a high-density equation of state (EOS). We demonstrate the performance of the code in relativistic shock tube and blast wave problems. We further validate our implementation by computing equilibrium models of isolated neutron stars, which are benchmarked by comparing pulsation frequencies to perturbative results and other codes. Finally, we perform the first moving-mesh simulation of a BNS merger. We evolve the system for almost 40 ms into the post-merger phase and discuss the dynamical properties of the remnant and the characteristics of the GW signal.

The paper is structured as follows. Section 2 introduces the theoretical framework of our work. In Section 3, we provide details of our numerical implementation focusing on modifications with respect to the original code (Springel 2010; Pakmor et al. 2016). In Section 4, we present simulations of isolated, static stars. In Section 5, we describe the initial data for BNSs and present a BNS merger simulation. In the last section, we provide a summary of our work and outline future plans. We also include appendices where we provide additional details on the theoretical formulation (Appendix A), present relativistic shock tube (Appendix B) and blast wave tests (Appendix C), and investigate certain aspects of the numerical treatment with additional isolated neutron star and BNS merger simulations (Appendix D). Throughout this work we set c = G = 1, unless otherwise specified. Greek indices denote space–time components, while Latin indices refer to spatial components.

2 THEORETICAL FORMULATION

We briefly present the basic equations implemented in the relativistic version of arepo.

2.1 Field equations

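We adopt the ADM formalism (Arnowitt, Deser & Misner 2008) to foliate the space–time into a set of non-intersecting space-like hypersurfaces with a constant coordinate time t. The general metric element then reads

|$\mathrm{d}s^2 = g_{\mu \nu }\,\mathrm{d}x^\mu \,\mathrm{d}x^\nu = \left(-\alpha ^2 + \beta _i\beta ^i\right)\mathrm{d}t^2 + 2\beta _i\,\mathrm{d}x^i\,\mathrm{d}t + \gamma _{ij}\,\mathrm{d}x^i\,\mathrm{d}x^j, \qquad (1)$|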

where gμν is the space–time 4-metric, α denotes the lapse function, βi is the shift vector, and γij the spatial 3-metric.

In this work, we impose the conformal flatness condition (Isenberg & Nester 1980; Wilson, Mathews & Marronetti 1996), which approximates the spatial part of the metric as

|$\gamma _{ij} = \psi ^4 \hat{\gamma }_{ij}, \qquad (2)$|

where ψ is the conformal factor and |$\hat{\gamma }_{ij}$| is the flat metric, i.e. |$\hat{\gamma }_{ij}=\delta _{ij}$| in Cartesian isotropic coordinates, which we use in our treatment.

Adopting the maximal slicing condition Tr(Kij) = 0, where Kij is the extrinsic curvature, the Einstein equations reduce to a set of five coupled non-linear elliptic differential equations and read

where E, S, and |$S_i$| are matter source terms. We adopt the energy–momentum tensor of a perfect fluid, namely

|$T^{\mu \nu } = \rho h u^\mu u^\nu + p g^{\mu \nu }, \qquad (6)$|

where ρ is the rest-mass density, h = 1 + ϵ + p/ρ is the specific enthalpy, ϵ is the specific internal energy, p is the pressure, and uμ is the 4-velocity of the fluid. Then, in the system of differential equations (3)–(5), the various matter contributions in the source terms are given by

Within the conformal flatness approximation, the extrinsic curvature follows directly from the metric elements as

Following Baumgarte et al. (1998), we introduce the definition |$\beta ^i=B^i-\frac{1}{4}\partial _i\chi$|. Then equation (5) can be rewritten as two Poisson-like differential equations for the two auxiliary fields Bi and χ, which read

and can be solved iteratively.

For more details about the numerical implementation see Section 3.7 and Oechslin, Rosswog & Thielemann (2002) and Oechslin, Janka & Marek (2007).

2.2 General relativistic hydrodynamics

The equations of GRHD result from the conservation laws for the energy–momentum tensor Tμν and matter current density Jμ = ρuμ. By choosing a set of appropriate conserved variables, the conservation laws can be written in the form of a first-order flux-conservative hyperbolic system of equations which reads

where |$\boldsymbol {U}$| is the state vector, |$\boldsymbol {F}^i$| the flux vector, |$\boldsymbol {S}$| is the source vector and |$\gamma =\det (\gamma _{ij})$| the determinant of the 3-metric (Banyuls et al. 1997; Font 2008).

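The state, flux, and source vectors are functions of the primitive variables |$\boldsymbol {W} = (\rho , \upsilon ^i, \epsilon)$|, where |$\upsilon ^i = (u^i/u^0 + \beta ^i)/\alpha$| is the fluid 3-velocity. The state vector consists of the conserved variables and reads

|$\boldsymbol {U} = (D, S_i, \tau ) = (\rho W, \rho h W^2 \upsilon _i, \rho h W^2 - p - D), \qquad (14)$|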

where |$W = \alpha u^0 = (1 - \gamma _{ij}\upsilon ^i\upsilon ^j)^{-1/2}$| is the Lorentz factor. Furthermore, the flux and source vectors are given by

and

respectively. Here, |$\Gamma ^\lambda _{\nu \mu }$| are the Christoffel symbols of the metric. In the following sections, we also employ the definitions |$\boldsymbol {\mathcal {U}}=\sqrt{\gamma }\boldsymbol {U}$| and |$\boldsymbol {\mathcal {F}}^i=\sqrt{\gamma }\boldsymbol {F}^i$|.

2.3 Equation of state

In order to close the system of GRHD equations (13), one needs to specify an EOS. We implement three different options for the EOS.

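The first option is an (isentropic) polytropic EOS

|$p = K\rho ^\Gamma , \qquad (17)$|

|$\epsilon = \frac{K\rho ^{\Gamma -1}}{\Gamma -1}, \qquad (18)$|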

where K is the polytropic constant and Γ is the polytropic index.

The polytropic EOS is suitable for an evolution of the system, where the equation for τ is not evolved. The value of the specific internal energy is instead analytically computed based on equation (18).

An evolution with the polytropic EOS fails to capture a number of dynamical processes (e.g. shocks). Hence, we also implement an ideal gas EOS

|$p = (\Gamma -1)\rho \epsilon , \qquad (19)$|

which we use for some of our tests (see Section 4.1).

Finally, we include a module for hybrid EOSs, which employs a zero-temperature tabulated microphysical EOS complemented by an ideal-gas component to capture thermal effects (Janka, Zwerger & Moenchmeyer 1993). In this EOS, the pressure and specific internal energy read

|$p = p_\mathrm{cold} + p_\mathrm{th}, \qquad (20)$|

|$\epsilon = \epsilon _\mathrm{cold} + \epsilon _\mathrm{th}, \qquad (21)$|

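where |$p_\mathrm{cold}$| and |$\epsilon _\mathrm{cold}$| refer to the microphysical EOS and are functions of ρ. The thermal pressure is given by

|$p_\mathrm{th} = (\Gamma _\mathrm{th}-1)\rho \epsilon _\mathrm{th}, \qquad (22)$|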

where ϵth follows from ϵth = ϵ − ϵcold(ρ) and Γth is an appropriately chosen constant, typically in the range between 1.5 and 2 for neutron star applications (Bauswein, Janka & Oechslin 2010). Within this framework, we estimate the temperature Tth based on the thermal energy through

where k is the Boltzmann constant and mB the baryon mass.
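The hybrid construction can be illustrated with a minimal sketch. It is not the arepo module: the cold part is replaced by an analytic polytrope as a stand-in for the tabulated microphysical EOS, and the function name `hybrid_eos`, the default parameter values, and the clipping of negative thermal energy to zero are assumptions made for this example only.

```python
import math


def hybrid_eos(rho, eps, K=100.0, Gamma=2.0, Gamma_th=1.75):
    """Return (pressure, thermal specific internal energy) for a hybrid EOS.

    Assumption: the cold part is a polytrope standing in for a tabulated
    zero-temperature EOS; units with c = G = 1.
    """
    # Cold contribution, a function of rho only.
    p_cold = K * rho**Gamma
    eps_cold = K * rho ** (Gamma - 1.0) / (Gamma - 1.0)

    # Thermal part from the excess over the cold internal energy.
    # Clipping to zero is an assumption of this sketch.
    eps_th = max(eps - eps_cold, 0.0)
    p_th = (Gamma_th - 1.0) * rho * eps_th

    return p_cold + p_th, eps_th


# Usage: a fluid element slightly hotter than the cold EOS.
p, eps_th = hybrid_eos(rho=1.0e-3, eps=0.12)
```

With the values above, the cold specific internal energy is 0.1, so the thermal part carries the remaining 0.02 and adds a pressure of |$(\Gamma _\mathrm{th}-1)\rho \epsilon _\mathrm{th}$| to the cold pressure.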

3 NUMERICAL IMPLEMENTATION

We describe the most important steps of our numerical implementation focusing on the modifications and additions to the original arepo code (Springel 2010; Pakmor et al. 2016). This includes a number of standard methods, as well as additional techniques specific to the moving-mesh approach which we adopt for solving the GRHD equations. Based on the employed schemes, our implementation is formally second-order both in space and time.

3.1 Time update

arepo constructs an unstructured Voronoi mesh based on the positions of a set of mesh-generating points. The equations of hydrodynamics are discretized on this mesh in a finite-volume fashion (Springel 2010). Mesh-generating points can be moved simultaneously to the hydrodynamical evolution and this allows a dynamical reconfiguration of the computational grid. For each cell i, the volume-integrated conserved variables read

|$\boldsymbol {Q}_i = \int _{V_i} \boldsymbol {\mathcal {U}}\, {\rm d}V = \int _{V_i} \sqrt{\gamma }\,\boldsymbol {U}\, {\rm d}V. \qquad (24)$|

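The state |$\boldsymbol {Q}_i^n$| at time tn is evolved to the next time-step tn + 1 using Heun’s method, which is a second-order Runge–Kutta scheme (Pakmor et al. 2016). The time-updated state |$\boldsymbol {Q}_i^{n+1}$| is given by

|$\boldsymbol {Q}_i^{n+1} = \boldsymbol {Q}_i^n - \frac{\Delta t}{2}\left[\sum _j A_{ij}^n \hat{\boldsymbol {F}}_{ij}\left(\boldsymbol {W}_{ij}^n, \boldsymbol {W}_{ji}^n\right) + \sum _j A_{ij}^{\prime } \hat{\boldsymbol {F}}_{ij}^{\prime }\left(\boldsymbol {W}_{ij}^{\prime }, \boldsymbol {W}_{ji}^{\prime }\right)\right] + \frac{\Delta t}{2}\left(\boldsymbol {\mathcal {S}}_i^n + \boldsymbol {\mathcal {S}}_i^{\prime }\right), \qquad (25)$|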

where the index j runs over all neighbouring cells of cell i and Δt is the time-step. Aij is the interface area between cells i and j, while |$\hat{\boldsymbol {F}}_{ij}$| is an approximate Riemann solver estimate for the fluxes through Aij (see Section 3.3). The fluxes depend on |$\boldsymbol {W}_{ij}$| and |$\boldsymbol {W}_{ji}$|, which are the reconstructed primitive variables from the centre of cell i (or j respectively) to the cell interfaces (see Section 3.2). |$\boldsymbol {\mathcal {S}}_i = \int _{V_i} \boldsymbol {S} {\rm d}V$| are the volume-integrated source terms computed for cell i.

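Within the Heun method a forward Euler integration has to be performed, which estimates the states at the end of the time-step as

|$\boldsymbol {Q}_i^{\prime } = \boldsymbol {Q}_i^n - \Delta t \sum _j A_{ij}^n \hat{\boldsymbol {F}}_{ij}\left(\boldsymbol {W}_{ij}^n, \boldsymbol {W}_{ji}^n\right) + \Delta t\, \boldsymbol {\mathcal {S}}_i^n. \qquad (26)$|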

These estimates are used to compute the fluxes |$\hat{\boldsymbol {F}}^{\prime }_{ij}$| and source terms |$\boldsymbol {\mathcal {S}}_i^{\prime }$| at the end of the time-step, where the primitives are recovered and reconstructed from |$\boldsymbol {Q}_i^{\prime }$|.

Within the time-step we also move the mesh-generating points and construct a new mesh. As a result, the mesh geometry is different at the beginning and the end of the time-step. This is already apparent in equation (25), where we employ different terms for the face areas at the beginning and end of the time-step, i.e. |$A_{ij}^n$| and |$A_{ij}^{\prime }$|, respectively. We update the positions of the mesh-generating points as

|$\boldsymbol {r}_i^{\prime } = \boldsymbol {r}_i^n + \Delta t\, \boldsymbol {w}_i^n, \qquad (27)$|

where |$\boldsymbol {r}_i$| denotes the coordinates of the mesh-generating point and |$\boldsymbol {w}_i$| is the point’s velocity. As described in Pakmor et al. (2016), we keep the velocity of each mesh-generating point constant throughout the whole time-step (i.e. |$\boldsymbol {w}^{\prime }_i=\boldsymbol {w}^n_i$|). By doing so, the mesh which is constructed at the end of the current time-step matches the mesh at the beginning of the next time-step (i.e. |$A_{ij}^{\prime }\equiv A_{ij}^{n+1}$|). This highlights the benefit of using Heun’s method because it requires practically only one mesh construction per time-step.

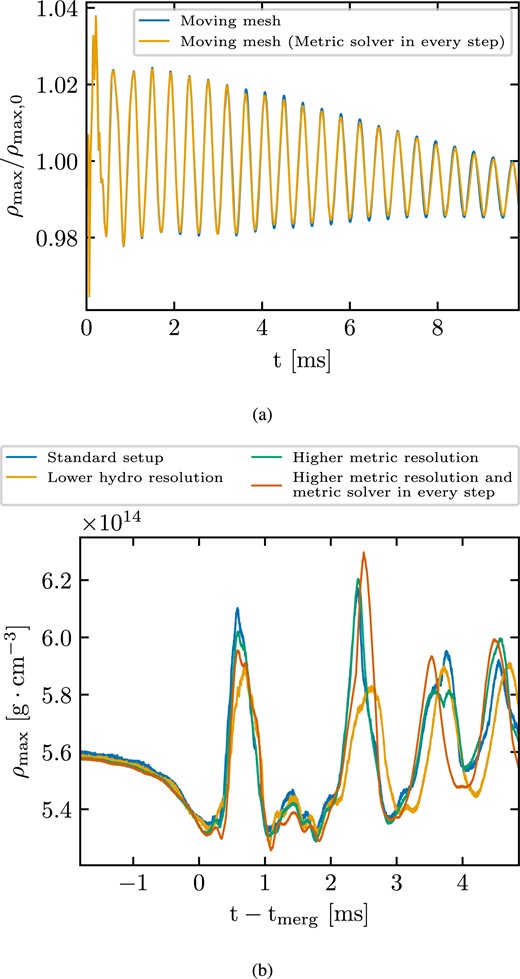

In the applications discussed in this paper, the metric fields do not change too rapidly, in the sense that their variations over a time-step are small. In this situation, one may avoid solving the metric field equations in every time-step to save computing time. We can explicitly solve the field equations every few time-steps and use this information to extrapolate the metric in the intermediate time-steps. We note that similar approaches are used also in other codes which employ the conformal flatness condition (see e.g. Dimmelmeier, Font & Müller 2002; Bucciantini & Del Zanna 2011; Cheong, Lin & Li 2020; Cheong et al. 2021). In the simulations performed in this work, we update the metric at the beginning of each of the two substeps of Heun’s method. We explicitly solve the metric field equations in each Heun substep for the first nine time-steps. Subsequently, we call the metric solver in the first Heun substep of every fifth time-step. For the remaining substeps, we estimate the metric using a parabolic extrapolation based on the last three metric solutions. The extrapolation is performed on the metric grid. Explicitly solving the metric field equations in the first time-steps ensures the stability of the scheme after importing initial data and provides the necessary number of collocation points for the extrapolation. We test this procedure and evaluate the agreement with simulations where we solve the metric equations in every substep in Appendix D2. The extrapolation significantly reduces the computational effort.
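The parabolic extrapolation from the last three metric solutions can be sketched as a quadratic Lagrange polynomial in time, applied point-wise on the metric grid. This is only an illustration under that assumption; the function name `extrapolate_parabolic` and the array layout are hypothetical and not taken from the code described here.

```python
import numpy as np


def extrapolate_parabolic(times, fields, t_new):
    """Extrapolate a gridded field to t_new from three earlier solutions.

    times  : the three distinct solver times (t0, t1, t2)
    fields : the three field arrays on the metric grid at those times
    Uses the quadratic Lagrange polynomial through the three samples.
    """
    t0, t1, t2 = times
    f0, f1, f2 = (np.asarray(f, dtype=float) for f in fields)

    # Lagrange basis weights evaluated at the target time.
    l0 = (t_new - t1) * (t_new - t2) / ((t0 - t1) * (t0 - t2))
    l1 = (t_new - t0) * (t_new - t2) / ((t1 - t0) * (t1 - t2))
    l2 = (t_new - t0) * (t_new - t1) / ((t2 - t0) * (t2 - t1))

    return l0 * f0 + l1 * f1 + l2 * f2


# Usage: solver calls every fifth step (t = 0, 5, 10), estimate at t = 12.
def sample(t):
    return np.array([1.0 + 2.0 * t + 3.0 * t**2, 1.0 - 0.01 * t])

psi_est = extrapolate_parabolic((0.0, 5.0, 10.0),
                                (sample(0.0), sample(5.0), sample(10.0)),
                                12.0)
```

The weights depend only on the times, so the same three numbers are reused for every grid point and every metric field, which keeps the cost of an extrapolated substep negligible compared to a multigrid solve.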

arepo can update cell states based on an individual time-step for each cell. It employs a power-of-two hierarchy to account for different cell sizes and achieve synchronization (see Springel 2010 for more details). We have not yet experimented with this feature, which we plan to test in future work. Hence, at the moment, we do not employ this functionality and use a single global time-step instead. In all the simulations presented in this work we apply the Courant–Friedrichs–Lewy (CFL) condition with a CFL factor |$C_\mathrm{CFL} = 0.3$| to compute the maximum allowed time-step |$\Delta t_i$| for each cell. For a cell with volume |$V_i$|, we employ |$[3V_i/(4\pi )]^{1/3}$| as an effective radius for the cell in order to compute |$\Delta t_i$|. Then the global time-step is given by

|$\Delta t = \frac{T_\mathrm{tot}}{2^N}, \qquad (28)$|

where Ttot is the total simulation time, which is a free parameter, and N is the smallest integer value for which |$\Delta t \lt \min \limits _i \Delta t_i$| holds.
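A minimal sketch of this time-step selection follows. The per-cell signal speed used in the CFL estimate is an assumption of the example (the text above does not specify it), as are the function name `global_timestep` and the restriction to non-negative N.

```python
import numpy as np


def global_timestep(volumes, signal_speeds, t_total, c_cfl=0.3):
    """Return (dt, N) with dt = t_total / 2**N strictly below min_i dt_i.

    dt_i = c_cfl * R_i / v_i, with R_i = [3 V_i / (4 pi)]**(1/3) the
    effective cell radius. The signal speed v_i is an assumed input.
    """
    radii = (3.0 * np.asarray(volumes, dtype=float) / (4.0 * np.pi)) ** (1.0 / 3.0)
    dt_cells = c_cfl * radii / np.asarray(signal_speeds, dtype=float)
    dt_min = float(dt_cells.min())

    # Halve the total time until the step drops below the CFL limit;
    # repeated halving is exact in floating point (assumes N >= 0).
    dt, n = float(t_total), 0
    while dt >= dt_min:
        dt *= 0.5
        n += 1
    return dt, n


# Usage: two cells with effective radii 1 and 2, signal speed 1 (c = 1).
vols = [4.0 * np.pi / 3.0, 8.0 * 4.0 * np.pi / 3.0]
dt, n = global_timestep(vols, [1.0, 1.0], t_total=1.0)
```

In this example the smallest per-cell limit is about 0.3, so the loop stops at N = 2 with a global step of 0.25.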

3.2 Reconstruction of primitive variables

To compute the flux terms in equation (25), we need to reconstruct the primitive variables from the cell centre to the mid-points of the faces. arepo linearly approximates any quantity ϕ from the centre of mass of the cell |$\boldsymbol {s}_i$| to any other point within the cell |$\boldsymbol {r}$| as

|$\phi (\boldsymbol {r}) = \phi _i + \langle \nabla \phi \rangle _i \cdot (\boldsymbol {r} - \boldsymbol {s}_i), \qquad (29)$|

where 〈∇ϕ〉i is an estimate of the gradient of ϕ within the cell (see Pakmor et al. 2016, for more details on computing the gradient estimate).

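In addition, the gradients are slope limited. The original implementation of arepo replaces the gradient estimate |$\langle \nabla \phi \rangle _i$| by |$\alpha _i\langle \nabla \phi \rangle _i$|, where |$\alpha _i = \min (1, \psi _{ij})$| (Springel 2010). The index j refers to neighbouring cells of cell i and the quantity |$\psi _{ij}$| is defined as

|$\psi _{ij} = \begin{cases} (\phi _i^\mathrm{max} - \phi _i)/\Delta \phi _{ij} & \mathrm{for}\ \Delta \phi _{ij} \gt 0, \\ (\phi _i^\mathrm{min} - \phi _i)/\Delta \phi _{ij} & \mathrm{for}\ \Delta \phi _{ij} \lt 0, \\ 1 & \mathrm{for}\ \Delta \phi _{ij} = 0, \end{cases} \qquad (30)$|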

where |$\Delta \phi _{ij} = \langle \nabla \phi \rangle _i \cdot (\boldsymbol {f}_{ij}-\boldsymbol {s}_i)$| is the estimate of the change in ϕ between the centre of cell i and the centroid of the interface between cells i and j (denoted by |$\boldsymbol {f}_{ij}$|; Barth & Jespersen 1989). Furthermore, |$\phi _i^\mathrm{max}$| and |$\phi _i^\mathrm{min}$| are the maximum and minimum values among all the neighbours, including cell i.
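The limiter of the original arepo implementation (Barth & Jespersen 1989) can be sketched for a single cell as follows. The function name `limit_gradient` and its argument layout are hypothetical; the sketch only assumes that the unlimited gradient, the neighbour values, and the face centroids are already available.

```python
import numpy as np


def limit_gradient(phi_i, phi_neighbours, grad, s_i, face_centroids):
    """Scale the gradient so that linear reconstruction to every face
    centroid stays within the min/max of the cell and its neighbours."""
    grad = np.asarray(grad, dtype=float)
    s_i = np.asarray(s_i, dtype=float)
    phi_max = max(phi_i, float(np.max(phi_neighbours)))
    phi_min = min(phi_i, float(np.min(phi_neighbours)))

    alpha = 1.0
    for f_ij in np.asarray(face_centroids, dtype=float):
        # Change of phi from the centre of mass to the face centroid.
        dphi = float(np.dot(grad, f_ij - s_i))
        if dphi > 0.0:
            psi = (phi_max - phi_i) / dphi
        elif dphi < 0.0:
            psi = (phi_min - phi_i) / dphi
        else:
            psi = 1.0
        alpha = min(alpha, psi)

    return alpha * grad


# Usage: the unlimited slope would overshoot the right-hand neighbour.
limited = limit_gradient(
    phi_i=1.0,
    phi_neighbours=[0.0, 1.5],
    grad=[2.0, 0.0],
    s_i=[0.0, 0.0],
    face_centroids=[[0.5, 0.0], [-0.5, 0.0]],
)
```

Here the unlimited reconstruction would give 2.0 at the right face, above the neighbour maximum of 1.5, so the gradient is halved; the left face alone would not have required any limiting.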

Alternatively, we apply the monotonized central (MC) difference slope limiter (Van Leer 1977) to the gradient estimate in order to ensure that the scheme is total variation diminishing (Harten 1983, 1984). We follow the approach outlined in Darwish & Moukalled (2003) for the extension of slope limiters to unstructured grids. Other choices for the slope limiter are also possible. However, the MC slope limiter was shown to perform better in simulations of single relativistic stars (Font et al. 2002).

For the simulations that we present in this work, we employ slope-limited reconstruction with the MC slope limiter, unless otherwise stated. We also highlight that there is ongoing work on higher order reconstruction schemes on moving meshes (see e.g. Dumbser et al. 2017; Gaburro et al. 2020, and references therein).

3.3 Riemann problem

The original (Newtonian) implementation of arepo solves the Riemann problem at each face in the rest frame of the face. This involves estimating the velocity |$\tilde{\boldsymbol {w}}_{ij}$| of the common face between each pair of neighbouring cells i and j (see section 3.3 in Springel 2010 for how to estimate |$\tilde{\boldsymbol {w}}_{ij}$|) and boosting the corresponding cell states by |$\tilde{\boldsymbol {w}}_{ij}$|. The states on both sides of the mid-point of the face (denoted as left/right) follow from reconstructing the primitive variables. In order to apply an approximate 1D Riemann solver, the left/right states need to be rotated such that the x-axis aligns with the normal vector of the face. The solution of the Riemann problem follows from sampling the self-similar solution along x/t = 0. The solution is then rotated and boosted back to the initial ‘lab’ frame and used to compute the flux terms in equation (25).

For simplicity, in our general relativistic treatment we do not perform the exact same steps. Instead, we follow a different methodology introduced in Duffell & MacFadyen (2011) and solve the Riemann problem in the ‘lab’ frame. In particular, we employ the HLLE solver (Harten, Lax & Leer 1983; Einfeldt 1988) and sample the solution along |$x/t=\tilde{\boldsymbol {w}} \cdot \hat{\eta }$| to capture the correct HLLE state, where |$\hat{\eta }$| is the (outward) normal vector to the face. The numerical fluxes, which enter equation (25), then read

|$\hat{\boldsymbol {F}}_{ij} = \boldsymbol {\mathcal {F}}_{ij}^{1\mathrm{D}} - \left(\tilde{\boldsymbol {w}} \cdot \hat{\eta }\right) \boldsymbol {\mathcal {U}}_{ij}^{1\mathrm{D}}, \qquad (31)$|

where |$\boldsymbol {\mathcal {F}}_{ij}^{1\mathrm{D}}$| and |$\boldsymbol {\mathcal {U}}_{ij}^{1\mathrm{D}}$| are computed by the HLLE solver in the ‘lab’ frame. The second term in equation (31) accounts for advection by the moving face.
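The idea of sampling the HLLE fan at the face speed and subtracting the advection term can be sketched generically. This is an illustration, not the arepo routine: the function name `hlle_flux_moving_face` is hypothetical, the left/right states, their fluxes along the face normal, and the slowest/fastest signal speeds are assumed to be supplied by the caller, and `w_n` stands for the normal component of the face velocity.

```python
import numpy as np


def hlle_flux_moving_face(U_L, U_R, F_L, F_R, lam_minus, lam_plus, w_n):
    """Net flux through a face moving with normal speed w_n.

    The HLLE solution (left state, intermediate state, or right state) is
    sampled along x/t = w_n, and the flux is corrected by -w_n * U.
    Assumes lam_minus < lam_plus.
    """
    U_L, U_R, F_L, F_R = (np.asarray(a, dtype=float) for a in (U_L, U_R, F_L, F_R))

    if w_n <= lam_minus:
        U, F = U_L, F_L
    elif w_n >= lam_plus:
        U, F = U_R, F_R
    else:
        # Standard HLL intermediate state and flux.
        span = lam_plus - lam_minus
        U = (lam_plus * U_R - lam_minus * U_L - (F_R - F_L)) / span
        F = (lam_plus * F_L - lam_minus * F_R
             + lam_plus * lam_minus * (U_R - U_L)) / span

    # The second term accounts for advection by the moving face.
    return F - w_n * U


# Usage: linear advection with unit speed (F = U), face moving at 0.5.
flux = hlle_flux_moving_face([2.0], [0.0], [2.0], [0.0], -1.0, 1.0, 0.5)
```

For this toy problem the upwind value is 2 and the face recedes at half the advection speed, so the net flux through the face is (1 − 0.5) × 2 = 1, which the sketch reproduces.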

3.4 Conversion from conserved to primitive variables

It is evident from equation (25) that at the end of each time-step we know the volume-integrated conserved variables |$\boldsymbol {Q}$|, or in turn the conserved variables |$\boldsymbol {U}$|. To solve the GRHD equations, one needs to compute quantities (e.g. the fluxes |$\boldsymbol {F}^i$| and sources |$\boldsymbol {S}$|) that require the primitive variables. While |$\boldsymbol {U}$| analytically follows from the primitive variables, obtaining the primitive from the conserved variables requires a numerical solution. The recovery of primitive variables is a common intricate task of GRHD schemes.

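We employ a widely used and tested method (see e.g. Rezzolla & Zanotti 2013), which is based on a Newton–Raphson scheme. As a first step, we express the density and specific internal energy as

|$\rho = \frac{D\sqrt{Q^2 - S^2}}{Q}, \qquad (32)$|

|$\epsilon = \frac{1}{D}\left(\sqrt{Q^2 - S^2} - \frac{pQ}{\sqrt{Q^2 - S^2}} - D\right), \qquad (33)$|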

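based on equations (14) and the definitions |$S^2 = \gamma ^{ij}S_iS_j$| and |$Q = \tau + p + D$|. Then, for a generic EOS |$p = p(\rho , \epsilon )$|, we employ a Newton–Raphson method to solve the equation

|$p - \hat{p}\left[\rho (p), \epsilon (p)\right] = 0, \qquad (34)$|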

starting from an initial guess for the pressure p (e.g. the pressure at the cell centre in the previous time-step for accelerated root-finding). We compute the necessary derivatives |$\partial \hat{p}/\partial \rho$| and |$\partial \hat{p}/\partial \epsilon$| numerically. As an additional measure we reset the primitive variables to atmosphere values if for a cell p < 0 or the density ρ is below a threshold value ρthr (see Section 3.6 for details). The conserved variables are then recomputed for the new primitives.
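A compact sketch of such a pressure-based recovery is given below, under simplifying assumptions that are not taken from the text: an ideal-gas EOS |$\hat{p} = (\Gamma -1)\rho \epsilon$|, the total derivative of the residual obtained by a central finite difference instead of separate EOS derivatives, and a small positive floor on the pressure iterate. The function name `recover_primitives` is hypothetical. The sketch uses |$W = Q/\sqrt{Q^2-S^2}$|, |$\rho = D/W$| and |$\epsilon = (\sqrt{Q^2-S^2} - pW - D)/D$| with |$Q = \tau + p + D$|.

```python
import math


def recover_primitives(D, S2, tau, p_guess, gamma_ad=2.0, tol=1e-12, max_iter=50):
    """Recover (rho, eps, p, W) from conserved (D, S^2, tau) by Newton-Raphson
    iteration on the pressure. Ideal-gas EOS assumed for illustration."""

    def rho_eps_W(p):
        Q = tau + p + D
        root = math.sqrt(Q * Q - S2)      # equals rho * h * W
        W = Q / root                      # Lorentz factor
        rho = D / W
        eps = (root - p * W - D) / D
        return rho, eps, W

    def residual(p):
        rho, eps, _ = rho_eps_W(p)
        return p - (gamma_ad - 1.0) * rho * eps

    p = p_guess
    for _ in range(max_iter):
        # Numerical derivative of the residual (assumption of this sketch).
        dp = 1e-6 * max(abs(p), 1e-12)
        slope = (residual(p + dp) - residual(p - dp)) / (2.0 * dp)
        p_new = max(p - residual(p) / slope, 1e-30)
        if abs(p_new - p) <= tol * abs(p_new):
            rho, eps, W = rho_eps_W(p_new)
            return rho, eps, p_new, W
        p = p_new
    raise RuntimeError("primitive recovery did not converge")


# Usage: build conserved variables from known primitives, then invert.
rho0, v0, eps0, gam = 1.0, 0.5, 0.5, 2.0
p0 = (gam - 1.0) * rho0 * eps0
W0 = 1.0 / math.sqrt(1.0 - v0 * v0)
h0 = 1.0 + eps0 + p0 / rho0
D0 = rho0 * W0
S0 = rho0 * h0 * W0 * W0 * v0
tau0 = rho0 * h0 * W0 * W0 - p0 - D0
rho, eps, p, W = recover_primitives(D0, S0 * S0, tau0, p_guess=0.1, gamma_ad=gam)
```

A production scheme would additionally need the atmosphere checks described above (negative pressure, density below threshold) and a fallback when the iteration fails; both are omitted here.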

3.5 Mesh geometry

Arguably two of the most important aspects in a moving-mesh simulation are the initial positions of the mesh-generating points and how the points move during the simulation. The initial distribution of the points determines the initial geometry of the mesh, while point motion determines how the mesh geometry evolves. A mesh which is well-adapted to the geometrical and physical aspects of the problem at hand captures the physics more accurately even with fewer cells, since resolution is distributed more appropriately in the simulation domain.

In the various tests that we present in the following sections, we use different initial mesh-generating point distributions for different tests. Furthermore, we perform both moving-mesh, as well as static-mesh simulations, i.e. calculations where the cells move or remain fixed at their initial positions, respectively. In our moving-mesh simulations, each point moves with the local fluid coordinate velocity with possibly a small correction to this velocity to ensure that the mesh does not become too irregular (see section 4 in Springel 2010 for more details). For the mesh regularity, we adopt a more recent criterion proposed in Vogelsberger et al. (2012, see Weinberger, Springel & Pakmor 2020 for a summary). For each cell i, we define

|$\alpha _\mathrm{max} = \max _j \left(\frac{1}{h_j}\sqrt{\frac{A_j}{\pi }}\right), \qquad (35)$|

to estimate how round the cell is based on the area of each face Aj and its distance from the mesh-generating point hj. We identify cells which satisfy αmax > 0.75β as irregular, where β is a free parameter typically set to 2.25. For irregular cells, we include a corrective velocity component to the motion of the mesh-generating point, which drifts the point closer to the centre of mass of the respective cell. The corrective velocity reads

where di is the distance between the centre of mass (|$\boldsymbol {s}_i$|) and the mesh-generating point (|$\boldsymbol {r}_i$|). We consider a fraction fshaping (typically 0.5) of the characteristic speed vchar, while we set vchar to the sound speed in the cell.

Distributing the mesh-generating points carefully and allowing them to follow the fluid motion ensures that resolution is focused on the physically interesting regions and minimizes advection across cells. However, some problems might additionally benefit from increasing or decreasing the resolution locally in a flexible way. arepo allows for cell refinement or derefinement based on nearly arbitrary criteria (see section 6 in Springel 2010). Different criteria can be employed to dynamically change the local geometry of the mesh, effectively adding resolution where it is needed and reducing the resolution where it is deemed redundant.

We provide details regarding the initial mesh geometry and whether we enable cell refinement/derefinement in the discussion of each test, since the choices strongly depend on the concrete application. We emphasize that a direct comparison between moving-mesh and static-mesh simulations is not necessarily straightforward, even in cases where the initial meshes are identical in moving-mesh and static-mesh simulations. The reason is that in moving-mesh calculations the cells rearrange over time. Hence, the mesh can in principle evolve to a set-up that differs significantly from the initial geometry.

3.6 Additional code details

Grid-based hydrodynamic approaches require a special treatment for vacuum regions. We employ an artificial atmosphere, i.e. we place cells with a very low density |$\rho _\mathrm{atm}$| in vacuum regions. During the evolution, the numerical treatment resets the density to this value if the density of a cell falls below a threshold value |$\rho _\mathrm{thr}$|. In the tests which we present in the following sections we set |$\rho _\mathrm{thr} = 10 \times \rho _\mathrm{atm}$| and |$\rho _\mathrm{atm} = (10^{-7}\text{--}10^{-8}) \times \rho _\mathrm{max}(t)$|, where |$\rho _\mathrm{max}(t)$| is the maximum density throughout the whole domain at any given time t. Hence, the atmosphere density changes in time if the maximum density oscillates. This criterion captures cells outside the neutron stars, where one should formally have vacuum. In these cells, we also set the velocities to zero, while the pressure and specific internal energy follow from a polytropic EOS with K = 100 and Γ = 2. Subsequently, we update the conserved variables in these atmospheric cells based on the new set of primitives. We note that the values of |$\rho _\mathrm{atm}$| and |$\rho _\mathrm{thr}$| can be adjusted based on the aspects of the problem at hand. For instance, to follow BNS merger ejecta, lower atmosphere values are desirable, which we will explore in future work.
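The atmosphere reset can be sketched on arrays of primitive variables as follows. The function name `apply_atmosphere`, the array layout, and the choice of the factor 1e-7 within the quoted range are assumptions for this illustration; the subsequent recomputation of the conserved variables is not shown.

```python
import numpy as np


def apply_atmosphere(rho, vel, eps, atm_factor=1e-7, thr_factor=10.0,
                     K=100.0, Gamma=2.0):
    """Reset low-density cells to atmosphere values.

    rho : (n,) rest-mass densities, vel : (n, 3) velocities,
    eps : (n,) specific internal energies. Returns updated copies
    and the boolean mask of cells that were reset.
    """
    rho = np.array(rho, dtype=float)
    vel = np.array(vel, dtype=float)
    eps = np.array(eps, dtype=float)

    # Atmosphere density tracks the current maximum density in the domain.
    rho_atm = atm_factor * rho.max()
    rho_thr = thr_factor * rho_atm

    mask = rho < rho_thr
    rho[mask] = rho_atm
    vel[mask] = 0.0
    # Specific internal energy of a polytrope with the given K and Gamma.
    eps[mask] = K * rho_atm ** (Gamma - 1.0) / (Gamma - 1.0)
    return rho, vel, eps, mask


# Usage: one stellar cell, one cell below and one above the threshold.
rho, vel, eps, mask = apply_atmosphere(
    rho=[1.0e-3, 5.0e-10, 2.0e-9],
    vel=[[0.1, 0.0, 0.0], [0.3, 0.2, 0.0], [0.0, 0.1, 0.0]],
    eps=[0.1, 1.0e-3, 1.0e-3],
)
```

With a maximum density of 1e-3 the atmosphere density is 1e-10 and the threshold 1e-9, so only the second cell is reset; its velocity is zeroed and its specific internal energy takes the polytropic value.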

Formally, we adopt periodic boundary conditions for the hydro mesh in our calculations. We emphasize that this choice does not affect the evolution of the system because the outer boundaries are placed far away from the regions of physical interest, where all the cells have densities below the atmosphere threshold throughout the whole simulation (i.e. no matter reaches the boundaries). The size of the numerical domain varies in different tests to cover the whole physical system. As noted, the exact size does not play any role since we ensure that in all simulations the physical domain of interest is surrounded by atmosphere cells. Hence, the size of the numerical domain can in principle be chosen arbitrarily large.

If the numerical domain is chosen to be very large compared to the physical system under consideration, we would typically fill the outer parts of the numerical domain with increasingly larger cells, i.e. by placing the mesh-generating points more sparsely, to minimize the computational overhead. The chosen configuration must not cause the mesh construction algorithm to abort; we did not encounter any issues in this regard.

3.7 Solution of field equations

We solve the metric field equations (3), (4), (11), and (12) on an independent uniform Cartesian grid. We employ a multigrid algorithm (see e.g. Briggs, Henson & McCormick 2000) and solve the differential equations iteratively until they converge. Boundary conditions for the solution of equations (3) and (4) are computed based on a multipole expansion of the fields. Formally, we can write the solution to equation (3) at a point with coordinates |$\boldsymbol {r}$| as

|$\psi (\boldsymbol {r}) = 1 - \frac{1}{4\pi }\int \frac{S_\psi (\boldsymbol {r}^\prime )}{|\boldsymbol {r} - \boldsymbol {r}^\prime |}\, {\rm d}^3r^\prime , \qquad (37)$|

where Sψ collectively refers to the terms on the right-hand side of equation (3) and we integrate over the metric grid (|$\boldsymbol {r}^\prime$| is a coordinate vector; e.g. Oechslin, Janka & Marek 2007). Then, boundary conditions for equation (3) follow from expanding equation (37) up to quadrupole order. We note that we consider only the monopole contribution from the non-compact term in Sψ (i.e. the term involving KijKij). We follow a similar procedure to compute boundary conditions for equation (4).
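To make the expansion to quadrupole order explicit, the following sketch assumes that equation (3) is of Poisson type, Δψ = Sψ, with ψ → 1 at large distances. The moment symbols Mψ, Di, and Qij are labels introduced here for illustration only, and signs and normalization should be checked against equations (3) and (37).

```latex
% Assumption: \Delta\psi = S_\psi with \psi \to 1 for r \to \infty.
% Green's function solution:
\psi(\boldsymbol{r}) = 1 - \frac{1}{4\pi} \int
    \frac{S_\psi(\boldsymbol{r}')}{|\boldsymbol{r} - \boldsymbol{r}'|}\, \mathrm{d}^3 r'

% Expansion of the kernel for r \gg r' up to quadrupole order:
\frac{1}{|\boldsymbol{r} - \boldsymbol{r}'|} \simeq \frac{1}{r}
    + \frac{x^i x'_i}{r^3}
    + \frac{3\,(x^i x'_i)^2 - r^2 r'^2}{2 r^5}

% Resulting boundary values:
\psi(\boldsymbol{r}) \simeq 1 - \frac{1}{4\pi}
    \left[ \frac{M_\psi}{r} + \frac{D_i x^i}{r^3} + \frac{Q_{ij} x^i x^j}{2 r^5} \right]

% Source moments, integrated over the metric grid:
M_\psi = \int S_\psi \, \mathrm{d}^3 r', \qquad
D_i = \int S_\psi\, x'_i \, \mathrm{d}^3 r', \qquad
Q_{ij} = \int S_\psi \left( 3 x'_i x'_j - r'^2 \delta_{ij} \right) \mathrm{d}^3 r'
```

In this form, the three moments are computed once per solve and then evaluated at every boundary point, which is much cheaper than evaluating the full integral there.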

We impose fall-off boundary conditions in order to solve equations (11) and (12), namely we approximate the fields Bi, χ at a point |$\boldsymbol {r}$| (e.g. at the boundaries) as

where |$\boldsymbol {r}_p$| is the projection of |$\boldsymbol {r}$| on the grid boundary. Here, bi and c capture the lowest-order fall-off behaviour of the respective fields and read bx = y/r³, by = x/r³, bz = xyz/r⁷, and c = xy/r⁵ in the employed coordinates (see Baumgarte et al. 1998, but note the different coordinate system).

The metric solver implementation originates from Oechslin, Rosswog & Thielemann (2002), who provide more details. The grid size is chosen such that it covers the physical domain of interest well. The metric grid can be smaller than the hydro grid. For the BNS simulations discussed below, the metric grid comfortably covers the orbit of the binary, but matter (e.g. ejecta) can extend beyond the metric grid, and the boundaries of the hydro grid are much farther out. Beyond the metric grid, we employ the same treatment of the metric fields as on the boundaries of the metric grid, using the aforementioned expansion and fall-off conditions. Because the treatment beyond the metric grid is consistent with the boundary conditions of the metric grid, we do not notice any spurious effects when matter leaves the domain of the metric grid.

In our implementation, hydrodynamic quantities and metric potentials are solved on different grids. To solve the GRHD equations, we need to interpolate the metric fields to various positions, e.g. the mesh-generating point positions, the centre of mass of Voronoi cells, or the mid-point of the interfaces between neighbouring cells. Vice versa, solving the metric field equations requires knowledge of the hydrodynamic variables at the positions of the metric grid points. We perform the mappings in the following way:

Metric grid to hydro mesh: Knowing the metric fields on a uniform Cartesian grid, we interpolate to any point if it lies within the metric grid. We interpolate the metric fields with a third-order Lagrange polynomial. We cannot apply the same approach to compute the metric fields at points outside the metric grid domain. Instead, we employ the multipole expansions and fall-off conditions that we used to compute the metric boundary conditions for these distant cells.

Hydro mesh to metric grid: The inverse mapping is significantly more complicated because the hydrodynamics mesh is unstructured. The main component is a tree walk (Springel 2010) to locate the mesh-generating point which lies closest to each metric grid point. At the end of the tree search, each metric grid point is placed in a hydrodynamics cell. We then directly adopt all necessary hydrodynamical variables from the moving-mesh cell for the respective metric grid point.
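The two mappings above can be sketched as follows. This is a simplified illustration under stated assumptions, not the code's implementation: the function names are hypothetical, the interpolation is shown in 1D (a 3D version could apply it along each axis in turn), and a brute-force search stands in for the tree walk.

```python
import math


def lagrange_cubic_1d(values, x0, h, x):
    """Third-order (four-point) Lagrange interpolation on a uniform 1D grid.

    `values[i]` is the field at x0 + i * h; at least four grid points are needed.
    """
    n = len(values)
    # Grid index just left of x, clamped so the four-point stencil fits the grid.
    i = int(math.floor((x - x0) / h))
    i = min(max(i, 1), n - 3)
    nodes = [x0 + (i - 1 + k) * h for k in range(4)]
    result = 0.0
    for k in range(4):
        weight = 1.0
        for j in range(4):
            if j != k:
                weight *= (x - nodes[j]) / (nodes[k] - nodes[j])
        result += weight * values[i - 1 + k]
    return result


def nearest_generator(point, generators):
    """Index of the mesh-generating point closest to `point` (brute force)."""
    def dist2(idx):
        return sum((p - g) ** 2 for p, g in zip(point, generators[idx]))
    return min(range(len(generators)), key=dist2)


def map_hydro_to_metric(metric_points, generators, cell_values):
    """Copy the value of the enclosing Voronoi cell to each metric grid point.

    The closest mesh-generating point identifies the Voronoi cell that
    contains the metric grid point.
    """
    return [cell_values[nearest_generator(p, generators)] for p in metric_points]
```

The brute-force search costs O(N) per metric grid point; a tree walk, as used in the text, reduces this to roughly O(log N).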

We have found this simple approach to be rather robust. As expected, its accuracy improves as the number of hydrodynamic cells increases. Furthermore, it benefits from the fact that physically important regions are more densely populated with mesh points. As a result, the distance between the centres of the moving-mesh cells and the metric grid points is typically quite small. As a way to monitor the accuracy of the approach, we compare the baryonic mass on the different grids for the simulations discussed in this work and find that they agree to at least 0.1 per cent. The mass on the metric grid oscillates by this magnitude around the value of the unstructured hydro mesh without any systematic trend. The impact of this mismatch on for instance the phase evolution is certainly surpassed by the use of the conformal flatness approximation in the first place. We plan to further examine the mismatch in the future. Simple extensions (e.g. via the use of gradients 〈∇ϕ〉 discussed in Section 3.2) can potentially prove more accurate without significantly adding to the computational cost.

Finally, we remark that the treatment of a black hole requires certain modifications of the gravity solver within the conformal flatness approximation as in Bauswein et al. (2014) and Just et al. (2015), which we leave for future work.

3.8 Gravitational wave back-reaction and extraction

By construction, the conformal flatness approximation does not include gravitational radiation, although radiation can be important in some applications, for instance BNSs. Therefore, we complement our approach by adding a back-reaction scheme to emulate gravitational wave energy and angular momentum losses.

We closely follow the implementation of Oechslin, Janka & Marek (2007), which consists of adding a small, non-conformally flat correction to the metric based on a post-Newtonian analysis presented in Faye & Schäfer (2003, see also Blanchet, Damour & Schaefer 1990). We outline the formalism in Appendix A. For neutron star merger simulations, this approach generally shows good agreement with fully general relativistic simulations, for instance regarding the post-merger gravitational wave emission, black-hole formation, or ejecta and torus masses (see e.g. Bauswein et al. 2012; Bauswein, Goriely & Janka 2013; Bauswein et al. 2021; Kölsch et al. 2022).

4 TOLMAN–OPPENHEIMER–VOLKOFF STAR

We present shock tube tests and relativistic blast waves in Appendices B and C, and focus in this section on the evolution of isolated neutron stars. We evolve a static equilibrium neutron star and extract its fundamental radial mode frequency to verify our implementation. For a first test, we evolve a neutron star described by a polytrope while keeping the metric fixed (Cowling approximation). This analysis targets our general relativistic hydrodynamics implementation alone. As a second test, we evolve a neutron star described by a microphysical EOS, while dynamically evolving the space–time as well. This set-up tests our GRHD implementation, our metric solver and their coupling, as well as our microphysics modules.

Table 1 summarizes the features and parameters of all simulations discussed in this paper including the BNS merger runs described in Section 5 and Appendix D.

Table 1. Summary of the simulations presented in this study. The first column specifies the type of system simulated. The second column denotes whether the space–time was fixed or evolved dynamically. The third column indicates whether the mesh was moving during the simulation. The fourth column contains information about the symmetry of the initial grids employed in the different simulations. The fifth column indicates whether we employ cell refinement and derefinement in the respective simulation. In the sixth column, we provide an estimate for the resolution. In moving-mesh simulations, the resolution changes dynamically (see main text for more details on each simulation). In the case of the BNS systems, the resolution refers to the high-density regime in the post-merger phase. Columns seven and eight list the EOS and masses of the systems. Finally, the last column reports the characteristic frequency extracted in every simulation. In the case of TOV stars this refers to the frequency of the radial mode (f0), while for the BNS systems it is the dominant frequency in the post-merger phase. The seventeenth row ‘pert. TOV star’ provides the perturbative result of the isolated TOV star for comparison. The TOV simulations marked with * and † refer to the simulation with arepo’s standard slope-limited gradient and the run with the regularization parameters set to (fshaping, β) = (0.5, 3), respectively. The systems marked with ‡ refer to the simulations discussed in Appendix D2. For the BNS simulation labelled with ‡ we do not provide a characteristic frequency, because we evolve the system for <5 ms in the post-merger phase. For simulations labelled ‘case (i)’, ‘case (ii)’, and ‘case (iii)’ see Appendix D1.

| System | Space–time | Mesh motion | Hydro grid set-up (region around stars) | Cell refinement/derefinement | Resolution (m) | EOS | Gravitational mass (M⊙) | Characteristic frequency |
|---|---|---|---|---|---|---|---|---|
| TOV star | Fixed | Moving | Uniform Cartesian | No | ≈221.4 | Ideal gas | 1.4 | f0 = 2.664 kHz |
| TOV star | Fixed | Moving | Uniform Cartesian | No | ≈184.5 | Ideal gas | 1.4 | f0 = 2.668 kHz |
| TOV star | Fixed | Moving | Uniform Cartesian | No | ≈147.6 | Ideal gas | 1.4 | f0 = 2.672 kHz |
| TOV star | Fixed | Moving | Uniform Cartesian | No | ≈110.7 | Ideal gas | 1.4 | f0 = 2.674 kHz |
| TOV star* | Fixed | Moving | Uniform Cartesian | No | ≈147.6 | Ideal gas | 1.4 | f0 = 2.677 kHz |
| TOV star† | Fixed | Moving | Uniform Cartesian | No | ≈147.6 | Ideal gas | 1.4 | f0 = 2.672 kHz |
| TOV star | Fixed | Moving | Spherical | No | ≈191 | Ideal gas | 1.4 | f0 = 2.661 kHz |
| TOV star | Fixed | Static | Uniform Cartesian | No | ≈147.6 | Ideal gas | 1.4 | f0 = 2.682 kHz |
| TOV star | Fixed | Static | Cartesian (lower crust resolution) | No | ≈147.6 & 295.3 | Ideal gas | 1.4 | f0 = 2.674 kHz |
| TOV star | Dynamical | Moving | Spherical | No | ≈253.7 | H4 + Γth = 1.75 | 1.41 | f0 = 2.318 kHz |
| TOV star‡ | Dynamical | Moving | Spherical | No | ≈253.7 | H4 + Γth = 1.75 | 1.41 | f0 = 2.316 kHz |
| TOV star | Dynamical | Moving | Spherical | No | ≈191 | H4 + Γth = 1.75 | 1.41 | f0 = 2.343 kHz |
| TOV star‡ | Dynamical | Moving | Spherical | No | ≈191 | H4 + Γth = 1.75 | 1.41 | f0 = 2.344 kHz |
| TOV star | Dynamical | Moving | Spherical + Random | No | ≈191 | H4 + Γth = 1.75 | 1.41 | f0 = 2.349 kHz |
| TOV star | Dynamical | Moving | Spherical | No | ≈162.4 | H4 + Γth = 1.75 | 1.41 | f0 = 2.352 kHz |
| TOV star | Dynamical | Static | Spherical | No | ≈191 | H4 + Γth = 1.75 | 1.41 | f0 = 2.358 kHz |
| pert. TOV star | Dynamical | – | – | – | – | H4 | 1.41 | f0 = 2.385 kHz |
| BNS merger | Dynamical | Moving | Spherical (based on mass distribution) | Yes | ≈162 | DD2 + Γth = 1.75 | 1.35 + 1.35 | fpeak = 2.56 kHz |
| BNS merger (case (i)) | Dynamical | Moving | Spherical (based on mass distribution) | Yes | ≈182 | DD2 + Γth = 1.75 | 1.35 + 1.35 | fpeak = 2.55 kHz |
| BNS merger (case (ii)) | Dynamical | Moving | Spherical (based on mass distribution) | Yes | ≈162 | DD2 + Γth = 1.75 | 1.35 + 1.35 | fpeak = 2.56 kHz |
| BNS merger (case (iii)) | Dynamical | Moving | Spherical (based on mass distribution) | Yes | ≈155 | DD2 + Γth = 1.75 | 1.35 + 1.35 | fpeak = 2.57 kHz |
| BNS merger‡ | Dynamical | Moving | Spherical (based on mass distribution) | Yes | ≈170 | DD2 + Γth = 1.75 | 1.35 + 1.35 | – |

4.1 Cowling approximation

4.1.1 Initial data

We solve the Tolman–Oppenheimer–Volkoff (TOV) equations and compute a 1.4 M⊙ polytropic neutron star with K = 100 and Γ = 2 (central density ρc = 1.28 × 10⁻³ in c = G = 1 units). This stellar model is a common choice and allows us to compare our evolutions within the Cowling approximation with results from previous works (Font et al. 2002).
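As an illustration of how such initial data can be generated, the following minimal sketch integrates the TOV equations for the polytrope above with a fixed-step fourth-order Runge–Kutta scheme. It is not the solver used in this work: the function names, step size, and surface criterion are assumptions, units are G = c = M⊙ = 1, and the radial coordinate is the areal (Schwarzschild) radius, so the returned radius differs from the isotropic radius quoted in the text.

```python
import math

K = 100.0        # polytropic constant (G = c = M_sun = 1)
GAMMA = 2.0      # polytropic exponent
RHO_C = 1.28e-3  # central rest-mass density


def energy_density(press):
    """Total energy density e = rho (1 + eps) for the polytrope P = K rho^Gamma."""
    rho = (press / K) ** (1.0 / GAMMA)
    return rho + press / (GAMMA - 1.0)


def tov_rhs(r, m, press):
    """Right-hand sides dm/dr and dP/dr of the TOV equations."""
    if press <= 0.0:          # outside the star: nothing left to integrate
        return 0.0, 0.0
    e = energy_density(press)
    dm = 4.0 * math.pi * r * r * e
    dp = -(e + press) * (m + 4.0 * math.pi * r ** 3 * press) / (r * (r - 2.0 * m))
    return dm, dp


def solve_tov(rho_c, dr=1e-3):
    """Integrate outwards from the centre; return (gravitational mass, areal radius)."""
    press = K * rho_c ** GAMMA
    p_surface = 1e-10 * press                 # assumed surface criterion
    r = dr                                    # start slightly off-centre to avoid r = 0
    m = 4.0 / 3.0 * math.pi * r ** 3 * energy_density(press)
    while press > p_surface and r < 100.0:
        k1 = tov_rhs(r, m, press)
        k2 = tov_rhs(r + 0.5 * dr, m + 0.5 * dr * k1[0], press + 0.5 * dr * k1[1])
        k3 = tov_rhs(r + 0.5 * dr, m + 0.5 * dr * k2[0], press + 0.5 * dr * k2[1])
        k4 = tov_rhs(r + dr, m + dr * k3[0], press + dr * k3[1])
        m += dr / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0])
        press += dr / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1])
        r += dr
    return m, r


mass, radius = solve_tov(RHO_C)
print(f"M = {mass:.4f} M_sun, areal radius = {radius:.3f} M_sun")
```

The resulting 1D profiles of density, pressure, and metric functions would then be mapped onto the mesh-generating points, as described in the next paragraph.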

We map the primitive quantities from the 1D TOV solution to a mesh-generating point distribution which is used to construct a Voronoi mesh. In this simulation the hydrodynamical simulation domain is a cube with side length 58 M⊙ ≈ 85.6 km, i.e. significantly larger than the stellar radius (R ≈ 12 km in isotropic coordinates). We set up the mesh-generating points to obtain a uniform Cartesian grid with high resolution in the centre of the computational domain to cover the star. This particular mesh set-up allows us to compare directly to Font et al. (2002), where a Cartesian grid is also employed. The central, high-resolution mesh is a cube with side length 24 M⊙ ≈ 35.4 km and a cell size h = 0.1 M⊙ ≈ 147.6 m. We cover the rest of the simulation domain with points that lead to a low-resolution mesh. Throughout the whole evolution, these outer parts of the computational domain are atmosphere cells and thus the exact point distribution is irrelevant. Even a very low number of mesh-generating points is already sufficient (in the case of this particular set-up 0.2 per cent of all cells), provided the mesh-construction algorithm can create a mesh.
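The lengths quoted in solar masses follow from the geometric unit G M⊙/c². A short sketch of the conversion, assuming the nominal value of G M⊙ (the helper name `msun_to_km` is hypothetical):

```python
# Conversion of lengths from geometric units (G = c = M_sun = 1) to km.
G_MSUN = 1.32712440018e20   # G * M_sun in m^3 s^-2 (nominal solar mass parameter)
C_LIGHT = 299792458.0       # speed of light in m s^-1
LENGTH_UNIT_KM = G_MSUN / C_LIGHT ** 2 / 1.0e3   # about 1.4766 km


def msun_to_km(length_in_msun):
    """Convert a length given in units of M_sun to kilometres."""
    return length_in_msun * LENGTH_UNIT_KM


for label, value in [("domain side", 58.0), ("high-resolution cube", 24.0), ("cell size h", 0.1)]:
    print(f"{label}: {value} M_sun = {msun_to_km(value):.4f} km")
```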

We excite the radial mode by adding a radial 3-velocity perturbation of the form

where A = −0.005, r is the radial distance from the stellar centre, and R is the stellar radius (see e.g. Dimmelmeier, Stergioulas & Font 2006).

In our Cowling tests, we compute the metric fields at any point in our simulation domain (e.g. mesh-generating point positions, centres of mass of the hydrodynamic cells) by interpolating the high-resolution metric function profiles which we obtain from our TOV solution. We set the atmosphere density to ρatm = 10⁻⁸ × ρmax and consider any cell with ρ < 10 × ρatm to be part of the atmosphere. We evolve the polytropic initial data with an ideal gas EOS and thus also evolve the energy equation.

4.1.2 Simulations

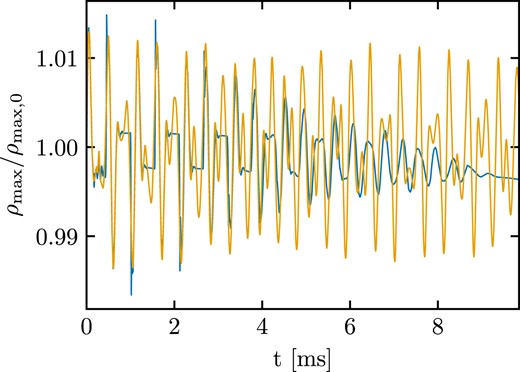

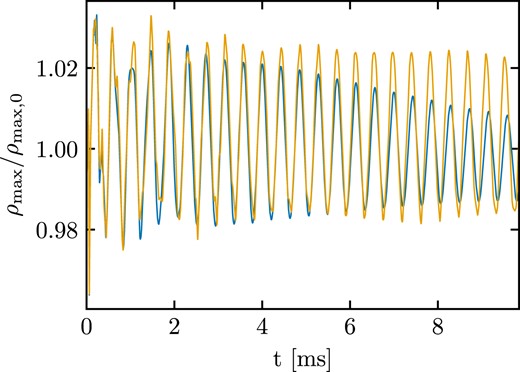

Fig. 1 shows the evolution of the maximum density normalized to its initial value. The blue line refers to a moving-mesh simulation, while the orange line refers to a static-mesh run where the mesh-generating points do not move. We note that both simulations preserve the initial TOV solution, i.e. the whole radial density profile, with only a minor density drift during the roughly 10 ms evolution. The moving-mesh simulation features a stronger damping of the excited oscillations and a somewhat more pronounced density drift in comparison to the static-mesh case. We extract the main radial pulsation frequency by a Fourier transform of the density oscillations. We obtain 2.672 kHz for the moving mesh and 2.682 kHz for the static mesh, which are both in excellent agreement with previous results (2.696 kHz in Font et al. 2002). In Fig. 2, we present the power spectrum of the normalized maximum density (see Fig. 1). We consider the whole signal and apply a Tukey window with a shape parameter of 0.1. In addition, we zero-pad the signal, which effectively leads to smoother curves in the power spectrum. We note that a number of overtones are excited as well. In particular, in the moving-mesh simulation we identify 6 overtones, which all agree within less than 2 per cent with the values reported in Font et al. (2002). The presence of several overtones is in line with the observation of several box-shaped oscillation cycles in Fig. 1, as the Fourier transform of a pulse wave is given by a number of higher overtones with decaying magnitude. In the static-mesh simulation higher overtones seem to be less excited (or more strongly damped) and only the first two appear prominently. We also extract the FWHM of the first three peaks in Fig. 2 and find 129, 130, and 132 Hz (161, 212, and 321 Hz) for the static-mesh (moving-mesh) calculations.
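The frequency extraction described above can be sketched as follows. This is an illustrative example on a synthetic signal, not the analysis script used for Fig. 2: the function names, the sampling interval, the padding factor, and the mean subtraction are assumptions.

```python
import numpy as np


def tukey_window(n, alpha):
    """Tukey (tapered cosine) window of length n with shape parameter alpha."""
    w = np.ones(n)
    if alpha <= 0.0:
        return w
    x = np.arange(n) / (n - 1)      # normalized sample position in [0, 1]
    edge = alpha / 2.0              # fractional width of each cosine taper
    rise = x < edge
    fall = x > 1.0 - edge
    w[rise] = 0.5 * (1.0 - np.cos(np.pi * x[rise] / edge))
    w[fall] = 0.5 * (1.0 - np.cos(np.pi * (1.0 - x[fall]) / edge))
    return w


def peak_frequency(t, signal, alpha=0.1, pad_factor=16):
    """Return the frequency of the strongest spectral peak, plus the spectrum.

    The mean is removed, the signal is windowed and zero-padded to
    pad_factor times its length before the real FFT is taken.
    """
    dt = t[1] - t[0]
    y = (signal - np.mean(signal)) * tukey_window(len(signal), alpha)
    n_fft = pad_factor * len(signal)
    power = np.abs(np.fft.rfft(y, n=n_fft)) ** 2
    freqs = np.fft.rfftfreq(n_fft, d=dt)
    return freqs[np.argmax(power)], freqs, power


# Synthetic stand-in for rho_max(t) / rho_max(0): 10 ms sampled every 10 microseconds.
t = np.arange(1000) * 1.0e-5
rho_norm = 1.0 + 0.01 * np.cos(2.0 * np.pi * 2672.0 * t)
f_peak, freqs, power = peak_frequency(t, rho_norm)
print(f"peak frequency: {f_peak:.1f} Hz")
```

Zero-padding does not add information; it only samples the spectrum on a finer frequency grid, which makes peaks and their widths easier to read off.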

Figure 1. Evolution of the maximum rest-mass density normalized to its initial value for a 1.4 M⊙ TOV neutron star modelled as a polytrope with K = 100 and Γ = 2. The blue line refers to a moving-mesh set-up, while the orange line refers to a static-mesh set-up. Both simulations adopt the Cowling approximation. In both cases, a radial velocity perturbation with amplitude −0.005 was applied. See the main text for details regarding the mesh set-up.

Figure 2. Frequency spectrum of the maximum rest-mass density evolution of a 1.4 M⊙ TOV neutron star described by a polytropic EOS with K = 100 and Γ = 2 employing the Cowling approximation (see Fig. 1). The blue line corresponds to a moving-mesh simulation, while the orange line refers to a static-mesh calculation. The vertical dashed lines correspond to the frequencies computed in Font et al. (2002). The units of the vertical axis are arbitrary.

The moving-mesh and static-mesh evolutions are rather similar for the first few milliseconds. However, at later times the moving-mesh set-up exhibits some damping, in contrast to the static-mesh run. This possibly originates to some extent from the surface layers. Initially, the star contracts while atmosphere cells do not move. This opens a small gap between the mesh-generating points that correspond to stellar material, and thus closely follow the fluid motion, and the points belonging to the atmosphere. The gap leads to larger cells close to the surface, and thus the resolution at the stellar surface effectively drops. When the star expands, the stellar surface moves into the atmosphere. During expansion and contraction phases of the star, cells can cross the atmosphere threshold: cells belonging to the star can become atmosphere, and atmosphere cells can accumulate material to become ‘active’ stellar cells. Overall, this leads to a decrease in the resolution close to the surface already after the first few ms. This behaviour is shown in Fig. 3, which displays the rest-mass density in the z = 0 plane after evolving the system for roughly 2 ms. The left panel refers to the moving-mesh simulation, while the right panel corresponds to the static-mesh simulation. In both panels, white lines mark the cell boundaries and reveal the mesh geometry. Fig. 3 captures how the moving mesh evolves compared to the static-mesh simulation (and hence also compared to the initial mesh geometry). In particular, the static-mesh and moving-mesh simulations have similar resolutions in the interior of the neutron star up to a few hundred metres beneath the surface. In the outer metres of the crust, the moving-mesh simulation has a lower resolution, which is one of the reasons for the higher damping. Finally, in the moving-mesh simulation a thin high-resolution shell forms right at the surface because of cells which originally belonged to either the star or the atmosphere.

![Rest-mass density at the z = 0 plane for the moving-mesh (left panel) and static-mesh (right-panel) simulations on a fixed space–time. The thin white lines represent the cell boundaries and thus display the mesh geometry. The subpanels at the top right corner of each panel depict a zoomed-in version of the region [11, 13] × [0, 2] in the respective plot. Both snapshots are taken at a time t = 1.97 ms.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/mnras/528/2/10.1093_mnras_stae057/1/m_stae057fig3.jpeg?Expires=1749216160&Signature=tsDxPzFD0puTUMtJBN0Avs0HTa2c19utnsKru3SkWXvlFh6I0s8Xo0ilXrgg--nanRq9v1JRCkZZOmgU5EeI0lA8rDYsaHz7ENofNOhjWmVsWfacx8HZOIGqBxGG3LlZ8CPCaZ-Q2~amh4wzt2LdNz2OyCExbg-4u6EEBgOQW8oX8qUpN~U-GdVLEYMpOnPTV-MSrl3FHyXMXuDFMogrOFq-lYVpYdbP9pdAXKOb2AILgZGXsGrbospv1AQRxG9YfgAqB8WtwO-sGLTfHqRNN~VKsOI8zf8DVDsA-RvPfRBbRIEvg8BRsxw5I206CnA88rxeneyZTg0E-xKOJbb0RQ__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Figure 3. Rest-mass density at the z = 0 plane for the moving-mesh (left panel) and static-mesh (right panel) simulations on a fixed space–time. The thin white lines represent the cell boundaries and thus display the mesh geometry. The subpanels at the top right corner of each panel depict a zoomed-in version of the region [11, 13] × [0, 2] in the respective plot. Both snapshots are taken at a time t = 1.97 ms.

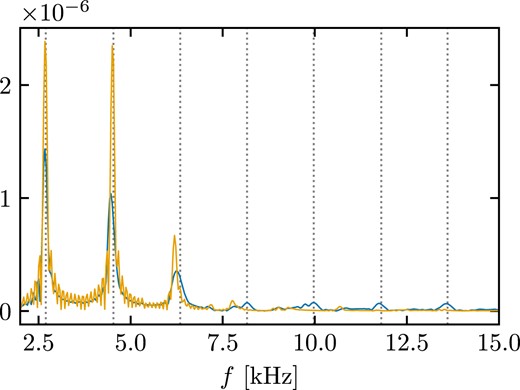

To evaluate the effect that a lower resolution close to the surface has on the evolution, we perform an additional static-mesh simulation. We distribute the mesh-generating points to obtain a uniform Cartesian grid with a cell size of 0.1 M⊙ within a radius of 7.8 M⊙ ≈ 11.51 km (i.e. 96 per cent of the stellar radius), surrounded by a uniform Cartesian grid with a cell size of 0.2 M⊙ outside this radius. We present the evolution of the rest-mass density in Fig. 4(a). We find that the evolution of the maximum density features some damping in this new static-mesh simulation, and the amplitude of the oscillation has decreased by a factor of roughly 2 after ≈10 ms of evolution. In addition, we extract a frequency of 2.674 kHz for the main radial pulsation, i.e. rather similar to the moving-mesh simulation. This observation supports the interpretation that a lower resolution close to the surface can partially explain the damping of the maximum rest-mass density oscillation in the moving-mesh simulation.

Figure 4. Rest-mass density evolution of a 1.4 M⊙ TOV neutron star modelled as a polytrope with K = 100 and Γ = 2 within the Cowling approximation for different simulation set-ups. Panel (a): Effect of different numerical choices (see legend and main text in Section 4.1.2). In the legend, we denote moving- and static-mesh simulations as MM or SM, respectively. Panel (b): Impact of resolution on the moving-mesh calculations starting from a Cartesian initial mesh geometry. The default set-up (h = 0.1) matches the blue line in Fig. 1.

Furthermore, we perform a set of additional simulations to investigate the oscillation behaviour in Fig. 1. We note already here that Section 4.2 shows the use of the Cowling approximation to be a major reason for the damping in moving-mesh calculations. We investigate a number of aspects of the numerical description and their effects on the overall evolution:

Effect of resolution: We perform three moving-mesh simulations, where the initial mesh-generating point distribution is similar to the one described in Section 4.1.1, but we vary the cell size of the central, high-resolution mesh to be h = 0.075, 0.125, and 0.15, respectively (i.e. one higher-resolution and two lower-resolution simulations compared to the default set-up). Fig. 4(b) shows the evolution of the maximum rest-mass density for the different resolutions. Increasing the resolution leads to less damping of the oscillation and reduces the minor density drift. The damping is not fully removed for the resolutions considered here. However, below we show that the set-up for this resolution study (e.g. the initially Cartesian mesh) is not ideal and other aspects of the numerical treatment strongly improve the evolution. The extracted frequency of the main radial oscillation is 2.664, 2.668, and 2.674 kHz for h = 0.15, 0.125, and 0.075, respectively, i.e. there is a very minor increase of the frequency with increasing resolution.

(ii) Mesh geometry: We consider a moving-mesh simulation with an initial set-up of mesh-generating points which leads to a spherical distribution of cells. The initial mesh is identical to the standard resolution set-up described in detail in Section 4.2.1. We emphasize that the equidistant radial separation between consecutive shells is ≈191 m, i.e. the resolution in this simulation is most comparable to the run with h = 0.125 from point (i) above. We compare the number of cells within the star at the beginning of each simulation. The cells within the star in the simulation on the spherical mesh are ≈92 per cent of the cells covering the star in the simulation on the (initially) Cartesian mesh with h = 0.125. The differences in the number of cells covering the star between the spherical mesh simulation and the h = 0.075, 0.1, and 0.15 simulations are larger. Hence, the spherical mesh simulation should be compared with the h = 0.125 run in Fig. 4(b), although the former features a somewhat lower resolution. Evidently, the evolution of the rest-mass density on the spherical mesh features less damping compared to the (initially) Cartesian h = 0.125 mesh [compare orange line in Fig. 4(a) and green line in Fig. 4(b), respectively]. Using a spherical mesh does not completely remove the damping, but the comparison shows that a mesh which better captures the symmetries of the physical system is a more appropriate starting point for a moving-mesh simulation.

(iii) Gradient slope limiter: We run a moving-mesh simulation with a set-up identical to the original one (i.e. Cartesian initial mesh with h = 0.1), but we employ the standard slope-limited gradient in arepo (see equation 30) instead of the MC slope limiter (see Section 3.2). We find that the maximum density in this new simulation evolves in a rather similar manner to the original moving-mesh simulation with the MC slope limiter in the first few ms. However, at later times the maximum rest-mass density oscillation exhibits noticeably less damping in the new simulation and can still be clearly identified after roughly 10 ms [see green line in Fig. 4(a), see also the discussion on slope limiters in Font et al. (2002)]. The original slope-limiting procedure of arepo may benefit from the fact that information about all the neighbouring cells enters the definition of $\psi_{ij}$ (see equation 30) and not just the gradient estimate. Thus, it might be a more appropriate choice for moving-mesh simulations than the MC slope limiter.

(iv) Regularization scheme: We consider the impact of the parameters β and $f_\mathrm{shaping}$, which enter the regularization scheme¹ (see equation 36 and Section 3.5). We run a total of four additional moving-mesh simulations with an identical initial mesh as in the original run, where we systematically vary the values of the two parameters (β, $f_\mathrm{shaping}$) to be (1.5, 0.5), (3, 0.5), (2.25, 0.3), and (2.25, 0.7). Fig. 4(a) shows the impact of increasing β, i.e. applying less regularization as compared to the original simulation. The evolution features less damping than in the original set-up and the oscillation can still be identified at the end of the simulation. We note that decreasing β has the opposite effect, while changing the value of $f_\mathrm{shaping}$ has no noticeable effect (not shown). These results align with point (ii), namely that the moving-mesh approach performs better if less regularization of the mesh is required and thus the cell motion more closely follows the local fluid motion.

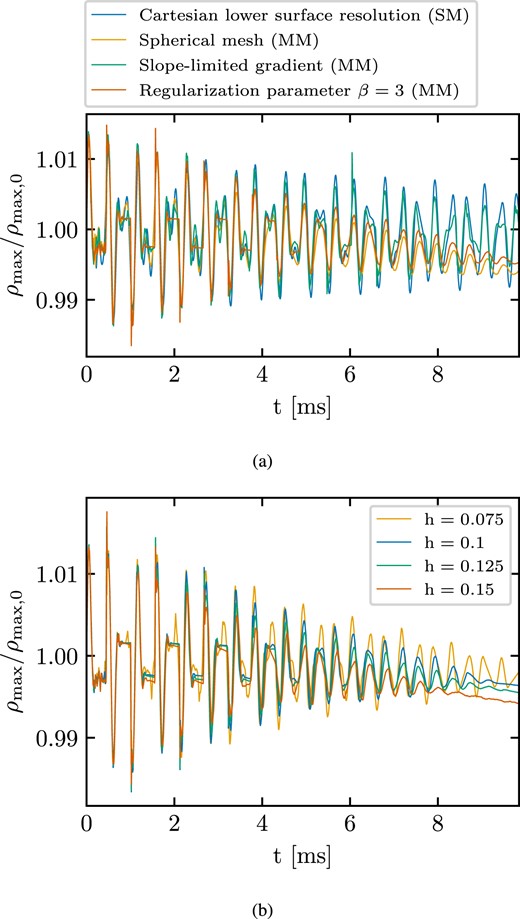

To quantify the impact of the regularization, we compute the fraction of cell motion stemming from the mesh regularization compared to the fluid velocity. Fig. 5 shows this fraction as a function of the density. We compute the fraction as an average over the density (by defining density intervals $[10^{N}, 10^{N+1}]$ g cm$^{-3}$ with N being an integer in [7,14]) and over time by considering several snapshots (produced every 100 code units, i.e. ≈0.49 ms). We report the different numerical set-ups in panel 5(a) and the results for different resolutions in panel 5(b). We observe two general trends. First, the motion due to the mesh regularization is generally sizable (at all densities) and more pronounced in the outer layers, in line with the discussion above and Fig. 3. Secondly, Figs 4 and 5 clearly show that the magnitude of damping in moving-mesh simulations is related to the relative strength of mesh regularization. Numerical set-ups that require less regularization feature less numerical damping.² The effects discussed under points (i) to (iii) can thus be traced back to their impact on the mesh regularization. A more significant contribution from the mesh regularization to the cell motion arguably leads to more damping, as mesh regularization precisely means to introduce cell motion which does not follow the fluid motion and thus spoils the specific advantage of a moving mesh. For comparison, we also provide the relative fraction of the corrective cell velocity from regularization for the BNS merger simulation discussed in Section 5, which shows a much smaller relative impact of the mesh regularization.

Figure 5. Fraction of the corrective velocity due to regularization over the fluid velocity for the Cowling simulations of the TOV polytropic neutron star. The ratio is averaged over the density and over time (see the main text). Panel (a): Includes all the moving-mesh set-ups from Fig. 4(a). The blue line refers to the standard (initially) Cartesian moving-mesh set-up with h = 0.1 (note that the blue line in Fig. 4(a) is a static-mesh run). The black line refers to the BNS merger simulation discussed in Section 5. Panel (b): Impact of resolution for all the (initially) Cartesian set-ups shown in Fig. 4(b). The blue line is the same as in panel (a).
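The averaging over density decades and snapshots described above can be sketched as follows. This is a minimal illustration, not the analysis script used for Fig. 5: the data layout (one tuple of density, corrective velocity, and fluid velocity arrays per snapshot), the function name, and the choice of averaging the cell-wise ratio of velocity magnitudes are all assumptions.

```python
import numpy as np


def binned_velocity_fraction(snapshots, n_min=7, n_max=14):
    """Average |v_corr| / |v_fluid| in decade density bins over several snapshots.

    `snapshots` is an iterable of (rho, v_corr, v_fluid) tuples, with rho in
    g cm^-3 of shape (n_cells,) and velocities of shape (n_cells, 3).
    This layout is an assumption made for the sketch.
    """
    edges = 10.0 ** np.arange(n_min, n_max + 2)   # bins [10^N, 10^(N+1)], N = n_min..n_max
    n_bins = len(edges) - 1
    total = np.zeros(n_bins)
    count = np.zeros(n_bins)
    for rho, v_corr, v_fluid in snapshots:
        speed_fluid = np.linalg.norm(v_fluid, axis=1)
        speed_corr = np.linalg.norm(v_corr, axis=1)
        valid = speed_fluid > 0.0                  # avoid division by zero
        ratio = speed_corr[valid] / speed_fluid[valid]
        idx = np.digitize(rho[valid], edges) - 1   # decade index of each cell
        inside = (idx >= 0) & (idx < n_bins)
        np.add.at(total, idx[inside], ratio[inside])
        np.add.at(count, idx[inside], 1.0)
    with np.errstate(invalid="ignore", divide="ignore"):
        mean = np.where(count > 0, total / count, np.nan)
    return edges, mean


# Synthetic check: a corrective velocity of half the fluid velocity in every cell.
rng = np.random.default_rng(0)
rho = 10.0 ** rng.uniform(7.0, 15.0, size=1000)
v_fluid = rng.normal(size=(1000, 3))
v_corr = 0.5 * v_fluid
edges, mean = binned_velocity_fraction([(rho, v_corr, v_fluid)])
```

Passing several snapshots accumulates the sums across time before dividing, so every cell in every snapshot carries equal weight in its density bin.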

Overall, we emphasize that the tests in this section demonstrate that further effort is required to identify set-ups that lead to better moving-mesh evolutions of TOV stars in the Cowling approximation. Based on our investigation, certain aspects of the numerical modelling (e.g. initial mesh geometry, slope limiter, regularization scheme parametrization) positively influence the moving-mesh evolution. We have chosen our default settings to be as close as possible to the set-up in Font et al. (2002) for a better comparison, but other choices appear more appropriate for TOV systems. As apparent in Fig. 5, TOV stars may not be an easy target for moving-mesh simulations because of their quasi-stationary nature where, in contrast to dynamical systems like BNS mergers, the motion of the mesh is strongly determined by regularization.

We note that the cell rearrangement in the moving-mesh evolution highlights that a direct comparison between a static-mesh and a moving-mesh simulation is not necessarily straightforward. Even if the initial mesh geometries match, the moving mesh quickly rearranges and does not have a single resolution that one can compare to the fixed mesh. In addition, allowing the cells to move without taking the mass distribution of the system into account can lead to issues close to the surface as reported. The rearrangement of cells close to the surface can create small cells. If these cells are not derefined, which we do not do in our TOV simulations, they can in principle reduce the (global) time-step, hence increasing the required computational effort. We note that the mesh-generation algorithm typically requires more time to construct a Cartesian grid compared to other distributions with the same number of mesh-generating points due to the extra cost required to resolve geometric degeneracies during mesh construction.

4.2 Dynamical space–time

4.2.1 Initial data

We construct TOV data for a 1.41 M⊙ neutron star configuration (central density $\rho_\mathrm{c} = 9.545 \times 10^{-4}$) described by the H4 EOS (Lackey, Nayyar & Owen 2006) modelled as a piecewise polytrope (Read et al. 2009). We complement H4 with a $\Gamma_\mathrm{th} = 1.75$ thermal ideal-gas component.

The initial mesh-generating point distribution and subsequently the mesh geometry is different from our Cowling tests. We map the 1D TOV data to a spherical distribution of cells located at the centre of the simulation domain. We use a total of 85 shells extending up to a distance of 11 M⊙ ≈ 16.2 km, with an equidistant radial separation ≈191 m between consecutive shells. In each shell, we distribute $12N_\mathrm{side}^2$ cells based on the HEALPix tessellation by Górski et al. (2005), where for a shell with inner radius $r_\mathrm{lower}$ and outer radius $r_\mathrm{upper}$ we set $N_\mathrm{side}=\sqrt{\pi /12} \times (r_\mathrm{lower}+r_\mathrm{upper})/(r_\mathrm{upper}-r_\mathrm{lower})$ (see also Pakmor et al. 2012). In addition to these shells, we place a coarse Cartesian grid to fill the rest of the computational domain. This set-up is our standard resolution run. In addition, we construct two similar set-ups with 100 shells (i.e. a resolution of ≈162.4 m) and 64 shells (namely a resolution of ≈253.7 m), which we employ for higher and lower resolution (moving-mesh) simulations, respectively. Finally, we also test a mesh which is similar to our standard resolution, but we randomly place the mesh-generating points on each shell to eliminate possible grid orientation effects.
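As an illustration of the shell construction, the sketch below evaluates the quoted expression for N_side on 85 equidistant shells out to 11 M⊙ and counts the 12 N_side² cells per shell. Rounding N_side to the nearest integer (with a minimum of 1) is an assumption of this sketch, since the text only gives the real-valued expression, and the function name is hypothetical.

```python
import math


def healpix_shell_layout(n_shells=85, r_max=11.0):
    """Return (r_lower, r_upper, n_side, n_cells) for equidistant spherical shells.

    Radii are in units of M_sun. Rounding N_side to the nearest integer
    (minimum 1) is an assumption of this sketch.
    """
    dr = r_max / n_shells
    layout = []
    for i in range(n_shells):
        r_lower = i * dr
        r_upper = (i + 1) * dr
        n_side_real = (math.sqrt(math.pi / 12.0)
                       * (r_lower + r_upper) / (r_upper - r_lower))
        n_side = max(1, round(n_side_real))
        layout.append((r_lower, r_upper, n_side, 12 * n_side**2))
    return layout


layout = healpix_shell_layout()
total_cells = sum(n_cells for _, _, _, n_cells in layout)
print(f"shell thickness: {11.0 / 85:.4f} M_sun")
print(f"outermost shell: N_side = {layout[-1][2]}, cells = {layout[-1][3]}")
print(f"total cells in shells: {total_cells}")
```

One way to read the expression for N_side is that it equates the area of a HEALPix pixel at the mean shell radius, 4πr²/(12 N_side²), with the square of the shell thickness, which yields cells of roughly unit aspect ratio.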

In this section, we compare the radial mode frequency from our simulations to a calculation with an independent linear perturbation code, which we developed following the approach outlined in Gondek, Haensel & Zdunik (1997). Unlike Section 4.1, we do not compare to results from an independent Cartesian hydrodynamics code.³ Since the perturbative result is practically exact and the comparison does not rely on choosing the same grid set-up, we employ a grid that captures well the geometry of the physical system.

In contrast to the previous test, the space–time evolves dynamically. We solve the metric field equations on a uniform Cartesian grid with $129^3$ points with a resolution $h_\mathrm{M} = 0.3$ M⊙. Similar to the Cowling tests, we excite the radial oscillation with a perturbation of the form of equation (40) with A = −0.001 and we set $\rho_\mathrm{atm} = 10^{-8} \times \rho_\mathrm{max}$ and $\rho_\mathrm{thr} = 10 \times \rho_\mathrm{atm}$.
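To make the numbers above concrete, the following sketch computes the extent of the metric grid and the two atmosphere densities. Two points are assumptions and not taken from the text: that the metric grid is centred on the origin, and that cells below the threshold density are reset to a static atmosphere, which is a common treatment in grid-based codes. The function name is hypothetical.

```python
import numpy as np

# Metric grid quoted in the text: 129 points per dimension, spacing 0.3 M_sun.
N_POINTS = 129
H_METRIC = 0.3
# Assumption of this sketch: the grid is centred on the origin.
axis = (np.arange(N_POINTS) - (N_POINTS - 1) / 2) * H_METRIC
half_width = axis[-1]            # distance from the centre to the grid edge (M_sun)

# Atmosphere parameters from the text, relative to the initial maximum density.
RHO_MAX = 9.545e-4               # central density of the TOV model (code units)
RHO_ATM = 1.0e-8 * RHO_MAX
RHO_THR = 10.0 * RHO_ATM


def apply_atmosphere(rho, velocity):
    """Reset cells below RHO_THR to a static atmosphere (assumed treatment)."""
    rho = np.array(rho, dtype=float)
    velocity = np.array(velocity, dtype=float)
    low = rho < RHO_THR
    rho[low] = RHO_ATM
    velocity[low] = 0.0
    return rho, velocity


print(f"metric grid spans +/- {half_width:.1f} M_sun around the centre")
print(f"rho_atm = {RHO_ATM:.3e}, rho_thr = {RHO_THR:.3e} (code units)")
```

Under the centring assumption, the metric grid reaches 19.2 M⊙ from the origin and thus encloses the spherical shells, which extend to 11 M⊙.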

4.2.2 Simulations

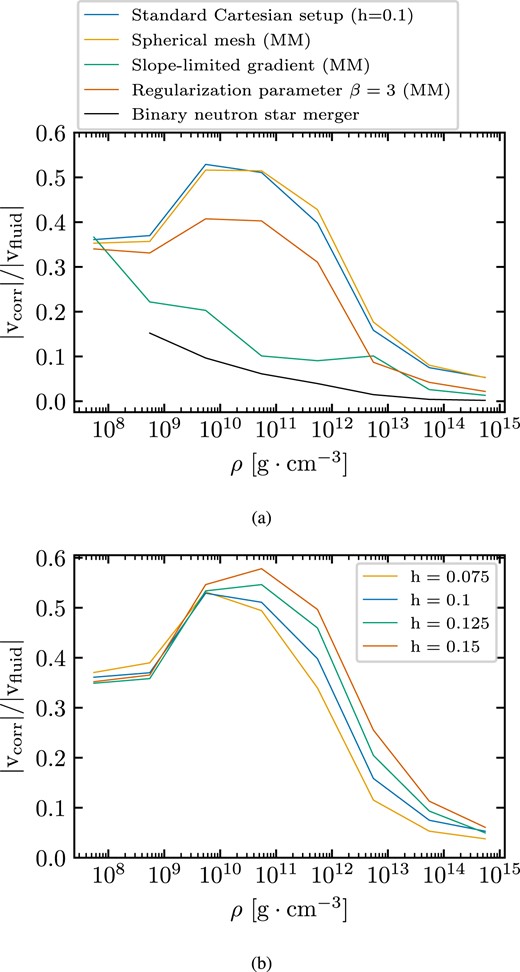

In Fig. 6, we present the time evolution of the normalized maximum density of the 1.41 M⊙ H4 stellar model with a moving mesh (blue) and a static mesh (orange) with our standard resolution set-up. Again, we compute the fundamental radial pulsation frequency. Using a Fourier transform of the density oscillations, we obtain 2.343 kHz for the moving mesh and 2.358 kHz for the static mesh. For comparison, the perturbative calculation gives 2.385 kHz. Deviations of the order of 1 per cent are comparable to what is found by other codes, e.g. Font et al. (2002).

Figure 6. Normalized maximum rest-mass density from a moving-mesh (blue line) and a static-mesh (orange line) evolution of a 1.41 M⊙ star described by the H4 EOS. The space–time is evolved dynamically and the metric field equations are solved on a grid with $129^3$ points and resolution 0.3 M⊙. The pulsation is excited with a radial velocity perturbation with amplitude −0.001. See the main text for a description of the initial mesh geometry.
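The frequency extraction from the density time series can be illustrated with a short sketch. The text only states that a Fourier transform of the density oscillations is used; the Hann window, the zero-padding, and the synthetic damped signal below are assumptions added for illustration, and the function name is hypothetical.

```python
import numpy as np


def peak_frequency_khz(time_ms, signal):
    """Return the frequency (kHz) of the strongest Fourier peak.

    Assumes uniformly sampled data with time given in ms.
    """
    time_ms = np.asarray(time_ms, dtype=float)
    signal = np.asarray(signal, dtype=float)
    dt = time_ms[1] - time_ms[0]
    detrended = signal - signal.mean()            # remove the constant offset
    windowed = detrended * np.hanning(len(detrended))
    n_fft = 16 * len(windowed)                    # zero-padding refines the frequency grid
    spectrum = np.abs(np.fft.rfft(windowed, n=n_fft))
    freqs = np.fft.rfftfreq(n_fft, d=dt)          # cycles per ms, i.e. kHz
    return freqs[np.argmax(spectrum)]


# Synthetic stand-in for rho_max(t) / rho_max(0): a damped oscillation at 2.343 kHz.
t = np.linspace(0.0, 20.0, 4001)                  # ms
rho_norm = 1.0 + 1.0e-3 * np.exp(-t / 15.0) * np.cos(2.0 * np.pi * 2.343 * t)
f_peak = peak_frequency_khz(t, rho_norm)
print(f"peak frequency: {f_peak:.3f} kHz")
```

Zero-padding does not add information, but it interpolates the spectrum so that the peak position is not limited to the coarse spacing 1/T set by the length T of the time series.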

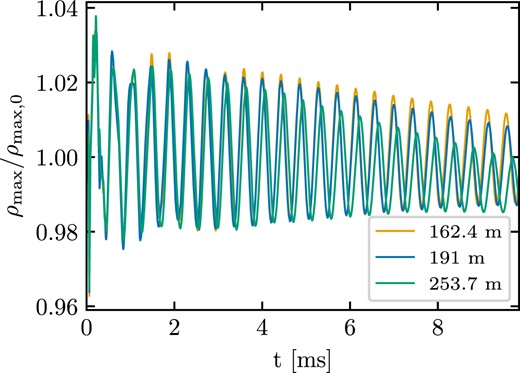

Moving-mesh runs with the higher and lower resolution (see Fig. 7) result in frequencies of 2.352 and 2.318 kHz, respectively, i.e. increasing the resolution leads to frequencies which lie closer to the perturbative result. In addition, we perform a moving-mesh simulation with an initial mesh set-up with standard resolution (≈191 m) including a random component to slightly offset the mesh-generating points. We obtain 2.349 kHz, which is slightly higher than the result from the same resolution set-up without the random component. We note that including the random component in the mesh set-up reduces grid effects, while it only slightly increases the damping in the maximum density oscillation. The overall agreement in the frequencies validates our implementation of GRHD and the metric solver, as well as their coupling in a realistic set-up that employs a microphysical EOS.
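The agreement with the perturbative result can be summarized by the relative deviation of each quoted frequency from 2.385 kHz. The short script below uses only values stated in the text; the labels and the function name are illustrative.

```python
# Frequencies of the fundamental radial mode quoted in the text (kHz).
F_PERTURBATIVE = 2.385
frequencies = {
    "moving mesh, 253.7 m": 2.318,
    "moving mesh, 191 m": 2.343,
    "moving mesh, 191 m, random offsets": 2.349,
    "moving mesh, 162.4 m": 2.352,
    "static mesh, 191 m": 2.358,
}


def relative_deviation_percent(f_sim, f_ref=F_PERTURBATIVE):
    """Relative deviation of a simulated frequency from the reference, in per cent."""
    return 100.0 * abs(f_sim - f_ref) / f_ref


deviations = {label: relative_deviation_percent(f) for label, f in frequencies.items()}
for label, dev in deviations.items():
    print(f"{label:38s} {frequencies[label]:.3f} kHz  {dev:.1f} per cent")
```

The deviations lie between roughly 1.1 and 2.8 per cent and, for the moving-mesh runs on the regular spherical mesh, decrease monotonically with increasing resolution.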

Figure 7. Evolution of the normalized maximum rest-mass density in moving-mesh simulations with different resolutions. The legend provides the radial separation between consecutive shells in the initial (spherical) mesh. The space–time is dynamically evolved. Note that the standard resolution set-up (blue line) matches the blue line in Fig. 6.

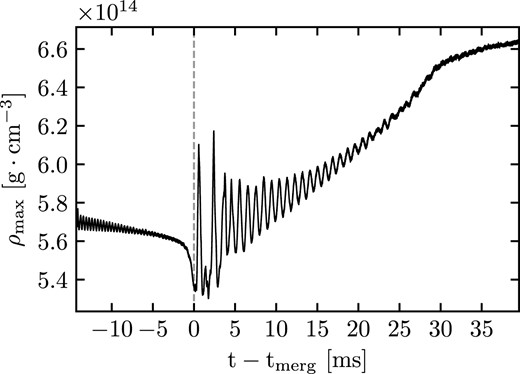

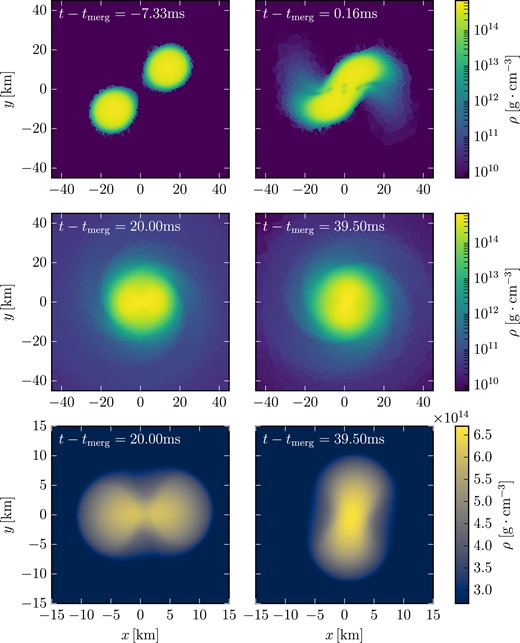

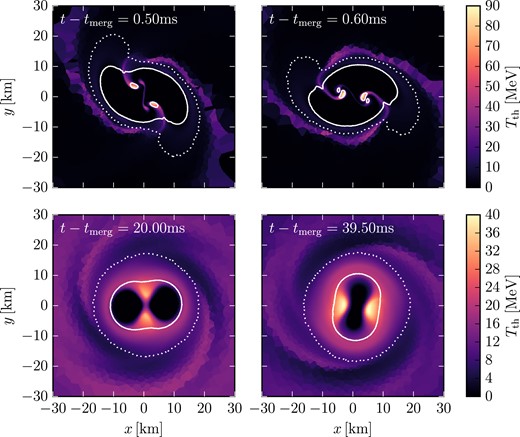

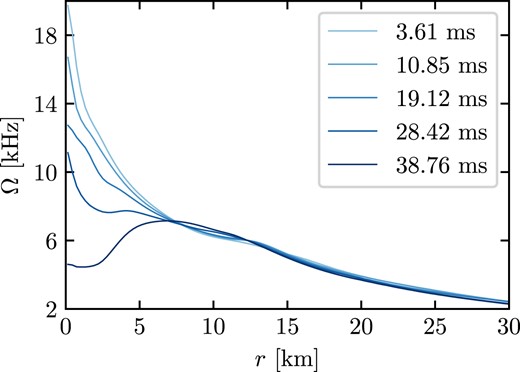

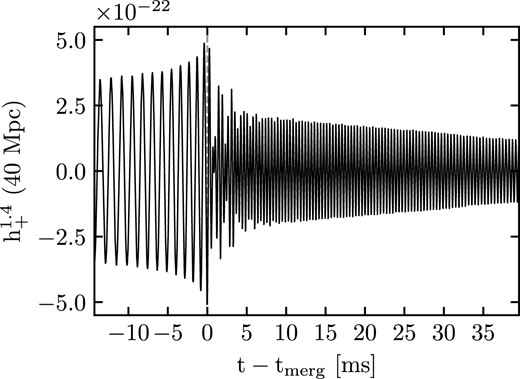

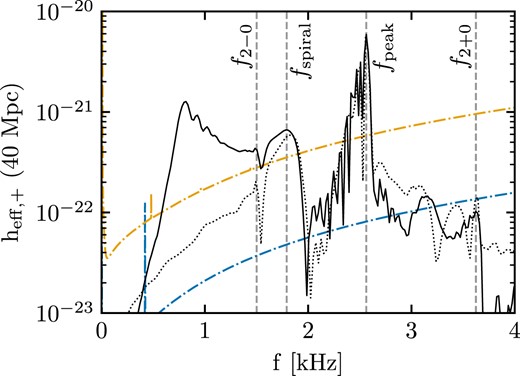

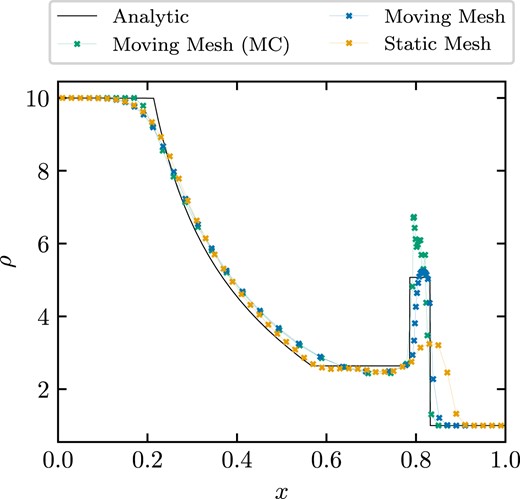

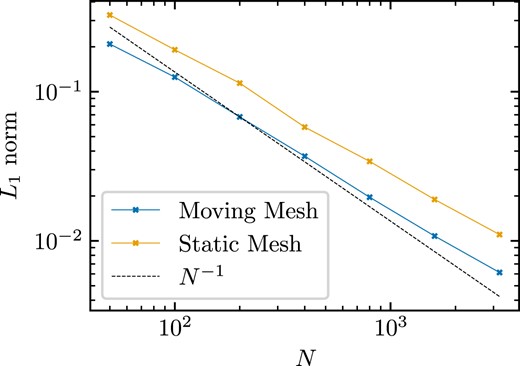

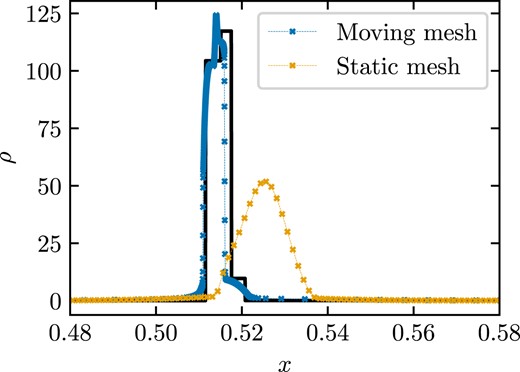

The moving-mesh and static-mesh standard resolution simulations of the star preserve the initial TOV solution during the whole simulation time with only a very mild drift in the central density, which is also seen in other simulations (e.g. Font et al. 2002). The drift diminishes with higher resolution, see Fig. 7. Increasing the resolution also yields less damping in the rest-mass density oscillation.