-

PDF

- Split View

-

Views

-

Cite

Cite

Daniel Forero-Sánchez, Chia-Hsun Chuang, Sergio Rodríguez-Torres, Gustavo Yepes, Stefan Gottlöber, Cheng Zhao, A machine learning approach to correct for mass resolution effects in simulated halo clustering statistics, Monthly Notices of the Royal Astronomical Society, Volume 513, Issue 3, July 2022, Pages 4318–4331, https://doi.org/10.1093/mnras/stac1239

Close - Share Icon Share

ABSTRACT

The increase in the observed volume in cosmological surveys imposes various challenges on simulation preparations. First, the volume of the simulations required increases proportionally to the observations. However, large-volume simulations are quickly becoming computationally intractable. Secondly, on-going and future large-volume survey are targeting smaller objects, e.g. emission line galaxies, compared to the earlier focus, i.e. luminous red galaxies. They require the simulations to have higher mass resolutions. In this work, we present a machine learning (ML) approach to calibrate the halo catalogue of a low-resolution (LR) simulation by training with a paired high-resolution (HR) simulation with the same background white noise, thus we can build the training data by matching HR haloes to LR haloes in a one-to-one fashion. After training, the calibrated LR halo catalogue reproduces the mass–clustering relation for mass down to 2.5 × 1011 h−1 M⊙ within 5 per cent at scales |$k\lt 1\,h\, \rm Mpc^{-1}$|. We validate the performance of different statistics including halo mass function, power spectrum, two-point correlation function, and bispectrum in both real and redshift space. Our approach generates HR-like halo catalogues (>200 particles per halo) from LR catalogues (>25 particles per halo) containing corrected halo masses for each object. This allows to bypass the computational burden of a large-volume real high-resolution simulation without much compromise in the mass resolution of the result. The cost of our ML approach (∼1 CPU-h) is negligible compared to the cost of a N-body simulation (e.g. millions of CPU-h), The required computing time is cut a factor of 8.

1 INTRODUCTION

The size of cosmological redshift surveys has been increasing exponentially over the last few decades. The 2dF Galaxy Redshift Survey observed ∼390 000 galaxy spectra in an area of |$2\times 10^3\, \rm deg^2$|, corresponding to a volume of |$0.12~h^{-3}\, \rm Gpc^3$| (Colless et al. 2001). Later, the Sloan Digital Sky Survey1’s (SDSS) Baryon Oscillation Spectroscopic Survey (BOSS) observed 1.5 million galaxies within |$\sim 10^4 \rm deg^2$| (Eisenstein et al. 2011; Dawson et al. 2013; a volume of |$\sim 2.5~h^{-3}\, \rm Gpc^3$|). The extended BOSS expanded these observations through the inclusion of different matter tracers and expanding surveyed redshift range (Dawson et al. 2016) resulted in an extended volume of |$\sim 1.5~h^{-3}\, \rm Gpc^3$|. Currently, the Dark Energy Spectroscopic Instrument2 (DESI) is observing a |$14\times 10^3\, \rm deg^2$| field and is expected to acquire more than 30 million redshifts (Levi et al. 2019) in a volume of |$\sim 20~h^{-3}\, \rm Gpc^3$|. When finished, DESI will provide the largest observed cosmological volume available. Meanwhile, future-generation large surveys are already being developed, e.g. 4-metre Multi-Object Spectroscopic Telescope3 (de Jong et al. 2019), Hobby-Eberly Telescope Dark Energy Experiment4 (Hill et al. 2008), Subaru Prime Focus Spectrograph5 (Takada et al. 2014), Euclid6 (Laureijs et al. 2011), and the Roman Space Telescope7 (Spergel et al. 2013). These surveys aim to investigate the properties of dark energy (DE) through a precise measurement of the Baryon Acoustic Oscillations (BAO) scale and the growth rate of the Universe through redshift space distortions (RSD). In summary, the volume of observed Universe can be expected to grow significantly over the next decades.

To constrain cosmological parameters using the observed galaxy distribution, we need to compare the distribution with the prediction based on theoretical models. At linear and quasi-linear scales, one can build the models based on perturbation theories. However, to extract cosmological information from small-scale clustering, we need to take into account the non-linear effects while building the models. This can be achieved by modelling non-linearities with semi-analytical or empirical models that rely on approximations of the structure formation process (Wang 2017). To validate these approximations, make use of numerical solutions, i.e. N-body simulations which encode a complete description of gravitational evolution (Alam et al. 2021). However, to provide an accurate and precise prediction, a simulation should have a high resolution (HR) to resolve proper haloes, hosting the observed galaxies and a large volume to reduce cosmic variance. While large volume simulations may be obtained in a reasonable time by reducing the mass resolution, simulations complying with both these requirements are very computationally expensive.

Due to their high computational costs, N-body cosmological simulations have challenged generations of hardware architectures. Despite developments in hardware and software (Springel 2005; Ishiyama, Fukushige & Makino 2009; Habib et al. 2016; Potter, Stadel & Teyssier 2017; Garrison, Eisenstein & Pinto 2019), the requirements of modern cosmological surveys – both in terms of number of realizations and their resolution – may soon render exact N-body computations intractable. Current software and hardware takes upwards of two million CPU hours for a single |$1~h^{-1}\rm Gpc$| HR simulation used in this study, while we require the simulation volume to be much larger than observed volume (e.g. |$\sim 20~h^{-3}\rm {Gpc}^3$| for DESI) to minimize the theoretical uncertainties.

The increasing availability of large data sets has facilitated the development of machine learning (ML) methods, as well as their application to various aspects of science and technology. In particular, ML in the physical sciences has helped overcome great barriers in computation related to forward modelling and simulations in various different fields. Various types of ML techniques, ranging from classical methods such as Kernel Ridge Regression (Bartók, Kondor & Csányi 2013) to modern deep architectures such as Generative Adversarial Networks (GAN; Mustafa et al. 2019) have been found valuable for their specific research fields. A complete review of applications of ML in physics (and vice-versa) can be found in Carleo et al. (2019).

Moreover, astronomical and astrophysical research has also seen a significant increase in the applications of ML techniques. For example, Convolutional Neural Networks have been widely used to analyse weak lensing maps (Gupta et al. 2018; Ribli, Pataki & Csabai 2019; Lu, Haiman & Zorrilla Matilla 2022). Additionally, other architectures, such as Variational Autoencoders, have been used in Cosmic Microwave Background data analysis (Yi et al. 2020). Classical ML methods, such as random forests (RF) are also widely used due to their interpretability and capacity, for example in photometric redshift estimation (Carrasco Kind & Brunner 2013; Mountrichas et al. 2017). Within the analysis of the large-scale structure of the Universe, ML techniques have been developed to perform BAO reconstruction (Mao et al. 2021), and specially to generate and emulate large cosmological simulations (He et al. 2019). Examples of the latter take advantage of GANs to generate new simulations from a random state (Feder, Berger & Stein 2020) and even increase the resolution of density maps (Li et al. 2021; Ni et al. 2021).

Many of these methods use well-studied neural network architectures that operate on pixelated data. This fact poses challenges in terms of storage size and computational cost of training, due to the necessity of extending these models for 3D inputs and outputs. Therefore, the cosmological volume and the resolution that the network can be trained on is largely limited and high-performance computational facilities and large computing time allocations may still be necessary to train and infer from these models. In this work, we present an alternative that does not rely on neural networks on pixelated data, but on individual halo properties and engineered environmental features. This approach allows grid-independent training and inference.

In the next section (Section 2), we introduce the N-body simulations our model is based on and describe the halo catalogues extracted from them. In Section 3, we explain how the training data set is constructed from the base simulations and halo catalogues. We also introduce the feature-set of the different haloes. Next, Section 5 describes the ML models used in this work. Section 6 describes the different metrics used to evaluate the performance of our model. Section 7 presents the results. Finally, in Section 9, we conclude and state future work directions.

2 DATA

In this section, we introduce the data used for this project and some of the processing steps from the raw simulations to dark matter haloes.

2.1 UNIT simulations

The Universe N-body simulations for the Investigation of Theoretical models from galaxy surveys8 (UNIT; Chuang et al. 2019) is a set of cosmological N-body simulations that aims to provide low-variance clustering statistics from a gravity solver while avoiding the computational cost of a large ensemble of independent simulations. The simulations are based on the method proposed by Angulo & Pontzen (2016), which shows that a single pair of fixed-amplitude and phase-paired simulations would yield clustering measurements similar to those of 50 averaged independent simulations. More precisely, the pair of simulations has the initial mode amplitudes fixed to the ensemble-averaged power spectrum and the phases of the random field are chosen to be perfectly off-phase such that the noise is cancelled out. Thus, only a small number of simulations is required.

In the present work, we use two sets of UNIT simulations evolved from the same initial conditions but with different mass resolutions. HR UNIT simulations are obtained using the Gadget (Springel 2005) code with 40963 particles of mass 1.2 × 109 h−1 M⊙ in a volume of |$1~(h^{-1}\rm Gpc)^3$|. This mass resolution is expected to be consistent with the requirements of current-generation surveys. In particular, the HR simulations should resolve haloes hosting emission line galaxies (ELGs), that is, of mass ∼1011 h−1 M⊙ (Gonzalez-Perez et al. 2018). Low resolution (LR) simulations are also done with Gadget, but with 20483 particles of mass 9.9 × 109 h−1 M⊙ in the same volume.

From these simulations, HR haloes are extracted using the Friend-of-Friends (FOF) algorithm described in appendix B of Riebe et al. (2013). The chosen linking length corresponds to 0.2 times the mean inter-particle separation. To build the training set, we choose a minimum of 100 HR particles per halo and a minimum of 8 LR particles per halo. The extracted halo catalogues contain a number of halo properties that are used in our experiments as features of the model. These are enumerated in Table 1 along with their definitions.

Table summarizing FOF halo properties and their definitions. See appendix B of Riebe et al. (2013) for more details. G is the gravitational constant.

| Name . | Halo property . | Definition . |

|---|---|---|

| log_halo_mass | (Log) Halo mass, log (Mhalo) | Total mass of the FOF halo. Sum of particle masses within the FOF group. |

| delta | Mean overdensity, δ | Overdensity computed using the density defined by the mass enclosed in a sphere of radius rsph. |

| log_sigma | (Log) Particle position dispersion within halo, log σ | Dispersion of simulation particle positions within the FOF group. |

| log_sigma_v | (Log) Velocity dispersion within halo, log σv | Dispersion of simulation particle velocities within the FOF group. |

| log_r_sph | (Log) Halo radius, log rsph | Halo size defined as the radius of the sphere containing the same volume as the FOF group. |

| log_spin_p | (Log) Spin parameter log λ | |$\lambda = \frac{JE_{\rm kin}^{1/2}}{GM_{\rm halo}^{5/2}}$| defined by the angular momentum J and the kinetic energy Ekin. |

| v | Centre of mass speed, v. | Norm of the centre of mass halo velocity |$v = \left| {{v}}\right| $| |

| Name . | Halo property . | Definition . |

|---|---|---|

| log_halo_mass | (Log) Halo mass, log (Mhalo) | Total mass of the FOF halo. Sum of particle masses within the FOF group. |

| delta | Mean overdensity, δ | Overdensity computed using the density defined by the mass enclosed in a sphere of radius rsph. |

| log_sigma | (Log) Particle position dispersion within halo, log σ | Dispersion of simulation particle positions within the FOF group. |

| log_sigma_v | (Log) Velocity dispersion within halo, log σv | Dispersion of simulation particle velocities within the FOF group. |

| log_r_sph | (Log) Halo radius, log rsph | Halo size defined as the radius of the sphere containing the same volume as the FOF group. |

| log_spin_p | (Log) Spin parameter log λ | |$\lambda = \frac{JE_{\rm kin}^{1/2}}{GM_{\rm halo}^{5/2}}$| defined by the angular momentum J and the kinetic energy Ekin. |

| v | Centre of mass speed, v. | Norm of the centre of mass halo velocity |$v = \left| {{v}}\right| $| |

Table summarizing FOF halo properties and their definitions. See appendix B of Riebe et al. (2013) for more details. G is the gravitational constant.

| Name . | Halo property . | Definition . |

|---|---|---|

| log_halo_mass | (Log) Halo mass, log (Mhalo) | Total mass of the FOF halo. Sum of particle masses within the FOF group. |

| delta | Mean overdensity, δ | Overdensity computed using the density defined by the mass enclosed in a sphere of radius rsph. |

| log_sigma | (Log) Particle position dispersion within halo, log σ | Dispersion of simulation particle positions within the FOF group. |

| log_sigma_v | (Log) Velocity dispersion within halo, log σv | Dispersion of simulation particle velocities within the FOF group. |

| log_r_sph | (Log) Halo radius, log rsph | Halo size defined as the radius of the sphere containing the same volume as the FOF group. |

| log_spin_p | (Log) Spin parameter log λ | |$\lambda = \frac{JE_{\rm kin}^{1/2}}{GM_{\rm halo}^{5/2}}$| defined by the angular momentum J and the kinetic energy Ekin. |

| v | Centre of mass speed, v. | Norm of the centre of mass halo velocity |$v = \left| {{v}}\right| $| |

| Name . | Halo property . | Definition . |

|---|---|---|

| log_halo_mass | (Log) Halo mass, log (Mhalo) | Total mass of the FOF halo. Sum of particle masses within the FOF group. |

| delta | Mean overdensity, δ | Overdensity computed using the density defined by the mass enclosed in a sphere of radius rsph. |

| log_sigma | (Log) Particle position dispersion within halo, log σ | Dispersion of simulation particle positions within the FOF group. |

| log_sigma_v | (Log) Velocity dispersion within halo, log σv | Dispersion of simulation particle velocities within the FOF group. |

| log_r_sph | (Log) Halo radius, log rsph | Halo size defined as the radius of the sphere containing the same volume as the FOF group. |

| log_spin_p | (Log) Spin parameter log λ | |$\lambda = \frac{JE_{\rm kin}^{1/2}}{GM_{\rm halo}^{5/2}}$| defined by the angular momentum J and the kinetic energy Ekin. |

| v | Centre of mass speed, v. | Norm of the centre of mass halo velocity |$v = \left| {{v}}\right| $| |

The cosmological parameters used in the UNIT simulations and through this work are Ωm = 0.3089, h = 0.6774, ns = 0.9667, and σ8 = 0.8147 (Planck Collaboration 2016).

Given that we have only a single pair of UNIT simulations, we divide it in training, validation, and test sets of sizes of (0.5 × 1 × 1), (0.5 × 0.5 × 1), and |$(0.5\times 0.5\times 1)~h^{-3}\rm Gpc^3$|, respectively. The training set is used to fit the model parameters, while the validation test is used to optimize the different hyperparameters. Finally, the test set is used to evaluate the performance of the model once the hyperparameters are all fixed.

3 CONSTRUCTION OF THE TRAINING SET

To build the training data, we need to match haloes from the low-resolution simulation to haloes from the HR simulation. In this section, we first introduce the feature selection and engineering. Then, we describe how the targets of the model are obtained via one-to-one halo matching.

3.1 One-to-one halo matching

In a general sense, the halo matching procedure assigns a target mass – taken from a HR halo – to a LR halo. This task is not trivial, due to the possibility that the LR simulation does not resolve all individual HR haloes. None the less, we aim to find the best one-to-one matching between catalogues of both resolutions. The assignment is designed to take into account two factors.

The pair of matched haloes must be spatially close.

The masses of the haloes must be close since the LR haloes considered should be reasonably resolved and we aim at calibrating their masses.

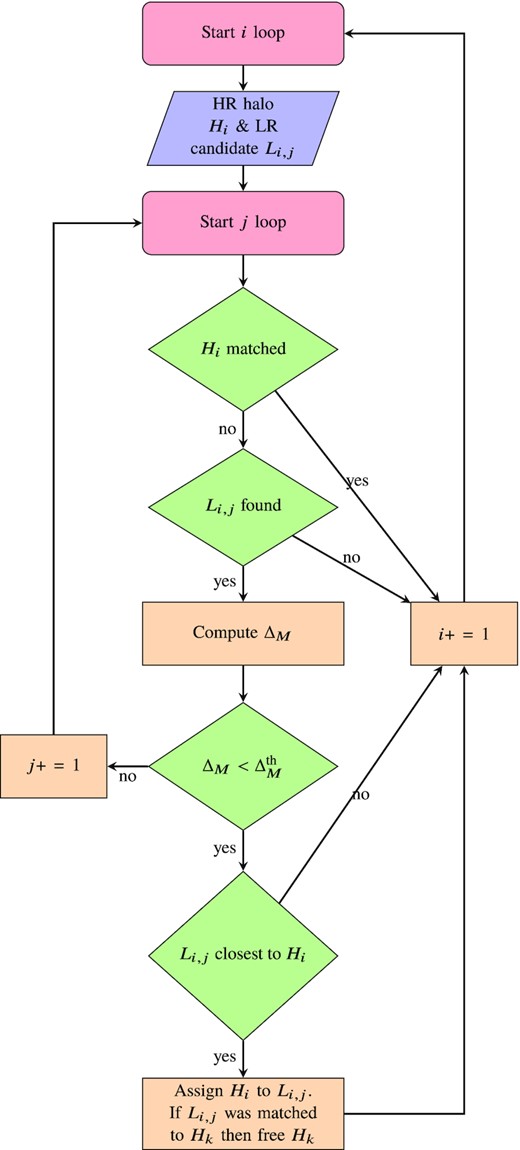

During the halo matching, we iterate over all the HR haloes (i.e. haloes with >100 particles) and look for their counterparts in the LR halo catalogue. It is possible, however, that multiple HR haloes are assigned to the same LR halo. If a LR halo L has initially been assigned to a HR halo H0, but it is later found that a different HR halo H1 is a better match (i.e. is spatially closer), then L is matched with H1 and H0 is marked as unmatched. We loop multiple times over the whole the HR catalogue to allow the unmatched haloes to be paired with their next-best candidate. In practice, we find five iterations to be enough for pairing most of the HR haloes and iterations yield no new pairings. Fig. 1 shows the flow diagram corresponding to this mass matching procedure and Fig. 2 illustrates the case in which multiple HR haloes are assigned to the same LR halo.

Loop for halo matching. The distance between all Li, j and its match is initialized to infinity. It is possible that there are less than knb neighbours found within rmatch, in which case the candidate Li, j may not exist. Nearest neighbours are sorted by distance such that Li, j is closer than Li, j + 1 for all |$i,\, j$|.

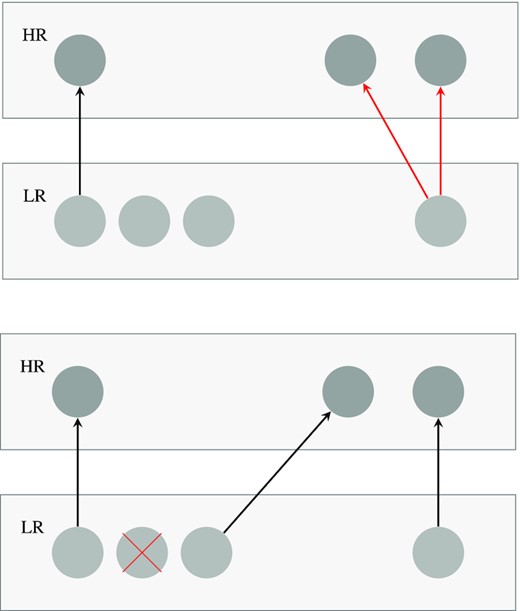

Halo matching process: under the condition |$\Delta _M \lt \Delta _M^{\rm th}$|, haloes in the HR box find nearest haloes in the LR box as their matches. Upper panel: arrows indicate the nearest haloes found. Red arrows indicate competitions (multiple HR haloes found the same LR halo as their nearest haloes). Lower panel: when a competition occurs, the closer HR halo gets to keep the LR halo and the other HR halo needs to find the next nearest LR halo. At the end, we discard the rest of the LR haloes indicated with the red cross.

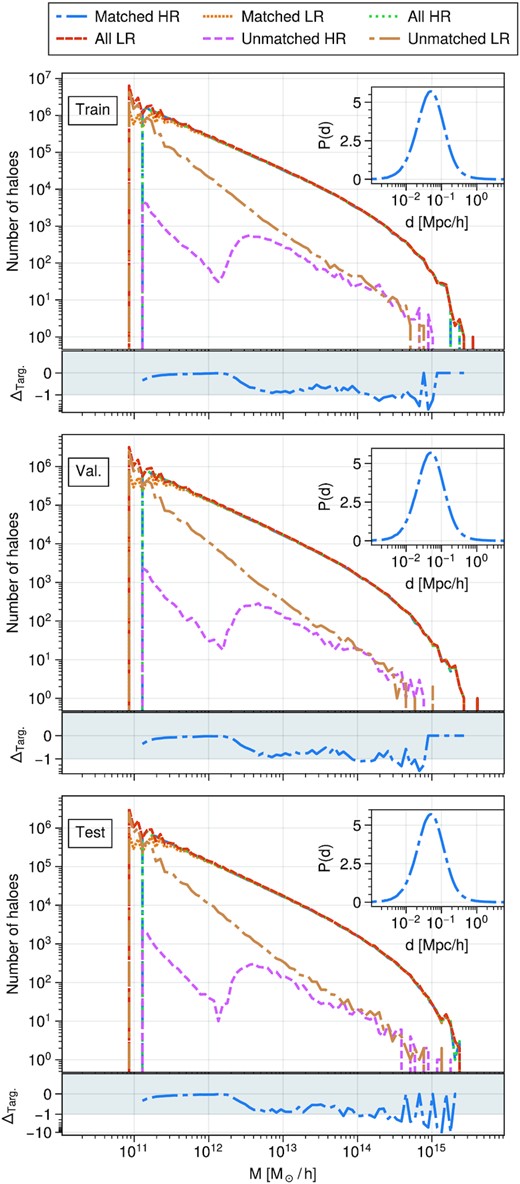

Fig. 3 shows the results of the halo matching procedure applied to the train, validation, and test sets with knb = 10, |$r_{\rm match} = 5~h^{-1}\rm Mpc$|, and |$\Delta _M^{\rm th} = 0.95$|. We show first that the halo mass function (HMF) of the matched HR haloes closely approximates that of the complete HR catalogue. This subset is hereon referred to as Target HR. For clarity, in the sub-panels, we show the relative difference between the target HR and the actual HR HMF, which is 1 per cent in the mass range considered. We also show the mass functions of all LR haloes and the matched ones. It is evident that the bulk of the unmatched LR haloes is in the low-mass end of the distribution. This is expected, given that the HR mass cut is larger than the analogous cut in the LR catalogue.

HMFs resulting from the halo matching procedure for the training, validation, and test sets. The sub-panels show Δtarg = 100 × (HMF − HMFtarg)/HMFtarg, the per cent difference to the real HR HMF. The matched HR matches the complete HR to 1 per cent precision. The mass distributions of all and unmatched haloes are also shown for reference. In the insets, we show the probability distributions P(d) of a LR halo being a distance d from its match. One can see that the distribution of distance is well smaller than rmatch we use. The peak is at around |$0.05 h^{-1} \rm Mpc$|.

The hyperparameters knb and rmatch are chosen ad hoc to decrease the computational burden of the procedure. The latter permits the kd-tree structure to be pruned, which speeds up the candidate search. The former decreases the number of loops in the mass assignment step, though it is sub-dominant for the overall performance of the halo matching procedure. In fact, |$\Delta _M^{\rm th}$| plays the most important role in controlling the HMF of the matched haloes. Moreover, the insets in Fig. 3 show the probability that a pair of matched haloes be separated a distance d. Notice that most haloes find a match within |$d\sim 10^{-1}~h^{-1}\, \rm Mpc$|. This means that the chosen |$r_{\rm match} = 5~h^{-1}\, \rm Mpc$| is large enough to accommodate all matches; and while it allows for faster tree queries, it does not affect the result of the matching itself. Table 2 shows the hyperparameters introduced by the one-to-one halo matching procedure and the values used in this work. The corresponding matching statistics are shown in Table 3. Note that the matching statistics are similar for the different sets, which is desired.

| Parameter . | Value . |

|---|---|

| knb | 10 |

| rmatch | |$5~h^{-1}\rm Mpc$| |

| |$\Delta _M^{\rm th}$| | 0.95 |

| Parameter . | Value . |

|---|---|

| knb | 10 |

| rmatch | |$5~h^{-1}\rm Mpc$| |

| |$\Delta _M^{\rm th}$| | 0.95 |

| Parameter . | Value . |

|---|---|

| knb | 10 |

| rmatch | |$5~h^{-1}\rm Mpc$| |

| |$\Delta _M^{\rm th}$| | 0.95 |

| Parameter . | Value . |

|---|---|

| knb | 10 |

| rmatch | |$5~h^{-1}\rm Mpc$| |

| |$\Delta _M^{\rm th}$| | 0.95 |

Matching statistics of the three data sets using the hyperparameter values chosen (see Table 2).

| . | Train . | Validation . | Test . |

|---|---|---|---|

| Fraction of HR matched | 0.9983 | 0.9983 | 0.9983 |

| Fraction of LR matched | 0.5414 | 0.5411 | 0.5400 |

| . | Train . | Validation . | Test . |

|---|---|---|---|

| Fraction of HR matched | 0.9983 | 0.9983 | 0.9983 |

| Fraction of LR matched | 0.5414 | 0.5411 | 0.5400 |

Matching statistics of the three data sets using the hyperparameter values chosen (see Table 2).

| . | Train . | Validation . | Test . |

|---|---|---|---|

| Fraction of HR matched | 0.9983 | 0.9983 | 0.9983 |

| Fraction of LR matched | 0.5414 | 0.5411 | 0.5400 |

| . | Train . | Validation . | Test . |

|---|---|---|---|

| Fraction of HR matched | 0.9983 | 0.9983 | 0.9983 |

| Fraction of LR matched | 0.5414 | 0.5411 | 0.5400 |

3.2 Feature selection

The features our model takes into account play a significant role in its performance. In principle, the FoF halo catalogues provide a number of halo properties (e.g. see Table 1) that can be used as features of the model. However, these may contain only local information and therefore ignore the structure of the cosmic web. In order to enhance the predictive power of the model, we are also interested in including properties of the environment of each halo.

To this end, we attach to each halo the local halo overdensity at its position as log δh. The overdensity field is computed in grid sizes Ngrid of 2048, 1024, 512, 256, 128, and 64 bins, along the longest side of the sub-box in order to include information from different scales. These correspond to cubic cell sizes of 0.49, 0.98, 1.95, 3.90, 7.81, 15.63, and 31.25|$~h^{-1}\rm Mpc$|, respectively. These features are hereon denoted log_densi, where i indicates the value of Ngrid.

In Fig. 4, we show the correlation matrix of the halo features computed from the test set. Different halo properties are found to be highly correlated among them. These high correlations suggest that these properties provide redundant information to the model and can thus be safely discarded, without degrading the model’s performance. Notably, the engineered features, log_densNgrid, are the ones showing the smallest correlations with the halo properties. Furthermore, the decorrelation with the LR halo mass decreases along the grid size, meaning that averaging the overdensity over a larger neighbourhood does indeed provide more information to the model. None the less, it is not clear if the model will use this extra information efficiently to perform the prediction. We also show the correlations of the features with the target halo mass obtained through the one-to-one halo matching procedure described in Section 3.1. The correlation of the HR mass with the LR halo properties is weaker than it is with the LR mass; and may be useful to identify the most relevant features for the model.

4 MODEL BENCHMARK

We test our model against two different techniques to assign halo masses to the LR haloes.

4.1 Abundance matching

We first test an abundance matching (AM) technique, in which we rank halo masses in both the HR and LR catalogues but assign as ‘prediction’ the largest HR masses to the most massive LR haloes and so on. Given that our LR catalogue has more objects than the HR catalogue, the discarded LR haloes in this case are the least massive. This approach, by construction, matches perfectly the HR HMF and ignores any spatial information, so it remains to evaluate how the clustering is affected.

4.2 HADRON code

The hadron code (Zhao et al. 2015) was devised to assign halo masses to approximate mock catalogues, such that the halo clustering in different mass bins matches those of a given reference simulation. This is done by extracting the halo bias from the reference simulation as a multidimensional probability distribution over a set of features. These features are related to the cosmic environment of the haloes of given masses, such as whether they are located in voids, sheets, filaments, or knots and – in the case of the latter – the knot mass. This halo bias distribution therefore encodes not only the local density but also the dynamics of matter flows and halo exclusions. These features are analogously extracted from the mock catalogues such that the bias may be sampled for the required quantity, in this case, the halo mass. The concept of the bias as a multidimensional probability distribution has been shown to be very versatile and powerful. It has allowed not only to assign halo masses but also halo positions in order to create realistic mock catalogues (Balaguera-Antolínez et al. 2019, 2020) and even assign baryonic properties to dark matter catalogues (Sinigaglia et al. 2021).

We stress that the ‘training’ of this code does not involve the one-to-one matched catalogues but relies entirely on the dark matter properties of the HR simulation. For our tests, we use the complete |$1~h^{-3}\rm Gpc^3$| HR to train and the ‘test’ quarter of the LR. We compute the halo environmental features from a 5123 dark matter density field. Moreover, we have tuned the hadron parameters, such as the number of bins for probability distributions, to optimize its performance.

5 MACHINE LEARNING MODEL

Our main objective can be interpreted as a regression task that computes an appropriate mass for each LR halo. Table 3 shows that most (>99 per cent) of the HR haloes are matched, however, the fraction for LR hales is only about 50 per cent. This implies that the model should be able to discard LR haloes that are not matched, resulting in a classification task. In this work, the positive and negative classes (i.e. matched and unmatched) are roughly equally sampled, which facilitates training the model for classification; however this is not the case in general. Care should be taken when training a model for classification without taking into account unevenly sampled classes in the training set.

The input of our model consists in a LR halo object which contains various features. The targets (|${y}$|) of our model are a Boolean label, indicating whether the halo has a match and a target halo mass (i.e. log-mass). For the haloes that have not been matched (label 0), the target halo mass has been set to zero.

5.1 Random forests

The ML method we use for this study is an RF (Ho 1995), which is an ensemble of decision trees. A decision tree is a model that outputs a value or vector based on a set of decision boundaries imposed on the training data. In a geometric sense, the decision tree recursively partitions the feature space. As a result, the decision tree does not infer parameters from the training data, but places test data into these partitions. These trees are known to be sensitive to small changes in the training data. To mitigate this, they are usually implemented in ensembles called RFs. The output of a RF is the mode or average of the output of the decision trees, depending on the task the forest is trained for.

RFs are known to be very capable models, but prone to overfitting due to the fact that the trees strongly depend on the training data. A large number of trees (ntree) acts as a regularization for these models, and increasing the maximum depth (dmax) of the trees increases their capacity. The tree depth is the maximum number of partitions of the feature space per branch, that is, the maximum number of nested if – else conditions imposed on the features. Forests also provide a feature importance metric, which corresponds to the fraction of times each feature is used to set a decision boundary. This can be used to perform feature selection in case some features are found to be of little importance.

In our case, we use the scikit-learn’s implementation of a RF regressor. The RF outputs two numbers |${\hat{y}} = (p_{\rm keep}, \log (M_{\rm halo}))$|, which correspond to the probability of keeping the halo and the target log-mass, respectively. We implement the classification task as a regression of the probability of keeping the LR halo. We explored the possibility of separating the classification and regression task and applying two separate models in series, however we found that this is not more effective than a single model that performs both tasks in parallel but costs twice as much in terms of memory use.

For this work, the model consists of an RF with ntree = 10 and dmax = 64. These hyperparameters are fixed to control the memory usage of the model, which strongly depends on ntree. We tested on a subset of the training catalogue and with ntree = 100, which yields comparable results to those of our fiducial choice. The maximum depth is fixed to be a large value due to the large amount of training data (i.e. LR haloes), which means that many partitions are needed to correctly allocate the data.

6 EVALUATION METRICS

In this section we introduce the metrics used to evaluate the performance of the model proposed. We first introduce some metrics commonly related to the classification performance in ML. Then, we introduce the physically relevant metrics such as the HMF and two- and three-point clustering statistics.

6.1 Classification performance metrics

There are a number of metrics available for evaluating classifiers besides the naive accuracy or fraction of misclassified samples. In a general sense, the relevant quantities that define these metrics are the number of true positives, true negatives, false positives, and false negatives, given that these encode the output probabilities conditioned on the true labels. In our study, the true positives are the haloes that were matched and kept (M & K) by our model, the true negatives correspond to unmatched and discarded (U & D) haloes, the false positives are the unmatched and kept (U & K) haloes, and false negatives are matched haloes that are wrongly discarded (M & D). The 2 × 2 matrix showing the probabilities of the combination of true/false and positive/negative cases, i.e. confusion matrix, will be shown later.

We can quantify the performance of the classifier using the Area Under the Curve (AUC) metric, which is defined as the integral of the ROC curve. An AUC value close to 0.5 will therefore imply a diagonal ROC, thus poor performance of the classifier. A perfect classifier would show an AUC close to 1, meaning that the TPR is maximized irrespective of the threshold γ.

6.2 Halo mass function

The HMF used through this work is defined as the distribution of halo masses. This statistic is of physical relevance due to the fact that the mass distribution of dark matter haloes is closely related to the evolution of the Universe, especially in the matter domination era (Lukić et al. 2007). However, the accuracy on the lower mass end is not critical for constructing galaxy catalogues since we do not assign galaxies to every haloes with lower halo mass (Alam et al. 2020). In this case, an unbiased mass–clustering relation (respecting to HR) is more useful for constructing a robust galaxy catalogue. We introduce the clustering statistics in the following sections.

6.3 Power spectrum and two-point correlation function

Zz denotes the redshift space position along the third axis, vpec is the halo’s peculiar velocity along the line of sight, a is the cosmological scale factor, and H the Hubble parameter.

6.4 Bispectrum

The bispectrum contains important information on the large-scale structure of the distribution of tracers and can be used to constrain the tracer bias. It has also been used to constrain various cosmological parameters (Sefusatti et al. 2006; Gil-Marín et al. 2017). Moreover, due to its non-linear nature, it is a relevant statistic to constrain primordial non-Gaussianity (Gangui et al. 1994; Sefusatti & Komatsu 2007; Welling, van der Woude & Pajer 2016) and deviation from general relativity (Borisov & Jain 2009; Gil-Marín et al. 2011). Because of this, a precise estimation of the bispectrum is desired from the model.

In this work, we use the Pylians 39 library to compute the bispectra.

7 RESULTS

In this section, we present the results of the RF model used on the test set. We experiment with a varying number of features among the ones shown in Fig. 4 and find that the number of features included is an important hyperparameter. We observe that a large number of features allows the model to closely fit the HMF but has a detrimental effect on the power spectra by introducing a negative bias with respect to the HR mass-binned spectra. In what follows, we show the results that correspond to the use of three features. These are chosen to be the most positively correlated ones with the target halo mass (log_halo_mass_hr, Fig. 4): log_halo_mass, log_sigma_v and delta. In addition to the model with three features, we will also show a model with four features (i.e. add one more feature: log_sigma) for comparison.

7.1 Halo abundance

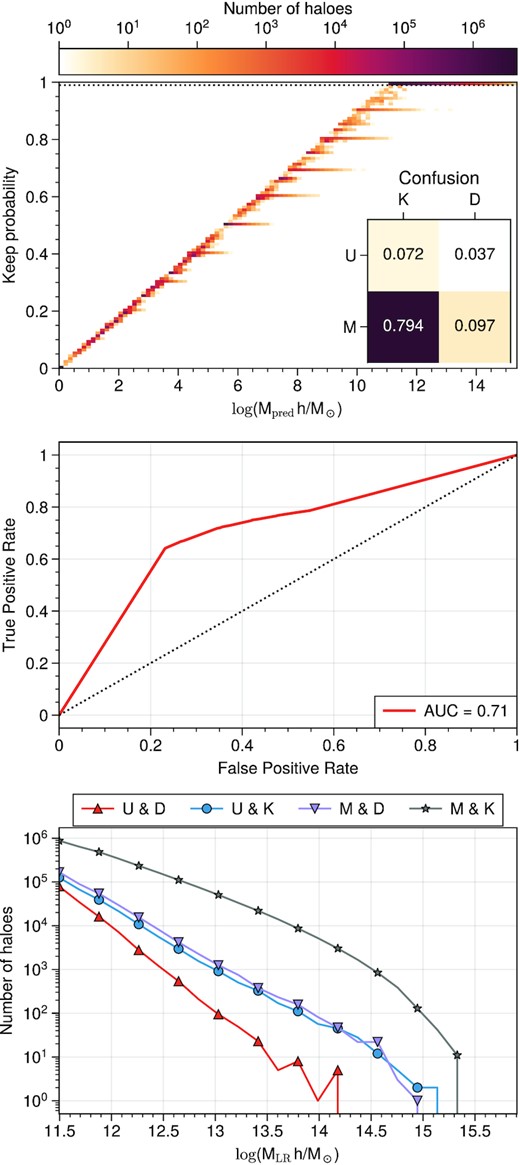

The first task our model should perform is to be able to discard some haloes in the input to account for the LR haloes that were not matched. The true labels for this task are therefore matched (‘M’) and unmatched (‘U’), while the predicted labels are either kept (‘K’) or discarded (‘D’).

Fig. 5 shows the results of this task. The keep probability pkeep is shown to be highly correlated to the predicted halo mass log (Mpred), as is expected. Our model does predict halo masses continuously between zero and the maximum training halo mass. We must then choose a threshold value for pkeep such that only relevant masses are included. It is also seen that the vast majority of haloes are predicted to be in the relevant mass range (log (Mpred h M⊙−1) > 11.4). We select the threshold to be at 0.99, which means that all the decision trees must have had a high output confidence of the halo being matched.

Top: probability of keeping the halo |$p_{r\rm keep}$| as a function of the predicted halo mass Mpred. Horizontal dotted line shows the threshold keep probability of 0.99. Middle: ROC to evaluate the classification performance of the model. The diagonal shows the expected curve from a random classifier. Bottom: halo mass distributions of haloes under different conditions. ‘U’: Unmatched, ‘M’: Matched, ‘K’: Kept, ‘D’: Discarded. In this sense, U&K denotes false positives, U&D true negatives, M&D false negatives, and M&K true positives.

We use the ROC to show that the classifier has indeed learnt to identify which haloes should be removed, which is better quantified in the value of the AUC of 0.71. In addition, from the ROC, it is clear that aiming for a high TPR – as we have done – comes at the cost of increasing the FPR. We suspect the performance of this classifier would improve if it were trained using a loss function more suitable for classification than the MSE.

We then estimate the impact of a high FPR in the physically motivated evaluation metrics. First, we observe the confusion matrix of the predictions (inset in Fig. 5), which show that the fraction of false positives (incorrectly kept haloes) and false negatives (incorrectly discarded haloes) are similar (8 per cent). If the haloes in these subsets have similar masses and are located in similar environments, it would be reasonable to assume that the samples are roughly interchangeable. We plot the HMFs of these subsamples (bottom panel Fig. 5) and observe that their mass distributions are consistent with one another. Given the similar fractions of FP and FN, we further assume that if an unmatched halo is misclassified, it would most likely be replaced by a similar halo, which locates at a similar environment (see Fig. 6). This would imply that interchanging haloes among the FP and FN samples does not introduce very significant biases in the HMF and clustering measurements. Because of this, we keep the classifier trained with the MSE loss and leave a more complex training scheme for future work.

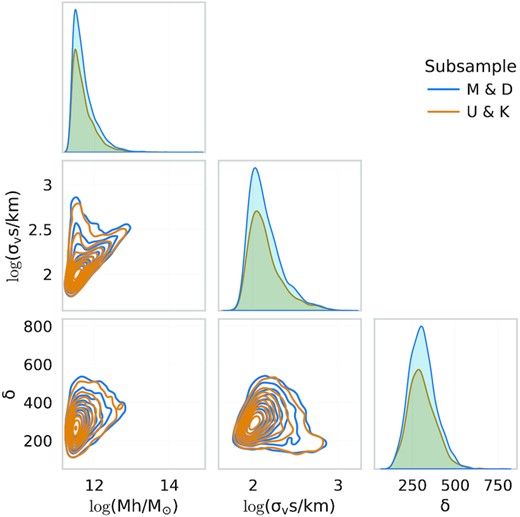

Corner plot showing the distribution of properties of a subset of haloes from the false positives (U&K) and false negatives (M&D) subsamples. The halo properties of these are very similar, thus validating the model performance despite the classification errors.

Finally, we find that in the mass range of 1011.4–1015.5 h−1 M⊙, the classifier keeps over 80 per cent of the samples, which is in line with the fact that the largest number of unmatched LR haloes was located in the lowest mass end, where the LR and HR HMF do not overlap (log mass <11.4), while for larger masses, most LR haloes are matched (see Fig. 3).

7.2 Halo mass function

Through this section, we evaluate the performance of the model in terms of purely predicting the HR halo masses, i.e. the regression task. We compare with a second model that uses an extra feature: log_sigma. These two models show the best overall performance. We find that naively including all halo features and engineered quantities does yield a good performance in terms of HMF but is detrimental to the clustering statistics (see Section 7.3).

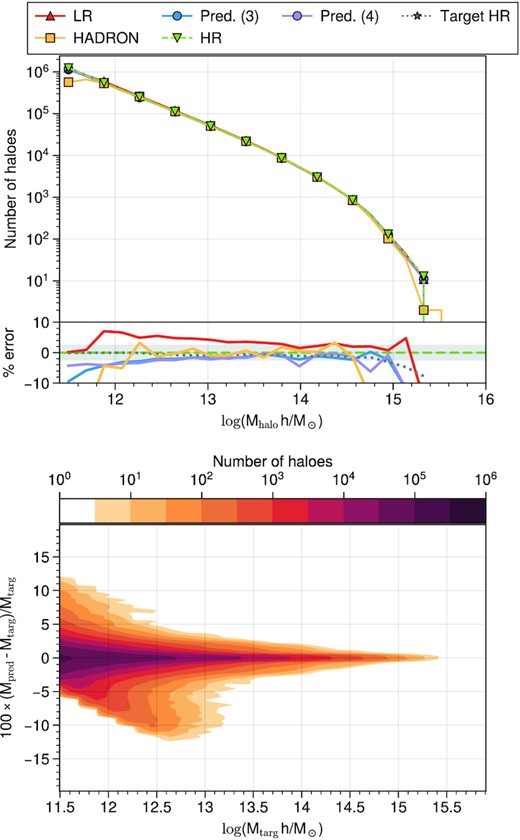

In Fig. 7, we plot the HMFs of the LR catalogue, the HR catalogue, the Target HR (the subset of HR that was matched), the hadron prediction, and the predicted HR from the models with tree and four features. The agreement between all of these is good in this mass range. The sub-panel shows the per cent error difference of the different samples with respect to the real HR. The predicted HMF from the model with four features closely approximates both the target and the real HR HMF. The prediction using three features shows a slightly larger discrepancy with the reference, however, the agreement is still better than 3 per cent from log (M/M⊙) ∼ 12.5 up to log (M/M⊙) ∼ 14.5. The hadron prediction has a similar behaviour to the RF for low masses, but deviates from the reference at a smaller mass on the large-mass end.

Top: HMFs of the low resolution, HR, prediction, and target catalogues. We show the prediction of models with three (Pred. (3)) and four (Pred. (4)) features. The sub-panel shows the per cent difference of the LR, Target HR, and Prediction HMFs with respect to the real HR HMF. Shaded area highlights the 2.5 per cent error band. Bottom: map showing the distribution of the per cent error of the predicted masses against the target masses as a function of the target mass.

Moreover, we compare the distribution of target versus predicted values from our fiducial (three feature) model using the relative error to the target. We note that the majority of the haloes (|$\sim \, 90$| per cent) lie well within the 5 per cent error in the prediction, while a small percentage of the catalogue shows errors of up to 12 per cent. Notably, for the majority of the haloes, the error in the prediction increases as the mass decreases.

Cui et al. (2012) have shown that the presence of baryons can bias the HMF by a few per cent with respect to a dark matter-only simulation. Moreover, Beltz-Mohrmann, Berlind & Szewciw (2020) have shown that this bias can be as large as 20 per cent for low masses and that differences between the HMF with and without baryons can be highly dependent on the hydrodynamical model used in the simulations. While we have not explicitly included baryonic effects in our model, our predictions are accurate enough for halo occupation distribution (HOD) modelling. Note that, in practice, a few per cent error in the HMF at lower mass region is not to be critical for building a galaxy catalogue using the galaxy–halo relation, such as HOD model, since the model will randomly drop a significant fraction of haloes anyway. Thus, for modelling galaxy clustering, having correct halo clustering statistics is more important than an accurate HMF.

A similar model to the one presented in this work can potentially be trained to learn to include baryonic effects in the output or even predict some baryonic properties. We reserve these studies for future work.

7.3 Two-point clustering

As mentioned, for galaxy clustering modelling, we are interested in whether the model is able to reproduce large-scale structure statistics in the HR data. In this section, we explore the two-point statistics of the predictions that are not explicitly optimized by our model.

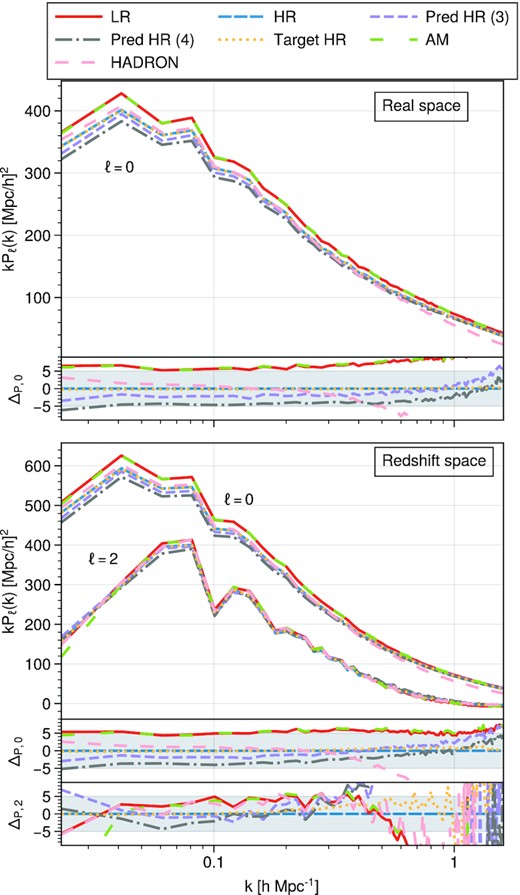

Fig. 8 shows the power spectra multipoles of the input/output data, as well as the predictions of the AM approach and our RF model. In order to compute the power spectra in redshift space, we apply RSD using the UNIT cosmology. The velocities used for the predicted catalogues are the ones originally in the LR catalogue.

Power spectra monopoles and quadrupoles in real (top panel) and redshift (bottom panel) space. The sub-panel in the top shows the per cent error to the HR power spectrum. The sub-panels in the bottom show the per cent error defined as |$\Delta _{P,\ell } = 100\times \frac{P_\ell - P_{\ell ,\rm HR}}{P_{\ell ,\rm HR}}$| in both the mono- and quadrupoles. The shaded area highlights the 5 per cent error range.

The abundance-matched two-point correlation does not differ significantly from the LR power spectrum in neither real nor redshift space. In contrast, the clustering of the catalogue predicted with our three-feature RF does match the HR power spectra within a 3 per cent error in the for |$k\in (0.03, 1)~h\, \rm Mpc^{-1}$|. The four-feature RF shows a larger negative bias with respect to the reference but is still well within the 5 per cent error band. We remark that though the velocities are not modified by our model, the resulting redshift space clustering is consistent with that of the actual HR catalogue. In fact, the power spectrum quadrupole of the prediction closely matches (<5 per cent error) the HR power spectra up to |$k\sim 0.4~h\, \rm Mpc^{-1}$|.

The fact that our multiple-feature model perform better than the AM model shows that information other than LR halo mass is necessary for recovering the clustering statistics of a HR halo sample. Through the halo one-to-one matching procedure, we encode highly relevant environmental information such that the model recovers the reference clustering accurately. The engineered features added to the data were in the end not as relevant. We performed tests with higher numbers of features and find that some of the environmental densities are used by the forest, though with a low relative importance.

Moreover, the power spectra from hadron are also within the 5 per cent error range up to |$k\sim 5~h\, \rm Mpc^{-1}$|. This implies that the environmental features encoded in the halo bias relation extracted by hadron are useful in recovering the clustering statistics on large scales. On small scales, however, the hadron results do show a large negative bias of the power spectrum. This may be the result of the random downsampling the code is forced to do in order to match the number of objects from the reference. It is possible that a modified version of hadron would perform better for this precise task. We explore this possibility in a future study.

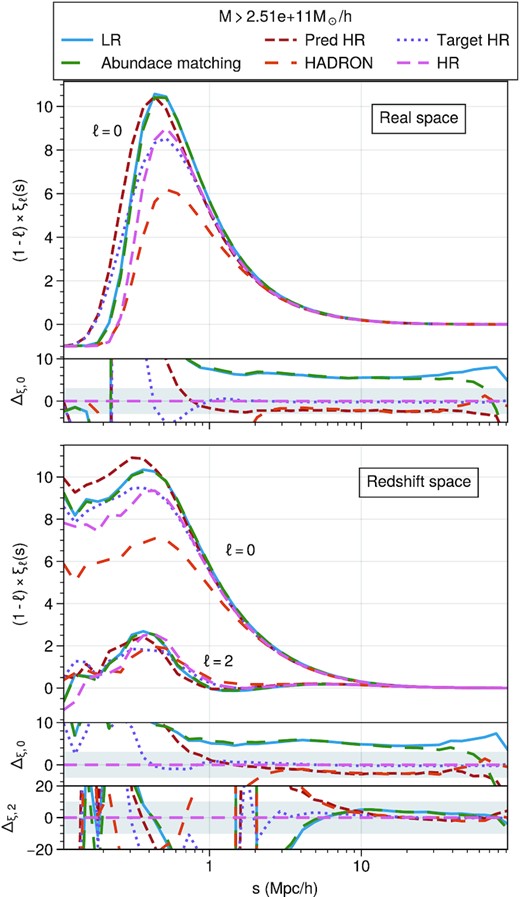

We also evaluate the clustering in configuration space in Fig. 9. In this figure, we show only the prediction of our fiducial three-feature model and the AM approach. The monopole error over separations of |$0.5~h^{-1}\rm Mpc$| is consistent with the HR reference within 3 per cent. However, it should be noted that the target HR also shows a significant deviation from the complete HR catalogue on the smallest scales. We attribute these differences to the matching procedure, given that small scales would be most sensitive to the procedure. Furthermore, the deviations from the complete HR reference of the target and our model’s prediction are consistent with each other. To further improve the performance, besides calibrating halo masses, we will need to calibrate the halo positions, we leave this for a future study.

Correlation function monopoles and (negative) quadrupoles in real (top panel) and redshift (bottom panel) space. The sub-panel in the top shows the per cent error to the HR correlation function. The sub-panels in the bottom show the per cent error defined as |$\Delta _{\xi ,\ell } = 100\times \frac{\xi _\ell - \xi _{\ell ,\rm HR}}{\xi _{\ell ,\rm HR}}$| in both the mono- (top) and quadrupoles (bottom). The shaded areas highlight the 3 and 10 per cent error range for the monopole and quadrupole, respectively.

Our model is also able to improve upon hadron’s results, which deviate over 2.5 per cent from the reference at scales of |$\sim 2~h^{-1}\, \rm Mpc$| in both real and redshift space. These deviations are also consistent with the larger error on large k observed in the Fourier space results.

Despite the deviations due to matching, the improvement of our model over the LR catalogue is significant. The redshift-space 2PCF quadrupole seems more sensitive to the matching, given that the target shows deviations from the HR catalogue for |$\sim 1~h^{-1}\rm Mpc$|. Our model’s prediction also does not seem to perform as well in this metric and shows deviations of over 20 per cent at these scales.

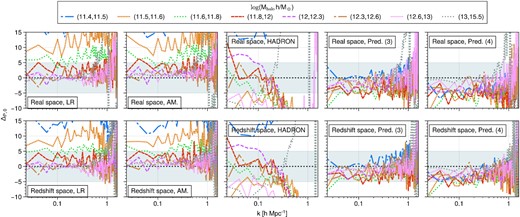

In addition, we are interested in recovering the clustering in mass bins. Fig. 10 shows the per cent error difference of the mass-binned power spectra relative to the analogous HR spectra. Once more we observe that the AM coincides with the LR results and is not effective in recovering the clustering. Moreover, hadron does influence the mass-binned clustering significantly and yields an error of less than 10 per cent up to |$k\sim 0.5~h\, \rm Mpc^{-1}$| for all but the least massive bin. This may be due to the fact that hadron prioritizes more massive haloes when implementing halo exclusions. In contrast, our three-feature RF (centre-right panels) is able to match the target with under 5 per cent error for all mass bins. Evidently, the low mass bins are the ones that show the largest improvement, while for the large mass bins our model introduces a small negative bias. When using the four-feature RF (right-most panel), the bias introduced is larger; specially in the low mass bins which seem to be over corrected with respect to the reference. Moreover, we experimented with larger numbers of features and found that, while the HMF is more closely recovered (see Fig. 7), the bias introduced in the clustering was even larger. There is a trade-off between approximating the HMF and the two-point clustering. In choosing a fiducial model, we take into account that a precise clustering is more important than a precise low mass HMF when constructing galaxy catalogues that mimic observations. We therefore chose our fiducial three-feature model such that the HMF is sufficiently well-approximated and the bias in the clustering is small enough.

Percent error to the mass-binned HR power spectrum. The left-most panels show the ratios of the LR power spectra, the middle panels correspond to the AM approach and the right-most panels show the results of our RF model. The shaded area highlights the 5 per cent error range. Our model succeeds in matching the HR power spectra to 5 per cent accuracy in the mass bins shown.

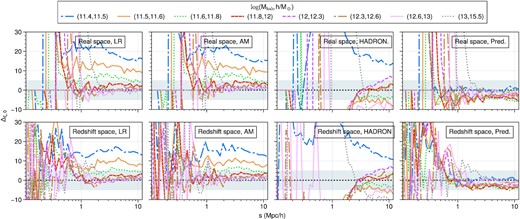

We further test the massed binned clustering in configuration space in Fig. 11, where we no longer show the four feature model results. The configuration space results confirm the Fourier space observations, with 2PCFs resulting from the RF model consistent with the HR clustering at 5 per cent level for |$s \gt 1~h^{-1}\rm Mpc$|. Smaller scales show large discrepancies consistent with the ones observed in Fig. 9. We observe that larger mass bins start showing a large discrepancies at larger scales than the lower mass counterparts. The reason is that larger mass haloes suffer an exclusive effect at larger scales, which make the correlation function to be zero or even negative. We would see large percentage error while the correlation function is close to zero.

Percent error to the mass-binned HR correlation function. Analogous to Fig. 10. Configuration space results confirm that our model succeeds in matching the HR 2PCFs to 5 per cent accuracy in the mass bins shown in scales larger than |$1~h^{-1}\rm Mpc$|. Large deviations near |$s\sim 0.3~h^{-1}\rm Mpc$| are due to the 2PCFs crossing zero.

7.4 Three-point clustering

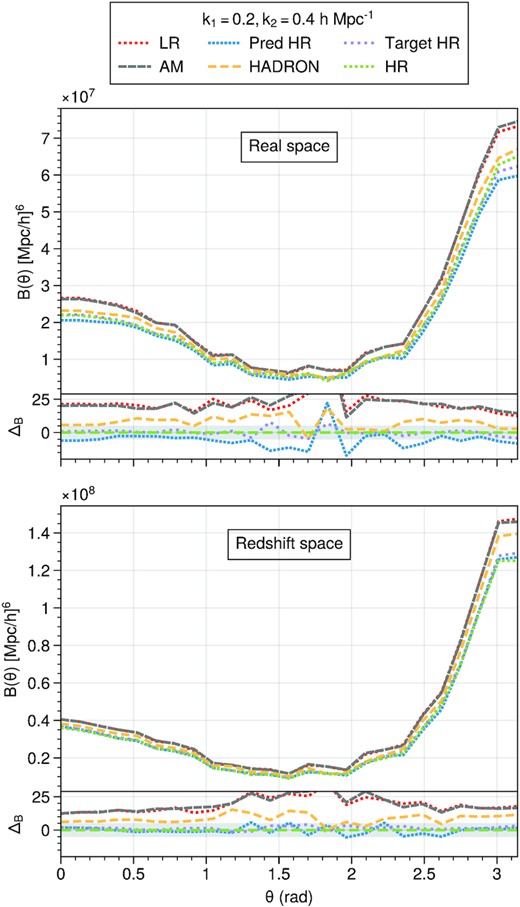

Higher order statistics are also useful for extracting cosmological constraints and studies galaxy properties. Therefore, we also compare the three-point clustering of our model’s output against the HR reference. We use a configuration with |$k_2=2k_1=0.4~h\, \rm Mpc^{-1}$|.

Fig. 12 shows the real- and redshift- space bispectra for the different samples and their per cent difference to the HR reference. In real- and redshift- space, the LR and AM curves overlap, which confirms that the mass information is not enough to correct the LR catalogue masses. In addition, these curves show a per cent error of ∼20–25 per cent over the whole θ range. Moreover, we note that the Target HR curve differs slightly (∼5 per cent) from the real HR bispectrum as θ approaches π, however the curves approximate each other from θ = 0 up to θ = 3. hadron is able to retrieve bispectra consistent with the reference within a ∼15 per cent difference in both real ad redshift space. Our three feature prediction shows a significant improvement over the LR bispectra, specially in redshift space where the predicted bispectrum lies within a 5 per cent error to the reference HR. In real space, the bispectrum of our prediction is slightly negatively biased by ∼5 per cent but is noisy near π/2. Overall, our prediction shows a 20 per cent improvement in the bias of the bispectra with respect to the reference.

Analogous plot to Fig. 8 for the reduced bispectra of the different catalogues. The per cent error difference is defined as |$\Delta _B = 100\times \frac{B - B_{\rm target}}{B_{\rm target}}$|.

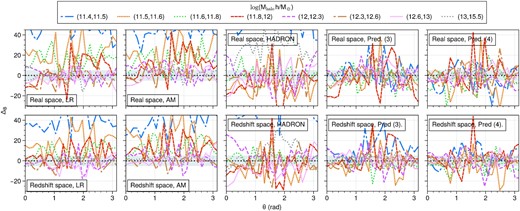

Analogous to our analysis of the two-point information, we compute the bispectra in mass bins and report the per cent differences to the reference. Fig. 13 shows these results. We observe again that the AM fails to correct the bias of the low-mass tracers and may even bias the high mass tracers, especially in redshift space. Despite the relatively good performance of hadron over the whole mass range, the mass binned bispectra are often more biased than the LR ones. hadron helps in recovering the bispectra for some mass bins, but introduces larger biases for others, such as the most and least massive ones. This is possibly because hadron only implements a single halo exclusion length for all haloes. A mass-dependent exclusion distance may help solve this issue. We explore this in future work. On the fourth panel of the same figure, we observe that the prediction of our three-feature model shows three-point clustering that is consistent with the reference in all the mass bins shown. Even though the curves obtained show an oscillating behaviour, they seem to oscillate around zero. For completeness, we add the results of the four-feature model in which we observe similar results to the three-feature bispectra for most mass bins. The least massive bin from the four-feature model is less biased than the three-feature, which is consistent with the larger negative biased observed in the two-point clustering of the four-feature compared to the three-feature model.

Percent errors in the mass-binned three point statistics with respect to the HR sample. Analogous to Fig. 10. We show the 5 per cent error region for reference.

8 RELATED WORK

The use of ML techniques in cosmology has been an area of great interest in the recent years, mainly due to the potential of these model to replace expensive computations. Kodi Ramanah, Charnock & Lavaux (2019) implemented a convolutional GAN capable of generating halo fields from cheap Lagrangian perturbation theory initial conditions. While this approach is significantly cheaper than an N-body simulation, it is entirely field-based, thus it generates haloes in a mesh rather than discrete haloes. The use of mesh-structured data in this case limits the spatial resolution of the output at the grid cell size but allows for a faithful reproduction of the large scales. While the final product is somewhat similar to our method, we have the advantage of not being limited by any grid spatial resolution.

The approach presented in Li et al. (2021) and Ni et al. (2021) is more similar to what we aim at accomplishing with our method. Li et al. (2021) develops a convolutional GAN that generates large displacement fields that allow them to move initial particle data. While the displacements themselves are limited by the grid size, the spatial resolution of the particle data is not and the output of the model is a particle catalogue rather than a field. Ni et al. (2021) extends this model to produce not only displacements but also velocity fields. The output of their models is then used to create halo catalogues that agree at a ∼10 per cent level with those of their HR reference. Moreover, the authors quote ∼10 per cent agreement with their reference in two- and three-point clustering measurements on the dark matter particles. The resolution difference between input and output is a factor 8 per dimension, going from 643 to 5123 particles.

9 CONCLUSIONS

With the deployment of latest redshift surveys and the development of new and larger ones, the amount of cosmological data is expected to increase by orders of magnitude. The reduced statistical uncertainty that comes with larger data volumes may cause that systematical errors that can currently be safely ignored, become important in the future. This implies that large cosmological volumes must be simulated with a high-mass resolution in order to be able to study these effects. However, the cost of full N-body simulations with the required size and resolution is quickly becoming too large for the latest hardware and software. To overcome this issue, we present an ML approach that generates an approximate HR halo catalogue by modifying the halo masses of a low resolution FoF halo catalogue, thereby avoiding the full computation of the HR simulation.

In this work, we have presented an RF regressor that predicts HR halo masses when given the dark matter overdensity, halo mass, and halo particle velocity dispersion obtained from a low-resolution halo catalogue. We base our method in matching pairs of low and HR haloes based on spatial distance and a mass threshold. We show that the HMF is reproduced within 3 per cent error for masses larger than 1012.5 h−1 M⊙ and larger error for smaller masses (e.g. 10 per cent at 1011.5 h−1 M⊙). This is an improvement compared to the original LR catalogue of which HMF is biased by more than 3 per cent at <1013.5 h−1 M⊙. On the other hand, we argue that these errors in HMF are not important for real applications such as HOD. Moreover, our approach includes a classifier that is able to discard low-resolution haloes that are not likely to have an HR halo match.

Even though our approach only predicts halo masses, the two-point clustering is recovered for haloes of masses larger than ∼2.5 × 1011h−1 M⊙, which amounts to ∼200 HR and 25 LR dark matter particles. These haloes are small enough to potentially host ELGs, which are a major tracer for galaxy clustering for DESI. The power spectrum of the prediction is shown to be within a 3 per cent error with respect to the real HR catalogue down to scales of |$k\approx 1~h\, \rm Mpc^{-1}$| in both real and redshift space. Moreover, the power spectrum is also recovered within 5 per cent error in various mass bins. In configuration space, the low-scales (|$s\sim 1 h^{-1}\rm Mpc$|) show large discrepancies between the one-to-one matched and the real HR catalogues, which affect the prediction of our model. These discrepancies are therefore attributed to our matching algorithm rather than the model itself.

The predicted catalogue also shows three-point clustering consistent with the reference one within 10 per cent, which is a large improvement compared to the low-resolution catalogue. The mass-binned bispectra are shown to be bias corrected as well, though there are still obvious variances with θ after the correction.

We attribute the success of the model in recovering these various statistics to our unique one-to-one approach and the halo matching procedure we devised for creating the training data set. Indeed, there seems to be enough environmental information encoded by the matching such that the designed environmental halo features |$\delta ^{\rm M}_h$| were not fundamental for the RFs and are therefore discarded in our fiducial model. We also find that the halo discarding procedure does not need to be perfect given that interchanging a pair of low mass, low-resolution haloes is unlikely to change the clustering very significantly.

We highlight that our approach is advantageous in terms of hardware requirements when compared with models based on deep learning techniques applied to mesh-based data. The RF is able to take halo features agnostic to the halo’s position, meaning that it is scalable and independent on mesh size or resolution. None the less, an RF of the characteristics we found to give good predictions requires a large amount of RAM (∼60 Gb in this study). In addition, our model seems to be robust to random initialization.

Further work is needed to devise a similar model that is able to use Rockstar (Behroozi, Wechsler & Wu 2013) halo features. Moreover, modern graph-based architectures are an interesting direction to be explored given the spatial structure of our data. Low-resolution realizations may be created with a faster mesh-based N-body method such as FastPM (Feng et al. 2016), in which case the computational gain from a improved resolution method like ours will be even more significant.

ACKNOWLEDGEMENTS

DFS acknowledges support from the Swiss national Science Foundation (SNF) grant 200020_175751. GY and SRT acknowledge financial support from the Ministerio de Ciencias Innovación y Universidades (MICIU)/Fondo Europeo de Desarrollo Regional (FEDER) (Spain) under project grant PGC2018-094975-C21.

The Universe N-body Simulations for the Investigation of Theoretical models from galaxy surveys (UNIT simulations) used in this work have been done in the MareNostrum Supercomputer at the Barcelona Supercomputing Center (Spain), thanks to the CPU time awarded by the Partnership for Advanced Computing in Europe (PRACE) under project grant number 2016163937. Friends-of-Friends catalogues have been calculated at the Leibniz Supercomputing Centre of the Bavarian Academy of Sciences and Humanities (LRZ) Munich within the project pr74no.

DATA AVAILABILITY

The halo catalogues will be publicly available through the UNIT simulation website http://www.unitsims.org.