-

PDF

- Split View

-

Views

-

Cite

Cite

Ilya N Pashchenko, Using the bootstrap to assess uncertainties of VLBI results – I. The method and image-based errors, Monthly Notices of the Royal Astronomical Society, Volume 482, Issue 2, January 2019, Pages 1955–1965, https://doi.org/10.1093/mnras/sty2654

Close - Share Icon Share

Abstract

Very long baseline interferometric (VLBI) observations of quasar jets enable one to measure many theoretically expected effects. Estimating the significance of observational findings is complicated by the correlated noise in the image plane. A reliable and well justified approach to estimating the uncertainties of VLBI results is needed as well as significance testing criteria. We propose to use the bootstrap for both tasks. Using simulations we find that bootstrap-based errors for the full intensity, rotation measure, and spectral index maps have coverage closer to the nominal values than conventionally obtained errors. The proposed method naturally takes into account heterogeneous interferometric arrays (such as Space VLBI) and can be easily extended to account for instrumental calibration factors.

1 INTRODUCTION

Very long baseline interferometry (VLBI) is a powerful method for exploring the most compact emitting celestial objects. It allows one to investigate the structure of relativistic quasar jets (e.g. Britzen et al. 2010; Molina et al. 2014), their magnetic fields (e.g. Hovatta et al. 2012; Cohen et al. 2014, 2015; Kravchenko, Kovalev & Sokolovsky 2017), particle content (e.g. Homan et al. 2009) etc. with the highest angular resolution (Gómez et al. 2016). Typical VLBI results are images (distributions of Stokes parameters or their combinations, e.g. fractional linear polarization, spectral index or rotation measure (RM) maps) or direct models of the interferometric visibilities, which are conventionally represented by several simple components (e.g. circular or elliptical Gaussians, Pearson 1999).

Despite the great wealth of information obtained using VLBI, the uncertainties of the VLBI results, their robustness and statistical significance remain an open issue. This is because the procedure for VLBI data processing is very complex and includes numerous non-linear transformations. Reliable uncertainty estimates are crucial for testing the significance of the obtained results. At the same time even the most recent Space VLBI results with the highest angular resolution (e.g. Giovannini et al. 2018) were obtained using an imaging algorithm that lacks an uncertainty output.

In this paper we propose to use the bootstrap – a well known method of assessing the uncertainties – to estimate the errors in the VLBI images and their combinations.

In Section 2 we review conventional methods for estimating errors, their possible shortcomings, and those earlier studies that attempted to address these issues. In Section 3 we describe the methodology, present the algorithm for the VLBI case, and outline the possibilities for extending it. In Section 4 we test the method using simulations with artificially generated data sets and compare our results with the conventional method for estimating image errors. In Section 5 we discuss the limitations and advantages of using the bootstrap for estimating uncertainties of VLBI results. We summarize our findings in Section 6. We apply a real-life test to analyse the significance of RM gradients in Appendix A.

2 CONVENTIONAL METHODS

2.1 Quantifying the image uncertainty

An interferometer measures the Fourier components of the spatial spectra of the source (i.e. interferometric visibility function or visibilities) at certain points in the spatial frequency plane ((u, |$v$|)-plane). To obtain the image of the source one needs to deconvolve the Fourier transform (FT) of the observed visibilities (dirty image). The most widely used deconvolution algorithm is CLEAN (Högbom 1974, and also its modern modifications, e.g. Clark CLEAN, Clark 1980; multi-scale CLEAN, Wakker & Schwarz 1988). It effectively fits the observed visibilities with the FT of a number of delta functions (Schwarz 1978). The output of the algorithm is the model of the observed visibility function consisting of a list of delta functions in the image plane (called CLEAN components, |$\rm CC$|). Its Fourier transform equals the observed visibilities within errors.

Due to using point sources for representing the source structure CLEAN does extrapolation in the (u, |$v$|)-plane. Briggs (1995) found that this extrapolation is done noticeably incorrectly. To exclude the information obtained from extrapolated high spatial frequencies the CCs are convolved with a Gaussian beam. The result of the convolution is called a CLEAN image. The residual image left after deconvolution is traditionally added back to the CLEAN image to account for possible uncleaned flux and to estimate the noise (Briggs 1995).

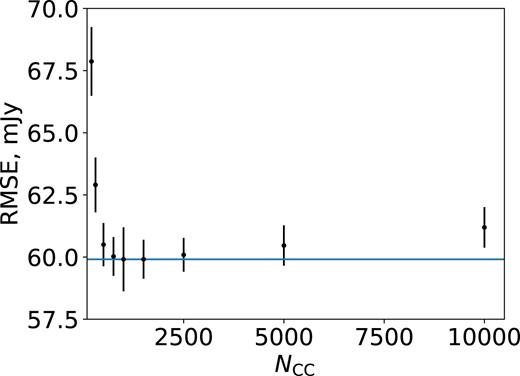

Cross-validation (CV) score versus number of CLEAN iterations for source 0055+300.

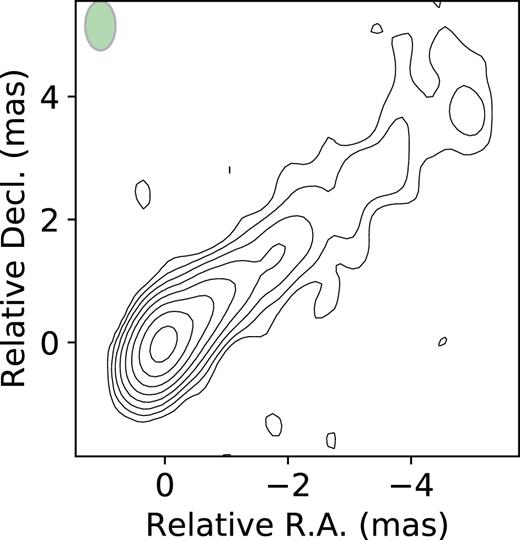

CLEAN image of source 0050+300 obtained using the number of iterations suggested by CV. The green ellipse in the image shows the synthesized beam. Contours are plotted with a factor of 2. The lowest contour value is 1.9 mJy beam−1.

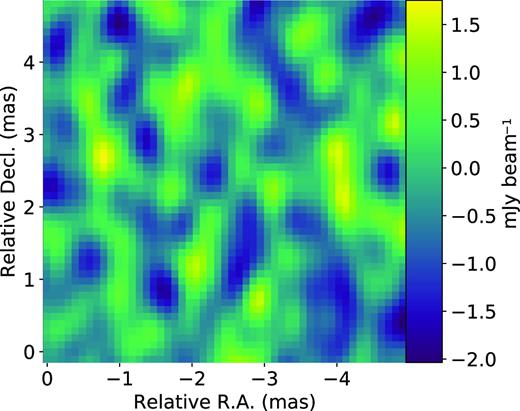

Part of the residual image at a distance of 1 arcsec from the CLEANing window. The green ellipse in the image shows the synthesized beam.

2.2 Quantifying the uncertainty of combinations of images

Spectral index

Multifrequency VLBI observations of AGN jets provide important information on the physics of the outflows. Maps of the spectral index α, where the spectral flux Sν observed at the frequency ν is Sν ∝ να, can be used to constrain the nature of the jet components (e.g. Hovatta et al. 2014) or the VLBI core (Lisakov et al. 2017), jet magnetic field configuration and acceleration mechanism of the emitting particles (Clausen-Brown, Lyutikov & Kharb 2011). When calculating the uncertainties of the spectral index distribution the correlated noise in the image plane is generally ignored (Fromm et al. 2013). However, it could be the source of fake patterns in the spectral index distribution and thus should be taken into account.

Significance of transverse rotation measure gradients

Transverse RM gradients were predicted for jets carrying a helical magnetic field (Blandford 1993) and successfully discovered by Asada et al. (2002) in source 3C 273. Subsequent works (Gabuzda, Murray & Cronin 2004; Contopoulos et al. 2009) claimed detection of many RM gradients. The failure to detect RM gradients in even well resolved quasar jets and the results of simulations with physical models of the helical magnetic fields (Broderick & McKinney 2010) resulted in Taylor & Zavala (2010) proposing a set of criteria for the significance of the RM gradient. The most stringent requirement put forward was that a significant RM gradient should be detected with a length not smaller than three ‘resolution elements’ (usually considered as three beamwidths).

It was noted in Mahmud et al. (2013) that the criteria proposed by Taylor & Zavala (2010) are too conservative and they, therefore, increase the significance of the RM gradient detections by decreasing the statistical power (i.e. probability of detecting the true RM gradient). The influence of noise on the significance of the observed RM gradients was investigated in Hovatta et al. (2012). Simulations with artificial sources with RM = 0 (i.e. unaffected by Faraday rotation) allowed Hovatta et al. (2012) to estimate the false positives rates (FPR) for detecting RM gradients with their observational set-up. They concluded that with FPR nearly equal to |$1{{\ \rm per\ cent}}$| one can use criteria such as two beamwidths as a minimal gradient length and 3 σ as a minimal change of RM value, where σ is the mean RM error at the endpoints of a transverse slice of a jet.

However, the proposed criteria could be criticized in several ways. First, a non-local hypothesis test of the RM gradient significance depends only on the two endpoints of the corresponding RM slice. It is desirable for the test to account for all the data. Secondly, the endpoints used in the test depend on the chosen clipping level of a polarized flux. Finally, further we show that the rms-based approach to estimating the error in the pixel flux results in statistically non-optimal estimates.

Moreover the proposed criteria is only applicable to the distribution of the polarized flux used in simulations. This null hypothesis model consists of the distribution of Stokes parameters Q and U equal to some constant fraction of the Stokes I distribution. The real distribution of the polarized flux is actually unknown due to the uncertain RM contribution, noise, and convolution with the beam. While it seems natural to assume a constant RM distribution in the simulations that estimate FPR, the possible dependence of the RM estimates on the true (i.e. at infinite frequency) polarized intensity could result in an unaccounted bias in the FPR estimate. To partially overcome this one should use the null model of the polarized flux that is equal to the estimated one (i.e. corrected for the measured RM).

With these caveats in mind it is desirable to formulate a well justified procedure for estimating the significance of the RM gradients that relies only on the data at hand, uses them all, and is free of subjective criteria. In Section 4.4 we propose a criterion for a statistically significant RM gradient that addresses these issues.

3 METHOD

The bootstrap (Efron 1979) is a kind of resampling method (Feigelson & Babu 2012) that is used mainly for assessing the accuracy of estimates. It is a robust alternative to the asymptotic parametric methods when their assumptions could be violated or when it is difficult or impossible to obtain a closed-form analytical expression for uncertainty estimates. The main idea of the bootstrap is to approximate the process of data generation and to explore the sampling properties of the estimate of interest using a large number of artificially generated data sets.

We follow Efron & Tibshirani (1994) in describing the bootstrap and different bootstrapping methods. In general one observes some data |$\mathbf {y}$|, (yi, |$i=\overline{1, N}$|) – the vector of the measured data values – and estimates some statistic (that is a function of the data) of interest |$s(\mathbf {y})$| and its associated uncertainty. There is an underlying probability mechanism P (or data-generating process, DGP) that produces the measured data, e.g. |$\mathbf {y} = f(\mathbf {x}, \mathbf {\theta }) + \mathbf {\epsilon }$|, where |$f(\mathbf {x}, \mathbf {\theta })$| is some underlying unknown model with a vector of covariates |$\mathbf {x}$|, e.g. points where data are measured, |$\mathbf {\theta }$| is a vector of model parameters and |$\mathbf {\epsilon }$| is the model of noise (e.g. independent and identically distributed Gaussian noise). One estimates |$s(\mathbf {y})$| using e.g. best-fitting values of the model parameters: |$\skew6\hat{\mathbf {\theta }} = s(\mathbf {y})$|. In general the algorithm used to estimate |$s(\mathbf {y})$| could be some highly non-linear algorithm or even a computer algorithm (Chernick 2007).

The bootstrap allows one to estimate the error of |$s(\mathbf {y})$| by approximating the data-generating probability mechanism P and simulating the observed data many (B) times with the approximated DGP, each time obtaining the so-called bootstrap sample|$\mathbf {y}_{\mathrm{boot}}$|. Then each of the generated bootstrapped samples is used to estimate the desired statistic |$s(\mathbf {y}_{\mathrm{boot}})$|. Their distribution is used to estimate the uncertainty of the statistic s for the original (i.e. the observed) sample |$s(\mathbf {y})$|.

Thus the bootstrap consists of two main approximations: approximation of the probability mechanism P; and approximation due to the finite number B of bootstrapped samples. The latter is ∝B−0.5 and can be made negligible relative to the former (Efron & Tibshirani 1994). Depending on the approximation used the bootstrap is called a non-parametric or ‘pairs’ bootstrap if (2) is used (originally introduced by Efron 1979), a residuals bootstrap if (3) is used, and a parametric bootstrap if (4) is used. These definitions reflect the way in which bootstrap samples are generated under the selected approximation of the data-generating process. In the pairs bootstrap the whole observations |$\mathbf {y}_{\mathrm{obs}}$| are resampled from the corresponding |$F_{y_{\mathrm{obs}}}$|, where |$\mathbf {y}_{\mathrm{obs}}$| can include covariates in the regression problem. Because each of the N observed data points has a probability of 1/N, resampling occurs by sampling with replacement from the original data set N times. The pairs bootstrap is the most robust to model misspecification because its approximation of the data-generating process (2) does not make any assumptions about the true model.

In the residuals bootstrap the data set is replicated by sampling with replacement from the empirical distribution of the residuals between the observed data and fitted model and then followed by adding the obtained residuals to the model predictions. The residuals bootstrap assumes that Fε does not depend on covariates i.e. the residuals are homoscedastic. However, model P could be modified to account for the heteroscedasticity of the residuals, i.e. their dependence on covariates (Efron & Tibshirani 1994; Davison & Hinkley 1997). The residuals bootstrap is more sensitive to a model-specification error than the pairs bootstrap. Nevertheless, the model does not have to fit the observed data perfectly to give a reasonable result (Efron & Tibshirani 1994). However, if it is a good approximation then the residuals bootstrap delivers more efficient uncertainty estimates than the pairs bootstrap.

Conventional methods like least-squares fitting basically make the same assumptions as the parametric bootstrap,6 but some methods do not have a closed-form expression for error estimates (e.g. non-linear regression or some sophisticated computer algorithm). If the model approximates DGP well then the parametric bootstrap delivers the most efficient error estimates. According to the ‘bias-variance trade-off’ this comes at the cost of the biased error estimates when the model used is a bad approximation to the true model underlying the DGP (see Section 5.1).

3.1 Bootstrap for VLBI data

In the case of VLBI observational data |$\mathbf {y} = \mathbf {V}_{\mathrm{obs}} = (V_{\mathrm{obs},i}),$||$i=\overline{1, N_{\mathrm{obs}}}$|, where |$\mathbf {V}_{\mathrm{obs}}$| are Nobs measurements of the visibility function at certain points |$\mathbf {x} = (\mathbf {u}, \mathbf {v}) = (u_i, v_i)$| of the (u, |$v$|)-plane. The uncertainty of some visibility data statistic |$s(\mathbf {V}_{\mathrm{obs}})$| is estimated using traditional methods, i.e. image deconvolution, direct visibility modelling, where s could be the flux of certain pixel(s) in the CLEAN image, parameters of a simple model fitted directly to the interferometric visibilities |$\mathbf {V}_{\mathrm{obs}}$| etc. Thus, in general, a certain function with parameters |$\mathbf {\theta }$| is ‘fitted’ to the observed visibilities |$\mathbf {V}_{\mathrm{obs}}$| by some algorithm. To bootstrap the visibility data one has to decide which approximation from (2)–(4) to use. Resampling visibilities with their (u, |$v$|)-points, i.e. using approximation (2), could result in biased estimates due to the degraded resolution. It is expected that e−1 (36.8 per cent) of data points will not get into a single bootstrapped data set (Hastie et al. 2001). Thus the resampling procedure that corresponds to (2) effectively reduces the coverage of the (u, |$v$|)-plane in every single bootstrapped sample.

To avoid the problem of different coverage of the (u, |$v$|)-plane one can use approximation (3) and resample the residuals between the observed and model visibilities. This resampling scheme keeps the (u, |$v$|)-coverage for each of the bootstrapped samples (and, thus, its informational content, Davison & Hinkley 1997) the same as for the original data set. Thus P in VLBI observations could be approximated by |$\skew5\hat{P} = (\skew6\hat{\mathbf {\theta }}, \skew5\hat{F}_{\epsilon })$|, with |$\mathbf {V}_{\mathrm{obs}} = f((\mathbf {u}, \mathbf {v}), \mathbf {\theta }) + \mathbf {\epsilon }$|, where |$f((\mathbf {u}, \mathbf {v}), \mathbf {\theta })$| is the model fitted by some algorithm7 (e.g. a deconvolution algorithm), and |$\skew6\hat{\mathbf {\theta }}$| are the estimated parameters of the model (e.g. a list of CLEAN components for CLEAN-deconvolution, a set of Gaussians for direct fitting of the visibility measurements). |$\skew5\hat{F}_{\epsilon }$| is the empirical distribution of the residuals between the observed visibilities and model values. If |$\skew5\hat{F}_{\epsilon }$| may be approximated by some parametric form, e.g. Gaussian, one can go further and assume a fully parametric approximation (4).

As already noted, (3) requires that the residuals distribution should not depend on covariates (ui, |$v$|i). For VLBI observations different baselines of the interferometer may have different sensitivities and this is especially true for Space VLBI. Thus one should alter the model of P to include the distribution of the residuals on different baselines individually |$\skew5\hat{P} = (\skew6\hat{\mathbf {\theta }}, \skew5\hat{F}_{\epsilon , j})$|, where |$j=\overline{1, N_{\mathrm{baselines}}}$| is the baseline index. This effectively assumes a different value of noise on different baselines. Baseline-independent resampling of residuals could bias the resulting uncertainty estimates in the case of a single ‘noisy’ baseline that supplies residuals to other more sensitive baselines in the bootstrapped data sets.

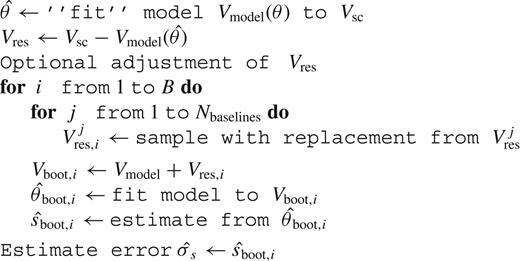

3.2 Implementation

Taking into account the results of Section 3.1, the algorithm of the error estimation for certain visibility data statistics can be specified as follows (see Algorithm 1 for a schematic representation). First, one obtains self-calibrated visibilities Vsc and parameters |$\skew6\hat{\theta }$| of the model fitted to visibilities. The estimate of interest is often some function of the model parameters (e.g. flux of a pixel(s) in the CLEAN image or position of a single Gaussian component). Then one calculates the residuals Vres between the observed and model visibilities. For each baseline the residuals are optionally adjusted, e.g. filtered from outliers and fitted with some probability density model (see Section 3.3). Then the bootstrapped data set is created B times by sampling with replacements from the residuals (or from their density estimate) on each baseline and adding the obtained resamples to the model visibilities. The bootstrap data sets Vboot,i are analysed in exactly the same way as the observed one. The obtained distribution of the statistic of interest s(Vsc) is used to estimate its uncertainty |$\skew5\hat{\sigma }_{s}$|.

Bootstrapping self-calibrated visibility data Vsc using model Vmodel (with parameters θ) to estimate the uncertainty of some statistic s(Vsc)

3.3 Modifications

If the distribution of the residuals between the observed and model data can be approximated by some parametric density (e.g. Gaussian or t-distribution), then one can repeatedly draw samples from the fitted distribution instead of directly sampling from the raw residuals. This effectively uses the parametric bootstrap (4). One can also fit some non-parametric model to the residuals distribution on each baseline, e.g. kernel density estimate (KDE) or Gaussian mixture model (Hastie et al. 2001). Finally, one can just use raw residuals and resample from them. The advantage of using the more constrained model of the residuals is that it results in more effective error estimates in the case of a good approximation of the underlying DGP. Conversely, if the model is clearly wrong, using the parametric bootstrap introduces a bias to the resulting errors. As discussed above, the resampling step should be done on a per-baseline basis, especially if the sensitivities of the individual baselines are different. Heteroscedastic residuals within the individual baselines could be treated using the ‘wild’ bootstrap (Wu 1986).

Our implementation consists of the following steps. First, we search for outliers on each baseline, frequency band, and visibility hands8 separately using the DBSCAN algorithm (Ester et al. 1996). We treat 1D residuals of the real and imaginary parts of self-calibrated visibilities as well as 2D residuals on the complex plane as features for a DBSCAN algorithm. DBSCAN is a density-based clustering algorithm that marks points as outliers if they are isolated in low-density regions. The KDE of the outlier-filtered residuals distributions for real and imaginary parts are obtained.9 The width of the KDE Gaussian kernel is found using five-fold cross-validation (Hastie et al. 2001). Only positively weighted visibility measurements were used during this procedure.

As already noted, this scheme could be extended to account for instrumental effects, e.g. self-calibration errors. In that case the model f underlying DGP will include gains of the antennas as parameters. In a similar manner the influence of any other instrumental effect (e.g. polarization leakage or overall amplitude calibration offset) on the uncertainty estimate can be accounted for if its value can be estimated.

4 COMPARING BOOTSTRAP-BASED ERRORS TO CONVENTIONAL ERRORS BY USING SIMULATIONS

4.1 Coverage

Recall that by definition the confidence interval (CI) is a region that contains the ‘true’ value of the parameter of interest (e.g. flux of a pixel) with a specified frequency or confidence level (Wasserman 2010), say 95 |${{\ \rm per\ cent}}$|. This means that the confidence interval is supposed to contain the true value of the parameter with frequency equal to 0.95 under the repeated constructions of CI using independent observational data sets. But if some assumptions underlying the procedure used to build CI are violated, e.g. data sets are not normally distributed for the asymptotic CI of the mean, then the fraction of CIs that contain true values could differ from the nominal value that is described by the confidence level (0.95 in this case). The coverage probability or coverage of the interval estimate of a parameter (e.g. confidence interval) is the long-run probability for this estimate to contain the true value of the parameter. For VLBI observations the coverage of the CI for the pixel flux could be represented as follows. The source is observed with VLBI and the resulting data set is calibrated and imaged with the CLEAN algorithm. By using a certain procedure CI for the pixels, the flux is obtained. Then the same source is observed again many times with the same VLBI array (with the same sensitivity, time interval, and (u, |$v$|)-plane coverage). The source is assumed to be stationary. Then the coverage of the CI for the pixels flux is the fraction of cases where those CIs contain true values. The same applies for any other function of the observed data, e.g. the size of the fitted Gaussian component or the shift between images obtained at two frequencies. As the definition of coverage involves the notion of the true value of the parameter, simulations with a known model are required to assess the coverage.

Optionally the 1 − α confidence interval (that is, the CI with a 1 − α confidence level) should have coverage 1 − α, where α is the significance of the corresponding hypothesis test, i.e. the Type I error rate. The actual coverage could depart from the nominal value if some assumptions that are used in the error estimation procedure are broken. If the coverage is less then the nominal one, then the statistical significance of the results based on such confidence regions will be overestimated. This situation could result from biased estimates or from errors that are too small. Otherwise, if the coverage is higher than the nominal value, the errors are overestimated and the statistical power of the corresponding hypothesis test (with the null hypothesis being that the parameter equals some specified value) decreases.

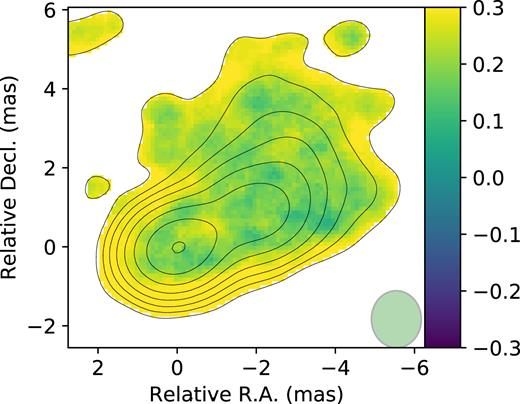

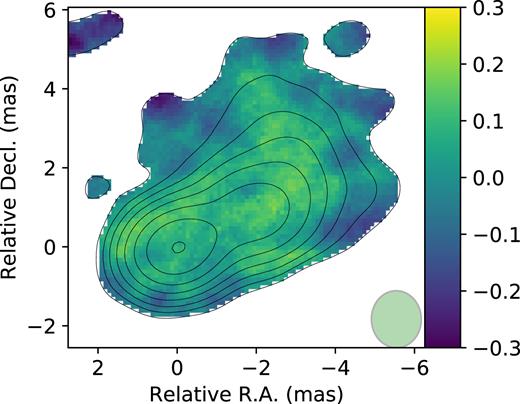

4.2 Stokes I intensity images

In order to compare the coverages of the CI for the CLEAN image pixel flux constructed using the bootstrap and conventional (rms-based) approaches we conducted simulations with a known model. We selected two AGN radiosources with different declinations to compare the results for different (u, |$v$|)-coverages: 1749+701 and 1514−241. We fetched self-calibrated data from the MOJAVE (Lister et al. 2009) database (8.1 GHz X-band and epochs 2006 April 5 and 2006 April 28) and used the corresponding CLEAN models as ‘ground truth’ models. Then we created a sample of 100 simulated observations by adding noise to model visibilities with the value estimated on each baseline of the original data sets. Finally we deconvolved the resulting artificial data sets using CLEAN and obtained two maps of the coverage of the confidence intervals for the pixels’ flux: one for bootstrap-based errors and one for conventional rms-based errors. We followed the procedure described in Section 3.3 using B = 100 bootstrap replications for each data set of the simulated sample to obtain the bootstrap-based errors. The resulting maps of the coverage for 68 |${{\ \rm per\ cent}}$| confidence intervals are shown for source 1749+701 (Figs 4 and 5 for rms- and bootstrap-based errors) and source 1514−241 (Figs 6 and 7). Colours show the deviation of the coverage from the nominal value (0.68 in this case). A positive value means that the coverage is higher than the nominal one. Two facts are apparent. First, the coverage is non-uniform, especially for rms-based errors. Secondly, rms-based errors are substantially over-covered relative to the nominal value. Bootstrap-based errors are closer to the nominal coverage and more uniform, especially in the outer parts of the source structure with relatively low signal-to-noise ratio (SNR).

Image of deviations from the nominal coverage of the rms-based 68 |${{\ \rm per\ cent}}$| confidence interval for Stokes I for 1749+701. The green ellipse in the image shows the synthesized beam.

Image of deviations from the nominal coverage of the bootstrap-based 68 |${{\ \rm per\ cent}}$| confidence interval for Stokes I for 1749+701.

Image of deviations from the nominal coverage of the rms-based 68 |${{\ \rm per\ cent}}$| confidence interval for Stokes I for 1514−241.

Image of deviations from the nominal coverage of the bootstrap 68 |${{\ \rm per\ cent}}$| confidence interval for Stokes I for 1514−241.

4.3 Rotation measure maps

Extending the bootstrapping approach to the problem of estimating the uncertainty of a non-linear combinations of images is straightforward.

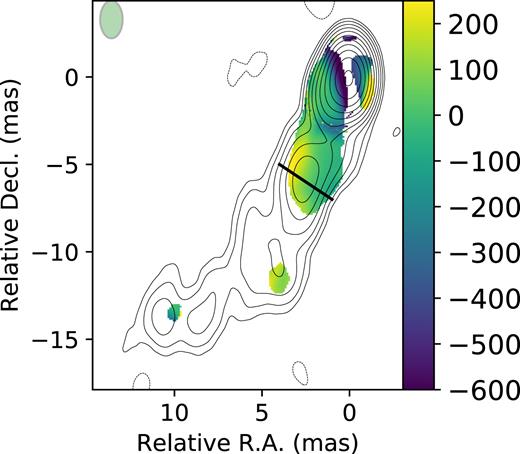

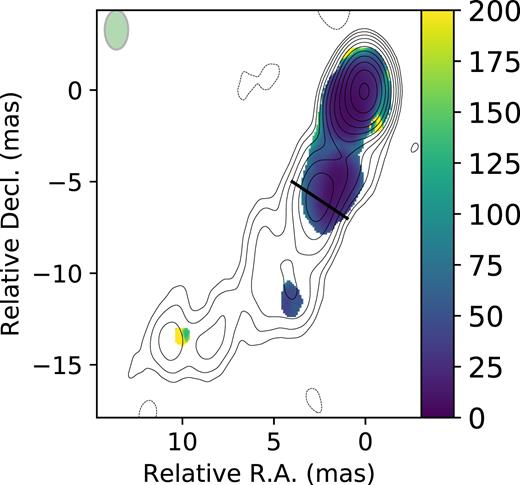

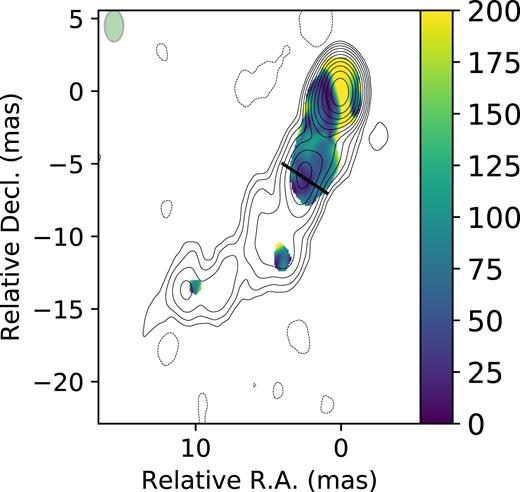

The rotation measure (RM) map of the source 2230+314 and the map of the 68 per cent CI of RM obtained using the bootstrap are shown in Figs 8 and 9 respectively. Errors given by the asymptotic covariance matrix (Hovatta et al. 2012) are presented in Fig. 10. The contribution from the absolute electric vector position angle (EVPA) calibration error is not included to enhance the difference between both approaches.

Rotation measure (RM) map overlaid on Stokes I contours for source 2230+314. The green ellipse in the image shows the synthesized beam. The colour bar is in |$\rm rad\,m^{-2}$|.

Bootstrap error distribution for the RM map presented in Fig. 8.

Conventional error distribution for the RM map presented in Fig. 8.

When comparing bootstrap-based (Fig. 9) and conventional (rms-based, Fig. 10) errors the most striking difference appears in the core region. While the former are minimal here (nearly 10 |$\rm rad\, \rm m^{-2}$|), rms-based errors of up to 150 |$\rm rad\, \rm m^{-2}$| are observed in the core, which is nearly two times as large as the error in the low-SNR jet region. This counter-intuitive fact could be understood as the result of the broken linear dependence between the linear polarization positional angle (PPA) and the squared wavelength in the core region. Indeed, part of this region is masked by the procedure that compares χ2 values with the critical value for the given number of degrees of freedom as described in Hovatta et al. (2012). That means that the linear model used in the fit is a poor approximation of the observed λ2-dependence. Asymptotic errors from the covariance matrix are useless in a situation with evident model-specification bias because they can estimate neither the variance (the method’s assumptions are violated) nor the bias (one must know the true model to estimate the bias).

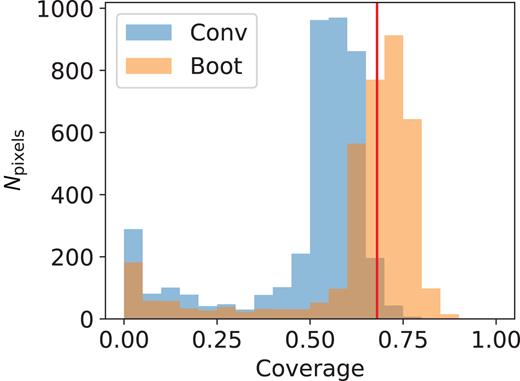

We also conducted calibration tests to compare the coverages of the conventional and bootstrap-based errors. We used the model of the inhomogeneous jet from Blandford & Königl (1979) and MOJAVE (Lister et al. 2009) real data at 8.1, 8.4, 12.1 and 15.4 GHz for source 1458+718 at epoch 2006 September 6. We transformed model images to the (u, |$v$|)-plane at the observed (u, |$v$|)-points and added noise estimated from real data sets at each frequency band. We used a constant fraction (0.2) of the Stokes I image as a model for Stokes Q and U. Using different realizations of noise we created a sample of artificial sources. For each source the RM map as well as its uncertainty map were obtained using the approach10 of Hovatta et al. (2012). We also calculated the uncertainty maps using B = 100 bootstrap replications of each artificial data set by resampling the residuals in the (u, |$v$|)-plane (as described in Section 3.3). Then coverage maps were created for both types of uncertainties and the true model. The last one was obtained from the model distribution of Blandford & Königl (1979) in the same way as our artificial sample but with the noise added that equals a small fraction (0.01) of the observed value. The histograms of the coverage across the source are presented in Fig. 11. The red line shows the nominal coverage (0.68). It is apparent that bootstrap-based errors have coverage closer to the nominal one and rms-based errors are under-covered.

Histogram of pixel coverage distribution for the RM errors obtained using simulations described in Section 4.3. Both bootstrap-based errors and conventional errors are shown. The red vertical line shows the nominal coverage.

4.4 Significance of the RM gradients

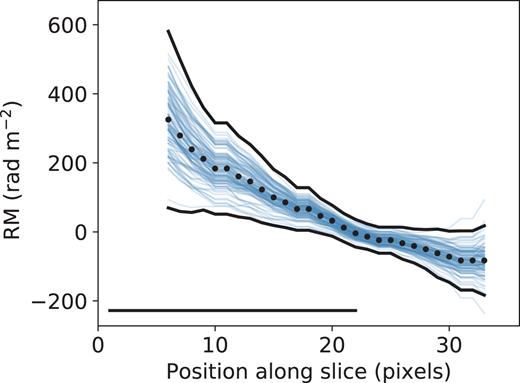

The significance of the RM gradient could easily be tested statistically once reliable estimates of the uncertainties are obtained. Recall the duality between confidence intervals and hypothesis testing (Lehmann & Romano 2005). Using bootstrap replications of the RM image one can build so-called simultaneous confidence bands (SCB, Lenhoff et al. 1999) for the estimated RM profile in any slice of the RM map (e.g. Fig. 12). It is important to use simultaneous confidence bands to avoid the problem of multiple comparisons. This situation arises while testing several statistical hypotheses simultaneously and results in an overestimated significance. Any RM curve that can be embraced by an SCB is consistent with the observed data at the selected level of significance α (e.g. 0.05 for |$95{{\ \rm per\ cent}}$| CB). Thus, we can reject the null hypothesis that the RM gradient is absent when the SCB fails to embrace at least one horizontal line.11 Here the horizontal line represents the absence of the RM gradient. In other words SCB must be pierced by at least one horizontal line from both sides for the corresponding RM gradient to be statistically significant. By using the simultaneous CB in the criterion we require that the horizontal line will be embraced by the corresponding CIs in each point of the slice. Thus the number of simultaneously tested hypotheses equals the number of slice points. Using pointwise CB consisting of the CIs of the individual slice points could then result in a situation where the horizontal line misses a particular CI simply by chance. With the slice consisting of n points and a single point CI with 1 − α confidence level the probability of the occurrence of at least one such point is 1 − (1 − α)n. For a typical quasar jet width n ∼ 10 and the conventional value of 1 − α = 0.95 this probability is ∼0.4, which is much less then the specified confidence level (0.95).

Measured values of RM along a transverse slice of the 2230+114 jet (shown as a black line in Fig. 8) with a simultaneous confidence band made from 100 bootstrap realizations. The straight black line in the slice show the synthesized beam.

The criteria for the significant RM gradient used in Hovatta et al. (2012) depend on a user-specified level at which PPA images are clipped before calculating the RM map. If clipping is done at a different level the errors at the slice edges will change accordingly and the criteria of Hovatta et al. (2012) could give different results in low signal-to-noise jet regions.12 Given the proposed criterion of a significant RM gradient one does not need to clip PPA images before producing RM maps. At the source-free part of the RM image the SCB of the RM profile will be wide because only noise contributes to the measured RM. However, the criterion concerns the regions of the SCB that are steep and/or narrow enough to be pierced by a horizontal line. Therefore it is free from the crude step of choosing the width of the transverse slice with a hypothetical RM gradient. We would like to note that the proposed criterion does not depend on the number of resolution elements spanned by the transverse RM slice. Indeed, the effective resolution depends on the SNR and cannot be the same across the source.

4.5 Spectral index maps

We conducted calibration tests for spectral index maps using the same procedure as for the rotation measure maps (see Section 4.3). The spectral index in each pixel was calculated by fitting a single power law to all four frequency bands. We calculated conventional errors following Hovatta et al. (2014) but without the contribution of the uncertainty of the amplitude calibration. The results are presented in Fig. 13. The coverage of bootstrapped-based errors is closer to the nominal value and is more uniform.

Histogram of pixel coverage distribution for spectral index errors obtained using simulations described in Section 4.5. Both bootstrap-based errors and conventional errors are shown. The red vertical line shows the nominal coverage.

5 DISCUSSION

5.1 Possible limitations of applying the bootstrap to VLBI

With the assumptions and approximations implied by the method and presented in Section 3 we can identify the following cases in which application of the bootstrap to VLBI data should be done with caution:

Model is clearly inconsistent with data. When the model is clearly inconsistent with the data the distribution of the residuals in the uv-domain will depend on the uv-point inside a single baseline, thus violating the assumption of their independence. Using the method blindly will then result in biased errors of the model parameters.13 Thus one should check the model before using the method to be sure that the obtained uncertainty estimates are reliable. If the model is clearly inconsistent with the data the best that one can do is to use the fully parametric bootstrap and resample not the modified residuals but the Gaussian noise with the variance estimated from the observed visibilities. One can use e.g. the successive differences approach (Briggs 1995) or Stokes V visibilities that are supposed to have a source signal at a level of only tenths of a per cent for quasar jets (Vitrishchak et al. 2008).

Simple model fitted to complex brightness distributions. Using models that are too simple to account for the observed brightness distribution brings issues similar to those mentioned above. But we would like to distinguish this case in a separate item to highlight the consequences of fitting simplistic models to complex brightness distributions. The squared error of the model prediction can be decomposed into a sum of the variance (random or estimation error) and the bias (systematic or model approximation error) squared.14 The variance shows how stable the model predictions are when one uses different data sets for fitting the model. The bias represents the error due to using a simplistic function to approximate the intrinsically more complex relation. A model that is clearly too simple to account for the observed brightness distribution will have significant bias but, at the same time, its variance will be small relative to the bias due to the ‘bias–variance trade-off’ (Hastie et al. 2001). The bootstrap allows one to estimate the random errors of the model parameters, i.e. errors that are due to the model variance and not to the bias. Thus the model parameters could be highly constrained by the data (i.e. reveal relatively small estimated errors) even if the model does not represent the observed data well. This is especially pronounced in the case of high-SNR data sets. The value of SNR at which the bias begins to dominate in the total error depends on the model used and the size of the data set. ‘Learning curves’15 (Raschka 2015) could be used to quantify the complexity of the model and to ensure that the bias is not a dominant factor in the total error; otherwise the errors estimated using the bootstrap will be underestimated. One can use e.g. the method described in Kenney & Gu (2016) to estimate the model approximation error in such cases using the bootstrap. We will discuss this in the context of estimating the errors of simple models fitted directly to the interferometric visibilities in a subsequent paper.

Small data sets.A problem may occur in snapshot observations when the residuals distribution is highly skewed. Then the observed residuals could poorly represent their population distribution. One can use the parametric bootstrap and sample the residuals from the Gaussian noise with the variance that has been estimated from the observed data.

Outlier visibility measurements.It is well known that a short-time amplitude error in the uv-domain results in ‘waves’ of erroneous flux in the image-domain (Ekers 1999). Using such outlier visibility in resampling would result in replication of the outliers in the bootstrapped data samples. Most of these outlier visibility measurements are flagged by attributing zero or negative weight to them. But when they have a positive weight one should take care not to consider outliers in the resampling or stick to a parametric bootstrap with robustly estimated noise.

5.2 Advantages

One of the main advantages of using the bootstrap is its wide applicability in assessing the uncertainties of VLBI results. The algorithm of error estimation is the same for both the deconvolved image and the non-linear combination of images or direct model of interferometric visibility. One does not need any other software except those used for image deconvolution/visibility fitting and that used to generate the bootstrapped data sets. In case of image-based estimates (VLBI maps and their non-linear combinations) the bootstrap allows one to account for the correlated and non-uniform noise in the image plane in contrast to the traditional rms-based approach. We also highlight the ability of the method to account for the inhomogeneous sensitivity of VLBI arrays, especially in the case of Space VLBI (e.g. RadioAstron, Kardashev et al. 2013). As already noted, the bootstrap can be extended beyond our implementation to account for self-calibration errors and other instrumental effects if these effects can be estimated. Finally the bootstrap can be used for hypothesis testing for any image or image combination structure, e.g. RM gradient.

6 CONCLUSIONS

The bootstrap is a well known and widely used method for assessing uncertainties in cases when the errors cannot be estimated analytically. However, up to now the bootstrap has been completely ignored by the VLBI community, despite the fact that many widely used algorithms of VLBI data processing lack uncertainty outputs. In this paper we have demonstrated that the bootstrap is a natural and efficient tool to estimate the uncertainties of the image-based statistics obtained using the inherently complex and non-linear VLBI data-processing algorithms. It also naturally accounts for inhomogeneous sensitivity arrays (e.g. Space VLBI).

We conducted simulations with artificially generated data sets and found out that the bootstrap provides error estimates for the images obtained using CLEAN and their combinations with better coverage than those obtained using traditional rms-based uncertainties. The bootstrap allows one to formulate the criterion of a statistically significant rotation measure gradient in a image slice that is free of irrelevant points such as the width of the gradient or RM errors at some locations of the slice.

Using MOJAVE multi-frequency VLBA data we confirm that there are statistically significant (at level α = 0.05) transverse RM gradients in jets of 0923+392, 0945+408 and 2230+114 (Appendix A). For 1641+399 the RM gradient is found to be statistically non-significant.

ACKNOWLEDGEMENTS

The author would like to thank the anonymous referees for their thoughtful comments, which resulted in numerous improvements in this paper. The author also thanks Evgenya Kravchenko, Mikhail Lisakov and Alexander Kutkin for useful discussions, Evgenya Kravchenko, Yury Yu. Kovalev and especially Dimitry Litvinov for reading the manuscript and constructive suggestions. This work is supported by a Russian Science Foundation grant 16-12-10481. This research has made use of data from the MOJAVE database that is maintained by the MOJAVE team (Lister et al. 2009).

This research made use of NASA’s Astrophysics Data System.

This research made use of astropy, a community-developed core Python package for astronomy (Astropy Collaboration et al. 2013), numpy (Van Der Walt, Colbert & Varoquaux 2011) and scipy (Jones et al. 2001). The matplotlib Python package (Hunter 2007) was used for generating all plots in this paper.

Footnotes

A dirty beam is the FT of the (u, |$v$|)-plane sampling function that equals one in the sampled points and zero elsewhere. A dirty image is the convolution of the true image with the dirty beam. Poor coverage of the (u, |$v$|)-plane results in a dirty beam with large sidelobes.

Cross-validation estimates the prediction performance of the model on the independent (not used in fitting) data set.

For example CLEAN faces difficulties representing the extended structure because the model consists of point sources (δ-functions).

This is shown to be significant for linear polarization (i.e. for Stokes parameters Q and U, but for Stokes I it should be small in most cases).

We will subsequently call this pixel rms.

In general the parametric bootstrap agrees with the maximum likelihood (Hastie et al. 2001).

The model can include instrumental parameters, e.g. antenna gains, not only the parameters that determine the source brightness distribution.

Parallel 〈RR*〉, 〈LL*〉 and cross-hand 〈RL*〉, 〈LR*〉 correlations, where R and L denote voltages from left and right circularly polarized feeds, the brackets indicate averaging and the star denotes a complex conjugate (Thompson, Moran & Swenson 2017).

The Scikit-learn Python package (Pedregosa et al. 2011) was used for these steps.

We have not taken such instrumental effects as D-terms and EVPA-calibration uncertainty into account because we are interested in the influence of the correlated noise. Nevertheless, our analysis can be easily extended as discussed in Section 3.3.

We note that this criterion should be considered only as an observational one. Other, i.e. physical criteria, should also be fulfilled (see e.g. Taylor & Zavala 2010). Thus the proposed criterion is necessary but not sufficient.

Quasar jets are typically linearly polarized at a level of 10 |${{\ \rm per\ cent}}$| (Lister & Homan 2005).

It should be noted that in some sense the pairs bootstrap (2) can be used as a proxy to estimate the model approximation error. Indeed, when the model is a bad approximation of the data the errors of the model parameters become larger. See also Kenney & Gu (2016) for using the bootstrap to estimate the model error.

There is also a contribution from the irreducible error due to noise.

That is, the dependence of the prediction performance of the model on the amount of data used to fit it.

REFERENCES

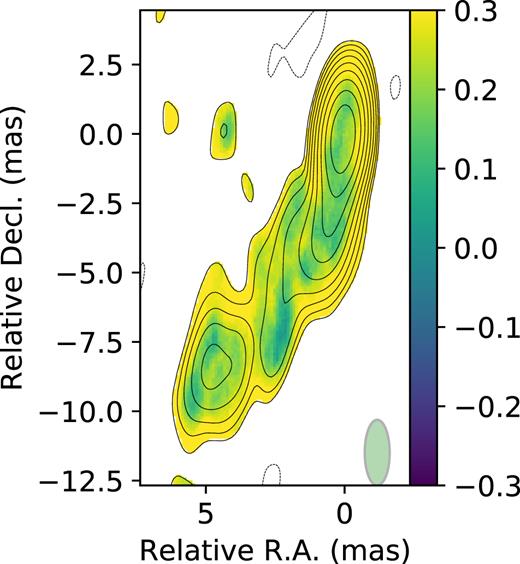

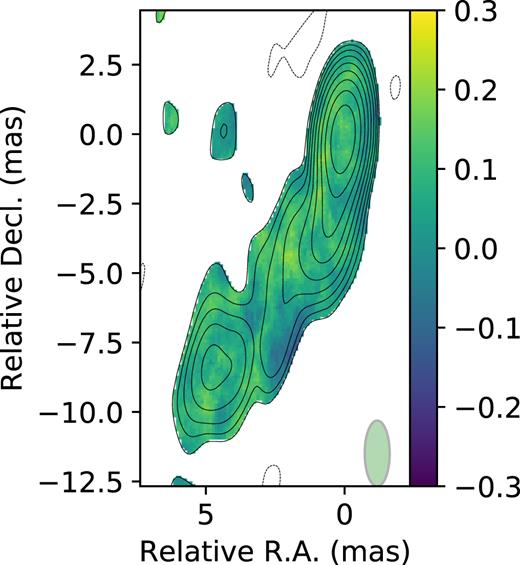

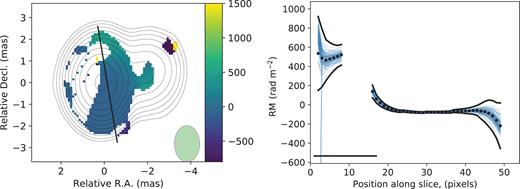

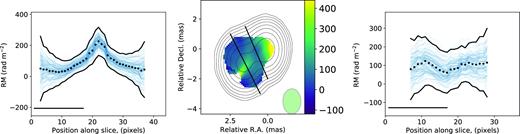

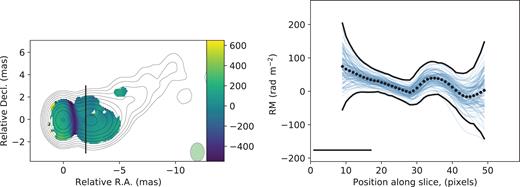

APPENDIX A: REANALYSIS OF THE SUSPICIOUS RM GRADIENTS

We have applied the proposed criterion (see Section 4.4) to estimate the statistical significance of RM gradients for several sources from Hovatta et al. (2012). These sources were considered as probable candidates for significant gradients, but did not meet the strict requirements stated by Hovatta et al. (2012). We found that there are statistically significant (at level α = 0.05, Section 4.1) transverse RM gradients in jets of 0923+392 (Fig. A1), 0945+408 (Fig. A2) and 2230+114 (Fig. 12). For 1641+399 (Fig. A3) the RM gradient is found to be statistically non-significant.

The rotation measure map (left) and its slice (right) with a significant transverse RM gradient for 0923+392. The green ellipse in the image and the straight black line in the slice show the synthesized beam. The colour scheme is given in |$\rm rad\,\, m^{-2}$|. The black dots show the observed RM values; the blue thin lines, bootstrap realizations and the black thick line, SCB. Note that five bootstrap realizations have exceptionally low RM values in the border point of the slice due to low SNR.

The rotation measure map (middle) and slices with significant (left) and non-significant (right) transverse RM gradients for 0945+408.

The rotation measure map and slice with non-significant RM gradient for 1641+399.