Phuong Nguyen, Melody K Schiaffino, Zhan Zhang, Hyung Wook Choi, Jina Huh-Yoo, Toward alert triage: scalable qualitative coding framework for analyzing alert notes from the Telehealth Intervention Program for Seniors (TIPS), JAMIA Open, Volume 6, Issue 3, October 2023, ooad061, https://doi.org/10.1093/jamiaopen/ooad061


Abstract

Combined with mobile monitoring devices, telehealth generates overwhelming data, which could cause clinician burnout and overlooking critical patient status. Developing novel and efficient ways to correctly triage such data will be critical to a successful telehealth adoption. We aim to develop an automated classification framework of existing nurses’ notes for each alert that will serve as a training dataset for a future alert triage system for telehealth programs.

We analyzed and developed a coding framework and a regular expression-based keyword match approach based on the information of 24 931 alert notes from a community-based telehealth program. We evaluated our automated alert triaging model for its scalability on a stratified sampling of 800 alert notes for precision and recall analysis.

We found 22 717 out of 24 579 alert notes (92%) belonging to at least one of the 17 codes. The evaluation of the automated alert note analysis using the regular expression-based information extraction approach resulted in an average precision of 0.86 (SD = 0.13) and recall 0.90 (SD = 0.13).

The high-performance results show the feasibility and the scalability potential of this approach in community-based, low-income older adult telehealth settings. The resulting coded alert notes can be combined with participants’ health monitoring results to generate predictive models and to triage false alerts. The findings build steps toward developing an automated alert triaging model to improve the identification of alert types in remote health monitoring and telehealth systems.

Lay Summary

Community-based telehealth care and telehealth in general can end up creating vast amounts of data from the technology used to monitor patients and the information that has to be transmitted (measurements, alerts). This can overwhelm nurses and doctors with information and take a lot of time to review as they try to provide high-quality care. Telehealth is helpful for older adults who benefit from being able to monitor their health closer to home. However, it is important that the information created from telehealth technology is as correct and accurate as possible and efficiently delivered to the telehealth providers. To achieve this goal, the first step is generating data called training data, which helps researchers develop an improved future system. To that end, we developed a coding method based on the information of 24 931 alert notes from a community-based telehealth program, which provides remote monitoring to low-income older adults in the northeast region of the United States. Our method helped to code the information from each participant’s check-in, any alert they might have made, their vitals, and what happened as a result of the alert. We found it is feasible to code and understand large amounts of data, which can help identify false information and reduce the burden on the telehealth providers. This in turn would help the providers to give better quality of care to older adults. Our findings inform field practice and future research on developing an automated alert triaging system for remote patient monitoring and telehealth services.

INTRODUCTION

Background

Telehealth is the provision of health care intended to overcome physical or geographic barriers; it can be delivered by telephone, tablet, smartphone, or video. Growing evidence demonstrates improved access to care, especially for people who have difficulties with mobility or live in rural communities.1 Telehealth utilization during the early stages of the novel coronavirus (COVID-19) pandemic (∼the last week of March 2020) showed an increase in use of 154% compared with the same period in 2019.2 Telehealth was not only the optimal solution for the immediate response during the pandemic but is also becoming a solution for long-term population health sustainability. Adopting telehealth reduces costs for the health system by reducing travel time for patients and reducing time lost between patient interactions.3 Home-based telehealth interventions benefit patients, improving access to care and health outcomes for older adults suffering from long-term conditions such as heart failure and diabetes.4,5 Hospital readmissions and emergency visits drop when mobile phones and wireless technology are implemented in postsurgical care.6

With all those benefits, telehealth is a potential community-based healthcare delivery method for older adults, as is the case with the Telehealth Intervention Program for Seniors (TIPS), an ongoing tristate community-based telehealth program implemented at 17 sites in 2011 with 1878 participants in the states of New Jersey, Pennsylvania, and New York. The program consists of a tablet-based kiosk installed in retirement residences and community facilities with staff-trained technicians who help older adult participants measure their blood pressure, weight, and pulse oximetry. These results are transferred to a facility remotely monitored by program nurses using a “store-and-forward” approach: the data are collected, saved, and then uploaded after the encounter. When a measurement is out of a participant’s acceptable or healthy range, an alert is triggered notifying program nurses. The nurses then call the participant to probe the causes of the alert and advise the participant on next steps. Depending on the results, the participants are advised to visit their primary care provider or instructed to self-monitor their health status.7 Previously published literature for this program has demonstrated reductions in readmission rates among participants associated with the alerts from the remote monitoring program.8

A remote monitoring system like that employed by TIPS does not only produce alerts that are triggered at select check-ins and lead to improved patient outcomes. These check-ins can also result in false alerts that can lead to increased clinician burden.9 One study used mixed methods to study alerts, combining quantitative correlation analysis of patient characteristics data and the number of telehealth alerts with qualitative analysis of telehealth and visiting nurses’ notes. The study found that most false alerts were caused by incorrect measurement techniques, telehealth alert threshold ranges that had not been revised, or delays in clinical management.9 Another study suggests that the content of the alarm note, which determines the informativeness of the alerts, plays a critical role in the high proportion of alerts that are overridden or ignored.10 Managing these alerts efficiently in telehealth can help eliminate the burden on healthcare workers and improve the quality of care.

Significance

To our knowledge, this is one of the first studies to evaluate the feasibility of generating training data to inform the development of a triage system for a community-based telehealth intervention. For the present study, the term alert notes refers to the telehealth program nurses’ detailed notes about an out-of-range reading when a remote check-in occurs, providing larger context to existing monitoring. These notes show which check-in triggered an alert, whether that alert was false or true, and the context for the true alerts. The alert notes detail the context or trigger for the out-of-range alert and how the nurse resolves the alert. Further, alert notes provide a record of the nurses’ suggested next steps tailored to alert type, as a participant can trigger more than one alert at each check-in. Improving our understanding of the content of such notes can support the development of a scalable approach to effectively improve clinical workflows and support automated alert triage in future technology. In this paper, we present findings of a scalable qualitative coding framework developed for a community-based telehealth program that provides services to low-income older adults. This framework will help operationalize the sequence of events leading from alert generation to receipt, analysis of patient state, and resolution for each alert, including next steps by nursing personnel.

METHODS

Our dataset included N = 24 931 alert notes generated between 2011 and December 2019 from the Telehealth Intervention Program for Seniors (TIPS). While the unit of analysis for the present study is not patient level, we include a brief summary of the population characteristics. A prior publication contains individual participant demographics in greater detail.11 Briefly, 23.3% of participants reported being limited English proficient, which is a well-validated indicator that a person speaks English less than “very well” and this is strongly associated with barriers to seeking, accessing, and utilizing health services,12 and 36% of participants reported being Medicaid eligible.11 This study was approved by the Pace University Institutional Review Board (IRB# 18-11), and under reliance agreements with Drexel University and San Diego State University. TIPS participants consented to the use of their generated data (eg, physiological data and alerts) for research purposes.

Coding framework development

Based on the alert notes, the research team established a codebook, which describes the definition of each code—how these codes represent sequence of events in each alert note, what keywords can be used to automatically detect the alert notes that contain the code’s relevant content, and an example alert note. For the codebook development, we first consulted TIPS program managers who worked closely with healthcare providers to understand the processes, guidelines, and rules for alert processing (eg, one program manager was also responsible for training and managing the tele-nurses). This allowed the research team to understand what types of alerts may be generated, how it might be triaged, how the tele-nurses should respond to the alerts, and what information could be included in the alert notes. Two study team coders were assigned 100 randomly ordered alerts for annotation. Each coded alert note was assigned a vector of 17 binary variables representing each code, which shows whether the related content to the code was present in the alert note. For example, “Prior wt reading inaccurate CTM” was given a vector in which the variables Review History and False Alarm were recorded as present (“1”). The remaining variables were coded as not present (“0”). The 2 coders then counted the number of alert notes that represented each code. If a code did not have at least 5 alert notes representing that code, additional alert notes were assigned for annotation until each code obtained at least 5 examples. After the annotation of the first 100 alert notes, the codes: Technical Error; False Alarm; No Further Action Needed; and Discuss Symptoms did not have 5 alert notes to represent each of them. Therefore, another 100 alert notes were randomly selected and annotated. An additional 100 alert notes were then randomly selected for annotation that provided enough examples for the code Discuss Symptoms. 
Finally, an additional round of 52 alert notes was selected and annotated so that the code No Further Action Needed had at least 5 example alert notes, thereby ensuring that all codes had enough examples. In total, 352 alert notes were annotated. Only 2 codes (Technical Error, False Alarm) had enough representative examples in the first 100 iterations. We then generated regular expression-based keywords that captured the notes representing each code as we examined the manually annotated notes.
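The annotation scheme described above can be sketched in a few lines of Python. This is only an illustration, not the study's tooling: the code names are taken from Table 1, while the function names `encode_note` and `under_represented` are hypothetical. It shows a note being stored as a vector of 17 binary variables and the stopping rule of at least 5 examples per code.

```python
# The 17 codes, in the order they appear in Table 1.
CODES = [
    "Review history", "Technical error", "Within normal limit", "False alarm",
    "Reading success", "No further action needed", "Change improved",
    "Change worsen", "Change unknown", "Continue monitoring", "Instruction",
    "Follow-up", "Nurse call patient", "Discuss symptoms", "Leave message",
    "Wrong number", "Patient return call",
]


def encode_note(codes_present):
    """Return a 17-element 0/1 vector for one manually annotated note."""
    unknown = set(codes_present) - set(CODES)
    if unknown:
        raise ValueError(f"unknown codes: {sorted(unknown)}")
    return [1 if code in codes_present else 0 for code in CODES]


def under_represented(vectors, minimum=5):
    """List the codes that still have fewer than `minimum` annotated examples."""
    if not vectors:
        return list(CODES)
    counts = [sum(column) for column in zip(*vectors)]
    return [code for code, n in zip(CODES, counts) if n < minimum]


# "Prior wt reading inaccurate CTM" was coded as Review History + False Alarm.
example = encode_note({"Review history", "False alarm"})
print(example)
print(under_represented([example]))  # codes that would need more annotation
```

In this sketch, another batch of notes would be annotated for as long as `under_represented` returns a non-empty list.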

Regular expression-based keywords match

We excluded the initial 352 manually annotated alert notes that were used to develop the codebook. Then, we performed keyword searches based on regular expressions using MySQL queries to search all the remaining alert notes containing information for each corresponding code.
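A minimal sketch of such a keyword match is shown below, written in Python rather than the MySQL queries used in the study. The keyword list for Technical Error is the one reported in the codebook; how an underscore inside a keyword is interpreted is an assumption made here (the parts must appear in order, with any text in between), and the helper names `keyword_to_regex` and `note_has_code` are hypothetical.

```python
import re

# Keywords reported in the codebook for the code Technical Error.
TECHNICAL_ERROR_KEYWORDS = [
    "fit_cuff", "leak_cuff", "equipment",
    "issue_machine", "malfunction", "not_work_properly",
]


def keyword_to_regex(keyword):
    """Build a case-insensitive pattern from one codebook keyword.

    Assumption: "_" separates word stems that must occur in order,
    with arbitrary text allowed between them.
    """
    stems = keyword.split("_")
    pattern = ".*".join(re.escape(stem) for stem in stems)
    return re.compile(pattern, re.IGNORECASE)


def note_has_code(note, keywords):
    """Return True if any keyword pattern is found in the alert note."""
    return any(keyword_to_regex(k).search(note) for k in keywords)


# Example note quoted in the codebook for Technical Error.
note = ("BP 104/91.? (sic) malfunction of equipment. "
        "Repeat 114/75. No further action required.")
print(note_has_code(note, TECHNICAL_ERROR_KEYWORDS))
```

Running the same function once per code over every remaining note yields, for each note, the set of codes whose keywords it matches.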

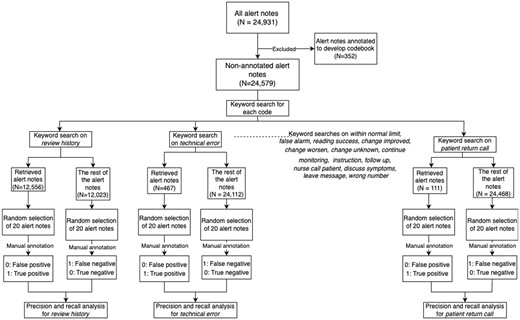

Evaluation of the regular expression-based keywords

To evaluate how well the keywords matched relevant alert notes, we retrieved 20 alert notes at random from the keyword search results of each code, as well as 20 alert notes from the rest of the dataset that were not part of that code’s keyword search results, resulting in a total of 680 alert notes (17 codes × 40 notes) for this evaluation. We observed that this sample size was enough to reach saturation of results, as we were able to see repeated patterns within the sample without new findings, in line with similar content analysis approaches.13 We then manually annotated the 680 alert notes to build a confusion matrix for precision and recall analysis. True positives are defined as alert notes retrieved from each keyword search that were manually annotated as having content related to the selected code (“1”). False positives are defined as alert notes retrieved from each keyword search that were manually annotated as not having content related to the selected code (“0”). Likewise, true negatives are alert notes not retrieved from each keyword search that were manually annotated as not having content related to the code (“0”). False negatives are defined as alert notes not retrieved from each keyword search that were manually annotated as having content related to the code (“1”).
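The sampling and labelling steps can be sketched as follows. This is an illustrative Python outline under stated assumptions, not the procedure as implemented in the study: the note ids are made up, the fixed random seed is only for repeatability, and the names `sample_for_code` and `confusion_cell` are hypothetical.

```python
import random


def sample_for_code(retrieved_ids, all_ids, k=20, seed=0):
    """Draw k notes the keyword search retrieved and k notes it did not."""
    rng = random.Random(seed)
    retrieved = sorted(set(retrieved_ids))
    not_retrieved = sorted(set(all_ids) - set(retrieved_ids))
    return rng.sample(retrieved, k), rng.sample(not_retrieved, k)


def confusion_cell(retrieved, annotated_present):
    """Map (keyword search result, manual annotation) to a confusion cell."""
    if retrieved:
        return "TP" if annotated_present else "FP"
    return "FN" if annotated_present else "TN"


# Hypothetical ids: 1000 notes, of which every fourth was retrieved for a code.
all_ids = range(1000)
retrieved_ids = range(0, 1000, 4)
positives, negatives = sample_for_code(retrieved_ids, all_ids)

print(len(positives), len(negatives))  # 20 and 20 notes for this code
print(17 * (len(positives) + len(negatives)))  # evaluation notes across 17 codes
print(confusion_cell(retrieved=True, annotated_present=False))
```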

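The precision and recall are defined by the following equations:

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

where TP, FP, and FN are the numbers of true positives, false positives, and false negatives, respectively.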

The process of evaluating the keyword search results is shown in Figure 1.

Figure 1. The process of the evaluation of the regular expression-based keywords.

RESULTS

Coding framework development

The final codebook consisted of 17 codes representing multiple sequences of events that begin with an alert triggered by a community check in that ends with a call from a nurse and the resulting plan for the patient. We clustered those codes into 4 actions—“Action 1” categorizes a false alert; “Action 2” determines patient status if further action is needed; “Action 3” is the note disposition that either results in resolving the note or proceeds into one more action; “Action 4” is the context of a final intervention consisting of a follow-up call by the nurse.

We identified a total of 108 common expression keywords for 17 codes. The codebook (see Supplementary Table S1) includes the definition of each code, a list of keywords under that code, and an example note for the code. For example, code Technical Error represents alert data reported were inaccurate due to technical errors such as a leaking blood pressure cuff or equipment malfunction. The list of keywords under code Technical Error included: “fit_cuff,” “leak_cuff,” “equipment,” “issue_machine,” “malfunction,” and “not_work_properly.” Some example alert notes that were coded with Technical Error were: “BP 104/91.? (sic) malfunction of equipment. Repeat 114/75. No further action required.”

Regular expression-based keywords match results

Table 1 shows the keyword match results for a total of 24 579 non-annotated alert notes with 108 common expression keywords that identified 22 717 alert notes belonging to at least one of the 17 codes. The code Review History had the most keywords identified with 13 keywords. The code Reading Success had the highest number of alert notes retrieved from the keyword search with 12 919 (52.56%) alert notes. The code Patient Return Call had the least frequency with 111 (0.45%) alert notes retrieved from the keyword search as only 4 keywords were identified. See Supplementary Table S2 for more detail on the keywords list.

| Code | No. of keywords (N = 108 total) | Alert notes retrieved from keyword search (N = 22 717): No. of alert notes | Alert notes retrieved from keyword search (N = 22 717): Percent (%) |
|---|---|---|---|
| Review history | 13 | 12 556 | 51.08 |
| Technical error | 6 | 467 | 1.90 |
| Within normal limit | 6 | 8208 | 33.39 |
| False alarm | 6 | 1051 | 4.28 |
| Reading success | 11 | 12 919 | 52.56 |
| No further action needed | 4 | 1962 | 7.98 |
| Change improved | 8 | 659 | 2.68 |
| Change worsen | 5 | 4658 | 18.95 |
| Change unknown | 10 | 11 418 | 46.45 |
| Continue monitoring | 7 | 202 | 0.82 |
| Instruction | 7 | 1507 | 6.13 |
| Follow-up | 7 | 4429 | 18.02 |
| Nurse call patient | 9 | 5988 | 24.36 |
| Discuss symptoms | 9 | 3590 | 14.61 |
| Leave message | 7 | 2559 | 10.41 |
| Wrong number | 2 | 135 | 0.55 |
| Patient return call | 4 | 111 | 0.45 |
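The counts reported in this section can be cross-checked with a short calculation. The sketch below uses only numbers stated in the text and table. One inference is made here rather than stated in the source: the per-code percentages appear to use the 24 579 searched notes as the denominator, not the 22 717 matched notes.

```python
TOTAL_NOTES = 24_931             # all alert notes in the dataset
ANNOTATED_FOR_CODEBOOK = 352     # notes excluded after codebook development
MATCHED_AT_LEAST_ONE_CODE = 22_717

# Notes actually searched with the keyword patterns.
searched = TOTAL_NOTES - ANNOTATED_FOR_CODEBOOK
coverage = MATCHED_AT_LEAST_ONE_CODE / searched


def percent_of_searched(count):
    """Share of the searched notes retrieved for one code, in percent."""
    return round(100 * count / searched, 2)


print(searched)                      # notes remaining after exclusion
print(round(100 * coverage))         # percent matching at least one code
print(percent_of_searched(12_919))   # Reading success
print(percent_of_searched(111))      # Patient return call
```

Because a note can match several codes, the per-code percentages are not expected to sum to 100.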


Evaluation of the regular expression-based keywords

There was a total of 680 alert notes in the precision and recall analysis. Four out of 17 codes had a precision score of 100% (No Further Action Needed, Change Improved, Wrong Number, Patient Return Call). Nine out of 17 codes had precision scores between 80% and 95% (Review History, Technical Error, Within Normal Limit, False Alarm, Reading Success, Change Unknown, Continue Monitoring, Nurse Call Patient, Leave Message). Four out of 17 had precision scores between 60% and 80% (Change Worsen, Instruction, Follow Up, Discuss Symptoms). The code Follow Up had the lowest precision score of 60%. Nine of 17 codes had a recall score of 100% (Technical Error, False Alarm, Change Improved, Change Worsen, Instruction, Nurse Call Patient, Discuss Symptoms, Wrong Number, Patient Return Call). Five of 17 codes had recall scores between 80% and 95% (Within Normal Limit, No Further Action Needed, Continue Monitoring, Follow Up, Leave Message). The last 3 codes had a recall score of less than 80% (Review History, Reading Success, Change Unknown). The code Reading Success had the lowest recall score of 62%. The final precision score was 86% and the final recall score was 90%. Details of results are shown in Table 2.

Table 2. Confusion table for testing keyword search results (N = 20 classified as True, N = 20 classified as False according to keyword search results)

| | True | False | Precision | Recall |
|---|---|---|---|---|
| Review history | | | | |
| Positive | 19 (95%) | 1 (5%) | 0.95 | 0.73 |
| Negative | 7 (35%) | 13 (65%) | | |
| Technical error | | | | |
| Positive | 17 (85%) | 3 (15%) | 0.85 | 1 |
| Negative | 0 (0%) | 20 (100%) | | |
| Within normal limit | | | | |
| Positive | 19 (95%) | 1 (5%) | 0.95 | 0.91 |
| Negative | 2 (10%) | 18 (90%) | | |
| False alarm | | | | |
| Positive | 17 (85%) | 3 (15%) | 0.85 | 1 |
| Negative | 0 (0%) | 20 (100%) | | |
| Reading success | | | | |
| Positive | 18 (90%) | 2 (10%) | 0.9 | 0.62 |
| Negative | 11 (55%) | 9 (45%) | | |
| No further action needed | | | | |
| Positive | 20 (100%) | 0 (0%) | 1 | 0.87 |
| Negative | 3 (15%) | 17 (85%) | | |
| Change improved | | | | |
| Positive | 20 (100%) | 0 (0%) | 1 | 1 |
| Negative | 0 (0%) | 20 (100%) | | |
| Change worsen | | | | |
| Positive | 15 (75%) | 5 (25%) | 0.75 | 1 |
| Negative | 0 (0%) | 20 (100%) | | |
| Change unknown | | | | |
| Positive | 18 (90%) | 2 (10%) | 0.9 | 0.64 |
| Negative | 10 (50%) | 10 (50%) | | |
| Continue monitoring | | | | |
| Positive | 19 (95%) | 1 (5%) | 0.95 | 0.91 |
| Negative | 2 (10%) | 18 (90%) | | |
| Instruction | | | | |
| Positive | 14 (70%) | 6 (30%) | 0.7 | 1 |
| Negative | 0 (0%) | 20 (100%) | | |
| Follow-up | | | | |
| Positive | 12 (60%) | 8 (40%) | 0.6 | 0.8 |
| Negative | 3 (15%) | 17 (85%) | | |
| Nurse call patient | | | | |
| Positive | 17 (85%) | 3 (15%) | 0.85 | 1 |
| Negative | 0 (0%) | 20 (100%) | | |
| Discuss symptoms | | | | |
| Positive | 13 (65%) | 7 (35%) | 0.65 | 1 |
| Negative | 0 (0%) | 20 (100%) | | |
| Leave message | | | | |
| Positive | 16 (80%) | 4 (20%) | 0.8 | 0.89 |
| Negative | 2 (10%) | 18 (90%) | | |
| Wrong number | | | | |
| Positive | 20 (100%) | 0 (0%) | 1 | 1 |
| Negative | 0 (0%) | 20 (100%) | | |
| Patient return call | | | | |
| Positive | 20 (100%) | 0 (0%) | 1 | 1 |
| Negative | 0 (0%) | 20 (100%) | | |
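The per-code scores and the summary statistics can be recomputed from the counts in the confusion table. The Python sketch below reads each Positive row as (true positives, false positives) and the first cell of each Negative row as false negatives. Using the sample standard deviation (n − 1 denominator) is an assumption made here; the source does not state which form was used.

```python
from statistics import mean, stdev

# (TP, FP, FN) per code, read from the confusion table.
COUNTS = {
    "Review history": (19, 1, 7),
    "Technical error": (17, 3, 0),
    "Within normal limit": (19, 1, 2),
    "False alarm": (17, 3, 0),
    "Reading success": (18, 2, 11),
    "No further action needed": (20, 0, 3),
    "Change improved": (20, 0, 0),
    "Change worsen": (15, 5, 0),
    "Change unknown": (18, 2, 10),
    "Continue monitoring": (19, 1, 2),
    "Instruction": (14, 6, 0),
    "Follow-up": (12, 8, 3),
    "Nurse call patient": (17, 3, 0),
    "Discuss symptoms": (13, 7, 0),
    "Leave message": (16, 4, 2),
    "Wrong number": (20, 0, 0),
    "Patient return call": (20, 0, 0),
}


def precision(tp, fp):
    """Fraction of retrieved notes that truly carry the code."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Fraction of notes carrying the code that the search retrieved."""
    return tp / (tp + fn)


precisions = [precision(tp, fp) for tp, fp, _ in COUNTS.values()]
recalls = [recall(tp, fn) for tp, _, fn in COUNTS.values()]

print(f"precision: mean={mean(precisions):.2f}, sd={stdev(precisions):.2f}")
print(f"recall:    mean={mean(recalls):.2f}, sd={stdev(recalls):.2f}")
```

Note that recall here is computed on a balanced sample of 20 retrieved and 20 non-retrieved notes per code, so it describes the evaluation sample rather than the full set of alert notes.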


DISCUSSION

Our findings demonstrate how community-based telehealth alert notes, which include a plethora of information, can be used to build an automated system that helps to triage alerts to prevent alert fatigue among healthcare workers and improve the quality of care. More specifically, our findings are unique in showing the feasibility of successful automation in community-based organizations serving a large low-income population, the setting in which we tested this approach. While the findings may be limited to a single telehealth project, community-driven telehealth projects are ubiquitous, with many congregate housing facilities seeking technology innovations to serve their populations. Our findings can offer understanding and guidance to community-based organizations that are leveraging technology to help their older adult populations and are innovating as they care for patients.

For instance, existing studies used various text mining techniques that performed phrase or word matches from various kinds of narrative documents within electronic health records to identify adverse events, medical errors, or screening for the risk of falls.14–16 Results from these studies varied. One study identified a broad range of medical errors by searching 5 keywords “mistake,” “error,” “incorrect,” and “iatrogenic” in discharge summaries, sign-out notes, and outpatient visit notes and obtained a positive predictive range of only 3.4%–24.4%.17 Meanwhile, another study performed text searching on discharge summaries to identify a broad range of adverse events. The system turned 59% of discharge summaries with a predictive value of 52%.18 The most recent study identified 7 adverse events in narrative documents using a keyword search approach. The study achieved positive predictive values as low as 5.37% and up to 83.83%, depending on the kind of adverse event.19

Our findings show that our approach of using a simple keyword search based on the codebook developed from manual annotation performs well in terms of precision (M = 86%, SD = 13%) and recall (M = 90%, SD = 13%). Out of 17 codes, 4 codes showed a precision of 100% and 3 codes had both precision and recall scores of 100%. These are promising results in attempting to build a triage system of telehealth alerts, even with community-based organizations that have a large proportion of low-income populations. The codes directly related to triaging that are about requiring nurses’ intervention or not requiring nurses’ reaction—Technical Error, False Alarm, No Further Action Needed, Change Worsen, Instruction, Nurse Call Patient, Discuss Symptoms—have all resulted in either high precision or recall.

Codes requiring high recall over precision are those for which true positives cannot be missed. However, the only low recall scores in our findings occur in non-triage-related codes, such as Review History, Reading Success, or Change Unknown. Codes requiring high precision over recall are those for which we cannot tolerate false positives. Relatively lower precision scores occurred in codes about follow-up and discussing symptoms. Nurses can still follow up or discuss symptoms without harm or increased risk to the patient. As a next step, a user-friendly interface that lets nurses revise predicted notes and give feedback to the predictive model would continue to improve the keyword list and add more uniquely relevant keywords. This change would not only help nurses add and follow up on alert notes more efficiently but also eventually improve the triaging process and reduce false alerts. The resulting dataset will in turn serve as a training dataset for a triage system, which can continue to be improved based on nurses’ feedback and reinforcement learning mechanisms.20

Although our results are specific to the TIPS program, our approach of developing the workflow and testing keyword-based identification can be applied to identifying false alerts in other alert-based systems and has implications for addressing alert fatigue. Alert fatigue occurs when healthcare workers become desensitized to safety alerts because of alert overload, which results in failed or inappropriate responses to such warnings. It can burden healthcare workers, leading them to bypass true alerts that need clinicians’ attention and resulting in severe patient safety consequences.21,22 Although alert fatigue has been extensively discussed in the context of electronic health records, little is known about its relevance in community-based telehealth.

Since we worked with a sample of the alert notes for the coding framework development and keyword generation, we may have excluded other possible codes or keywords that could detect alerts related to other activities of interest. Future studies with alert notes from various domains will help add evidence for this triage approach.

To the best of our knowledge, this study is the first to use narrative telehealth data to extract information and lay the groundwork for future alert prediction models in the context of community-driven intervention programs for low-income older adults. Our results provide a benchmark reference for a scalable analysis of telehealth clinical notes that can support developing an automated alert triaging system that detects false alerts and streamlines the workflow from alert review to responding to patients. Further research can explore the generalizability of the findings to other contexts and investigate the integration of the system with electronic health records and other clinical decision support systems to streamline the alert review process. Community-driven telehealth is a growing area of commercialization, but there is little support for not-for-profits and CBOs, who make these investments with little evidence. This work makes a critical contribution to this space, in which automated approaches lack evidence. Our findings pave the way for evidence-based toolkits that help CBOs make informed investments that ensure patient safety, deliver high-quality care, and save money.

CONCLUSION

Our study introduces the potential of an automated approach to triage alerts within a telehealth system with mobile health monitoring data using a simple keyword search technique. We showed the feasibility of a simple, automated method for a community-driven telehealth program primarily serving a low-income older adult population. Building on our work as a training dataset, advanced machine learning techniques can be examined to further evaluate the feasibility of automatically detecting false alerts and improving the quality and efficacy of telehealth.

FUNDING

This work was supported in part by the National Science Foundation (2144880 and 2237097) and the National Institutes of Health (K01AG068592 and R21HS028104). The Helene and Grant Wilson Center for Social Entrepreneurship at Pace University funded a portion of this study.

AUTHOR CONTRIBUTIONS

PN: contributed to the conception or design of the work, analysis, and interpretation of data for the work; drafted the work and reviewed it critically for important intellectual content; gave final approval of the version to be published; and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. MKS: contributed to the conception and design of the work, analysis, and interpretation of data for the work; drafted the work and reviewed it critically for important intellectual content; gave final approval of the version to be published; and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. ZZ: contributed to the conception and design of the work, the acquisition, analysis, and interpretation of data for the work; drafted the work and reviewed it critically for important intellectual content; gave final approval of the version to be published; and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. HWC: contributed to the analysis and interpretation of data for the work; drafted the work and reviewed it critically for important intellectual content; gave final approval of the version to be published; and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. JH-Y: contributed to the conception and design of the work, analysis, and interpretation of data for the work; drafted the work and reviewed it critically for important intellectual content; gave final approval of the version to be published; and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

SUPPLEMENTARY MATERIAL

Supplementary material is available at JAMIA Open online.

ACKNOWLEDGMENTS

The authors would like to thank Tiffany Chin for her clinical perspective in preparation for this study.

CONFLICT OF INTEREST STATEMENT

None declared.

DATA AVAILABILITY

The data underlying this article cannot be shared publicly, to protect the privacy of the individuals who participated in the program. The data will be shared upon reasonable request to the corresponding author.