
Jared M Wohlgemut, Erhan Pisirir, Evangelia Kyrimi, Rebecca S Stoner, William Marsh, Zane B Perkins, Nigel R M Tai, Methods used to evaluate usability of mobile clinical decision support systems for healthcare emergencies: a systematic review and qualitative synthesis, JAMIA Open, Volume 6, Issue 3, October 2023, ooad051, https://doi.org/10.1093/jamiaopen/ooad051


Abstract

The aim of this study was to determine the methods and metrics used to evaluate the usability of mobile application Clinical Decision Support Systems (CDSSs) used in healthcare emergencies. Secondary aims were to describe the characteristics and usability of evaluated CDSSs.

A systematic literature review was conducted using Pubmed/Medline, Embase, Scopus, and IEEE Xplore databases. Quantitative data were descriptively analyzed, and qualitative data were described and synthesized using inductive thematic analysis.

Twenty-three studies were included in the analysis. The usability metrics most frequently evaluated were efficiency and usefulness, followed by user errors, satisfaction, learnability, effectiveness, and memorability. Methods used to assess usability included questionnaires in 20 (87%) studies, user trials in 17 (74%), interviews in 6 (26%), and heuristic evaluations in 3 (13%). Most CDSS inputs consisted of manual input (18, 78%) rather than automatic input (2, 9%). Most CDSS outputs comprised a recommendation (18, 78%), with a minority advising a specific treatment (6, 26%), or a score, risk level or likelihood of diagnosis (6, 26%). Interviews and heuristic evaluations identified more usability-related barriers and facilitators to adoption than did questionnaires and user testing studies.

A wide range of metrics and methods are used to evaluate the usability of mobile CDSS in medical emergencies. Input of information into CDSS was predominantly manual, impeding usability. Studies employing both qualitative and quantitative methods to evaluate usability yielded more thorough results.

When planning CDSS projects, developers should consider multiple methods to comprehensively evaluate usability.

Lay Summary

Healthcare professionals must make safe, accurate decisions, especially during medical emergencies. Researchers design and develop tools, called Clinical Decision Support Systems (CDSSs), that can help medical experts make these decisions. CDSSs obtain and process information about a patient and display information to the healthcare professional (user) to aid decision-making. Whether the user finds the system easy to use and useful is referred to as the system’s usability. Usability affects how likely the CDSS is to be adopted and implemented into practice. We carefully searched the published literature and found 23 papers that measured the usability of CDSSs designed for medical emergencies. We found that CDSSs’ efficiency and usefulness were measured the most, and effectiveness and memorability the least. More studies used questionnaires and user testing than interviews or specific “heuristic” evaluations. However, interviews and heuristic evaluations identified more usability issues than did questionnaires and user tests. Studies that tested the usability of CDSSs using both numerical (quantitative) and narrative (qualitative) methods identified the most issues. We recommend using both numerical and narrative methods to test the usability of CDSSs, because together they give the most comprehensive evaluation.

BACKGROUND

Introduction

Clinical decision support systems (CDSSs) have been developed as potentially powerful diagnostic adjuncts in many clinical situations.1 A CDSS is a form of technology designed to provide information to clinicians at the time of a decision to improve clinical judgment.1–4 For a CDSS to be implemented and adopted into clinical practice, its end users must consider it usable and useful.5,6 A systematic review of CDSSs found little evidence that these systems improved clinician diagnostic performance. One suggested way to address this issue is to better understand and improve human-computer interaction before CDSS implementation.7 For this reason, early evaluation of the usability and usefulness of CDSSs is important to increase the likelihood of successful implementation and adoption. However, few usability studies exist to guide the development of CDSSs designed for clinicians treating patients with medical emergencies.

Usability is defined as a “quality attribute that assesses how easy interfaces are to use”, which has several components: learnability, efficiency, memorability, errors, and satisfaction.8 The ISO (International Organisation for Standardisation) Standard 9241-11:2018 defines usability more specifically as “the extent to which a product can be used to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use”.9 A recent systematic review showed that almost half of studies also described usefulness as a usability metric.10 Usefulness refers to the degree to which using a technology will enhance job performance.11

Mobile health (mHealth) refers to applications (apps) that run on handheld devices (such as smartphones or tablets) for use in healthcare, whether by healthcare professionals, patients, or carers.12 The potential benefits of mHealth to healthcare systems include time savings, reduced error rates, and lower costs.13,14 App uses include diagnostics and decision-making, behavior change interventions, digital therapeutics, and disease-related education.14 Numerous apps are tailored to specific professions, specialties, patient groups, or clinical situations, including healthcare emergencies.15,16

Some CDSSs have been designed for use in healthcare emergencies. Healthcare emergencies can be defined as any situation where a person requires immediate medical attention in order to preserve life or prevent catastrophic loss of function. There are multiple clinical situations which could be considered healthcare emergencies, and many healthcare professionals who may care for these patients. Examples include problems with the patient’s airway (eg, airway obstruction), breathing (eg, pulmonary embolism), circulation (eg, heart attack or stroke), or multi-system conditions such as injury or burns.17,18 These scenarios are time-critical, requiring timely decision-making and action.

Study motivation

The design of mobile CDSSs for healthcare emergencies is important because these systems must be easy to use, useful, and fit seamlessly into the clinical workflow. Input must be minimal and ideally automatic, while outputs must be simple, intuitive, and immediately applicable to avoid disrupting the workflow.19–21 Usability is therefore arguably more important for CDSSs designed for emergencies than for those designed for nonemergency (ie, elective) clinical settings.

There are multiple methods of usability testing. Although systematic reviews have addressed the usability methods used for CDSS evaluation,10,22–25 none has focused on mobile CDSSs designed or used for healthcare emergencies. Stakeholders designing mobile CDSSs for use in healthcare emergencies, including academics, clinicians, healthcare managers, and information technologists, need to understand the methods for testing usability, and the associated standards, in this unique context.

OBJECTIVE

This study answers the question: “What methods are employed to assess the usability of mobile clinical decision support systems designed for clinicians treating patients experiencing medical emergencies?” Our primary aim was to determine the methods of usability evaluation used by researchers of mobile healthcare decision support in clinical emergencies. Our secondary aims were to determine the characteristics of healthcare decision support in emergencies which underwent usability evaluations; and to determine the quantitative and qualitative standards and results achieved, utilizing descriptive quantitative and qualitative evidence synthesis (Supplementary Table S1).

MATERIALS AND METHODS

This systematic review was conducted according to the Preferred Reporting Items for Systematic reviews and Meta-Analysis (PRISMA) guidelines (Supplementary Table S2),26 and it was prospectively registered with the PROSPERO database, ID number CRD42021292014.27

Search strategy

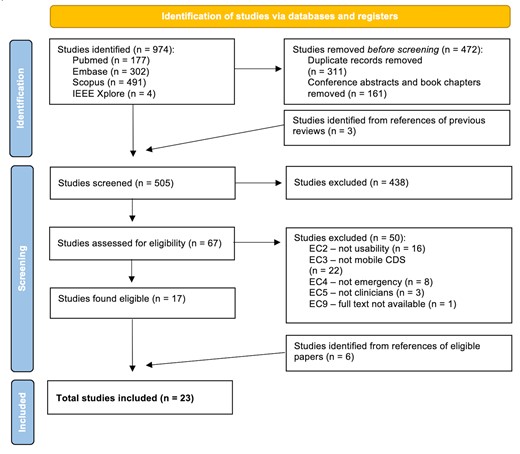

Relevant publications were identified by an electronic search of the Pubmed/Medline, Embase, Scopus, and IEEE Xplore databases using combinations of the following keywords and their synonyms: “usability”, “assessment”, “mobile”, “application”, “decision support”, “healthcare”, and “emergency”. The full search strategy is available in Supplementary Table S3. Searches were limited to titles and abstracts and to English-language publications (Supplementary Table S4). The search was performed on December 9, 2021. The search results were uploaded to EndNote X9.3.3 (Clarivate Analytics, Philadelphia, PA, USA) to identify and remove duplicates, conference abstracts, and book chapters. Two authors (JW and EP) independently screened individual citations against the inclusion criteria using Rayyan software (Rayyan Systems Inc, Cambridge, MA, USA).28 Two authors then independently assessed the full text of all identified citations for eligibility. Disagreements were resolved by a third independent reviewer (EK). Reasons for excluding studies were recorded (Figure 1). The reference lists of included articles, as well as those of excluded systematic reviews, were searched to identify additional publications.
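The dual-screening rule described above (2 independent reviewers, with a third reviewer adjudicating disagreements) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' tooling (screening itself was done in Rayyan): the `Decision` enum, the `screen` function, and the citation IDs are hypothetical, and the third reviewer is modeled as a callback that is consulted only when the first 2 disagree.

```python
from enum import Enum
from typing import Callable, Dict, Iterable, List, Tuple


class Decision(Enum):
    INCLUDE = "include"
    EXCLUDE = "exclude"


def screen(
    citations: Iterable[Tuple[str, Decision, Decision]],
    adjudicate: Callable[[str], Decision],
) -> Tuple[Dict[str, Decision], List[str]]:
    """Combine 2 independent reviewers' decisions for each citation.

    Each item is (citation_id, reviewer_1_decision, reviewer_2_decision).
    Agreements are accepted as-is; disagreements are passed to a third
    reviewer via `adjudicate` (hypothetical callback) and logged so the
    number of disputed papers can be reported.
    """
    final: Dict[str, Decision] = {}
    disagreements: List[str] = []
    for cid, first, second in citations:
        if first == second:
            final[cid] = first
        else:
            disagreements.append(cid)
            final[cid] = adjudicate(cid)
    return final, disagreements


# Hypothetical example: reviewers agree on "p1" but disagree on "p2".
records = [
    ("p1", Decision.INCLUDE, Decision.INCLUDE),
    ("p2", Decision.INCLUDE, Decision.EXCLUDE),
]
final, disputed = screen(records, adjudicate=lambda cid: Decision.EXCLUDE)
print(disputed)           # ['p2']
print(final["p2"].value)  # exclude
```

Keeping the list of disputed IDs separate from the final decisions makes it straightforward to report how many papers needed adjudication, as the review does for its full-text stage.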

Eligibility criteria and study designs/settings

Inclusion and exclusion criteria are listed in Table 1. The study eligibility criteria used the PECOS (population, exposure, comparator/control, outcomes, study designs/settings) framework. The population was any study testing/evaluating usability using human participants. The exposure was any study which tested usability of a healthcare-related mobile application which provided clinical decision support to clinicians. There was no comparator/control used. The outcomes included studies which provided empirical results from an evaluation of a system’s usability (either quantitative, qualitative, or both). The setting was studies which evaluated a CDSS which was designed for use by clinicians in healthcare emergencies.

| Inclusion criteria | |
|---|---|
| 1 | The paper tests/evaluates usability |
| 2 | The paper is focused on a healthcare-related technology/application/software/system including mobile, smartphone, tablet, digital, electronic, handheld/portable device, or website |
| 3 | The paper provides empirical results (quantitative or qualitative) |
| 4 | The system provides decision support/aid/tool, or risk prediction, or prognosis or diagnosis for decision-making |
| 5 | The system is designed for use in healthcare emergencies |
| Exclusion criteria | |
| 1 | Not written in English |
| 2 | Not testing usability, or does not describe the methods adequately |
| 3 | Not mobile clinical decision-support |
| 4 | Not designed for or tested in clinical emergencies |
| 5 | Not targeting clinicians as users |
| 6 | Not human participants |
| 7 | Not an empirical study (is a theory or review paper) |
| 8 | Study protocol only |
| 9 | Full text is not available |

Quality of studies assessment

The methodological quality of included studies was assessed by 1 study author (JW) using a modified Downs and Black (D&B) checklist.29 The D&B checklist was developed to evaluate the quality of both randomized and nonrandomized studies of healthcare interventions on the same scale.29 We omitted 7 of its 27 questions (5, 9, 12, 14, 17, 25, and 26) because they were deemed inappropriate for assessing the included papers’ methods of usability assessment (Supplementary Table S5).10 We did not exclude articles because of poor quality. Quality of Studies (QOS) was classified according to the proportion of modified D&B categories present per paper as low (<50%), medium (50–74%), or high (≥75%).
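The QOS classification can be expressed as a small function. This is a sketch under 2 assumptions not spelled out in the text: each retained checklist item is scored simply as present or absent, and the function and constant names are hypothetical. With 20 retained items, proportions move in steps of 5%, so no score falls between the 74% and 75% boundaries.

```python
from typing import Sequence

# The modified checklist keeps 20 of the 27 Downs and Black items
# (items 5, 9, 12, 14, 17, 25, and 26 are omitted).
OMITTED_ITEMS = {5, 9, 12, 14, 17, 25, 26}
RETAINED_ITEMS = [i for i in range(1, 28) if i not in OMITTED_ITEMS]


def quality_of_study(items_present: Sequence[int]) -> str:
    """Classify a paper as low/medium/high quality.

    `items_present` lists the retained checklist items the paper satisfies
    (assumption: each item is scored as simply present or absent).
    """
    unknown = set(items_present) - set(RETAINED_ITEMS)
    if unknown:
        raise ValueError(f"Not a retained checklist item: {sorted(unknown)}")
    proportion = len(set(items_present)) / len(RETAINED_ITEMS)
    if proportion >= 0.75:
        return "high"
    if proportion >= 0.50:
        return "medium"
    return "low"


# Hypothetical paper meeting 14 of the 20 retained items (70%).
print(quality_of_study(RETAINED_ITEMS[:14]))  # medium
```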

Data extraction

Data were extracted and tabulated in Microsoft Excel (Microsoft, Redmond, WA, USA), according to the study aims (Supplementary Table S1). Demographic data were collected by JW. Two authors (JW and EP) independently extracted data relating to the study aims, using a standardized proforma, which were combined for analysis. Any discrepancies were resolved by consensus. The following data were extracted from each study: Study demographics (citation details, country of study conduct, type of study); Aim (1) method of usability evaluation, including usability definition, metrics and methods used to evaluate usability, number and characteristics of participants, and quantitative and qualitative results reported; Aim (2) characteristics of the CDSS, including type and number of medical specialties targeted, number and type of conditions targeted, CDSS input (number, type, method, and description), CDSS computation (complexity, method, and description), CDSS output (number, type, and description), device used, guideline on which the CDSS is based, stage of CDSS (Development, Feasibility, Evaluation, Implementation),30 and CDSS name and description (Supplementary Table S1). Supplemental material was sought if available. Any links in the paper to external information (app website, web calculator, etc.), or articles cited which contain missing information (such as published article describing app development) were sought. Missing or unclear information was discussed between JW and EP, and if uncertainty remained, study authors were contacted. Missing data were not included in quantitative or qualitative analysis for individual study metrics.

Strategy for data synthesis

Data synthesis was descriptive only for quantitative data addressing the primary and secondary outcomes. Results from individual studies were summarized and reported individually, with no meta-analysis planned or performed.
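Descriptive synthesis here amounts to reporting counts as "n (%)" of the 23 included studies. The helper below is a hypothetical sketch of that formatting. It rounds half-up with the `decimal` module because Python's built-in `round()` rounds halves to even; the review does not state its rounding rule, so half-up is an assumption. With a denominator of 23 no percentage lands exactly on .5, so the choice does not change the reported values.

```python
from decimal import Decimal, ROUND_HALF_UP


def n_percent(n: int, total: int) -> str:
    """Format a count as 'n (x%)', rounding the percentage half-up to an integer."""
    if total <= 0 or not 0 <= n <= total:
        raise ValueError("need total > 0 and 0 <= n <= total")
    pct = (Decimal(n) * 100 / Decimal(total)).quantize(
        Decimal("1"), rounding=ROUND_HALF_UP
    )
    return f"{n} ({pct}%)"


# Usability methods across the 23 included studies, as reported in the review.
for label, n in [("questionnaire", 20), ("user testing", 17),
                 ("interview", 6), ("heuristic evaluation", 3)]:
    print(f"{label}: {n_percent(n, 23)}")
```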

To describe the qualitative standards and results of usability assessments of CDSSs in medical emergencies, we used qualitative evidence synthesis methods. The PerSPecTIF (perspective, setting, phenomenon of interest, environment, comparison, timing, and findings) question formulation framework was used to define the context and basis for the synthesis (Supplementary Table S6).31 We undertook inductive thematic analysis of the qualitative results in included studies to identify usability-related barriers to, and facilitators of, adoption of mobile CDSSs in healthcare emergencies, using a 6-step method: (1) familiarization with the data, (2) generating initial codes, (3) searching for themes, (4) reviewing themes, (5) defining and naming themes, and (6) producing the report/manuscript.32 For the qualitative evidence synthesis, our research questions were: “What were the themes of usability-related barriers to, and facilitators of, adoption of mobile CDSSs in emergency settings, and what is the relationship between these themes and the method used to assess usability?” Qualitative data were extracted from individual studies and imported into NVivo software version 12.0 (QSR International, Melbourne, Australia).

RESULTS

Study inclusion

The systematic search identified 974 studies. Of 505 unique full-text studies, 67 appeared to meet the inclusion criteria on screening, and 23 were included in the analysis after full-text review (Figure 1). For 7 studies, the 2 reviewers disagreed after full-text review about whether the papers met the inclusion criteria. A third reviewer (EK) included 4 of these and excluded 3: 1 because it did not evaluate usability,33 1 because it did not test a mobile CDSS,34 and 1 because it did not concern a healthcare emergency.35 Overall, the key reasons for exclusion (n = 50) were that the paper did not evaluate usability (n = 16), did not report mobile clinical decision support (n = 22), did not involve a healthcare emergency (n = 8), did not assess clinicians (n = 3), or was not available in full text (n = 1) (Figure 1).

Characteristics of included studies

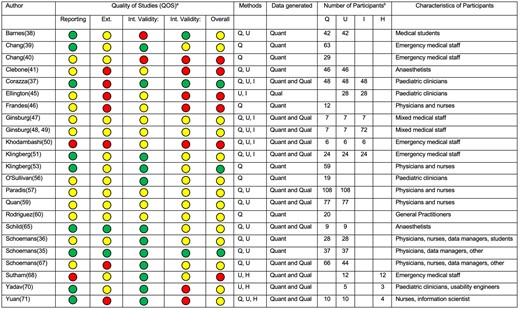

Twenty studies (87%) were observational, 1 was a randomized controlled trial,36 1 was a proof of concept experiment,37 and 1 was a pilot nonrandomized controlled study (Table 2; Supplementary Table S7).38 All included studies were published between 2003 and 2021. The majority of studies (n = 13; 57%) were published between 2017 and 2021, with 8 (35%) studies published between 2012 and 2016, and 2 (9%) published between 2002 and 2011. The geographical distribution of studies, by participant location, included 8 in Europe (35%), 6 in North America (26%), 5 in Africa (22%), 3 in Asia (13%), and 1 in South America (4%). The most common method used to assess usability was a questionnaire (n = 20; 87%), followed by user testing (n = 17; 74%), interviews (n = 6; 26%), and heuristic evaluations (n = 3; 13%). Combinations of these methodologies were also used, with a quarter (n = 6; 26%) of studies using 1 method, half (n = 11; 48%) using 2 methods, and a quarter (n = 6; 26%) using 3 methods. Quantitative methods were used in 10 (43%) studies, qualitative methods in 1 (4%) study, and both quantitative and qualitative methods were used in 12 (52%) studies.

| Year | First author and reference | Country (a) | Study design | Methods (b) | Validated methods | Participants | Conditions | Device | Name of system | Guideline on which CDSS is based | Stage(s) of CDSS (c) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2015 | Barnes39 | UK | Observational, comparative (app vs paper) | Q, U | NA | Medical students | Burns | Mobile (smartphone, tablet) | Mersey Burns App | Parkland formula for burns | Evaluation and implementation |
| 2003 | Chang40 | Taiwan | Observational, comparative (PDS vs terminal) | Q | TAM6 | Emergency medical staff | Multiple: allergy, hypertension, diabetes, trauma, nontrauma | Mobile (PDA) | NA | NA | Development and feasibility |
| 2004 | Chang41 | Taiwan | Observational | Q | TAM6 | Emergency medical staff | Multiple: mass gathering-related, including trauma and infectious disease | Mobile (PDA) | NA | NA | Feasibility |
| 2019 | Clebone42 | USA | Observational | Q, U | SUS43 | Anesthetists | Multiple: airway, nonairway | Mobile (smartphone) | Pedi Crisis 2.0 App | Society for Pediatric Anesthesia 26 Pediatric Crisis checklists | Development and feasibility |
| 2020 | Corazza38 | Italy | Pilot nonrandomized controlled | Q, U, I | UEQ,44 NASA-TLX45 | Pediatric clinicians | Pediatric cardiac arrest | Mobile (tablet) | PediARREST App | American Heart Association Pediatric Advanced Life Support 2015 | Development and feasibility |
| 2021 | Ellington46 | Uganda | Observational | U, I | NA | Pediatric clinicians | Pediatric acute lower respiratory illness | Mobile (smartphone) | ALRITE | WHO Integrated Management of Childhood Illnesses: Acute Lower Respiratory Illnesses guidelines | Development and feasibility |
| 2015 | Frandes47 | Romania | Observational | Q | NA | Physicians and nurses | Diabetic ketoacidosis (DKA) | Mobile (smartphone, tablet) | mDKA | Medical standards for diabetes care | Development and feasibility |
| 2015 | Ginsburg48 | Ghana | Observational | Q, U, I | SUS43 | Mixed medical staff | Childhood pneumonia | Mobile (tablet) | mPneumonia | WHO Integrated Management of Childhood Illnesses guidelines | Development and feasibility |
| 2016 | Ginsburg49,50 | Ghana | Observational | Q, U, I | SUS43 | Mixed medical staff | Childhood pneumonia | Mobile (tablet) | mPneumonia | WHO Integrated Management of Childhood Illnesses guidelines | Feasibility |
| 2017 | Khodambashi51 | Norway | Observational | Q, U, I | SUS43 | Emergency medical staff | Mental illness (suicidal or violent) | Mobile (smartphone, tablet) | NA | Norwegian laws related to forensic psychiatry | Development and feasibility |
| 2018 | Klingberg52 | South Africa | Observational | Q, U, I | Health-ITUES53 | Emergency medical staff | Burns | Mobile (smartphone) | Vula App | Burns size calculation and Parkland formula | Evaluation and implementation |
| 2020 | Klingberg54 | South Africa | Observational | Q | TAM,6 IDT,55 and TPB56 | Physicians and nurses | Burns | Mobile (smartphone) | Vula App | Burns size calculation and Parkland formula | Feasibility |
| 2014 | O'Sullivan57 | Canada | Observational | Q | NA | Pediatric clinicians | Asthma exacerbations | Mobile (tablet); Desktop (web app) | MET3-AE | Bayes prediction of asthma exacerbation severity within 2h of nursing triage | Development and feasibility |
| 2018 | Paradis58 | Canada | Observational | Q, U | TRI59 | Physicians and nurses | Multiple: knee, ankle, and neck injuries | Mobile (smartphone, tablet) | Ottawa Rules App | The Ottawa Rules | Feasibility and evaluation |
| 2020 | Quan60 | Canada | Observational | Q, U | TRI59 | Physicians and nurses | Multiple: knee, ankle, neck, and head injuries | Mobile (smartphone, tablet) | Ottawa Rules App 3.0.2 | The Ottawa Rules | Feasibility and evaluation |
| 2020 | Rodriguez61 | Colombia | Observational | Q | mERA,62 iSYScore index,63 MARS,64 and uMARS65 | General practitioners | Multiple: acute febrile syndromes | Mobile (smartphone) | FeverDx | Colombian Ministry of Health’s clinical practice guidelines for diagnosis and management of arboviruses | Development and feasibility |
| 2019 | Schild66 | Germany | Observational | Q, U | SUS43 | Anesthetists | Multiple: anesthetic emergencies | Mobile (tablet); Desktop (web app) | NA | German Cognitive Aid Working Group | Development and feasibility |
| 2016 | Schoemans37 | Belgium | Proof of Concept Experimental | Q, U | TAM6 and PSSUQ67 | Physicians, nurses, data managers, and students | Graft versus host disease (GVHD) | Desktop (web app) | eGVHD App | Acute (Glucksberg and IBMTR scores) and chronic (NIH criteria) GVHD | Development and feasibility |
| 2018 | Schoemans36 | Belgium | Randomized Controlled Trial | Q, U | TAM6 and PSSUQ67 | Physicians, data managers, other | Graft versus host disease (GVHD) | Mobile (smartphone, tablet); Desktop (web app) | eGVHD App | Acute (Glucksberg and IBMTR scores) and chronic (NIH criteria) GVHD | Evaluation |
| 2018 | Schoemans68 | France | Observational, comparative (app vs self-assessment) | Q, U | NA | Physicians, nurses, data managers, other | Graft versus host disease (GVHD) | Mobile (smartphone, tablet, laptop) | NA | Acute (Glucksberg and IBMTR scores) and chronic (NIH criteria) GVHD | Feasibility |
| 2020 | Sutham69 | Thailand | Observational, comparative (app vs handbook vs experienced) | U, H | Nielsen’s Heuristics70 | Emergency medical staff | Multiple: trauma, nontrauma | Mobile (smartphone) | Triagist App | National Institute for Emergency Medicine of Thailand Criteria-Based Dispatch | Development and feasibility |
| 2015 | Yadav71 | USA | Observational | U, H | Nielsen’s Heuristics70 | Pediatric clinicians, usability engineers | Pediatric head injuries | Desktop (web app) | NA | | Development and feasibility |
| 2013 | Yuan72 | USA | Observational | Q, U, H | NASA TLX,45 Nielsen’s Heuristics70 | Nurses, information scientist | Multiple: heart attack, pleurisy, reflux/indigestion, pneumothorax, myocardial infarction | Mobile (tablet) | NA | NA | Development and feasibility |

| Year . | First author and reference . | Countrya . | Study design . | Methodsb . | Validated methods . | Participants . | Conditions . | Device . | Name of system . | Guideline on which CDSS is based . | Stage(s) of CDSSc . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 2015 | Barnes39 | UK | Observational, comparative (app vs paper) | Q, U | NA | Medical students | Burns | Mobile (smartphone, tablet) | Mersey Burns App | Parkland formula for burns | Evaluation and implementation |

| 2003 | Chang40 | Taiwan | Observational, comparative (PDS vs terminal) | Q | TAM6 | Emergency medical staff | Multiple: allergy, hypertension, diabetes, trauma, nontrauma | Mobile (PDA) | NA | NA | Development and feasibility |

| 2004 | Chang41 | Taiwan | Observational | Q | TAM6 | Emergency medical staff | Multiple: mass gathering-related, including trauma and infectious disease | Mobile (PDA) | NA | NA | Feasibility |

| 2019 | Clebone42 | USA | Observational | Q, U | SUS43 | Anesthetists | Multiple: airway, nonairway | Mobile (smartphone) | Pedi Crisis 2.0 App | Society for Pediatric Anesthesia 26 Pediatric Crisis checklists | Development and feasibility |

| 2020 | Corazza38 | Italy | Pilot nonrandomized controlled | Q, U, I | UEQ,44 NASA-TLX45 | Pediatric clinicians | Pediatric cardiac arrest | Mobile (tablet) | PediARREST App | American Heart Association Pediatric Advanced Life Support 2015 | Development and feasibility |

| 2021 | Ellington46 | Uganda | Observational | U, I | NA | Pediatric clinicians | Pediatric acute lower respiratory Illness | Mobile (smartphone) | ALRITE | WHO Integrated Management of Childhood Illnesses—Acute Lower Respiratory Illnesses guidelines | Development and feasibility |

| 2015 | Frandes47 | Romania | Observational | Q | NA | Physicians and nurses | Diabetic ketoacidosis (DKA) | Mobile (smartphone, tablet) | mDKA | Medical standards for diabetes care | Development and feasibility |

| 2015 | Ginsburg48 | Ghana | Observational | Q, U, I | SUS43 | Mixed medical staff | Childhood pneumonia | Mobile (tablet) | mPneumonia | WHO Integrated Management of Childhood Illnesses guidelines | Development and feasibility |

| 2016 | Ginsburg49,50 | Ghana | Observational | Q, U, I | SUS43 | Mixed medical staff | Childhood pneumonia | Mobile (tablet) | mPneumonia | WHO Integrated Management of Childhood Illnesses guidelines | Feasibility |

| 2017 | Khodambashi51 | Norway | Observational | Q, U, I | SUS43 | Emergency medical staff | Mental illness (suicidal or violent) | Mobile (smartphone, tablet) | NA | Norwegian laws related to forensic psychiatry | Development and feasibility |

| 2018 | Klingberg52 | South Africa | Observational | Q, U, I | Health-ITUES53 | Emergency medical staff | Burns | Mobile (smartphone) | Vula App | Burns size calculation and Parkland formula | Evaluation and implementation |

| 2020 | Klingberg54 | South Africa | Observational | Q | TAM,6 IDT,55 and TPB56 | Physicians and nurses | Burns | Mobile (smartphone) | Vula App | Burns size calculation and Parkland formula | Feasibility |

| 2014 | O'Sullivan57 | Canada | Observational | Q | NA | Pediatric clinicians | Asthma exacerbations | Mobile (tablet); Desktop (web app) | MET3-AE | Bayes prediction of asthma exacerbation severity within 2h of nursing triage | Development and feasibility |

| 2018 | Paradis58 | Canada | Observational | Q, U | TRI59 | Physicians and nurses | Multiple: knee, ankle, and neck injuries | Mobile (smartphone, tablet) | Ottawa Rules App | The Ottawa Rules | Feasibility and evaluation |

| 2020 | Quan60 | Canada | Observational | Q, U | TRI59 | Physicians and nurses | Multiple: knee, ankle, neck, and head injuries | Mobile (smartphone, tablet) | Ottawa Rules App 3.0.2 | The Ottawa Rules | Feasibility and evaluation |

| 2020 | Rodriguez61 | Colombia | Observational | Q | mERA,62 iSYScore index,63,MARS,64 and uMARS65 | General practitioners | Multiple: acute febrile syndromes | Mobile (smartphone) | FeverDx | Colombian Ministry of Health’s clinical practice guidelines for diagnosis and management of arboviruses | Development and feasibility |

| 2019 | Schild66 | Germany | Observational | Q, U | SUS43 | Anesthetists | Multiple: anesthetic emergencies | Mobile (tablet); Desktop (web app) | NA | German Cognitive Aid Working Group | Development and feasibility |

| 2016 | Schoemans37 | Belgium | Proof of Concept Experimental | Q, U | TAM6 and PSSUQ67 | Physicians, nurses, data managers, and students | Graft versus host disease (GVHD) | Desktop (web app) | eGVHD App | Acute (Glucksberg and IBMTR scores) and chronic (NIH criteria) GVHD | Development and feasibility |

| 2018 | Schoemans36 | Belgium | Randomized Controlled Trial | Q, U | TAM6 and PSSUQ67 | Physicians, data managers, other | Graft versus host disease (GVHD) | Mobile (smartphone, tablet); Desktop (web app) | eGVHD App | Acute (Glucksberg and IBMTR scores) and chronic (NIH criteria) GVHD | Evaluation |

| 2018 | Schoemans68 | France | Observational, comparative (app vs self-assessment) | Q, U | NA | Physicians, nurses, data managers, other | Graft versus host disease (GVHD) | Mobile (smartphone, tablet, laptop) | NA | Acute (Glucksberg and IBMTR scores) and chronic (NIH criteria) GVHD | Feasibility |

| 2020 | Sutham69 | Thailand | Observational, comparative (app vs handbook vs experienced) | U, H | Nielsen’s Heuristics70 | Emergency medical staff | Multiple: trauma, nontrauma | Mobile (smartphone) | Triagist App | National Institute for Emergency Medicine of Thailand Criteria-Based Dispatch | Development and feasibility |

| 2015 | Yadav71 | USA | Observational | U, H | Nielsen’s Heuristics70 | Pediatric clinicians, usability engineers | Pediatric head injuries | Desktop (web app) | NA | | Development and feasibility |

| 2013 | Yuan72 | USA | Observational | Q, U, H | NASA TLX,45 Nielsen’s Heuristics70 | Nurses, information scientist | Multiple: heart attack, pleurisy, reflux/indigestion, pneumothorax, myocardial infarction | Mobile (tablet) | NA | NA | Development and feasibility |

ᵃCountry of study conduct.

ᵇQ, U, I, H are questionnaire, user-testing, interview, and heuristic evaluation studies, respectively.

ᶜStage(s) of CDSS (Development, Feasibility, Evaluation or Implementation) are based on the MRC/NIHR framework for developing and evaluating complex interventions.30

NA: not applicable; TAM: technology acceptance model; SUS: system usability scale; UEQ: user experience questionnaire; NASA TLX: National Aeronautics and Space Administration task load index; Health-ITUES: health information technology usability evaluation scale; IDT: innovation diffusion theory; TPB: theory of planned behavior; mERA: mobile health evidence reporting and assessment checklist; MARS: mobile application rating scale; uMARS: user version of the mobile application rating scale; PSSUQ: poststudy system usability questionnaire; TRI: technology readiness index.

Studies used a number of validated tools to assess usability: the system usability scale (SUS)43 and the technology acceptance model (TAM)6 were each used in 5 (22%) studies, Nielsen’s Heuristics70 in 3 (13%), the NASA Task Load Index (TLX) in 2 (9%), the technology readiness index (TRI) in 2 (9%), the poststudy system usability questionnaire (PSSUQ) in 2 (9%), and 8 other validated methods in 1 study each (Table 2). Five (22%) studies used no validated method. All studies included clinician participants; in addition, 3 studies included data managers,36,37,68 1 included usability engineers,71 and 1 included information scientists.72
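The tool frequencies above can be cross-checked against the "Validated methods" column of Table 2. The sketch below is illustrative only: the study keys and short tool labels are our own shorthand for the table rows, and each percentage is taken over all 23 included studies, rounded to the nearest whole number.

```python
from collections import Counter

# Validated usability instruments per included study, transcribed from the
# "Validated methods" column of Table 2 (an empty list means "NA").
VALIDATED_METHODS = {
    "Barnes39": [],
    "Chang40": ["TAM"],
    "Chang41": ["TAM"],
    "Clebone42": ["SUS"],
    "Corazza38": ["UEQ", "NASA TLX"],
    "Ellington46": [],
    "Frandes47": [],
    "Ginsburg48": ["SUS"],
    "Ginsburg49,50": ["SUS"],
    "Khodambashi51": ["SUS"],
    "Klingberg52": ["Health-ITUES"],
    "Klingberg54": ["TAM", "IDT", "TPB"],
    "O'Sullivan57": [],
    "Paradis58": ["TRI"],
    "Quan60": ["TRI"],
    "Rodriguez61": ["mERA", "iSYScore index", "MARS", "uMARS"],
    "Schild66": ["SUS"],
    "Schoemans37": ["TAM", "PSSUQ"],
    "Schoemans36": ["TAM", "PSSUQ"],
    "Schoemans68": [],
    "Sutham69": ["Nielsen's Heuristics"],
    "Yadav71": ["Nielsen's Heuristics"],
    "Yuan72": ["NASA TLX", "Nielsen's Heuristics"],
}


def tool_frequencies(methods):
    """Count the studies using each tool, as (n, % of all studies)."""
    n_studies = len(methods)
    # set() guards against counting a tool twice within one study.
    counts = Counter(tool for tools in methods.values() for tool in set(tools))
    return {tool: (k, round(100 * k / n_studies)) for tool, k in counts.most_common()}


freq = tool_frequencies(VALIDATED_METHODS)
no_tool = sum(1 for tools in VALIDATED_METHODS.values() if not tools)
single_use = [tool for tool, (k, _) in freq.items() if k == 1]

for tool, (k, pct) in freq.items():
    print(f"{tool}: {k} ({pct}%)")
print(f"No validated method: {no_tool} ({round(100 * no_tool / len(VALIDATED_METHODS))}%)")
print(f"Tools used in a single study: {len(single_use)}")
```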

Characteristics of mobile CDSSs in healthcare emergencies

The targeted emergency conditions were multiple conditions in 9 (39%) studies,40–42,58,60,61,66,69,72 burns in 3 (13%),39,52,54 graft versus host disease in 3 (13%),36,37,68 pediatric respiratory illness in 3 (13%),46,48,50 and pediatric cardiac arrest,38 diabetic ketoacidosis,47 mental illness (suicidal or violent),51 asthma,57 and pediatric head injuries71 in 1 study each (Supplementary Table S7). Nine studies evaluated mobile CDSSs designed for multiple device types,36,39,47,51,57,58,60,66,68 6 for smartphones,42,46,52,54,61,69 4 for tablets,38,48,50,72 2 for desktop web apps,37,71 and 2 for personal digital assistants.40,41 Nearly all CDSSs (n = 20; 87%) were based on a guideline. Most were in the development (n = 14; 61%) or feasibility (n = 20; 87%) stage, while a minority were in the evaluation (n = 5; 22%) or implementation (n = 2; 9%) stage; because a single study could span more than one stage, these proportions overlap. The majority (n = 18; 78%) of CDSSs required manual checkbox or radio-button input, and a minority (n = 2; 9%) incorporated some form of automatic input (Supplementary Table S7). Nearly all (n = 22; 96%) had text output, nearly half (n = 10; 43%) had numerical input, and few had image (n = 2; 9%) or video (n = 1; 4%) input. Most CDSSs (n = 18; 78%) provided a clinical recommendation, a quarter (n = 6; 26%) a specific treatment, and a quarter (n = 6; 26%) a score, risk level, or likelihood of diagnosis (Supplementary Tables S7 and S8). Over half (n = 13; 57%) of the studies described the number of CDSS inputs; among these, the median was 50 inputs (interquartile range [IQR] 11–78) (Supplementary Table S8). Twenty (87%) studies described or illustrated the number of CDSS outputs; among these, the median was 2 outputs (IQR 1–3) (Supplementary Table S8).
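The stage percentages above add up to more than 100% because a study labelled, for example, "Development and feasibility" in Table 2 counts towards both stages. The sketch below reproduces the stage counts from the Table 2 labels; the grouping of labels and the function name `stage_counts` are our own illustration, not part of the authors' analysis.

```python
from collections import Counter

# "Stage(s) of CDSS" labels from Table 2 and the number of studies carrying each.
# A label naming two stages contributes to both of them.
STAGE_LABELS = Counter({
    "Development and feasibility": 14,
    "Feasibility": 4,
    "Feasibility and evaluation": 2,
    "Evaluation and implementation": 2,
    "Evaluation": 1,
})


def stage_counts(labels):
    """Return {stage: (n_studies, percent_of_all_studies)} for overlapping stages."""
    n_studies = sum(labels.values())
    per_stage = Counter()
    for label, k in labels.items():
        for stage in label.lower().split(" and "):
            per_stage[stage] += k
    return {stage: (k, round(100 * k / n_studies)) for stage, k in per_stage.items()}


stages = stage_counts(STAGE_LABELS)
for stage in ("development", "feasibility", "evaluation", "implementation"):
    k, pct = stages[stage]
    print(f"{stage}: n = {k}; {pct}%")

# The stage percentages overlap, so their total exceeds 100.
print(sum(pct for _, pct in stages.values()))
```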

Quality of studies

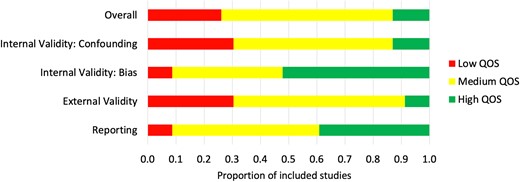

The modified Downs and Black (D&B) quality assessment showed that, overall, only 3 studies (13%) had a high quality of studies (QOS) score, 14 (61%) a medium score, and 6 (26%) a low score (Figure 2). Studies that employed more usability evaluation methods did not differ substantially in risk of bias (Figure 3). Risk of bias was, however, lower overall in studies that used mixed methods (both qualitative and quantitative) than in those that used only quantitative or only qualitative methods of usability evaluation (Figure 3). The median number of participants recruited was 29 (IQR 12–51) for questionnaire-based studies, 28 (IQR 9–44) for user trials, 26 (IQR 11–43) for interview-based studies, and 4 (IQR 4–8) for heuristic evaluations.

Quality of studies (QOS) summary: the proportion of included studies which scored low, medium or high, overall and for each QOS subcategory.

Quality of studies (QOS) summary and individual study characteristics. ᵃGreen: high QOS; yellow: moderate QOS; red: low QOS. ᵇQ, U, I, H are questionnaire, user-testing, interview, and heuristic evaluation studies, respectively. Int: internal; Ext: external; Quant: quantitative; Qual: qualitative.

Definition of usability in included studies

Of the 23 included studies, 13 (57%) did not define usability. Of the 10 that did, 3 (30%) used the ISO 9241-11 definition,9 in which usability is the “extent to which a system, product or service can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use”.51,52,57 Two (20%) defined usability as “the design factors that affect the user experience of operating the application’s device and navigating the application for its intended purpose”.46,50 Other definitions of usability included:

- Differentiating “content usability” (data completeness and reassurance of medical needs) from “efficiency improvement” (quicker and easier evaluation) and “overall usefulness of systems”41
- “ease of use, confidence in input, preference in an emergency setting, speed, accuracy, ease of calculation, and ease of shading”39
- “efficiency, perspicuity, dependability”38
- “functionality, convenience, triage accuracy, and accessibility.”69

Usability evaluation metrics used

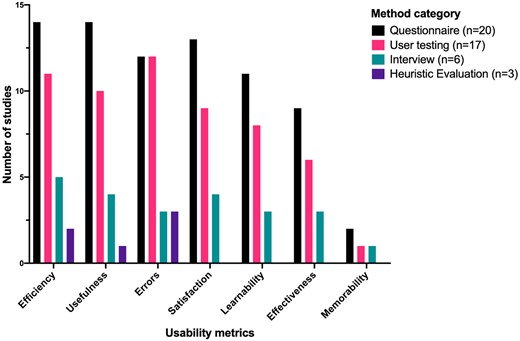

Although not all studies defined usability explicitly, all reported how it was evaluated. The most frequently evaluated metrics were Efficiency and Usefulness, each measured in 15 (65%) studies, followed by User Errors in 14 (61%), Satisfaction in 13 (57%), Learnability in 11 (48%), Effectiveness in 9 (39%), and Memorability in 2 (9%). The frequency of each metric was similar across studies using questionnaire, user testing, and interview methods, whereas studies using heuristic evaluation measured only Usefulness, Efficiency, and User Errors (Figure 4).

Usability metrics evaluated in the included studies, presented as the number of times each metric was used in studies employing each method, and ordered from most commonly used (left) to least commonly used (right).

Description of quantitative results

Descriptive quantitative results from the included studies are summarized in Supplementary Table S9. All 5 studies that used the SUS reported acceptable usability scores (>67). The 5 studies that used TAM reported mixed results: 1 found worse usability than with the existing system,40 and another found that usability differed by user group (physicians vs nurses).41 Both studies that used the NASA TLX to measure mental effort found it acceptably low, and 1 reported that perceived workload was comparable with and without the app.38 Of the 2 studies that employed the TRI, 1 found no difference by demographic characteristics and 1 found that younger users were more ready for the technology.60 Of the 3 studies that employed Nielsen’s Heuristics, 2 identified usability issues in each of the 10 heuristic categories.71,72
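SUS results are reported on a 0–100 scale. As a hedged sketch, assuming the standard SUS scoring rule (ten items rated 1 to 5; odd, positively worded items contribute the rating minus 1; even, negatively worded items contribute 5 minus the rating; the 0–40 sum is multiplied by 2.5), one completed questionnaire could be scored as below. The function names and the example respondent are hypothetical; the >67 cut-off is the one quoted in this review, not a universal standard.

```python
ACCEPTABLE_SUS = 67  # cut-off quoted in this review (scores > 67 deemed acceptable)


def sus_score(responses):
    """Score one System Usability Scale questionnaire on a 0-100 scale.

    `responses` holds the ten item ratings in questionnaire order, each from
    1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs ten ratings between 1 and 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5  # rescale the 0-40 raw sum to 0-100


def is_acceptable(score, threshold=ACCEPTABLE_SUS):
    """Apply the review's acceptability cut-off (strictly greater than)."""
    return score > threshold


# Hypothetical respondent: agrees (4) with every odd item, disagrees (2) with every even item.
example = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example), is_acceptable(sus_score(example)))  # 75.0 True
```

Neutral answers (all 3s) score 50, so the cut-off sits above the scale midpoint.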

Qualitative results synthesis

Themes of usability-related barriers to adoption included external issues, hardware issues, input problems, output problems, poor software navigation, poor user interface design, user barrier, and user emotion or experience (Table 3). Interview and heuristic evaluation methods generated a higher proportion of codes (of barriers and facilitators to adoption) than questionnaire or user testing methods (Table 3). Themes of usability-related facilitators of adoption included automaticity, user interface design, efficiency, feasibility, learnability, patient benefit, trustworthiness, ease of use, usefulness, and user experience (Table 4). Themes (of barriers and facilitators to adoption) were identified more completely when included studies used interviews and heuristic evaluation than when they used user testing or questionnaires (Table 4).

Qualitative evidence synthesis of included studies (n = 13/23): usability-related themes and codes of barriers to adoption, by usability method category

| Themes | Q | U | I | H | Codes | Q | U | I | H |
|---|---|---|---|---|---|---|---|---|---|
| External issues | 0 | 0 | 3 | 1 | External issues | 0 | 0 | 3 | 1 |
| Hardware issues | 0 | 3 | 5 | 1 | Hardware issues | 0 | 3 | 5 | 1 |
| Input problems | 4 | 6 | 37 | 24 | Difficult tasks | 0 | 0 | 2 | 2 |
| | | | | | Inaccurate results | 0 | 1 | 3 | 0 |
| | | | | | Instructions unclear | 1 | 1 | 11 | 9 |
| | | | | | Mismatch with reality | 0 | 1 | 2 | 2 |
| | | | | | Not automated | 1 | 0 | 0 | 1 |
| | | | | | Not efficient | 1 | 3 | 7 | 1 |
| | | | | | Not enough information | 1 | 0 | 2 | 4 |
| | | | | | Not incorporating standard practices | 0 | 0 | 1 | 2 |
| | | | | | Not intuitive | 0 | 0 | 9 | 3 |
| Output problems | 0 | 1 | 10 | 10 | Interrupting workflow | 0 | 1 | 2 | 1 |
| | | | | | Minimizes group situational awareness | 0 | 0 | 0 | 1 |
| | | | | | Not clinically useful | 0 | 0 | 2 | 3 |
| | | | | | Not updating user | 0 | 0 | 2 | 5 |
| | | | | | Recommendations unclear | 0 | 0 | 4 | 0 |
| Poor software navigation | 1 | 7 | 8 | 3 | Poor software navigation | 1 | 7 | 8 | 3 |
| Poor user interface design | 4 | 5 | 16 | 15 | Poor user interface design | 3 | 5 | 14 | 11 |
| | | | | | Information overload | 1 | 0 | 1 | 1 |
| | | | | | Poor formatting | 0 | 0 | 1 | 3 |
| User barrier | 2 | 10 | 29 | 6 | Impact on other patients | 0 | 0 | 1 | 0 |
| | | | | | Lack of familiarity | 0 | 4 | 8 | 1 |
| | | | | | Medico-legal concern | 0 | 1 | 0 | 0 |
| | | | | | Need for training | 0 | 1 | 5 | 0 |
| | | | | | Not used as intended | 1 | 0 | 1 | 1 |
| | | | | | Patient not willing | 0 | 0 | 3 | 0 |
| | | | | | User mistakes | 1 | 4 | 11 | 4 |
| User emotion or experience | 0 | 0 | 14 | 3 | Fear to use | 0 | 0 | 2 | 0 |
| | | | | | Frustration when using | 0 | 0 | 3 | 1 |
| | | | | | Hesitancy towards CDSS | 0 | 0 | 1 | 1 |
| | | | | | Not understanding instructions | 0 | 0 | 5 | 1 |
| | | | | | Purpose needs explaining | 0 | 0 | 2 | 0 |
| | | | | | Uncomfortable when using | 0 | 0 | 1 | 0 |
| Total themes identified | 4 | 6 | 8 | 8 | Total codes identified | 11 | 32 | 122 | 63 |
| Themes missed | 4 | 2 | 0 | 0 | Codes missed | 24 | 21 | 3 | 9 |
| Proportion identified (n = 8) | 50% | 75% | 100% | 100% | Proportion identified (n = 33) | 27% | 36% | 91% | 73% |

Q: questionnaire; U: user testing; I: interview; H: heuristic evaluation studies.
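The summary rows of the barriers table can be reproduced from its code-level counts. In this sketch, the data structure and the function name `coverage` are our own, and we assume a theme or code counts as "identified" by a method when it received at least one mention from that method. Under that assumption, "Total codes identified" is the sum of mentions, while "Codes missed" and "Proportion identified" count distinct codes.

```python
# Code-level counts from the barriers table, grouped by theme.
# Each tuple is (Q, U, I, H): questionnaire, user testing, interview, heuristic evaluation.
BARRIERS = {
    "External issues": {"External issues": (0, 0, 3, 1)},
    "Hardware issues": {"Hardware issues": (0, 3, 5, 1)},
    "Input problems": {
        "Difficult tasks": (0, 0, 2, 2),
        "Inaccurate results": (0, 1, 3, 0),
        "Instructions unclear": (1, 1, 11, 9),
        "Mismatch with reality": (0, 1, 2, 2),
        "Not automated": (1, 0, 0, 1),
        "Not efficient": (1, 3, 7, 1),
        "Not enough information": (1, 0, 2, 4),
        "Not incorporating standard practices": (0, 0, 1, 2),
        "Not intuitive": (0, 0, 9, 3),
    },
    "Output problems": {
        "Interrupting workflow": (0, 1, 2, 1),
        "Minimizes group situational awareness": (0, 0, 0, 1),
        "Not clinically useful": (0, 0, 2, 3),
        "Not updating user": (0, 0, 2, 5),
        "Recommendations unclear": (0, 0, 4, 0),
    },
    "Poor software navigation": {"Poor software navigation": (1, 7, 8, 3)},
    "Poor user interface design": {
        "Poor user interface design": (3, 5, 14, 11),
        "Information overload": (1, 0, 1, 1),
        "Poor formatting": (0, 0, 1, 3),
    },
    "User barrier": {
        "Impact on other patients": (0, 0, 1, 0),
        "Lack of familiarity": (0, 4, 8, 1),
        "Medico-legal concern": (0, 1, 0, 0),
        "Need for training": (0, 1, 5, 0),
        "Not used as intended": (1, 0, 1, 1),
        "Patient not willing": (0, 0, 3, 0),
        "User mistakes": (1, 4, 11, 4),
    },
    "User emotion or experience": {
        "Fear to use": (0, 0, 2, 0),
        "Frustration when using": (0, 0, 3, 1),
        "Hesitancy towards CDSS": (0, 0, 1, 1),
        "Not understanding instructions": (0, 0, 5, 1),
        "Purpose needs explaining": (0, 0, 2, 0),
        "Uncomfortable when using": (0, 0, 1, 0),
    },
}
METHODS = ("Q", "U", "I", "H")


def coverage(table):
    """Per method: (themes hit, distinct codes hit, total mentions, % themes, % codes)."""
    n_themes = len(table)
    n_codes = sum(len(codes) for codes in table.values())
    result = {}
    for m, method in enumerate(METHODS):
        codes_hit = sum(1 for codes in table.values() for c in codes.values() if c[m] > 0)
        themes_hit = sum(1 for codes in table.values() if any(c[m] > 0 for c in codes.values()))
        mentions = sum(c[m] for codes in table.values() for c in codes.values())
        result[method] = (themes_hit, codes_hit, mentions,
                          round(100 * themes_hit / n_themes), round(100 * codes_hit / n_codes))
    return result


for method, row in coverage(BARRIERS).items():
    print(method, row)
```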

Qualitative evidence synthesis of included studies (n = 13/23): usability-related themes and codes of facilitators of adoption, by usability method category

| Themes | Q | U | I | H | Codes | Q | U | I | H |
|---|---|---|---|---|---|---|---|---|---|
| Automaticity | 0 | 0 | 6 | 5 | Automatic functioning | 0 | 0 | 6 | 5 |
| User interface design | 5 | 2 | 13 | 7 | Ability to correct mistake error | 0 | 0 | 0 | 2 |
| | | | | | Clear design | 1 | 0 | 1 | 2 |
| | | | | | Few problems | 2 | 1 | 1 | 1 |
| | | | | | Good design | 2 | 1 | 3 | 1 |
| | | | | | Good internal (app) flow | 0 | 0 | 1 | 0 |
| | | | | | Simple design | 0 | 0 | 4 | 0 |
| | | | | | Familiarity with technology | 0 | 0 | 2 | 1 |
| | | | | | Size and shape of device | 0 | 0 | 1 | 0 |
| Efficiency | 1 | 2 | 7 | 1 | Time efficiency | 1 | 2 | 7 | 1 |
| Feasibility | 0 | 0 | 4 | 3 | Feasible to implement | 0 | 0 | 2 | 0 |
| | | | | | Minimally disruptive to work flow | 0 | 0 | 2 | 3 |
| Learnability | 0 | 2 | 2 | 0 | Learnability and intuitiveness | 0 | 2 | 2 | 0 |
| Patient benefit | 0 | 0 | 2 | 0 | Patient benefit including noninvasive | 0 | 0 | 2 | 0 |
| Trustworthiness | 1 | 1 | 15 | 5 | Improves safety | 0 | 0 | 3 | 2 |
| | | | | | Accuracy | 0 | 1 | 7 | 1 |
| | | | | | Improves trust | 0 | 0 | 2 | 2 |
| | | | | | Multiple types of people approve | 0 | 0 | 1 | 0 |
| | | | | | Thoroughness systematic | 1 | 0 | 2 | 0 |
| Ease of use | 5 | 0 | 7 | 0 | Comforting | 1 | 0 | 0 | 0 |
| | | | | | Convenience | 1 | 0 | 0 | 0 |
| | | | | | Easy to use | 3 | 0 | 7 | 0 |
| Usefulness | 3 | 0 | 28 | 8 | Adds knowledge | 0 | 0 | 2 | 1 |
| | | | | | Help diagnosis | 0 | 0 | 7 | 2 |
| | | | | | Helpful for communication | 0 | 0 | 2 | 1 |
| | | | | | Helpful for inexperienced clinicians | 2 | 0 | 0 | 1 |
| | | | | | Helpful for work | 0 | 0 | 6 | 0 |
| | | | | | Important information prominent to user | 0 | 0 | 0 | 3 |
| | | | | | Improves assessment | 0 | 0 | 3 | 0 |
| | | | | | Improves patient management | 0 | 0 | 2 | 0 |
| | | | | | Leads to increased demand for services | 0 | 0 | 1 | 0 |
| | | | | | Reduces paperwork | 0 | 0 | 1 | 0 |
| | | | | | Useful | 1 | 0 | 3 | 0 |
| | | | | | Useful in other contexts | 0 | 0 | 1 | 0 |
| User experience | 0 | 0 | 13 | 0 | Novelty of technology | 0 | 0 | 3 | 0 |
| | | | | | Practice and instruction | 0 | 0 | 4 | 0 |
| | | | | | Good user experience | 0 | 0 | 1 | 0 |
| | | | | | Preference compared to current method | 0 | 0 | 2 | 0 |
| | | | | | Word of mouth positive | 0 | 0 | 1 | 0 |
| | | | | | Would use again | 0 | 0 | 2 | 0 |
| Total themes identified | 5 | 4 | 10 | 6 | Total codes identified | 15 | 7 | 97 | 29 |
| Themes missed | 5 | 6 | 0 | 4 | Codes missed | 30 | 35 | 5 | 24 |
| Proportion identified (n = 10) | 50% | 40% | 100% | 60% | Proportion identified (n = 40) | 25% | 13% | 88% | 40% |

Q: questionnaire; U: user testing; I: interview; H: heuristic evaluation studies.
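The facilitator totals can be derived directly from the theme rows, assuming (as the reported totals imply) that a theme counts as identified by a method when its count for that method is non-zero, and that each theme count is the sum of its code counts. The table lists ten facilitator themes, and the reported theme percentages (50%, 40%, 100%, 60%) correspond to a denominator of 10. The minimal Python sketch below recomputes them from the theme-level counts, checks one theme roll-up, and recomputes the code-level percentages from the 40 codes. The dictionary layout and function names are hypothetical.

```python
# Theme-level counts per method (Q, U, I, H), copied from the facilitator table.
METHODS = ("Q", "U", "I", "H")
THEMES = {
    "Automaticity": (0, 0, 6, 5),
    "User interface design": (5, 2, 13, 7),
    "Efficiency": (1, 2, 7, 1),
    "Feasibility": (0, 0, 4, 3),
    "Learnability": (0, 2, 2, 0),
    "Patient benefit": (0, 0, 2, 0),
    "Trustworthiness": (1, 1, 15, 5),
    "Ease of use": (5, 0, 7, 0),
    "Usefulness": (3, 0, 28, 8),
    "User experience": (0, 0, 13, 0),
}


def pct_half_up(part: int, whole: int) -> int:
    """Percentage rounded half up using integers, so 12.5 becomes 13."""
    return (200 * part + whole) // (2 * whole)


def theme_coverage(rows: dict) -> dict:
    """Per method: (themes with a non-zero count, themes missed, percent identified)."""
    n = len(rows)
    out = {}
    for i, method in enumerate(METHODS):
        found = sum(1 for counts in rows.values() if counts[i] > 0)
        out[method] = (found, n - found, pct_half_up(found, n))
    return out


# Roll-up check: the Trustworthiness total equals the column sums of its five codes.
trust_codes = [(0, 0, 3, 2), (0, 1, 7, 1), (0, 0, 2, 2), (0, 0, 1, 0), (1, 0, 2, 0)]
assert tuple(sum(col) for col in zip(*trust_codes)) == THEMES["Trustworthiness"]

coverage = theme_coverage(THEMES)
print(coverage)
# {'Q': (5, 5, 50), 'U': (4, 6, 40), 'I': (10, 0, 100), 'H': (6, 4, 60)}

# Code level: 40 codes in total; "Codes missed" per method is 30, 35, 5, 24.
code_pct = {m: pct_half_up(40 - x, 40) for m, x in zip(METHODS, (30, 35, 5, 24))}
print(code_pct)  # {'Q': 25, 'U': 13, 'I': 88, 'H': 40}
```

Python's built-in `round()` rounds half to even, so `round(12.5)` gives 12; the half-up helper is what reproduces the reported 13% for user testing (5 of 40 codes).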

DISCUSSION

The standardized framework for defining usability (ISO) was established in 1998 and updated in 2018 (ISO 9241-11:2018).9 Despite this, most included papers used a definition of usability that deviated from this standard. Importantly, the standard does not prescribe specific methods for the design, development, or evaluation of usability. Nevertheless, differing definitions of usability likely contributed to the central finding of this systematic review: a wide range of metrics and methods is used to assess the usability of mobile CDSSs. Researchers favored evaluation metrics such as efficiency, user errors, usefulness, and satisfaction over measures such as effectiveness, learnability, and memorability. Qualitative evidence synthesis with thematic analysis identified more codes and themes from studies using interviews and heuristic evaluation than from studies using user testing or questionnaires to assess the usability of CDSSs. Quantitative results were not synthesized because the included studies used many different methods, both validated and nonvalidated, to measure usability quantitatively.

Implications

There are 5 main implications of this study. Firstly, the study reveals a plethora of evaluation approaches, which makes comparison of usability metrics between different CDSSs inherently difficult and could cause confusion and misunderstanding when assessing the value of these tools to practitioners, patients, and health systems. The lack of consistency in evaluating the usability of CDSSs is a material problem for the field. In particular, the quantitative approaches used by the included studies were so diverse that no meaningful data synthesis was possible. A standard approach to the quantitative analysis of CDSS usability is urgently needed. Multiple validated methodologies are in current use.73 The best solution likely combines or amalgamates commonly used methodologies, favoring those with few items and high reliability.73