
Po-Yin Yen, Suzanne Bakken, Review of health information technology usability study methodologies, Journal of the American Medical Informatics Association, Volume 19, Issue 3, May 2012, Pages 413–422, https://doi.org/10.1136/amiajnl-2010-000020


Abstract

Usability factors are a major obstacle to health information technology (IT) adoption. The purpose of this paper is to review and categorize health IT usability study methods and to provide practical guidance on health IT usability evaluation. A total of 2025 references that evaluated health IT used by clinicians and were published from 2003 to 2009 were initially retrieved from the MEDLINE database. Titles and abstracts were first reviewed for inclusion. Full-text articles were then examined to identify the final eligible studies. 629 studies were categorized into the five stages of an integrated usability specification and evaluation framework that was based on a usability model and the system development life cycle (SDLC)-associated stages of evaluation. Theoretical and methodological aspects of 319 studies were extracted in greater detail; studies that focused on system validation (SDLC stage 2) were not assessed further. The number of studies by stage was: stage 1, task-based or user–task interaction, n=42; stage 2, system–task interaction, n=310; stage 3, user–task–system interaction, n=69; stage 4, user–task–system–environment interaction, n=54; and stage 5, user–task–system–environment interaction in routine use, n=199. The studies applied a variety of quantitative and qualitative approaches. Methodological issues included lack of a theoretical framework/model, lack of details regarding qualitative study approaches, single evaluation focus, environmental factors not evaluated in the early stages, and guideline adherence as the primary outcome for decision support system evaluations. Based on the findings, a three-level stratified view of health IT usability evaluation is proposed and methodological guidance is offered based upon the type of interaction that is of primary interest in the evaluation.

A number of health information technologies (IT) assist clinicians in providing efficient, quality care. However, just as health IT can offer potential benefits, it can also interrupt workflow, cause delays, and introduce errors.1–3 Health IT evaluation is difficult and complex because it is often intended to serve multiple functions and is conducted from the perspective of a variety of disciplines.4 Lack of attention to health IT evaluation may result in an inability to achieve system efficiency, effectiveness, and satisfaction.5 Consequences may include frustrated users, decreased efficiency coupled with increased cost, disruptions in workflow, and increases in healthcare errors.6

To ensure the best utilization of health IT, it is essential to be attentive to health IT usability, keeping in mind its intended users (eg, physicians, nurses, or pharmacists), task (eg, medication management, free-text data entry, or patient record search), and environment (eg, operating room, ward, or emergency room). When clinicians experience problems with health IT, one might wonder if the system was designed to be ‘usable’ for clinicians.

Many health IT usability studies have been conducted to explore usability requirements, discover usability problems, and design solutions. However, evaluation faces three challenges: (1) the complexity of the evaluation object, because evaluation usually involves not only hardware but also the information process in a given environment; (2) the complexity of an evaluation project, because evaluation is usually based on various research questions from a sociological, organizational, technical, or clinical point of view; and (3) the motivation for evaluation, because evaluation can only be conducted with sufficient funds and participants.7 Various experts have conducted reviews that identified knowledge gaps and subsequently suggested possible solutions. Ammenwerth et al7 summarized general recommendations for IT evaluation based on the three challenges listed in this section. Kushniruk and Patel8 provided a methodological review of cognitive and usability engineering methods. Ammenwerth and de Keizer9 established an inventory to categorize IT evaluation studies conducted from 1982 to 2002. Rahimi and Vimarlund10 reviewed general methods used to evaluate health IT. In their classic textbook, Friedman and Wyatt11 created a categorization of study designs by primary purpose and provided an overview of general evaluation methods for biomedical informatics research. There is a need to update and build upon the valuable knowledge provided by these earlier reviews and to more explicitly consider user–task–system–environment interaction.12,13 Therefore, the purposes of this paper are to review and categorize commonly used health IT usability study methods using an integrated usability specification and evaluation framework and to provide practical guidance on health IT usability evaluation. The review includes studies published from 2003 to 2009.
The practical guidance aims to assist researchers and those who develop and implement systems to apply theoretical frameworks and usability evaluation approaches based on evaluation goals (eg, user–task interaction vs user–task–system–environment interaction) and the system development life cycle (SDLC) stages. This has not been done in previous reviews or studies.

Background

The definition of usability

The concept of usability was defined in the field of human–computer interaction (HCI) as the relationship between humans and computers. The International Organization for Standardization (ISO) proposed two definitions of usability in ISO 9241 and ISO 9126. ISO 9241 defines usability as ‘the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use’.5 In ISO 9126, usability compliance is one of five product quality categories, in addition to understandability, learnability, operability, and attractiveness.14 Consistent with authors who contend that usability depends on the interaction between user and task in a defined environment,12,15 ISO 9126 defines usability as ‘the capability of the software product to be understood, learned, used and attractive to the user, when used under specified conditions’.14 ‘Quality in use’ is defined as ‘the capability of the software product to enable specified users to achieve specified goals with effectiveness, productivity, safety, and satisfaction in specified contexts of use’.14 In this paper, we use the broader definition of usability, that is, quality in use. The usability of a technology is determined not only by its user–computer interactions, but also by the degree to which it can be successfully integrated to perform tasks in the intended work environment. Therefore, usability is evaluated through the interaction of user, system, and task in a specified setting.12,13 The sociotechnical perspective also indicates that the technical features of health IT interact with the social features of a healthcare work environment.16,17 The meaning of usability should therefore be composed of four major components: user, tool, task, and environment.12,13

It is believed that usability depends on the interaction of users performing tasks through a tool in a specified environment. As a result, any change to the components alters the entire interaction, and therefore influences the usability of the tool. For example, although helpful for medication management,18 barcode systems do not support free text to allow the entry of rich clinical data (change in task). In addition, speech recognition systems work well when vocabularies are limited and dictation tasks are performed in isolated, dedicated workspaces, such as radiology or pathology,19 but are much less suitable in noisy public spaces, where performance is poor and the confidentiality of patient health information is threatened (change in environment). Tablet personal computers are generally accepted by physicians; however, their weight and fragility reduce acceptability by nurses (change in user).20,21

System development life cycle

Usability can be evaluated during different stages of product development.22 Iterative usability evaluation during the development stages makes the product more specific to users' needs.23 Stead et al24 first proposed a framework that linked stages of the SDLC to levels of evaluation for medical informatics in 1994. Kaufman and colleagues6 further illustrated its use as an evaluation framework for health information system design, development, and implementation. A comparison between the five stages of the Stead framework and Friedman and Wyatt's nine generic study types11 is shown in table 1; both point out the importance of iterative evaluation to continuously assess and refine system design for ultimate system usability.

Table 1

| Stead SDLC stage | Friedman and Wyatt study type |
|---|---|
| A. Specification | 1. Needs assessment |
| B. Component development | 2. Design validation |
| | 3. Structure validation |
| C. Combination of components into a system | 4. Usability test |
| | 5. Laboratory function study |
| | 7. Laboratory user effect study |
| D. Integration of system into environment | 6. Field function study |
| | 8. Field user effect study |
| E. Routine use | 9. Problem impact study |

SDLC, system development life cycle.

An integrated usability specification and evaluation framework

The SDLC indicates ‘when’ an evaluation occurs, while the four components of usability (user, tool, task, and environment) indicate ‘what’ to evaluate. Furthermore, ‘when to evaluate what’ answers the integrative question of evaluation timeline and focus. Therefore, we proposed an integrated usability specification and evaluation framework to combine the usability model of Bennett12 and Shackel13 and the SDLC into a comprehensive evaluation framework (table 2).

Table 2

| SDLC stage | Evaluation type | Evaluation goal |
|---|---|---|

The first column indicates system development life cycle (SDLC) stages.

The second column, evaluation type, was added based on Bennett and Shackel's usability model.

Each stage has potential evaluation types that indicate component (user, task, system and environment) interaction in Bennett and Shackel's usability model, such as user–task and system–user–task.

In the last column, evaluation goals represent the expectations for each evaluation type.

The first column shows the five SDLC stages. In stage 1, the evaluation type starts from the simplest level, ‘type 0: task’, which aims to understand the task itself, and another level, ‘type 1: user–task’ interaction, to discover essential requirements for system design, such as information flow, system functionality, user interface, etc. In stages 2 and 3, the evaluation examines ‘type 2: system–task’ interaction, which is focused on system validation and performance, and also ‘type 3: user–task–system’ interaction to assess simple HCI performance in the laboratory setting. The evaluation becomes more complicated in stages 4 and 5 with ‘type 4: user–task–system–environment’ interactions.
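The stage-to-type assignment described above can be written as a small lookup table. The following is a minimal Python sketch; the names `EVALUATION_TYPES`, `STAGE_TO_TYPES`, `types_for_stage`, and `component_count` are illustrative only and are not part of the framework. Assigning type 2 to stage 2 and type 3 to stage 3 follows the stage definitions used elsewhere in this review and is an assumption of the sketch.

```python
# Evaluation types named in the framework: type number -> interaction label.
EVALUATION_TYPES = {
    0: "task",
    1: "user-task",
    2: "system-task",
    3: "user-task-system",
    4: "user-task-system-environment",
}

# SDLC stage -> evaluation types examined at that stage (illustrative mapping).
STAGE_TO_TYPES = {
    1: (0, 1),
    2: (2,),
    3: (3,),
    4: (4,),
    5: (4,),
}


def types_for_stage(stage: int) -> list[str]:
    """Return the interaction labels evaluated at a given SDLC stage."""
    if stage not in STAGE_TO_TYPES:
        raise ValueError(f"unknown SDLC stage: {stage}")
    return [EVALUATION_TYPES[t] for t in STAGE_TO_TYPES[stage]]


def component_count(type_id: int) -> int:
    """Number of usability components (user, task, system, environment) involved."""
    return len(EVALUATION_TYPES[type_id].split("-"))
```

For instance, `types_for_stage(1)` yields the task and user–task labels, and `component_count(4)` reflects the four-component interaction evaluated in stages 4 and 5.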

For evaluation goals, we used the usability aspects (efficiency, effectiveness, and satisfaction) suggested by ISO 9241. However, ISO definitions (table 3) for goals or subgoals and the level of effectiveness lack specificity. Therefore, a system may meet all usability criteria for lower-level goals, such as task completion rate and performance speed, but may be unable to fulfil the requirements for higher-level goals, such as users' cognitive or physical workload and job satisfaction. A system may be useful for achieving a specific task, but may not be beneficial to users' general work life. This indicates a need for stratification of evaluation types; therefore, we defined goals based on the evaluation type being measured. For example, in stage 2, system–task interaction aims to confirm validity—the first level of effectiveness, while system–user–task assesses performance—the second level of effectiveness, and system–user–task–environment (stages 4 and 5) evaluates quality—the third level of effectiveness, and impact—the highest level of effectiveness. This also implies that the complexity of IT evaluation increases in the final stage of the SDLC.

Table 3

| Usability aspects | Definition |
|---|---|
| Effectiveness | The goals or subgoals of the user to the accuracy and completeness with which their goals can be achieved |
| Efficiency | The level of effectiveness achieved to the expenditure of resources |
| Satisfaction | User attitude towards the use of the product, including both short-term and long-term measures (rate of absenteeism, health problem reports, or frequency of which users request transfer to another job) |

Evaluation thus begins with a two-component interaction (user–task and system–task). Thereafter, a three-component interaction and four-component interaction are evaluated. This approach may potentially simplify the identification of usability problems through focusing on a specific interaction.

Evaluation types are the key to usability evaluation. Most stages have more than one evaluation type because iterative evaluation is needed to test multiple interactions. Each stage is also associated with specific goals. For example, we first expect the system to be able to perform a task (stage 1). Then, we expect that users can operate the system to perform a task (stage 2 and stage 3). Next, we expect the system to be useful for the task (stage 4). Eventually, we expect that the system can have a great impact on work effectiveness, process efficiency and job satisfaction (stage 5).

Research questions

The research questions for the review are: (1) based on SDLC stages, when do health IT usability studies usually occur? and (2) what are the theoretical frameworks and methods used in current health IT usability studies? The analysis is informed by the proposed integrated usability specification and evaluation framework that combines a usability model and the SDLC.

Methods

We conducted a review of usability study methodologies, including studies with diverse designs (eg, experimental, non-experimental, and qualitative) to obtain a broad overview.

Search strategy

Our search of MEDLINE included terms for health IT (eg, computerized patient record, health information system, and electronic health record) and usability evaluation (eg, system evaluation, user–computer interface, and technology acceptance) and was limited to studies published between 2003 and 2009. Reviews, commentaries, editorials, and case studies were only included for background information or discussion, not for the review because of the methodological focus of the review. Search terms and detailed strategy are available as a data supplement online only.

Inclusion/exclusion criteria

Studies included in the review defined health IT usability as their primary objective and provided detailed information related to methods. Titles and abstracts were first reviewed for inclusion. Full-text articles were retrieved and examined to identify final eligible studies. To specifically understand usability studies in a healthcare environment, the review focused on health IT used by clinicians for patient care. Articles evaluating systems for public health, education, research purposes, and bioinformatics were excluded, as these systems were not intended to be used for patient care. In addition, we excluded studies that used electronic health records to answer research questions, but did not actually evaluate health IT, and informatics studies that did not have health IT usability as a primary objective (eg, information-seeking behavior, computer literacy, and general evaluations of computer or personal digital assistant usage). We also excluded studies evaluating methods or models because health IT usability evaluation was not their primary aim, and system demonstrations that had little or no information about health IT usability evaluation.

The unit of analysis was the system. So, if a system was studied at different stages in different publications, it was included as one system with multiple evaluations. The search strategy first used both medical subject headings (MeSH) and keywords to identify potential health IT studies. Animal and non-English studies and literature published before 2003 were excluded, along with non-studies, such as reviews, commentaries, and letters.

Data extraction and management

We used EndNote XII to organize coding processes and Microsoft Access to organize data extraction. Information extracted from each article included study design, method, participants, sample size, and health IT type. Health IT types were based on the classification of Gremy and Degoulet25 and three types of decision support functions described by Musen et al.26 We organized studies based on the usability specification and evaluation framework (table 2).

Categorization of each system's evaluation based on the five SDLC stages requires a clear definition for each stage. Stage 1 is a needs assessment for system development. Stages 2 and 3 often overlap because most system validation studies examined whole systems with user-initiated interaction. Stages 4 and 5 evaluate interactions among user, system, task, and environment. Even though the goals of these stages are clearly defined (system validation for stage 4, efficiency and effectiveness evaluation for stage 5), most of the published studies did not clearly divide these goals.

For categorization purposes, studies related to system validation, such as sensitivity and specificity, were considered to be stage 2. Studies that focused on HCI in the laboratory setting, such as outcome quality, user perception, and user performance, were categorized as stage 3. Pilot studies or experimental studies with control group comparison in one setting were considered to be stage 4 because their systems were not officially implemented in the organization and may not have had full environmental support. Stage 5 comprised studies of health IT implemented in a fully supported environment; study designs included experimental or quasi-experimental designs and control groups in multiple sites including before and after implementation, or with postimplementation evaluation only. Table 4 summarizes the study categorization criteria.
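The categorization rules described above can be sketched as a simple rule-based function. This is an illustrative Python sketch, not the coding procedure the reviewers actually used: the `Study` record, its boolean fields, and the function `sdlc_stages` are hypothetical names introduced here, and reducing each criterion to a single flag is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical record of the features used to assign SDLC stages."""
    needs_assessment: bool = False    # needs assessment with design methods described
    system_validation: bool = False   # eg, sensitivity/specificity, ROC curve, observer variation
    laboratory_hci: bool = False      # HCI evaluation in a laboratory setting
    field_test: bool = False          # evaluated in a real clinical setting
    fully_implemented: bool = False   # officially implemented with full environmental support


def sdlc_stages(study: Study) -> list[int]:
    """Return every SDLC stage a study's evaluation falls into.

    A list is returned because a single study may report more than
    one evaluation stage.
    """
    stages = []
    if study.needs_assessment:
        stages.append(1)
    if study.system_validation:
        stages.append(2)
    if study.laboratory_hci:
        stages.append(3)
    if study.field_test:
        # Pilot or single-setting trials without full support are stage 4;
        # evaluations of fully implemented systems are stage 5.
        stages.append(5 if study.fully_implemented else 4)
    return stages
```

Under these assumptions, a pilot trial of a system that is not yet officially implemented (`Study(field_test=True)`) is assigned to stage 4, whereas the same design in a fully supported environment is assigned to stage 5.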

Table 4

| SDLC stage | Study categorization criteria |
|---|---|
| Stage 1 | Needs assessments with design methods described |
| Stage 2 | System validation evaluations, such as sensitivity and specificity examinations, ROC curve, and observer variation |
| Stage 3 | Human–computer interaction evaluations focusing on outcome quality, user perception, and user performance in the laboratory setting |
| Stage 4 | Field testing; experimental or quasi-experimental designs with control groups in one setting |
| Stage 5 | Field testing; experimental or quasi-experimental designs with control groups in multiple sites; post-implementation evaluation only; self-control, such as evaluation before and after implementation |

ROC, receiver operating characteristic; SDLC, system development life cycle.

Results

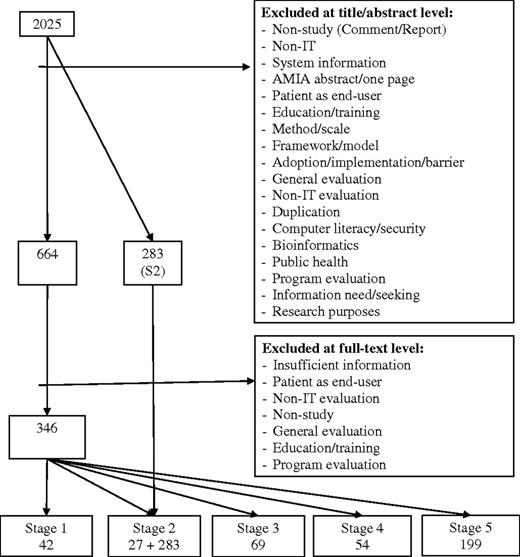

Our MEDLINE search retrieved a total of 2025 references that fit the study's inclusion criteria (available as a data supplement online only). Our search identified a fair number of studies (n=310) evaluating system validity for computer-assisted diagnosis systems. System validation was performed via sensitivity and specificity testing, receiver operating characteristic (ROC) curve, or observer variation. Because system validation is an important part of the SDLC, we included such studies (n=283) in stage 2 based on review of the title and abstract (figure 1). However, we did not further extract methodological data because of limited information regarding usability specification and evaluation methods.
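For readers less familiar with the system validation measures mentioned above, the following minimal Python sketch shows how sensitivity and specificity are computed from a 2×2 confusion matrix. The function name `sensitivity_specificity` and the counts in the usage lines are invented for illustration and do not come from any reviewed study.

```python
def sensitivity_specificity(tp: int, fp: int, tn: int, fn: int) -> tuple[float, float]:
    """Compute sensitivity and specificity from confusion-matrix counts.

    sensitivity = TP / (TP + FN): proportion of true cases the system flags.
    specificity = TN / (TN + FP): proportion of non-cases the system clears.
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    return tp / (tp + fn), tn / (tn + fp)


# Invented counts for a hypothetical computer-assisted diagnosis system.
sens, spec = sensitivity_specificity(tp=90, fp=40, tn=160, fn=10)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

An ROC curve extends the same idea by plotting sensitivity against (1 − specificity) across a range of decision thresholds.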

Figure 1. Data management flowchart. In stage 2, system validation was performed by sensitivity and specificity testing, receiver operating characteristic curve, or observer variation and can be identified at title and abstract level with MeSH search. We identified 27 articles from 346 full-text articles and 283 articles at the title and abstract level.

A total of 664 studies were considered for full-text review. Studies that met the exclusion criteria or that had insufficient methods information for data extraction were subsequently excluded, resulting in the retention of 346 studies. Figure 1 provides a flowchart illustrating the process of filtering and coding the included studies to the five evaluation stages. Some studies reported more than one evaluation stage. Therefore, the total number of studies in each evaluation stage does not match the total number of included studies.
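The reported counts can be cross-checked with a few lines of arithmetic. The Python sketch below uses only figures stated in this paper; the variable names are illustrative, and the way the totals are decomposed (for example, 629 as the 346 retained full-text studies plus the 283 stage 2 studies identified at title and abstract level) is inferred from the reported numbers rather than stated explicitly.

```python
# Counts reported in the text.
FULL_TEXT_RETAINED = 346       # studies retained after full-text review
STAGE2_TITLE_ABSTRACT = 283    # stage 2 studies identified from title/abstract
STAGE2_FULL_TEXT = 27          # stage 2 studies identified among full-text articles
STAGE_COUNTS = {1: 42, 2: 310, 3: 69, 4: 54, 5: 199}

# Inferred decomposition of the headline totals.
total_categorized = FULL_TEXT_RETAINED + STAGE2_TITLE_ABSTRACT   # 629
extracted_in_detail = FULL_TEXT_RETAINED - STAGE2_FULL_TEXT      # 319
stage2_total = STAGE2_TITLE_ABSTRACT + STAGE2_FULL_TEXT          # 310

# Stage counts sum to more than the number of studies because some
# studies reported more than one evaluation stage.
stage_assignments = sum(STAGE_COUNTS.values())                   # 674
extra_assignments = stage_assignments - total_categorized        # 45

print(total_categorized, extracted_in_detail, stage2_total,
      stage_assignments, extra_assignments)
```

The difference of 45 between stage assignments and categorized studies is consistent with the statement that some studies were coded to more than one stage.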

Types of health IT evaluated

Table 5 summarizes the health IT types identified in the review. Often, the type of health IT was ambiguous because of overlapping and complex functions. The predominant type of health IT evaluated was decision support systems or systems with decision support features; computerized provider order entry systems within hospital information systems were also well studied. The third most frequently occurring type of health IT was electronic health records.

Table 5

| Category | Number |
|---|---|
| 1. Population-based systems—registry | 4 |
| 2. Hospital information system | 4 |
| a. Computerized provider order entry system | 63 |
| b. Picture archiving and communication system | 10 |
| 3. Clinical information system | 22 |
| a. Electronic health record | 43 |
| b. Nursing information system | 5 |
| c. Nursing documentation system | 2 |
| d. Anesthesia information system | 3 |
| e. Medication administration system | 9 |
| f. Speech recognition system | 8 |
| 4. Laboratory information system—radiology information system | 13 |
| 5. Clinical decision support system | 4 |
| a. For information management (eg, information needs) | 12 |
| b. For focusing attention (eg, reminder/alert system) | 41 |
| c. For providing patient-specific recommendations | 65 |
| 6. Telehealth system | |
| a. Provider–provider consultation | 7 |
| b. Provider–patient consultation | 5 |
| 7. Adverse event reporting system | 5 |

Stage of SDLC

Table 6 summarizes the usability study methods and number of studies at each stage of the SDLC.

| Stage | Type | Goal | Methods (no of studies) |
|---|---|---|---|
| Stage 1 (n=42) | Type 0: task-based | System specification | |
| | Type 1: user–task | System specification | |
| Stage 2 (n=310) | Type 2: system–task | System validation | (Not analyzed) |
| Stage 3 (n=69) | Type 3: user–task–system | | Log analysis/observation (41) |
| | | Interaction | |
| | | Perception | |
| Stage 4 (n=54) | Type 4: user–task–system–environment | Perception | |
| | | Workflow | Observation (1) |
| | | Efficiency | Time-and-motion (2) |
| | | Utilization, patient outcomes, guideline adherence, medication errors | Chart review/log analysis (32) |
| Stage 5 (n=199) | Type 4: user–task–system–environment | Perception | |
| | | Workflow | |
| | | Efficiency | |
| | | Activity proportion | Work-sampling (3) |
| | | Utilization, patient outcomes, guideline adherence, medication errors, accuracy, document quality, cost-effectiveness | Chart review/log analysis (115) |

Stage 1: specify needs and setting

Stage 1 is measured in a laboratory or field environment. We found a total of 42 studies at stage 1. The goal of this stage is to identify users' needs in order to inform system design and establish system components. Therefore, the key questions for this evaluation stage are ‘What are the needs/tasks?’ and ‘How can a system be used to support the needs/tasks?’

To identify system elements/components, some developers reviewed the literature, including published guidelines and documents related to system structures. For instance, researchers conducted a literature review to identify standardized criteria for nursing diagnoses classification.27 Another study reviewed existing documents to identify phrases and concepts for the development of a terminology-based electronic nursing record system.28 Many studies in this review also relied on interviews, focus groups, expert panels and observations for gathering information related to users' needs.29–31

Researchers often use workflow analysis and work sampling to learn about users' work environments. Workflow analysis, as defined by the Workflow Management Coalition (WfMC), is used to understand how multiple tasks, subtasks, or team work are accomplished according to procedural rules.32 Focus groups and observational methods may be combined to provide a comprehensive view of clinical workflow.33 One method used to represent the outcome of workflow analysis involves activity diagrams.31 Another study demonstrated workflow analysis by using cognitive task analysis to characterize clinical processes in the emergency department in order to suggest possible technological solutions.34 Work sampling is used to measure the amount of time spent on a task. One study used work sampling to identify nurses' needs for the development of an electronic information gathering and dissemination system.30
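As an illustration only, the following Python sketch shows one way work-sampling observations might be summarized: it estimates the proportion of observed moments spent on each activity, with a normal-approximation 95% confidence interval. The function name, activity labels, and observation data are hypothetical and are not drawn from any of the reviewed studies.

```python
from collections import Counter
from math import sqrt


def activity_proportions(observations, z=1.96):
    """Estimate the share of time per activity from work-sampling observations.

    Each observation is the activity recorded at one randomly chosen moment.
    Returns {activity: (proportion, ci_low, ci_high)} using a normal
    approximation, which is only reasonable for fairly large samples.
    """
    n = len(observations)
    if n == 0:
        raise ValueError("at least one observation is required")
    result = {}
    for activity, count in Counter(observations).items():
        p = count / n
        half_width = z * sqrt(p * (1 - p) / n)
        # Clamp the interval to the valid range for a proportion.
        result[activity] = (p, max(0.0, p - half_width), min(1.0, p + half_width))
    return result


# Hypothetical observations of a nurse's activity at 20 random moments.
sample = ["documentation"] * 8 + ["direct care"] * 9 + ["communication"] * 3
for activity, (p, low, high) in activity_proportions(sample).items():
    print(f"{activity}: {p:.0%} (95% CI {low:.0%} to {high:.0%})")
```

With so few observations the intervals are wide, which is one reason work-sampling studies typically collect many observations per activity category.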

Methods used by researchers in this review to inform health IT interface designs included colored sticky notes, focus groups,35 cognitive work analysis,36 and card sorting.37 We categorized system redesign as stage 1. Four studies facilitated the redesign process in order to improve existing systems.37–40 For example, one study used log files to identify the most frequent user activities and provided a list of popular queries and selected orders at the top of the pick-list for a more efficient computerized provider order entry system interface.39
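The log-based redesign described above can be sketched roughly as follows. This is a minimal Python example under assumed inputs: the log format, the order names, and the `reorder_pick_list` function are hypothetical and do not reproduce the cited study's implementation.

```python
from collections import Counter


def reorder_pick_list(pick_list, order_log, top_n=3):
    """Move the most frequently selected orders to the top of a pick-list.

    pick_list: orders in their current display sequence.
    order_log: one entry per logged selection (hypothetical log format).
    Orders outside the top_n keep their original relative position.
    """
    counts = Counter(entry for entry in order_log if entry in pick_list)
    popular = [order for order, _ in counts.most_common(top_n)]
    rest = [order for order in pick_list if order not in popular]
    return popular + rest


# Hypothetical pick-list and selection log.
pick_list = ["albumin", "basic metabolic panel", "complete blood count",
             "digoxin level", "electrolytes"]
order_log = ["complete blood count"] * 5 + ["electrolytes"] * 3 + ["albumin"]
print(reorder_pick_list(pick_list, order_log, top_n=2))
```

Keeping the remaining items in their original order limits how much the list changes for users who have already learned its layout.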

Stage 2: system component development

The goal of stage 2 is system validation. Therefore, the key question for this evaluation type is ‘Does the system work for the task?’ If a potential stage 2 study contained any MeSH terms such as ‘user–computer interface’, ‘task performance and analysis’, ‘attitude to computers’, and ‘user performance’, we further evaluated it at the full-text level to avoid missing publications for classification as stages 3, 4, or 5. After this check, a total of 310 publications were identified as stage 2 system validation studies. System validation was done mainly by examining sensitivity and specificity or the receiver operating characteristic (ROC) curve, and was commonly found in computer-assisted diagnosis systems, such as computer-assisted image interpretation systems, but rarely in other documentation systems. This is likely due to the decision support role of such diagnosis systems.
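For readers less familiar with these validation measures, the Python sketch below computes sensitivity and specificity for binary system output against a reference standard. The function name and the example data are hypothetical; the formulas are the standard definitions (true positives over all reference positives, and true negatives over all reference negatives).

```python
def sensitivity_specificity(predictions, truths):
    """Return (sensitivity, specificity) for binary system output.

    predictions and truths are equal-length sequences of booleans, where
    truths comes from a reference ("gold") standard.
    """
    if len(predictions) != len(truths):
        raise ValueError("predictions and truths must have the same length")
    pairs = [(bool(p), bool(t)) for p, t in zip(predictions, truths)]
    tp = sum(p and t for p, t in pairs)              # true positives
    tn = sum((not p) and (not t) for p, t in pairs)  # true negatives
    fp = sum(p and (not t) for p, t in pairs)        # false positives
    fn = sum((not p) and t for p, t in pairs)        # false negatives
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative reference case")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical output of a computer-assisted diagnosis system for six cases.
system_output = [True, True, False, False, True, False]
gold_standard = [True, False, False, False, True, True]
sens, spec = sensitivity_specificity(system_output, gold_standard)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

An ROC curve extends this idea by repeating the calculation across a range of decision thresholds.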

Stage 3: combination of components

In stage 3, the user is added to the interaction to see if the system can minimize human errors and help users accomplish the task. Therefore, the key questions for this evaluation type are: ‘Does the system violate usability heuristics?’ (the user interface design conforms to good design principles); ‘Can the user use the system to accomplish the task?’ (users are able to correctly interact with the system); ‘Is the user satisfied with the way the system helps perform the task?’ (users are satisfied with the interaction); and ‘What is the user and system interaction performance, in terms of output quality, speed, accuracy, and completeness?’ (users are able to operate the system efficiently with quality system output).

We found a total of 69 studies that evaluated HCI in a laboratory setting. Only one study used Nielsen's 10 heuristic principles41 to assess the fit between the system.42 Heuristic evaluation is not commonly used in stage 3, likely because it requires HCI experts or work domain experts to perform the evaluation.

Five studies found in our literature review reported using cognitive walkthrough and think-aloud protocol;43–47 10 others used think-aloud only to determine if users were able to accomplish tasks. Cognitive walkthrough48 is a usability inspection method performed by an expert to assess the degree of difficulty of accomplishing tasks using a system, by identifying actions and goals needed to accomplish each task. Think-aloud protocol encourages users to express out loud what they are looking at, thinking, doing, and feeling, as they perform a task.49

Objective measures that researchers used at stage 3 included system validity (eg, accuracy and completeness) and efficiency (eg, speed and learnability). Common methods included observation, log file analysis, chart review, and comparison to a gold standard.

User satisfaction is a subjective measure that can be assessed in the laboratory setting. Methods include interview, focus group, and questionnaire. Thirty (78%) studies in this review used questionnaires to assess users' perceptions and attitudes. Among these studies, the most frequently used questionnaires were the questionnaire for user interaction satisfaction, the modified technology acceptance model questionnaire, and the IBM usability questionnaire.50–53 However, more than half of the studies used study-generated questionnaires that were not previously validated.

Stage 4: integrate health IT into a real environment

Stage 4 includes the environmental factor in the interaction. We found 54 studies that evaluated health IT at stage 4. The key questions regarding environment are similar to those in stage 3. Even if health IT is efficient and effective in a laboratory setting, implementation in a real environment may have different results. Therefore, usability evaluation questions are: ‘What is the user, task, system, and environment interaction performance in terms of output quality, speed, accuracy, and completeness?’; ‘Is the user satisfied with the way the system helps perform a task in the real setting?’; and ‘Does the system change workflow effectiveness or efficiency?’

Twelve studies used observation, log files, and/or chart review to assess interaction performance, such as accuracy, time, completeness, and general workflow. Methods to evaluate user satisfaction included questionnaires (n=37), interviews (n=9), and focus groups (n=4). The third question aims to understand users' work quality, such as workflow and process efficiency. One study54 used workflow analysis to evaluate the work process before and after health IT was implemented.33

The motivation for adopting nursing information systems is often increased time for direct patient care.55 Researchers use time-and-motion studies to collect work activity information for time efficiency before and after using health IT.56 Two stage 4 studies in this literature review conducted randomized controlled trials to measure the efficiency of health IT.57,58 Although system impact is not the primary focus of stage 4, 32 studies assessed system utilization, patient outcomes, guideline adherence, and medication errors.

Stage 5: routine use

Most studies (n=199) included in this review evaluated health IT at stage 5 of the SDLC. The main purpose of evaluation at this stage is to understand the impact of health IT beyond the short-term measures of system–user–task–environment interaction. Therefore, the key question is ‘How does the system impact healthcare?’

One hundred and fifteen stage 5 studies evaluated the efficacy of health IT using log files or chart reviews. Other methods measured outcomes including guideline adherence, utilization, accuracy, document quality, medication error, patient outcomes, and cost-effectiveness.

Study design and data analysis in stages 4 and 5

Because stages 4 and 5 involve field testing, which examines higher levels of health IT effectiveness and efficiency, such as utilization, process efficiency and prescribing behaviors, we further analyzed studies in stages 4 and 5. More quantitative studies were conducted than qualitative studies. Qualitative methods included observation, interview, and focus group. Fourteen studies (20%) in stage 4 and 50 studies (26%) in stage 5 were qualitative. We also categorized the quantitative studies based on their objectivity or subjectivity (appendix IV, available as a data supplement online only). The most common study design was a quasi-experimental study design using one group with pretest and posttest comparison, or with only posttest assessment. The MEDLINE search retrieved an equal number of subjective evaluations (eg, self-report questionnaire) and objective evaluations (eg, log file analysis, cognitive walkthrough, and chart review).

With regard to analysis methods, only a small number of studies reported using multivariate analysis (eg, linear regression, logistic regression, general equation modeling); most used descriptive and comparative methods. For example, in studies in which questionnaires were used to assess clinicians' perceptions of health IT, descriptive analysis was usually used with comparisons between different types of users, such as physicians versus nurses.
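A descriptive comparison of this kind might look like the following minimal Python sketch, which summarizes questionnaire scores by user group and reports the difference in group means. The scores, group names, and function names are hypothetical, and the sketch stops short of any significance testing.

```python
from statistics import mean, stdev


def summarize_by_group(scores_by_group):
    """Return n, mean, and sample SD of questionnaire scores for each user group."""
    summary = {}
    for group, scores in scores_by_group.items():
        if len(scores) < 2:
            raise ValueError(f"group {group!r} needs at least two scores")
        summary[group] = {"n": len(scores), "mean": mean(scores), "sd": stdev(scores)}
    return summary


def mean_difference(summary, group_a, group_b):
    """Difference in mean score between two groups (group_a minus group_b)."""
    return summary[group_a]["mean"] - summary[group_b]["mean"]


# Hypothetical 5-point satisfaction scores from two types of users.
scores = {"physicians": [3, 4, 2, 4, 3], "nurses": [4, 5, 4, 3, 4]}
summary = summarize_by_group(scores)
for group, stats in summary.items():
    print(f"{group}: n={stats['n']}, mean={stats['mean']:.2f}, sd={stats['sd']:.2f}")
print(f"difference: {mean_difference(summary, 'physicians', 'nurses'):.2f}")
```

A multivariate analysis would go further by modeling the score as a function of user type together with other variables, such as experience or clinical setting.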

Theories and methods used in usability studies

Theories applied in health IT usability studies can be grouped into four categories: general system development/design framework, HCI, technology acceptance, and technology adoption. Researchers use these theories/models to support their study rationales. We present these theories/models, their references and example studies in appendix V (available as a data supplement online only). Methods used in the usability studies address research questions to understand system specification, interface design, task/workflow identification, user–task–system interaction, field observation and multitask performance. We also categorized methods used in the usability studies, their references and example studies in appendix VI (available as a data supplement online only). Two additional methods59,60 that were not within our original search scope but were subsequently discovered are also included because of their potential value to health IT usability evaluation. Online appendices V and VI can be used to guide researchers in the selection of theories and methods for usability studies in the future.

Guidance for health IT usability evaluation

The usability specification and evaluation framework (table 2), which we adapted for application in our review, identifies the time points and interaction types for evaluation and facilitates the selection of theoretical models, outcome measures, and evaluation methods. A guide based upon the framework is summarized in table 7. This guide has the potential to assist researchers and those who develop and implement systems to design usability studies that are matched to specific system and evaluation goals.

Guide for selection of theories, outcomes, and methods based on type of interaction

| Type | Theory applied (online supplement) | Examples of outcomes measured | Methods (online supplement) |
|---|---|---|---|
| Type 0: task-based | NA | System specification | System element identification |
| Type 1: user–task | General system development/design framework | System specification | |
| Type 2: system–task | NA | System validation | Sensitivity and specificity |
| Type 3: user–task–system | | | Chart review/log analysis |
| | | Interaction | User–system–task interaction |
| | | Perception | |
| Type 4: user–task–system–environment | | Workflow | Task/workflow analysis |
| | | Perception | |
| | | | Chart review/log analysis |

Discussion

Most studies evaluated health IT at stage 4 and stage 5. Some noted that the health IT had been evaluated before it went into field testing. Health IT that is evaluated only in stages 4 or 5 may be commercial products, and therefore, organizations may have lacked the opportunity for earlier evaluation. Studies identified adoption barriers due to system validation, usefulness, ease of use, system flow, workflow interruption, insufficient system training, or technology support.61–66 Some of these barriers may have been minimized by applying evaluation methods during stage 1 to stage 3. In this section we summarize methodological issues in existing studies and describe a stratified view of health IT usability evaluation.

Methodological issues in existing studies

We discovered several problems in existing studies. Even though there is an increased awareness regarding the importance of usability evaluation, most studies in this review provided limited information about associated evaluations relevant to each SDLC stage.

Lack of theoretical framework/model

Theoretical frameworks/models are essential to research studies, suggesting rationale for hypothesized relationships and providing the basis for verification. However, the majority of publications in this review lacked an explicit theoretical framework/model. This is consistent with a previous literature review of evaluation designs for health information systems.10 Most theoretical frameworks used were adapted from HCI, information system management, or cognitive behavioral science. In addition, there are no clear guidelines or recommendations for the utilization of a theoretical framework in health IT usability studies. The guidance provided in table 7 can potentially assist with this issue.

Lack of details regarding qualitative study approaches

Qualitative studies generally use interviews or observations to explore in-depth knowledge of users' experiences, work patterns and human behaviors that methods such as surveys or system logs cannot capture. However, most qualitative studies did not provide detailed information regarding the study approaches applied to answer the research questions. For example, phenomenology, ethnography and grounded theory are commonly used to understand users' experience, culture and decision-making process, respectively. Research in HCI and computer-supported cooperative work (CSCW) has also established user-centered approaches such as participatory design and contextual inquiry to understand the relationship between technology and human activities in health care.67–71 In our review, 29 qualitative studies integrated clinicians from the beginning stages of the system development process to define clinician needs, system specification or workflow, as is done with participatory design approaches. However, the studies reviewed did not directly refer to a specific study approach such as participatory design. Explication of the qualitative approach is important to determine study rigor with regard to criteria such as credibility, trustworthiness, and auditability.

Single evaluation focus

Although usability evaluation ideally examines the relationship of users, tools, and tasks in a specific working environment, most of the studies in this review focused on single measures, such as time efficiency or user acceptance, which cannot convey the whole picture of usability. Furthermore, some potentially useful methods (eg, task analysis and workflow analysis) were rarely used in the studies in our review.

Environmental factors not evaluated in the early stages

Among studies in the development stage (stage 1), only four reported conducting task or workflow analysis. Even though identifying system features, functions, and interfaces from the user perspective is essential for the design of a usable system, this perspective is frequently lacking. As a possible consequence, studies have identified workarounds and barriers to adoption due to lack of fit between system and workflow or environment.72,73

Guideline adherence as the primary outcome for clinical decision support systems

Clinical decision support systems were typically evaluated for their effectiveness by clinician adherence to guideline recommendations. However, guideline adherence is influenced by providers' estimates of the time required to resolve reminders, resulting in low adherence rates when providers estimate a long resolution time.74 Other usability barriers related to computerized reminders in this review included workflow problems, such as receipt of reminders while not with a patient, thus impairing data acquisition and/or the implementation of recommended actions; and poor system interface. Facilitators included: limiting the number of reminders; strategic location of the computer workstations; integration of reminders into workflow; and the ability to document system problems and receive prompt administrator feedback.65

Physicians are more adherent to guideline recommendations when they are less familiar with the patient.75 However, greater adherence does not suggest better treatment, because additional knowledge beyond the electronic health record was found to be the major reason for non-adherence.76 Therefore, measuring guideline adherence rates may not capture pure usability; adjustments should be made for other confounders, such as familiarity with patients and physicians' experience. Moreover, as with other application areas, relevant techniques should be applied at earlier stages of SDLC to tease out user interface versus workflow issues (see appendix IV, available online only). For example, application of a Wizard of Oz approach77,78 in stage 3 would allow the examination of HCI issues without the presence of a fully developed rules engine.
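One simple way to make such a confounder visible is to report adherence separately within each stratum instead of as a single overall rate. The Python sketch below illustrates this under assumed data: the records, stratum labels, and function name are hypothetical, and stratification is offered here as one possible adjustment, not as the approach taken by the cited studies.

```python
from collections import defaultdict


def adherence_by_stratum(records):
    """Compute guideline adherence rates within each stratum of a confounder.

    records: iterable of (stratum, adhered) pairs, for example the
    physician's familiarity with the patient and whether the reminder
    was followed. Returns {stratum: adherence_rate}.
    """
    totals = defaultdict(int)
    adhered = defaultdict(int)
    for stratum, did_adhere in records:
        totals[stratum] += 1
        adhered[stratum] += bool(did_adhere)
    return {stratum: adhered[stratum] / totals[stratum] for stratum in totals}


# Hypothetical reminder outcomes grouped by familiarity with the patient.
records = (
    [("familiar", True)] * 1 + [("familiar", False)] * 3
    + [("unfamiliar", True)] * 3 + [("unfamiliar", False)] * 1
)
for stratum, rate in adherence_by_stratum(records).items():
    print(f"{stratum}: {rate:.0%} adherence")
```

In this made-up example the pooled adherence rate of 50% would hide a large difference between the two strata, which is the kind of pattern a single overall rate cannot show.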

A stratified view of health IT usability evaluation

The SDLC provides a commonly accepted guide for system development and evaluation. However, it does not focus on the type of interactions that are evaluated. Consequently, it is difficult to ascertain if a system issue is due to system–task interaction, user–system–task interaction or user–system–task–environment interaction.

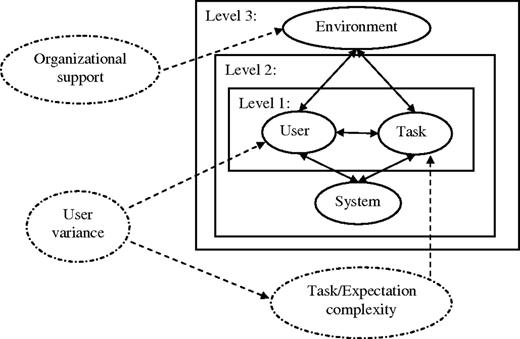

Inspired by Bennett and Shackel's usability model, the health IT usability specification and evaluation framework (table 2), which we adapted and applied in our review, provides a categorization of study approaches by evaluation types. Existing models or frameworks successfully identify potential factors that influence health IT usability. However, because of the varied manner in which the factors influence interactions, it is difficult to determine if problems stem from health IT usability, user variance, or organizational factors. Therefore, a stratified view of health IT usability evaluation (figure 2) may potentially provide a better understanding for health IT evaluation.

A stratified view of health information technology evaluation. Level 1 targets system specification to understand user-task interaction for system development. Level 2 examines task performance to assess system validation and human–computer interaction. Level 3 aims to incorporate environmental factors to identify work processes and system impacts in real settings. Task/expectation complexity, user variances, and organizational support are factors that influence the use of the system, but are not problems of the system itself, and need to be differentiated from system-related issues.

In the stratified view, level 1 targets system specification to understand user–task interaction for system development, which is usually conducted in SDLC stage 1. Level 2 examines task performance to assess system validation and HCI, which is generally evaluated in SDLC stages 2 and 3. Level 3 aims to incorporate environmental factors to identify work processes and system impact in a real setting, which is commonly assessed in SDLC stages 4 and 5. However, in many situations health IT is evaluated only at SDLC stages 4 and 5; evaluation should also occur at earlier stages in order to determine which level of interaction is problematic.
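The correspondence between SDLC stages and levels described above can be written down as a small lookup, shown here as a Python sketch. The mapping follows the text; the names `STAGE_TO_LEVEL`, `LEVEL_FOCUS`, and `unevaluated_levels` are hypothetical helpers introduced only for illustration.

```python
# SDLC stage -> level of the stratified view, as described in the text.
STAGE_TO_LEVEL = {1: 1, 2: 2, 3: 2, 4: 3, 5: 3}

LEVEL_FOCUS = {
    1: "system specification (user-task interaction)",
    2: "task performance (system validation and HCI)",
    3: "work processes and system impact in a real setting",
}


def unevaluated_levels(evaluated_stages):
    """Return the levels of the stratified view not covered by any evaluated stage."""
    covered = {STAGE_TO_LEVEL[stage] for stage in evaluated_stages}
    return sorted(set(LEVEL_FOCUS) - covered)


# A system evaluated only in the field (SDLC stages 4 and 5).
for level in unevaluated_levels([4, 5]):
    print(f"Level {level} not evaluated: {LEVEL_FOCUS[level]}")
```

For a system evaluated only at stages 4 and 5, the lookup flags levels 1 and 2 as gaps, which mirrors the situation the paragraph describes.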

Task/expectation complexity, user variances, and organizational support are factors that influence the use of the system, but are not problems of the system itself, and need to be differentiated from system-related issues. For example, at level 1, through application of user-centered design, we can control some user variance by recruiting the targeted users as participants. In addition, task/expectation complexity can be measured to identify system specifications. At level 3, we can minimize user variance by user training and providing sufficient organizational support. An evaluation of perceived usability based on the level of task/expectations would then be able to reveal the system usability at each level of task/expectations.

Friedman and Wyatt's table of nine generic study types describes similar ideas of incorporating users and tasks into the usability evaluation at certain system development time points.11 The stratified view of health IT usability evaluation extends the concept of evaluating with users and tasks to considering levels of user–task–system–environment interaction, as well as identifying confounding factors, task/expectation complexity, user variances, and organizational support, that directly or indirectly influence the results of usability evaluation. The stratified view potentially provides a clearer explication of interactions and factors influencing interactions, and can assist those conducting usability evaluations to focus on the interactions without overlooking the ultimate goal, health IT adoption, which is also influenced by non-interaction factors.

Limitations

There are a number of limitations to the review. First, the usability review used only one database (MEDLINE). Therefore, we may have missed methods that were used only in HCI or CSCW studies published in other scientific databases. To estimate the extent of this limitation, we searched Scopus to identify any additional theories and methods in HCI and CSCW-related journals or conference proceedings that were not found in the studies that we retrieved from MEDLINE. Although we found only one theoretical framework and one method not covered in our review, approaches such as participatory design and ethnography provide overall frameworks that link sets of methods together in a manner that is greater than the sum of their parts.79 Therefore, a review that included searches of additional databases such as PubMed, Web of Science and Scopus would provide additional practical guidance beyond that derived from our analysis in this review. Second, because we used MeSH and keywords to retrieve studies, studies with inappropriate indexing or that lacked sufficient information in their abstracts may not have been retrieved. Third, the number of reviewers was small. Studies were analyzed by one author (PYY) with review by the second (SB). Both authors agreed upon the study extraction and categorization. Fourth, our review focused on identifying methods and scales used, but did not evaluate best methods or scales for usability evaluation because the selection of methods and scales also depends on the type of health IT, evaluation goals, and other variables. However, this was the first review of health IT studies using an integrated usability specification and evaluation framework, and it provides an inventory of evaluation frameworks and methods and practical guidance for their application.

Conclusion

Although consideration of user–task–system–environment interaction for usability evaluation is important,12,13 existing reviews do not provide guidance based on the user–task–system–environment interaction. Therefore, we reviewed and categorized current health IT usability studies and provided a practical guide for health IT usability evaluation that integrates theories and methods based on user–task–system–environment interaction. To better identify usability problems at different levels of interaction, we also provided a stratified view of health IT usability evaluation to assist those conducting usability evaluations to focus on the interactions without overlooking some non-interaction factors. There is no doubt that the usability of health IT is critical to achieving its promise in improving health care and the health of the public. Toward such goals, these materials have the potential to assist in conducting usability studies at different SDLC stages and in measuring different evaluation outcomes for specific evaluation goals based on users' needs and levels of expectation.

Funding

The research was supported by the Center for Evidence-based Practice in the Underserved (P30NR010677).

Competing interests

None.

Provenance and peer review

Not commissioned; externally peer reviewed.

References