
Guanjian Chen, Yequan Xie, Bin Yang, JiaNan Tan, Guangyu Zhong, Lin Zhong, Shengning Zhou, Fanghai Han, Artificial intelligence model for perigastric blood vessel recognition during laparoscopic radical gastrectomy with D2 lymphadenectomy in locally advanced gastric cancer, BJS Open, Volume 9, Issue 1, February 2025, zrae158, https://doi.org/10.1093/bjsopen/zrae158


Abstract

Radical gastrectomy with D2 lymphadenectomy is the standard surgical protocol for locally advanced gastric cancer. Surgeons differ in their experience and skill in recognizing blood vessels and performing lymph node dissection, which may influence intraoperative safety and postoperative oncological outcomes. The aim of this study was therefore to develop an accurate, real-time, deep learning-based perigastric blood vessel recognition model to assist intraoperative performance.

This was a retrospective study assessing videos of laparoscopic radical gastrectomy with D2 lymphadenectomy. The model was developed based on DeepLabv3+. Static performance was evaluated using precision, recall, intersection over union, and F1 score. Dynamic performance was verified using 15 intraoperative videos.

The study involved 2460 images captured from 116 videos. Mean(s.d.) precision, recall, intersection over union, and F1 score for the artery were 0.9442(0.0059), 0.9099(0.0163), 0.8635(0.0146), and 0.9267(0.0084) respectively. Mean(s.d.) precision, recall, intersection over union, and F1 score for the vein were 0.9349(0.0064), 0.8491(0.0259), 0.8015(0.0206), and 0.8897(0.0127) respectively. The model also performed well in recognizing perigastric blood vessels in 15 dynamic test videos. Intersection over union and F1 score were reduced in difficult image conditions, such as bleeding or massive surgical smoke in the field of view, whereas vessel recognition in images from obese patients was satisfactory.

The model recognized the perigastric blood vessels with satisfactory predictive value in the test set and performed well in the dynamic videos. It therefore shows promise with regard to increasing safety and decreasing accidental bleeding during laparoscopic gastrectomy.

Introduction

Radical gastrectomy with D2 lymphadenectomy is the standard surgical protocol for locally advanced gastric cancer (LAGC)1–3. The most important aspects of gastric cancer surgery are handling the perigastric blood vessels (identifying or ligating them) and performing D2 dissection of the lymph nodes distributed along them. Surgical outcomes rely heavily on surgeon experience, and even experienced surgeons are occasionally challenged by the anatomical variability of the perigastric blood vessels4–6. Bleeding can easily occur if the vasculature is not adequately recognized. An accurate, real-time perigastric blood vessel recognition tool for laparoscopic radical gastrectomy with D2 lymphadenectomy for LAGC could therefore be helpful.

Artificial intelligence has recently advanced rapidly in medicine. Several image- or video-based deep learning (DL) recognition models have been applied in surgery to recognize specific anatomical structures7–10, which presents an opportunity to develop a perigastric blood vessel recognition model (PGBVRM). Laparoscopic gastrectomy also facilitates surgical video recording11–14. In the present study, a PGBVRM was trained on images captured from oncological laparoscopic gastrectomy videos to help surgeons quickly recognize the perigastric blood vessels. To the authors' knowledge, the PGBVRM is the first real-time, accurate perigastric blood vessel recognition DL model for navigating radical gastrectomy with D2 lymphadenectomy for LAGC.

Methods

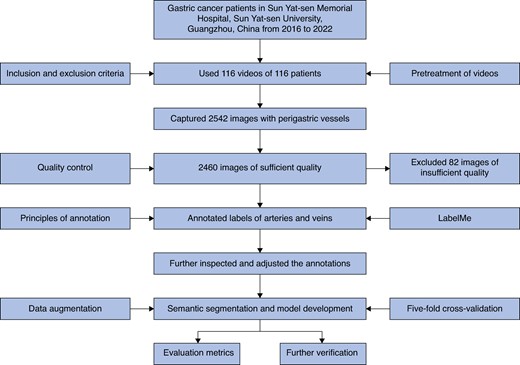

Patients and video data sets

In this study, operation videos of patients with LAGC treated in the Department of Gastrointestinal Surgery of Sun Yat-sen Memorial Hospital, Sun Yat-sen University, Guangzhou, China from 2016 to 2022 were collected according to the inclusion and exclusion criteria. The inclusion criteria were: age greater than or equal to 14 years; confirmed diagnosis of LAGC (cT1–2 N+ or cT3–4a Nany, cM0) according to the 8th edition of the American Joint Committee on Cancer (AJCC) Gastric Cancer Staging Manual; and laparoscopic radical gastrectomy with D2 lymphadenectomy performed at Sun Yat-sen Memorial Hospital. The exclusion criteria were: any history of upper abdominal surgery; incomplete video recordings or clinical data; poor-quality videos; emergency surgery for obstruction or perforation; preoperative interventional embolization of perigastric vessels; conversion to open surgery; and combined resection of other organs. All videos were anonymized and patients' personal information was removed. This retrospective study was approved by the Ethics Committee of Sun Yat-sen Memorial Hospital, Sun Yat-sen University, Guangzhou, China (ethical approval number SYSKY-2024-044-01), and the need for written informed consent was waived because of the retrospective design.

Video pretreatment and image acquisition

All videos were converted to a unified MP4 format with a display resolution of 1920 × 1080 pixels and a frame rate of 30 frames per second. Two experienced surgeons, each of whom had completed several hundred laparoscopic radical gastrectomies with D2 lymphadenectomy, captured images in which the perigastric blood vessels were clearly visible without massive surgical smoke or other obstruction, and saved them in JPG or PNG format at 1920 × 1080 pixels. The main perigastric blood vessels of interest were: the celiac trunk, left gastric artery and vein, right gastric artery and vein, splenic artery and vein, left gastroepiploic artery and vein, right gastroepiploic artery and vein, portal vein, common hepatic artery, and proper hepatic artery. Images were captured at least 300 frames (10 s) apart so that successive images were considered to show a changed scene. A more experienced surgeon with at least 20 years of experience in gastric cancer surgery then reviewed all images and deleted unsatisfactory ones that were extremely similar to each other or confusing, to ensure image quality.
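The 300-frame spacing rule can be expressed as a small filter. The sketch below assumes the surgeons' candidate frames are available as a list of frame indices and applies a greedy rule (keep a frame only if it is at least 300 frames after the last kept one). The function names are hypothetical, and the paper describes manual capture rather than this exact procedure.

```python
from typing import List

FPS = 30                   # frame rate of the converted MP4 videos
MIN_GAP_FRAMES = 10 * FPS  # 10 s at 30 fps = 300 frames


def enforce_min_gap(candidate_frames: List[int], min_gap: int = MIN_GAP_FRAMES) -> List[int]:
    """Greedily keep frames so that successive kept frames are >= min_gap apart.

    Hypothetical helper: candidates are processed in temporal order, and a frame
    is kept only if it lies at least `min_gap` frames after the last kept frame.
    """
    kept: List[int] = []
    for frame in sorted(candidate_frames):
        if not kept or frame - kept[-1] >= min_gap:
            kept.append(frame)
    return kept


def frame_to_timestamp(frame: int, fps: int = FPS) -> str:
    """Convert a frame index to an mm:ss timestamp for locating it in the video."""
    seconds = frame // fps
    return f"{seconds // 60:02d}:{seconds % 60:02d}"


if __name__ == "__main__":
    candidates = [120, 300, 450, 800, 1050, 1100, 1500]
    kept = enforce_min_gap(candidates)
    print(kept)  # [120, 450, 800, 1100, 1500]
    print([frame_to_timestamp(f) for f in kept])
```

A greedy pass keeps the earliest acceptable frame in each window. Surgeons choosing frames by hand may instead pick a later, clearer frame within the same window.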

Annotation of perigastric blood vessels

Two experienced surgeons performed the annotation using the open-source LabelMe annotation software15. The annotation principles were as follows: points were placed around the vessel edges in each image to form polygon labels, which were named A (artery) or V (vein); when a vessel annotation was uncertain, the two annotators first discussed it to reach consensus and, if it remained unresolved, a more experienced surgeon was consulted for the final decision; when vessels were partly covered by fat or blood, the fat and blood were excluded from the labels; and after all annotations in an image were completed, the labels were saved in JSON format. The most experienced surgeon in the department then inspected and adjusted the annotations to support effective model development.
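LabelMe stores each polygon in a `shapes` list, with `label`, `points`, and `shape_type` fields, together with `imageHeight` and `imageWidth`. Before training, such JSON labels are typically rasterized into a per-pixel class mask. The following is a minimal NumPy sketch, not the authors' conversion code. It assumes the class encoding 0 = background, 1 = artery (A), 2 = vein (V) and uses an even-odd point-in-polygon test at pixel centres. Where shapes overlap, later shapes overwrite earlier ones.

```python
import json

import numpy as np

# Assumed class encoding for the segmentation mask (0 is background).
CLASS_IDS = {"A": 1, "V": 2}


def polygon_mask(points, height, width):
    """Rasterize one polygon ([[x, y], ...]) using the even-odd ray-casting rule.

    A pixel belongs to the polygon if its centre (x + 0.5, y + 0.5) is inside.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    px, py = xs + 0.5, ys + 0.5
    inside = np.zeros((height, width), dtype=bool)
    pts = np.asarray(points, dtype=float)
    for i in range(len(pts)):
        x1, y1 = pts[i]
        x2, y2 = pts[(i + 1) % len(pts)]
        if y1 == y2:
            continue  # horizontal edges never cross a horizontal ray
        crosses = (y1 > py) != (y2 > py)
        x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        inside ^= crosses & (px < x_cross)  # toggle on each crossing to the right
    return inside


def labelme_to_mask(annotation):
    """Convert a parsed LabelMe annotation dict into a uint8 class mask."""
    h, w = annotation["imageHeight"], annotation["imageWidth"]
    mask = np.zeros((h, w), dtype=np.uint8)
    for shape in annotation["shapes"]:
        class_id = CLASS_IDS.get(shape["label"])
        if class_id is None or shape.get("shape_type", "polygon") != "polygon":
            continue  # skip unknown labels and non-polygon shapes
        mask[polygon_mask(shape["points"], h, w)] = class_id
    return mask


if __name__ == "__main__":
    # Toy annotation; in practice: annotation = json.load(open("frame.json"))
    annotation = json.loads(json.dumps({
        "imageHeight": 6, "imageWidth": 6,
        "shapes": [
            {"label": "A", "points": [[1, 1], [4, 1], [4, 4], [1, 4]], "shape_type": "polygon"},
            {"label": "V", "points": [[0, 5], [2, 5], [2, 6], [0, 6]], "shape_type": "polygon"},
        ],
    }))
    print(labelme_to_mask(annotation))
```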

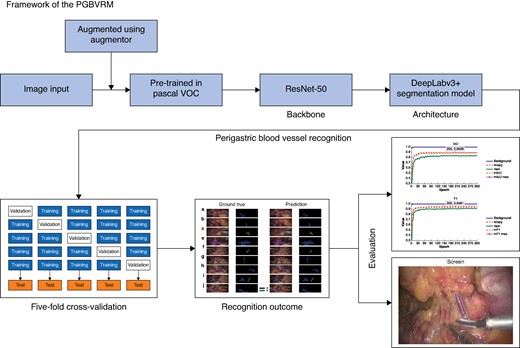

Semantic segmentation and model development

DeepLabv3+16 was used as the architecture, with ResNet-50 as the backbone, to perform semantic segmentation of vessels. The model was pre-trained on the public PASCAL VOC data set. Data were augmented using the open-source Augmentor framework, with image rotation by 90°, 180°, and 270° and slight stretching within 25°. Of the final images in the model development group, 10% were randomly assigned to the test set and the remaining 90% were split into five subsets for five-fold cross-validation: each subset served once as the validation set while the other four were combined as the training set. Images in the training set did not appear in the validation or test sets. The model was trained for 300 epochs. Figure 1 presents an overview of model development and evaluation.
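The data partitioning described above (a 10% hold-out test set, then five folds from the remainder) can be sketched as follows. The function name, the seed, and the round-robin fold assignment are assumptions made for illustration. They are not details reported in the paper.

```python
import random
from typing import Dict, Sequence


def split_test_and_folds(image_ids: Sequence[str], test_fraction: float = 0.10,
                         n_folds: int = 5, seed: int = 0) -> Dict[str, list]:
    """Hold out a random test set, then build k train/validation folds from the rest."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)  # seeded for reproducibility
    n_test = round(len(ids) * test_fraction)
    test, rest = ids[:n_test], ids[n_test:]
    # Round-robin assignment gives folds whose sizes differ by at most one image.
    folds = [rest[k::n_folds] for k in range(n_folds)]
    splits = []
    for k in range(n_folds):
        train = [img for j, fold in enumerate(folds) if j != k for img in fold]
        splits.append({"train": train, "val": folds[k]})
    return {"test": test, "splits": splits}


if __name__ == "__main__":
    ids = [f"img_{i:04d}" for i in range(2460)]  # hypothetical image identifiers
    result = split_test_and_folds(ids)
    print(len(result["test"]), [len(s["val"]) for s in result["splits"]])
    # 246 [443, 443, 443, 443, 442]
```

This split is made at the image level, as the text describes. Grouping by video (for example with scikit-learn's `GroupKFold`) would be a stricter alternative, because it keeps frames from the same operation out of both training and validation.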

Overview of model development and verification

PGBVRM, perigastric blood vessel recognition model.

Model evaluation metrics and dynamic verification

Model performance was assessed using precision, recall, intersection over union (IoU), and F1 score. Precision is the proportion of true positive (TP) classifications among all pixels predicted as positive, and recall is the proportion of ground-truth positive pixels that the model correctly identified. IoU, the ratio of the intersection to the union of the predicted and ground-truth regions, is commonly used to evaluate segmentation performance. The F1 score combines precision and recall into a single measure (their harmonic mean). IoU and F1 values greater than 0.5 are typically considered to indicate a ‘good’ prediction. The mean(s.d.) values of these metrics across the five folds are reported. The mIoU and mF1 score are the mean IoU and mean F1 score over the background, artery, and vein classes in each epoch. The metrics are calculated as follows:

Precision = (TP)/(TP + FP), where TP and FP stand for true positive classifications and false positive classifications.

Recall = (TP)/(TP + FN), where FN stands for false negative classifications.

IoU = |X ∩ Y| / |X ∪ Y| = (TP)/(TP + FN + FP), where X is the ground truth manually annotated by surgeons and Y is the area predicted by the model.

F1 score = 2 × (precision × recall) / (precision + recall) = (2 × TP) / (2 × TP + FN + FP).
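The formulas above translate directly into pixel counts. This NumPy sketch assumes integer label maps with 0 = background, 1 = artery, and 2 = vein. It computes each class one-vs-rest from a single ground-truth/prediction pair and returns 0.0 when a class is absent from both maps, a convention the text does not specify. The text also does not state whether counts were accumulated over all validation images before dividing.

Because IoU = TP/(TP + FN + FP) and F1 = 2TP/(2TP + FN + FP), the two metrics are linked by F1 = 2 × IoU/(1 + IoU). For example, the artery IoU of 0.8814 in the first fold of Table 2 gives 2 × 0.8814/1.8814 ≈ 0.937, which is consistent with the reported F1 of 0.9369.

```python
import numpy as np


def class_metrics(gt: np.ndarray, pred: np.ndarray, class_id: int) -> dict:
    """Pixel-wise precision, recall, IoU, and F1 for one class (one-vs-rest)."""
    g, p = gt == class_id, pred == class_id
    tp = int(np.sum(g & p))    # class pixels predicted as the class
    fp = int(np.sum(~g & p))   # other pixels predicted as the class
    fn = int(np.sum(g & ~p))   # class pixels the model missed
    union = tp + fp + fn
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "iou": tp / union if union else 0.0,
        "f1": 2 * tp / (2 * tp + fp + fn) if union else 0.0,
    }


def mean_iou_f1(gt, pred, class_ids=(0, 1, 2)):
    """Per-class metrics plus mIoU and mF1 over background, artery, and vein."""
    per_class = {c: class_metrics(gt, pred, c) for c in class_ids}
    miou = float(np.mean([m["iou"] for m in per_class.values()]))
    mf1 = float(np.mean([m["f1"] for m in per_class.values()]))
    return per_class, miou, mf1


if __name__ == "__main__":
    gt = np.array([[0, 1, 1], [0, 2, 2], [0, 0, 2]])
    pred = np.array([[0, 1, 0], [0, 2, 2], [0, 2, 2]])
    per_class, miou, mf1 = mean_iou_f1(gt, pred)
    print(per_class[1])  # artery: TP = 1, FP = 0, FN = 1
    print(round(miou, 4), round(mf1, 4))
```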

Because the model was trained using static images, the practical application in dynamic videos needed to be verified. Hence, the dynamic performance of the PGBVRM was further verified by utilizing it to recognize the perigastric blood vessels in separate intraoperative videos that were recorded in 2023 from 15 patients in the dynamic verification group who met the inclusion and exclusion criteria and were not used in model development.

Hardware and software

All model development procedures were performed using a script written in Python 3.8 (Python Software Foundation, Wilmington, DE, USA). The model was trained using the open-source machine learning framework PaddlePaddle (https://github.com/PaddlePaddle). Model development and validation were completed using a computer equipped with an NVIDIA RTX3060 GPU with 24 GB VRAM (NVIDIA, Santa Clara, CA, USA) and 12th Gen Intel® Core™ i9-12900K 3.19 GHz with 64 GB RAM.

Statistical analysis

To describe the clinical and pathological characteristics of patients in this study, descriptive statistics were utilized. Among continuous variables, those that followed a normal distribution are described using mean(s.d.) and Student’s t test was used to compare differences, whereas skewed continuous variables are described using median (interquartile range) and the Mann–Whitney U test was used to compare differences. Categorical variables are described using n (%) and the chi-squared test or Fisher’s exact test was used to assess differences. Analyses were all performed using SPSS® (IBM, Armonk, NY, USA; version 26 for Windows). P < 0.050 was considered statistically significant.
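The text does not state how the choice between the chi-squared test and Fisher's exact test was made. This sketch assumes a common convention: use Fisher's exact test when any expected cell count is below 5. The pure-Python code below computes expected counts for a contingency table (rows = groups, columns = categories) and applies that rule. The helper names are hypothetical. With the sex counts from Table 1, the expected number of women in the dynamic verification group is 15 × 42/131 ≈ 4.81, so the rule selects Fisher's exact test.

```python
from typing import List


def expected_counts(table: List[List[int]]) -> List[List[float]]:
    """Expected cell counts under independence: row total x column total / N."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    return [[r * c / n for c in col_totals] for r in row_totals]


def choose_categorical_test(table: List[List[int]], min_expected: float = 5.0) -> str:
    """Assumed rule: Fisher's exact test if any expected count < min_expected."""
    if any(e < min_expected for row in expected_counts(table) for e in row):
        return "Fisher's exact test"
    return "chi-squared test"


if __name__ == "__main__":
    # Sex (male, female) by group, from Table 1: development vs dynamic verification.
    sex = [[80, 36], [9, 6]]
    print([[round(e, 2) for e in row] for row in expected_counts(sex)])
    print(choose_categorical_test(sex))  # Fisher's exact test
```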

Results

Clinical and pathological characteristics of patients in the model development group and the dynamic verification group

In total, 116 patients were enrolled in the model development group and 15 in the dynamic verification group. Their clinical and pathological characteristics are summarized in Table 1. There were no statistically significant differences between the two groups in sex, age, body mass index (BMI), AJCC cStage, tumour location, resection type, tumour size, tumour differentiation, or AJCC pStage.

|  | Model development group (n = 116) | Dynamic verification group (n = 15) | P |
|---|---|---|---|
| Sex |  |  | 0.685 |
| Male | 80 (69.0) | 9 (60.0) |  |
| Female | 36 (31.0) | 6 (40.0) |  |
| Age (years), median (i.q.r.) | 60 (52–64) | 59 (53–65) | 0.682 |
| BMI (kg/m²), mean(s.d.) | 22.20(3.23) | 22.12(4.46) | 0.597 |
| cT |  |  | 0.155 |
| cT1 | 5 (4.3) | 1 (6.7) |  |
| cT2 | 25 (21.6) | 1 (6.7) |  |
| cT3 | 65 (56.0) | 7 (46.7) |  |
| cT4a | 21 (18.1) | 6 (40.0) |  |
| cN |  |  | 0.419 |
| cN0 | 31 (26.7) | 2 (13.3) |  |
| cN+ | 85 (73.3) | 13 (86.7) |  |
| cStage |  |  | 0.242 |
| cStage IIA | 30 (25.9) | 2 (13.3) |  |
| cStage IIB | 31 (26.7) | 2 (13.3) |  |
| cStage III | 55 (47.4) | 11 (73.3) |  |
| Tumour location |  |  | 0.675 |
| Upper | 20 (17.2) | 4 (26.7) |  |
| Middle | 23 (19.8) | 2 (13.3) |  |
| Lower | 73 (62.9) | 9 (60.0) |  |
| Resection type |  |  | 0.510 |
| Total gastrectomy | 29 (25.0) | 5 (33.3) |  |
| Distal gastrectomy | 82 (70.7) | 9 (60.0) |  |
| Proximal gastrectomy | 5 (4.3) | 1 (6.7) |  |
| Tumour size (cm), median (i.q.r.) | 4.00 (2.55–5.50) | 4.50 (3.00–7.00) | 0.329 |
| Tumour differentiation |  |  | 0.908 |
| Well/moderate | 37 (31.9) | 4 (26.7) |  |
| Poor/undifferentiated | 79 (68.1) | 11 (73.3) |  |
| pT |  |  | 0.812 |
| pT1 | 20 (17.2) | 1 (6.7) |  |
| pT2 | 12 (10.3) | 2 (13.3) |  |
| pT3 | 19 (16.4) | 3 (20.0) |  |
| pT4 | 65 (56.0) | 9 (60.0) |  |
| pN |  |  | 0.979 |
| pN0 | 31 (26.7) | 4 (26.7) |  |
| pN1 | 13 (11.2) | 1 (6.7) |  |
| pN2 | 17 (14.7) | 3 (20.0) |  |
| pN3a | 26 (22.4) | 3 (20.0) |  |
| pN3b | 29 (25.0) | 4 (26.7) |  |
| pStage |  |  | 0.670 |
| pStage I | 24 (20.7) | 3 (20.0) |  |
| pStage II | 20 (17.2) | 1 (6.7) |  |
| pStage III | 72 (62.1) | 11 (73.3) |  |


Values are n (%) unless otherwise indicated. Clinical and pathological staging are based on the 8th edition of the American Joint Committee on Cancer Gastric Cancer Staging Manual. i.q.r., interquartile range; BMI, body mass index.


Evaluation metrics

In total, 116 operation videos from 116 patients, yielding 2542 images, were included in the model development group for initial assessment. After removal of images of insufficient quality, 2460 images captured from the 116 videos remained for annotation and were used to develop the PGBVRM. Figure 2 presents the overall study flow chart, and Figure S1 shows representative images from the videos. On visual inspection, the vessel recognition of the PGBVRM was subjectively judged to meet clinical needs. Figure S2 shows representative predictions from the test set, with the annotated ground truth on the left and the prediction of the DL model on the right. In addition to visual checks, the accuracy of the PGBVRM was assessed quantitatively in five-fold cross-validation (Table 2). The maximum mIoU and mF1 score were reached at epochs 200, 267, 249, 256, and 177 in the first to fifth folds respectively (Figure S3), and the metrics at these epochs were used to evaluate the model. The mean(s.d.) precision, recall, IoU, and F1 score across the five folds were 0.9442(0.0059), 0.9099(0.0163), 0.8635(0.0146), and 0.9267(0.0084) respectively for the artery, and 0.9349(0.0064), 0.8491(0.0259), 0.8015(0.0206), and 0.8897(0.0127) respectively for the vein. Overall, these IoU and F1 values indicate that the model had good predictive performance in recognizing perigastric blood vessels.
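Selecting the evaluation epoch in each fold amounts to an argmax over the per-epoch validation history. In the sketch below, the histories are hypothetical toy values and epochs are 1-indexed. Ties in mIoU are broken by mF1; the text reports that the two maxima coincided in every fold, so this tie-breaking rule is an assumption.

```python
from typing import List, Tuple


def best_epoch(miou_history: List[float], mf1_history: List[float]) -> Tuple[int, float, float]:
    """Return (epoch, mIoU, mF1) for the epoch with the highest mIoU, ties broken by mF1."""
    if len(miou_history) != len(mf1_history) or not miou_history:
        raise ValueError("histories must be non-empty and of equal length")
    i = max(range(len(miou_history)), key=lambda k: (miou_history[k], mf1_history[k]))
    return i + 1, miou_history[i], mf1_history[i]  # epochs are 1-indexed


if __name__ == "__main__":
    # Hypothetical five-epoch validation history for one fold.
    miou = [0.70, 0.82, 0.85, 0.85, 0.83]
    mf1 = [0.80, 0.88, 0.90, 0.91, 0.89]
    print(best_epoch(miou, mf1))  # (4, 0.85, 0.91)
```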

Evaluation metrics of the model when the mean intersection over union and mean F1 score reached their maximum in every fold

| Metric | Target | First fold | Second fold | Third fold | Fourth fold | Fifth fold | Mean(s.d.) |
|---|---|---|---|---|---|---|---|
| Precision | Artery | 0.9491 | 0.9346 | 0.9486 | 0.9457 | 0.9430 | 0.9442(0.0059) |
|  | Vein | 0.9298 | 0.9314 | 0.9336 | 0.9337 | 0.9460 | 0.9349(0.0064) |
| Recall | Artery | 0.9251 | 0.9263 | 0.9117 | 0.8961 | 0.8906 | 0.9099(0.0163) |
|  | Vein | 0.8806 | 0.8604 | 0.8162 | 0.8588 | 0.8296 | 0.8491(0.0259) |
| IoU | Artery | 0.8814 | 0.8699 | 0.8688 | 0.8522 | 0.8451 | 0.8635(0.0146) |
|  | Vein | 0.8257 | 0.8091 | 0.7715 | 0.8094 | 0.7920 | 0.8015(0.0206) |
| F1 score | Artery | 0.9369 | 0.9304 | 0.9298 | 0.9202 | 0.9160 | 0.9267(0.0084) |
|  | Vein | 0.9045 | 0.8945 | 0.8710 | 0.8947 | 0.8840 | 0.8897(0.0127) |


IoU, intersection over union.
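The Mean(s.d.) column can be recomputed from the per-fold values. Using the sample standard deviation (n − 1 denominator) reproduces the reported values for the two rows checked below. This is an inference from the numbers rather than a stated method.

```python
import statistics

# Per-fold values copied from the table above.
ARTERY_PRECISION = [0.9491, 0.9346, 0.9486, 0.9457, 0.9430]
VEIN_RECALL = [0.8806, 0.8604, 0.8162, 0.8588, 0.8296]


def mean_sd(values):
    """Mean and sample standard deviation (n - 1 denominator)."""
    return statistics.mean(values), statistics.stdev(values)


def format_mean_sd(values):
    """Format as mean(s.d.) with four decimals, matching the table style."""
    m, s = mean_sd(values)
    return f"{m:.4f}({s:.4f})"


if __name__ == "__main__":
    print(format_mean_sd(ARTERY_PRECISION))  # 0.9442(0.0059)
    print(format_mean_sd(VEIN_RECALL))       # 0.8491(0.0259)
```

With the population standard deviation (`statistics.pstdev`), the artery precision s.d. would instead be about 0.0053, which does not match the table.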


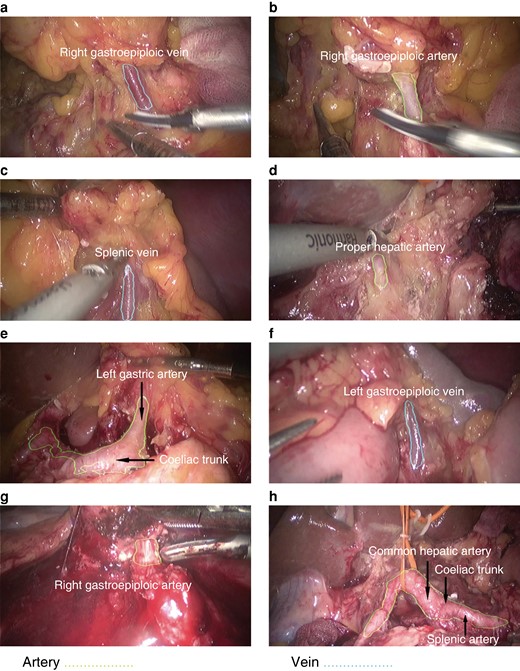

Further verification outcomes

During dynamic verification, the model ran at 30 frames per second, matching the frame rate of the videos. To illustrate the dynamic performance of the PGBVRM, five short clips randomly extracted from the 15 videos are provided (Videos S1–S5). Once blood vessels were exposed in the surgical field of view, arteries and veins were automatically outlined in real time with green and blue dashed lines respectively. Overall, the model recognized arteries and veins well throughout the operation and may therefore help surgeons recognize blood vessels accurately in real time and avoid accidental vascular damage. Figure 3 shows representative frames from the intraoperative test videos. To quantify performance on video objectively, the authors annotated the five clips and ran them through the model to calculate IoU and F1 score for artery and vein recognition; the results are shown in Table S1. Although these metrics were lower than in the test set, all IoU and F1 values for artery and vein recognition exceeded 0.5, suggesting that the model may have clinical applications in the future.
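At 30 frames per second, each frame must be segmented and rendered within about 1000/30 ≈ 33 ms. The rendering step, which draws class outlines over the frame, can be sketched with NumPy as below. This is an illustrative stand-in, not the authors' display code. It assumes an RGB frame and a predicted label map with 1 = artery and 2 = vein, and it approximates dashed lines by keeping boundary pixels in alternating diagonal bands (`dash_period` is a hypothetical parameter).

```python
import numpy as np

# Outline colours (RGB) as described in the text: green for artery, blue for vein.
OUTLINE_COLOURS = {1: (0, 255, 0), 2: (0, 0, 255)}


def boundary(mask: np.ndarray) -> np.ndarray:
    """Pixels of a boolean mask that have at least one 4-neighbour outside it."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    interior = (padded[1:-1, 1:-1] & padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    return mask & ~interior


def draw_outlines(frame_rgb: np.ndarray, pred: np.ndarray, dash_period: int = 0) -> np.ndarray:
    """Return a copy of the frame with artery and vein outlines drawn on it."""
    out = frame_rgb.copy()
    rows, cols = np.indices(pred.shape)
    for class_id, colour in OUTLINE_COLOURS.items():
        edge = boundary(pred == class_id)
        if dash_period > 0:
            # Crude dashing: keep boundary pixels in alternating diagonal bands.
            edge &= ((rows + cols) // dash_period) % 2 == 0
        out[edge] = colour
    return out


if __name__ == "__main__":
    frame = np.zeros((5, 5, 3), dtype=np.uint8)
    pred = np.zeros((5, 5), dtype=np.uint8)
    pred[1:4, 1:4] = 1  # a 3 x 3 "artery"
    overlay = draw_outlines(frame, pred)
    print(int((overlay[..., 1] == 255).sum()))  # 8 outline pixels
```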

Representative frames from the test videos

Notably, the artery could still be recognized when the field of view was full of blood (g).

When tested in complex circumstances, the PGBVRM recognized the artery even when the field of view was full of blood (Fig. 3g), indicating potential to recognize blood vessels in difficult image conditions. To evaluate vessel recognition further in difficult conditions (a large amount of blood or massive surgical smoke in the field of view, or obese patients with BMI greater than or equal to 30 kg/m²), the authors additionally collected and annotated 150 images covering these three conditions; the images were captured from videos recorded in 2023 and were not used in model development. IoU and F1 score were calculated, and the results are shown in Table S1. In images with a large amount of blood or massive surgical smoke, all IoU and F1 values for the artery and vein were below 0.5, except the F1 score for the artery in images with a large amount of blood. In images of obese patients, all IoU and F1 values for the artery and vein exceeded 0.5.

Discussion

In this preclinical feasibility study, a newly developed vessel recognition model achieved satisfactory IoU and F1 scores: 0.8635 and 0.9267 for the artery and 0.8015 and 0.8897 for the vein respectively. In short, the PGBVRM showed high predictive value for intraoperative vessel recognition. In a further 15 operation videos used to verify its real-time performance, the perigastric blood vessels were detected accurately in real time without obvious delay or misdetection. In the five representative annotated video clips, all IoU and F1 values for artery and vein recognition exceeded 0.5, suggesting that the model has potential value for blood vessel recognition and protection during surgery. Interestingly, the PGBVRM could even recognize the artery when the field of view was full of blood. However, further testing in difficult image conditions showed that vessel recognition was relatively poor compared with that in the test set and test videos. IoU and F1 values for the artery and vein exceeded 0.5 in images of obese patients. In images with a large amount of blood or massive surgical smoke, however, all metrics were below 0.5 except the F1 score for the artery in images with blood, meaning that the model failed to recognize vessels accurately in these two conditions. Because the initial image data set consisted of high-quality images in which arteries and veins were clearly visible, the model had little training data for images with blood or smoke, and its current ability in these conditions must be regarded as relatively poor. In contrast, the field of view was not obstructed in images of obese patients, and recognition in these images was satisfactory.

Because the PGBVRM recognizes blood vessels automatically, it may have several promising applications in clinical practice and surgical education, promoting technical progress and standardization of laparoscopic radical gastrectomy with D2 lymphadenectomy. An observational study in Korea (KLASS-02-QC) evaluated the quality of D2 dissection and reported that only 44.4% of observed surgeons were qualified in the initial evaluation17. D2 dissection is difficult, and even experienced surgeons consider it technically challenging5,17,18. In addition, surgeons differ substantially in how they identify and ligate blood vessels because of differences in clinical experience and understanding of vascular anatomy, which may lead to unexpected surgical outcomes. Although laparoscopy provides an enlarged surgical view and has improved surgical precision, quickly and accurately identifying blood vessels during gastric cancer surgery remains technically challenging for inexperienced surgeons. Yet accurate recognition of the perigastric blood vessels, along which the lymph nodes are distributed, is crucial for a high-quality gastrectomy. The PGBVRM offers an additional means of assisting vessel recognition and avoiding bleeding. During laparoscopic gastric cancer surgery, any exposed or partly exposed perigastric vessel can be recognized by the PGBVRM, which might prevent accidental vessel injury, reduce bleeding events, and improve intraoperative safety.

The PGBVRM might also be applied to medical education, surgeon training, and the sharing of surgical experience. In medical schools and teaching hospitals, operation videos are realistic and vivid teaching materials for students and inexperienced surgeons. However, for those with limited experience of laparoscopic gastric cancer surgery, recognizing important structures and learning vessel ligation and lymph node dissection from unprocessed videos is exceedingly challenging. Moreover, manually annotating structures in videos is inconvenient and time-consuming for trainers. In videos processed by the PGBVRM, the perigastric blood vessels are displayed clearly. Surgeons could likewise use the highlighted vessels as landmarks when dissecting lymph nodes along them.

Nevertheless, eliminating misrecognition remains challenging. Some pixels of fat or other non-vascular tissue in the background were recognized as vessel pixels, while some vessel pixels were missed. Occasionally, artery pixels were recognized as vein pixels and vice versa. Several factors may explain this misrecognition. First, most blood vessels are naturally covered by fat or other non-vascular tissue and are clearly exposed only after dissection is completed. Although the authors avoided including obvious non-vascular tissue in the annotations, thin layers of fat or other tissue were inevitably attached to vessels and may have been included, leading the PGBVRM to learn features of fat or other tissue as parts of vessels. Second, slight bleeding during separation and dissection made blood vessels and other structures similar in colour. Furthermore, arteries and veins share similar features under the laparoscopic field of view, so the model sometimes recognized arteries as veins or vice versa. Theoretically, these factors can produce six types of misrecognition: background misrecognized as artery (B→A), artery misrecognized as background (A→B), background misrecognized as vein (B→V), vein misrecognized as background (V→B), artery misrecognized as vein (A→V), and vein misrecognized as artery (V→A). Interestingly, only five types were found in the test set (A→V was not detected), whereas all six types were detected in the test videos. A→V may have gone undetected in the test set because of the relatively limited size of the test set. Figures S4 and S5 present examples of the six types of misrecognition in the test set and dynamic videos.
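The six misrecognition types are the off-diagonal cells of a pixel-level confusion matrix between ground truth and prediction. The minimal NumPy sketch below assumes the label encoding 0 = background (B), 1 = artery (A), 2 = vein (V). Accumulating these counts over a test set and listing the non-zero entries shows which types occurred. The helper names are hypothetical.

```python
import numpy as np

LABELS = {0: "B", 1: "A", 2: "V"}  # assumed encoding: background, artery, vein


def misrecognition_counts(gt: np.ndarray, pred: np.ndarray) -> dict:
    """Pixel counts for each (ground truth -> prediction) error type, e.g. 'A->V'."""
    counts = {}
    for g, g_name in LABELS.items():
        for p, p_name in LABELS.items():
            if g != p:
                counts[f"{g_name}->{p_name}"] = int(np.sum((gt == g) & (pred == p)))
    return counts


def observed_types(pairs) -> list:
    """Error types with at least one pixel across an iterable of (gt, pred) pairs."""
    total = {}
    for gt, pred in pairs:
        for key, n in misrecognition_counts(gt, pred).items():
            total[key] = total.get(key, 0) + n
    return sorted(k for k, n in total.items() if n > 0)


if __name__ == "__main__":
    gt = np.array([[0, 1, 2], [1, 2, 0]])
    pred = np.array([[1, 0, 1], [2, 2, 0]])
    print(misrecognition_counts(gt, pred))
    print(observed_types([(gt, pred)]))  # ['A->B', 'A->V', 'B->A', 'V->A']
```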

This study has several limitations. First, developing the PGBVRM requires a substantial amount of data, but the number of images used was relatively small because only high-quality images were selected. Second, the model was trained on images from a video data set of laparoscopic radical gastrectomy with D2 lymphadenectomy, so its recognition ability might not generalize to other upper abdominal surgeries, such as benign upper gastrointestinal or bariatric surgery, pancreaticoduodenectomy, and hepatectomy. Further experiments, and probably model adjustments, are needed to test the generalizability of the PGBVRM. Third, although blood vessels were identified in most frames of the dynamic videos, recognition in some frames was relatively unsatisfactory, and the IoU and F1 score were lower than in the test set; this might be related to vessel movement and to the videos being continuous sequences rather than still images. In addition, recognition was poorer in images with substantial bleeding or massive surgical smoke, indicating that the model needs more training data from such difficult conditions. Misrecognition, discussed above, is a further limitation. All these limitations indicate that the model should be refined in future studies.

Despite these limitations, this study suggests that the PGBVRM is a promising tool for recognizing perigastric blood vessels in intraoperative images and videos. In subsequent studies, the authors plan to connect the PGBVRM directly to laparoscopic systems to assess whether it improves the accuracy and efficiency with which surgeons recognize and protect vessels during surgery.

Funding

This study was funded by the National Natural Science Foundation of China (82003253), Guangdong Basic and Applied Basic Research Foundation (2021A1515111113), Key Research and Development Plan of Guangzhou City (202206080007) and Talent introduction project of The Affiliated Guangdong Second Provincial General Hospital (YY2024-006). The funding source had no role in the design, execution, interpretation, or writing of the work.

Acknowledgements

G.C. and Y.X. contributed equally to this work.

Author contributions

Guanjian Chen (Data curation, Investigation, Writing—original draft), Yequan Xie (Data curation, Investigation, Writing—original draft), Bin Yang (Data curation, Formal analysis, Methodology), JiaNan Tan (Data curation, Formal analysis, Methodology), Guangyu Zhong (Validation, Visualization), Lin Zhong (Validation, Visualization), Shengning Zhou (Conceptualization, Funding acquisition, Writing—review & editing), and Fanghai Han (Conceptualization, Funding acquisition, Writing—review & editing)

Disclosure

The authors declare no conflict of interest.

Supplementary material

Supplementary material is available at BJS Open online.

Data availability

The data that support the findings of this study are available from the corresponding authors upon reasonable request.