-

PDF

- Split View

-

Views

-

Cite

Cite

Sayan Ghosal, Michael C Schatz, Archana Venkataraman, BEATRICE: Bayesian fine-mapping from summary data using deep variational inference, Bioinformatics, Volume 40, Issue 10, October 2024, btae590, https://doi.org/10.1093/bioinformatics/btae590

Close - Share Icon Share

Abstract

We introduce a novel framework BEATRICE to identify putative causal variants from GWAS statistics. Identifying causal variants is challenging due to their sparsity and high correlation in the nearby regions. To account for these challenges, we rely on a hierarchical Bayesian model that imposes a binary concrete prior on the set of causal variants. We derive a variational algorithm for this fine-mapping problem by minimizing the KL divergence between an approximate density and the posterior probability distribution of the causal configurations. Correspondingly, we use a deep neural network as an inference machine to estimate the parameters of our proposal distribution. Our stochastic optimization procedure allows us to sample from the space of causal configurations, which we use to compute the posterior inclusion probabilities and determine credible sets for each causal variant. We conduct a detailed simulation study to quantify the performance of our framework against two state-of-the-art baseline methods across different numbers of causal variants and noise paradigms, as defined by the relative genetic contributions of causal and noncausal variants.

We demonstrate that BEATRICE achieves uniformly better coverage with comparable power and set sizes, and that the performance gain increases with the number of causal variants. We also show the efficacy BEATRICE in finding causal variants from the GWAS study of Alzheimer’s disease. In comparison to the baselines, only BEATRICE can successfully find the APOE allele, a commonly associated variant of Alzheimer’s.

BEATRICE is available for download at https://github.com/sayangsep/Beatrice-Finemapping.

1 Introduction

Genome-wide association studies (GWAS) provide a natural way to quantify the contribution each genetic variant to the observed phenotype (Uffelmann et al. 2021). However, the univariate nature of GWAS does not take into account the correlation structure shared between the genetic variants due to low recombination of nearby DNA regions (Visscher et al. 2012). Strong correlations can inflate the effect size of a non-causal genetic variant, thus leading to false positive identifications (Brzyski et al. 2017). Fine-mapping (Maller et al. 2012, Spain and Barrett 2015) addresses this problem by analyzing the correlation structure of the data to identify small subsets of causal genetic variants (Spain and Barrett 2015, Schaid et al. 2018). These subsets, known as credible sets, capture the uncertainty of finding the true causal variant within a highly correlated region (Hutchinson et al. 2020). Unlike P values, the corresponding posterior inclusion probabilities (PIPs) computed during fine-mapping can be compared across studies of different sample sizes.

Traditional fine-mapping methods can be grouped into two general categories. The first category uses a penalized regression model to predict the output phenotype based on the collection of genetic variants (Cho et al. 2009, Sabourin et al. 2015). Popular regularizations like LASSO (Tibshirani 1996) and Elastic Net (Sabourin et al. 2015) simultaneously perform effect size estimation while slowly shrinking the smaller effect sizes to zero. The drawback of penalized regression models is that they optimize phenotypic prediction and, due to the correlation structure, do not always identify the true causal variants. The second category relies on Bayesian modeling. Here, the phenotype is modeled as a linear combination of the genetic variants, with sparsity incorporated into the prior distribution for the model weights. Approximate inference techniques, such as Markov Chain Monte Carlo (MCMC) (Guan and Stephens 2011) and variational methods (Carbonetto and Stephens 2012) have been used to infer the effect sizes, PIPs, and credible sets. While these approaches represent valuable contributions to the field, they require the raw genotype and phenotype information, which raises privacy and regulatory concerns, particularly in the cases of publicly shared datasets. MCMC sampling also requires a burn-in period, which adds a substantial (100×) runtime overhead.

In response to these concerns, fine-mapping approaches have moved towards using summary statistics, which can be easily shared across sites. For example, the works of (Hormozdiari et al. 2014, Chen et al. 2015, Benner et al. 2016) use a stochastic or exhaustive search to identify the posterior probabilities of the causal configurations. However, exhaustive search based methods are restricted by the number of assumed causal variants, as this leads to an exponential increase in the dimensionality of the approximate posterior distribution. Stochastic search approaches (Benner et al. 2016) are less computationally expensive, but, by construction, they cannot handle infinitesimal effects from noncausal variants. Another fine-mapping approach is SuSiE (Zou et al. 2022), which estimates the variant effect sizes as a sum of “single effects.” These “single effect” vectors contain one nonzero element representing a causal variant and are estimated using a Bayesian step-wise selection approach. SuSiE provides a simple framework to robustly estimate PIPs and credible sets; however, there is limited evidence for its performance given the presence of infinitesimal genetic effects. Such scenarios can appear due to polygenicity of the trait, trans-interactions of variants, or varying correlation structure of the genomic region. Finally, the most recent fine-mapping method is CARMA (Yang et al. 2023). Unlike previous methods, CARMA assumes a spike-slab prior over the effect sizes and uses a stochastic shotgun bases sampling approach for estimating posterior probabilities.

In this article, we introduce BEATRICE, a novel framework for Bayesian finE-mapping from summAry daTa using deep vaRiational InferenCE (https://github.com/sayangsep/Beatrice-Finemapping). In contrast to prior work, we approximate the posterior distribution of the causal variants given the GWAS summary statistics as a binary concrete distribution (Jang et al. 2016, Maddison et al. 2016), whose parameters are estimated by a deep neural network. This unique formulation allows BEATRICE to use computationally efficient gradient-based optimization to minimize the KL divergence between the proposal binary concrete distribution and the posterior distribution of the causal variants. In addition, our unique optimization strategy samples a representative set of causal configurations in the process of minimizing the empirical KL divergence; these configurations can be used to obtain the PIPs and the credible sets. We compare our model with two state-of-the-art fine-mapping approaches, SuSiE (Zou et al. 2022) and FINEMAP (Benner et al. 2016). We perform an extensive simulation study and quantify the performance of each model across increasing numbers of causal variants and increasing noise, as determined by the degree to which non-causal variants explain the phenotype variance. The runtimes of both SuSiE and BEATRICE are under one minute, which is significantly less than the runtimes of FINEMAP and CARMA. On average BEATRICE achieves a 2.2 fold increase in coverage, a 10% improvement in AUPRC, and similar power in comparison to SuSiE and FINEMAP.

2 Materials and methods

2.1 Generative assumptions of fine-mapping

2.2 Genome-wide association studies

2.3 The deep Bayesian variational model

BEATRICE uses a variational inference framework for fine mapping. For convenience, we represent the diagonal elements of by the vector , where c encodes the causal variant locations. Given that we do not know the effect sizes and locations of the causal and noncausal variants, rather than fixing in Equation (3), we assume that the diagonal elements of are drawn from a binary concrete distribution, which can be viewed as a continuous relaxation of the Bernoulli distribution. Under this assumption, the variance of the effect size is modeled as , where is a binary concrete random variable. The binary concrete distribution provides support for small nonzero values, which can be automatically learned from the data during inference, as described below.

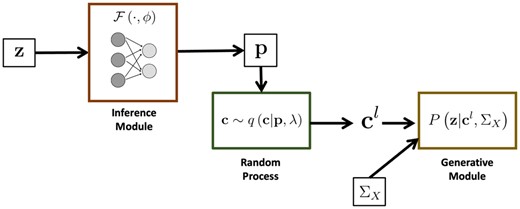

Figure 1 provides an overview of BEATRICE. Our framework consists of three main components: An inference module, a random sampler, and a generative module. The inputs to BEATRICE are the summary statistics z and the LD matrix . The inference module estimates the parameters p of our proposal distribution using a neural network. The random process sampler uses the parameters p to sample potential causal vectors c according to the given proposal distribution. Finally, the generative module calculates the likelihood of the observed summary statistics z via Equation (4).

Overview of BEATRICE. The inputs to our framework are the LD matrix and the summary statistics z. The inference module uses a neural network to estimate the underlying probability map p. The random process generates random samples for the Monte Carlo integration in Equation (12). Finally, the generative module calculates the likelihood of the summary statistics from the sample causal vectors .

2.3.1 Proposal distribution

The goal of fine mapping is to infer the posterior distribution , where c corresponds to the diagonal elements of . Due to the prior formulation in Equations (2) and (3), solving for the true posterior distribution is computationally intractable, as it requires a combinatorial search over the possible causal configurations. Thus, we approximate the posterior distribution with a binary concrete distribution (Maddison et al. 2016), where the parameters p of the distribution are functions of the inputs . Samples c generated under a binary concrete distribution can be viewed as continuous relaxations of independent Bernoulli random variables. This reparametrization (Jang et al. 2016) allows us to learn p from the data using standard gradient descent.

Intuitively, every element of the random vector c can be regarded as a continuous relaxation from a Bernoulli random variable, where controls the extent of relaxation from the 0/1 Bernoulli distribution. This continuous representation allows us to model the infinitesimal effects of the noncausal variants. Additionally, the underlying probability map p captures the relative importance of a variant containing a causal signal. The two unique properties of the probability maps are and . The first property indicates that controls the degree to which assumes low values close to 0 and high values close to 1. This property also give BEATRICE flexibility to handle genetic variants with different levels of association, thus aligning with our generative process that assumes some noncausal variants may have small non-zero effects. The second property implies that a high probability at location i is highly indicative of a causal variant. Taken together, the binary concrete distribution has an easily optimized parameterization with desirable properties.

2.3.2 Variational inference

During optimization, the relaxation parameter is annealed (Jang et al. 2016, Maddison et al. 2016) to a small nonzero value (0.01) with fixed constant rate, and the underlying probability map p is optimized using gradient descent. Specifically, we use a neural network to generate the vector . The details of the neural network architecture are provided in Supplementary Section S1.4. Practically speaking, the neural network ties the input data to the parameter space of the proposal distribution in a data-driven fashion. Empirically, we find that generating p as a function of the input data regularizes the model and leads to a stable optimization.

We have defined for notational convenience.

2.3.3 Optimization strategy

Since Equation (9) does not have closed-form expressions, we use Monte Carlo integration to accurately approximate in the regime of small , i.e. when the binary concrete distribution behaves similarly to a Bernoulli distribution.

In our optimization process, we further take advantage of the sparsity of the causal vector, leading to significant improvements in computational complexity. The complexity analysis is provided in the Supplementary Section S1.5.

Optimization scheme to minimize Equation (12)

Initialize

fordo

Generate

Randomly sample according to Equation (6)

end for

3 Verification and comparison

3.1 Causal configurations and PIPs

We evaluate posterior inclusion probabilities (PIPs) and credible sets of each method. PIPs estimate how likely each variant is causal as a measure of its importance. Credible sets are subsets of variants that likely contain a causal variant, which captures the uncertainty of finding the true variant.

Our experiments use a threshold to binarize the vectors . The threshold can be viewed as a user-specified lower bound for , where fixing preserves only variants with an estimated effect size variance greater than . Interestingly, the threshold can also be viewed as a sparsity penalty that loosely controls the size of the reduced set of causal configurations . For example, when is large, we include only a small set of causal configurations with high posterior probability, whereas when is small, we allow for more configurations to be included in our analysis. A higher threshold is often beneficial in the presence of large interaction effects from non-causal variants, and a lower threshold is useful when the causal variants are weakly associated with the outcome. Finally, we note that is also linked to the computational overhead of BEATRICE. When is large we will need fewer samples to estimate posterior configuration, as compared to smaller values of when , leading to lower time complexity. While we fix the default value for at 0.1, this parameter can be adjusted by the user as desired. In Supplementary Section S1.10, the sensitivity of the results with different values of .

Finally, we identify the credible sets in two steps. First, in a conditional step-wise fashion, we identify the variants with the highest conditional probability given the previously selected variants. This strategy identifies the set of “key” variants with a high probability of being causal. Second, we determine the credible set for each key variant, by computing the conditional inclusion probabilities of each variant given the key variants and adding variants to the credible set. A detailed description of this process can be found in the Supplementary Methods document (Supplementary Section S1.3 in S1 text).

3.2 Baselines

We compare our approach with these state-of-the-art methods:

Finemap: This approach (Benner et al. 2016) uses a stochastic shotgun search to estimate the PIPs and the credible sets.

SuSiE: (Wang et al. 2020, Zou et al. 2022) introduced an iterative Bayesian selection approach for fine-mapping that represents the causal vector as a sum of “single-effect” vectors.

CARMA: The work of Yang et al. (2023) introduced a Bayesian approach for fine-mapping using a spike-and-slab prior over the effect sizes to model the GWAS summary statistics.

Further details are provided in Supplementary Section S1.15.

3.3 Evaluation strategy

We evaluate several metrics of performance for each method.

3.3.1 Evaluating PIPs

We compare the quality of the PIPs via the AUPRC metric. AUPRC (area under the precision-recall curve) is computed by sweeping a threshold on the PIPs and computing precision and recall against the true configuration of causal and noncausal variants. High precision indicates a low false positive rate in the estimated causal variants. High recall indicates that the model correctly identifies more of the causal variants. Thus, the AUPRC, can be viewed as a holistic measure of performance across both classes. AUPRC is also robust to severe class imbalance (Davis and Goadrich 2006), which is the case in fine mapping, as the number of causal variants is small. Additionally, in Supplementary Section S1.8 we visualize power vs. FDR for different thresholds of the PIPs. Following standard nomenclature, both power and recall measures the probability of detection and FDR (1-precision) measures the type-I error (). Therefore, the power versus FDR curve provides a visual comparison, while AUPRC gives the numeric quantification of the performances.

3.3.2 Coverage, power and size of the credible sets

We follow the strategy of Zou et al. (2022) and Wang et al. (2020) to define a credible set. A credible set is defined as a collection of variants that contain a single causal variant with a probability equal to the coverage. Given that the number of causal variants can be arbitrary, we use two metrics to assess the quality of the credible sets: Coverage and power. Coverage is the proportion of credible sets that contain a causal variant, and power is the proportion of causal variants identified by all the credible sets. Higher coverage indicates that the method is confident about its prediction of each causal variant, whereas higher power indicates the method can accurately identify all the causal variants.

One caveat is that a method can generally achieve both higher coverage and higher power simply by adding variants to the credible sets. To counter this trend, we report the average size of the credible sets identified by each method. Ideally, we would like the credible sets to be as small as possible while retaining high coverage and high power.

4 Experimental results

4.1 Setup for simulation experiments

4.1.1 Genotype simulations

We use the method proposed by Dimitromanolakis et al. (2019) to simulate genotypes X based on data from the 1000 Genomes Project. We select an arbitrary sub-region () from Chromosome 2 as the base. After removing rare variants (MAF), the remaining 3.5K variants are used to simulate pairs of haplotypes to generate 10 000 unrelated individuals. We do not run any filtering of variants on our simulated data. We chose a MAF threshold of 0.02, as it lies in the middle of the range 0.01–0.05 used in GWAS studies (Gibbs et al. 2003). In each experiment below, we randomly select variants and individuals to generate the phenotype data.

4.1.2 Phenotype generation

We generate the phenotype y from a standard mixed linear model (Pirinen et al. 2013), where the influences of the causal variants are modeled as fixed effects, and the influences of other noncausal variants are modeled as random effects. In this case, the genetic risk for a trait is spread over the entire dataset, with each variant having small individual effects, as per the polygenicity assumption of a complex trait. We randomly select the causal variants in our simulations. Thus, some simulations will have causal variants in LD, while others will select causal variants with low correlation.

To replicate real-world scenarios, we use a GWAS setup to estimate the effect size of each variant i based on the phenotype and the unnormalized genotype data . Here, we use a simple linear model and ordinary least squares to estimate the effect sizes. From here, we convert the estimated effect sizes to z-scores via , where denotes the standard error. The LD matrix is computed as , where X is the normalized genotype data. The z-scores and LD matrix are input to each of the fine-mapping methods above.

4.1.3 Noise configurations

We evaluate the performance of each method while varying the number of causal variants d, the total genotype variance , and the proportion of this variance associated with the causal variants p. Formally, we sweep over the ranges , , and . For each parameter setting, we randomly generate 20 datasets by independently re-sampling the causal variant locations, the effect sizes , the noncausal component , and the noise . We run all fine-mapping methods over a total of configurations.

4.2 Application to real-world SNP data

We compare the performance of each fine-mapping method on a GWAS study of Alzheimer’s Disease (AD). AD is a polygenic disorder, making it an ideal test bed to evaluate each model. We use the publicly available GWAS summary statistics released by Wightman et al. (2021) to obtain the z-scores and the UK Biobank data to generate the LD matrices. We compare the fine-mapping results of SuSiE and BEATRICE. Notice that we cannot run FINEMAP because the reported GWAS statistics only contain the z-scores, whereas FINEMAP requires the effect sizes and the corresponding standard errors to perform fine mapping. This real-world study also highlights a major drawback of FINEMAP, should the original effect sizes and standard errors be unavailable. Both SuSiE and BEATRICE were run with their default setting.

4.2.1 Data acquisition

The recent GWAS analysis performed by Wightman et al. (2021) identified multiple statistically significant index SNPs in AD. We clumped the GWAS statistics to 175 regions and fine mapped the top 20 regions sorted by the P-values of the index SNPs. Additionally, we sub-select SNPs from the GWAS summary statistics that overlap with both the publicly available 1000 Genome Phase-3 data and the UK Biobank data.

4.2.2 Data preprocessing

We first filter out variants that are not common to both the 1000 Genome database and the UK Biobank database. We further removed the strand ambiguous SNPs (i.e. those with complementary alleles, either C/G or A/T SNPs) due to the lack of strand information. For example, an A/T allele can be mapped to T/A, or A/T based on the strand information. Without this information, we cannot map the allele in the base data and GWAS results. After filtering, we verify that the counted alleles are present in the GWAS summary statistics for AD and the UK biobank LD matrices. We identify 175 clumps using the GWAS statistics, where each clump contains SNPs less than 250KB away from the index SNP with and . We note that the GWAS statistics published by Wightman et al. (2021) reported multiple SNPs in the first clump with z-scores of infinity. For numerical stability, we clip these z-scores to 200. Finally, we merge overlapping clumped regions into a single region. Further details about the clumped variants are reported in Supplementary Table S1. The resulting variants within each clump are used to generate LD matrices using the publicly available LD matrices derived from 337K subjects of the UK Biobank database (Weissbrod et al. 2020) (https://registry.opendata.aws/ukbb-ld/).

4.3 Model performance

4.3.1 Simulated experiments

4.3.1.1 Varying the number of causal variants

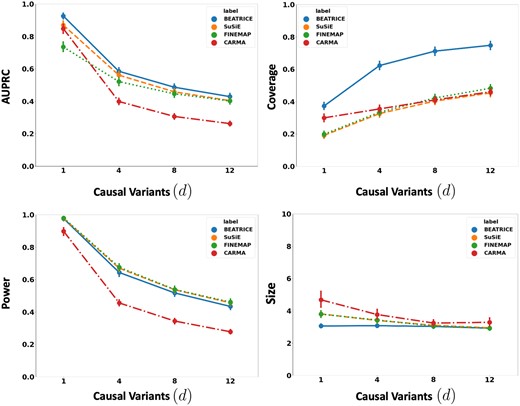

Figure 2 illustrates the performance of each method (BEATRICE, FINEMAP, SuSiE, and CARMA) while increasing the number of causal variants from to . The points denote the mean performance across all noise configurations for fixed d, and the error bars represent the 95% confidence interval across these configurations. We note that BEATRICE achieves a uniformly higher AUPRC than all baseline methods, which suggests that BEATRICE can better estimate the PIPs than CARMA, FINEMAP or SuSiE. BEATRICE also provides a 0.9–1.4-fold increase in coverage than the baselines while maintaining a similar power, which indicates that the credible sets generated by BEATRICE are more likely to contain a causal variant than the baselines. Finally, we note that BEATRICE, identifies the same or smaller credible set sizes than CARMA, FINEMAP, and SuSiE. Taken together, as the number of causal variants increases, BEATRICE gives us a better estimate of the PIPs. Unlike the baseline methods, BEATRICE does not impose any prior assumptions over the total number of causal variants, which may lead to its improved performance. Finally, we observe that CARMA achieves notably lower AUPRC and power than all other methods and is comparable to SuSiE and FINEMAP in the other metrics.

The performance metrics for the three methods across varying numbers of causal variants. Along the x-axis, we plot the number of causal variants, and across the y-axis, we plot the mean and confidence interval (95%) of each metric. We calculate the mean by fixing d to a specific value and sweep over all the noise settings where .

4.3.1.2 Increasing the genotype contribution

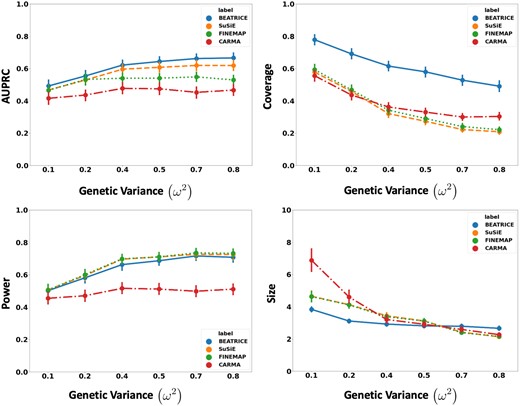

Figure 3 shows the performance of each method while increasing the genetically explained variance from to . Similar to the above experiment, the points in the figure denote the mean performance across all other noise configurations for fixed , and the error bars represent the 95% confidence intervals across these configurations. We note that BEATRICE achieves a significantly higher AUPRC than FINEMAP and CARMA and a slightly higher AUPRC than SuSiE. When evaluating the credible sets, we observe similar trends in coverage (BEATRICE is 0.25–2.34 folds higher) and power (similar performance across methods). Once again, CARMA achieves significantly lower AUPRC and power. All four methods identify credible sets of similar size. We submit that BEATRICE achieves the best trade-off across the four performance metrics.

The performance metric for increasing phenotype variance is explained by genetics. Along the x-axis, we plot the variance explained by genetics , and across the y-axis, we plot each metric’s mean and confidence interval (95%). We calculate the mean by fixing to a specific value and sweep over all the noise settings where .

4.3.1.3 Varying the contributions of causal and noncausal variants

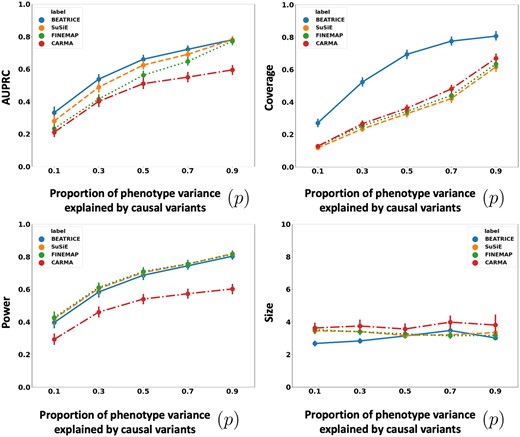

Figure 4 illustrates the performance of each method while increasing the contribution of the causal variants from to . Again, the points denote the mean performance across all other noise configurations for fixed p, and the error bars represent the 95% confidence intervals across these configurations. From an application standpoint, the presence of noncausal variants with small non-zero effects makes it difficult to detect the true causal variants. Accordingly, we observe a performance boost across all methods when p is larger. Similar to our previous experiments, BEATRICE provides the best AUPRC, with converging performance as . In addition, BEATRICE identifies smaller credible sets with significantly higher coverage while maintaining power. Thus, we conclude that BEATRICE is the most robust of the three methods to the presence of noise from noncausal variants. This performance gain may arise from our binary concrete proposal distribution for the causal vector c, which provides flexibility to accommodate varying degrees of association. Finally, we note that compared to all the approaches, CARMA has significantly lower AUPRC and power, which suggests that it fails to identify the true causal SNPs across the various noise paradigms. Moreover, as shown in Fig. 10, the runtime of CARMA is significantly higher than the others due to its MCMC procedure. Thus, we omit it from our subsequent analyses.

The performance metric for multiple levels of noise introduced by noncausal variants. The noise level is explained by the variance ratio of noncausal variants vs. causal variants. Along the x-axis, we plot the noise level ; across the y-axis, we plot each metric’s mean and confidence interval (95%). We calculate the mean by fixing p to a specific value and sweep over all the noise settings where .

4.3.2 Fine-mapping results on real-world AD data

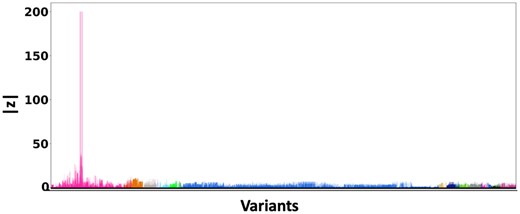

Figure 5 shows the absolute z-scores of the SNPs in each of the 20 clumps. The GWAS statistics of Wightman et al. (2021) report multiple z-scores with high values in each clump. The inflation in the GWAS statistics could result from the infinitesimal effects of multiple SNPs within each clump. This scenario is similar to the simulation settings when p is small.

z-scores of the variants present each of the 20 clumps. The scores are obtained from a GWAS study of Alzheimer’s Disease (Wightman et al. 2021) colored by locus. The x-axis denotes the SNP index present in each locus. The y-axis reports the absolute z-scores.

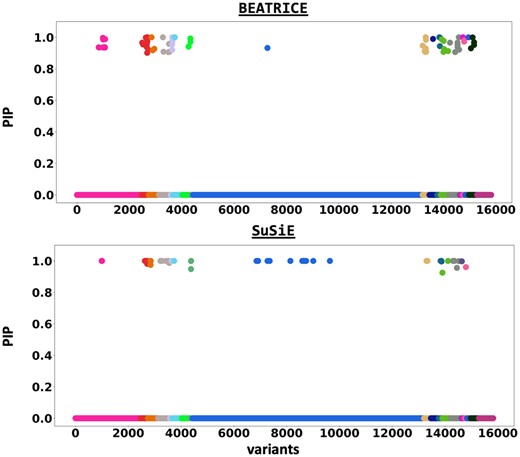

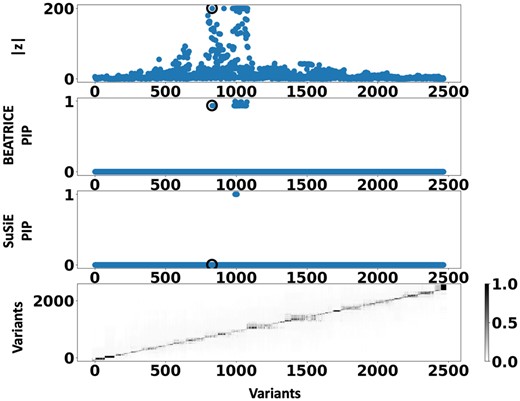

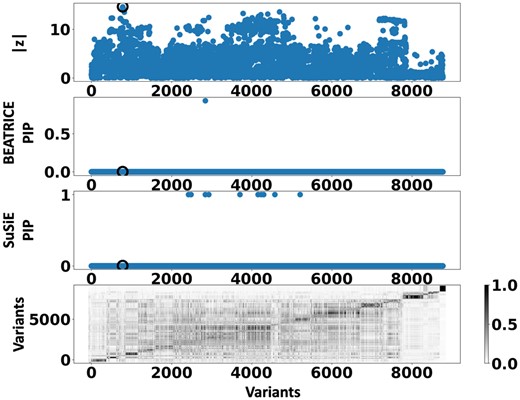

Figure 6 shows the PIPs () identified by each fine-mapping method, colored by clump (i.e. locus). The x-axis corresponds to the SNP index, and the y-axis reports the corresponding PIP values. We explore the variants from the first clump (chr19:44515074–46457976), which contains the TOMM40, APOC1, and APOE genes. These genes have been repeatedly identified as potential disease-causing loci for AD (Lund et al. 2014, Zhou et al. 2014, Cooper et al. 2022, Chen et al. 2023). In Supplementary Tables S2 and S3, we report the SNPs with PIP for BEATRICE and SuSiE, respectively. Figure 7 provides a detailed view of the z-scores, PIPs estimated by BEATRICE and SuSiE, and the LD structure of the variants within this clump. Interestingly, one of the high PIP SNP identified by BEATRICE is rs7412, the Apolipoprotein E (APOE) 2 allele, which is a common landmark “risk” factor for Alzheimer’s disease (Kulminski et al. 2020). As a second point of reference, Fig. 8 provides a detailed view of the variants in the ninth clump. Clump 9 (chr6:32123639–32900787) is the largest clump (8000 SNPs) in our analyses and overlies the HLA region, which is commonly known for complex LD (Evseeva et al. 2010) structure.

The posterior inclusion probabilities obtained by running fine mapping on the top 20 clumps from the Alzheimer’s Disease GWAS. We report the PIPs of SNPs with PIP . The x-axis denotes the SNP index present in each locus. The y-axis reports the PIP values.

Detailed results for Clump 1: Top row: absolute z-scores of the included variants; the index SNP is indicated by a black circle. Second row: PIPs identified by BEATRICE. Third row: PIPs identified by SuSiE. Bottom row: LD structure between the variants, where the color bar indicates the values.

Detailed results for Clump 9: Top row: absolute z-scores of the included variants; the index SNP is indicated by a black circle. Second row: PIPs identified by BEATRICE. Third Row: PIPs identified by SuSiE. Bottom row: LD structure between the variants, where the color bar indicates the values.

In an exploratory analysis, we investigate the biological consequences of the SNPs with high PIPs () of the first clump, as identified by each method. Further details about this analysis are presented in Supplementary Section S1.17.

5 Discussion and summary

BEATRICE is a novel and general-purpose tool for fine mapping that can be used across a variety of studies. One key contribution of BEATRICE over methods like FINEMAP and SuSiE is its ability to discern infinitesimal effects from noncausal variants, including those in high LD with true causal variants. Our simulated experiments in Section 4.3.1 and Supplementary Section S1.8 capture this improved performance by sweeping the proportion of the observed variance attributed to causal (fixed effects) and noncausal (random effects) genetic variants. This parameter is swept over its natural domain, such that implies that the only link between the genotype and phenotype comes from the causal variants. At this extreme, all methods achieve comparable performance (Fig. 4). However, as p decreases, meaning that the effects of noncausal variants increase, BEATRICE outperforms both baselines. In Fig. 9, we show an example of fine mapping using BEATRICE for a simulation setting . Notice that the z-score for the causal SNP is not the largest, which accurately highlights the importance of fine mapping. In Supplementary Section S6, we compare examples of the effects of SNP heritability explained by the noncausal SNPs. We show that when noncausal SNPs explain most of the genetic heritability, only BEATRICE can successfully assign the highest PIP to the causal SNPs. This result shows that BEATRICE can successfully use the binary concrete distribution to model noncausal variants with non-zero effects while the sparsity term of prioritizes potentially causal variants.

The fine-mapping performance of BEATRICE, SuSiE, and FINEMAP at a noise setting of . (a) The absolute z-score of each variant as obtained from GWAS. (b) Pairwise correlation between the variants. (c–e) illustrate the posterior inclusion probabilities of each variant, as estimated by the three methods. The circle marked by an arrow shows the location of the causal variant. The other nonzero markers represent the variants assigned to a credible set, coded based on the assignment.

A second contribution of BEATRICE is our strategic integration of neural networks within a larger statistical framework. Specifically, we use the neural network in Supplementary Fig. S2 as an inference engine to estimate the parameters p of our proposal distribution. Effectively, we leverage the neural network as a universal function approximator to establish the relationship between the parameter space and the input data space. We choose neural networks as our inference engine over other types of functions due to their flexibility, scalability, and ease of optimization via backpropagation. We demonstrate in Sections 4.3.1 and Supplementary Sections S1.8 and S1.9 that the deep neural network can successfully generate sample causal configurations that well approximate the true posterior distribution leading to improved AUPR, power, coverage, and FDR. Moreover, BEATRICE leverages the continuous representation of the causal vectors to backpropagate the gradients through the random sampler and train the network. These continuous representations of result in low-variance gradients with respect to the underlying probability map, thus leading to a stable optimization.

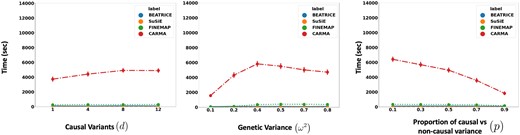

Related to the above point, a third contribution of BEATRICE is its ability to identify the representative sets of causal configurations from the exponential search space to compute the PIPs and credible sets. In Supplementary Sections S1.11, S1.12, and S1.14, we show that BEATRICE generates well-calibrated PIPs in the presence of model misspecification. The improved performance can be attributed to our random sampling process, which ensures that the randomly sampled causal vectors slowly converge to the causal configurations with nonnegligible posterior probability. Furthermore, this strategy allows us to efficiently estimate the PIPs in finite run-time. However, we note that the current implementation of BEATRICE does not estimate the posterior variant effect sizes. Instead, BEATRICE uses the binary concrete vectors to model the variance of the effect sizes. This property allows our model to adjust for infinitesimal effects from the noncausal variants. Figure 10 compares the average run-time of each method across all parameter settings. We observe that the run-time of BEATRICE and SuSiE are less than 1 min. FINEMAP requires five minutes on average to converge. CARMA requires approximately 100 min to converge, likely due to the slow MCMC sampling procedure for generating posterior estimates.

The runtime comparison of BEATRICE, SuSiE, and FINEMAP across all the simulation settings.

The final contribution of BEATRICE is its simple and flexible design. Importantly, BEATRICE can easily incorporate priors based on the functional annotations of the variants. Specifically, in the current setup, the prior over c is effectively constant, as captured by . We can integrate functional information simply by modifying the distribution of across the variants. Going one step further, a recent direction in fine mapping is to aggregate data across multiple studies to identify causal variants (LaPierre et al. 2021). This extension would amount to adding multiple log-likelihood terms in Equation (12) corresponding to the different GWAS inputs matrices across studies. A similar extension can be used for multiple ancestries or traits. In this case, the inputs would reflect a particular ancestry or trait. The main difference between BEATRICE and these extensions is that the parameters p would be generated as a function of multiple scores, i.e. .

In this work, we have shown that BEATRICE is highly efficient in handling the complexity that arises due to infinitesimal effects and out-of-sample LD matrices (Supplementary Section S1.13). Thus, we believe that the advantages of BEATRICE will be more evident when considering polygenic traits and diseases. Additionally, the high coverage and small size of credible sets reported in Figs 2–4 show that BEATRICE can successfully prioritize variants in the presence of LD. This property is in stark contrast with the baseline fine-mapping approaches that generate a large number of credible sets that do not contain a causal variant. Taken together, we believe BEATRICE could be useful in eQTL studies, where multiple variants within a locus can show strong association due to the complex LD structure present in the human genome (Zou et al. 2019).

In summary, we present BEATRICE, a novel Bayesian framework for fine mapping that identifies potentially causal variants within GWAS risk loci through the shared LD structure. Using a variational approach, we approximate the posterior probability of the causal location(s) via a binary concrete distribution. In conjunction, we introduce a new strategy to build a reduced set of causal configurations within the exponential search space that can be neatly folded into our optimization routine. This reduced set is used to approximate the PIPs and identify credible sets. We have demonstrated through a comprehensive simulation the advantages of BEATRICE under different noise settings and that BEATRICE outperforms existing fine-mapping methods. Hence, BEATRICE is a powerful tool to refine the results of a GWAS or eQTL analysis. It is also flexible enough to accommodate a variety of experimental settings.

Supplementary data

Supplementary data are available at Bioinformatics online.

Conflict of interest

The authors do not have any conflicting interests.

Funding

This work was supported by the National Science Foundation CAREER Award 1845430 [to A.V.], the National Institutes of Health Awards R01-HD108790 (to A.V.), U24-HG010263 (to M.C.S.), and U41-HG006620 (to M.C.S.).

Data availability

We have compiled the code for BEATRICE and its dependencies into a docker image, which can be found at https://github.com/sayangsep/Beatrice-Finemapping. We have also provided details about the usage and outputs of the model in Supplementary Section S1.20.