-

PDF

- Split View

-

Views

-

Cite

Cite

Xiaorui Su, Lun Hu, Zhuhong You, Pengwei Hu, Lei Wang, Bowei Zhao, A deep learning method for repurposing antiviral drugs against new viruses via multi-view nonnegative matrix factorization and its application to SARS-CoV-2, Briefings in Bioinformatics, Volume 23, Issue 1, January 2022, bbab526, https://doi.org/10.1093/bib/bbab526

Close - Share Icon Share

Abstract

The outbreak of COVID-19 caused by SARS-coronavirus (CoV)-2 has made millions of deaths since 2019. Although a variety of computational methods have been proposed to repurpose drugs for treating SARS-CoV-2 infections, it is still a challenging task for new viruses, as there are no verified virus-drug associations (VDAs) between them and existing drugs. To efficiently solve the cold-start problem posed by new viruses, a novel constrained multi-view nonnegative matrix factorization (CMNMF) model is designed by jointly utilizing multiple sources of biological information. With the CMNMF model, the similarities of drugs and viruses can be preserved from their own perspectives when they are projected onto a unified latent feature space. Based on the CMNMF model, we propose a deep learning method, namely VDA-DLCMNMF, for repurposing drugs against new viruses. VDA-DLCMNMF first initializes the node representations of drugs and viruses with their corresponding latent feature vectors to avoid a random initialization and then applies graph convolutional network to optimize their representations. Given an arbitrary drug, its probability of being associated with a new virus is computed according to their representations. To evaluate the performance of VDA-DLCMNMF, we have conducted a series of experiments on three VDA datasets created for SARS-CoV-2. Experimental results demonstrate that the promising prediction accuracy of VDA-DLCMNMF. Moreover, incorporating the CMNMF model into deep learning gains new insight into the drug repurposing for SARS-CoV-2, as the results of molecular docking experiments reveal that four antiviral drugs identified by VDA-DLCMNMF have the potential ability to treat SARS-CoV-2 infections.

1 Introduction

Coronaviruses (CoVs) have become a major public health concern due to two severe CoV outbreaks at the beginning of 21st century, including severe acute respiratory syndrome-associated coronavirus (SARS-CoV) in 2002 [4] and Middle East respiratory syndrome-associated coronavirus (MERS-CoV) in 2012 [8]. Unfortunately, the coronavirus disease 2019 (COVID-19) caused by a new enveloped RNA |$\beta $|-CoV [55], named SARS-CoV-2, has produced a global pandemic since December 2019 [72] with tens of millions of infected people and millions of death. At present, there are still no proven effective drugs for SARS-CoV-2, despite increasing efforts made by pharmaceutical companies in drug development [15].

Due to the ability of significantly accelerating the drug development process, reducing overall costs and avoiding risks [31], drug repositioning is believed as a promising and efficient computational way to discover new indications of approved drugs. Hence, in order to response the urgent demand for effectively treating SARS-CoV-2 infections, a variety of drug repositioning methods have been recently proposed and they are broadly classified into two categories including structure-based and network-based methods [9]. Among them, structure-based methods target to identify chemical compounds that may act on SARS-CoV-2 by using molecular docking [49], whereas network-based ones predict novel virus-drug associations (VDAs), which associate SARS-CoV-2 to approved drugs, from a given VDA network. Though promising, structure-based methods suffer from the disadvantage of being time-consuming when searching for an effective compound against SARS-CoV-2 [1]. It is for this reason that we focus our study on network-based methods. However, for new viruses, such as SARS-CoV-2, without any known VDAs, predicting their potential drugs would certainly result in a well-known cold-start problem, which inhibits the development of accurate network-based methods to repurpose approved drugs [46].

To address this problem, network-based methods often integrate the biological knowledge of viruses into VDA networks, thus alleviating the influence exerted by the lack of VDAs involving new viruses [18]. In particular, IRNMFVDA [58] first constructs a VDA matrix based on VDAs, a drug similarity matrix and a virus similarity matrix. An indicator matrix is then used to determine the most likely drugs for SARS-CoV-2 with nonnegative matrix factorization (NMF). Similar to IRNMFVDA, SCPMF [40] also combines VDAs and the similarity information of drugs and viruses to generate a heterogeneous network and then identifies novel VDAs related to SARS-CoV-2 by utilizing similarity constrained probabilistic NMF. In addition to these NMF-based models, there are also several attempts made from an alternative view. For example, VDA-KATZ [70] considers the identification of novel VDAs as a problem of counting the number of connection paths between viruses and drugs in a heterogeneous network and applies a network-based association prediction model to infer possible drugs associated with SARS-CoV-2. Inspired by the link prediction works of layer attention graph convolution network (GCN)[66] and multi-view GCN [11], SANE [56] designs an attentive network embedding model by considering the sequence information of drugs and viruses as node attributes and potential drugs against COVID-19 can be identified with an attention-based pre-depth-first-search strategy. VDA-RWR [47] incorporates VDAs with the similarity information of drugs and viruses and then applies a random walk with restart method to estimate the probability of antiviral drugs against SARS-CoV-2.

Hence, the development of network-based methods has been inspired by the increasing coverage of genomic data [33], which gains new insights into the patterns characterizing already known VDAs to identify the missing ones. As mentioned above, state-of-the-art network-based methods rely on the perception that similar viruses are possibly be treated by the same drug. To this end, they construct a VDA matrix for a given VDA network and refine it by additionally considering the similarity information of drugs and viruses. However, such refinement fails to capture the structural and genomic forces that govern VDA networks if without placing further constraints to strengthen the perception of interest [17]. Taking NMF-based methods as an example, they adopt traditional NMF models to project viruses and drugs into a low-rank latent feature space (LFS), where not all similarity information of viruses and drugs are consistently preserved when compared with those in their original feature space [32]. Moreover, according to the results presented in Section of Ablation study, we note that the similarity between viruses and drugs, which is impossible to exist in reality, is observed from the visualization of their latent features obtained with NMF. With such noisy information, NMF-based methods assign a larger prediction score to virus-drug pairs that share more similar partners including not only drugs but also viruses and are prone to identify more false-positive VDAs by confusing the perception about how new viruses are associated with drugs. Our results suggest that the fundamental reason for the failure of network-based methods is the lack of such a constraint that accounts for the enhancement and consistency of our perception during refinement.

In this work, we propose a novel constrained multi-view nonnegative matrix factorization (CMNMF) model to ensure that, for drugs and viruses, their respective similarity information in a low-rank LFS are consistent with those in their original feature space by generating few noisy information. To do so, two similarity matrices are first constructed for drugs and viruses by using chemical structures and genome sequences respectively. Combining them with the VDA matrix, we further obtain an enhanced association matrix, each element of which indicates the association strength between corresponding drug and virus from a more comprehensive perspective. Modified from traditional NMF, CMNMF formulates additional constraints on these three matrices. In doing so, the optimization procedure of CMNMF is targeted toward to preserving the similarity information of drugs and viruses in LFS as much as possible. By taking the latent feature vectors of drugs and viruses as their initial node representations, we apply a graph convolutional network with attention-based neighbor sampling to optimize the representation of drugs and viruses in a given VDA network and then develop a deep learning method, namely VDA-DLCMNMF, for predicting potential drugs that can be used to treat infections caused by new viruses. The major contributions of this work are summarized as follows.

|$\bullet $| Regarding network-based drug repurposing methods, our experimental results suggest that the fundamental reason for their failure is the lack of such a constraint that accounts for the enhancement and consistency of the intuitive perception about the potential drugs that new viruses are more likely to associate with.

|$\bullet $| A novel CMNMF model is proposed to ensure that the respective similarity information of viruses and drugs are preserved when projected from their own feature spaces onto a unified LFS by generating few noisy information. To avoid random initialization, we take the latent feature vectors of viruses and drugs as their initial node representations and then develop a deep learning method, namely VDA-DLCMNMF, to precisely prioritize known drugs for new viruses.

|$\bullet $| Experimental results on three VDA datasets with different size demonstrate the promising performance of VDA-DLCMNMF in repurposing antiviral drugs against SARS-CoV-2 in terms of several metrics. Besides, the results of molecular docking experiments reveal that incorporating CMNMF into deep learning gains new insight into the drug repurposing for SARS-CoV-2, as four novel drugs identified by our method are proved to have the potential ability to bind with important functional receptors of SARS-CoV-2.

The rest of this article is organized as follows. The section of Materials and Methods first describes the VDA datasets used in the experiments and then presents the details of VDA-DLCMNMF. Comparing VDA-DLCMNMF with several state-of-the-art models, we give the experimental results in the section of Experiments, following which we end the article with a in-depth discussion and a conclusion.

2 Materials and Methods

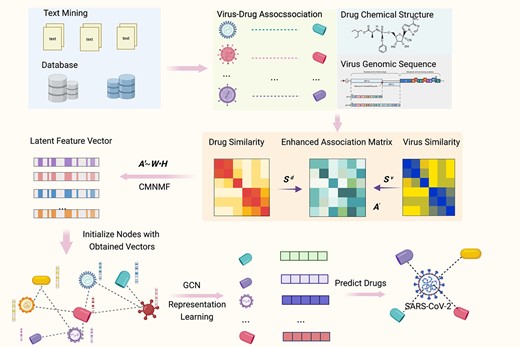

As shown in Figure 1, VDA-DLCMNMF is composed of three steps, including enhanced VDA matrix construction, LFS extraction with CMNMF and GCN-based drug repurposing. Before presenting the details of VDA-DLCMNMF, we describe the VDA datasets used in our experiments.

ROC and AUPR curves obtained by VDA-DLCMNMF and other competing methods on three datasets based on 5-fold CV.

2.1 VDA datasets

To evaluate the performance of VDA-DLCMNMF, three datasets with different sizes are collected for discovering potential drugs against SARS-CoV-2, and they are denoted as HDVD, VDA1 and VDA2, respectively. The statistics of these three datasets are shown in Table 1.

| Datasets . | Viruses . | Drugs . | VDAs . |

|---|---|---|---|

| HDVD | 34 | 219 | 455 |

| VDA1 | 11 | 78 | 96 |

| VDA2 | 69 | 128 | 770 |

| Datasets . | Viruses . | Drugs . | VDAs . |

|---|---|---|---|

| HDVD | 34 | 219 | 455 |

| VDA1 | 11 | 78 | 96 |

| VDA2 | 69 | 128 | 770 |

| Datasets . | Viruses . | Drugs . | VDAs . |

|---|---|---|---|

| HDVD | 34 | 219 | 455 |

| VDA1 | 11 | 78 | 96 |

| VDA2 | 69 | 128 | 770 |

| Datasets . | Viruses . | Drugs . | VDAs . |

|---|---|---|---|

| HDVD | 34 | 219 | 455 |

| VDA1 | 11 | 78 | 96 |

| VDA2 | 69 | 128 | 770 |

HDVD [40] is a database for experimentally supported human drug-virus associations, built by assembling a significant number of experimentally validated drug–virus interaction entries from relevant literatures with text mining technology. HDVD includes 34 viruses, 219 drugs and 455 confirmed human drug–virus interactions.

VDA1 dataset is constructed based on the 96 known VDAs between 11 viruses similar to SARS-CoV-2, such as SARS-CoV [4], MERS-CoV [8] and influenza A viruses [10] and 78 small molecular drugs. These interactions are collected from the DrugBank [63], NCBI [50] and PubMed [5] datasets.

VDA2 dataset is collected from the DrugVirus.info database [2], which provides various experimentally validated VDA-related resources. After removing the viruses with incomplete genome sequences, VDAs contain totally 770 VDAs between 69 viruses and 128 drugs.

2.2 Enhanced VDA matrix construction

As mentioned before, the main idea of drug repurposing for new viruses follows the perception that similar viruses are more likely to be treated by the same drugs. In this step of VDA-DLCMNMF, we target to construct an enhanced VDA matrix by seamlessly considering both VDAs and the biological knowledge of drugs and viruses. To this end, we first construct two similarity matrices based on chemical structures of drugs and genomic sequences of viruses and then design a new association measure to obtain the enhanced VDA matrix.

In addition, it is supposed to illustrate the notations used throughout this paper before introducing VDA-DLCMNMF in detail. The vector is denoted by lowercase boldface letters (e.g. |$\textbf{{v}} \in \mathbb{R}^{{d}}$|), matrix is denoted by uppercase boldface letters (e.g. |$\textbf{{X}} \in \mathbb{R}^{{m}\times{n}}$|). Then, we denote the set by |$\mathcal{D(\cdot )}$|, hyperparameter by uppercase letters (e.g. |${T}$|) and scalars by lowercase letters (e.g. |${d}$|, |${k}$|).

Regarding the similarity of viruses, the genomic sequences of viruses are used and they can be downloaded from the NCBI [50]. Let |$\textbf{{S}}^{\textbf{{v}}}\in \mathbb{R}^{{n}_{v}\times{n}_{v}}$|, where |${n}_{v}$| denotes the number of viruses, be the similarity matrix of viruses. For each element of |$\textbf{{S}}^{\textbf{{v}}}$|, its value can be obtained by using a sequence alignment software MAFFT [24].

2.3 The CMNMF model

Regarding the task of drug repurposing for new viruses, the failure of existing network-based methods is due to their incapability of capturing the structural and genomic forces that play an important role in determining VDAs. To overcome this problem, we propose the CMNMF model and present its details as below.

With (5) and (6), the optimization of |$\textbf{{W}}$| and |$\textbf{{H}}$| is driven not only by |$\textbf{{A}}^{^{\prime}}$| but also by |$\textbf{{S}}^{{d}}$| and |$\textbf{{S}}^{{v}}$|. A theoretical analysis is provided to verify the rationality behind these two constraints. Taking (5) as an example, since |$\textbf{{S}}^{{d}}_{{i},{m}}$| is a constant, the minimization of (5) can be achieved if the value of |${|| \textbf{{w}}_{\textbf{{i}}} - \textbf{{w}}_{\textbf{{m}}} ||}_F^{2}$| is small enough. In this regard, the latent feature vectors of drugs tend to gather together in the LFS. Moreover, if two drugs, i.e. |$d_i$| and |$d_m$|, are similar, they certainly have a larger value of |$\textbf{{S}}^{{d}}_{{i},{m}}$|, and thus |${|| \textbf{{w}}_{\textbf{{i}}} - \textbf{{w}}_{\textbf{{m}}} ||}_F^{2}$| should be smaller than others in order to minimize (5). In other words, the latent feature vectors of two drugs are much closer in the LFS if they are more similar. Given the above analysis, it is believed that the introduction of (5) and (6) allows CMNMF to preserve the similarity information of drugs and viruses in the LFS with only few noisy information generated.

An iterative procedure is applied by VDA-DLCMNMF to obtain the optimum results of |$\textbf{{W}}$| and |$\textbf{{H}}$| until a convergence is reached.

2.4 GCN-based drug repurposing

Without loss of generality, we still use |$\textbf{{W}}$| and |$\textbf{{H}}$| to denote their optimum results in terms of minimizing (8) and give the details of how VDA-DLCMNMF repurposes drugs for new viruses.

Compared with traditional network representation learning algorithms, such as DeepWalk [48] and Node2Vec [14], GNN has the advantage of processing and integrating node features [51]. As a specific type of GNN, spatial-based GCN [28, 68] receives much attention due to its high efficiency and flexibility in dealing with the heterogeneous information of input. Thus, VDA-DLCMNMF adopts it to learn the node representations of viruses and drugs in a given VDA network and then uses them to predict potential associations for new viruses.

A heuristic neighborhood sampling strategy is adopted by VDA-DLCMNMF to improve the efficiency of training a GCN. Taking |$d_i$|, or |$v_j$|, as an example, we select the top |$T$| viruses, or drugs, to compose |${\mathcal{T}}(d_i)$|, or |${\mathcal{T}}(v_j)$|, in the descending order of attention weights in |$\textbf{{W}}^{\textbf{{a}}}$|. Since |$\textbf{{W}}^{\textbf{{a}}}$| is already determined before training, this process is only applied once. The representations of drugs and viruses are then updated iteratively by using |${\mathcal{T}}(d_i)$| and |${\mathcal{T}}(v_j),$| respectively, rather than the whole VDA network, thus reducing the training time.

2.5 Complexity analysis

At each epoch, |$\textbf{{W}}$|, |$\textbf{{H}}$|,|$\textbf{{W}}^{a}$| and |$\textbf{{r}}^{(L)}$| are updated according to Equations (9), (10) and (13)–(15). The update of |$\textbf{{W}}$| and |$\textbf{{H}}$| takes time |${O}{({k} {n}_{{d}} {k} {n}_{{v}} )}$|. The time required to calculate |$\textbf{{W}}^{a}$| is |${O}{({n}_{{d}} {k} {n}_{{v}})}$|. After calculating the attention weight matrix, we select the top |${T}$| neighborhoods for each node in VDA network, which takes time |${O}{(2{n}_{{d}}T{n}_{{v}})}$|. The computation of |$\textbf{{r}}^{(L)}$| has the time complexity |${O}{({T}^{{L}})}$|. Hence, the time used for one iteration is |${O}{(({k} + {k}^{2} + 2{T}){n}_{{d}}{n}_{{v}} + {T}^{{L}})}$|. In this study, we normally have |${T} \ll{n}_{{d}}, {n}_{{v}}, {k}$| and |${L} = 2$|. As a result, the time complexity can be further simplified to |${O}({k}^{2}{n}_{{d}}{n}_{{v}})$|. Assuming that the number of epochs is |${E}$|, the overall time complexity is |${O}({E}{k}^{2}{n}_{{d}}{n}_{{v}})$|.

3 Results

3.1 Evaluation metrics and experimental settings

Five-fold cross-validation (CV) is used to evaluate the performance of VDA-DLCMNMF. We perform the 5-fold CVs by alternatively selecting one fold as the test set and the rest as the training set. The negative samples are selected on each fold to ensure that no unseen node is generated in negative dataset. In other words, negative samples are selected by paring up drugs and viruses whose associations are not found in each fold. To do so, we first obtain the complementary set of VDAs in each fold, then randomly select the negative samples with the same size of positive samples from the complementary set so as to compose the negative dataset. As a result, positive and negative samples are balanced in each fold.

In the experiments, VDA-DLCMNMF is compared with five state-of-the-art network-based drug repositioning methods, including DRHGCN [35], SANE [56], IRNMFVDA [58], SCPMF [40], VDA-RWR [47] and VDA-KATZ [70]. Among them, DRHGCN is a general drug repurposing method by introducing a layer attention mechanism to combine the embeddings of drugs and viruses from multiple graph convolution layers, SANE addresses the cold-start problem by introducing an LSTM unit to learn initial representations for drug and virus from drug chemical structure and virus genomic sequence, while the other three methods are specifically proposed for discovering potential antiviral drugs for SARS-CoV-2 as mentioned in the section of Introduction. All the experiments are performed on the working machine equipped with Intel Core I7 2.6GHz and 16GB RAM.

Regarding the parameters involved in VDA-DLCMNMF, an in-depth analysis of parameter sensitivity is performed to determine their optimal values with grid search. We determine their optimal values by using the method of control variates, which alternatively update each parameter. In specific, we vary the values of |$\alpha $|, |$\beta $|, |$\lambda _{W}$| and |$\lambda _{H}$| from 0 to 1 at a step size of 0.001. Given the constraints that |$\alpha = \beta $| and |$\lambda _{W} = \lambda _{H}$|, we conduct several trials with different combinations of parameter values and select the one with the best performance as the final values of these parameters. In addition, regarding the parameters used in GCN, we select the layer |${L} = 2$| and |${T} = 4$|. For the other competing methods, their parameters are assigned with the values recommended in relevant literature. The parameter settings for all the five methods are presented in Table 2.

Parameter settings used by VDA-DLCMNMF and four competing state-of-the-art methods

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . |

|---|---|---|---|

| IRNMFVDA | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| |

| SCPMF | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| |

| VDA-KATZ | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5,$| | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5 $| | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5 $| |

| VDA-RWR | |$r = 0.5, \mu = 0.7, \alpha = 0.7 $| | |$r = 0.7, \mu = 0.9, \alpha = 0.5 $| | |$r = 0.5, \mu = 0.9, \alpha = 0.9 $| |

| VDA-DLCMNMF | |$\alpha = \beta = 0.003, \lambda _{W} = \lambda _{H} = 0.005$| | |$\alpha = \beta = 0.005, \lambda _{W} = \lambda _{H} = 0.1$| | |$\alpha = \beta = 0.002, \lambda _{W} = \lambda _{H} = 0.1$| |

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . |

|---|---|---|---|

| IRNMFVDA | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| |

| SCPMF | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| |

| VDA-KATZ | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5,$| | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5 $| | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5 $| |

| VDA-RWR | |$r = 0.5, \mu = 0.7, \alpha = 0.7 $| | |$r = 0.7, \mu = 0.9, \alpha = 0.5 $| | |$r = 0.5, \mu = 0.9, \alpha = 0.9 $| |

| VDA-DLCMNMF | |$\alpha = \beta = 0.003, \lambda _{W} = \lambda _{H} = 0.005$| | |$\alpha = \beta = 0.005, \lambda _{W} = \lambda _{H} = 0.1$| | |$\alpha = \beta = 0.002, \lambda _{W} = \lambda _{H} = 0.1$| |

Parameter settings used by VDA-DLCMNMF and four competing state-of-the-art methods

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . |

|---|---|---|---|

| IRNMFVDA | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| |

| SCPMF | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| |

| VDA-KATZ | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5,$| | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5 $| | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5 $| |

| VDA-RWR | |$r = 0.5, \mu = 0.7, \alpha = 0.7 $| | |$r = 0.7, \mu = 0.9, \alpha = 0.5 $| | |$r = 0.5, \mu = 0.9, \alpha = 0.9 $| |

| VDA-DLCMNMF | |$\alpha = \beta = 0.003, \lambda _{W} = \lambda _{H} = 0.005$| | |$\alpha = \beta = 0.005, \lambda _{W} = \lambda _{H} = 0.1$| | |$\alpha = \beta = 0.002, \lambda _{W} = \lambda _{H} = 0.1$| |

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . |

|---|---|---|---|

| IRNMFVDA | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| | |$\alpha = \beta = 0.8, \lambda _1 = \lambda _2 = 0.1$| |

| SCPMF | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| | |$\lambda _{W} = \lambda _{H} = 1, \lambda _{1} = \lambda _{2} = 0.1$| |

| VDA-KATZ | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5,$| | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5 $| | |$\beta = 0.04, w = 0.9, \gamma _{v} = \gamma _{d} = 2.5 $| |

| VDA-RWR | |$r = 0.5, \mu = 0.7, \alpha = 0.7 $| | |$r = 0.7, \mu = 0.9, \alpha = 0.5 $| | |$r = 0.5, \mu = 0.9, \alpha = 0.9 $| |

| VDA-DLCMNMF | |$\alpha = \beta = 0.003, \lambda _{W} = \lambda _{H} = 0.005$| | |$\alpha = \beta = 0.005, \lambda _{W} = \lambda _{H} = 0.1$| | |$\alpha = \beta = 0.002, \lambda _{W} = \lambda _{H} = 0.1$| |

3.2 Performance comparison of different methods

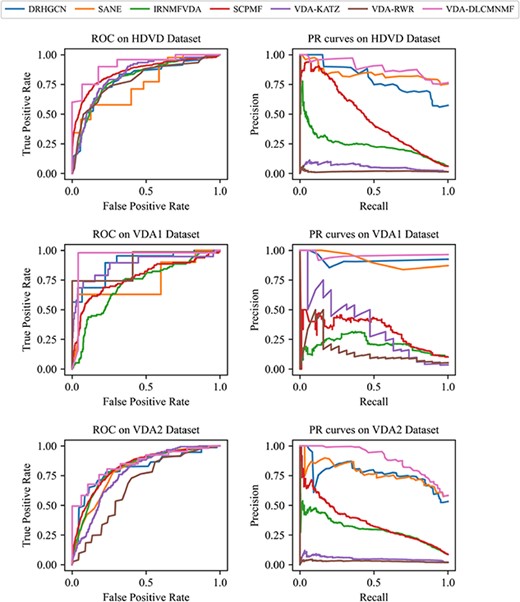

The detailed results of 5-fold CV are shown in Table 3. To visually compare the performance of all five methods, we also plot their ROC curves and PR curves in Figure 2.

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| DRHGCN | 0.7713 | 0.7689 | 0.7791 | 0.7713 | 0.7845 | 0.7298 | 0.7299 | 0.7841 | 0.8085 | 0.8271 | 0.7029 | 0.7030 | 0.7689 | 0.8177 | 0.7449 |

| SANE | 0.8352 | 0.8103 | 0.8580 | 0.8944 | 0.8598 | 0.7705 | 0.7489 | 0.7834 | 0.8080 | 0.8379 | 0.8019 | 0.7008 | 0.7307 | 0.8018 | 0.7553 |

| IRNMFVDA | 0.3856 | 0.7314 | 0.3631 | 0.8037 | 0.2156 | 0.6227 | 0.4852 | 0.6834 | 0.7102 | 0.2005 | 0.5317 | 0.6496 | 0.5205 | 0.8147 | 0.2971 |

| SCPMF | 0.7661 | 0.4693 | 0.7854 | 0.8549 | 0.4783 | 0.7150 | 0.4403 | 0.7464 | 0.7543 | 0.3684 | 0.7660 | 0.3969 | 0.8012 | 0.8293 | 0.3517 |

| VDA-KATZ | 0.5777 | 0.6995 | 0.5762 | 0.8253 | 0.0698 | 0.6691 | 0.6976 | 0.6684 | 0.8803 | 0.3380 | 0.7119 | 0.5441 | 0.7152 | 0.7743 | 0.0583 |

| VDA-RWR | 0.6550 | 0.7400 | 0.6700 | 0.7875 | 0.0218 | 0.8278 | 0.4824 | 0.7831 | 0.8582 | 0.1383 | 0.6613 | 0.5022 | 0.6643 | 0.6675 | 0.0322 |

| VDA-DLCMNMF | 0.8649 | 0.8625 | 0.9118 | 0.9299 | 0.9097 | 0.9000 | 0.9667 | 0.9333 | 0.9250 | 0.9715 | 0.7849 | 0.7688 | 0.8361 | 0.8631 | 0.8770 |

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| DRHGCN | 0.7713 | 0.7689 | 0.7791 | 0.7713 | 0.7845 | 0.7298 | 0.7299 | 0.7841 | 0.8085 | 0.8271 | 0.7029 | 0.7030 | 0.7689 | 0.8177 | 0.7449 |

| SANE | 0.8352 | 0.8103 | 0.8580 | 0.8944 | 0.8598 | 0.7705 | 0.7489 | 0.7834 | 0.8080 | 0.8379 | 0.8019 | 0.7008 | 0.7307 | 0.8018 | 0.7553 |

| IRNMFVDA | 0.3856 | 0.7314 | 0.3631 | 0.8037 | 0.2156 | 0.6227 | 0.4852 | 0.6834 | 0.7102 | 0.2005 | 0.5317 | 0.6496 | 0.5205 | 0.8147 | 0.2971 |

| SCPMF | 0.7661 | 0.4693 | 0.7854 | 0.8549 | 0.4783 | 0.7150 | 0.4403 | 0.7464 | 0.7543 | 0.3684 | 0.7660 | 0.3969 | 0.8012 | 0.8293 | 0.3517 |

| VDA-KATZ | 0.5777 | 0.6995 | 0.5762 | 0.8253 | 0.0698 | 0.6691 | 0.6976 | 0.6684 | 0.8803 | 0.3380 | 0.7119 | 0.5441 | 0.7152 | 0.7743 | 0.0583 |

| VDA-RWR | 0.6550 | 0.7400 | 0.6700 | 0.7875 | 0.0218 | 0.8278 | 0.4824 | 0.7831 | 0.8582 | 0.1383 | 0.6613 | 0.5022 | 0.6643 | 0.6675 | 0.0322 |

| VDA-DLCMNMF | 0.8649 | 0.8625 | 0.9118 | 0.9299 | 0.9097 | 0.9000 | 0.9667 | 0.9333 | 0.9250 | 0.9715 | 0.7849 | 0.7688 | 0.8361 | 0.8631 | 0.8770 |

Best results are bolded.

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| DRHGCN | 0.7713 | 0.7689 | 0.7791 | 0.7713 | 0.7845 | 0.7298 | 0.7299 | 0.7841 | 0.8085 | 0.8271 | 0.7029 | 0.7030 | 0.7689 | 0.8177 | 0.7449 |

| SANE | 0.8352 | 0.8103 | 0.8580 | 0.8944 | 0.8598 | 0.7705 | 0.7489 | 0.7834 | 0.8080 | 0.8379 | 0.8019 | 0.7008 | 0.7307 | 0.8018 | 0.7553 |

| IRNMFVDA | 0.3856 | 0.7314 | 0.3631 | 0.8037 | 0.2156 | 0.6227 | 0.4852 | 0.6834 | 0.7102 | 0.2005 | 0.5317 | 0.6496 | 0.5205 | 0.8147 | 0.2971 |

| SCPMF | 0.7661 | 0.4693 | 0.7854 | 0.8549 | 0.4783 | 0.7150 | 0.4403 | 0.7464 | 0.7543 | 0.3684 | 0.7660 | 0.3969 | 0.8012 | 0.8293 | 0.3517 |

| VDA-KATZ | 0.5777 | 0.6995 | 0.5762 | 0.8253 | 0.0698 | 0.6691 | 0.6976 | 0.6684 | 0.8803 | 0.3380 | 0.7119 | 0.5441 | 0.7152 | 0.7743 | 0.0583 |

| VDA-RWR | 0.6550 | 0.7400 | 0.6700 | 0.7875 | 0.0218 | 0.8278 | 0.4824 | 0.7831 | 0.8582 | 0.1383 | 0.6613 | 0.5022 | 0.6643 | 0.6675 | 0.0322 |

| VDA-DLCMNMF | 0.8649 | 0.8625 | 0.9118 | 0.9299 | 0.9097 | 0.9000 | 0.9667 | 0.9333 | 0.9250 | 0.9715 | 0.7849 | 0.7688 | 0.8361 | 0.8631 | 0.8770 |

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| DRHGCN | 0.7713 | 0.7689 | 0.7791 | 0.7713 | 0.7845 | 0.7298 | 0.7299 | 0.7841 | 0.8085 | 0.8271 | 0.7029 | 0.7030 | 0.7689 | 0.8177 | 0.7449 |

| SANE | 0.8352 | 0.8103 | 0.8580 | 0.8944 | 0.8598 | 0.7705 | 0.7489 | 0.7834 | 0.8080 | 0.8379 | 0.8019 | 0.7008 | 0.7307 | 0.8018 | 0.7553 |

| IRNMFVDA | 0.3856 | 0.7314 | 0.3631 | 0.8037 | 0.2156 | 0.6227 | 0.4852 | 0.6834 | 0.7102 | 0.2005 | 0.5317 | 0.6496 | 0.5205 | 0.8147 | 0.2971 |

| SCPMF | 0.7661 | 0.4693 | 0.7854 | 0.8549 | 0.4783 | 0.7150 | 0.4403 | 0.7464 | 0.7543 | 0.3684 | 0.7660 | 0.3969 | 0.8012 | 0.8293 | 0.3517 |

| VDA-KATZ | 0.5777 | 0.6995 | 0.5762 | 0.8253 | 0.0698 | 0.6691 | 0.6976 | 0.6684 | 0.8803 | 0.3380 | 0.7119 | 0.5441 | 0.7152 | 0.7743 | 0.0583 |

| VDA-RWR | 0.6550 | 0.7400 | 0.6700 | 0.7875 | 0.0218 | 0.8278 | 0.4824 | 0.7831 | 0.8582 | 0.1383 | 0.6613 | 0.5022 | 0.6643 | 0.6675 | 0.0322 |

| VDA-DLCMNMF | 0.8649 | 0.8625 | 0.9118 | 0.9299 | 0.9097 | 0.9000 | 0.9667 | 0.9333 | 0.9250 | 0.9715 | 0.7849 | 0.7688 | 0.8361 | 0.8631 | 0.8770 |

Best results are bolded.

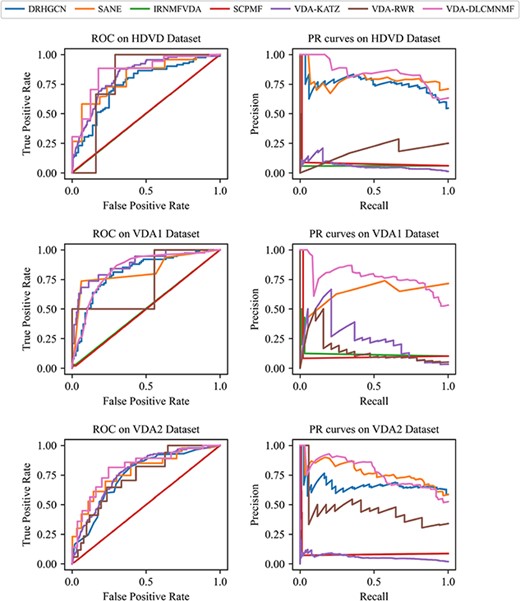

ROC and AUPR curves obtained by VDA-DLCMNMF and other competing methods on three datasets for de novo test.

For the performance of two NMF-based methods, we note that SCPMF performs better than IRNMFVDA across all three datasets, as the scores of Acc. obtained by SCPMF are better by 38.05%, 9.23% and 23.43% than those of IRNMFVDA on HDVD, VDA1 and VDA2, respectively. Besides, for IRNMFVDA, its fluctuation in Acc. is more intensive, as the standard deviation of Acc. yielded by IRNMFVDA is 0.098 larger than the others. The reasons attributable for the unsatisfactory performance of IRNMFVDA are 2-fold. First, the indicator matrix used by IRNMFVDA is constructed based on a VDA matrix and the attribute matrices of drugs and viruses and a simple concatenation of these three matrices makes the indicator matrix sparser. Taking HDVD as an example, the VDA network of HDVD is the sparsest when compared with those of the other two datasets and the worst performance of IRNMFVDA is observed on it. Second, when applied to different datasets, the generalization ability of IRNMFVDA is poor due to the lack of a proper normalization process.

Regarding VDA-KATZ and VDA-RWR, although both of them are network-based methods, they perform quite differently when predicting novel VDAs in the experiments. In particular, the best performance of VDA-KATZ is achieved in the 5-fold CV of VDA2, which could be an indicator that VDA-KATZ prefers dense VDA networks. The main reason for that phenomenon is ascribed to the motivation of VDA-KATZ, which is to utilize network paths to predict potential associations. In doing so, KDA-KATZ tends to yield a better performance for dense VDA networks with more network paths. Compared with VDA-KATZ, VDA-RWR is not always better than VDA-KATZ across all datasets. Among the results in Table 3, it is observed that for the VDA1 dataset, VDA-RWR performs the best among all methods except VDA-DLCMNMF in terms of Acc., whereas for the other two datasets, its performance in terms of Acc. is just fair. The main reason why VDA-RWR yields the second best score of Acc. on the VDA1 dataset is that VDA-RWR has a strong ability in learning the topological characteristics from small VDA networks. However, its worse performance obtained on HDVD and VDA2 demonstrates that its learning ability is constrained by the sparsity of VDA networks, which heavily affects the effectiveness of random walk from different perspectives. In particular, when dealing with sparse VDA networks, viruses and drugs with less degrees are difficult to be visited by the random walk of VDA-RWR, as their topological information is not sufficient. On the other hand, for dense VDA networks, more associations are involved during random walk, but the existence of false-positive associations could decrease the accuracy of VDA-RWR. Moreover, both VDA-KATZ and VDA-RWR achieve the low AUPR value with only 0.1554 and 0.0641 on average, which indicates that they are easily misled by false positive samples, especially when compared with those deep learning-based methods, including DRHGCN, SANE and VDA-CMNMF.

Regrading two deep learning-based methods DRHGCN and SANE, both of them have relatively stable performances among all metrics, which is mainly because of the attention mechanism adopted in them. Though both DRHGCN and SANE are constructed based on GCN, SANE performs better than DRHGCN across all three datasets, as the scores of Acc. obtained by SANE are better by 6.39%, 4.07% and 9.9% than those of DRHGCN on three datasets, respectively. The reason for this is that the information contained in SANE is not only the network topology but also the drug/virus attribute feature learned by LSTM from drug chemical structure/virus genomic sequence. On the other hand, though DRHGCN performs as not well as SANE, it still achieves the better performance than the other baselines, which further demonstrate that robustness of deep learning-based methods.

Among all drug repurposing methods compared in the experiments, VDA-DLCMNMF yields a bigger margin in terms of Acc., AUC and AUPR across all datasets, as it achieves the best performance on HDVD, VDA1 and VDA2. There are also several points worth further commentary. First, compared with NMF-based methods, VDA-DLCMNMF adopts the CMNMF model to preserve the similarity information of drugs and diseases when projecting them onto a unified LFS and thus yields a promising performance in drug repurposing. Second, the generalization ability of VDA-DLCMNMF is further improved by learning the network spatial structure with attention-based layer aggregation, and it is also for this reason that VDA-DLCMNMF yields the largest scores of Acc. and AUC in all cases. In summary, it is believed that VDA-DLCMNMF is a useful tool to discover novel VDAs.

3.3 De novo VDA prediction

To assess the capability of VDA-DLCMNMF in predicting potential indications for new drugs, we choose the drug pair with chemical similarity over 0.7 to conduct a de novo test. For each of this kind of drug pairs, we randomly select a drug and remove all VDAs related to it in turn as the test samples and other existing associations are used as training sample. The random selection procedure has been repeated for 50 times and the ROC and PR curves obtained by each prediction model are depicted in Figure 3.

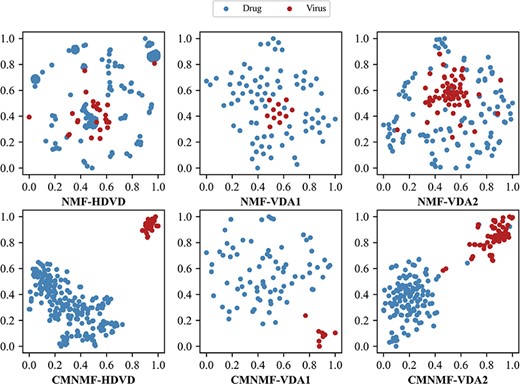

The latent feature vectors of drugs and viruses obtained by NMF and CMNMF are visualized in a 2D space by t-SNE.

First of all, according to the ROC and PR curves, we note that the overall performance of VDA-DLCMNMF is better than the other competing prediction models, as its average AUC and AUPR scores are the largest across all the three datasets. In this regard, we reason that VDA-DLCMNMF is more robust toward the bias resulted from the existence of redundancy drugs. Second, regarding the NMF-based models, i.e. IRNMFVDA and SCPMF, their ROC and PR curves indicate that their performances in the de novo test are much worse than those in the 5-fold CV. Hence, the performance of NMF-based models is heavily dependent on the similarity of drugs, which is an important information source for them to learn latent vectors. Lastly, the deep learning-based models, including DRHGCN and SANE, are less affected by the removal of redundant drugs, as they do not need the similarity information of drugs to perform their tasks.

3.4 Ablation study

To better demonstrate the advantage of VDA-DLCMNMF in drug repurposing, an in-depth ablation study has been conducted with extensive experiments. To do so, we first design four variants of VDA-DLCMNMF and evaluate their performance on the datasets of HDVD, VDA1 and VDA2. A detailed description about these variants are listed as below, and the difference between them and VDA-DLCMNMF are also discussed.

|$\bullet $|NMF is designed without considering any constraints. The input of NMF is only the VDA network, and the latent vectors of drugs and viruses are used as their final representations. Given a pair of drug and disease, their probability of being associated is computed with (16).

|$\bullet $|CMNMF is used alone to discover novel VDAs. Similar to NMF, the latent vectors of drugs and viruses learned from CMNMF are explicitly used to compute the probability with (16).

|$\bullet $||${\textbf{GCN}}_{{random}}$| is a variant of the last part of VDA-DLCMNMF, which selects neighbor receptive field in a random manner.

|$\bullet $|GCN is the last part of VDA-DLCMNMF. In the ablation study, the representation of drugs and viruses are randomly initialized for GCN.

|$\bullet $|NMF-GCN is implemented by combining NMF and GCN. The main difference between NMF-GCN and VDA-DLCMNMF lies in the initial representation of drugs and viruses for GCN, as NMF-GCN and VDA-DLCMNMF use the latent feature vectors learned by NMF and CMNMF, respectively.

Experimental results of the variants of VDA-DLCMNMF are shown in Table 4. As mentioned before, the CMNMF model is adopted by VDA-DLCMNMF to obtain the reliable initial representations of drugs and viruses. Compared with NMF, CMNMF integrates |$S_d$| and |$S_v$| into |$A$|, thus obtaining an enhanced association matrix, i.e. |$\textbf{{A}}^{^{\prime}}$|. To demonstrate the advantage and effectiveness of CMNMF, we first compare the performance of CMNMF with that of NMF on all the three datasets. As indicated by Table 4, it is seen that in terms of Acc., CMNMF perform better by 3.75%, 9.37% and 0.94% than NMF for the datasets of HDVD, VDA1 and VDA2, respectively. This could be a strong indicator that the latent vectors obtained by CMNMF are capable of retaining the characteristics of drugs and viruses from the perspectives of network topology and biological knowledge and hence they are able to improve the prediction accuracy of VDA-DLCMNMF.

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| NMF | 0.7810 | 0.7802 | 0.7824 | 0.7811 | 0.8268 | 0.7395 | 0.6974 | 0.7672 | 0.7400 | 0.8522 | 0.7680 | 0.7558 | 0.7765 | 0.7680 | 0.8063 |

| CMNMF | 0.8185 | 0.8154 | 0.8220 | 0.8185 | 0.8694 | 0.8332 | 0.8442 | 0.8295 | 0.8334 | 0.9059 | 0.7774 | 0.7309 | 0.7955 | 0.7849 | 0.8388 |

| |$\textrm{GCN}_{random}$| | 0.7081 | 0.8201 | 0.6318 | 0.7965 | 0.7157 | 0.6250 | 0.6767 | 0.5833 | 0.5967 | 0.5670 | 0.7000 | 0.6423 | 0.7422 | 0.7769 | 0.7573 |

| GCN | 0.7516 | 0.6669 | 0.7761 | 0.8034 | 0.7761 | 0.6547 | 0.6137 | 0.7204 | 0.7606 | 0.7204 | 0.7558 | 0.7658 | 0.7656 | 0.8191 | 0.7657 |

| NMF-GCN | 0.8000 | 0.8656 | 0.7541 | 0.8979 | 0.8532 | 0.8500 | 0.8667 | 0.9095 | 0.9133 | 0.9333 | 0.7290 | 0.7343 | 0.7278 | 0.8018 | 0.7852 |

| VDA-DLCMNMF | 0.8649 | 0.8625 | 0.9118 | 0.9299 | 0.9097 | 0.9000 | 0.9667 | 0.9333 | 0.9250 | 0.9715 | 0.7849 | 0.7688 | 0.8361 | 0.8631 | 0.8770 |

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| NMF | 0.7810 | 0.7802 | 0.7824 | 0.7811 | 0.8268 | 0.7395 | 0.6974 | 0.7672 | 0.7400 | 0.8522 | 0.7680 | 0.7558 | 0.7765 | 0.7680 | 0.8063 |

| CMNMF | 0.8185 | 0.8154 | 0.8220 | 0.8185 | 0.8694 | 0.8332 | 0.8442 | 0.8295 | 0.8334 | 0.9059 | 0.7774 | 0.7309 | 0.7955 | 0.7849 | 0.8388 |

| |$\textrm{GCN}_{random}$| | 0.7081 | 0.8201 | 0.6318 | 0.7965 | 0.7157 | 0.6250 | 0.6767 | 0.5833 | 0.5967 | 0.5670 | 0.7000 | 0.6423 | 0.7422 | 0.7769 | 0.7573 |

| GCN | 0.7516 | 0.6669 | 0.7761 | 0.8034 | 0.7761 | 0.6547 | 0.6137 | 0.7204 | 0.7606 | 0.7204 | 0.7558 | 0.7658 | 0.7656 | 0.8191 | 0.7657 |

| NMF-GCN | 0.8000 | 0.8656 | 0.7541 | 0.8979 | 0.8532 | 0.8500 | 0.8667 | 0.9095 | 0.9133 | 0.9333 | 0.7290 | 0.7343 | 0.7278 | 0.8018 | 0.7852 |

| VDA-DLCMNMF | 0.8649 | 0.8625 | 0.9118 | 0.9299 | 0.9097 | 0.9000 | 0.9667 | 0.9333 | 0.9250 | 0.9715 | 0.7849 | 0.7688 | 0.8361 | 0.8631 | 0.8770 |

Best results are bolded.

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| NMF | 0.7810 | 0.7802 | 0.7824 | 0.7811 | 0.8268 | 0.7395 | 0.6974 | 0.7672 | 0.7400 | 0.8522 | 0.7680 | 0.7558 | 0.7765 | 0.7680 | 0.8063 |

| CMNMF | 0.8185 | 0.8154 | 0.8220 | 0.8185 | 0.8694 | 0.8332 | 0.8442 | 0.8295 | 0.8334 | 0.9059 | 0.7774 | 0.7309 | 0.7955 | 0.7849 | 0.8388 |

| |$\textrm{GCN}_{random}$| | 0.7081 | 0.8201 | 0.6318 | 0.7965 | 0.7157 | 0.6250 | 0.6767 | 0.5833 | 0.5967 | 0.5670 | 0.7000 | 0.6423 | 0.7422 | 0.7769 | 0.7573 |

| GCN | 0.7516 | 0.6669 | 0.7761 | 0.8034 | 0.7761 | 0.6547 | 0.6137 | 0.7204 | 0.7606 | 0.7204 | 0.7558 | 0.7658 | 0.7656 | 0.8191 | 0.7657 |

| NMF-GCN | 0.8000 | 0.8656 | 0.7541 | 0.8979 | 0.8532 | 0.8500 | 0.8667 | 0.9095 | 0.9133 | 0.9333 | 0.7290 | 0.7343 | 0.7278 | 0.8018 | 0.7852 |

| VDA-DLCMNMF | 0.8649 | 0.8625 | 0.9118 | 0.9299 | 0.9097 | 0.9000 | 0.9667 | 0.9333 | 0.9250 | 0.9715 | 0.7849 | 0.7688 | 0.8361 | 0.8631 | 0.8770 |

| Methods\ Datasets . | HDVD . | VDA1 . | VDA2 . | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| NMF | 0.7810 | 0.7802 | 0.7824 | 0.7811 | 0.8268 | 0.7395 | 0.6974 | 0.7672 | 0.7400 | 0.8522 | 0.7680 | 0.7558 | 0.7765 | 0.7680 | 0.8063 |

| CMNMF | 0.8185 | 0.8154 | 0.8220 | 0.8185 | 0.8694 | 0.8332 | 0.8442 | 0.8295 | 0.8334 | 0.9059 | 0.7774 | 0.7309 | 0.7955 | 0.7849 | 0.8388 |

| |$\textrm{GCN}_{random}$| | 0.7081 | 0.8201 | 0.6318 | 0.7965 | 0.7157 | 0.6250 | 0.6767 | 0.5833 | 0.5967 | 0.5670 | 0.7000 | 0.6423 | 0.7422 | 0.7769 | 0.7573 |

| GCN | 0.7516 | 0.6669 | 0.7761 | 0.8034 | 0.7761 | 0.6547 | 0.6137 | 0.7204 | 0.7606 | 0.7204 | 0.7558 | 0.7658 | 0.7656 | 0.8191 | 0.7657 |

| NMF-GCN | 0.8000 | 0.8656 | 0.7541 | 0.8979 | 0.8532 | 0.8500 | 0.8667 | 0.9095 | 0.9133 | 0.9333 | 0.7290 | 0.7343 | 0.7278 | 0.8018 | 0.7852 |

| VDA-DLCMNMF | 0.8649 | 0.8625 | 0.9118 | 0.9299 | 0.9097 | 0.9000 | 0.9667 | 0.9333 | 0.9250 | 0.9715 | 0.7849 | 0.7688 | 0.8361 | 0.8631 | 0.8770 |

Best results are bolded.

In addition to the quantitative analysis, we visualize the latent vectors of drugs and viruses in a 2D space by t-SNE [59] and expect that the advantage of CMNMF can be better appreciated. According to Figure 4, it is observed that CMNMF is able to clearly distinguish drugs and viruses while introducing only few noisy information. In other words, the latent feature vectors obtained by CMNMF are more representative than those obtained by NMF. Moreover, we also note from Table 4 and Figure 4 that CMNMF contributes more in improving the accuracy of VDA-DLCMNMF on VDA1 than on the other two datasets, and the reasons are 2-fold. First, among all datasets, VDA1 is the smallest one with only 96 VDAs, and such a small VDA network is more sensitive to the quality of latent feature vectors of drugs and viruses. Second, comparing the distributions of latent vectors in 2D space, we note that the latent vectors obtained by NMF from VDA1 are uniformly distributed and they are not separated into different clusters as CMNMF do. In this regard, the rationality behind the proposal of CMNMF can be verified.

Due to the advantage of CMNMF, VDA-DLCMNMF performs better than both NMF-GCN, GCN|$_{random}$| and GCN, which further indicates that initializing the representation of drugs and viruses with their latent feature vectors obtained by CMNMF provides a more effective way for VDA-DLCMNMF to discover novel VDAs. It is noted that regrading GCN|$_{random}$|, its performance in terms of Acc. is the worst among all competing models for each dataset. This could be a strong indicator that for GCN without attention mechanism, its performance is prone to be influenced by the noisy in VDA networks. However, the performance of GCN is still not as good as NMF-GCN, even if when it adopts attention mechanism, a conclusion thus be made that GCN is not applicable to predict isolated nodes in a given network, such as new viruses in VDA network, due to the random initialization of node representation. On the other hand, according to the performance of VDA-DLCMNMF and NMF-GCN, the accuracy of GCN can be improved by integrating with either CMNMF or NMF, which is able to solve the cold-start problem for isolated nodes. Additionally, the performance of CMNMF and NMF is also improved with the integration of GCN that strengthens the learning ability in terms of network representation. Therefore, concerning the respective advantages of CMNMF and GCN, it is the integration of them that leads to the promising performance of VDA-DLCMNMF in drug repurposing.

3.5 Application to drug repositioning of diseases

In order to prove the robustness of the DLCMNMF, we implement it on two golden standard datasets, including Fdataset [13] and Cdataset [37], for drug repurposing of diseases. We then compare the performance of CMNMF with two cutting-edge methods DRRS [36] and BNNR [65] on two datasets, respectively. In this section, 5-fold CV is used to evaluate the performance of DLCMNMF and two baselines, and all parameters are the same as their original works. The results are shown in Table 5.

| Methods\ Datasets . | Fdataset . | Cdataset . | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| DRRS | 0.8314 | 0.5241 | 0.8374 | 0.9093 | 0.3512 | 0.8345 | 0.5249 | 0.8378 | 0.9093 | 0.3489 |

| BNNR | 0.9576 | 0.3637 | 0.9638 | 0.9280 | 0.5634 | 0.9632 | 0.4236 | 0.9683 | 0.9407 | 0.6566 |

| DLCMNMF | 0.8541 | 0.9501 | 0.7965 | 0.9297 | 0.9090 | 0.8432 | 0.9058 | 0.7737 | 0.9501 | 0.9266 |

| Methods\ Datasets . | Fdataset . | Cdataset . | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| DRRS | 0.8314 | 0.5241 | 0.8374 | 0.9093 | 0.3512 | 0.8345 | 0.5249 | 0.8378 | 0.9093 | 0.3489 |

| BNNR | 0.9576 | 0.3637 | 0.9638 | 0.9280 | 0.5634 | 0.9632 | 0.4236 | 0.9683 | 0.9407 | 0.6566 |

| DLCMNMF | 0.8541 | 0.9501 | 0.7965 | 0.9297 | 0.9090 | 0.8432 | 0.9058 | 0.7737 | 0.9501 | 0.9266 |

Best results are bolded.

| Methods\ Datasets . | Fdataset . | Cdataset . | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| DRRS | 0.8314 | 0.5241 | 0.8374 | 0.9093 | 0.3512 | 0.8345 | 0.5249 | 0.8378 | 0.9093 | 0.3489 |

| BNNR | 0.9576 | 0.3637 | 0.9638 | 0.9280 | 0.5634 | 0.9632 | 0.4236 | 0.9683 | 0.9407 | 0.6566 |

| DLCMNMF | 0.8541 | 0.9501 | 0.7965 | 0.9297 | 0.9090 | 0.8432 | 0.9058 | 0.7737 | 0.9501 | 0.9266 |

| Methods\ Datasets . | Fdataset . | Cdataset . | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . | Acc. . | Sen. . | Spe. . | AUC . | AUPR . |

| DRRS | 0.8314 | 0.5241 | 0.8374 | 0.9093 | 0.3512 | 0.8345 | 0.5249 | 0.8378 | 0.9093 | 0.3489 |

| BNNR | 0.9576 | 0.3637 | 0.9638 | 0.9280 | 0.5634 | 0.9632 | 0.4236 | 0.9683 | 0.9407 | 0.6566 |

| DLCMNMF | 0.8541 | 0.9501 | 0.7965 | 0.9297 | 0.9090 | 0.8432 | 0.9058 | 0.7737 | 0.9501 | 0.9266 |

Best results are bolded.

Although both of DRRS and BNNR are trained on the same heterogeneous drug-disease networks, BNNR performs better than DRRS across all evaluation metrics expect Sen., and the main reason for that phenomenon is that BNNR specifically designs a relaxed penalty function to process noisy entries [65]. Regarding the performance of VDA-DLCMNMF, we note that VDA-DLCMNMF performs better by 0.17% and 35.46%, 0.94% and 27% than BNNR in terms of AUC and AUPR on Fdataset and Cdataset, respectively. Since BNNR demonstrates its ability in distinguishing negative samples as indicated by Spe., it also yields the best performance in terms of Acc.. However, for the task of drug repurposing, we are more concerned with the ability of discovering precise drug-disease associations. In this regard, VDA-DLCMNMF is preferred over BNNR, as the Sen. scores obtained by VDA -DLCMNMF are almost 60% and 48% larger than those of BNNR on FDataset and CDataset, respectively. In other words, the prediction accuracy of VDA-DLCMNMF in terms of Sen. AUC and AUPR could be a strong indicator that VDA-DLCMNMF is better in distinguishing between true positive samples and false negative samples when compared with DRRS and BNNR. Hence, we have reason to believe that VDA-DLCMNMF is also promising tool for the task of drug repurposing.

3.6 Identifying potential drugs for SARS-CoV-2

Taking SARS-CoV-2 as an example, we apply VDA-DLCMNMF to discover potential drugs that can be used to treat SARS-CoV-2 from the datasets of HDVD, VDA1 and VDA2. Specifically, for each VDA network of these datasets, we first apply VDA-DLCMNMF to obtain the probability of being associated with SARS-CoV-2 representation for each drug with (16) and then select the top 10 drugs in ascending order of probability for giving a detailed analysis. The results are shown in Table 6.

| Datasets . | Rank . | Drug name . | Evidence . | Rank . | Drug name . | Evidence . |

|---|---|---|---|---|---|---|

| HDVD | 1 | Remdesivir | PMID:32020029 | 6 | Chloroquine | PMID:32074550 |

| 2 | Tenofovir | – | 7 | Rimantadine | PMID:31133031; PMID:15288617 | |

| 3 | EIDD-2801 | [53] | 8 | Equilin | PMID:27169275; PMID:32194980 | |

| 4 | Dactinomycin | PMID:1335030; PMID:32194980 | 9 | Camostat | PMID:22496216 | |

| 5 | Ribavirin | PMID:22555152 | 10 | Berberine | – | |

| VDA1 | 1 | Remdesivir | PMID:32020029 | 6 | Indinavir | PMID:15144898 |

| 2 | Cobicistat | – | 7 | Camostat | PMID:22496216 | |

| 3 | Mycophenolic acid | PMID:5799033 | 8 | Tenofovir | – | |

| 4 | Ribavirin | PMID:22555152 | 9 | FK506 | – | |

| 5 | Chloroquine | PMID:32074550 | 10 | Zanamivir | PMID:15200845 | |

| VDA2 | 1 | Chlorpromazine | PMID:8811199 | 6 | Quinacrine | PMID:23301007 |

| 2 | Chloroquine | PMID:32074550 | 7 | Tenofovir | – | |

| 3 | Gemcitabine | PMID:24841273 | 8 | Indomethacin | PMID:5284360 | |

| 4 | Ribavirin | PMID:22555152 | 9 | Camostat | PMID:22496216 | |

| 5 | Favipiravir | [44] | 10 | Zanamivir | PMID:15200845 |

| Datasets . | Rank . | Drug name . | Evidence . | Rank . | Drug name . | Evidence . |

|---|---|---|---|---|---|---|

| HDVD | 1 | Remdesivir | PMID:32020029 | 6 | Chloroquine | PMID:32074550 |

| 2 | Tenofovir | – | 7 | Rimantadine | PMID:31133031; PMID:15288617 | |

| 3 | EIDD-2801 | [53] | 8 | Equilin | PMID:27169275; PMID:32194980 | |

| 4 | Dactinomycin | PMID:1335030; PMID:32194980 | 9 | Camostat | PMID:22496216 | |

| 5 | Ribavirin | PMID:22555152 | 10 | Berberine | – | |

| VDA1 | 1 | Remdesivir | PMID:32020029 | 6 | Indinavir | PMID:15144898 |

| 2 | Cobicistat | – | 7 | Camostat | PMID:22496216 | |

| 3 | Mycophenolic acid | PMID:5799033 | 8 | Tenofovir | – | |

| 4 | Ribavirin | PMID:22555152 | 9 | FK506 | – | |

| 5 | Chloroquine | PMID:32074550 | 10 | Zanamivir | PMID:15200845 | |

| VDA2 | 1 | Chlorpromazine | PMID:8811199 | 6 | Quinacrine | PMID:23301007 |

| 2 | Chloroquine | PMID:32074550 | 7 | Tenofovir | – | |

| 3 | Gemcitabine | PMID:24841273 | 8 | Indomethacin | PMID:5284360 | |

| 4 | Ribavirin | PMID:22555152 | 9 | Camostat | PMID:22496216 | |

| 5 | Favipiravir | [44] | 10 | Zanamivir | PMID:15200845 |

| Datasets . | Rank . | Drug name . | Evidence . | Rank . | Drug name . | Evidence . |

|---|---|---|---|---|---|---|

| HDVD | 1 | Remdesivir | PMID:32020029 | 6 | Chloroquine | PMID:32074550 |

| 2 | Tenofovir | – | 7 | Rimantadine | PMID:31133031; PMID:15288617 | |

| 3 | EIDD-2801 | [53] | 8 | Equilin | PMID:27169275; PMID:32194980 | |

| 4 | Dactinomycin | PMID:1335030; PMID:32194980 | 9 | Camostat | PMID:22496216 | |

| 5 | Ribavirin | PMID:22555152 | 10 | Berberine | – | |

| VDA1 | 1 | Remdesivir | PMID:32020029 | 6 | Indinavir | PMID:15144898 |

| 2 | Cobicistat | – | 7 | Camostat | PMID:22496216 | |

| 3 | Mycophenolic acid | PMID:5799033 | 8 | Tenofovir | – | |

| 4 | Ribavirin | PMID:22555152 | 9 | FK506 | – | |

| 5 | Chloroquine | PMID:32074550 | 10 | Zanamivir | PMID:15200845 | |

| VDA2 | 1 | Chlorpromazine | PMID:8811199 | 6 | Quinacrine | PMID:23301007 |

| 2 | Chloroquine | PMID:32074550 | 7 | Tenofovir | – | |

| 3 | Gemcitabine | PMID:24841273 | 8 | Indomethacin | PMID:5284360 | |

| 4 | Ribavirin | PMID:22555152 | 9 | Camostat | PMID:22496216 | |

| 5 | Favipiravir | [44] | 10 | Zanamivir | PMID:15200845 |

| Datasets . | Rank . | Drug name . | Evidence . | Rank . | Drug name . | Evidence . |

|---|---|---|---|---|---|---|

| HDVD | 1 | Remdesivir | PMID:32020029 | 6 | Chloroquine | PMID:32074550 |

| 2 | Tenofovir | – | 7 | Rimantadine | PMID:31133031; PMID:15288617 | |

| 3 | EIDD-2801 | [53] | 8 | Equilin | PMID:27169275; PMID:32194980 | |

| 4 | Dactinomycin | PMID:1335030; PMID:32194980 | 9 | Camostat | PMID:22496216 | |

| 5 | Ribavirin | PMID:22555152 | 10 | Berberine | – | |

| VDA1 | 1 | Remdesivir | PMID:32020029 | 6 | Indinavir | PMID:15144898 |

| 2 | Cobicistat | – | 7 | Camostat | PMID:22496216 | |

| 3 | Mycophenolic acid | PMID:5799033 | 8 | Tenofovir | – | |

| 4 | Ribavirin | PMID:22555152 | 9 | FK506 | – | |

| 5 | Chloroquine | PMID:32074550 | 10 | Zanamivir | PMID:15200845 | |

| VDA2 | 1 | Chlorpromazine | PMID:8811199 | 6 | Quinacrine | PMID:23301007 |

| 2 | Chloroquine | PMID:32074550 | 7 | Tenofovir | – | |

| 3 | Gemcitabine | PMID:24841273 | 8 | Indomethacin | PMID:5284360 | |

| 4 | Ribavirin | PMID:22555152 | 9 | Camostat | PMID:22496216 | |

| 5 | Favipiravir | [44] | 10 | Zanamivir | PMID:15200845 |

We note that 8, 7 and 9 out of the Top 10 drugs discovered in HDVD, VDA1 and VDA2, respectively, have been validated by recent publications. Among these validated drugs, Remdesivir obtains the largest probability scores in both HDVD and VDA1, and it has been recently recognized as a promising antiviral drug against a wide array of RNA viruses infection in cultured cells, mice and nonhuman primate models [39, 61]. As an adenosine analogue, Remdesivir incorporates into nascent viral RNA chains, thus resulting in the pre-mature termination. Moreover, Remdesivir is able to inhibit the viral infection of Vero-E6 cells by clinically isolating SARS-CoV-2 in an in vitro assay [61]. In the dataset of VDA2, chlorpromazine is predicted as the most likely drug for the treatment of SARS-CoV-2, and it is widely used to study virus entry by clathrin-mediated endocytosis of several viruses, including West Nile virus and influenza virus [6]. Since SARS-CoV also utilizes the clathrin-mediated endocytosis pathway for entry into host cell [6], it is possible for chlorpromazine to act similarly on MERS-CoV and SARS-CoV as a potential broad-spectrum CoV inhibitor.

It can also be observed from Table 6 that three drugs, including ribavirin, chloroquine and camostat, are found among the Top 10 drugs in all the three datasets. In particular, ribavirin is an approved antiviral drug to inhibit the production of Inosine-5|$^{^{\prime}}$|-monophosphate dehydrogenase, which interacts with the viral protein nsp14 [64]. It is for this reason that ribavirin has been recommended in the clinical practice for SARS-CoV-2 pneumonia diagnosis and Treatment Plan Edition 5-Revised [27]. As a traditional drug for the treatment of malaria, chloroquine phosphate is shown to have apparent efficacy and acceptable safety against COVID-19 associated pneumonia based on multi-center clinical trials conducted in China [12]. In addition to the ability of increasing the endosomal PH required for virus/cell fusion, Chloroquine is capable of interfering with the glycosylation of cellular receptors of SARS-CoV [29]. As a result, chloroquine is recommended in the next version of the Guidelines for the Prevention, Diagnosis, and Treatment of COVID-19 pneumonia, which is issued by the National Health Commission of the People’s Republic of China for the treatment of COVID-19 infection for larger populations in future [12]. Known as one of commercial serine protease inhibitors, Camostat partially blocks the infection of SARS-CoV [20]. Moreover, when used together with cathepsin inhibitor EST, it can effectively prevent both cell entry and the multistep growth of SARS-CoV in human Calu-3 airway epithelial cells [25, 71].

In summary, the above analysis demonstrates the promising performance of VDA-DLCMNMF in discovering potential drugs for SARS-CoV-2, as most of the Top 10 drugs predicted by VDA-DLCMNMF are found to be effective when used to treat SARS-CoV-2 according to a careful literature review. Moreover, such a high accuracy could be also a strong indicator that VDA-DLCMNMF is able to precisely discover potential drugs for a new virus.

3.7 Molecular docking experiment

To further explain the reliability of VDA-DLCMNMF, we have conducted structure-based molecular docking experiments [42] to all the drugs listed in Table 6. For each drug, we compute its intermolecular binding ability with SARS-CoV-2 spike protein or human angiotensin-converting enzyme 2 (ACE2), which are important functional receptors for SARS and other CoVs [19, 34].

Specifically, we first download the structures of SARS-CoV-2 spike receptor-binding domain bound with ACES (PDB ID: 6M0J) from RCSB Protein Data Bank [3], and the chemical structures of drugs are obtained from the DrugBank in the PDB format. After that, the PDB data of drugs are converted into pdbqt files by AutoDockTools [41]. For each drug, its pdbqt file is considered as the input of AutoDock software, with which we are able to complete the molecular docking experiment by taking the spike protein and ACE2 as receptors and each drug as a ligand of interest. The experimental results of molecular docking on all the 20 drugs in Table 6 are shown in Table 7, where the binding energies of these drugs are recorded. When using AutoDock to conduct molecular docking experiments, one should note that the binding energy is the binding free energy. For an arbitrary drug, the lower its binding energy is, the stronger its binding ability is.

Binding energies between predicted drugs and the SARS-CoV-2 spike protein/ACE2

| Drug name . | Binding energy (kcal/mol) . | Drug name . | Binding energy (kcal/mol) . |

|---|---|---|---|

| Berberine | |$-7.39$| | Camostat | |$-7.43$| |

| Chloroquine | |$-6.40$| | Chlorpromazine | |$-6.82$| |

| Cobicistat | |$-7.93$| | Dactinomycin | |$-2.29$| |

| EIDD-2801 | |$-5.45$| | Equilin | |$-7.68$| |

| Favipiravir | |$-4.24$| | FK506 | |$-9.72$| |

| Gemcitabine | |$-4.89$| | Indinavir | |$-8.95$| |

| Indomethacin | |$-6.43$| | Mycophenolic acid | |$-5.60$| |

| Quinacrine | |$-6.50$| | Remdesivir | |$-7.25$| |

| Ribavirin | |$-6.87$| | Rlmantadine | |$-6.67$| |

| Tenofovir | |$-6.44$| | Zanamivir | |$-5.80$| |

| Drug name . | Binding energy (kcal/mol) . | Drug name . | Binding energy (kcal/mol) . |

|---|---|---|---|

| Berberine | |$-7.39$| | Camostat | |$-7.43$| |

| Chloroquine | |$-6.40$| | Chlorpromazine | |$-6.82$| |

| Cobicistat | |$-7.93$| | Dactinomycin | |$-2.29$| |

| EIDD-2801 | |$-5.45$| | Equilin | |$-7.68$| |

| Favipiravir | |$-4.24$| | FK506 | |$-9.72$| |

| Gemcitabine | |$-4.89$| | Indinavir | |$-8.95$| |

| Indomethacin | |$-6.43$| | Mycophenolic acid | |$-5.60$| |

| Quinacrine | |$-6.50$| | Remdesivir | |$-7.25$| |

| Ribavirin | |$-6.87$| | Rlmantadine | |$-6.67$| |

| Tenofovir | |$-6.44$| | Zanamivir | |$-5.80$| |

Binding energies between predicted drugs and the SARS-CoV-2 spike protein/ACE2

| Drug name . | Binding energy (kcal/mol) . | Drug name . | Binding energy (kcal/mol) . |

|---|---|---|---|

| Berberine | |$-7.39$| | Camostat | |$-7.43$| |

| Chloroquine | |$-6.40$| | Chlorpromazine | |$-6.82$| |

| Cobicistat | |$-7.93$| | Dactinomycin | |$-2.29$| |

| EIDD-2801 | |$-5.45$| | Equilin | |$-7.68$| |

| Favipiravir | |$-4.24$| | FK506 | |$-9.72$| |

| Gemcitabine | |$-4.89$| | Indinavir | |$-8.95$| |

| Indomethacin | |$-6.43$| | Mycophenolic acid | |$-5.60$| |

| Quinacrine | |$-6.50$| | Remdesivir | |$-7.25$| |

| Ribavirin | |$-6.87$| | Rlmantadine | |$-6.67$| |

| Tenofovir | |$-6.44$| | Zanamivir | |$-5.80$| |

| Drug name . | Binding energy (kcal/mol) . | Drug name . | Binding energy (kcal/mol) . |

|---|---|---|---|

| Berberine | |$-7.39$| | Camostat | |$-7.43$| |

| Chloroquine | |$-6.40$| | Chlorpromazine | |$-6.82$| |

| Cobicistat | |$-7.93$| | Dactinomycin | |$-2.29$| |

| EIDD-2801 | |$-5.45$| | Equilin | |$-7.68$| |

| Favipiravir | |$-4.24$| | FK506 | |$-9.72$| |

| Gemcitabine | |$-4.89$| | Indinavir | |$-8.95$| |

| Indomethacin | |$-6.43$| | Mycophenolic acid | |$-5.60$| |

| Quinacrine | |$-6.50$| | Remdesivir | |$-7.25$| |

| Ribavirin | |$-6.87$| | Rlmantadine | |$-6.67$| |

| Tenofovir | |$-6.44$| | Zanamivir | |$-5.80$| |

We note that for Remdesivir, its binding energy with SARS-CoV-2 spike protein/ACE2 is |$-7.39$| kcal/mol while that for Chlorpromazine is |$-6.82$| kcal/mol. For ribavirin, chloroquine and camostat that are listed among the Top 10 drugs in all datasets, their binding energies are |$-6.87$| kcal/mol, |$-6.40$| kcal/mol and |$-7.43$| kcal/mol, respectively. Overall, the binding energies of these five drugs are positioned at a relatively lower level as indicated by Table 7. This finding further validates the eligibility of these drugs in treating SARS-CoV-2.

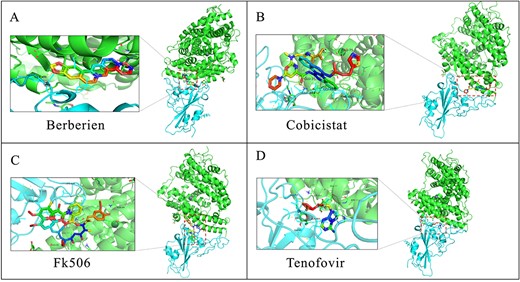

According to the Table 6, there are a total of four drugs, including tenofovir [26], Berberine [16], cobicistat [30] and FK506 [52], yet to be validated, as there is no evidence to confirm their effort for the treatment of SARS-CoV-2. Hence, we have also conducted molecular docking experiments for these four drugs and presented their binding sites in Figure 5, where the green and cyan parts denote the structures of ACE2 and SARS-CoV-2 spike protein, respectively. It is observed from Table 7 that the binding synergies of these four drugs are even lower than several validated drugs, such as favipiravir, gemcitabine and dactinomycin, thus indicating a strong association they have with SARS-CoV-2. Moreover, particular attention is given to cobicistat and FK506, which obtain lower binding energies when compared with ribavirin and camostat. In particular, FK506 has the lowest binding energy with SARS-CoV-2 spike protein/ACE2 among all the 20 drugs. Overall, we reason that the associations of these four drugs are possibly existed, but missed by laboratory experiments, and thus they are likely to have therapeutic effects against SARS-CoV-2. It also should be noted that molecular docking do not necessarily prove that the drug can treat SARS-CoV-2, the results obtained by molecular docking just provide a therapeutic possibility. Accurate results require in-depth follow-up experimental verifications.

Molecular docking results for Berberine, cobicistat, FK506 and Tenofovir bound with SARS-CoV-2 spike protein/ACE2.

4 Discussion and Conclusion

To facilitate the development of antiviral drugs against new diseases, we propose a novel deep learning-based method, namely VDA-DLCMNMF, for drug repurposing with CMNMF and apply it to discover novel drugs that are more likely to treat SARS-CoV-2. Regarding the drug repurposing for new viruses, the major difficulty lying in here is that there are no known associations between new viruses and existing drugs; hence, it is of great significance to effectively solve the cold-start problem. To this end, we first construct an enhanced association matrix by integrating VDAs, chemical structures of drugs and genomic sequences of viruses. After that, the CMNMF model is designed to address the cold-start problem by precisely constructing the latent feature vectors of drugs and viruses in a unified LFS from the structural and genomic perspectives. VDA-DLCMNMF then adopts a GCN with attention-based neighbor sampling to learn the representations of drugs and viruses, which are initialized with their latent feature vectors at beginning. The probability of a drug being associated with a new virus can thus be computed based on their final representations. Extensive experiments have been conducted to evaluate the performance of VDA-DLCMNMF, and their results demonstrate the superior accuracy of VDA-DLCMNMF on three datasets created for SARS-CoV-2 when comparing it with several state-of-the-art drug repurposing methods. Moreover, for each dataset, most of the top 10 drugs predicted by VDA-DLCMNMF are validated by literature review. Even for those without any evidence, the results of molecular docking, to some extent, indicate their potential ability in treating SARS-CoV-2.

There are several reasons to explain the success of VDA-DLCMNMF in drug repurposing for SARS-CoV-2. First of all, the selection of proper biological knowledge of drugs and viruses, as well as how to process them, provides a solid basis for the following steps of VDA-DLCMNMF. Obviously, it is impossible for existing drug repurposing methods to discover novel VDAs of new viruses if without any other source of information about viruses, especially in an effort to discover potential drugs for new viruses. To this end, we make use of the chemical structures of drugs and the genomic sequences of viruses, and integrate them into a given VDA network for the purpose of constructing an enhanced association matrix. With such a matrix, we are able to strengthen our perception about the formation of VDAs involving new viruses, which is that similar viruses are more likely to be associated with the same drugs. Moreover, as indicated by [38], it is the integration with the biological knowledge of drugs and viruses that offers us an alternative view to improve the quality of VDA networks.

Second, our experimental results reveal that the fundamental reason accounting for the failure of existing network-based models is the lack of such an ability that precisely captures the characteristics of drugs and viruses, which are used to govern VDA networks respectively from the structural and genomic perspectives. Besides, the cold-start problem introduced by new viruses can also be addressed by properly capturing the characteristics of new viruses. In this regard, we develop the CMNMF model modified from traditional NMF. For the CMNMF model, its main purpose is to reconstruct the enhanced association matrix, rather than the original VDA matrix, by using the latent features of drugs and viruses. Moreover, additional constraints are defined from different views, thus ensuring that the similarity information of drugs and viruses are completely projected onto a unified LFS. An extra benefit of doing so is to avoid the noisy information generated after projection, such as the unexpected similarity between drugs and viruses. According to experimental results, the CMNMF model plays a critical role in contributing to the promising performance of CMNMF.

Last, but not least, VDA-DLCMNMF takes advantage of the powerful representation ability of GCN to learn the representations of drugs and viruses from a given VDA matrix. There are three points worth noting: (i) since GCN only accepts the adjacency matrix as input, we could not be able to apply the enhanced association matrix to GCN; (ii) instead of randomly initializing the representation of drugs and viruses, we use their latent feature vectors obtained with CMNMF to complete the initialization task; (iii) to accelerate the training of GCN, we adopt a heuristic neighborhood sampling strategy, which updates the representation of a virus, or a drug, by only using a part of viruses, or drugs, with high quality as indicated by their attention weights; and (iv) VDA-DLCMNMF integrates an attention mechanism into GCN, thus enhancing the information granularity by combining the latent features of drugs and viruses with the topological feature of VDA network.

Though experimental results indicate that VDA-DLCMNMF is a promising tool for repurposing drugs for new viruses, there is still room for further improvement. Specifically, we would like to adapt different solutions, such as variational inference [21, 54] and reparameterization techniques [43], to address the CMNMF model in a more efficient manner. Furthermore, as our future work, we are interested in exploring the possibility of using more biological knowledge, such as intracellular gene regulatory networks [7], drug–drug interactions [69] and drug–disease interactions [57], to construct complex heterogeneous networks and also using higher-order structures [22, 23] to enrich the representations of drugs and viruses.

An enhance association matrix is designed by integrating chemical structures of drugs and the genomic sequences of viruses into a given VDA network, thus strengthening our perception about the potential drugs that new viruses are more likely to associate with.

We propose a novel CMNMF model to address the cold-start problem related to new viruses by reconstructing the enhance association matrix with the constraints from different views. The similarity information of drugs and viruses can thus be completely projected onto a unified latent feature space.

We develop a drug repositioning model, namely VDA-DLCMNMF, to identify potential drugs for new viruses with GCN. The latent feature vectors learned from CMNMF are used as the initial representations of drugs and viruses. VDA-DLCMNMF also adopts an attention-based neighbor sampling strategy to train GCN for drug repurposing.

Experimental results on three VDA datasets demonstrate the promising performance of VDA-DLCMNMF in repurposing antiviral drugs against SARS-CoV-2. Four novel drugs identified by our method are proved to have the potential ability to bind with important functional receptors of SARS-CoV-2.

5 Data availability

The dataset and source code can be freely downloaded from https://github.com/Blair1213/DLMNMF.

6 Author contributions statement

X.S., L.H. and L.W. conceived the experiments; X.S. and L.H. conducted the experiments; Z.Y. and B.Z. analyzed the results.

Acknowledgments

The authors would like to thank colleagues and the anonymous reviewers who have provided valuable feedback to help improve the paper.

Funding

This work was supported in part by the Natural Science Foundation of Xinjiang Uygur Autonomous Region (2021D01D05), in part by the Pioneer Hundred Talents Program of Chinese Academy of Sciences, in part by the National Natural Science Foundation of China (62172355), in part by the Awardee of the NSFC Excellent Young Scholars Program (61722212), in part by the Science and Technology Innovation 2030-New Generation Artificial Intelligence Major Project (2018AAA0100100) and the Tianshan youth-Excellent Youth (2019Q029).

Xiaorui Su is a doctoral student in Xinjiang Technical Institute of Physics and Chemistry, Chinese Academy of Science, Urumqi, China. Her research interests include machine learning, network representation learning, computational biology and bioinformatics.

Lun Hu, PhD, is a professor in Xinjiang Technical Institute of Physics & Chemistry, Chinese Academy of Science, Urumqi, China. His research interests include machine learning, big data analysis and its applications in bioinformatics.

Zhuhong You, PhD, is a professor in School of Computer Science, Northwestern Polytechnical University, Xi’an, China. His research interests include neural networks, intelligent information processing, sparse representation and its applications in bioinformatics.

Pengwei Hu, PhD, is a professor in Xinjiang Technical Institute of Physics & Chemistry, Chinese Academy of Science, Urumqi, China. His research interests include machine learning, big data analysis and its applications in bioinformatics.

Lei Wang, PhD, is a professor in Big Data and Intelligent Computing Research Center, Guangxi Academy of Science, Nanning, China. His research interests include data mining, pattern recognition, machine learning, deep learning, computational biology and bioinformatics.

Bowei Zhao now is a doctoral student in Xinjiang Technical Institute of Physics and Chemistry, Chinese Academy of Science, Urumqi, China. His research interests include machine learning, complex networks analysis, graph neural network and their applications in bioinformatics.

References