-

PDF

- Split View

-

Views

-

Cite

Cite

Delora Baptista, Pedro G Ferreira, Miguel Rocha, Deep learning for drug response prediction in cancer, Briefings in Bioinformatics, Volume 22, Issue 1, January 2021, Pages 360–379, https://doi.org/10.1093/bib/bbz171

Close - Share Icon Share

Abstract

Predicting the sensitivity of tumors to specific anti-cancer treatments is a challenge of paramount importance for precision medicine. Machine learning(ML) algorithms can be trained on high-throughput screening data to develop models that are able to predict the response of cancer cell lines and patients to novel drugs or drug combinations. Deep learning (DL) refers to a distinct class of ML algorithms that have achieved top-level performance in a variety of fields, including drug discovery. These types of models have unique characteristics that may make them more suitable for the complex task of modeling drug response based on both biological and chemical data, but the application of DL to drug response prediction has been unexplored until very recently. The few studies that have been published have shown promising results, and the use of DL for drug response prediction is beginning to attract greater interest from researchers in the field. In this article, we critically review recently published studies that have employed DL methods to predict drug response in cancer cell lines. We also provide a brief description of DL and the main types of architectures that have been used in these studies. Additionally, we present a selection of publicly available drug screening data resources that can be used to develop drug response prediction models. Finally, we also address the limitations of these approaches and provide a discussion on possible paths for further improvement. Contact: [email protected]

Computational methods are essential to make sense of the several large drug screening data sets made available to the public in recent years.

The 1st studies that have used DL to predict the effects of single drugs or drug combinations on cancer cell lines have shown promising results, with the majority outperforming traditional ML models.

The future success of DL in this field will depend not only on the improvement of the generalization capacity of the models but also their interpretability and their ability to translate to the clinic.

1 Introduction

Precision medicine represents a challenge for this century, with the search for personalized cancer treatments being one of the most prominent endeavors in the field. The hope with precision medicine is that by profiling tumors at the molecular level it will be possible to design treatments specifically adapted to the characteristics of a particular molecular subgroup of tumors or even individual patients, improving treatment outcomes. The success of precision medicine relies, therefore, on effectively translating the combination of clinical data with genomics and other ‘omics’ data into prognostic and predictive biomarkers.

Besides the characterization of tumors at the molecular level, another relevant task for precision oncology is to generate drug response profiles, spanning a wide range of drugs and cancer subtypes. In recent years, data from several large-scale drug screening initiatives [1–4] have been made publicly available, helping to further the field of precision oncology. These projects have screened known and candidate anti-cancer drugs against cancer cell lines, which have been extensively characterized at the molecular level. The data from these initiatives have already enabled the identification of putative drug response biomarkers using computational methods (elastic net regression) [1, 5] and the development of predictive models, such as elastic net and random forest (RF) models to predict drug sensitivity [6].

Indeed, computational methods are crucial to make sense of these large drug screening data sets. Although high-throughput screening is a common 1st step in drug discovery, experimentally screening all possible candidate drugs or drug combinations is not feasible, for both practical and financial reasons [7, 8]. Therefore, the development of computational strategies to predict drug response is essential to limit the search space and guide the discovery process, reducing the experimental effort required. Since performing screening assays in cell cultures is currently the only alternative enabling high-throughput drug screening, computational methods are also needed to translate the knowledge obtained from cell line-based screens to drug response profiles of specific patients.

A variety of computational methods for drug response prediction and the discovery of drug response biomarkers have already been reported in the literature, including machine learning (ML)-based approaches such as support vector machines (SVMs) [9], Bayesian multitask multiple kernel learning [10, 11], RFs [6, 12–14] and neural network [15] models. Nevertheless, there is still much room for improvement of these computational models in terms of predictive performance and model generalizability [16].

A particular subclass of ML algorithms referred to collectively as deep learning (DL) might be well suited to the prediction of drug response based on pharmacological and cell line omics data. Since DL methods can handle large volumes of high-dimensional and noisy data, they may be able to capture the nonlinear and complex relationships typical of biological data better than other types of ML algorithms. Furthermore, DL has already been successfully applied to a wide range of other drug discovery-related tasks. For most of these tasks, the predictive performance of DL-based models is at least on par with other ML approaches, if not better [17]. For instance, DL has been shown to outperform traditional ML approaches in the prediction of compound activity [18–20], compound toxicity [21] and other compound properties [22]. Nevertheless, the application of DL to drug response prediction problems has been under-explored until very recently.

In this article, we review the state-of-the-art of DL-based drug response prediction methods. First, we introduce the concept of deep learning and describe the main types of DL architectures (Table 1 defines some of the technical terms that will be used throughout the manuscript). We then present a selection of publicly available drug screening data resources that can be used to develop drug response prediction models. Next, we briefly describe and comment on the DL models for drug response prediction that have been reported in the literature. We address both the strengths and limitations of these models and discuss suggestions for further improvement. The insights gleaned from these studies will undoubtedly be useful in guiding the future development of computational methods for the rational design of effective anti-cancer treatments.

| Term . | Definition . |

|---|---|

| Activation function | A function that each unit in a neural network applies to the weighted sum of its inputs to calculate its output value [23] |

| Attention mechanism | A method from the field of machine translation that identifies which parts of an input sequence are relevant to the output [24] |

| Backpropagation | An algorithm that propagates the prediction error of a neural network backwards, from the output layer to the input layer so that the gradients with respect to the weights of each unit can be computed [23] |

| Drug response biomarker | A biological characteristic that is predictive of the response of a tumor to a given treatment [25] |

| Classification | A supervised learning task where the output variable is categorical |

| Cross-validation | A model validation technique where data are divided into several subsets (folds) that are successively held out from the training set and used to estimate model performance |

| Data augmentation | Increasing the number of training data points |

| Discriminative model | Learns the conditional probability of the output given the inputs [26] |

| Dose-response relationship | The relationship between the observed effect (response) of a drug and its concentration (dose) [27] |

| Dropout | A technique that ‘drops’ some neurons from a neural network in each iteration to reduce overfitting [28] |

| Drug sensitivity | The susceptibility of a cell line/tumor to a drug |

| Drug synergy | A phenomenon where the response to a combination is enhanced, going beyond the effect that would be expected based on the responses to each individual drug [29] |

| End-to-end learning | A learning approach where feature learning/extraction and outcome prediction are automatically performed by a single neural network instead of requiring multiple steps |

| Ensemble learning | Methods that combine the predictions of multiple ML models (base models), forming a single model |

| Feature selection | A method to reduce model complexity by only considering smaller subsets of the original variables. |

| Generative model | Generative models learn the joint probability distribution of input and output variables being able to generate new inputs for a given distribution [26] |

| High-throughput screening | In the context of this paper, high-throughput screening refers to experiments where many candidate drugs are screened at varying concentrations across a panel of cancer cell lines and response to the drug is measured [1] |

| Hyperparameter | A model parameter that is not learned during training and must be set beforehand |

| Loss function | A function that measures the penalty associated with prediction errors [30] |

| Machine learning | A subfield of artificial intelligence that refers to algorithms that can learn information directly from data and make accurate predictions using a model that is inferred from input data alone [31] |

| Molecular descriptors | Experimentally determined or theoretical properties of compounds summarized in numerical form [32] |

| Molecular fingerprints | A representation of the structure in the form of numerical vectors (e.g. binary fingerprints represent the presence of absence of certain chemical substructures within a molecule) [32] |

| Molecular graph | A representation of the structure of a compound in the form of a graph, where nodes represent atoms and edges represent bonds [33] |

| Multimodal learning | Using models that can relate and learn from data from different modalities (i.e. different input data types) [34] |

| Omics data | Fields of study in biology that focus on characterizing particular biological entities and interactions. Genomics studies genomes of organisms, transcriptomics the set of RNA transcripts, epigenomics the epigenetic modifications, proteomics the proteins of an organism and metabolomics the concentration of compounds |

| One-hot encoding | Converting categorical variables into a numerical (binary) form |

| Overfitting | Overfitting occurs when a model fits the training data well but is unable to generalize to unseen data |

| Regression | A supervised learning task where the output variable is continuous |

| Representation learning | The process through which relevant features are learned automatically from the input data |

| Semi-supervised learning | A type of ML task where an estimator is trained on both labeled and unlabeled data to learn a function mapping input variables to output variables. |

| SMILES strings | A representation of compound structures in the form of ASCII strings |

| Supervised learning | A type of ML task where an estimator is trained on labeled data (a data set that contains a set of input features and the corresponding output values) to predict the output for unseen samples of input data |

| (Model) Training (fitting) | The process through which the parameters of a model (in DL, the weights in a neural networks) are estimated |

| Transfer learning | An ML method where a model developed for one prediction task (a pre-trained network) is reused as the starting point for a similar task [35] |

| Unsupervised learning | A type of ML where the goal is for the algorithm to find structure in unlabeled data |

| Term . | Definition . |

|---|---|

| Activation function | A function that each unit in a neural network applies to the weighted sum of its inputs to calculate its output value [23] |

| Attention mechanism | A method from the field of machine translation that identifies which parts of an input sequence are relevant to the output [24] |

| Backpropagation | An algorithm that propagates the prediction error of a neural network backwards, from the output layer to the input layer so that the gradients with respect to the weights of each unit can be computed [23] |

| Drug response biomarker | A biological characteristic that is predictive of the response of a tumor to a given treatment [25] |

| Classification | A supervised learning task where the output variable is categorical |

| Cross-validation | A model validation technique where data are divided into several subsets (folds) that are successively held out from the training set and used to estimate model performance |

| Data augmentation | Increasing the number of training data points |

| Discriminative model | Learns the conditional probability of the output given the inputs [26] |

| Dose-response relationship | The relationship between the observed effect (response) of a drug and its concentration (dose) [27] |

| Dropout | A technique that ‘drops’ some neurons from a neural network in each iteration to reduce overfitting [28] |

| Drug sensitivity | The susceptibility of a cell line/tumor to a drug |

| Drug synergy | A phenomenon where the response to a combination is enhanced, going beyond the effect that would be expected based on the responses to each individual drug [29] |

| End-to-end learning | A learning approach where feature learning/extraction and outcome prediction are automatically performed by a single neural network instead of requiring multiple steps |

| Ensemble learning | Methods that combine the predictions of multiple ML models (base models), forming a single model |

| Feature selection | A method to reduce model complexity by only considering smaller subsets of the original variables. |

| Generative model | Generative models learn the joint probability distribution of input and output variables being able to generate new inputs for a given distribution [26] |

| High-throughput screening | In the context of this paper, high-throughput screening refers to experiments where many candidate drugs are screened at varying concentrations across a panel of cancer cell lines and response to the drug is measured [1] |

| Hyperparameter | A model parameter that is not learned during training and must be set beforehand |

| Loss function | A function that measures the penalty associated with prediction errors [30] |

| Machine learning | A subfield of artificial intelligence that refers to algorithms that can learn information directly from data and make accurate predictions using a model that is inferred from input data alone [31] |

| Molecular descriptors | Experimentally determined or theoretical properties of compounds summarized in numerical form [32] |

| Molecular fingerprints | A representation of the structure in the form of numerical vectors (e.g. binary fingerprints represent the presence of absence of certain chemical substructures within a molecule) [32] |

| Molecular graph | A representation of the structure of a compound in the form of a graph, where nodes represent atoms and edges represent bonds [33] |

| Multimodal learning | Using models that can relate and learn from data from different modalities (i.e. different input data types) [34] |

| Omics data | Fields of study in biology that focus on characterizing particular biological entities and interactions. Genomics studies genomes of organisms, transcriptomics the set of RNA transcripts, epigenomics the epigenetic modifications, proteomics the proteins of an organism and metabolomics the concentration of compounds |

| One-hot encoding | Converting categorical variables into a numerical (binary) form |

| Overfitting | Overfitting occurs when a model fits the training data well but is unable to generalize to unseen data |

| Regression | A supervised learning task where the output variable is continuous |

| Representation learning | The process through which relevant features are learned automatically from the input data |

| Semi-supervised learning | A type of ML task where an estimator is trained on both labeled and unlabeled data to learn a function mapping input variables to output variables. |

| SMILES strings | A representation of compound structures in the form of ASCII strings |

| Supervised learning | A type of ML task where an estimator is trained on labeled data (a data set that contains a set of input features and the corresponding output values) to predict the output for unseen samples of input data |

| (Model) Training (fitting) | The process through which the parameters of a model (in DL, the weights in a neural networks) are estimated |

| Transfer learning | An ML method where a model developed for one prediction task (a pre-trained network) is reused as the starting point for a similar task [35] |

| Unsupervised learning | A type of ML where the goal is for the algorithm to find structure in unlabeled data |

| Term . | Definition . |

|---|---|

| Activation function | A function that each unit in a neural network applies to the weighted sum of its inputs to calculate its output value [23] |

| Attention mechanism | A method from the field of machine translation that identifies which parts of an input sequence are relevant to the output [24] |

| Backpropagation | An algorithm that propagates the prediction error of a neural network backwards, from the output layer to the input layer so that the gradients with respect to the weights of each unit can be computed [23] |

| Drug response biomarker | A biological characteristic that is predictive of the response of a tumor to a given treatment [25] |

| Classification | A supervised learning task where the output variable is categorical |

| Cross-validation | A model validation technique where data are divided into several subsets (folds) that are successively held out from the training set and used to estimate model performance |

| Data augmentation | Increasing the number of training data points |

| Discriminative model | Learns the conditional probability of the output given the inputs [26] |

| Dose-response relationship | The relationship between the observed effect (response) of a drug and its concentration (dose) [27] |

| Dropout | A technique that ‘drops’ some neurons from a neural network in each iteration to reduce overfitting [28] |

| Drug sensitivity | The susceptibility of a cell line/tumor to a drug |

| Drug synergy | A phenomenon where the response to a combination is enhanced, going beyond the effect that would be expected based on the responses to each individual drug [29] |

| End-to-end learning | A learning approach where feature learning/extraction and outcome prediction are automatically performed by a single neural network instead of requiring multiple steps |

| Ensemble learning | Methods that combine the predictions of multiple ML models (base models), forming a single model |

| Feature selection | A method to reduce model complexity by only considering smaller subsets of the original variables. |

| Generative model | Generative models learn the joint probability distribution of input and output variables being able to generate new inputs for a given distribution [26] |

| High-throughput screening | In the context of this paper, high-throughput screening refers to experiments where many candidate drugs are screened at varying concentrations across a panel of cancer cell lines and response to the drug is measured [1] |

| Hyperparameter | A model parameter that is not learned during training and must be set beforehand |

| Loss function | A function that measures the penalty associated with prediction errors [30] |

| Machine learning | A subfield of artificial intelligence that refers to algorithms that can learn information directly from data and make accurate predictions using a model that is inferred from input data alone [31] |

| Molecular descriptors | Experimentally determined or theoretical properties of compounds summarized in numerical form [32] |

| Molecular fingerprints | A representation of the structure in the form of numerical vectors (e.g. binary fingerprints represent the presence of absence of certain chemical substructures within a molecule) [32] |

| Molecular graph | A representation of the structure of a compound in the form of a graph, where nodes represent atoms and edges represent bonds [33] |

| Multimodal learning | Using models that can relate and learn from data from different modalities (i.e. different input data types) [34] |

| Omics data | Fields of study in biology that focus on characterizing particular biological entities and interactions. Genomics studies genomes of organisms, transcriptomics the set of RNA transcripts, epigenomics the epigenetic modifications, proteomics the proteins of an organism and metabolomics the concentration of compounds |

| One-hot encoding | Converting categorical variables into a numerical (binary) form |

| Overfitting | Overfitting occurs when a model fits the training data well but is unable to generalize to unseen data |

| Regression | A supervised learning task where the output variable is continuous |

| Representation learning | The process through which relevant features are learned automatically from the input data |

| Semi-supervised learning | A type of ML task where an estimator is trained on both labeled and unlabeled data to learn a function mapping input variables to output variables. |

| SMILES strings | A representation of compound structures in the form of ASCII strings |

| Supervised learning | A type of ML task where an estimator is trained on labeled data (a data set that contains a set of input features and the corresponding output values) to predict the output for unseen samples of input data |

| (Model) Training (fitting) | The process through which the parameters of a model (in DL, the weights in a neural networks) are estimated |

| Transfer learning | An ML method where a model developed for one prediction task (a pre-trained network) is reused as the starting point for a similar task [35] |

| Unsupervised learning | A type of ML where the goal is for the algorithm to find structure in unlabeled data |

| Term . | Definition . |

|---|---|

| Activation function | A function that each unit in a neural network applies to the weighted sum of its inputs to calculate its output value [23] |

| Attention mechanism | A method from the field of machine translation that identifies which parts of an input sequence are relevant to the output [24] |

| Backpropagation | An algorithm that propagates the prediction error of a neural network backwards, from the output layer to the input layer so that the gradients with respect to the weights of each unit can be computed [23] |

| Drug response biomarker | A biological characteristic that is predictive of the response of a tumor to a given treatment [25] |

| Classification | A supervised learning task where the output variable is categorical |

| Cross-validation | A model validation technique where data are divided into several subsets (folds) that are successively held out from the training set and used to estimate model performance |

| Data augmentation | Increasing the number of training data points |

| Discriminative model | Learns the conditional probability of the output given the inputs [26] |

| Dose-response relationship | The relationship between the observed effect (response) of a drug and its concentration (dose) [27] |

| Dropout | A technique that ‘drops’ some neurons from a neural network in each iteration to reduce overfitting [28] |

| Drug sensitivity | The susceptibility of a cell line/tumor to a drug |

| Drug synergy | A phenomenon where the response to a combination is enhanced, going beyond the effect that would be expected based on the responses to each individual drug [29] |

| End-to-end learning | A learning approach where feature learning/extraction and outcome prediction are automatically performed by a single neural network instead of requiring multiple steps |

| Ensemble learning | Methods that combine the predictions of multiple ML models (base models), forming a single model |

| Feature selection | A method to reduce model complexity by only considering smaller subsets of the original variables. |

| Generative model | Generative models learn the joint probability distribution of input and output variables being able to generate new inputs for a given distribution [26] |

| High-throughput screening | In the context of this paper, high-throughput screening refers to experiments where many candidate drugs are screened at varying concentrations across a panel of cancer cell lines and response to the drug is measured [1] |

| Hyperparameter | A model parameter that is not learned during training and must be set beforehand |

| Loss function | A function that measures the penalty associated with prediction errors [30] |

| Machine learning | A subfield of artificial intelligence that refers to algorithms that can learn information directly from data and make accurate predictions using a model that is inferred from input data alone [31] |

| Molecular descriptors | Experimentally determined or theoretical properties of compounds summarized in numerical form [32] |

| Molecular fingerprints | A representation of the structure in the form of numerical vectors (e.g. binary fingerprints represent the presence of absence of certain chemical substructures within a molecule) [32] |

| Molecular graph | A representation of the structure of a compound in the form of a graph, where nodes represent atoms and edges represent bonds [33] |

| Multimodal learning | Using models that can relate and learn from data from different modalities (i.e. different input data types) [34] |

| Omics data | Fields of study in biology that focus on characterizing particular biological entities and interactions. Genomics studies genomes of organisms, transcriptomics the set of RNA transcripts, epigenomics the epigenetic modifications, proteomics the proteins of an organism and metabolomics the concentration of compounds |

| One-hot encoding | Converting categorical variables into a numerical (binary) form |

| Overfitting | Overfitting occurs when a model fits the training data well but is unable to generalize to unseen data |

| Regression | A supervised learning task where the output variable is continuous |

| Representation learning | The process through which relevant features are learned automatically from the input data |

| Semi-supervised learning | A type of ML task where an estimator is trained on both labeled and unlabeled data to learn a function mapping input variables to output variables. |

| SMILES strings | A representation of compound structures in the form of ASCII strings |

| Supervised learning | A type of ML task where an estimator is trained on labeled data (a data set that contains a set of input features and the corresponding output values) to predict the output for unseen samples of input data |

| (Model) Training (fitting) | The process through which the parameters of a model (in DL, the weights in a neural networks) are estimated |

| Transfer learning | An ML method where a model developed for one prediction task (a pre-trained network) is reused as the starting point for a similar task [35] |

| Unsupervised learning | A type of ML where the goal is for the algorithm to find structure in unlabeled data |

2 Deep learning

DL refers to a distinct class of ML algorithms based on artificial neural networks (NNs), which, as the name suggests, are inspired by their biological counterparts. Artificial NNs consist of several connected layers, each containing multiple units, also called neurons. A shallow NN is composed of an input layer, a single hidden layer and an output layer, while deep neural networks (DNN) are typically composed of multiple processing layers [23]. This characteristic allows these models to learn complex nonlinear functions. Furthermore, unlike most traditional ML methods, DL approaches typically do not require extensive feature selection before training, since they have the ability to learn higher-order representations directly from raw input data [36].

In the hidden or output layers, each unit receives its inputs from the preceding layer. The connections between nodes in adjacent layers, called edges, each have an associated weight, reflecting the relative importance of a given input. Each unit applies an activation function to the weighted sum of its inputs to calculate its output value [23]. This forward propagation of information is continued until the final output values (last layer) are predicted.

Training an NN is an optimization problem, where the goal is to minimize the difference between the predicted output values and the real values, that is, to minimize the error defined by a suitable loss function. Once an input example has been forward propagated until the output layer, the predicted and real output values are compared, and the error is determined using the defined loss function. To train the network, the gradient of the loss function can be calculated, and then the backpropagation algorithm can be applied so that the error is propagated backwards, from the output layer to the input layer [23]. In this manner, the gradients with respect to the weights can be computed. The weights can then be adjusted using gradient methods, such as stochastic gradient descent (SGD) or variants such as the Adam algorithm [37]. Therefore, learning is achieved by iteratively modifying the weights.

There is a wide variety of DL architectures, some of which are illustrated in Figure 1. In the following paragraphs, we will describe the main types DL models that have been applied to the problem of drug response prediction.

![DL architectures that have been used in drug response prediction models. (A) A fully connected feedforward DNN. (B) An RNN and the corresponding computational graph unfolded in time (t-1, t and t+1 denote different time steps); adapted from [23]. (C) A DBN; adapted from [38]. (D) A CNN. (E) An AE. (F) A VAE; μ and σ are vectors of parameters defining the distributions of the latent variables.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/bib/22/1/10.1093_bib_bbz171/2/m_bbz171f1.jpeg?Expires=1750208846&Signature=1T4Y~SDJrg9D9Mmb9zN53Ph56J~Ak-MWheWTDE1~8~PoSA6ZNIf5sj-gGUjbU~xNCxsTH-OuWKEcb-1fN4yvZffwRYUGawmRn0-DBmm6nUm3VCqRgs1lL8AUzlRInNTj~Dxmig6qYKqo6D-RLql76rLYOUyF43QB49eM6R3uVp-LClaRyo0MV1vSPNoWZflS5k2DZJ13qr9dGUKYExWCuhVzdI5GqrN5mZ-YIISt~m2uUP0OmuT3ltPj~jfc890zRY2Xu-1qjxZWxo9Dybgv07-lk0xB~I8n~GwkLrZCsOCSW6dawU8eoFdTVz8QCSgQC2lyiUhoxYk-W2UTiJchjQ__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

DL architectures that have been used in drug response prediction models. (A) A fully connected feedforward DNN. (B) An RNN and the corresponding computational graph unfolded in time (t-1, t and t+1 denote different time steps); adapted from [23]. (C) A DBN; adapted from [38]. (D) A CNN. (E) An AE. (F) A VAE; μ and σ are vectors of parameters defining the distributions of the latent variables.

The simplest DL models are fully connected DNNs (Figure 1a). They are similar to the previously described shallow (single hidden layer) artificial NNs but have a greater number of hidden layers. These networks are feedforward networks, which means that they constitute an acyclic graph in which information flows in only one direction, from input nodes to output nodes [35]. DNNs have already been used to estimate drug response in cancer cell lines [39]. More complex models composed of multiple subnetworks (described in Section 5) also usually use a DNN as the final subnetwork that predicts drug response.

Convolutional neural networks (CNN) (Figure 1d) represent another type of feedforward DL model [40]. As the name implies, these NNs apply convolutions in some of their layers, usually in the initial layers of the network. Other common operations in CNNs include pooling and normalization, while the final layers of these networks are usually fully connected layers to allow for supervised classification or regression. Unlike DNNs, CNNs have sparse or local connectivity, which means that units in one layer are only directly connected to certain units in the previous layer [23]. This characteristic helps to preserve the local structure of the data [41]. CNNs also have comparatively fewer parameters to learn as the weights are shared, making them easier to train [23]. Two-dimensional CNNs are particularly well suited to handle input data in the form of a grid (multidimensional arrays), such as images [23], and they can be used for drug response prediction if the input data is represented in the required format (e.g. using compound images as input [42]). One-dimensional CNNs are more appropriate for data in the form of sequences, such as compound Simplified Molecular-Input Line-Entry System (SMILES) strings.

Recurrent neural networks (RNNs) (Figure 1b) are a distinct class of NNs characterized by the existence of cycles in the networks, typically formed due to edges that connect adjacent time steps (recurrent edges) [43]. Nodes with incoming recurrent connections can receive as input not only the current data point but also the values of hidden units from previous time steps. This makes RNNs suitable to model data that are sequential in nature, such as natural language or time series. An RNN can be unfolded in time and represented as deep feedforward networks with the same weights being shared among layers [23]. In drug response prediction models, feature encoders with recurrent layers can be used to learn representations from SMILES strings, for example [44].

Unsupervised DL methods also exist. Autoencoders (AEs) are typically used for dimensionality reduction and feature representation learning before using other ML or DL methods for prediction (Figure 1e). An AE is a NN that learns to reconstruct its inputs [45]. By restricting the number of units in the hidden layers of the network and creating a bottleneck, the AE can learn a low-dimensional latent representation of the original input data, called a ‘code’. A basic AE is therefore composed of two parts: an encoder, which produces the code; and a decoder, which attempts to reconstruct the input from the code [46]. Deep AEs are formed by stacking several AEs. AEs can be used to encode both compounds and omics data, and these learned representations can be fed into a predictive model to estimate drug response [47–49].

There are other variants of AEs, such as variational autoencoders (VAEs) [50], for instance (Figure 1f). A VAE is a generative model based on approximate inference. A VAE models the underlying probability distribution of the latent representations of the inputs. Like other AEs, VAEs are also composed of two networks. The encoder network learns a Gaussian distribution of the possible values of the latent representation from which a given sample could have been generated, while the decoder learns the distribution of the possible values of a sample that could be produced given a certain latent representation [50].

A less common type of DL model is the deep belief network (DBN) [51] (Figure 1c). A DBN is a generative model that consists of several layers of latent variables. The connections between the 1st two layers are undirected, while the connections between the remaining layers are all directed. DBNs can be considered stacks of restricted Boltzmann machines (RBMs) [52], which are composed of a visible layer and a hidden layer, with undirected connections between the two layers and without connections between units in the same layer. They can thus be trained layer by layer [51]. With the addition of a discriminative fine-tuning step at the end, DBNs can also be transformed into discriminative models [51]. DBN models have already been used to predict the response of cancer cell lines to drug combinations [53].

DL models can be implemented using one of the many open source DL libraries that are available. Some of the most popular DL libraries are Python-based, including PyTorch [54] and the TensorFlow [55] Python application programming interface (API). Keras [56] is a higher-level DL API written in Python that facilitates the use of other lower-level libraries such as TensorFlow. Most of the models described in this review were implemented using one of these Python libraries. Another Python library that may be of interest to researchers in this field is DeepChem [57], a DL framework built on top of TensorFlow that offers implementations of chemistry-specific DL architectures and featurization techniques.

3 Data resources

The high-throughput screening of compounds is a common step in the drug discovery process. Its purpose is to determine which compounds or combinations of compounds will potentially result in effective treatments. In recent years, several large-scale anti-cancer drug screens have been undertaken, the results of which have been made available through public repositories. Projects such as Genomics of Drug Sensitivity in Cancer (GDSC) [2], Cancer Cell Line Encyclopedia (CCLE) [1], Cancer Therapeutics Response Portal (CTRP) [3, 4] and NCI-60 [58] provide access to drug sensitivity profiles for a wide variety of cancer cell lines. An extended list of single-drug screening data sets is provided in Table 2. Large pan-cancer drug combination screening data sets have also been made available to the public (Table 2). These data sets can serve as the basis for the development of DL-based drug response prediction models.

In addition to the dose-response data gathered from the high-throughput screening experiments, some of these databases also provide access to omics data characterizing the cancer cell lines that the compounds were screened against. The cancer cell lines used in the National Cancer Institute 60 Human Cancer Cell Line Screen (NCI-60), CCLE and GDSC screening panels, for example, have all been extensively characterized at the molecular level. All three projects provide genomic, transcriptomic and epigenomic data characterizing the cell lines, and proteomics [60, 66] and metabolomics [61] data are available for the NCI-60 and CCLE cell lines. If there is a considerable overlap between cell lines, then the omics data available from these databases can also be useful to extend other screening data sets that only include pharmacological dose-response data, such as the CTRPv2 data set, for example.

| Data set . | Website . | # Compounds . | # Cell lines . | PHARM . | MUT . | CNV . | mRNA EXP . | miRNA EXP . | METHYL . | PROT . | METAB . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| NCI-60[58] | https://dtp.cancer.gov/discovery_development/nci-60/ | ˜50 000 | 60 | x | x | x | x | x | x | x | x |

| GlaxoSmithKline [59] | 19 | 311 | x | ||||||||

| CCLE [1, 60, 61] | https://portals.broadinstitute.org/ccle | 24 | 479* | x | x | x | x | x | x | x | x |

| GDSC1 [2] | https://www.cancerrxgene.org/ | 320 | 988 | x | x | x | x | x | |||

| GDSC2 [2] | https://www.cancerrxgene.org/ | 175 | 810 | x | x | x | x | x | |||

| CTRPv1 [3] | https://portals.broadinstitute.org/ctrp.v1/ | 354 | 242 | x | |||||||

| CTRPv2 [4] | https://portals.broadinstitute.org/ctrp/ | 481 | 860 | x | |||||||

| gCSI [62] | http://research-pub.gene.com/gCSI-cellline-data/ | 16 | 410 | x | x | x | x | ||||

| FIMM [63] | 52 | 50 | x |

| Data set . | Website . | # Compounds . | # Cell lines . | PHARM . | MUT . | CNV . | mRNA EXP . | miRNA EXP . | METHYL . | PROT . | METAB . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| NCI-60[58] | https://dtp.cancer.gov/discovery_development/nci-60/ | ˜50 000 | 60 | x | x | x | x | x | x | x | x |

| GlaxoSmithKline [59] | 19 | 311 | x | ||||||||

| CCLE [1, 60, 61] | https://portals.broadinstitute.org/ccle | 24 | 479* | x | x | x | x | x | x | x | x |

| GDSC1 [2] | https://www.cancerrxgene.org/ | 320 | 988 | x | x | x | x | x | |||

| GDSC2 [2] | https://www.cancerrxgene.org/ | 175 | 810 | x | x | x | x | x | |||

| CTRPv1 [3] | https://portals.broadinstitute.org/ctrp.v1/ | 354 | 242 | x | |||||||

| CTRPv2 [4] | https://portals.broadinstitute.org/ctrp/ | 481 | 860 | x | |||||||

| gCSI [62] | http://research-pub.gene.com/gCSI-cellline-data/ | 16 | 410 | x | x | x | x | ||||

| FIMM [63] | 52 | 50 | x |

*1457 characterized cell lines in total PHARM, Pharmaceutical data; MUT, mutation data; CNV, copy number variation data; mRNA EXP, mRNA expression data; miRNA EXP, microRNA expression data; METHYL, methylation data; PROT, proteomics data; METAB, metabolomics data

| Data set . | Website . | # Compounds . | # Cell lines . | PHARM . | MUT . | CNV . | mRNA EXP . | miRNA EXP . | METHYL . | PROT . | METAB . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| NCI-60[58] | https://dtp.cancer.gov/discovery_development/nci-60/ | ˜50 000 | 60 | x | x | x | x | x | x | x | x |

| GlaxoSmithKline [59] | 19 | 311 | x | ||||||||

| CCLE [1, 60, 61] | https://portals.broadinstitute.org/ccle | 24 | 479* | x | x | x | x | x | x | x | x |

| GDSC1 [2] | https://www.cancerrxgene.org/ | 320 | 988 | x | x | x | x | x | |||

| GDSC2 [2] | https://www.cancerrxgene.org/ | 175 | 810 | x | x | x | x | x | |||

| CTRPv1 [3] | https://portals.broadinstitute.org/ctrp.v1/ | 354 | 242 | x | |||||||

| CTRPv2 [4] | https://portals.broadinstitute.org/ctrp/ | 481 | 860 | x | |||||||

| gCSI [62] | http://research-pub.gene.com/gCSI-cellline-data/ | 16 | 410 | x | x | x | x | ||||

| FIMM [63] | 52 | 50 | x |

| Data set . | Website . | # Compounds . | # Cell lines . | PHARM . | MUT . | CNV . | mRNA EXP . | miRNA EXP . | METHYL . | PROT . | METAB . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| NCI-60[58] | https://dtp.cancer.gov/discovery_development/nci-60/ | ˜50 000 | 60 | x | x | x | x | x | x | x | x |

| GlaxoSmithKline [59] | 19 | 311 | x | ||||||||

| CCLE [1, 60, 61] | https://portals.broadinstitute.org/ccle | 24 | 479* | x | x | x | x | x | x | x | x |

| GDSC1 [2] | https://www.cancerrxgene.org/ | 320 | 988 | x | x | x | x | x | |||

| GDSC2 [2] | https://www.cancerrxgene.org/ | 175 | 810 | x | x | x | x | x | |||

| CTRPv1 [3] | https://portals.broadinstitute.org/ctrp.v1/ | 354 | 242 | x | |||||||

| CTRPv2 [4] | https://portals.broadinstitute.org/ctrp/ | 481 | 860 | x | |||||||

| gCSI [62] | http://research-pub.gene.com/gCSI-cellline-data/ | 16 | 410 | x | x | x | x | ||||

| FIMM [63] | 52 | 50 | x |

*1457 characterized cell lines in total PHARM, Pharmaceutical data; MUT, mutation data; CNV, copy number variation data; mRNA EXP, mRNA expression data; miRNA EXP, microRNA expression data; METHYL, methylation data; PROT, proteomics data; METAB, metabolomics data

The CCLE, GDSC and NCI-60 projects only offer baseline cell line data, that is, data obtained before treatment. The Connectivity Map (CMap) [67, 68] and the Library of Integrated Network-Based Cellular Signatures (LINCS) [69, 70] projects are resources that provide data on the transcriptional responses of cancer cells after treatment with small molecules. Another project recently analyzed the response of cells to treatment with small molecules at the proteomic and epigenomic levels [71]. These cellular response signatures can complement the data from other drug screening initiatives and may be a very valuable source of information when building drug response prediction models.

Smaller-scale and more specific (single cancer) drug screening data sets that were not included in Table 2 or Table 3 may cover other cell lines or compounds that were not contemplated by the larger screening initiatives. These data sets may be used to fine-tune models trained on larger pan-cancer data sets, making them more specific to certain tumor types.

Ideally, the data sets used to train drug response prediction models would come from patient cohorts, as cancer cell lines may not be representative of their tumors of origin [72, 73]. Although high-throughput screening has already been attempted using patient-derived xenografts [74] and organoids [75], the vast majority of anti-cancer drug response data sets are currently from cell line-based screening experiments. As a result, DL models are usually trained on the more abundant cell line-based screening data. Nevertheless, patient-derived data can be particularly useful to validate and refine drug response prediction models trained on in vitro data, improving their clinical applicability. For example, patient-level data can be incorporated into DL models of cellular drug response by using the molecular data characterizing patient tumors in a pre-training step, as proposed in a recent study [49]. Large-scale patient-level omics data sets can be obtained from resources such as the Genomic Data Commons (GDC) Portal [76] and the International Cancer Genome Consortium (ICGC) [77].

| Data set . | Website . | # Compounds . | # Combinations . | # Cell lines . | PHARM . | MUT . | CNV . | mRNA EXP . | miRNA EXP . | METHYL . | PROT . | METAB . |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Merck Compound Screen [64] | 38 | 583 | 39 | x | ||||||||

| NCI-ALMANAC [65] | https://dtp.cancer.gov/ncialmanac | 104 | 5232 | 60 | x | x | x | x | x | x | x | x |

| AstraZeneca-Sanger DREAM [8] | https://www.synapse.org/∖#!Synapse:syn4231880 | 118 | 910 | 85 | x |

| Data set . | Website . | # Compounds . | # Combinations . | # Cell lines . | PHARM . | MUT . | CNV . | mRNA EXP . | miRNA EXP . | METHYL . | PROT . | METAB . |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Merck Compound Screen [64] | 38 | 583 | 39 | x | ||||||||

| NCI-ALMANAC [65] | https://dtp.cancer.gov/ncialmanac | 104 | 5232 | 60 | x | x | x | x | x | x | x | x |

| AstraZeneca-Sanger DREAM [8] | https://www.synapse.org/∖#!Synapse:syn4231880 | 118 | 910 | 85 | x |

PHARM, Pharmaceutical data; MUT, mutation data; CNV, copy number variation data; mRNA EXP, mRNA expression data; miRNA EXP, microRNA expression data; METHYL, methylation data; PROT, proteomics data; METAB, metabolomics data

| Data set . | Website . | # Compounds . | # Combinations . | # Cell lines . | PHARM . | MUT . | CNV . | mRNA EXP . | miRNA EXP . | METHYL . | PROT . | METAB . |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Merck Compound Screen [64] | 38 | 583 | 39 | x | ||||||||

| NCI-ALMANAC [65] | https://dtp.cancer.gov/ncialmanac | 104 | 5232 | 60 | x | x | x | x | x | x | x | x |

| AstraZeneca-Sanger DREAM [8] | https://www.synapse.org/∖#!Synapse:syn4231880 | 118 | 910 | 85 | x |

| Data set . | Website . | # Compounds . | # Combinations . | # Cell lines . | PHARM . | MUT . | CNV . | mRNA EXP . | miRNA EXP . | METHYL . | PROT . | METAB . |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Merck Compound Screen [64] | 38 | 583 | 39 | x | ||||||||

| NCI-ALMANAC [65] | https://dtp.cancer.gov/ncialmanac | 104 | 5232 | 60 | x | x | x | x | x | x | x | x |

| AstraZeneca-Sanger DREAM [8] | https://www.synapse.org/∖#!Synapse:syn4231880 | 118 | 910 | 85 | x |

PHARM, Pharmaceutical data; MUT, mutation data; CNV, copy number variation data; mRNA EXP, mRNA expression data; miRNA EXP, microRNA expression data; METHYL, methylation data; PROT, proteomics data; METAB, metabolomics data

Prior knowledge from additional data sources can complement and enrich the data from these publicly available drug screening data sets. Further information on the compounds screened in these experiments can be retrieved from PubChem [78], ChEMBL [79] or DrugBank [80], for instance. Information on drug targets and the pathways underlying drug response can be acquired from public databases, such as search tool for interactions of chemicals (STITCH) [81] and the Guide to Pharmacology [82].

External data sources can also be useful when exploring cell line omics data. The Catalogue Of Somatic Mutations In Cancer (COSMIC) database [83] is an important source of information on somatic mutations in cancer. Project Achilles [84], now a part of Cancer Dependency Map (DepMap) [85], is a project that aims to provide information on gene essentiality for cancer cell lines that have been extensively characterized at the molecular level. The Pharmacogenomics Knowledgebase (PharmGKB) [86] provides information regarding the influence of genetic variation on drug response. Besides these, many other sources of prior biological knowledge can be leveraged to enrich the drug response data sets and create more biologically informed models.

The Dialogue on Reverse Engineering Assessment and Methods (DREAM) challenges initiative is a community effort aimed at developing new computational approaches to address important biological and human health questions. In recent years, the DREAM community has proposed several challenges, which can be useful sources of drug response data. In 2012, the community launched the National Cancer Institute (NCI)-DREAM Drug Sensitivity [10] and Drug Synergy [7] Challenges. The Drug Sensitivity challenge was aimed at building models to predict and rank the sensitivity of breast cancer cell lines to individual compounds, while the goal of the Drug Synergy challenge [7] was to predict the effect of drug combinations on a diffuse large B-cell lymphoma cell line. In September 2015, the DREAM community launched the AstraZeneca-Sanger Drug Combination Prediction challenge [8] to gather more insight on the factors underlying drug synergy and to accelerate the development of methods to predict drug combination effects. Challenge participants had access to a drug combination screening data set provided by AstraZeneca which has now been published [8].

Other similar, but not cancer-specific, DREAM challenges can also be important sources of data that can complement the large anti-cancer drug screening data sets. For instance, the NIEHS-NCATS-UNC DREAM Toxicogenetics Challenge [87], which was aimed at predicting the cytotoxicity of compounds in humans, provides access to data that can be used to determine the toxic effects of anti-cancer drugs on patients.

4 Implementing deep learning workflows for drug response prediction

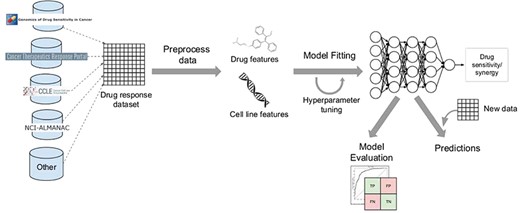

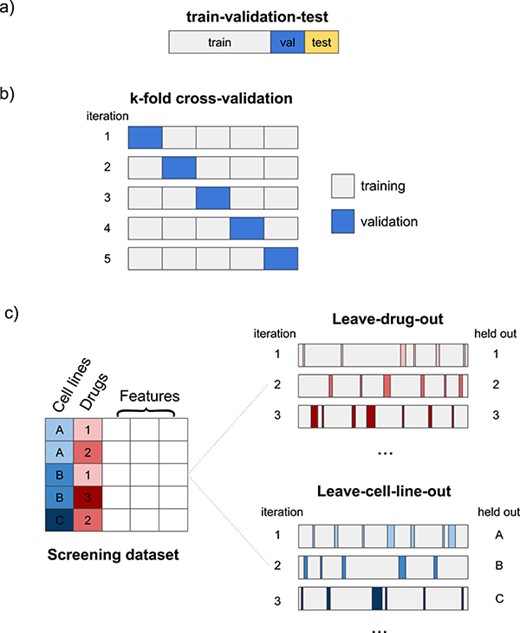

Most drug response prediction workflows follow the same general steps, which are shown in Figure 2. These include the following:

Selecting a DL framework to implement the model.

Defining the prediction problem (drug sensitivity versus drug synergy prediction).

Selecting the data set(s) used to train the model (see Tables 2 and 3 for lists of data sets for each type of problem).

Defining which data types will be used as inputs (Figure 3 provides an overview of the most common types of input data and output variables used in these models, as well as some of the most common preprocessing steps).

Deciding how multiple data types will be handled. Users must decide whether they will concatenate all features irrespective of data type or if they will use a multimodal strategy with separate feature-encoding subnetworks for each data type.

Defining the model architecture. Input data type will determine the types of DL architectures that can be used for each network, as well as the preprocessing methods that need to be applied. For example, RNN-based architectures will require input data that is sequential in nature, such as SMILES strings.

Preprocessing and selecting appropriate data representations.

Training the model and tuning hyperparameters. Hyperparameter optimization can be achieved through manual tuning, by performing a search across all or a subset of possible combinations of user-specified values or by using other optimization techniques.

Evaluating model performance. Researchers need to select appropriate scoring metrics, define how the screening data set will be split into training and validation/test sets and select any external data sets that will be used to validate the model.

Interpreting and explaining model predictions. Many of the model explanation methods mentioned in Section 8 have been implemented as Python packages and can be easily incorporated into the workflow. Other post hoc analyses of the predictions can also be performed, as described in many of the studies reviewed here.

![Input and output data types commonly used when building DL-based drug response prediction models. Compound structures are usually represented as SMILES strings in drug screening data sets. SMILES can be fed directly into DL models that learn embeddings, or they can be used to calculate molecular descriptors or molecular fingerprints. SMILES strings can also be one-hot encoded, transformed into the corresponding molecular graphs, which can then be used as input to graph convolutional networks, or converted into an image of the compound, which can be used as input to regular convolutional neural networks. Cell line input features are usually somatic mutations, copy number variations and gene expression data, although other omics data (epigenomics, proteomics etc.) can also be incorporated into the models. Somatic mutations are usually binarized (presence/absence of an alteration). Copy number variations can be summarized as binary features, as scores (e.g. G-scores [88]), or in some other form. Gene expression features are usually continuous features, which have undergone some form of normalization. Target information, when used, is usually used to derive features that reflect the pathways a particular target is associated with. The outputs of drug response prediction models are values describing the dose–response relationships. For single drugs, this is usually half maximal inhibitory concentration ($IC^{50}$), 50% growth inhibition $(GI^{50})$ or area under the dose–response curve (AUC). For drug combinations, the output variable is usually a score that quantifies drug combination effects based on a given reference model, such as the Loewe additivity [89] or Bliss independence [90] models.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/bib/22/1/10.1093_bib_bbz171/2/m_bbz171f2.jpeg?Expires=1750208846&Signature=DJ0RFKAsS0RixT-aer4Hhyn7mMH19YI~EVtRoqPXVbOV19amhLuGhgRIYq9SEqTaNVeE8~bEyMVX~rs4u~nute2wbW2nqLmciIiB0h5lUsNqO~tuN71fJu~nYRvTCUnM7cwSKSzpVCfm12CLFfs0FUeaGZ86gUOoonPgf5vXr0DchpEK8QSc5KRhKJUW2vMyO0RJ0V-qBWJ6q8rskw~2RbirVRQsdC7NCcEzzFOJEc19H5kvZ52l10e0NG-BmXBYFn-A01WRps4h0pNYcJVQMvL1ZNoHAOds-xmxrdnr~NnRJAMlIusRxH6HKncBmCXtCFQPNYv~HX0WkFH7ieROnw__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Input and output data types commonly used when building DL-based drug response prediction models. Compound structures are usually represented as SMILES strings in drug screening data sets. SMILES can be fed directly into DL models that learn embeddings, or they can be used to calculate molecular descriptors or molecular fingerprints. SMILES strings can also be one-hot encoded, transformed into the corresponding molecular graphs, which can then be used as input to graph convolutional networks, or converted into an image of the compound, which can be used as input to regular convolutional neural networks. Cell line input features are usually somatic mutations, copy number variations and gene expression data, although other omics data (epigenomics, proteomics etc.) can also be incorporated into the models. Somatic mutations are usually binarized (presence/absence of an alteration). Copy number variations can be summarized as binary features, as scores (e.g. G-scores [88]), or in some other form. Gene expression features are usually continuous features, which have undergone some form of normalization. Target information, when used, is usually used to derive features that reflect the pathways a particular target is associated with. The outputs of drug response prediction models are values describing the dose–response relationships. For single drugs, this is usually half maximal inhibitory concentration (|$IC^{50}$|), 50% growth inhibition |$(GI^{50})$| or area under the dose–response curve (AUC). For drug combinations, the output variable is usually a score that quantifies drug combination effects based on a given reference model, such as the Loewe additivity [89] or Bliss independence [90] models.

The usual steps in a DL-based drug response prediction workflow. A drug screening dataset is obtained from GDSC, CCLE or other resources. Complementary data and prior knowledge from other databases can also be collected at this stage. The data are then preprocessed according to data type. This results in a set of drug features and multiple omics features characterizing the cell lines. Afterwards, these distinct sets of features are either merged into a single training set or fed into separate encoding subnetworks. The model is then fit on the training set. Model hyperparameters can be optimized during the training stage. Different model validation techniques are used to evaluate model performance and select the best model. After training, the model can be used to predict drug response for new samples.

To illustrate these steps, we present a hypothetical drug response prediction workflow for the problem of drug sensitivity prediction. The 1st steps could be selecting the GDSC data set for model training and deciding to use gene expression data and one-hot encoded SMILES strings as input data. We could opt to use a multimodal model with a subnetwork for gene expression composed of fully connected layers and an encoding subnetwork for compounds that is a 1D CNN, both linked to a final prediction DNN. Such a network could be implemented using the Keras Python package. Hyperparameter optimization could be performed using grid search with cross-validation. After training the model, a model interpretability tool such as the shap Python package could be used to explain the model and determine feature importance. Model performance could be evaluated using the leave-drug-out and leave-cell-line out approaches, and the model could be further validated on the CCLE data set. Considering that the problem is a regression task, we would use regression-specific scoring metrics such as |$R^2$| to measure model performance.

The workflow would be very similar for a hypothetical drug synergy prediction problem. The main differences would be the screening data sets used for training and external validation, the output variable (measuring drug combination effects instead of drug sensitivity) and the model architecture would have to be adapted to allow for more than one drug. Since the hypothetical NN described in the previous example is modular and already includes a compound-encoding subnetwork, it could simply be extended by, for example, adding a subnetwork for the 2nd compound that could share weights with the 1st compound subnetwork.

5 Drug response prediction models

Until recently, DL had seldom been applied to pharmacogenomics problems such as drug response prediction, but interest in DL approaches has greatly increased in the past few years. In this section, we first introduce readers to the main steps involved in DL-based workflows for drug response prediction, and then we briefly review the DL models for drug response prediction that have been published so far. For the most part, we did not consider preprints that have not been peer-reviewed yet. Table 4 summarizes the 1st few studies that have included DL in some form in their drug response prediction workflows. Tables 5 and 6 summarize the performance scores achieved by DL-based drug response prediction models for single drugs and drug combinations, respectively, as reported by the original studies.

| Study . | Model . | Training data sets . | Input data types . | Prediction task . |

|---|---|---|---|---|

| Ding et al. [47] | Deep AEs + elastic nets/SVMs | GDSC | MUT, CNV, mRNA EXP | Drug sensitivity |

| Dr.VAE [91] | VAE + logistic regression | Cmap & CTRPv2 | mRNA EXP (before & after treatment) | Joint modeling of drug sensitivity & drug perturbation signatures |

| DeepDSC [48] | Stacked AE + DNN | CCLE & GDSC | mRNA EXP, FP | Drug sensitivity |

| DeepDR [49] | DNN with separate feature-encoding subnetworks for each data type (encoders pre-trained on patient data) | GDC & CCLE & GDSC | MUT, mRNA EXP | Drug sensitivity |

| PaccMann [44] | DL models with a gene expression encoder with an attention mechanism and a compound encoder (bRNN, SCNN, SA, CA or MCA) | GDSC | mRNA EXP, SMILES | Drug sensitivity |

| MOLI [92] | DNN with separate feature-encoding subnetworks for each data type | GDSC | MUT, CNV, mRNA EXP | Drug sensitivity |

| tCNNS [93] | 1D CNN, with separate encoders for drugs and genomic data | GDSC | MUT, CNV, SMILES | Drug sensitivity |

| KekuleScope [42] | CNN models pre-trained on ImageNet | ChEMBL | Compound images (Kekulé structures) | Drug sensitivity |

| Matlock et al. [94] | Heterogeneous ensembles that include DNNs | CCLE, GDSC, or synthetic data | mRNA EXP and/or DSCRPTR, target information | Drug sensitivity |

| ELDAP [95] | Heterogeneous ensembles that include DNNs | CCLE, GDSC, LINCS | mRNA EXP, drug activity and cell line sensitivity signatures derived from drug-induced gene expression profiles | Drug sensitivity |

| CDRScan [96] | Ensemble of 5 CNNs | COSMIC, GDSC | MUT, FP & DSCRPTR | Drug sensitivity |

| DeepSynergy [39] | DNN | GDSC & Merck Compound Screen | mRNA EXP, FP & other compound features | Drug synergy |

| Xia et al. [97] | DNN with separate feature-encoding subnetworks for each data type | NCI-ALMANAC & NCI-60 | mRNA EXP, PROT, miRNA EXP, DSCRPTR | Drug synergy |

| Chen et al. [53] | DBN | AstraZeneca-Sanger DREAM & GDSC | mRNA EXP, ontology fingerprints | Drug synergy |

| ‘DMIS new model’ [8] | DL model with four different feature-encoding modules | AstraZeneca-Sanger DREAM & GDSC | MUT, target, drug & cell line-related features | Drug synergy |

| Study . | Model . | Training data sets . | Input data types . | Prediction task . |

|---|---|---|---|---|

| Ding et al. [47] | Deep AEs + elastic nets/SVMs | GDSC | MUT, CNV, mRNA EXP | Drug sensitivity |

| Dr.VAE [91] | VAE + logistic regression | Cmap & CTRPv2 | mRNA EXP (before & after treatment) | Joint modeling of drug sensitivity & drug perturbation signatures |

| DeepDSC [48] | Stacked AE + DNN | CCLE & GDSC | mRNA EXP, FP | Drug sensitivity |

| DeepDR [49] | DNN with separate feature-encoding subnetworks for each data type (encoders pre-trained on patient data) | GDC & CCLE & GDSC | MUT, mRNA EXP | Drug sensitivity |

| PaccMann [44] | DL models with a gene expression encoder with an attention mechanism and a compound encoder (bRNN, SCNN, SA, CA or MCA) | GDSC | mRNA EXP, SMILES | Drug sensitivity |

| MOLI [92] | DNN with separate feature-encoding subnetworks for each data type | GDSC | MUT, CNV, mRNA EXP | Drug sensitivity |

| tCNNS [93] | 1D CNN, with separate encoders for drugs and genomic data | GDSC | MUT, CNV, SMILES | Drug sensitivity |

| KekuleScope [42] | CNN models pre-trained on ImageNet | ChEMBL | Compound images (Kekulé structures) | Drug sensitivity |

| Matlock et al. [94] | Heterogeneous ensembles that include DNNs | CCLE, GDSC, or synthetic data | mRNA EXP and/or DSCRPTR, target information | Drug sensitivity |

| ELDAP [95] | Heterogeneous ensembles that include DNNs | CCLE, GDSC, LINCS | mRNA EXP, drug activity and cell line sensitivity signatures derived from drug-induced gene expression profiles | Drug sensitivity |

| CDRScan [96] | Ensemble of 5 CNNs | COSMIC, GDSC | MUT, FP & DSCRPTR | Drug sensitivity |

| DeepSynergy [39] | DNN | GDSC & Merck Compound Screen | mRNA EXP, FP & other compound features | Drug synergy |

| Xia et al. [97] | DNN with separate feature-encoding subnetworks for each data type | NCI-ALMANAC & NCI-60 | mRNA EXP, PROT, miRNA EXP, DSCRPTR | Drug synergy |

| Chen et al. [53] | DBN | AstraZeneca-Sanger DREAM & GDSC | mRNA EXP, ontology fingerprints | Drug synergy |

| ‘DMIS new model’ [8] | DL model with four different feature-encoding modules | AstraZeneca-Sanger DREAM & GDSC | MUT, target, drug & cell line-related features | Drug synergy |

MUT, mutation data; CNV, copy number variation data; mRNA EXP, mRNA expression data; miRNA EXP, microRNA expression data; PROT, proteomics data; SMILES, Simplified Molecular-Input Line-Entry System strings; FP, molecular fingerprints; DSCRPTR, molecular descriptors; bRNN, bidirectional recurrent neural network; SCNN, stacked convolutional neural network; SA, self-attention; CA, contextual attention; MCA, multichannel convolutional attentive

| Study . | Model . | Training data sets . | Input data types . | Prediction task . |

|---|---|---|---|---|

| Ding et al. [47] | Deep AEs + elastic nets/SVMs | GDSC | MUT, CNV, mRNA EXP | Drug sensitivity |

| Dr.VAE [91] | VAE + logistic regression | Cmap & CTRPv2 | mRNA EXP (before & after treatment) | Joint modeling of drug sensitivity & drug perturbation signatures |

| DeepDSC [48] | Stacked AE + DNN | CCLE & GDSC | mRNA EXP, FP | Drug sensitivity |

| DeepDR [49] | DNN with separate feature-encoding subnetworks for each data type (encoders pre-trained on patient data) | GDC & CCLE & GDSC | MUT, mRNA EXP | Drug sensitivity |

| PaccMann [44] | DL models with a gene expression encoder with an attention mechanism and a compound encoder (bRNN, SCNN, SA, CA or MCA) | GDSC | mRNA EXP, SMILES | Drug sensitivity |

| MOLI [92] | DNN with separate feature-encoding subnetworks for each data type | GDSC | MUT, CNV, mRNA EXP | Drug sensitivity |

| tCNNS [93] | 1D CNN, with separate encoders for drugs and genomic data | GDSC | MUT, CNV, SMILES | Drug sensitivity |

| KekuleScope [42] | CNN models pre-trained on ImageNet | ChEMBL | Compound images (Kekulé structures) | Drug sensitivity |

| Matlock et al. [94] | Heterogeneous ensembles that include DNNs | CCLE, GDSC, or synthetic data | mRNA EXP and/or DSCRPTR, target information | Drug sensitivity |

| ELDAP [95] | Heterogeneous ensembles that include DNNs | CCLE, GDSC, LINCS | mRNA EXP, drug activity and cell line sensitivity signatures derived from drug-induced gene expression profiles | Drug sensitivity |

| CDRScan [96] | Ensemble of 5 CNNs | COSMIC, GDSC | MUT, FP & DSCRPTR | Drug sensitivity |

| DeepSynergy [39] | DNN | GDSC & Merck Compound Screen | mRNA EXP, FP & other compound features | Drug synergy |

| Xia et al. [97] | DNN with separate feature-encoding subnetworks for each data type | NCI-ALMANAC & NCI-60 | mRNA EXP, PROT, miRNA EXP, DSCRPTR | Drug synergy |

| Chen et al. [53] | DBN | AstraZeneca-Sanger DREAM & GDSC | mRNA EXP, ontology fingerprints | Drug synergy |

| ‘DMIS new model’ [8] | DL model with four different feature-encoding modules | AstraZeneca-Sanger DREAM & GDSC | MUT, target, drug & cell line-related features | Drug synergy |

| Study . | Model . | Training data sets . | Input data types . | Prediction task . |

|---|---|---|---|---|

| Ding et al. [47] | Deep AEs + elastic nets/SVMs | GDSC | MUT, CNV, mRNA EXP | Drug sensitivity |

| Dr.VAE [91] | VAE + logistic regression | Cmap & CTRPv2 | mRNA EXP (before & after treatment) | Joint modeling of drug sensitivity & drug perturbation signatures |

| DeepDSC [48] | Stacked AE + DNN | CCLE & GDSC | mRNA EXP, FP | Drug sensitivity |

| DeepDR [49] | DNN with separate feature-encoding subnetworks for each data type (encoders pre-trained on patient data) | GDC & CCLE & GDSC | MUT, mRNA EXP | Drug sensitivity |

| PaccMann [44] | DL models with a gene expression encoder with an attention mechanism and a compound encoder (bRNN, SCNN, SA, CA or MCA) | GDSC | mRNA EXP, SMILES | Drug sensitivity |

| MOLI [92] | DNN with separate feature-encoding subnetworks for each data type | GDSC | MUT, CNV, mRNA EXP | Drug sensitivity |

| tCNNS [93] | 1D CNN, with separate encoders for drugs and genomic data | GDSC | MUT, CNV, SMILES | Drug sensitivity |

| KekuleScope [42] | CNN models pre-trained on ImageNet | ChEMBL | Compound images (Kekulé structures) | Drug sensitivity |

| Matlock et al. [94] | Heterogeneous ensembles that include DNNs | CCLE, GDSC, or synthetic data | mRNA EXP and/or DSCRPTR, target information | Drug sensitivity |

| ELDAP [95] | Heterogeneous ensembles that include DNNs | CCLE, GDSC, LINCS | mRNA EXP, drug activity and cell line sensitivity signatures derived from drug-induced gene expression profiles | Drug sensitivity |

| CDRScan [96] | Ensemble of 5 CNNs | COSMIC, GDSC | MUT, FP & DSCRPTR | Drug sensitivity |

| DeepSynergy [39] | DNN | GDSC & Merck Compound Screen | mRNA EXP, FP & other compound features | Drug synergy |

| Xia et al. [97] | DNN with separate feature-encoding subnetworks for each data type | NCI-ALMANAC & NCI-60 | mRNA EXP, PROT, miRNA EXP, DSCRPTR | Drug synergy |

| Chen et al. [53] | DBN | AstraZeneca-Sanger DREAM & GDSC | mRNA EXP, ontology fingerprints | Drug synergy |

| ‘DMIS new model’ [8] | DL model with four different feature-encoding modules | AstraZeneca-Sanger DREAM & GDSC | MUT, target, drug & cell line-related features | Drug synergy |

MUT, mutation data; CNV, copy number variation data; mRNA EXP, mRNA expression data; miRNA EXP, microRNA expression data; PROT, proteomics data; SMILES, Simplified Molecular-Input Line-Entry System strings; FP, molecular fingerprints; DSCRPTR, molecular descriptors; bRNN, bidirectional recurrent neural network; SCNN, stacked convolutional neural network; SA, self-attention; CA, contextual attention; MCA, multichannel convolutional attentive

Performance scores for some of the drug response prediction models for single compounds referred to in this review

| Model . | Screening data set . | Validation scheme . | MSE . | RMSE . | |$R^2$| . | r . | |$\textit{r}_s$| . | AUROC . | AUPRC . | TPR . | TNR . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Ding et al. [47] (per-drug results) | GDSC | 25-fold CV | 1.96 | 0.82 | 0.82 | ||||||

| Ding et al. [47] (per-cell line results) | GDSC | 25-fold CV | 0.80 | 0.82 | |||||||

| Ding et al. [47] | CCLE | External test set | 0.67 | ||||||||

| Dr. VAE (per-drug models) | CTRPv2 | 100 train-validation-test splits (20 x 5-fold CV) | 0.706 | 0.718 | |||||||

| DeepDSC [48] | CCLE | 10-fold CV | 0.23 | 0.78 | |||||||

| DeepDSC [48] | GDSC | 10-fold CV | 0.52 | 0.78 | |||||||

| DeepDSC [48] | CCLE | Leave-one-tissue-out | 0.28 | 0.73 | |||||||

| DeepDSC [48] | GDSC | Leave-one-tissue-out | 0.64 | 0.66 | |||||||

| DeepDSC [48] | CCLE | Leave-one-drug-out | 0.61 | 0.05 | |||||||

| DeepDSC [48] | GDSC | Leave-one-drug-out | 1.24 | 0.04 | |||||||

| DeepDR [49] | GDSC | 100 train-validation-test splits | 1.96 (median) | ||||||||

| PaccMann [44] (bRNN) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.118 | ||||||||

| PaccMann [44] (SCNN) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.133 | ||||||||

| PaccMann [44] (SA) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.112 | ||||||||

| PaccMann [44] (CA) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.110 | ||||||||

| PaccMann [44] (MCA) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.120 | ||||||||

| tCNNS [93] | GDSC | 50 train-validation-test splits (80%/10%/10%) (different interaction pairs in each set) | 0.027 | 0.826 | 0.909 | ||||||

| tCNNS [93] | GDSC | Leave-one-tissue-out | 0.039 | 0.665 | 0.818 | ||||||

| CDRScan [96] | GDSC | train-test split (95%/5%) | 1.069 | 0.843 | 0.98 | ||||||

| CDRScan [96] | GDSC | 5-fold CV | 0.847 |

| Model . | Screening data set . | Validation scheme . | MSE . | RMSE . | |$R^2$| . | r . | |$\textit{r}_s$| . | AUROC . | AUPRC . | TPR . | TNR . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Ding et al. [47] (per-drug results) | GDSC | 25-fold CV | 1.96 | 0.82 | 0.82 | ||||||

| Ding et al. [47] (per-cell line results) | GDSC | 25-fold CV | 0.80 | 0.82 | |||||||

| Ding et al. [47] | CCLE | External test set | 0.67 | ||||||||

| Dr. VAE (per-drug models) | CTRPv2 | 100 train-validation-test splits (20 x 5-fold CV) | 0.706 | 0.718 | |||||||

| DeepDSC [48] | CCLE | 10-fold CV | 0.23 | 0.78 | |||||||

| DeepDSC [48] | GDSC | 10-fold CV | 0.52 | 0.78 | |||||||

| DeepDSC [48] | CCLE | Leave-one-tissue-out | 0.28 | 0.73 | |||||||

| DeepDSC [48] | GDSC | Leave-one-tissue-out | 0.64 | 0.66 | |||||||

| DeepDSC [48] | CCLE | Leave-one-drug-out | 0.61 | 0.05 | |||||||

| DeepDSC [48] | GDSC | Leave-one-drug-out | 1.24 | 0.04 | |||||||

| DeepDR [49] | GDSC | 100 train-validation-test splits | 1.96 (median) | ||||||||

| PaccMann [44] (bRNN) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.118 | ||||||||

| PaccMann [44] (SCNN) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.133 | ||||||||

| PaccMann [44] (SA) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.112 | ||||||||

| PaccMann [44] (CA) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.110 | ||||||||

| PaccMann [44] (MCA) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.120 | ||||||||

| tCNNS [93] | GDSC | 50 train-validation-test splits (80%/10%/10%) (different interaction pairs in each set) | 0.027 | 0.826 | 0.909 | ||||||

| tCNNS [93] | GDSC | Leave-one-tissue-out | 0.039 | 0.665 | 0.818 | ||||||

| CDRScan [96] | GDSC | train-test split (95%/5%) | 1.069 | 0.843 | 0.98 | ||||||

| CDRScan [96] | GDSC | 5-fold CV | 0.847 |

MSE, mean squared error; RMSE, root mean squared error; MAE, mean absolute error; |$R^2$|, coefficient of determination; r, Pearson correlation coefficient; |$\textit{r}_s$|, Spearman’s rank correlation coefficient; AUROC, area under the receiver operating characteristic curve; AUPRC, area under the precision-recall curve; TPR, true positive rate/recall/sensitivity; TNR, true negative rate/specificity; CV, cross-validation; bRNN, bidirectional recurrent neural network; SCNN, stacked convolutional neural network; SA, self-attention; CA, contextual attention; MCA, multichannel convolutional attentive

Performance scores for some of the drug response prediction models for single compounds referred to in this review

| Model . | Screening data set . | Validation scheme . | MSE . | RMSE . | |$R^2$| . | r . | |$\textit{r}_s$| . | AUROC . | AUPRC . | TPR . | TNR . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Ding et al. [47] (per-drug results) | GDSC | 25-fold CV | 1.96 | 0.82 | 0.82 | ||||||

| Ding et al. [47] (per-cell line results) | GDSC | 25-fold CV | 0.80 | 0.82 | |||||||

| Ding et al. [47] | CCLE | External test set | 0.67 | ||||||||

| Dr. VAE (per-drug models) | CTRPv2 | 100 train-validation-test splits (20 x 5-fold CV) | 0.706 | 0.718 | |||||||

| DeepDSC [48] | CCLE | 10-fold CV | 0.23 | 0.78 | |||||||

| DeepDSC [48] | GDSC | 10-fold CV | 0.52 | 0.78 | |||||||

| DeepDSC [48] | CCLE | Leave-one-tissue-out | 0.28 | 0.73 | |||||||

| DeepDSC [48] | GDSC | Leave-one-tissue-out | 0.64 | 0.66 | |||||||

| DeepDSC [48] | CCLE | Leave-one-drug-out | 0.61 | 0.05 | |||||||

| DeepDSC [48] | GDSC | Leave-one-drug-out | 1.24 | 0.04 | |||||||

| DeepDR [49] | GDSC | 100 train-validation-test splits | 1.96 (median) | ||||||||

| PaccMann [44] (bRNN) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.118 | ||||||||

| PaccMann [44] (SCNN) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.133 | ||||||||

| PaccMann [44] (SA) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.112 | ||||||||

| PaccMann [44] (CA) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.110 | ||||||||

| PaccMann [44] (MCA) | GDSC | 25-fold CV (only different cell lines & drugs in test fold) | 0.120 | ||||||||

| tCNNS [93] | GDSC | 50 train-validation-test splits (80%/10%/10%) (different interaction pairs in each set) | 0.027 | 0.826 | 0.909 | ||||||

| tCNNS [93] | GDSC | Leave-one-tissue-out | 0.039 | 0.665 | 0.818 | ||||||

| CDRScan [96] | GDSC | train-test split (95%/5%) | 1.069 | 0.843 | 0.98 | ||||||

| CDRScan [96] | GDSC | 5-fold CV | 0.847 |

| Model . | Screening data set . | Validation scheme . | MSE . | RMSE . | |$R^2$| . | r . | |$\textit{r}_s$| . | AUROC . | AUPRC . | TPR . | TNR . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Ding et al. [47] (per-drug results) | GDSC | 25-fold CV | 1.96 | 0.82 | 0.82 | ||||||

| Ding et al. [47] (per-cell line results) | GDSC | 25-fold CV | 0.80 | 0.82 | |||||||

| Ding et al. [47] | CCLE | External test set | 0.67 | ||||||||

| Dr. VAE (per-drug models) | CTRPv2 | 100 train-validation-test splits (20 x 5-fold CV) | 0.706 | 0.718 | |||||||

| DeepDSC [48] | CCLE | 10-fold CV | 0.23 | 0.78 | |||||||

| DeepDSC [48] | GDSC | 10-fold CV | 0.52 | 0.78 | |||||||

| DeepDSC [48] | CCLE | Leave-one-tissue-out | 0.28 | 0.73 | |||||||

| DeepDSC [48] | GDSC | Leave-one-tissue-out | 0.64 | 0.66 | |||||||

| DeepDSC [48] | CCLE | Leave-one-drug-out | 0.61 | 0.05 | |||||||

| DeepDSC [48] | GDSC | Leave-one-drug-out | 1.24 | 0.04 | |||||||

| DeepDR [49] | GDSC | 100 train-validation-test splits | 1.96 (median) | ||||||||