Elizabeth W Diemer, Sonja A Swanson, Diemer and Swanson Reply to “Considerations Before Using Pandemic as Instrument”, American Journal of Epidemiology, Volume 190, Issue 11, November 2021, Pages 2280–2283, https://doi.org/10.1093/aje/kwab175


Abstract

Dimitris and Platt (Am J Epidemiol. 2021;190(11):2275-2279) take on the challenging topic of using “shocks” such as the severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) pandemic as instrumental variables to study the effect of some exposure on some outcome. Evoking our recent lived experiences, they conclude that the assumptions necessary for an instrumental variable analysis will often be violated and therefore strongly caution against such analyses. Here, we build upon this warranted caution while acknowledging that such analyses will still be pursued and conducted. We discuss strategies for evaluating or reasoning about when such an analysis is clearly inappropriate for a given research question, as well as strategies for interpreting study findings with special attention to incorporating plausible sources of bias in any conclusions drawn from a given finding.

Editor’s note: The opinions expressed in this article are those of the authors and do not necessarily reflect the views of the American Journal of Epidemiology.

Dimitris and Platt (1) take on the challenging topic of using “shocks” such as the severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) pandemic as instrumental variables to study the effect of some exposure on some outcome. Using thoughtful examples, they generally conclude that the assumptions necessary for an instrumental variable analysis will often be violated and therefore strongly caution against such analyses. The primary, but not only, assumption they take issue with is the exclusion restriction. In essence, they remind us that an instrumental variable analysis of, for example, the effects of remote working on various health outcomes would require blinding ourselves to the many other changes in 2020 that also affected health.

We tend to be as cautious as these authors (2), but we are also realists who acknowledge that such analyses will be pursued or done regardless. Looking forward, this raises some key questions. First, how can we as a research community encourage researchers to use instrumental variable methods when they are potentially appropriate for a particular research question, and likewise discourage the methods’ misuse when clearly inappropriate? Second, knowing that a potentially large number of analyses proposing the SARS-CoV-2 pandemic as an instrument are coming, how can we make sense of the information or added value from such studies? After all, instrumental variable analyses are not the only causal inference approach vulnerable to bias, and there are plenty of examples of potentially intractable biases in the published epidemiologic literature. Indeed, the reason that researchers might be eager to leverage a “shock” such as the SARS-CoV-2 pandemic to estimate the causal effects of socioeconomic exposures is that such exposures are difficult to measure accurately, and other observational studies are likely to be affected by substantial unmeasured confounding (3–5). The goal for our research community is not to completely eliminate the possibility of biased studies (an impossible task), but to minimize bias to the greatest extent possible, and to interpret estimated effects with special attention to how plausible sources of bias might affect a given finding.
In the case of studies proposing shocks such as the SARS-CoV-2 pandemic as instruments, the answer then might not necessarily be a message of abstinence (“don’t do this analysis!”) but rather one of harm reduction, focusing on how to empower the entire research community (including researchers, reviewers, editors, and readers) with strategies to scrutinize the underlying assumptions, tools to interpret findings given plausible violations of the assumptions, and a road map for understanding what circumstances do provide useful or useable information.

MAKING SENSE OF AN ESTIMATED EFFECT OF THE PROPOSED INSTRUMENT

First, as with some (but not all) instrumental variable analyses, it is helpful to step back and ask whether the effect of the proposed instrument is itself of interest. In this case, we indeed might want to estimate temporal changes around the pandemic or aspects of the pandemic response. For example, such estimates could be informative for preparing for future epidemics, natural or manmade disasters, or other “shocks” (6–8). Note that interpreting any temporal change as the effect of the pandemic or pandemic response requires no other strong time trends, and the underlying assumptions for inferring that any estimates are transportable to future “shocks” would need to be carefully weighed.

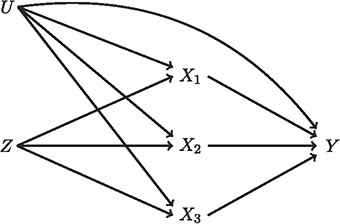

Figure 1. Causal directed acyclic graph showing a variable Z with an effect on Y that is mediated through 3 separate mechanisms (X1, X2, and X3) that share unmeasured causes U with Y. Investigators might interpret a non-null association between Z and Y as evidence that X1, X2, and/or X3 affect Y, although this interpretation presumes that this assumed causal structure is correct (e.g., no other pathways exist from Z to Y) and that faithfulness holds.

Some authors also suggest this effect might be informative for hypothesis generation around particular mechanisms. For example, we note that changes in premature birth were observed in early 2020 in multiple settings (9–11). Intuitively, this time trend alone suggests that one of the substantial changes in pregnant persons’ experiences in 2020 might be a major risk factor for premature birth (e.g., changes in type and magnitude of personal stressors, changes in exposure to other viruses, changes in air pollution). We note that critically evaluating the evidence from such hypothesis generation is dependent on context and itself requires assumptions. Take, for example, the causal diagram shown in Figure 1, which illustrates a setting in which the relationship between pregnancy before versus during the SARS-CoV-2 pandemic (Z) and preterm birth (Y) is mediated by exactly 3 factors: maternal SARS-CoV-2 infection (X1), maternal physical labor outside the home (X2), and air pollution (X3), each of which shares unmeasured causes with preterm birth. Under this causal diagram and faithfulness, one could use the association between the SARS-CoV-2 pandemic and preterm birth to test a sharp joint causal null hypothesis that maternal SARS-CoV-2 infection, maternal physical labor, and air pollution have no effect on preterm birth. Interpreting the non-null association between the SARS-CoV-2 pandemic and preterm birth as evidence against this null hypothesis requires believing the assumptions underlying this causal diagram, namely that those 3 exposures are the only mediators. Moreover, this conclusion also requires faithfulness, as it is theoretically possible that the mediators’ effects balance each other out.
While perfect balancing is generally considered unlikely to occur, it is worth noting that proposed mechanisms by which the SARS-CoV-2 pandemic might have affected preterm birth include both factors believed to increase risk of preterm birth (e.g., SARS-CoV-2 infection, increased maternal stress) and factors believed to decrease risk of preterm birth (e.g., reduced physical labor outside the home, reduced air pollution exposure, reduced risk of maternal viral infections other than SARS-CoV-2) (12). Given that such hypothesis generation relies on specifying the causal structure to some degree, we caution that the information gleaned from this approach is likely limited except in settings for which the set of potential pathways is reasonably finite.
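
To make the faithfulness caveat concrete, the following is a minimal sketch under assumptions that are not part of the original discussion: a linear structural model without interactions, in which the 3 mediators do not affect one another, so that the total effect of Z on Y is the sum of the products of the path coefficients. All coefficient values and dictionary names are hypothetical and were chosen only so that the opposing pathways cancel; they are not estimates of any real effect.

```python
# Hypothetical path coefficients for a linear model with no interactions.
# These numbers are illustrative assumptions, not estimates from any study.
# Z_TO_X[k]: change in mediator k caused by the pandemic period (Z).
# X_TO_Y[k]: change in preterm birth risk (Y) per unit change in mediator k.
Z_TO_X = {"X1_infection": 0.30, "X2_physical_labor": -0.50, "X3_air_pollution": -0.25}
X_TO_Y = {"X1_infection": 0.10, "X2_physical_labor": 0.04, "X3_air_pollution": 0.04}


def indirect_effects(z_to_x, x_to_y):
    """Effect of Z on Y carried by each mediator (product of path coefficients)."""
    return {name: z_to_x[name] * x_to_y[name] for name in z_to_x}


def total_effect(z_to_x, x_to_y):
    """Total effect of Z on Y when the mediators are the only pathways."""
    return sum(indirect_effects(z_to_x, x_to_y).values())


if __name__ == "__main__":
    for name, value in indirect_effects(Z_TO_X, X_TO_Y).items():
        print(f"{name}: {value:+.3f}")
    print(f"total effect of Z on Y: {round(total_effect(Z_TO_X, X_TO_Y), 6)}")
```

In this constructed example every mediator affects Y, so the sharp joint null is false, yet the pathways sum to approximately zero and Z would show no association with Y. A null association therefore would not support the joint null without faithfulness.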

MAKING SENSE OF AN ESTIMATED EFFECT OF AN EXPOSURE, PROPOSING THE PANDEMIC AS AN INSTRUMENTAL VARIABLE

Dimitris and Platt make a compelling case for why many exposure-outcome pairings would be prone to exclusion restriction violations. Because seemingly minor violations of the assumptions underlying instrumental variable analyses can lead to large and counterintuitive biases, being wary of any of these plausible violations should be the default (2). Of course, evaluation of assumption plausibility needs to happen on a case-by-case basis. Perhaps there will be some settings in which, although the magnitude of bias is unknowable, the direction of bias can be inferred. Perhaps there are settings in which the magnitude of bias can be reasonably believed to be limited. Although bringing subject matter expertise to instrumental variable analyses often focuses on the exposure, the outcome is relevant here, too. For example, the exclusion restriction is violated in an overwhelming number of ways if the goal is to estimate the effect of particular social distancing measures on risk of major depressive episodes; yet perhaps we can begin to imagine estimating the effect of social distancing on influenza hospitalizations.
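
The claim that minor violations can produce large biases can be illustrated with a short sketch. It assumes a simple linear model that is not specified in the text: X = alpha·Z + (other causes) and Y = beta·X + delta·Z + (other causes), with Z independent of the unmeasured causes. Under those assumptions the large-sample ratio (Wald) estimand is (alpha·beta + delta)/alpha, so the bias from a direct effect delta of the proposed instrument is delta/alpha. The function names and numeric values are hypothetical.

```python
def wald_estimand(alpha, beta, delta):
    """Large-sample Wald (ratio) estimand under the assumed linear model.

    alpha: effect of the proposed instrument Z on the exposure X
    beta:  true effect of X on the outcome Y
    delta: direct effect of Z on Y (an exclusion restriction violation)
    """
    if alpha == 0:
        raise ValueError("The proposed instrument must be associated with the exposure.")
    return (alpha * beta + delta) / alpha


def exclusion_bias(alpha, delta):
    """Bias of the Wald estimand relative to beta: the violation scaled by 1/alpha."""
    if alpha == 0:
        raise ValueError("The proposed instrument must be associated with the exposure.")
    return delta / alpha


if __name__ == "__main__":
    beta = 0.5  # hypothetical true effect
    for alpha, delta in [(0.5, 0.05), (0.1, 0.05), (0.1, -0.10)]:
        est = wald_estimand(alpha, beta, delta)
        print(f"alpha={alpha}, delta={delta}: estimand={est:.2f}, "
              f"bias={exclusion_bias(alpha, delta):+.2f}")
```

With a weak proposed instrument (alpha = 0.1), a direct effect one tenth the size of the true effect doubles the estimand, and a modest negative direct effect reverses its sign. This is one way in which the bias can be both large and counterintuitive.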

In addition to reasoning about causal questions of interest using substantive expertise, there are a number of falsification tools available to researchers considering an instrumental variable analysis (13, 14). The instrumental variable model implies a set of constraints, known as the instrumental inequalities, that can be used to falsify (but not verify) a particular instrumental variable model (15–18). An advantage of routinely applying these inequalities to all applicable instrumental variable analyses is that it does not require additional subject matter knowledge or parametric assumptions. Moreover, under the assumption that there are no unmeasured shared causes of the proposed instrument and outcome, the instrumental inequalities can also be interpreted as bounds on the controlled direct effect of the proposed instrument on the outcome, setting the index exposure to a specific level (19). Although these bounds do not give evidence as to the specific mechanism through which such a shock might affect the outcome, they do provide exploratory evidence about the presence and potential magnitudes of other pathways by which the SARS-CoV-2 pandemic might affect outcomes of interest and could complement some of the “hypothesis generation” analyses discussed earlier.
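
As a hedged illustration, the sketch below checks one commonly stated form of the instrumental inequalities for discrete variables: for every exposure level x, the sum over outcome levels y of the maximum over instrument levels z of P(X = x, Y = y | Z = z) must not exceed 1. The cited references may present the constraints in other, equivalent or sharper, forms. The function name, data layout, and example probabilities are hypothetical, and the check ignores sampling variability, which a real application would need to address.

```python
def instrumental_inequality_violations(p, tol=1e-12):
    """Return the exposure levels at which the instrumental inequality fails.

    p[z][x][y] holds P(X = x, Y = y | Z = z), so each p[z] sums to 1.
    A non-empty result falsifies the instrumental variable model;
    an empty result does not verify it.
    """
    n_z, n_x, n_y = len(p), len(p[0]), len(p[0][0])
    violations = []
    for x in range(n_x):
        # Sum over y of the largest conditional joint probability across z.
        total = sum(max(p[z][x][y] for z in range(n_z)) for y in range(n_y))
        if total > 1 + tol:
            violations.append((x, total))
    return violations


# Hypothetical distributions for binary Z, X, and Y.
P_COMPATIBLE = [
    [[0.5, 0.2], [0.2, 0.1]],  # Z = 0
    [[0.2, 0.1], [0.3, 0.4]],  # Z = 1
]
P_VIOLATING = [
    [[0.9, 0.0], [0.0, 0.1]],  # Z = 0: mostly X = 0, Y = 0
    [[0.0, 0.9], [0.0, 0.1]],  # Z = 1: mostly X = 0, Y = 1
]

if __name__ == "__main__":
    print(instrumental_inequality_violations(P_COMPATIBLE))
    print(instrumental_inequality_violations(P_VIOLATING))
```

In the violating example the exposure stays at the same level under both values of Z while the outcome changes, which cannot happen if Z affects Y only through X.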

There are additional falsification strategies that can help rule out invalid instrumental variable analyses, although the applicability of each requires additional substantive information that might be context-specific. Though we cannot squeeze an exhaustive list into this article (see, for example, Labrecque and Swanson (13) and Glymour et al. (14)), let us consider one example here. If there is a known subgroup of the population in which the proposed instrument is not associated with the exposure, any association between the proposed instrument and outcome in that subgroup must be due to bias (20, 21). As such, to study the effects of school closures on obesity, we might falsify our instrumental variable assumptions if we see a change in obesity rates among children who were continually home-schooled before and during or after the pandemic. Importantly, this approach also requires that conditioning on such a subgroup does not introduce a selection bias (22).
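
A minimal sketch of this subgroup check follows, assuming a binary outcome and a simple before-versus-after comparison within the subgroup. The function name, the Wald-type 95% confidence interval, and the counts are all hypothetical choices for illustration; they are not drawn from any study, and other estimators could be used.

```python
import math


def risk_difference_ci(events_pre, n_pre, events_post, n_post, z_crit=1.96):
    """Risk difference (post minus pre) with a Wald-type confidence interval."""
    p_pre = events_pre / n_pre
    p_post = events_post / n_post
    rd = p_post - p_pre
    se = math.sqrt(p_pre * (1 - p_pre) / n_pre + p_post * (1 - p_post) / n_post)
    return rd, rd - z_crit * se, rd + z_crit * se


if __name__ == "__main__":
    # Hypothetical counts of obesity among continually home-schooled children,
    # a subgroup in which school closures should not have changed the exposure.
    rd, lower, upper = risk_difference_ci(
        events_pre=150, n_pre=1000, events_post=210, n_post=1000
    )
    print(f"risk difference = {rd:.3f} (95% CI {lower:.3f}, {upper:.3f})")
    if lower > 0 or upper < 0:
        print("Association in the subgroup: evidence against the IV assumptions.")
    else:
        print("No clear association in the subgroup: assumptions not falsified.")
```

An interval that includes zero does not verify the assumptions; it only means this particular check did not falsify them, and the check itself presumes that restricting to the subgroup introduces no selection bias.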

Beyond these falsification strategies, there is an arsenal of sensitivity and bias analyses that can be conducted to understand the range of effect sizes consistent with plausible biases, contextualize the magnitude of bias that would need to exist to alter conclusions, or compare the relative bias to non–instrumental variable analyses (13, 14, 17). Some of these types of bias analysis can be done post hoc on published aggregate results, including the use of E-values in instrumental variable settings (23) and some approaches to quantifying bias due to departures from the no-“defiers” assumption (24).
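
As an example of a post hoc calculation on aggregate results, the sketch below computes the general E-value for a risk ratio, RR + sqrt(RR × (RR − 1)) for RR ≥ 1, with risk ratios below 1 inverted first. This is the standard formula for a point estimate; which association it should be applied to in an instrumental variable setting, and how the result should be interpreted there, is the subject of reference 23 and is not reproduced here. The function name and the input values are hypothetical.

```python
import math


def e_value(risk_ratio):
    """General E-value for a risk ratio point estimate.

    Risk ratios below 1 are inverted first so the formula applies symmetrically.
    """
    if risk_ratio <= 0:
        raise ValueError("A risk ratio must be positive.")
    rr = risk_ratio if risk_ratio >= 1 else 1 / risk_ratio
    return rr + math.sqrt(rr * (rr - 1))


if __name__ == "__main__":
    for rr in (1.0, 1.5, 2.0, 0.5):  # hypothetical published risk ratios
        print(f"RR = {rr}: E-value = {e_value(rr):.2f}")
```

A risk ratio of 1 gives an E-value of 1, and protective and harmful estimates of the same relative size (for example, 0.5 and 2.0) give the same E-value.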

ON LIVED EXPERIENCES INFORMING JUDICIOUS ANALYSES

Dimitris and Platt end their comments by imploring epidemiologists to use our collective lived experience to apply instrumental variable analyses more judiciously. Let us echo and underscore this sentiment as applicable to all types of causal effect estimation. While epidemiologists spend a lot of energy on unmeasured confounding, this pandemic is a vivid reminder that interference and heterogeneity are features of our lives. The magnitude and direction of the effect of childcare closures on a young child’s well-being are inevitably tied to aspects of and circumstances surrounding their identity, family, community, and country. Moreover, that child’s well-being is not affected just by whether their childcare closed but also by the extent to which other children stayed home both nearby and halfway across the globe. Although epidemiologists will continue to use our many methods that require homogeneity or no-interference assumptions, let us remember that these oft-unspoken assumptions are implausible too. Overall, then, our best path forward is to carry our own lived experiences, and the experiences of other members of our communities and the communities we serve, into our research both when applying existing causal inference techniques and when pursuing new avenues of methods development.

ACKNOWLEDGMENTS

Author affiliations: Department of Epidemiology, Harvard T. H. Chan School of Public Health, Boston, Massachusetts, United States (Elizabeth W. Diemer, Sonja A. Swanson); and Department of Epidemiology, Erasmus Medical Center, Rotterdam, The Netherlands (Sonja A. Swanson).

Conflicts of interest: none declared.