-

PDF

- Split View

-

Views

-

Cite

Cite

Richard Murphy, Felix Weinhardt, Top of the Class: The Importance of Ordinal Rank, The Review of Economic Studies, Volume 87, Issue 6, November 2020, Pages 2777–2826, https://doi.org/10.1093/restud/rdaa020

Close - Share Icon Share

Abstract

This article establishes a new fact about educational production: ordinal academic rank during primary school has lasting impacts on secondary school achievement that are independent of underlying ability. Using data on the universe of English school students, we exploit naturally occurring differences in achievement distributions across primary school classes to estimate the impact of class rank. We find large effects on test scores, confidence, and subject choice during secondary school, even though these students have a new set of peers and teachers who are unaware of the students’ prior ranking in primary school. The effects are especially pronounced for boys, contributing to an observed gender gap in the number of Maths courses chosen at the end of secondary school. Using a basic model of student effort allocation across subjects, we distinguish between learning and non-cognitive skills mechanisms, finding support for the latter.

1. Introduction

Education is an important determinant of welfare, both individually and nationally. As a result, there exists an encyclopaedic body of literature examining educational choices and production. Yet the lasting impacts of a student’s ordinal rank within their class, conditional on achievement, have not been considered. Why might rank matter? It is human nature to make social comparisons in terms of characteristics, traits, and abilities (Festinger, 1954), and people often use cognitive shortcuts when doing so (Tversky and Kahneman, 1974). One such heuristic is to use simple ordinal rank information.

Recent papers have shown that an individual’s rank impacts their well-being and job satisfaction, conditional on their cardinal relative position (Brown et al., 2008; Card et al., 2012). In other words, people are influenced not only by their position relative to their peers but also by their ordinal ranking amongst them. This influence may ultimately impact beliefs and outcomes through its effects on an individual’s actions and investment decisions, or those of others around them.

We apply this idea to education and present the first empirical evidence that a student’s academic rank during primary school has a lasting impact on their secondary school performance. Our analysis proposes a novel approach for isolating rank effects by exploiting idiosyncratic variation in the test score distributions across primary school classes. This variation occurs because classes are small and students vary in ability. As a result, students with the same test scores may have different ranks depending upon which class they attend.

A key concern is that test scores are not comparable across classes. Classes with better resources may be able to generate higher test scores, and we account for this by including class fixed effects. We estimate rank effects based on the remaining variation in peer achievement distributions across classes. This means we are now effectively comparing students in different classes who have the same test score relative to their class mean, but different rankings due to the test score distributions of their classes. We discuss in detail the variation and assumptions required to estimate rank effects in Section 2.

We use administrative data on five cohorts of the entire English state school population covering almost 2.3 million students attending 14,500 different primary schools. All students are tested in national exams in three subjects (English, Maths, and Science) at the end of primary school at age 11 and twice more during secondary school at ages 14 and 16. We calculate the rank of each student in every primary school in each subject. As the median primary school has only 27 students per cohort and the legal maximum primary class size is 30, we consider these school-subject-cohort (SSC) groupings as classes. Therefore, when we refer to “classes,” we are referring to SSCs (e.g. a maths class in school A in cohort 1).

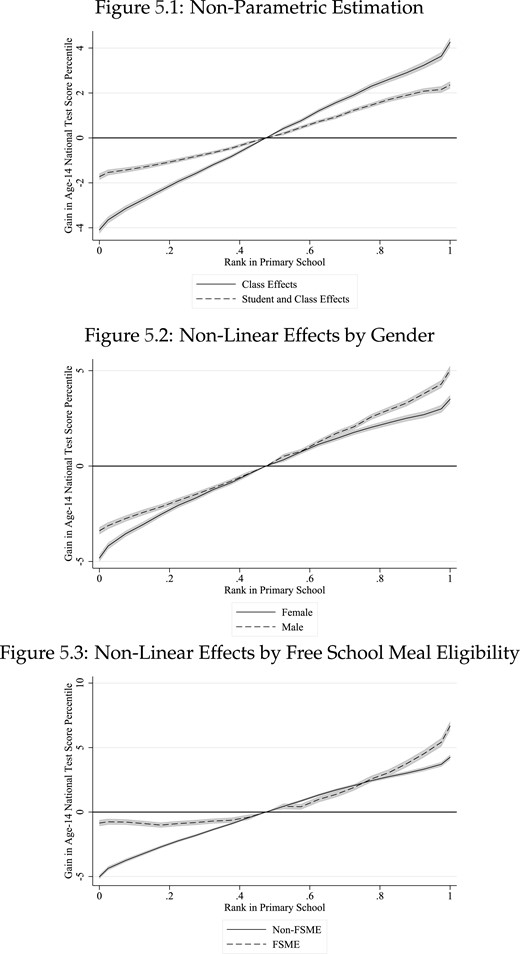

Our main finding is that students achieve higher test scores in a subject throughout secondary school if they had a higher rank in that subject during primary school. A one standard deviation increase in rank in primary school improves age-14 and age-16 test scores by around 0.08 standard deviations. This is comparable to estimates of the impact on test scores of being taught by a teacher one standard deviation better than the average (Rivkin et al., 2005; Aaronson et al., 2007). This rank impact is four times larger than the presence of a single disruptive peer in a class throughout elementary school on high school outcomes (Carrell et al., 2018). These effects vary by pupil characteristics. Boys are more affected by their primary rank than girls. Students who are free school meal eligible (FSME) are not negatively impacted by low ranks, but gain more from high ranks compared to non-FSME students. This heterogeneity allows for the possibility of net gains in test scores from regrouping students. We discuss this in the conclusion.

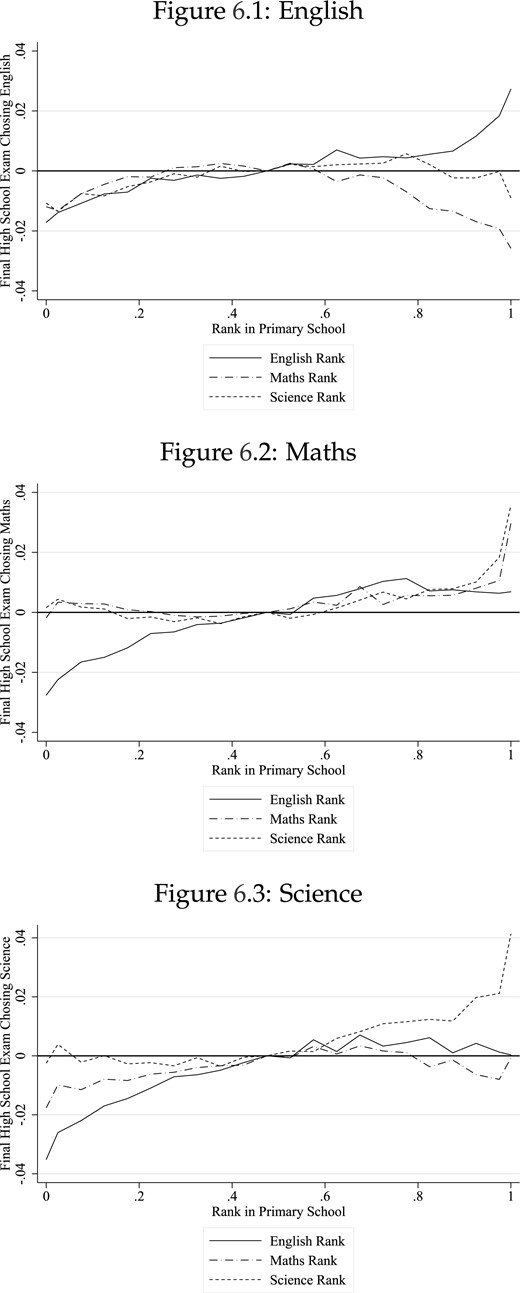

Having an observation for each student in each subject also allows us to analyse subject-specific effects and spillovers. We find the impact of rank to be similar for English, Maths, and Science, and there are considerable spillover effects across subjects. While these spillover effects are positive for student achievement, we also show that students who are highly ranked in maths are less likely to specialize in English at the end of secondary school. The choice of subjects taken at the end of secondary school in England is important as it determines which degree courses can then be taken at university. We find that being at the top of the class in a subject during primary school, rather than at the median, increases the probability of an individual choosing that subject by almost 20 percent. We provide evidence that this previously undiscovered channel contributes to the well-documented gender gap in the STEM (Science, Technology, Engineering, and Mathematics) subjects (Guiso et al., 2008; Joensen and Nielsen, 2009; Bertrand et al., 2010). Equalizing the primary rankings in subjects across genders would reduce the total STEM gender gap by about 7%.

Claiming that these rank effects are causal requires two key assumptions. The first is that student rank, conditional on student test scores, demographics, and SSC effects, is “as good as” random. To test this, we provide evidence that parents are not sorting to primary schools on the basis of rank, and that systematic measurement error in test scores is not driving the results. The second assumption is that any specification error is orthogonal to rank. To test this, we show that our estimates are robust to a range of functional forms for prior test scores, including higher order polynomials and even non-parametric specifications. We also examine the effects of allowing the impact to vary by school, subject, or cohort.1

We go on to consider several explanations for what could be causing these rank effects. By combining the administrative and survey data of 12,000 students, we test the channels of competitiveness, parental investment (through time or money), school environment favouring certain ranks (i.e. tracking), and confidence. We find that primary school rank in a subject has an impact on self-reported confidence in that subject during secondary school. In parallel to what we find with regard to academic achievement, we also find that boys’ confidence is more affected by their school rank than girls’.

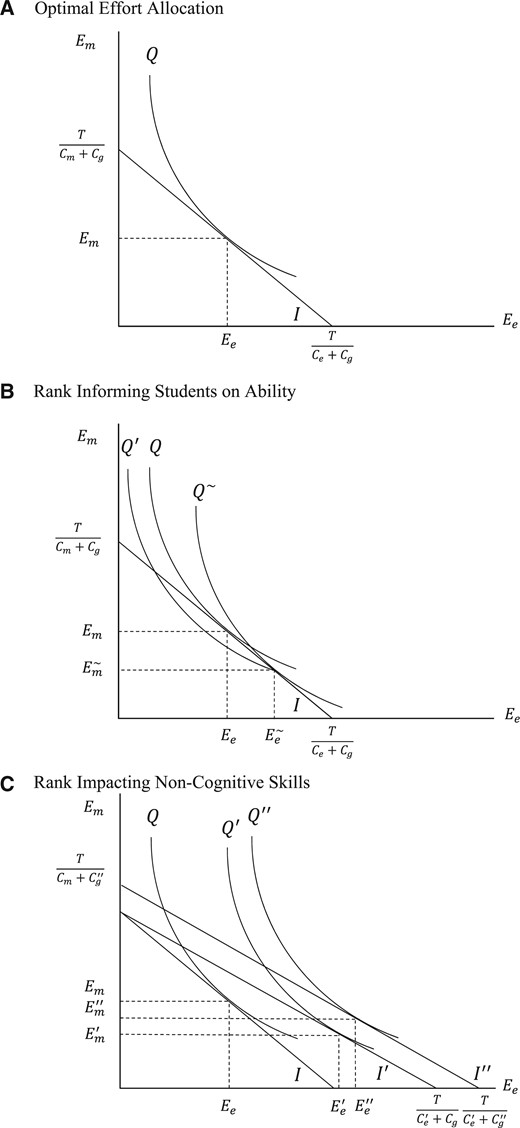

This higher confidence could be indicative of two distinct mechanisms. First, confidence could be reflective of students learning about their own strengths and weaknesses. This is similar to Azmat and Iriberri, (2010) or Ertac, (2006), where students use their relative test scores to update beliefs, but in our case they additionally use their rank. Alternatively, confidence due to rank could improve non-cognitive skills and lower the cost of effort in that subject. This is commonly known as the Big-Fish-Little-Pond effect, a phenomenon which has been identified in many countries and institutional settings (Marsh et al., 2008). Using a stylized model where students try to maximize test scores across subjects for a given total effort and subject ability levels, we derive a test to distinguish the Big-Fish-Little-Pond effect from the learning mechanism and find evidence in favour of the former.

It is important to point out how this article relates to a number of existing literatures. First, the classic peer effects papers typically consider the mean characteristics of others in the group (Sacerdote, 2001; Whitmore, 2005; Kremer and Levy, 2008; Carrell et al., 2009; Lavy et al., 2012). While other studies have examined more complex relationships (Lavy et al., 2012), the common theme amongst them all is that individuals benefit from being surrounded by higher-performing peers. In contrast, we find that having had a higher rank (and therefore worse-performing peers) during primary school increases secondary school test scores.2 Our approach departs from those found in the existing peer effects literature in that we estimate the impacts of previous peers on individuals’ outcomes when surrounded by new peers, rather than the direct impact of contemporaneous peers. Doing so averts issues relating to reflection, and establishes that these effects are long-lasting in students.3 This is similar to Carrell et al., (2018), who use cohort variation during elementary school to estimate the causal effect of disruptive peers on long-run outcomes.

Second, this study is also related to the literature on status concerns and relative feedback. Tincani, (2015) and Bursztyn and Jensen, (2015) find evidence that students have status concerns and will invest more effort if gains in ranks are easier to achieve, while Kuziemko et al., (2014) find evidence for last-place aversion in laboratory experiments.4 These results are similar to findings from non-education settings where individuals may have rank concerns, such as in sports tournaments (Genakos and Pagliero, 2012) or in firms with relative performance accountability systems (i Vidal and Nossol, 2011). We differ from this literature because we estimate the effects of rank in a new environment, where status concerns about prior ranks have already been resolved.5

Finally, the most closely related literature is that on rank itself. These papers account for relative achievement measures and estimate the additional impact of rank on contemporaneous measures of well-being (Brown et al., 2008) and job satisfaction (Card et al., 2012). We contribute to this literature by establishing lasting effects of rank on objective educational outcomes (e.g. national test scores) and subject choice, and by setting out the framework to estimate these effects non-experimentally.6 We also discuss the policy implications of our findings, and examine how group reorganization can lead to net gains in the presence of rank effects.

Our findings help to reconcile a number of topics in education. Rank effects could speak to why some achievement gaps increase over the education cycle (Fryer and Levitt, 2006; Hanushek and Rivkin, 2006, 2009), as they would amplify small differences in early attainment. The existence of rank effects implies that there is a trade-off from attending a more selective school. In this light, the lack of consensus on the positive effects from attending such schools may not be surprising (Angrist and Lang, 2004; Cullen et al., 2006; Kling et al., 2007; Clark, 2010; Abdulkadiroglu et al., 2014).

The rest of the article is structured as follows: Section 2 discusses the empirical strategy and identification. Section 3 describes the English educational system, the data, and the definition of rank. Section 4 presents the main results. Sections 5 and 6 show robustness and heterogeneity. Section 7 explores potential mechanisms and provides additional survey evidence. In Section 8, we conclude by discussing policy implications and other topics in education that corroborate these findings.

2. A Rank-Augmented Education Production Function

2.1. Specification

To account for any factors that either have a constant impact on outcomes (|$\boldsymbol{x_{i}^{'}\beta}$|, |$\beta_{Rank}R_{ijsc}$|, |$\mu_{jsc}$|, |$\tau_{i}$|) or only impact the initial period (|$\boldsymbol{x_{i}^{'}\beta^{0}}$|, |$\beta_{Rank}^{0}R_{ijsc}$|, |$\mu_{jsc}^{0}$|, |$\tau_{i}^{0}$|), we condition on baseline test scores |$Y_{ijsc}^{0}$| when we estimate effects on |$Y_{ijsc}^{1}$|. Critically, |$Y_{ijsc}^{0}$| accounts for any type of contemporaneous peer effect during primary school, including that of rank. We are not estimating the immediate impact of peers on academic achievement. Instead, we are estimating the effects of peers from a previous environment on outcomes in a subsequent time period. Therefore, we express secondary period outcomes as a function of prior test scores, primary rank, student characteristics, and unobservable effects.

The residual |$\epsilon_{ijsc}^{1}$| is comprised of two components: |$\tau_{i}^{1}$|, the unobserved, individual-specific shocks that occur between |$t=0$| and |$t=1$|, and |$\varepsilon_{ijsc}^{1}$|, an idiosyncratic error term. Since we have repeated observations over three subjects for all students, we stack the data over subjects in our main analysis so that there are three observations per student. In all of our estimations, we allow for unobserved correlations by clustering the error term at the level of the secondary school.8 Having multiple observations over time for each student also allows us to include individual student effects to recover |$\tau_{i}^{1}$|, the average growth of individual |$i$| in secondary period. This changes the interpretation of the rank parameter: it only represents the increase in test scores due to subject-specific rank, rather than due to a general gain across all subjects (which are absorbed by the student fixed effect). Therefore, in our main specification we do not account for individual effects.9

In summary, if individuals react to ordinal information in addition to cardinal information, then we expect the rank parameter |$\beta_{Rank}^{1}$| in Specification 1 and |$\beta_{R=0,\ }^{1}\sum_{\lambda=1,\lambda{{\neq}}10}^{20}\beta_{\lambda}^{1},\ \beta_{R=1}^{1}$| in Specification 2 to have significant effects.

2.2. Identification

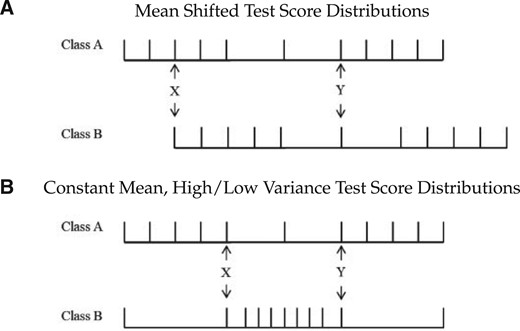

Our analysis proposes a novel approach for isolating rank effects by exploiting idiosyncratic variation in the test score distributions across primary school classes. This variation in rank exists conditional on primary school achievement (|$Y_{ijsc}^{0}$| in Specifications 1 and 2) and occurs because classes are small and students vary in ability. As a result, students with the same achievement score in primary school may have different ranks depending upon which class they attend. Panel A of Figure 1 shows students in Class A have higher ranks than students in Class B for the same given test score.

Rank dependent on test score distribution.

Notes: These figures show two classes of eleven students, and each mark represents a student’s test score, increasing from left to right. In (A), the classes have similar score distributions, but the distribution of Class B is shifted higher. This means students with the same absolute scores would have a lower rank in Class B compared to Class A. In (B) the classes have the same mean, minimum, and maximum student test scores, but Class B has a smaller variance. This means students with the same absolute and relative-to-the-class-mean test scores will have different ranks. A student with score Y above the mean will have a higher rank in Class B than Class A, but a student with score X below the mean will have a higher rank in Class A.

However, classes with better resources may be able to generate higher baseline test scores from a given set of students. This is a concern if the impact of such class-level inputs fades differentially to other inputs and if these inputs preserve rank. An extreme example would be if having a good teacher during primary school had a positive impact on baseline test scores, |$Y_{ijsc}^{0}$|, and no impact on secondary outcomes, |$Y_{ijsc}^{1}$|, but being high ability had an impact on both |$Y_{ijsc}^{0}$| and |$Y_{ijsc}^{1}$|. If this were the case, then there would be a problem comparing the test scores of students who were taught by teachers of different effectiveness, because test scores would no longer be a sufficient statistic for all prior inputs.10 Moreover, as any class-level shock would be rank preserving, student rank would become correlated with student ability, even conditional on attainment. The consequence is that transitory, class-level shocks could make class rank a proxy measure for ability, and so confound the estimated rank parameter.

To account for factors that would additively impact all students in a class, such as peers, teachers, or unexpected events on test day, we include fixed effects at the primary class level, denoted by |$\boldsymbol{\mu_{jsc}^{1}}$| in Specifications 1 and 2. These allow for the effects of such class inputs on secondary school outcomes to fade out differentially. In doing so, they account for the mean differences in test scores between Class A and Class B in Panel A. The inclusion of these class effects impacts on the variation that we use for identification. We are now effectively comparing students who have the same test score achievement relative to their class mean during primary school, but different primary ranks due to the test score distribution of their class. We exploit the differences in the test score distributions across schools, cohorts, and subjects.

This follows a similar strategy used by Hoxby (2000), among others, to compare the outcomes of students in adjacent cohorts within the same school. The critical difference is that in these papers, the variation in the treatment is at the school-cohort level (e.g. proportion female), while in our article, there is variation in treatment (rank) within each SSC. Therefore, even though the variation in the test score distribution is at the class level, the variation in the treatment is at the “relative test score by class distribution” level.

As an example, consider a low- and a high-variance class and students at a fixed distance above and below the mean in each class. We have illustrated such a scenario in Panel B of Figure 1. Both classes have the same mean, or have mean differences accounted for via class effects. Here, a student with a test score of Y in Class A would have a lower rank (R = 5) than a student with the same relative test score in Class B (R = 2). In this setting, students above the mean would have a higher rank if they were in the low-variance class compared to if they were in the high-variance class. In contrast, students below the mean would have a lower rank if they were in the low-variance class. So the same change in distribution (from high to low variance) impacts students within the same class differently depending on their relative test scores. That said, this simple illustration only uses variation in the second moment of the test score distribution; in reality, there is considerably more variation at higher moments.11

Note that if the ability distribution of students in a class has an immediate impact on student achievement, this will be captured in the baseline test scores, |$Y_{ijsc}^{0}$|. If the ability distribution of the class impacts the growth in student test scores in that class, then this will be accounted for through the inclusion of class effects, |$\boldsymbol{\mu_{jsc}^{1}}$|.

In our setting, this means we assume that rank is unrelated to potential outcomes conditional on a function of past test scores |$f(Y_{ijsc}^{0})$|, student observables |$\boldsymbol{x_{i}}$|, and SSC attended |$\mu_{jsc}^{1}$| for all ranks. The relation to the thought experiment is that a student’s rank is “as good as” random after conditioning on these factors. There are two types of potential violations. The first type is if |$Y_{ijsc}^{0}$| does not sufficiently capture prior inputs during primary school conditional on primary class fixed effects, |$\boldsymbol{\mu_{jsc}^{1}}$|, which we assume to be additively separable. This would mean that unobserved shocks at |$t=0$| could affect |$Y^{0}$| and |$Y^{1}$| differentially and correlate with rank at the individual-subject level. An example of such a transitory shock would be primary school teachers who teach to specific parts of the achievement distribution and whose impact is only revealed in secondary school outcomes. The second type is if students sort into classes based on what their rank would be. Note, conditioning on |$\boldsymbol{\mu_{jsc}^{1}}$| accounts for any sorting on the basis of class characteristics. Violations of both types are tested for in the robustness analyses in Sections 5.3 and 5.1, respectively.

3. Institutional Setting, Data, and Descriptive Statistics

This section explains the administrative data and institutional setting in England that we use to estimate the rank effect using the specifications of Section 2.1.

3.1. The English school system

The compulsory education system in England is comprised of four key stages. At the end of each stage, students take national exams. Key Stage 2 is taught during primary school, when students are between the ages of 7 and 11. Within each primary school year group, students are further divided into classes of no more than 30 students. English primary schools are typically small, with a median cohort size of 27 students. Coincidentally, the mean class size of a primary school is also 27 students (Falck et al., 2011), so while we do not have data on student class assignment within a primary school-cohort, in the vast majority of cases there will be only one class per school-cohort. We therefore consider a SSC primary rank to be equivalent to the class rank in that subject.12 At the end of the final year of primary school, when the students are age 11, they take tests in English, Maths, and Science. These national tests are externally scored on a scale of 0 to 100, and these results from our baseline measure of achievement.

After completing primary school, students enrol in secondary school and start working towards Key Stage 3. The average primary school sends students to six different secondary schools, while the typical secondary school receives students from 16 different primary schools. Hence, upon arrival at secondary school, the average student has 87% new peers. This large re-mixing of peers allows us to estimate the impact of rank from a previous peer group on subsequent outcomes. If this were not the case and students instead kept their same peers from primary school, our primary rank measure would be correlated with rank in secondary school, and so the rank parameter would also capture the impact of contemporaneous rank.13 Importantly, since 1998, it has been unlawful for schools to select students on the basis of ability; therefore, admission into secondary school does not depend on end-of-primary test scores or student ranking.14 This means that the age-11 exams are low-stakes with respect to secondary school choice. Key Stage 3 takes place over three years; at the end of the final year, at age 14, students take another set of national examinations in the same three subjects. Like the age-11 exams, the age-14 exams are externally scored on a scale of 0 to 100.15

At the end of Key Stage 3, students can choose to take a number of GCSE (General Certificate of Secondary Education) subjects for the Key Stage 4 assessment, which occurs two years later at the age of 16 and marks the end of compulsory education in England. The final grades consist of nine levels (A{*}, A, B, C, D, E, F, G, and U), to which we have assigned points according to the Department for Education’s guidelines (Falck et al., 2011). However, students have some discretion in choosing the number, subject, and level of GCSEs they study. Thus, GCSE grade scores are inferior measures of student achievement compared to age-14 examinations, which are scored on a finer scale and examine all students in the same compulsory subjects. Therefore, we focus on age-14 test scores as the main outcome measure, but also present results for the higher-stakes age-16 examinations.

After Key Stage 4, some students choose to stay in school to study A-Levels for two years, which are a precursor for university-level education. This constitutes a high level of specialization, as students typically only enrol in three A-Level subjects out of a set of 40. For example, a student could choose to study biology, economics, and geography, but not English or maths. Importantly, the A-Level courses they choose will determine the majors they can enrol in during university, which have longer run effects on careers and earnings (Kirkeboen et al., 2016). For example, chemistry as an A-Level is required to apply for medicine degrees and maths is a prerequisite for studying engineering.16 To study the lasting impact of rank, we examine how primary school rank in three subjects affects the likelihood that a student will choose to stay on at school and study those subjects at A-Level.

3.2. Student administrative data

The Department for Education collects data on all students and all schools in the English state education system in the National Pupil Database (NPD).17 The NPD contains data for each individual student, including the schools they attended and their demographic information [gender, Free School Meals Eligibility (FSME), and ethnicity]. It also tracks each student’s attainment data throughout their key stage progression.

Our sample follows the population of five cohorts of students from age 10/11, when they took their Key Stage 2 examinations, through to age 17/18, when they completed their A-Levels. This student sample took their age-11 exams in the academic years 2000–01 to 2004–05; hence, it follows that their age-14 exams took place in 2003–04 to 2007–08, and that the data from completed A-Levels comes from the years 2007–08 to 2011–12.18

We impose a set of restrictions on the data to obtain a balanced panel of students. We use only students who can be tracked with valid age-11 and age-14 exam information and background characteristics. This constitutes 83% of the five cohort population. Next, we exclude students who appear to be double counted (1,060). We also remove students whose school identifiers do not match within a year across datasets, which excludes approximately 0.6% of the remaining sample (12,900). Finally, we remove all students who attended a primary school where the cohort size was less than 10, as these small schools are likely to be atypical in a number of dimensions (e.g. classes formed of students from more than one cohort/year-group). This represents 2.8% of students.19 This leaves us with approximately 454,000 students per cohort, with a final sample of just under 2.3 million students, or 6.8 million student-subject observations.

| . | Mean . | SD . | Min . | Max . |

|---|---|---|---|---|

| Panel A: Student test scores | ||||

| |$\quad$| Age-11 national test scores percentile | ||||

| |$\qquad$| English | 50.285 | 28.027 | 1 | 100 |

| |$\qquad$| Maths | 50.515 | 28.189 | 1 | 100 |

| |$\qquad$| Science | 50.005 | 28.026 | 1 | 100 |

| |$\quad$| Age-11 rank | ||||

| |$\qquad$| English | 0.488 | 0.296 | 0 | 1 |

| |$\qquad$| Maths | 0.491 | 0.296 | 0 | 1 |

| |$\qquad$| Science | 0.485 | 0.295 | 0 | 1 |

| |$\qquad$| Within student rank SD | 0.138 | 0.087 | 0 | 0.577 |

| |$\quad$| Age-14 national test scores percentile | ||||

| |$\qquad$| English | 51.233 | 28.175 | 1 | 100 |

| |$\qquad$| Maths | 52.888 | 27.545 | 1 | 100 |

| |$\qquad$| Science | 52.908 | 27.525 | 1 | 100 |

| |$\quad$| Age-16 national test scores percentile | ||||

| |$\qquad$| English | 41.783 | 26.724 | 1 | 94 |

| |$\qquad$| Maths | 43.074 | 27.014 | 1 | 96 |

| |$\qquad$| Science | 41.807 | 26.855 | 1 | 94 |

| |$\quad$| Age-18 subjects completed | ||||

| |$\qquad$| English | 0.123 | 0.328 | 0 | 1 |

| |$\qquad$| Maths | 0.084 | 0.277 | 0 | 1 |

| |$\qquad$| Science | 0.108 | 0.31 | 0 | 1 |

| Panel B: Student background characteristics | ||||

| |$\quad$| FSME | 0.146 | 0.353 | 0 | 1 |

| |$\quad$| Male | 0.499 | 0.5 | 0 | 1 |

| |$\quad$| Minority | 0.163 | 0.37 | 0 | 1 |

| Panel C: Observations | ||||

| |$\quad$| Students | 2,271,999 | |||

| |$\quad$| Primary schools | 14,500 | |||

| |$\quad$| Secondary schools | 3,800 | |||

| . | Mean . | SD . | Min . | Max . |

|---|---|---|---|---|

| Panel A: Student test scores | ||||

| |$\quad$| Age-11 national test scores percentile | ||||

| |$\qquad$| English | 50.285 | 28.027 | 1 | 100 |

| |$\qquad$| Maths | 50.515 | 28.189 | 1 | 100 |

| |$\qquad$| Science | 50.005 | 28.026 | 1 | 100 |

| |$\quad$| Age-11 rank | ||||

| |$\qquad$| English | 0.488 | 0.296 | 0 | 1 |

| |$\qquad$| Maths | 0.491 | 0.296 | 0 | 1 |

| |$\qquad$| Science | 0.485 | 0.295 | 0 | 1 |

| |$\qquad$| Within student rank SD | 0.138 | 0.087 | 0 | 0.577 |

| |$\quad$| Age-14 national test scores percentile | ||||

| |$\qquad$| English | 51.233 | 28.175 | 1 | 100 |

| |$\qquad$| Maths | 52.888 | 27.545 | 1 | 100 |

| |$\qquad$| Science | 52.908 | 27.525 | 1 | 100 |

| |$\quad$| Age-16 national test scores percentile | ||||

| |$\qquad$| English | 41.783 | 26.724 | 1 | 94 |

| |$\qquad$| Maths | 43.074 | 27.014 | 1 | 96 |

| |$\qquad$| Science | 41.807 | 26.855 | 1 | 94 |

| |$\quad$| Age-18 subjects completed | ||||

| |$\qquad$| English | 0.123 | 0.328 | 0 | 1 |

| |$\qquad$| Maths | 0.084 | 0.277 | 0 | 1 |

| |$\qquad$| Science | 0.108 | 0.31 | 0 | 1 |

| Panel B: Student background characteristics | ||||

| |$\quad$| FSME | 0.146 | 0.353 | 0 | 1 |

| |$\quad$| Male | 0.499 | 0.5 | 0 | 1 |

| |$\quad$| Minority | 0.163 | 0.37 | 0 | 1 |

| Panel C: Observations | ||||

| |$\quad$| Students | 2,271,999 | |||

| |$\quad$| Primary schools | 14,500 | |||

| |$\quad$| Secondary schools | 3,800 | |||

Notes: 6,815,997 student-subject observations over 5 cohorts. Cohort 1 takes age-11 examinations in 2001, age-14 examinations in 2004, age-16 examinations in 2006, and A-levels at age 18 in 2008. Test scores are percentalized by cohort-subject and come from externally marked national exams. Age-16 test scores mark the end of compulsory education. Age-18 information could be merged for a sub-sample of 5,147,193 observations from cohorts 2 to 5. For a detailed description of the data, see Section 3.

| . | Mean . | SD . | Min . | Max . |

|---|---|---|---|---|

| Panel A: Student test scores | ||||

| |$\quad$| Age-11 national test scores percentile | ||||

| |$\qquad$| English | 50.285 | 28.027 | 1 | 100 |

| |$\qquad$| Maths | 50.515 | 28.189 | 1 | 100 |

| |$\qquad$| Science | 50.005 | 28.026 | 1 | 100 |

| |$\quad$| Age-11 rank | ||||

| |$\qquad$| English | 0.488 | 0.296 | 0 | 1 |

| |$\qquad$| Maths | 0.491 | 0.296 | 0 | 1 |

| |$\qquad$| Science | 0.485 | 0.295 | 0 | 1 |

| |$\qquad$| Within student rank SD | 0.138 | 0.087 | 0 | 0.577 |

| |$\quad$| Age-14 national test scores percentile | ||||

| |$\qquad$| English | 51.233 | 28.175 | 1 | 100 |

| |$\qquad$| Maths | 52.888 | 27.545 | 1 | 100 |

| |$\qquad$| Science | 52.908 | 27.525 | 1 | 100 |

| |$\quad$| Age-16 national test scores percentile | ||||

| |$\qquad$| English | 41.783 | 26.724 | 1 | 94 |

| |$\qquad$| Maths | 43.074 | 27.014 | 1 | 96 |

| |$\qquad$| Science | 41.807 | 26.855 | 1 | 94 |

| |$\quad$| Age-18 subjects completed | ||||

| |$\qquad$| English | 0.123 | 0.328 | 0 | 1 |

| |$\qquad$| Maths | 0.084 | 0.277 | 0 | 1 |

| |$\qquad$| Science | 0.108 | 0.31 | 0 | 1 |

| Panel B: Student background characteristics | ||||

| |$\quad$| FSME | 0.146 | 0.353 | 0 | 1 |

| |$\quad$| Male | 0.499 | 0.5 | 0 | 1 |

| |$\quad$| Minority | 0.163 | 0.37 | 0 | 1 |

| Panel C: Observations | ||||

| |$\quad$| Students | 2,271,999 | |||

| |$\quad$| Primary schools | 14,500 | |||

| |$\quad$| Secondary schools | 3,800 | |||

| . | Mean . | SD . | Min . | Max . |

|---|---|---|---|---|

| Panel A: Student test scores | ||||

| |$\quad$| Age-11 national test scores percentile | ||||

| |$\qquad$| English | 50.285 | 28.027 | 1 | 100 |

| |$\qquad$| Maths | 50.515 | 28.189 | 1 | 100 |

| |$\qquad$| Science | 50.005 | 28.026 | 1 | 100 |

| |$\quad$| Age-11 rank | ||||

| |$\qquad$| English | 0.488 | 0.296 | 0 | 1 |

| |$\qquad$| Maths | 0.491 | 0.296 | 0 | 1 |

| |$\qquad$| Science | 0.485 | 0.295 | 0 | 1 |

| |$\qquad$| Within student rank SD | 0.138 | 0.087 | 0 | 0.577 |

| |$\quad$| Age-14 national test scores percentile | ||||

| |$\qquad$| English | 51.233 | 28.175 | 1 | 100 |

| |$\qquad$| Maths | 52.888 | 27.545 | 1 | 100 |

| |$\qquad$| Science | 52.908 | 27.525 | 1 | 100 |

| |$\quad$| Age-16 national test scores percentile | ||||

| |$\qquad$| English | 41.783 | 26.724 | 1 | 94 |

| |$\qquad$| Maths | 43.074 | 27.014 | 1 | 96 |

| |$\qquad$| Science | 41.807 | 26.855 | 1 | 94 |

| |$\quad$| Age-18 subjects completed | ||||

| |$\qquad$| English | 0.123 | 0.328 | 0 | 1 |

| |$\qquad$| Maths | 0.084 | 0.277 | 0 | 1 |

| |$\qquad$| Science | 0.108 | 0.31 | 0 | 1 |

| Panel B: Student background characteristics | ||||

| |$\quad$| FSME | 0.146 | 0.353 | 0 | 1 |

| |$\quad$| Male | 0.499 | 0.5 | 0 | 1 |

| |$\quad$| Minority | 0.163 | 0.37 | 0 | 1 |

| Panel C: Observations | ||||

| |$\quad$| Students | 2,271,999 | |||

| |$\quad$| Primary schools | 14,500 | |||

| |$\quad$| Secondary schools | 3,800 | |||

Notes: 6,815,997 student-subject observations over 5 cohorts. Cohort 1 takes age-11 examinations in 2001, age-14 examinations in 2004, age-16 examinations in 2006, and A-levels at age 18 in 2008. Test scores are percentalized by cohort-subject and come from externally marked national exams. Age-16 test scores mark the end of compulsory education. Age-18 information could be merged for a sub-sample of 5,147,193 observations from cohorts 2 to 5. For a detailed description of the data, see Section 3.

The key stage test scores at each age group are transformed to have a uniform distribution by subject and cohort. Specifically, test scores are converted into a national test score percentile, so that each individual has nine test scores between zero and 100 (ages 11, 14, and 16). This does not impinge on our estimation strategy, which relies only on variation in test score distributions at the SSC level.20

Table 1 shows descriptive statistics for the estimation sample. Given that the test scores are represented in percentiles, all three subject test scores at age 11, 14, and 16 have a mean of around 50, with a standard deviation of about 28. Recall that the age-11 and age-14 exams are graded out of 100, while the age-16 exam is scored on a much coarser letter-grade scale. This difference explains why the age-16 average percentile scores are lower. Almost 60% of students decide to stay and continue their education until the A-Levels, which are the formal gateway requirement for university admission. Although there are many subjects to choose from, about 14% of students choose to sit an A-Level exam in English, while in maths and science the proportions are about 9% and 11%, respectively.

Information relating to the background characteristics of the students is shown in Panel B of Table 1. Half of the students in the population are male, and more than four-fifths are white British. About 15% are FSME students, which is used as a standard measure of low parental income. The within-student standard deviation across the three subjects (English, maths, and science) is 12.68 national percentile points at age 11, with similar variation in the age-14 tests. This is important, as it shows that there is within-student variation, which is used in student fixed effects regressions.

3.3. Measuring ordinal rank

As explained in Section 3.1, all students take the end-of-primary national exam at age 11. For each subject, we transform the score into national student percentiles by cohort. We use these percentiles to rank students in each subject within their primary school cohort. We take this rank measure as a proxy for perceived ranking based on interactions with peers over the previous six years of primary school, along with repeated teacher feedback. We assume test performance to be highly correlated with everyday classroom performance, and representative of previous performance on any informal class examinations.21

This percentile class rank remains ordinal in nature and still does not carry cardinal information (i.e. information about relative ability distances). For the ease of exposition, we will refer to |$R_{ijsc}$| as the ordinal class rank for the remainder of this article. Panel A of Table 1 shows descriptive statistics of the rank variable.

Now that we have defined our measurement of rank, it is relevant to consider what students will know about their academic rank. While we have complete access to the finely graded test score data, students are instead given only one of five broad attainment levels, with 85% of students achieving one of the top two levels.23 Therefore, neither the students nor their teachers are informed of our ranking metric based on these age-11 test scores. This means our main dependent variable is prone to measurement error due to student perception.

While we cannot know for certain if our measurement of rank based on students’ academic achievement is a good proxy for student perceptions, we have three facts that support this claim. First, a longstanding body of literature from the field of psychology has established that individuals have accurate perceptions of their rank within a group but not of their absolute ability (e.g. Anderson et al., 2006). Second, using merged survey data we find that, conditional on test scores, students with higher ranks in a subject have higher confidence in that subject (Section 7.4.1). And third, if individuals (students, teachers, or parents) had no perception of the rankings, then we would expect not to find an impact of our rank measurement at all. To this extent, the rank coefficient |$\beta_{Rank}^{1}$| from Section 2 will be attenuated, and we are estimating a reduced form of perceived rank using actual rank. In Section 5.3, we also simulate increasingly large measurement errors in the age-11 test scores, which we use to calculate rank, to document what would occur if these tests were less representative of students’ abilities and social interactions. We show that increased measurement error in baseline achievement slightly attenuates the rank estimate, which is consistent with students having a poor perception of their academic rank.

3.4. Survey data: the longitudinal study of young people in England

We have additional information about a sub-sample of students through a representative survey of 16,122 students from the first cohort. The Longitudinal Survey of Young People in England (LSYPE) is managed by the Department for Education and follows a single cohort of teenagers, collecting detailed information on their parental background, academic achievements, and subject confidence.

We merge survey responses with our administrative data using a unique student identifier. Not all students could be matched, as the LSYPE also surveys students attending private schools that are not included in the national datasets, and not all students could be accurately tracked over time. A total of 3,731 survey responses could not be matched. Additionally, 823 state school students did not fully answer the relevant questions and so could not be used in the analysis. This leaves us with 11,558 usable student observations. Even though this does not contain information on each student in a school-cohort, by matching the main data, we can calculate where each LSYPE-student is ranked during primary school.

Students taking the LSYPE survey at age 14 are asked how good they consider themselves in the subjects of English, maths, and science. We code five possible responses in the following way: (2) – Very Good; (1) – Fairly Good; (0) – Don’t Know; (|$-$|1) – Not Very Good; (|$-$|2) – Not Very Good At All. We use this simple scale as a measure of subject-specific confidence (Table 2, Panel A).24 The LSYPE respondents are very similar to students in the main sample, with the mean age-11 scores always being within one national percentile point. That said, the two samples do have differences, with the LSYPE sample having a higher proportion of FSME (18% versus 14.6%) and minority (33.7% versus 16.3%) students. This is to be expected, however, as the LSYPE intended to over sample students from disadvantaged groups.25

| . | Mean . | SD . | Min . | Max . |

|---|---|---|---|---|

| Panel A: Student descriptive statistics | ||||

| |$\quad$| How good do you think you are at... | ||||

| |$\qquad$| English | 0.928 | 0.928 | -2 | 2 |

| |$\qquad$| Maths | 0.944 | 0.917 | -2 | 2 |

| |$\qquad$| Science | 0.904 | 1.008 | -2 | 2 |

| |$\quad$| Age-11 national test scores percentile | ||||

| |$\qquad$| English | 50.114 | 27.725 | 1 | 100 |

| |$\qquad$| Maths | 50.783 | 28.378 | 1 | 100 |

| |$\qquad$| Science | 49.453 | 28.287 | 1 | 100 |

| |$\quad$| Age-11 rank | ||||

| |$\qquad$| English | 0.496 | 0.295 | 0 | 1 |

| |$\qquad$| Maths | 0.501 | 0.297 | 0 | 1 |

| |$\qquad$| Science | 0.489 | 0.294 | 0 | 1 |

| |$\qquad$| Within student rank SD | 0.137 | 0.089 | 0 | 0.575 |

| |$\quad$| Student characteristics | ||||

| |$\qquad$| FSME | 0.180 | 0.384 | 0 | 1 |

| |$\qquad$| Male | 0.498 | 0.500 | 0 | 1 |

| |$\qquad$| Minority | 0.337 | 0.473 | 0 | 1 |

| Panel B: parental descriptive statistics | ||||

| |$\quad$| Any post-secondary qualification | 0.323 | 0.468 | 0 | 1 |

| |$\quad$| Gross household income>£33,000 | 0.219 | 0.413 | 0 | 1 |

| |$\quad$| Occupation | ||||

| |$\qquad$| English | 0.014 | 0.119 | 0 | 1 |

| |$\qquad$| Maths | 0.031 | 0.175 | 0 | 1 |

| |$\qquad$| Science | 0.036 | 0.185 | 0 | 1 |

| |$\quad$| Parental time investment in schooling | ||||

| |$\qquad$| Number attending parents evening | 1.213 | 0.713 | 0 | 2 |

| |$\qquad$| Special meeting with teacher about child | 0.235 | 0.424 | 0 | 1 |

| |$\qquad$| Frequency of talking with child’s teacher | 2.124 | 0.957 | 1 | 5 |

| |$\qquad$| Involved in child’s school life | 2.969 | 0.782 | 1 | 4 |

| |$\quad$| Parental financial investment in schooling | ||||

| |$\quad$| Paying for any out of school tuition | 0.234 | 0.423 | 0 | 1 |

| |$\qquad$| English tuition | 0.057 | 0.232 | 0 | 1 |

| |$\qquad$| Maths tuition | 0.027 | 0.163 | 0 | 1 |

| |$\qquad$| Science tuition | 0.019 | 0.137 | 0 | 1 |

| Panel C: Observations | ||||

| |$\quad$| Students | 10,318 | |||

| |$\quad$| Primary schools | 4,137 | |||

| |$\quad$| Secondary schools | 780 | |||

| . | Mean . | SD . | Min . | Max . |

|---|---|---|---|---|

| Panel A: Student descriptive statistics | ||||

| |$\quad$| How good do you think you are at... | ||||

| |$\qquad$| English | 0.928 | 0.928 | -2 | 2 |

| |$\qquad$| Maths | 0.944 | 0.917 | -2 | 2 |

| |$\qquad$| Science | 0.904 | 1.008 | -2 | 2 |

| |$\quad$| Age-11 national test scores percentile | ||||

| |$\qquad$| English | 50.114 | 27.725 | 1 | 100 |

| |$\qquad$| Maths | 50.783 | 28.378 | 1 | 100 |

| |$\qquad$| Science | 49.453 | 28.287 | 1 | 100 |

| |$\quad$| Age-11 rank | ||||

| |$\qquad$| English | 0.496 | 0.295 | 0 | 1 |

| |$\qquad$| Maths | 0.501 | 0.297 | 0 | 1 |

| |$\qquad$| Science | 0.489 | 0.294 | 0 | 1 |

| |$\qquad$| Within student rank SD | 0.137 | 0.089 | 0 | 0.575 |

| |$\quad$| Student characteristics | ||||

| |$\qquad$| FSME | 0.180 | 0.384 | 0 | 1 |

| |$\qquad$| Male | 0.498 | 0.500 | 0 | 1 |

| |$\qquad$| Minority | 0.337 | 0.473 | 0 | 1 |

| Panel B: parental descriptive statistics | ||||

| |$\quad$| Any post-secondary qualification | 0.323 | 0.468 | 0 | 1 |

| |$\quad$| Gross household income>£33,000 | 0.219 | 0.413 | 0 | 1 |

| |$\quad$| Occupation | ||||

| |$\qquad$| English | 0.014 | 0.119 | 0 | 1 |

| |$\qquad$| Maths | 0.031 | 0.175 | 0 | 1 |

| |$\qquad$| Science | 0.036 | 0.185 | 0 | 1 |

| |$\quad$| Parental time investment in schooling | ||||

| |$\qquad$| Number attending parents evening | 1.213 | 0.713 | 0 | 2 |

| |$\qquad$| Special meeting with teacher about child | 0.235 | 0.424 | 0 | 1 |

| |$\qquad$| Frequency of talking with child’s teacher | 2.124 | 0.957 | 1 | 5 |

| |$\qquad$| Involved in child’s school life | 2.969 | 0.782 | 1 | 4 |

| |$\quad$| Parental financial investment in schooling | ||||

| |$\quad$| Paying for any out of school tuition | 0.234 | 0.423 | 0 | 1 |

| |$\qquad$| English tuition | 0.057 | 0.232 | 0 | 1 |

| |$\qquad$| Maths tuition | 0.027 | 0.163 | 0 | 1 |

| |$\qquad$| Science tuition | 0.019 | 0.137 | 0 | 1 |

| Panel C: Observations | ||||

| |$\quad$| Students | 10,318 | |||

| |$\quad$| Primary schools | 4,137 | |||

| |$\quad$| Secondary schools | 780 | |||

Notes: The LSYPE sample consists of 34,674 observations from the cohort 1 who took age-11 exams in 2001 and age-14 exams in 2004. For a detailed description of the data see Section 3.

| . | Mean . | SD . | Min . | Max . |

|---|---|---|---|---|

| Panel A: Student descriptive statistics | ||||

| |$\quad$| How good do you think you are at... | ||||

| |$\qquad$| English | 0.928 | 0.928 | -2 | 2 |

| |$\qquad$| Maths | 0.944 | 0.917 | -2 | 2 |

| |$\qquad$| Science | 0.904 | 1.008 | -2 | 2 |

| |$\quad$| Age-11 national test scores percentile | ||||

| |$\qquad$| English | 50.114 | 27.725 | 1 | 100 |

| |$\qquad$| Maths | 50.783 | 28.378 | 1 | 100 |

| |$\qquad$| Science | 49.453 | 28.287 | 1 | 100 |

| |$\quad$| Age-11 rank | ||||

| |$\qquad$| English | 0.496 | 0.295 | 0 | 1 |

| |$\qquad$| Maths | 0.501 | 0.297 | 0 | 1 |

| |$\qquad$| Science | 0.489 | 0.294 | 0 | 1 |

| |$\qquad$| Within student rank SD | 0.137 | 0.089 | 0 | 0.575 |

| |$\quad$| Student characteristics | ||||

| |$\qquad$| FSME | 0.180 | 0.384 | 0 | 1 |

| |$\qquad$| Male | 0.498 | 0.500 | 0 | 1 |

| |$\qquad$| Minority | 0.337 | 0.473 | 0 | 1 |

| Panel B: parental descriptive statistics | ||||

| |$\quad$| Any post-secondary qualification | 0.323 | 0.468 | 0 | 1 |

| |$\quad$| Gross household income>£33,000 | 0.219 | 0.413 | 0 | 1 |

| |$\quad$| Occupation | ||||

| |$\qquad$| English | 0.014 | 0.119 | 0 | 1 |

| |$\qquad$| Maths | 0.031 | 0.175 | 0 | 1 |

| |$\qquad$| Science | 0.036 | 0.185 | 0 | 1 |

| |$\quad$| Parental time investment in schooling | ||||

| |$\qquad$| Number attending parents evening | 1.213 | 0.713 | 0 | 2 |

| |$\qquad$| Special meeting with teacher about child | 0.235 | 0.424 | 0 | 1 |

| |$\qquad$| Frequency of talking with child’s teacher | 2.124 | 0.957 | 1 | 5 |

| |$\qquad$| Involved in child’s school life | 2.969 | 0.782 | 1 | 4 |

| |$\quad$| Parental financial investment in schooling | ||||

| |$\quad$| Paying for any out of school tuition | 0.234 | 0.423 | 0 | 1 |

| |$\qquad$| English tuition | 0.057 | 0.232 | 0 | 1 |

| |$\qquad$| Maths tuition | 0.027 | 0.163 | 0 | 1 |

| |$\qquad$| Science tuition | 0.019 | 0.137 | 0 | 1 |

| Panel C: Observations | ||||

| |$\quad$| Students | 10,318 | |||

| |$\quad$| Primary schools | 4,137 | |||

| |$\quad$| Secondary schools | 780 | |||

| . | Mean . | SD . | Min . | Max . |

|---|---|---|---|---|

| Panel A: Student descriptive statistics | ||||

| |$\quad$| How good do you think you are at... | ||||

| |$\qquad$| English | 0.928 | 0.928 | -2 | 2 |

| |$\qquad$| Maths | 0.944 | 0.917 | -2 | 2 |

| |$\qquad$| Science | 0.904 | 1.008 | -2 | 2 |

| |$\quad$| Age-11 national test scores percentile | ||||

| |$\qquad$| English | 50.114 | 27.725 | 1 | 100 |

| |$\qquad$| Maths | 50.783 | 28.378 | 1 | 100 |

| |$\qquad$| Science | 49.453 | 28.287 | 1 | 100 |

| |$\quad$| Age-11 rank | ||||

| |$\qquad$| English | 0.496 | 0.295 | 0 | 1 |

| |$\qquad$| Maths | 0.501 | 0.297 | 0 | 1 |

| |$\qquad$| Science | 0.489 | 0.294 | 0 | 1 |

| |$\qquad$| Within student rank SD | 0.137 | 0.089 | 0 | 0.575 |

| |$\quad$| Student characteristics | ||||

| |$\qquad$| FSME | 0.180 | 0.384 | 0 | 1 |

| |$\qquad$| Male | 0.498 | 0.500 | 0 | 1 |

| |$\qquad$| Minority | 0.337 | 0.473 | 0 | 1 |

| Panel B: parental descriptive statistics | ||||

| |$\quad$| Any post-secondary qualification | 0.323 | 0.468 | 0 | 1 |

| |$\quad$| Gross household income>£33,000 | 0.219 | 0.413 | 0 | 1 |

| |$\quad$| Occupation | ||||

| |$\qquad$| English | 0.014 | 0.119 | 0 | 1 |

| |$\qquad$| Maths | 0.031 | 0.175 | 0 | 1 |

| |$\qquad$| Science | 0.036 | 0.185 | 0 | 1 |

| |$\quad$| Parental time investment in schooling | ||||

| |$\qquad$| Number attending parents evening | 1.213 | 0.713 | 0 | 2 |

| |$\qquad$| Special meeting with teacher about child | 0.235 | 0.424 | 0 | 1 |

| |$\qquad$| Frequency of talking with child’s teacher | 2.124 | 0.957 | 1 | 5 |

| |$\qquad$| Involved in child’s school life | 2.969 | 0.782 | 1 | 4 |

| |$\quad$| Parental financial investment in schooling | ||||

| |$\quad$| Paying for any out of school tuition | 0.234 | 0.423 | 0 | 1 |

| |$\qquad$| English tuition | 0.057 | 0.232 | 0 | 1 |

| |$\qquad$| Maths tuition | 0.027 | 0.163 | 0 | 1 |

| |$\qquad$| Science tuition | 0.019 | 0.137 | 0 | 1 |

| Panel C: Observations | ||||

| |$\quad$| Students | 10,318 | |||

| |$\quad$| Primary schools | 4,137 | |||

| |$\quad$| Secondary schools | 780 | |||

Notes: The LSYPE sample consists of 34,674 observations from the cohort 1 who took age-11 exams in 2001 and age-14 exams in 2004. For a detailed description of the data see Section 3.

The LSYPE also contains detailed parental information (Table 2, Panel B). These characteristics are represented by a set of indicator variables, such as parental qualifications (defined by any parent having post-secondary qualification) and gross household income above £33,000. We use information on parent characteristics to test for sorting to primary schools on the basis of rank conditional on performance in Section 5.1. To test if there is additional sorting to primary schools by subject, we have classified the parental occupation of each parent to each subject. Then, we create an indicator variable for each student-subject pair to capture if they have a parent who works in that field. For example, a child of a librarian and a science technician would have parental occupations coded as English and science, respectively.26

Finally, information regarding parental time and financial investments in schooling is used to explore possible mechanisms in Section 7.2. It is possible that parents may adjust their investments according to student rank during primary school. Therefore, we have coded four forms of self-reported parent time investment: the number of parents attending the most recent parent evening27; whether a parent has arranged a meeting with the teacher; how often a parent talks to the teacher; and how personally involved the parent feels in their child’s school life.28 In our sample, on average, 1.2 parents attended the last parents evening, 23.5% had organized a meeting with the teacher, they have meetings less than once a term, and felt fairly involved in the child’s school life.

4. Main Results

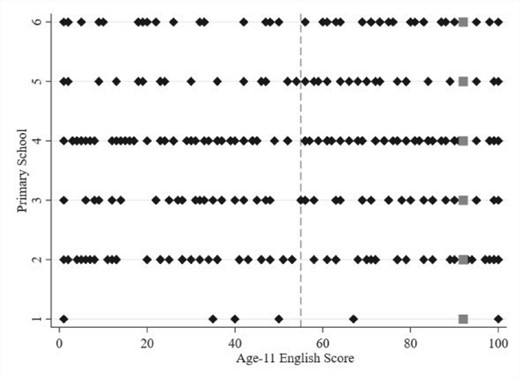

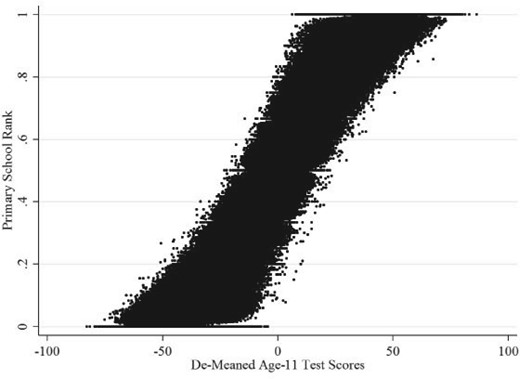

Figure 2 replicates the stylized example from Figure 1 using six primary school English classes from our data. Each class has the same minimum, maximum, and mean (dashed line) test score in the age-11 English exam. Each class also has a student at the 92nd national achievement percentile, but because of the different test score distributions, each of those students has a different rank in their class. This rank is increasing from school one to school six with ranks |$R$| of 0.83, 0.84, 0.89, 0.90, 0.93, and 0.94, respectively, despite all six students having the same absolute and relative-to-the-class-mean test scores. Figure 3 extends this example of the distributional variation by using the data from all primary schools and subjects in our sample. Here, we plot age-11 test scores, de-meaned by primary SSC, against the age-11 class ranks in each subject. The vertical thickness of the plot is the support for the rank distribution. For students close to the median in their class, ranks range from |$R=0.2$| to |$R=0.8$|, showing we have wide support for inference in-sample. This variation exists because classes are small and achievement distributions differ.

Test score distributions across similar classes

Notes: This graph presents data from six primary school English classes that all have a mean test score of 55 (as indicated by the dashed grey line) and a student scoring the minimum and maximum. Each diamond represents a student score, and grey squares indicate all students who scored 92. Given the different test score distributions, each student scoring 92 has a different rank. This rank is increasing from School 1 to School 6 with ranks of 0.83, 0.84, 0.89, 0.90, 0.925, and 0.94, respectively, despite all students having the same absolute and relative-to-the-class-mean test score. Note that some test scores have been randomly anonymized to protect individuals and schools; this does not affect the interpretation of these figures.

Rank distributions across SSCs

Notes: The Y-axis is the primary rank of students, and the X-axis shows the de-meaned test scores by primary SSC. Note that some test scores have been randomly anonymized to protect individuals and schools; this does not affect the interpretation of these figures.

The main estimates of the impact of primary school rank on secondary school outcomes are presented in Table 3. The first two columns show the impact on age-14 test scores. Column 1 allows for a cubic in prior test scores and primary SSC effects, and column 2 additionally controls for student demographics (ethnicity, gender, ever FSME). The interpretation of the estimate from column 1 is that ranking at the top of class compared to the bottom, ceteris paribus, is associated with a gain of 7.946 national percentiles (0.29 standard deviations). When accounting for student characteristics, there is an insignificant reduction to 7.894, implying that characteristics are not correlated with rank.

This rank parameter is large in comparison with other student characteristics. For example, females score 1.398 national percentiles higher than males, and FSME students on average score 3.107 national percentiles lower than non-FSME students. To gauge the size of this rank effect, we scale it by the standard deviation of rank. A one standard deviation increase in rank is associated with increases in later test scores by 0.084 standard deviations, or 2.35 national percentiles.29

Columns 3 and 4 of Table 3 show an equivalent set of results for the same students two years later, after taking the national exams at the end of compulsory education. The impact of primary school rank on test performance has decreased by a small amount between ages 14 and 16. Comparing columns 2 and 4, being at the top of class compared to the bottom during primary school increases age-16 test scores by 6.389 national percentiles compared with 7.894 at age 14. Here, a one standard deviation increase in primary rank improves later test scores by 1.89 national percentiles.

| . | Age-14 test scores . | Age-16 test scores . | Complete subject age-18 . | |||

|---|---|---|---|---|---|---|

| . | (1) . | (2) . | (3) . | (4) . | (5) . | (6) . |

| Primary rank | 7.946*** | 7.894*** | 6.468*** | 6.389*** | 0.025*** | 0.025*** |

| 0.147 | 0.147 | 0.154 | 0.152 | 0.002 | 0.002 | |

| Male | -1.398*** | -2.526*** | -0.012*** | |||

| 0.045 | 0.056 | 0.000 | ||||

| FSME | -3.107*** | -4.329*** | -0.017*** | |||

| 0.030 | 0.041 | 0.000 | ||||

| Minority | 1.978*** | 4.329*** | 0.045*** | |||

| 0.054 | 0.083 | 0.001 | ||||

| Cubic in age 11 test scores | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| |

| Primary SSC effects | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| |

| . | Age-14 test scores . | Age-16 test scores . | Complete subject age-18 . | |||

|---|---|---|---|---|---|---|

| . | (1) . | (2) . | (3) . | (4) . | (5) . | (6) . |

| Primary rank | 7.946*** | 7.894*** | 6.468*** | 6.389*** | 0.025*** | 0.025*** |

| 0.147 | 0.147 | 0.154 | 0.152 | 0.002 | 0.002 | |

| Male | -1.398*** | -2.526*** | -0.012*** | |||

| 0.045 | 0.056 | 0.000 | ||||

| FSME | -3.107*** | -4.329*** | -0.017*** | |||

| 0.030 | 0.041 | 0.000 | ||||

| Minority | 1.978*** | 4.329*** | 0.045*** | |||

| 0.054 | 0.083 | 0.001 | ||||

| Cubic in age 11 test scores | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| |

| Primary SSC effects | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| |

Notes: Results obtained from six separate regressions based on 2,271,999 student observations and 6,815,997 student-subject observations. In columns 1 and 2, the dependent variable is by-cohort-by-subject percentalized KS3 test scores. In columns 3 and 4, the dependent variable is by-cohort-by-subject percentalized KS4 test scores. In columns 5 and 6, the dependent variable is an indicator for completing an A-Level at age 18 in the corresponding subject. SSC effects are fixed effects for each primary school-by-subject-by-cohort combination. Standard errors in italics and clustered at the secondary school level (3,800). Significance levels *** 1%,** 5%, * 10%.

| . | Age-14 test scores . | Age-16 test scores . | Complete subject age-18 . | |||

|---|---|---|---|---|---|---|

| . | (1) . | (2) . | (3) . | (4) . | (5) . | (6) . |

| Primary rank | 7.946*** | 7.894*** | 6.468*** | 6.389*** | 0.025*** | 0.025*** |

| 0.147 | 0.147 | 0.154 | 0.152 | 0.002 | 0.002 | |

| Male | -1.398*** | -2.526*** | -0.012*** | |||

| 0.045 | 0.056 | 0.000 | ||||

| FSME | -3.107*** | -4.329*** | -0.017*** | |||

| 0.030 | 0.041 | 0.000 | ||||

| Minority | 1.978*** | 4.329*** | 0.045*** | |||

| 0.054 | 0.083 | 0.001 | ||||

| Cubic in age 11 test scores | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| |

| Primary SSC effects | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| |

| . | Age-14 test scores . | Age-16 test scores . | Complete subject age-18 . | |||

|---|---|---|---|---|---|---|

| . | (1) . | (2) . | (3) . | (4) . | (5) . | (6) . |

| Primary rank | 7.946*** | 7.894*** | 6.468*** | 6.389*** | 0.025*** | 0.025*** |

| 0.147 | 0.147 | 0.154 | 0.152 | 0.002 | 0.002 | |

| Male | -1.398*** | -2.526*** | -0.012*** | |||

| 0.045 | 0.056 | 0.000 | ||||

| FSME | -3.107*** | -4.329*** | -0.017*** | |||

| 0.030 | 0.041 | 0.000 | ||||

| Minority | 1.978*** | 4.329*** | 0.045*** | |||

| 0.054 | 0.083 | 0.001 | ||||

| Cubic in age 11 test scores | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| |

| Primary SSC effects | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| | |$\surd$| |

Notes: Results obtained from six separate regressions based on 2,271,999 student observations and 6,815,997 student-subject observations. In columns 1 and 2, the dependent variable is by-cohort-by-subject percentalized KS3 test scores. In columns 3 and 4, the dependent variable is by-cohort-by-subject percentalized KS4 test scores. In columns 5 and 6, the dependent variable is an indicator for completing an A-Level at age 18 in the corresponding subject. SSC effects are fixed effects for each primary school-by-subject-by-cohort combination. Standard errors in italics and clustered at the secondary school level (3,800). Significance levels *** 1%,** 5%, * 10%.

After the examinations at age 16, students can choose to study for A-Levels, the qualifications required to study at university. We estimate the impact of primary school rank in a specific subject on the likelihood of choosing to study that same subject for A-Levels.30 These results are presented in columns 5 and 6 of Table 3, with the binary outcome variable being whether or not the student completed an A-Level related to that subject. In this linear probability model, conditional on prior test scores, student characteristics, and SSC effects, students ranked at the top of the class in a subject compared to the bottom are 2.5 percentage points more likely to choose that subject as an A-Level. Assuming a linear relationship, a one standard deviation increase in rank increases the likelihood of choosing that subject by 0.74 percentage points. As one in ten students complete these subjects at A-Level, a one standard deviation increase in class rank during primary school represents a 7% increase in the probability of choosing the associated A-Level seven years later.

5. Robustness

This section examines the robustness of our main results in four dimensions. First, we test the conditional independence assumption by testing if student characteristics are correlated with rank, conditional on test scores and SSC effects. Second, we test if the estimates are robust to alternate functional forms of prior achievement. Third, we examine if systematic or non-systematic measurement error in the age-11 achievement test scores would result in spurious rank effects. Finally, we address miscellaneous concerns such as school sizes, classroom variance, and the proportion of new peers at secondary school.

5.1. Rank-based primary school sorting

Our first key assumption (A1) is that a student’s rank in a subject is effectively random conditional on achievement and primary school-cohort attended. This would not be the case if parents were selecting primary schools based on the rank that their child would have. However, parents typically want to get their child into the best school possible in terms of average grades (Rothstein, 2006; Gibbons et al., 2013). This would work against any positive sorting by rank, the parents most motivated to do this would be enrolling their child in the schools with higher attainment, and so the child would have a lower rank. Regardless, in order for parents to sort on the basis of rank, they would have to know the ability of their child and of all their child’s potential peers by subject, which is unlikely to be the case when parents are making this choice when their child is only four years old.31

Appendix Section A.1 details two sets of tests which provide evidence against sorting. First, we use the LSYPE sample to show that pre-determined parental characteristics that impact future achievement, such as occupation, qualifications, or income, are uncorrelated with student rank conditional on age-11 test scores and SSC effects. This implies that parents are not selecting primary schools on the basis of rank. Second, we use the main sample to test whether pre-determined student characteristics are correlated with student rank, conditional on age-11 test scores and SSC effects. There are small correlations between student rank and characteristics; however, the coefficients are inconsistent and small. For example, using the largest treatment change possible, students ranked at the top of the class compared to the bottom are 0.8% more likely to be female and 0.8% more likely identify as a minority. To assess the cumulative effect of these small imbalances, we test whether predicted age-14 test scores are correlated with primary rank. We obtain the predicted test scores by re-estimating the main specification without the rank parameter and using the resulting parameters. We find that primary rank does have a small positive relationship with predicted test scores, albeit only about 1/70th of the magnitude of our main coefficient. A one standard deviation increase in rank increases predicted test scores by 0.001 standard deviations. These results are consistent with the fact that our main estimates in Table 3 hardly change when including student characteristics.32

In summary, it appears that parents are not choosing schools on the basis of rank, but there are small imbalances in predetermined student characteristics. These imbalances could be caused by students with certain characteristics having rank concerns during primary school (Tincani, 2015), and who therefore exert just enough effort to gain a higher rank. We return to this subject when discussing specifications that include individual effects that would absorb student competitiveness (Section 5.4) and having competitiveness as a potential mechanism (Section 7). Regardless of the sources of these imbalances, they do not significantly affect our results, as they are precisely estimated to be extremely small.

5.2. Specification checks

Recall that our second main assumption (A2) is that we have not misspecified the main equation such that it generates a spurious result. To test this, Appendix Table A.4 shows rank estimates from six specifications with increasing higher order polynomials for prior test scores. We find that once there is a cubic relationship, the introduction of additional polynomials makes no significant difference to the parameter estimate of interest. The final column replaces these polynomials with an indicator variable for each national test score percentile. With this flexible way of conditioning on prior achievement, we again see no meaningful change in the rank parameter, with an estimate of 7.543 (0.146).

Specification 1 also requires that the test score parameters be constant across schools, subjects, and cohorts, after allowing for mean shifts in outcomes through the inclusion of SSC effects. In Appendix Table A.5, we relax this requirement and allow the impact of the baseline test scores to be different by school, subject, or cohort by interacting the polynomials with the different sets of fixed effects. We again find that allowing the slope of prior test scores to vary by these groups does not significantly impact our estimates of the rank effect.33

5.3. Test scores as a measure of ability

To ensure that rank is conditionally orthogonal to potential outcomes, we account for student characteristics, SSC effects, and age-11 test scores. The intention is to have conditional test scores as an accurate measure of the underlying ability of the student. The inclusion of SSC effects accounts for any factor that impacts the growth of test scores between age 11 and age 14 at the class level. However, there may be other factors that occur during primary school that could cause these test scores to be a poor measure of ability. These sources of measurement error are a concern if they are rank preserving, as it would mean that the rank parameter could pick up the underlying student ability rather than the impact of rank itself. In the following, we outline situations where test scores do not reflect the underlying student ability due to systematic measurement error and the general case of non-systematic measurement error (noise).

5.3.1. Systematic measurement error

We consider two types of systematic measurement error in test scores that would be rank preserving. The first type is measurement error that impacts the level of measured student attainment. Such error could be generated from primary school peer effects. The second type is measurement error that impacts the spread of measured student achievement, which could be generated by primary school teachers. In either case, if these factors caused a permanent change in student ability, they would be fully accounted for by conditioning on the end-of-primary-school test scores.

The concern is that if these factors cause measurement error in observed ability, but preserve rank, then rank could pick up mismeasured ability. Appendix Section A.2 discusses the issue of primary school peer effects in more detail and provides tests to establish that the inclusion of SSC effects accounts for a range of inflated peer effects. Appendix Section A.3 sets out how teacher effects could generate a spurious correlation through increasing the spread of test scores.34 We provide three distinct tests that demonstrate teacher scaling effects are not driving the results. In doing so, we also establish that we do not need to rely on second central moment differences in classroom ability distributions to estimate the rank effect.

5.3.2. Non-systematic measurement error

When students take a test, their scores will not be a perfect representation of how well they perform academically on a day-to-day basis; they instead provide a noisy measure of ability. This type of non-systematic measurement error is potentially problematic for our estimation strategy, as it could generate a spurious relationship between student rank and gains in test scores. This is because they are both subject to the same measurement error but to different extents.

The intuition for this bias is as follows: when measurement errors are relatively large, they will impact both test scores and rank measures. Individuals with a mistakenly high (or low) test score also have a falsely high (or low) rank. Then, as we are estimating the growth in test scores, these students would have lower (higher) observed growth, which would downward bias the rank parameter. At low levels of measurement error, rankings would not change and it would be possible for the rank measure to pick up some information about ability that is lost in the test score measure.

Consider the simplest of situations where there is only one group and two explanatory variables, individual |$i's$| ability |$X_{i}^{*}$|, and class ability ranking |$R_{i}^{*}$|. Assume |$X_{i}^{*}$| cannot be measured directly, so we use a test score |$X_{i}$| as a baseline, which is a noisy measure of true ability and has measurement error |$X_{i}=l_{i}(X_{i}{}^{*},e_{i})$|. We also use this observed test score |$X_{i}$| in combination with the test scores of all others in that group |$X_{-i}$| to generate the rank of an individual, |$R_{i}=k(X_{i},X_{-i})$|. We know this rank measure is going to be measured with error |$e_{i2}$|, such that |$R_{i}=h_{i}(R_{i}^{*},e_{i2})$|. The problem is that this error, |$e_{i2}$|, is a function of their ability |$X_{i}^{*}$| and their own measurement error |$e_{i}$|, but also depends on the ability and measurement errors of all other individuals in their group (|$X_{-i}^{*}$| and |$e_{-i}$|, respectively): |$R_{i}=k(l_{i}(X_{i}^{*},e_{i}),l_{-i}(X_{-i}^{*},e_{-i}))=f(X_{i}^{*},e_{i},X_{-i}^{*},e_{-i}). $| Therefore, any particular realization of |$e_{i}$| not only causes noise in measuring |$X_{i}^{*}$|, but also in |$R_{i}^{*}$|. This means we have correlated, non-linear, and non-additive measurement error, where |$COV(e_{i},e_{i2})\ne0$|. This specific type of non-classical measurement error is not a standard situation, and it is unclear how this would impact the estimated rank parameter.35

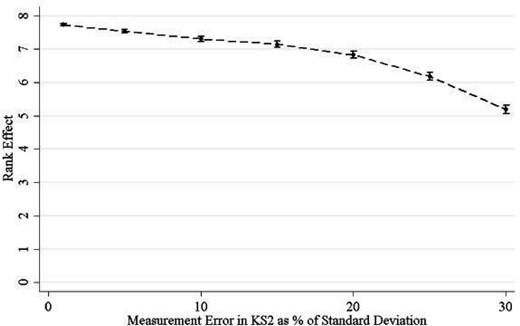

We gauge the importance of this problem by performing a data-driven bounding exercise. This involves a set of Monte Carlo simulations where we add additional measurement error drawn from a normal distribution (|$\mu=0$|) to the test score measure of each student, then recalculate student ranks and re-estimate the specification at ever-increasing variances of measurement error. In doing so, we are informed of the direction and the approximate magnitude of any measurement error-induced bias. The variance of this distribution increases from 1% of the standard deviation in test scores up to 30%. In terms of test scores, this represents an increase in the standard deviation from 0.28 to 11.2. For each measurement error distribution, we simulate the data 1000 times and estimate the rank parameter.

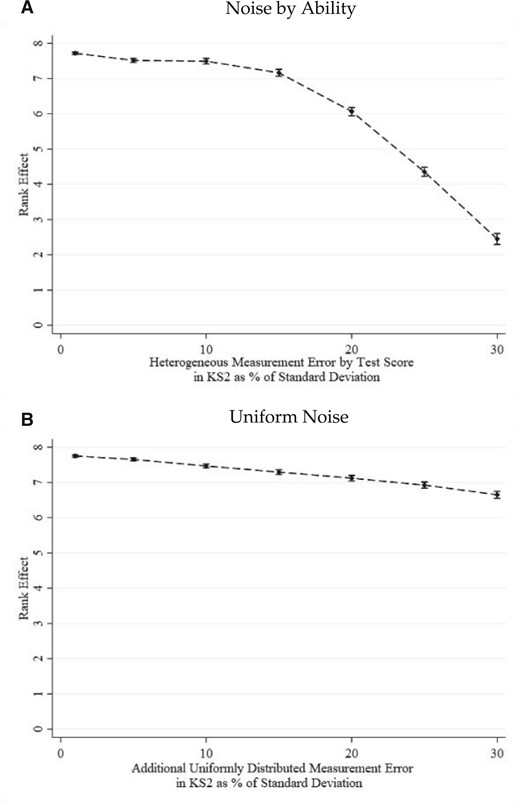

Figure 4 shows the simulated estimates of the mean and the 2.5th and 97.5th percentiles from the sampling distribution of beta for each measurement error level. We see that as measurement error increases, there is a slight downward bias. Consistent with our stated intuition, this bias grows non-linearly with measurement error. Small additional measurement error has little impact on the results. The amount of downward bias from increasing the additional error from 1% to 20% of a standard deviation amounts to the same level of bias as increasing the error from 20% to 25%.

Estimates from Monte Carlo simulations with additional measurement error in baseline test scores

Notes: This figure plots the mean rank estimate from 1000 simulations of Specification 1 with increasing additional measurement error added to student baseline test scores 7 with increasing additional measurement error added to student baseline test scores before computing ranks. The measurement error is drawn from a normal distribution with mean zero and a standard deviation that is proportional to the standard deviation in baseline test scores (28.08). The measurement error of each subject within a student is independently drawn. The error bars represent the 2.5th and the 97.5th percentiles from the sampling distribution of beta for each measurement error level.

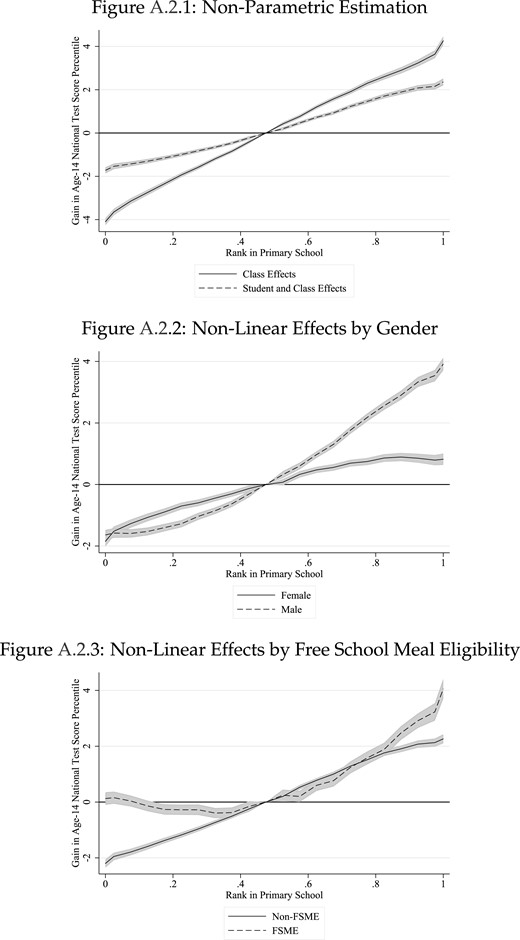

Appendix Figure A.1 repeats this process with alternative types of measurement errors. Panel A presents mean estimates from a heterogeneous measurement error process, where the impact of the normally distributed error increases as the test score is farther away from the national mean. This is reflective of the examinations potentially being less precise at extreme values. This slightly exacerbates the downward bias and the non-linearity of the bias. Next, Panel B draws the additional measurement error from the uniform distribution, which results in smaller downward bias. Given that these national tests have been designed to measure ability in a subject and additive measurement error causes only a small downward bias, we conclude that our main estimates are attenuated, at most, very little.

Finally, we show that multiplicative measurement error (rather than additive measurement error) also does not bias our results. Specifically, we consider measurement error that increases as students’ ability is further from the average. This result is dependent on the achievement distribution being uniformly distributed. With a normally distributed achievement distribution, there can be large measurement error in the tails of the distribution without changes in ranks (real or observed). As a result, the rank parameter picks up some measure of ability that has been masked by the measurement error. One way this can be undone is to transform the observed ability distribution into a uniform distribution. This means that the measurement error in observed test scores always has the same impact on student rank (in expectation), regardless of where they are in the observed ability distribution. This removes the spurious rank effect. We present data-generating processes confirming this result in Appendix Section A.4. As we use the uniformly distributed national student percentiles to rank students, we are immune from this source of bias.

5.4. Further robustness checks: within-student estimates, class size, re-mixing of students, and prior peers

To address potential remaining concerns, we present four further robustness checks. First, we have seen some student demographics predict rank conditional on prior test scores. Despite their apparent economically insignificant size, they may reflect larger unobservable differences between high- and low-ranked students. To address such concerns, we exploit the fact that we have measures for student achievement across three subjects. This allows us to estimate the main specification with the addition of student fixed effects, |$\tau_{i}^{1}$|, which is a component of the error term in Specification 1. This will account for any student-level unobservable characteristic or shock that impacts growth between age-11 and age-14 test scores, such as competitiveness, parental investment, or school investments. Note that this will also absorb any general growth in achievement across all subjects due to student rank, which should be considered part of the rank effect. This estimate is providing a lower bound on the rank effect, because it is only representing the additional gains in a subject due to the prior rank in that same subject in primary school. One could interpret it as the extent of subject specialization due to primary school rank.

| . | . | Main effect . | Effect within student . |

|---|---|---|---|

| . | . | (1) . | (2) . |

| (1) | Main specifications - benchmark | 7.894*** | 4.562** |

| 0.147 | 0.107 | ||

| (2) | Small primary schools (single class) only | 6.469*** | 3.646*** |

| 0.176 | 0.146 | ||

| (3) | Randomized school within cohort | -0.099 | -0.227 |

| 0.130 | 0.150 | ||

| (4) | No prior peers in secondary school | 10.461** | 5.011*** |

| 0.449 | 0.415 | ||

| (5) | Excluding specialist secondary schools | 7.875*** | 4.586*** |

| 0.155 | 0.112 | ||

| (6) | Accounting for secondary-cohort subject FX | 7.942*** | 4.471*** |

| 0.146 | 0.106 | ||

| Student characteristics | |$\surd$| | Abs | |

| Cubic in age 11 test scores | |$\surd$| | |$\surd$| | |

| Primary SSC effects | |$\surd$| | |$\surd$| | |

| Student effects | |$\surd$| | ||

| . | . | Main effect . | Effect within student . |