-

PDF

- Split View

-

Views

-

Cite

Cite

Hiroyuki Masaki, Hideyuki Hotta, Detection of solar internal flows with numerical simulation and machine learning, Publications of the Astronomical Society of Japan, Volume 76, Issue 6, December 2024, Pages L33–L38, https://doi.org/10.1093/pasj/psae093

Close - Share Icon Share

Abstract

The solar interior is filled with turbulent thermal convection, which plays a key role in energy and momentum transport and generation of the magnetic field. Turbulent flows in the solar interior cannot be optically detected due to its significant optical depth. Currently, helioseismology is the only way to detect the internal dynamics of the Sun. However, long-duration data with a high cadence is required and only a temporal average can be inferred. To address these issues effectively, in this study, we develop a novel method to infer solar internal flows using a combination of radiation magnetohydrodynamic numerical simulations and machine/deep learning. With the application of our new method, we can evaluate the large-scale flow at 10 Mm depth from the solar surface with three snapshots separated by an hour. We also apply the method to observational data. Our method is highly consistent with the helioseismology, although the amount of input data is significantly reduced.

1 Introduction

The solar interior is filled with turbulent thermal convection. Thermal convection has several roles in solar dynamics. It transports energy and momentum to construct the structure and large-scale flows in the solar interior, respectively (Hotta et al. 2023). Moreover, thermal convection is the origin of the solar magnetic field, which is the source of solar activities, such as sunspots, flares, and coronal mass ejections. On the surface, turbulent motion causes the Poynting flux, heats the solar corona, and drives the solar wind (Cranmer & van Ballegooijen 2005).

There have been many solar observations to date to evaluate the thermal convection on the solar surface (Bellot Rubio & Orozco Suárez 2019), the details of which are well understood. There is granulation with a typical scale of 1 Mm and supergranulation with a typical scale of 30 Mm. However, optical observations are restricted to the surface, and we cannot access any information on the solar interior due to the significantly large optical depth.

We can currently only access the solar internal dynamics by using the helioseismology method (Duvall et al. 1993). In particular, an acoustic wave is excited by the turbulent convection on the surface, and the wave propagates through the solar interior and emerges at the surface again. The oscillation observed on the surface conveys information about the solar interior. Helioseismology has revealed several essential phenomena in the solar interior, such as the precursor of sunspot emergence (Ilonidis et al. 2011) and large-scale flows in the deep convection zone (Zhao et al. 2013). Although helioseismology is a powerful tool, it still has significant limitations. This method requires long temporal data with a high cadence. Because of the nature of the stochastic excitation of the wave, the observational data include a large amount of noise. To reduce the noise and detect the flow at 3–4 Mm depth, we typically require 12 h long data with a cadence of 1 min, i.e., 720 snapshots (Sekii et al. 2007). This large amount of data increases the computational cost of helioseismology. In addition, the long-time data only provide a time-averaged result. The timescale for the near-surface layer (10 Mm) is several hours, and helioseismology cannot detect the temporal evolution of the layer.

Meanwhile, the technique of radiative magnetohydrodynamic (RMHD) simulation has been significantly improved in the last two decades to understand solar convection dynamics, and nowadays, it can reproduce the solar flows and magnetic fields quite well (Stein & Nordlund 1998; Vögler et al. 2005; Rempel et al. 2009; Hotta et al. 2019; Hotta & Toriumi 2020). These simulations are qualitatively consistent with the observations (Nordlund et al. 2009), and the simulations for the small-scale magnetic field also match the observations (Rempel 2014). There is an indication that we can reproduce the thermal convection properties in the layer shallower than 20 Mm (Lord et al. 2014).

Machine learning is an optimization method for mathematical models that is now applied in various fields. By recognizing patterns from large amounts of data, machine learning effectively solves complex problems that were difficult, improving prediction accuracy and computational efficiency, and achieving excellent outcomes across many fields. Machine learning has also been applied to solar thermal convection. DeepVel estimates horizontal thermal convection on the solar surface from two images taken 30 s apart (Asensio Ramos et al. 2017). This method is superior to other techniques for estimating horizontal flows, such as local correlation tracking (Tremblay et al. 2018). Additionally, Tremblay and Attie (2020) improve DeepVel’s performance with a U-net architecture. Ishikawa et al. (2022) examine prediction performance on different spatial scales and suggest ways to enhance the perfomance of machine learning on small spatial scales. In Masaki et al. (2023), the amount of observational data used for estimation is reduced, enabling predictions from snapshots.

In this study, we propose a novel method to infer the solar internal dynamics taking advantage of the well established RMHD simulations and the fast-growing machine learning technique. The RMHD simulation at the near surface is fairly reliable, and the neural network is trained based on our simulation result. Our method is also validated with observation data taken with the SDO/HMI (Solar Dynamics Observatory/Helioseismic and Magnetic Imager) satellite (Pesnell et al. 2012).

2 Method

2.1 RMHD simulation

We use the radiation magnetohydrodynamics simulation code R2D21 (Hotta et al. 2019; Hotta & Iijima 2020) in this study to calculate the internal convective velocity for training data. R2D2 solves the following MHD equations:

where |$\rho$|, |$\boldsymbol{v}$|, p, T, |$\boldsymbol{g}$|, |$\boldsymbol{B}$|, s, and Q represent the density, velocity, pressure, temperature, gravitational acceleration, magnetic field, entropy, and radiative heating, respectively. The pressure p is calculated from a table prepared using the OPAL equation of state (Rogers et al. 1996). The equation is solved with a fourth-order Runge–Kutta method for the time derivatives and a fourth-order accuracy for the spatial derivatives. The radiative heating Q is solved using a gray approximation. In this study, we solve only up- and downward energy transfer to reduce computational costs. See Hotta and Iijima (2020) for more details.

The computational domain is 49.152 Mm horizontally in each direction and 24.576 Mm vertically. The top boundary is located 700 km above the surface of the Sun, i.e., an optical depth of |$\tau =1$|. The horizontal grid spacing is set to 96 km. We use nonuniform grid spacings for the vertical direction. Around the photosphere, the grid spacing is 48 km, gradually increasing to about 90 km around the bottom boundary. As a result, the simulation domain is solved with |$384\times 512\times 512$| grid points. We continue each simulation for about two weeks. The data output cadence is 5 min.

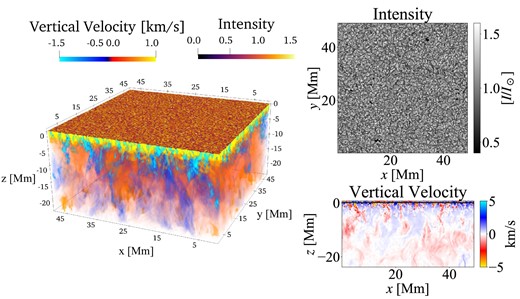

For the generality of the training data, the simulations are performed in five different initial magnetic fields. Snapshots of the simulation are shown in figure 1.

Snapshots of a simulation result. The left panel shows a three-dimensional volume rendering of the simulation. The color in the box represents the vertical velocity. The surface map on the box shows the radiative intensity. The panels on the right show the emergent radiative intensity (top) and a horizontal slice of the vertical velocity (bottom).

2.2 Data processing

In this study, we plan to apply our neural network to observational data taken from SDO/HMI. To fill the gap between the observation and the simulation, we carry out several data processing steps for the obtained data. The resolution of SDO/HMI is |${0_{.}^{\prime \prime }5}$|, corresponding to 350 km at the solar disk center. The simulation data reproduce the observation data by applying SDO’s PSF (point spread function) from Yeo et al. (2014) to the intensity, velocity field, and magnetic field. The grid spacing of our simulation is 96 km, and we average |$4 \times 4$| grids into a grid to decrease the resolution mismatch. This procedure results in an image with a grid spacing of 384 km and a horizontal number of grid points of |$128 \times 128$|.

In addition, similar to Masaki et al. (2023), the power spectrum of simulation and observation data indicates that the small-scale structures in the observation data do not match the simulation data due to the observational noise. To reduce the negative effect of this power spectrum mismatch on the learning process, we add random noise to 5% of the standard deviation of the Fourier-transformed quantities. This noise eliminates small-scale structures with high wavenumbers. Then, an inverse Fourier transform is applied to the data, which is used as the training data.

2.3 Training for neural network

We use |$128 \times 128$| pixel images (radiative intensity, LoS velocity, and LoS magnetic field at the |$\tau =1$| surface) for training as input data. Each of these inputs consists of three images taken at 1 h intervals. Thus, nine images of physical quantities with a resolution of |$128 \times 128$| pixels are input data. The LoS velocities at 5.4, 12, and 18 Mm depths from the surface with |$512 \times 512$| pixel images without any data processing are used as output data. Out of the five simulations performed with different initial conditions for the vertical magnetic field, two are used for actual training, one as validation data to monitor the training process, and the other as test data to evaluate the final network performance. Using the results of a different numerical code from the simulation code that is not used for the test data is more appropriate. However, another code is currently unavailable in our group. Therefore, the evaluated values in this study are considered as reference values. We use the intensity, vertical velocity, and vertical magnetic fields obtained from the simulation as input data.

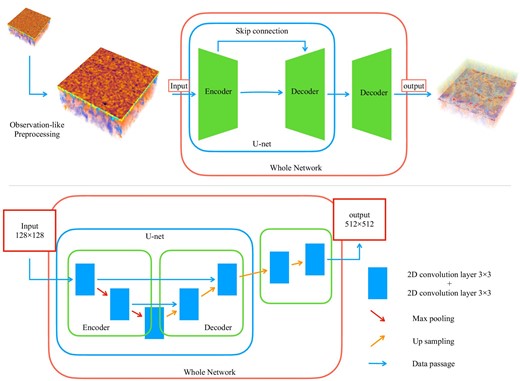

The network architecture is shown in figure 2. This network structure is based on U-net (Ronneberger et al. 2015), which has an encoder–decoder architecture that efficiently processes images. However, while typical encoder–decoder structures usually have the same input and output image sizes (Masaki et al. 2023), in this study, the pixel numbers of the input data (|$128 \times 128$|) and the output data (|$512 \times 512$|) are different. The output data is made to the simulation as closely as possible, so we use the raw simulation data. Thus, the decoder structure is extended to match the size of the output images. As a result, the output has a higher resolution than the input, and the network can be considered to have super-resolution. In the encoder, each convolutional layer allies two convolutions with a kernel size of |$3\times 3$|. This is followed by downsampling using max pooling in a |$2\times 2$| region. The number of kernels doubles with each downsampling, starting with 256 layers in the first layer. In the decoder, after similar convolutions, upsampling is performed by expanding one pixel into a |$2\times 2$| region. Skip connections are applied by concatenating tensors along the kernel dimension. Due to our GPU performance limitations, the U-net is shallower than that of Ronneberger, Fischer, and Brox (2015). The mini-batch size is four. Training is performed for eight epochs, and the model with the highest correlation coefficient on the validation data is chosen. We use mean squared error (MSE) as the loss function. We use an Adam optimizer (Kingma & Ba 2014) and the hyperparameters are the same as in Kingma and Ba (2014). We use approximately 12000 data sets for the training data, while 1000 data are used for validation. This study uses TensorFlow, and the GPU is an Nvidia RTX 2080 Ti.

Illustration of the network architecture. The top diagram is the network’s overall structure based on the U-net model used in this study. The bottom presents a detail of our structure. The blue squares represent the convolution layers. The U-net structure processes input data, two max pooling (shown by red arrows), and two upsamplings (shown by orange arrows). Then, it is upsampled again to match the resolution of the simulation.

3 Result

3.1 Comparison with simulation

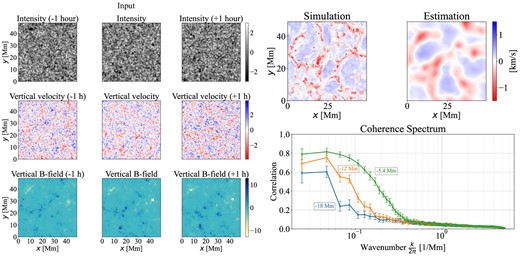

The left nine panels of figure 3 show the input data for the network. The top, middle, and bottom rows show the radiative intensity, the LoS velocity field, and the LoS magnetic field at the |$\tau =1$| surface, respectively. The middle column shows the data at the same time as the estimated velocity field in a deep layer, and the left and right columns show the data 1 h earlier and later, respectively. Each data set is normalized to have a mean of 0 and a standard deviation of 1 to improve training efficiency.

Input data and a comparison between the estimation and simulation. The left panel shows the input data for the neural network. These images represent the values normalized and processed. The top right panels show the result of the simulated and estimated velocity fields by our neural network. The bottom right panel shows the coherence spectrum between the estimated and simulated velocities in the deep convection zone. The error bars represent the 99% confidence interval.

The two top right panels of figure 3 show the LoS velocity at 12 Mm depth from the surface. The right and left panels show the numerically simulated and estimated data by our neural network. Although small-scale structures are smoothed out, large-scale convective structures are well reproduced. The correlation coefficients between the simulated and estimated values are 0.25 at a depth of 5 Mm, 0.37 at a depth of 12 Mm, and 0.37 at a depth of 18 Mm. The lower correlation coefficient at 5 Mm is due to the difficulty in matching small-scale structures because the scale of convective structures is smaller than that of 12 Mm. The reproduction of the large-scale structure can be evaluated with the coherence spectrum, which is an indicator of the agreement of image structures at each scale, with values close to 1 indicating high agreement and values close to 0 indicating no correlation. See Ishikawa et al. (2022) for a detailed definition of the coherence spectrum. The bottom left panel of figure 3 shows the coherence spectra at each depth.

We divide the wavenumber into 256 bins and choose |$\Delta k$| as an interval of |$135.36\, \mathrm{km^{-1}}$|. From figure 3, we can see that those large-scale structures match with a value of about 0.7. This result also aligns with the intuition that estimation becomes more difficult at greater depths.

3.2 Comparison with helioseismology

We compare the detection performance of the internal structure estimated by our neural network with helioseismology, a method for probing the internal structure of the Sun. We adopt a helioseismology method suggested by Gizon and Birch (2004). Performing an inversion to calculate internal velocities adds new uncertainties, and we currently cannot carry out such types of calculations. In this study, we only calculate traveltimes with phase speed filters. We evaluate the points to annular traveltime and can detect the divergent and convergent flows, which physically correspond to up- and downflows in the deep convection zone. Thus, we can compare these with our estimated velocity field. The distance between two points is 12 Mm, and we use the filter corresponding to 8.7–14.5 Mm, as shown in table 1 on page 30 of Gizon and Birch (2004). The traveltime shifts are calculated in 256 directions, evenly spaced over |$360^{\circ }$|.

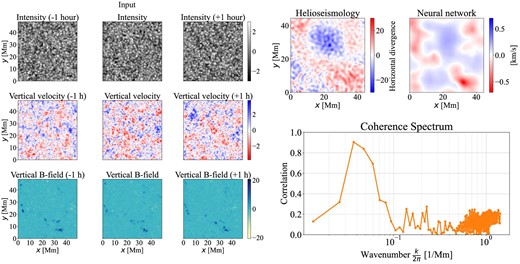

In this study, we show a comparison between the helioseismology method and the proposed neural network for observational data. Although the simulation data underwent the same process, this is not explicitly shown. The result for the simulated data is similar to the following comparison for the observational data. Figure 4 shows the results of the comparison with helioseismology for the observational data. The data are from SDO/HMI on 2011 April 5 from 00:00:45 to 23:59:45. A sample of these data is shown in the same format as figure 3 on the left side of figure 4. The results use 1919 images from a day’s worth of 45 s cadence data. Traveltime is calculated with a window interval corresponding to 12 Mm. Note that this is a comparison made using the data for one case, and it is not an error in the validated values that are not at a sufficient level of accuracy.

Helioseismology result and network estimation from observation data. The left panels show the normalized values of the observed data input into the neural network, as shown in figure 3. The top right panel compares the results from the helioseismology and the estimated velocity field. The bottom right panel depicts the coherence spectra between helioseismology and the estimation.

The top right panels of figure 4 show a comparison between the traveltime shift by the horizontal divergence (left) and the vertical velocity evaluated with our neural network (right). Because helioseismology estimates temporally average values, the network estimates are also averaged over time. The large-scale structures seem to match well. The correlation coefficient is |$-$|0.48, with a negative correlation due to the relationship between the traveltime and the velocity field signs; i.e., a negative traveltime shift corresponds to horizontal divergent flow and upflow (|$v_z\\gt 0$|). The bottom right of figure 4 shows the coherence spectra for both methods. At scales around 20 Mm, the coherence is approximately 0.8, indicating a good match between the two.

4 Summary and discussion

In this study, we propose a novel method to evaluate solar internal flows. We train a neural network to estimate the velocity of upflows in the solar interior from three observable surface quantities: radiative intensity, LoS velocity, and LoS magnetic field. The training data are generated using the radiation magnetohydrodynamics simulation code R2D2, which reproduces internal convection in the Sun. Even with the small amount of data for the input, we nicely reproduce the overall velocity structure in the deep layer.

A comparison with helioseismology using observational data shows a good correlation on a large scale, indicating that the estimation of the network velocity field does not significantly contradict reality. The neural network reproduces small-scale structures slightly better than helioseismology using the simulation data. This method could be better with small-scale structures. Even if the resolution of the output images is reduced, similar predictions can be made for large scales. This may be beneficial in terms of computational speed and data efficiency. However, even with high-resolution data for training, the accuracy does not improve. Drastic performance improvements are not expected with the current method, even with advances in observational technology.

In this study, a 1 h time interval is used for the input. The typical convection time and propagating time to the observable surface should depend on depth. Thus, there may be an optimal time interval for the target depth. Although using a higher cadence for data input can improve performance, increasing the data about 10 times will not lead to drastic improvements whereas it increases computational costs. Thus, appropriate input must be sought according to the purpose. This study uses simulations with resolution matched to SDO/HMI data as input data. Separately, even when training using the raw simulation data as input, the correlation coefficient does not significantly improve at depths shallower than 5 Mm. Therefore, even if observational technology advances and the accuracy of observational data improves, drastic performance improvements cannot be expected with this method.

We apply our neural network to disk center data in this study. When we extend our method to other regions, solar global internal flow can be estimated, and we could possibly predict the emergence of rising flux tubes in the future.

Funding

This work was supported by JST SPRING, Grant Number JPMJSP2109. The results were obtained using Cray XC50 at the Center for Computational Astrophysics, National Astronomical Observatory of Japan. This work was supported by MEXT/JSPS KAKENHI (grant nos. JP23K25906, JP21H04497, and 21H04492) and by MEXT as “Program for Promoting Researches on the Supercomputer Fugaku” (Elucidation of the Sun-Earth environment using simulations and AI; Grant Number JPMXP1020230504).

Data availability

The simulation code and data underlying this article will be shared on reasonable request to the corresponding author. The machine learning code and trained models are publicly available at |$\\lesssimngle$|https://github.com/HiroyukiMasaki/Solar_Interior|$\rangle$|.

Footnotes

Radiation and RSST for Deep Dynamics; where RSST stands for the reduced speed of sound technique (Hotta et al. 2012), which we turned off in this study.