
Ichiro Takahashi, Nao Suzuki, Naoki Yasuda, Akisato Kimura, Naonori Ueda, Masaomi Tanaka, Nozomu Tominaga, Naoki Yoshida, Photometric classification of Hyper Suprime-Cam transients using machine learning, Publications of the Astronomical Society of Japan, Volume 72, Issue 5, October 2020, 89, https://doi.org/10.1093/pasj/psaa082


Abstract

The advancement of technology has resulted in a rapid increase in supernova (SN) discoveries. The Subaru/Hyper Suprime-Cam (HSC) transient survey, conducted from fall 2016 through spring 2017, yielded 1824 SN candidates. This gave rise to the need for fast type classification for spectroscopic follow-up and prompted us to develop a machine learning algorithm using a deep neural network with highway layers. The algorithm is trained on the actual observed cadence and filter combinations, so that the observed data array can be input directly without any interpretation. We tested our model with a dataset from the LSST classification challenge (Deep Drilling Field). Our classifier scores an area under the curve (AUC) of 0.996 for binary classification (SN Ia or non-SN Ia) and 95.3% accuracy for three-class classification (SN Ia, SN Ibc, or SN II). Application of our binary classification to HSC transient data yields an AUC score of 0.925. With two weeks of HSC data after the first detection, this classifier achieves 78.1% accuracy for binary classification, and the accuracy increases to 84.2% with the full dataset. This paper discusses the potential use of machine learning for SN type classification.

1 Introduction

Time domain science has become a major field of astronomical study. The discovery of the accelerating universe (Riess et al. 1998; Perlmutter et al. 1999) motivated a series of large supernova (SN) surveys in recent decades (Betoule et al. 2014; Scolnic et al. 2018; Brout et al. 2019). These surveys revealed an entirely new family of transients, and the search for unknown populations is currently of great interest (Howell et al. 2006; Phillips et al. 2007; Quimby et al. 2007). For precision cosmology, it is essential to maintain the purity of Type Ia supernovae (SNe Ia) while sampling these SNe uniformly from bright to faint. Spectroscopic follow-up has been essential to distinguish a faint SN Ia from a Type Ib supernova (SN Ib) or a Type Ic supernova (SN Ic), which have similar light curves (Scolnic et al. 2014). Because a large amount of precious telescope time is dedicated to these follow-up programs, it is desirable to use these telescopes efficiently.

Surveys are growing in scale, and it has become impossible to trigger spectroscopic follow-up for all of the candidates in real time. A new classification scheme is therefore necessary, and a natural path forward is photometric classification (Sako et al. 2011; Jones et al. 2018). With the rise of machine learning technologies, astronomical big data are now commonly analyzed with machine learning techniques.

Neural networks were applied to photometric redshift estimation early on, and today many machine learning methods are used for photometric redshifts (Collister & Lahav 2004; Carliles et al. 2010; Pasquet et al. 2019). Deep neural networks (DNNs) are being used to process imaging data to identify strong lens systems (Petrillo et al. 2017) and to classify galaxy morphology (Hausen & Robertson 2020). Machine learning is now being introduced to process transient survey data for detection (Goldstein et al. 2015) and classification (Charnock & Moss 2017). A recurrent autoencoder neural network (RAENN) was introduced for photometric classification of SN light curves (Villar et al. 2020) and has been applied to 2315 Pan-STARRS1 SNe (Hosseinzadeh et al. 2020). In preparation for the Vera C. Rubin Observatory, a real-time classifier, ALeRCE (Automatic Learning for the Rapid Classification of Events), is being developed (Sánchez-Sáez et al. 2020; Förster et al. 2020) and is currently applied to Zwicky Transient Facility (ZTF) data (Carrasco-Davis et al. 2020).

In this paper we present our attempt to apply machine learning to actual transient survey data. The Hyper Suprime-Cam (HSC; Miyazaki et al. 2018; Komiyama et al. 2018; Kawanomoto et al. 2018; Furusawa et al. 2018), a gigantic mosaic charge-coupled device (CCD) still camera mounted on the 8.2 m Subaru Telescope, makes it possible to survey a wide field (1.77 deg² field of view) to great depth (26th mag in the i band per epoch). Our primary scientific goals are SN Ia cosmology, Type II supernova (SN II) cosmology, and super-luminous supernova (SLSN) studies, as well as probing unknown populations of transients. As reported recently (Yasuda et al. 2019), more than 1800 SNe were discovered during a six-month campaign. We deployed machine learning (area under the curve (AUC) boosting) for transient detection (Morii et al. 2016), in which the machine determines whether a transient is “real” or “bogus.” In Kimura et al. (2017), we adopted a DNN for SN type classification from two-dimensional images and introduced highway blocks to optimize the layers. This research extends that previous work (Kimura et al. 2017) by applying a DNN to the photometric classification of transients. A distinctive feature of our approach is that we use the observed data in as “raw” a state as possible, feeding them directly to the machine without fitting the data or extracting features.

The structure of this paper is as follows. We introduce our methods in section 2, and the data in section 3. The design of our DNN model and its application to pseudo-real LSST simulation data is described in section 4. Section 5 presents the application of our model to the actual Subaru/HSC data. We discuss the results in section 6 and conclude the paper in section 7.

2 Methods

2.1 Tasks in HSC survey

The Subaru HSC Transient Survey forms part of the Subaru Strategic Program (SSP), which spans five years and a total of 300 dark nights (Aihara et al. 2018a; Miyazaki et al. 2018). The HSC-SSP Transient Survey consists of two seasons, the first of which ran for six consecutive months from 2016 November through 2017 April. HSC is mounted at the prime focus for two weeks during dark time. Weather permitting, we aimed to obtain two data points per filter per lunation. Details of the survey strategy and the observing logs are reported in Yasuda et al. (2019), and we used the observed photometric data described there.

One of our primary goals with the HSC-SSP Transient Survey is SN Ia cosmology, which aims to make the most precise measurement of dark energy at high redshift and to test whether dark energy varies with time (Linder 2003). We have been awarded 96 orbits of Hubble Space Telescope (HST) time (WFC3 camera) to obtain precise infrared (IR) photometry at the time of maximum. Our HST program uses non-disruptive target of opportunity (ToO) observations, which means that the observation request must be sent two weeks before the observation. In other words, we need to identify good candidates two to three weeks before maximum. Although cosmological time dilation at high redshift ($z > 1$) helps, identifying an SN Ia while it is still rising is always a challenge.

Our international collaboration team executes spectroscopic follow-up using large telescopes around the world. Our targets, high-redshift SNe Ia, are faint (∼24th mag in the i band), which makes spectroscopic identification difficult even with the most powerful large telescopes: GMOS Gemini (Hook et al. 2004), GTC OSIRIS,1 Keck LRIS (Oke et al. 1995), VLT FORS (Appenzeller et al. 1998), Subaru FOCAS (Kashikawa et al. 2002), and the AAT AAOmega Spectrograph (Saunders et al. 2004). Thus, it is critical to observe each target at maximum brightness, using ToO (GTC), queue mode (VLT), or classically scheduled observations (Keck, Subaru, AAT).

An SN Ia takes approximately 18 d from explosion to reach maximum in the rest frame (Conley et al. 2006; Papadogiannakis et al. 2019). Because the SNe we are concerned with are high-redshift SNe Ia ($z > 1$), time dilation stretches this to approximately one month in the observed frame between explosion and maximum. However, our task is to identify these SNe two weeks before maximum, which leaves us with only two data points per filter. In addition, sky conditions change continually, and we may not obtain the data as originally planned. In practice, we must classify SNe even when data points on the rise are missing.
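The timing argument above is simple arithmetic. A minimal sketch, assuming only the 18 d rest-frame rise time quoted above and a trigger deadline 14 d before maximum (the two-week HST lead time); the function names are hypothetical:

```python
# Rest-frame rise time of a normal SN Ia from explosion to maximum (days), from the text.
REST_FRAME_RISE_DAYS = 18.0
# HST non-disruptive ToO requests must be sent about two weeks in advance.
TRIGGER_LEAD_DAYS = 14.0


def observed_rise_days(z: float, rest_days: float = REST_FRAME_RISE_DAYS) -> float:
    """Observed-frame rise time: cosmological time dilation stretches it by (1 + z)."""
    if z < 0:
        raise ValueError("redshift must be non-negative")
    return rest_days * (1.0 + z)


def days_before_trigger(z: float, lead_days: float = TRIGGER_LEAD_DAYS) -> float:
    """Days between explosion and the trigger deadline, lead_days before maximum."""
    return observed_rise_days(z) - lead_days


for z in (1.0, 1.5):
    print(f"z = {z}: rise {observed_rise_days(z):.0f} d, "
          f"{days_before_trigger(z):.0f} d of data before the trigger")
```

For $z$ = 1 this gives an observed rise of 36 d, of which only about 22 d elapse before the trigger must be sent; with two epochs per filter per lunation, that window holds roughly two points per filter.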

In parallel with the SN Ia cosmology program, our sister projects also need identification and classification of HSC transients. Specifically, the SN II cosmology program requires timely spectroscopic follow-up to measure the expansion velocity of the photosphere from the Hβ line (de Jaeger et al. 2017). SLSNe are of great interest today because they are relatively rare events (Quimby et al. 2011) with diverse possible origins (Gal-Yam 2012; Moriya et al. 2018), and they can be used to probe the high-redshift Universe (Cooke et al. 2012). HSC has also discovered new types of rapid transients whose nature is not yet known (Tampo et al. 2020). For these projects, timely spectroscopic follow-up is also critical (Moriya et al. 2019; Curtin et al. 2019).

An early-phase SN Ia provides us with clues to the explosion mechanism (Maeda et al. 2018) and progenitors (Cao et al. 2015). The advantage of HSC is its ability to survey a large volume, and in practice it has confirmed the long-standing theoretical prediction of helium-shell detonation (Jiang et al. 2017). Finding an early-phase SN Ia is not trivial, but HSC is yielding a new set of early-phase SNe Ia (Jiang et al. 2020). Observations of early-phase core-collapse SNe provide us with crucial information on the sizes of the progenitors (Thompson et al. 2003; Tominaga et al. 2011) and the circumstellar medium (Förster et al. 2018).

2.2 Classification method for the HSC-SSP transient survey

We designed two machine learning models with the emphasis on identifying SN Ia, which requires a time-sensitive trigger for HST IR follow-up. The first model operates in binary mode and classifies whether a transient is an SN Ia. In this regard, the majority of high-redshift transients are known to be of the SN Ia type, and our work entails searching for other unknown transients from among those labeled non-SN Ia. The second model classifies a transient into one of three classes: SN Ia, SN Ibc, or SN II. These three classes were chosen for simplicity, and in fact the majority of SNe belong to one of these three categories. SN Ia is a thermonuclear explosion, and its brightness can be calibrated empirically. SN Ib, SN Ic, and SN II are all core-collapse SNe and are classified by their spectral features (Filippenko 1997). The light curves of SN Ib and SN Ic, which are collectively referred to as SN Ibc, are similar to those of SN Ia and always contaminate the SN Ia cosmology program. They are fainter than SN Ia and redder in general. A major challenge of this work is to determine whether we can distinguish SN Ibc from SN Ia.

3 Data

In this section we present the dataset we used for our study. We first introduce our SN dataset from the HSC-SSP Transient Survey (subsection 3.1). Then we describe the simulated photometric data to train the machine (subsection 3.2). Lastly, we explain the preprocessing of the above data for input into the machine (subsection 3.3).

3.1 Observed data from the Subaru/HSC-SSP transient survey

The goal of this project is to classify the light curves observed by Subaru/HSC. Yasuda et al. (2019) described the discovery of 1824 SNe during the six-month HSC-SSP Transient Survey, from 2016 November through 2017 April. The survey consists of the Ultra-Deep and Deep layers in the COSMOS field (Scoville et al. 2007). For the Ultra-Deep layer, the median 5 σ limiting magnitudes per epoch are 26.4, 26.3, 26.0, 25.6, and 24.6 mag (AB) in the g, r2, i2, z, and y bands, respectively. The Deep layer is 0.6 mag shallower.

The SN dataset consists of time-series photometric data (flux, magnitude, and their errors) in each band for each SN. Because part of the y-band photometric data contains residuals from improper background subtraction caused by scattered light (Aihara et al. 2018b), we excluded the y-band data from our study to avoid degrading the classification performance.

The redshifts of the HSC SNe combine our follow-up observation results with catalogs from multiple surveys of the host galaxies. Spectroscopic redshifts (spec-$z$) are taken from follow-up spectroscopy with AAT/AAOmega performed in 2018 and from the DEIMOS (Hasinger et al. 2018), FMOS-COSMOS (Silverman et al. 2015), C3R2 (Masters et al. 2017), PRIMUS (Coil et al. 2011), and COSMOS catalogs. For SNe without spec-$z$, photometric redshifts (photo-$z$) were adopted from the COSMOS 2015 catalog (Laigle et al. 2016) and from those calculated from the HSC-SSP survey data (Tanaka et al. 2018).

3.2 Simulated data for training

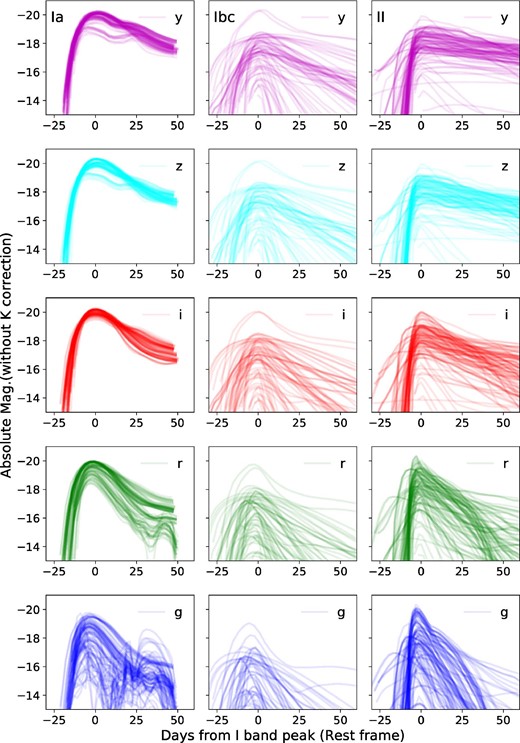

We need simulated photometric data to train the machine. For normal SNe Ia, we used the SALT2 model (Guy et al. 2010), version 2.4, which requires two input parameters: c for color and x1 for stretch. We adopted asymmetric Gaussian distributions for c and x1 from Mosher et al. (2014) and generated light curves and simulated photometric data points based on the observation schedule. For the other SN types, we used the published spectral time series from Kessler et al. (2019), which contain both observed core-collapse SN data and simulated light curves. We combined equal numbers of SN Ia and non-SN Ia events to approximate the observed fractions. Although these fractions need not be exact for our classification, it is important to avoid an unbalanced dataset. For the three-class classification, we set the ratio SN Ia : SN Ibc : SN II = 10 : 3 : 7. The simulated SNe were distributed from $z$ = 0.1 to $z$ = 2.0 following the redshift distribution of galaxies from the COSMOS survey (Laigle et al. 2016). Throughout this paper we use a ΛCDM cosmology with Ωm = 0.3 and h = 0.7. We used the filter responses, including system throughput, from Kawanomoto et al. (2018). Examples of simulated photometric data are shown in figure 1.
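Placing a template at a given redshift requires the distance modulus for the adopted cosmology. The sketch below assumes a spatially flat ΛCDM model (ΩΛ = 1 − Ωm; the text quotes only Ωm = 0.3 and h = 0.7) and integrates the comoving distance with Simpson's rule. It is an illustration, not the authors' simulation code, and the function names are hypothetical:

```python
import math

C_KM_S = 299792.458  # speed of light in km/s


def luminosity_distance_mpc(z, omega_m=0.3, h=0.7, n_steps=1000):
    """Luminosity distance (Mpc) in an assumed flat LambdaCDM cosmology, for z > 0."""
    omega_l = 1.0 - omega_m                  # flatness assumption
    hubble_distance = C_KM_S / (100.0 * h)   # c / H0 in Mpc

    def inv_e(zp):
        # 1 / E(z) with E(z) = H(z) / H0
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_l)

    if n_steps % 2:
        n_steps += 1  # composite Simpson's rule needs an even number of intervals
    step = z / n_steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, n_steps):
        total += (4 if i % 2 else 2) * inv_e(i * step)
    comoving = hubble_distance * total * step / 3.0
    return (1.0 + z) * comoving


def distance_modulus(z, **kwargs):
    """mu = 5 log10(D_L / 10 pc), with D_L converted from Mpc to pc."""
    return 5.0 * math.log10(luminosity_distance_mpc(z, **kwargs) * 1e6 / 10.0)
```

An absolute magnitude M then maps to an apparent magnitude m = M + μ(z), ignoring K-corrections and extinction.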

Overlay plots of simulated light curves with $z$ between 0.1 and 1.2. Columns show SN Ia, Ibc, and II data from left to right, and rows show the g, r2, i2, z, and y bands from bottom to top. The spread of the curves in each panel reflects the different parameters and templates used in the simulation. No noise component was added to these light curves. (Color online)

A complication in simulating realistic data is that the machine does not accept expected errors as input, or, alternatively, we have not identified a good method for including them. In this study, we therefore used brute force: we placed the expected photometric error on top of the simulated data, so that each simulated data point behaves like one of many possible realizations. The size of the HSC errors that the simulation must reproduce varies from night to night with sky conditions. We therefore derived the flux vs. error relationship from the actual observed data at each simulated epoch and applied that relationship to the simulated photometric data.
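The brute-force error model can be sketched as follows. We assume, for illustration only, that the per-epoch variance is linear in flux (a sky term plus a Poisson-like term); the paper does not state the functional form it fitted, and the function names are hypothetical:

```python
import numpy as np


def fit_flux_error_relation(obs_flux, obs_err):
    """Least-squares fit of err^2 = a + b * flux to one epoch's observed photometry.

    The linear-variance form is an assumption made for this sketch.
    """
    obs_flux = np.asarray(obs_flux, dtype=float)
    obs_err = np.asarray(obs_err, dtype=float)
    b, a = np.polyfit(obs_flux, obs_err ** 2, deg=1)  # highest power first
    return a, b


def add_observational_noise(sim_flux, a, b, rng):
    """Return one noisy realization of the simulated fluxes and the sigma used."""
    sim_flux = np.asarray(sim_flux, dtype=float)
    # Negative simulated fluxes contribute no source noise; only the sky term remains.
    variance = np.clip(a + b * np.clip(sim_flux, 0.0, None), 1e-12, None)
    sigma = np.sqrt(variance)
    return sim_flux + rng.normal(0.0, sigma), sigma
```

Fitting one relation per observed epoch lets the simulated noise follow the night-to-night changes in sky conditions described above.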

Guided by the “accuracy” (subsection 4.2), we determined the number of light curves required for training. Our convergence test shows that more than 100000 light curves are needed (figure 2). Because our detection criterion was 5 σ, we omitted light curves with less than a 3 σ detection at maximum. For training on the HSC observed data, we generated 514954 light curves. After these criteria were applied, the class ratio is SN Ia : Ibc : II = 0.59 : 0.07 : 0.34, and the peak times are randomly shifted over a range of 450 d.
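The selection cut and the random peak placement might look like this. The 3 σ threshold at maximum is from the text; reading the 450 d shift as a uniform draw within a 450 d window is our assumption, as are the function names:

```python
import numpy as np


def passes_peak_snr_cut(flux, flux_err, threshold=3.0):
    """Keep a simulated light curve only if its best epoch reaches `threshold` sigma."""
    snr = np.asarray(flux, dtype=float) / np.asarray(flux_err, dtype=float)
    return bool(np.max(snr) >= threshold)


def random_peak_times(n, window_days=450.0, start_mjd=0.0, rng=None):
    """Draw peak dates uniformly within a window (assumed reading of the 450 d shift)."""
    rng = np.random.default_rng() if rng is None else rng
    return start_mjd + rng.uniform(0.0, window_days, size=n)
```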

Convergence test to determine the number of light curves that would need to be generated to train the machine. The solid line shows the mean accuracy of five classifiers. The shaded area shows the standard deviation of the classifiers, which were trained with five-fold cross validation using the training dataset. The results indicate that more than 100000 light curves would be required for training. (Color online)

3.3 Preprocessing of input data

4 Deep neural network classifier

With the rise of Big Data, machine learning techniques have come to play a critical role in the analysis of astronomical data. Techniques such as random forests, support vector machines, and convolutional neural networks have been used for photometric data analysis (Pasquet et al. 2019), galaxy classification (Hausen & Robertson 2020), and spectral classification (Garcia-Dias et al. 2018; Muthukrishna et al. 2019b; Sharma et al. 2020).

In our work, we seek to classify SNe from photometric data. Our approach uses the observed data without preprocessing or parameterization, which deep learning makes possible. We decided to test the extent to which deep learning could provide useful results without extracting features such as color, light-curve width, and peak magnitude.

Going one step further, we keep the observed data as raw as possible, so that our input is a simple array of magnitudes. Such an attempt would not have been possible ten years ago, but advances in computing and deep learning techniques have made it a reality. Among the many machine learning methods, we chose a DNN so that we could classify astronomical objects from the raw observed data.

4.1 Model design

In this section we describe the design of our DNN model,2 which takes an array of observed magnitudes as input and outputs the SN classification with probabilities. We adopted the highway layer (also known as a “layer in layer”: Srivastava et al. 2015a) as the core of our network. Compared with a plain DNN, a network built from highway layers is easier to optimize when it is deep (Srivastava et al. 2015b).
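For readers new to highway layers, the sketch below follows the original formulation of Srivastava et al. (2015): a transform gate T(x) blends a nonlinear transform H(x) with the unchanged input. The tanh choice for H and the weight shapes are illustrative assumptions, and the exact wiring in figure 3 may differ:

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def highway_layer(x, w_h, b_h, w_t, b_t, activation=np.tanh):
    """One highway layer: y = H(x) * T(x) + x * (1 - T(x)).

    x: (batch, D); w_h, w_t: (D, D); b_h, b_t: (D,). Input and output widths
    must match because the carry path passes x through unchanged.
    """
    h = activation(x @ w_h + b_h)  # candidate transform H(x)
    t = sigmoid(x @ w_t + b_t)     # transform gate T(x), between 0 and 1
    return h * t + x * (1.0 - t)   # carry gate is 1 - T(x)
```

With the gate bias strongly negative the layer simply carries its input through, which is what keeps deep stacks of highway layers trainable.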

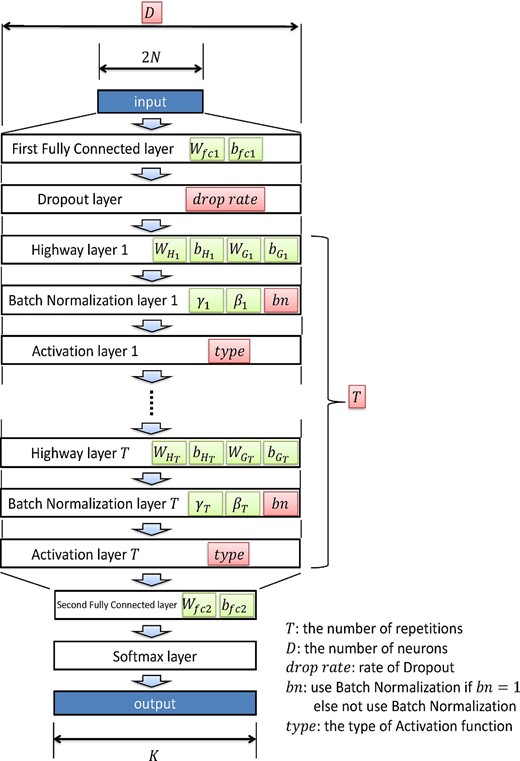

Like other DNN models, this model goes through a training and validation process to optimize its parameters, and we describe the steps below. Our terminology is standard in the DNN literature, but because this approach is new to the astronomical community, we explain each step in detail. The architecture of our model is summarized in figure 3. Ultimately, each SN is assigned a probability of belonging to each astrophysical class, in our case, each SN type.

Architecture of the deep neural network classifier. The green boxes are parameters optimized by the gradient descent method during training. The red boxes are hyperparameters that are optimized during the hyperparameter search. The batch normalization layer has four variables (μ, σ2, γ, and β), where μ and σ2 are intended to learn the statistics (mean and variance) of the value through the layer, respectively, and γ and β are scale and shift parameters, respectively, to adjust the output. Note that μ and σ2 are not updated by gradient descent; instead, they are updated by the moving average. They were omitted from the figure for simplicity. (Color online)

4.1.1 Input

4.1.2 First fully connected layer

4.1.3 Dropout layer

To obtain robust results, it is best to train the neurons as an ensemble and to avoid a situation in which any single neuron adversely affects the result. Dropout randomly drops neurons from training, and the dropout rate can be optimized as one of the hyperparameters (Srivastava et al. 2014).
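Dropout can be written in a few lines. The sketch below uses the common “inverted” variant, which rescales the surviving activations during training so that nothing changes at inference time; it is not necessarily the exact TensorFlow call the authors used:

```python
import numpy as np


def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate`, rescale survivors.

    Rescaling by 1 / (1 - rate) keeps the expected activation unchanged, so the
    layer is a no-op at inference time.
    """
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)
```

The rate passed here is the quantity searched over as “Drop rate” in table 1.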

4.1.4 Highway layer

4.1.5 Batch normalization layer

We adopt batch normalization (Ioffe & Szegedy 2015) to accelerate and stabilize the optimization. Even when a large number of parameters need to be trained, batch normalization facilitates convergence of the training process, reduces errors in the gradient during cross-entropy minimization, prevents the mean and variance of the activations from growing exponentially in deep layers, and reduces biases in the outputs (Bjorck et al. 2018). Batch normalization is effective in many cases; however, the performance of a model that uses both batch normalization and dropout may degrade (Li et al. 2019).
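The four batch-normalization variables named in the caption of figure 3 map directly onto code: γ and β are trainable, whereas μ and σ² are updated only by a moving average. A minimal NumPy sketch, in which the momentum value is an arbitrary assumption:

```python
import numpy as np


class BatchNorm:
    """Batch normalization with moving-average statistics (Ioffe & Szegedy 2015)."""

    def __init__(self, dim, momentum=0.99, eps=1e-5):
        self.gamma = np.ones(dim)         # scale, trained by gradient descent
        self.beta = np.zeros(dim)         # shift, trained by gradient descent
        self.moving_mean = np.zeros(dim)  # mu: updated by moving average only
        self.moving_var = np.ones(dim)    # sigma^2: updated by moving average only
        self.momentum = momentum
        self.eps = eps

    def __call__(self, x, training=True):
        if training:
            # Normalize with the current batch and update the running statistics.
            mean, var = x.mean(axis=0), x.var(axis=0)
            m = self.momentum
            self.moving_mean = m * self.moving_mean + (1.0 - m) * mean
            self.moving_var = m * self.moving_var + (1.0 - m) * var
        else:
            # At inference, use the accumulated statistics instead.
            mean, var = self.moving_mean, self.moving_var
        x_hat = (x - mean) / np.sqrt(var + self.eps)
        return self.gamma * x_hat + self.beta
```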

4.1.6 Activation layer

Each neuron is activated by a nonlinear transformation. Nonlinearity is an important component of a DNN because it allows a wide variety of expressions. Most layers, including the fully connected layers, perform linear transformations, and however many such layers are stacked, the result is equivalent to a single linear transformation. A nonlinear transformation is therefore essential to give each neuron the freedom to take any necessary value. Because we do not know in advance which transformation is best, the transformation itself is treated as one of the hyperparameters. In our work, we used the functions in “tf.nn” of TensorFlow.
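The claim that stacked linear layers collapse into one can be checked numerically. The dictionary holds the activation types from table 1; relu, sigmoid, and tanh correspond to `tf.nn.relu`, `tf.nn.sigmoid`, and `tf.nn.tanh` in TensorFlow, but this NumPy version is only an illustration:

```python
import numpy as np

# Candidate activation types from table 1, written in NumPy for illustration.
ACTIVATIONS = {
    "identity": lambda x: x,
    "relu": lambda x: np.maximum(x, 0.0),
    "sigmoid": lambda x: 1.0 / (1.0 + np.exp(-x)),
    "tanh": np.tanh,
}

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 3))
w1, b1 = rng.normal(size=(3, 4)), rng.normal(size=4)
w2, b2 = rng.normal(size=(4, 2)), rng.normal(size=2)

# Two stacked linear layers ...
two_layers = (x @ w1 + b1) @ w2 + b2
# ... equal one linear layer with W = W1 W2 and b = b1 W2 + b2.
one_layer = x @ (w1 @ w2) + (b1 @ w2 + b2)
print(np.allclose(two_layers, one_layer))  # True

# A nonlinearity between the layers breaks the equivalence.
relu_net = ACTIVATIONS["relu"](x @ w1 + b1) @ w2 + b2
print(np.allclose(relu_net, one_layer))
```

Choosing “identity” in table 1 therefore lets the search test whether nonlinearity helps at all.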

4.1.7 Second fully connected layer

After T repetitions of the highway layer, batch normalization layer, and activation layer, the neurons must be converted into one output per SN class for each SN, so that the output has (number of SNe) × (number of SN classes) values. This operation is the reverse of that of the first fully connected layer.
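Putting the layers together, a shape-level sketch of the forward pass is shown below. It assumes that D in table 1 is the width of the hidden layers and that the transform inside the highway layer is linear, with the activation applied afterwards (following the layer order in figure 3); batch normalization and dropout are omitted, and n_inputs = 84 and the helper names are hypothetical:

```python
import numpy as np


def softmax(z):
    """Row-wise softmax; subtracting the row maximum avoids overflow."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def dense(n_in, n_out, rng):
    """Random weights and zero biases for a fully connected layer."""
    return rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out)


def init_params(n_inputs, D, K, T, rng):
    params = {"in": dense(n_inputs, D, rng), "out": dense(D, K, rng), "highway": []}
    for _ in range(T):
        w_h, b_h = dense(D, D, rng)
        w_t, b_t = dense(D, D, rng)
        # A negative gate bias initially favors carrying the input through.
        params["highway"].append((w_h, b_h, w_t, b_t - 1.0))
    return params


def forward(x, params, activation=np.tanh):
    """FC -> T x (highway -> activation) -> FC -> softmax.

    x has shape (n_sne, n_inputs); the result has shape (n_sne, K).
    """
    w_in, b_in = params["in"]
    h = x @ w_in + b_in                                  # first FC layer: n_inputs -> D
    for w_h, b_h, w_t, b_t in params["highway"]:
        gate = 1.0 / (1.0 + np.exp(-(h @ w_t + b_t)))    # transform gate
        h = (h @ w_h + b_h) * gate + h * (1.0 - gate)    # highway layer
        h = activation(h)                                # activation layer
    w_out, b_out = params["out"]
    return softmax(h @ w_out + b_out)                    # second FC layer: D -> K


rng = np.random.default_rng(0)
params = init_params(n_inputs=84, D=100, K=3, T=3, rng=rng)
probs = forward(rng.normal(size=(16, 84)), params)
print(probs.shape)  # (16, 3)
```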

4.1.8 Softmax layer

4.2 Hyperparameter search

We perform a hyperparameter search by combining grid search and the tree-structured Parzen estimator (TPE) algorithm (Bergstra et al. 2013). Although grid search is unsuitable for searching a high-dimensional space, it has the advantage of evaluating multiple points in parallel. In addition, because the algorithm is simple, it lets us carry knowledge acquired in preliminary experiments over to parameter initialization. The TPE algorithm, on the other hand, is suitable for searching a high-dimensional space but has the disadvantage of not knowing where to start. We therefore first ran a preliminary grid search and used the resulting hyperparameter values as input for the TPE algorithm. The ranges in which we searched for the hyperparameters are given in table 1.
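The first, grid-search stage over the table 1 grid can be sketched in pure Python. The `evaluate` callback, which would train a model and return its validation accuracy, is a hypothetical placeholder, and using the two end points of the drop-rate interval as grid values is our assumption:

```python
import itertools

# Grid values from table 1. The drop-rate grid is given there as an interval,
# so its two end points stand in for the grid values here (an assumption).
GRID = {
    "D": [100, 300],
    "T": [1, 3, 5],
    "bn": [True],
    "drop_rate": [5e-3, 0.035],
    "type": ["identity", "relu", "sigmoid", "tanh"],
}


def grid_points(grid):
    """Yield every combination of grid values as a dict."""
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


def grid_search(grid, evaluate):
    """Score every grid point and return (accuracy, params) pairs, best first.

    `evaluate` is a hypothetical callback that trains a model with the given
    hyperparameters and returns its validation accuracy.
    """
    scored = [(evaluate(params), params) for params in grid_points(grid)]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored


print(sum(1 for _ in grid_points(GRID)))  # 48
```

The best grid points could then seed the TPE stage; with the Optuna library, for example, they can be queued with `Study.enqueue_trial` before sampling with `optuna.samplers.TPESampler`. This is one possible tool, not necessarily the one the authors used.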

| Hyperparameter | Value (grid) | Range (TPE) |
|---|---|---|
| D | {100, 300} | 50, …, 1000 |
| T | {1, 3, 5} | 1, …, 5 |
| bn | {true} | {true, false} |
| Drop rate | [5 × 10⁻³, 0.035] | [5 × 10⁻⁴, 0.25] |
| Type | {identity, relu, sigmoid, tanh} | |


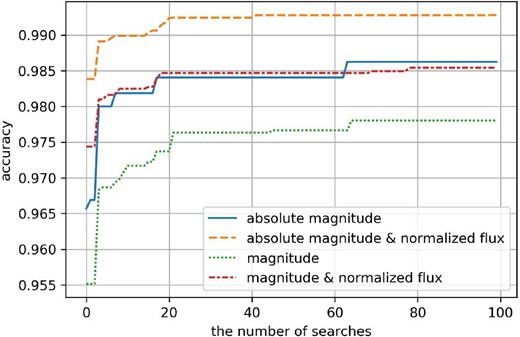

Following the usual approach, we divided the dataset into training and validation datasets. We used the training data to optimize the DNN and the validation data to measure the accuracy. The hyperparameters were optimized to maximize the accuracy on the validation dataset. This process was repeated 100 times, by which point the accuracy had converged to its maximum (figure 4).

Result of iterative hyperparameter search (100 cycles) showing its convergence to its maximum performance in terms of accuracy. The task involved binary classification. (Color online)

We can train the DNN classifier in the same way regardless of the number of classes. For multiclass classification, the number of classes in our experiment is K = 3, so the DNN classifier has three outputs. For binary classification (SN Ia or non-SN Ia), it has two outputs.

We used data augmentation to prevent overfitting during training. Increasing the amount of input data through augmentation keeps the DNN from simply memorizing the entire training dataset. We used two data augmentation methods. The first adds Gaussian noise (based on the expected observational uncertainty) to the simulated flux. The second uses the mixup technique (Zhang et al. 2017).
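Both augmentation methods are short. The sketch below adds Gaussian noise scaled by the expected flux error and applies mixup with a single Beta-distributed mixing weight per batch, as in Zhang et al. (2017); α = 0.2 is an assumed value:

```python
import numpy as np


def augment_with_noise(flux, flux_err, rng):
    """Add Gaussian noise drawn from the expected per-point flux uncertainty."""
    return flux + rng.normal(0.0, flux_err)


def mixup(x, y_onehot, rng, alpha=0.2):
    """mixup: blend random pairs of examples and their one-hot labels.

    One mixing weight lam ~ Beta(alpha, alpha) is drawn for the whole batch,
    so the mixed labels remain valid probability vectors.
    """
    lam = rng.beta(alpha, alpha)
    perm = rng.permutation(x.shape[0])
    x_mix = lam * x + (1.0 - lam) * x[perm]
    y_mix = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mix, y_mix
```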

As described above, the hyperparameters (the red boxes in figure 3) of the model are optimized by maximizing the accuracy, whereas the model parameters (the green boxes in figure 3) are optimized by minimizing the cross-entropy error.
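The two objectives differ: the model parameters minimize the cross-entropy error, while the hyperparameter search maximizes accuracy. A minimal NumPy sketch of both, with a numerically stable log-softmax (the names are illustrative):

```python
import numpy as np


def log_softmax(logits):
    """Stable log-softmax: shift by the row maximum before exponentiating."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def cross_entropy(logits, y_onehot):
    """Mean cross-entropy error; soft (mixup) targets are also accepted."""
    return float(-(y_onehot * log_softmax(logits)).sum(axis=1).mean())


def accuracy(logits, labels):
    """Fraction of SNe whose highest-scoring class matches the true label."""
    return float((logits.argmax(axis=1) == labels).mean())
```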

4.3 Testing the DNN model with the PLAsTiCC dataset

Before applying our model to the data observed by HSC, we tested it with a dataset from the LSST simulated classification challenge, i.e., the PLAsTiCC dataset (The PLAsTiCC team 2018; Malz et al. 2019), which consists of realistic photometric data, with errors, for time-variable objects. To evaluate our model, we required a dataset labeled with the true identities. The PLAsTiCC Deep Drilling Field (DDF) dataset contains data similar to those of the HSC-SSP Transient Survey, so we used it for this purpose. However, we generated the training set ourselves and chose not to use the training set provided by the PLAsTiCC team, because we knew that their training dataset was too small to achieve maximum performance (figure 2).

The training dataset was created using the method described in subsection 3.2. We generated 370345 light curves based on the filter responses and photometric zero-points for LSST (Ivezić et al. 2019). These light curves comprised SN types in the ratio SN Ia : Ibc : II = 0.60 : 0.06 : 0.34, with their peaks randomly shifted in time. The test dataset was created by extracting 2297 light curves from the PLAsTiCC dataset, each labeled Ia, Ibc, or II according to its SN type. These light curves were simulated in the COSMOS field.

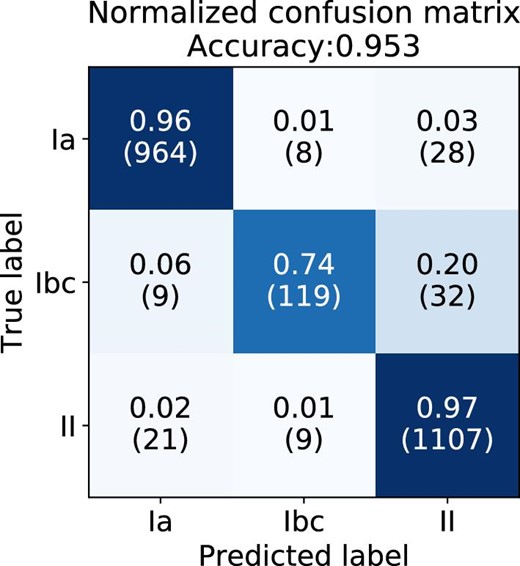

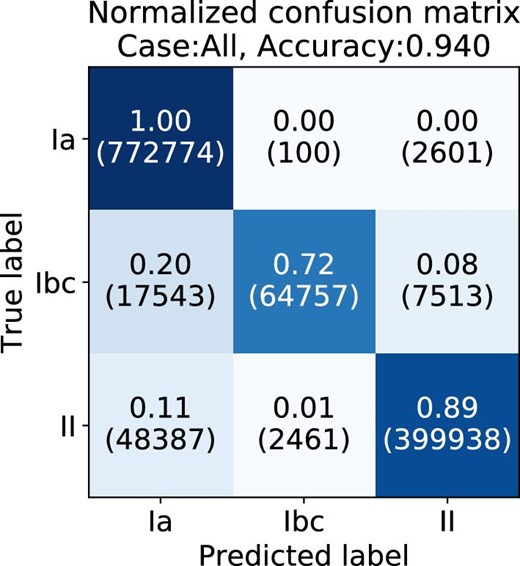

We used the AUC of the receiver operating characteristic (ROC) curve and of the precision-recall curve for two-class classification, and the accuracy from the confusion matrix for three-class classification, as the metrics to evaluate our model. We tested different combinations of inputs to determine which performs best on the PLAsTiCC dataset. Our input could be a combination of the arrays of normalized flux (f), magnitude (m), or pseudo-absolute magnitude (M). Table 2 lists the AUC for two-class classification and the accuracy for three-class classification on the PLAsTiCC data. The combination of normalized flux (f) and pseudo-absolute magnitude (M) performs best; although the two carry redundant information, we suspect that their different distributions give the machine additional guidance. The AUC values for the ROC curve and the precision-recall curve are 0.996 and 0.995, respectively. Figure 5 shows the confusion matrix for the three-class classification, with a total accuracy of 95.3%. As is always the case in practice, SN Ibc is difficult to classify, but the effect on the overall accuracy is relatively small.
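The ROC AUC has a convenient interpretation: it is the probability that a randomly chosen SN Ia receives a higher SN Ia score than a randomly chosen non-SN Ia. A minimal sketch based on that pairwise (Mann–Whitney) formulation; scikit-learn's `roc_auc_score` computes the same quantity:

```python
import numpy as np


def roc_auc(scores, is_positive):
    """ROC AUC as P(score of a random positive > score of a random negative).

    Ties count as one half (Mann-Whitney U statistic). Memory grows as
    n_pos * n_neg, which is fine for a few thousand SNe.
    """
    scores = np.asarray(scores, dtype=float)
    is_positive = np.asarray(is_positive, dtype=bool)
    pos = scores[is_positive]
    neg = scores[~is_positive]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)
```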

Normalized confusion matrix in the three-class classification of the PLAsTiCC dataset. The classifier received the pseudo-absolute magnitude and normalized flux as its input. The proportions in each row sum to 1. The numbers in parentheses represent the raw numbers. (Color online)

| Input* . | AUC . | Accuracy . | |||

|---|---|---|---|---|---|

| M . | m . | f . | ROC . | Pre.-rec. . | . |

| |$\checkmark$| | |$\checkmark$| | 0.996 | 0.995 | 0.953 | |

| |$\checkmark$| | 0.995 | 0.993 | 0.952 | ||

| |$\checkmark$| | |$\checkmark$| | 0.995 | 0.993 | 0.948 | |

| |$\checkmark$| | 0.995 | 0.991 | 0.940 | ||


Input to classifier is displayed as a check mark. M: pseudo-absolute magnitude; m: magnitude; f: normalized flux.


Table 3 summarizes the classification performance for each group of the test set divided according to the maximum signal-to-noise ratio (SNR) of the photometric data. It shows that the classification performance tends to improve as the maximum signal-to-noise ratio increases.

| Max. SNR | Number | ROC AUC* | Pre.-rec. AUC* |
|---|---|---|---|
| <5 | 31 | 0.975 | 0.983 |
| 5–10 | 736 | 0.989 | 0.983 |
| 10–20 | 814 | 0.999 | 0.998 |
| >20 | 716 | 0.999 | 0.999 |
| All | 2297 | 0.996 | 0.995 |


AUC for the best performing model (M + f).


In the three-class classification of the PLAsTiCC dataset, 107 SNe were misclassified. They have the following characteristics:

- 54% (58/107) of them were “incomplete events” whose photometric data do not cover the peak phase of the SN (from 10 d before to 20 d after the peak in the observed frame), whereas such events constitute only 38% of all events.
- Of the remaining 49 misclassifications, 29% (14/49) lie on the decision boundary, where the probabilities of the correct class and the predicted class differ by less than 0.1.
- In more than half of the remaining 35 events, an SN Ibc was misclassified as either SN Ia or SN II.
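The “incomplete event” criterion above can be expressed as a simple coverage test. A minimal sketch, assuming that an event counts as complete when at least one photometric epoch falls inside the observed-frame window from 10 d before to 20 d after the peak, and that the peak date is already known (for example, from a light-curve fit); the function names are hypothetical.

```python
# Observed-frame window around the peak, as quoted in the text.
PRE_PEAK_DAYS = 10.0
POST_PEAK_DAYS = 20.0


def covers_peak_phase(obs_days, peak_day, pre=PRE_PEAK_DAYS, post=POST_PEAK_DAYS):
    """Return True if any observation lies within [peak_day - pre, peak_day + post].

    Assumption: a single epoch inside the window is enough to count as covering the peak phase.
    """
    return any(peak_day - pre <= t <= peak_day + post for t in obs_days)


def incomplete_fraction(events):
    """Fraction of (obs_days, peak_day) pairs whose photometry misses the peak phase."""
    flags = [not covers_peak_phase(days, peak) for days, peak in events]
    return sum(flags) / len(flags)
```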

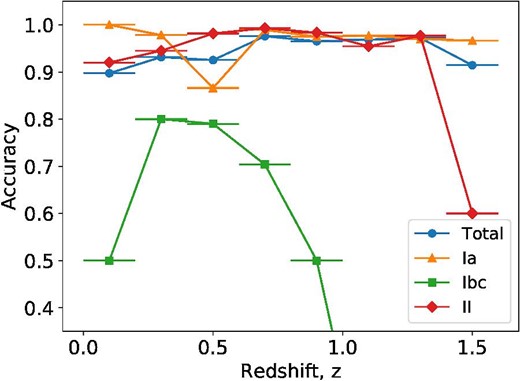

Figure 6 shows the accuracy as a function of redshift for the PLAsTiCC dataset. The accuracy for SN Ibc is lower than that for the other classes; in particular, it drops sharply at redshifts above 1.0 and is also reduced at redshifts of 0.1 to 0.2. Manual inspection of individual misclassifications revealed that, although some misclassified SNe are too faint to classify even with conventional methods, a few bright SNe were also completely misclassified.
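A per-class, per-redshift-bin accuracy such as that plotted in figure 6 can be computed as below. This sketch assumes that the accuracy of a class in a bin is the fraction of that class's events in the bin that receive the correct type (that is, the per-class recall); the bin edges and argument names are illustrative and are not taken from the paper.

```python
from collections import defaultdict


def accuracy_by_redshift(redshifts, true_types, pred_types, edges):
    """Per-class accuracy in redshift bins.

    `edges` is a sorted list of bin edges; bin i covers [edges[i], edges[i + 1]).
    Events outside all bins are ignored. Returns {(true_type, bin_index): accuracy}.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for z, t, p in zip(redshifts, true_types, pred_types):
        for i in range(len(edges) - 1):
            if edges[i] <= z < edges[i + 1]:
                totals[(t, i)] += 1
                hits[(t, i)] += int(t == p)  # count a hit only if the type is correct
                break
    return {key: hits[key] / n for key, n in totals.items()}
```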

Figure 6. Accuracy as a function of redshift in the three-class classification of the PLAsTiCC dataset. (Color online)

5 Application to the HSC-SSP transient survey

We applied the classifier to the dataset acquired during the HSC-SSP Transient Survey, which includes photometric data for 1824 SNe. As described in Yasuda et al. (2019), the survey was conducted in two layers with different depths and cadences, “Deep” and “Ultra-Deep,” so the number of photometric data points per SN differs between the layers. Because our DNN model requires a fixed number of input data points, we divided the dataset into five cases according to the number of photometric data points. The number of SNe in each case is summarized in table 4. For example, Case 0 contains 709 SNe in the Ultra-Deep layer, each represented by 42 epochs of photometric data in four bands (g, r2, i2, and z). The number of epochs and the filter schedule for Case 0 are summarized in table 5. With these five cases, the machine could classify 1812 HSC SNe, or 99.3% of the 1824 SNe; the remaining 12 SNe were excluded owing to missing data.

| Case | Epochs | Number | Fraction |
|---|---|---|---|
| 0 | 42 | 709 | 0.391 |
| 1 | 26 | 646 | 0.357 |
| 2 | 19 | 271 | 0.150 |
| 3 | 10 | 122 | 0.067 |
| 4 | 4 | 64 | 0.035 |
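The Fraction column of table 4 is each case's share of the 1812 SNe that could be classified, not of all 1824 candidates. The check below recomputes it from the counts; the dictionary layout and names are only for illustration.

```python
# Table 4: case -> (epochs per SN, number of SNe).
CASES = {0: (42, 709), 1: (26, 646), 2: (19, 271), 3: (10, 122), 4: (4, 64)}
N_CANDIDATES = 1824  # all SN candidates in the HSC transient survey

# Lookup from the number of epochs to the case it belongs to.
CASE_BY_EPOCHS = {epochs: case for case, (epochs, _) in CASES.items()}


def case_fractions(cases):
    """Return the total number of SNe and each case's fraction, rounded as in table 4."""
    total = sum(n for _, n in cases.values())
    return total, {case: round(n / total, 3) for case, (_, n) in cases.items()}


total, fractions = case_fractions(CASES)
coverage = total / N_CANDIDATES  # share of candidates covered by the five cases
print(total, fractions, f"{coverage:.1%}")
```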

| Filter | Epochs | Elapsed days |
|---|---|---|
| g | 8 | 2, 40, 63, 70, 92, 119, 126, 154 |
| r2 | 9 | 5, 32, 61, 71, 92, 103, 122, 129, 151 |
| i2 | 13 | 2, 6, 32, 40, 61, 68, 71, 94, 101, 120, 127, 154, 155 |
| z | 12 | 0, 6, 30, 40, 59, 68, 90, 101, 119, 126, 151, 157 |
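One way to turn the schedule in table 5 into the fixed-length array that a per-case network expects is to enumerate (filter, day) slots in a fixed order. This is a hypothetical sketch: the filter-by-filter ordering and the function names are assumptions, not the paper's documented data layout.

```python
# Case 0 observing schedule from table 5: filter -> elapsed days of each epoch.
CASE0_SCHEDULE = {
    "g": [2, 40, 63, 70, 92, 119, 126, 154],
    "r2": [5, 32, 61, 71, 92, 103, 122, 129, 151],
    "i2": [2, 6, 32, 40, 61, 68, 71, 94, 101, 120, 127, 154, 155],
    "z": [0, 6, 30, 40, 59, 68, 90, 101, 119, 126, 151, 157],
}
FILTER_ORDER = ["g", "r2", "i2", "z"]  # hypothetical fixed ordering of the input slots


def input_slots(schedule, order=FILTER_ORDER):
    """List the (filter, day) slots of the fixed-length input, filter by filter."""
    return [(band, day) for band in order for day in schedule[band]]


def to_input_vector(measurements, schedule, order=FILTER_ORDER):
    """Arrange per-epoch values into slot order; raises KeyError if any epoch is missing."""
    return [measurements[slot] for slot in input_slots(schedule, order)]
```

Raising an error on a missing epoch reflects the requirement that every SN in a case supply the same number of data points.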

We applied both two-class and three-class classification to the five cases of observed HSC data described above. Because the machine must be trained independently for each case with a dedicated training dataset, the hyperparameters were optimized separately for each case; they are listed in table 6. Subsections 5.1 and 5.2 describe the performance evaluation for each classification.

| Case | Input*: M | m | f | Two-class: T | D | Drop rate | bn | Type | Three-class: T | D | Drop rate | bn | Type |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Case 0 | ✓ | | ✓ | 5 | 178 | 9.47 × 10⁻³ | 1 | sigmoid | 4 | 429 | 1.20 × 10⁻³ | 0 | linear |
| | ✓ | | | 3 | 247 | 9.68 × 10⁻⁴ | 1 | sigmoid | 4 | 516 | 2.54 × 10⁻³ | 0 | tanh |
| | | ✓ | ✓ | 4 | 531 | 6.43 × 10⁻³ | 0 | linear | 4 | 608 | 1.72 × 10⁻² | 0 | linear |
| | | ✓ | | 4 | 411 | 9.00 × 10⁻² | 1 | sigmoid | 4 | 838 | 1.36 × 10⁻³ | 0 | tanh |
| Case 1 | ✓ | | ✓ | 5 | 734 | 8.75 × 10⁻⁴ | 0 | tanh | 4 | 915 | 1.03 × 10⁻² | 0 | linear |
| | ✓ | | | 2 | 389 | 7.17 × 10⁻² | 1 | sigmoid | 5 | 698 | 2.79 × 10⁻² | 0 | linear |
| | | ✓ | ✓ | 2 | 647 | 7.92 × 10⁻⁴ | 0 | tanh | 4 | 540 | 1.45 × 10⁻³ | 0 | linear |
| | | ✓ | | 2 | 342 | 1.42 × 10⁻² | 1 | sigmoid | 2 | 520 | 9.99 × 10⁻⁴ | 1 | sigmoid |
| Case 2 | ✓ | | ✓ | 2 | 368 | 1.79 × 10⁻³ | 1 | sigmoid | 4 | 698 | 9.08 × 10⁻⁴ | 0 | linear |
| | ✓ | | | 4 | 920 | 1.44 × 10⁻³ | 0 | sigmoid | 4 | 614 | 7.03 × 10⁻³ | 0 | linear |
| | | ✓ | ✓ | 5 | 572 | 4.27 × 10⁻³ | 1 | sigmoid | 4 | 896 | 8.58 × 10⁻³ | 0 | linear |
| | | ✓ | | 4 | 640 | 1.12 × 10⁻¹ | 1 | sigmoid | 5 | 300 | 5.00 × 10⁻¹ | 1 | sigmoid |
| Case 3 | ✓ | | ✓ | 5 | 893 | 1.42 × 10⁻³ | 1 | sigmoid | 4 | 522 | 5.02 × 10⁻⁴ | 0 | tanh |
| | ✓ | | | 4 | 880 | 1.98 × 10⁻² | 1 | sigmoid | 5 | 841 | 4.96 × 10⁻² | 1 | sigmoid |
| | | ✓ | ✓ | 3 | 300 | 5.00 × 10⁻³ | 1 | linear | 3 | 462 | 9.28 × 10⁻⁴ | 1 | sigmoid |
| | | ✓ | | 3 | 930 | 9.77 × 10⁻² | 1 | sigmoid | 3 | 300 | 5.00 × 10⁻³ | 1 | sigmoid |
| Case 4 | ✓ | | ✓ | 5 | 379 | 4.21 × 10⁻³ | 1 | sigmoid | 3 | 484 | 2.13 × 10⁻³ | 0 | linear |
| | ✓ | | | 2 | 631 | 1.77 × 10⁻² | 0 | sigmoid | 4 | 243 | 3.21 × 10⁻³ | 1 | sigmoid |
| | | ✓ | ✓ | 5 | 140 | 4.04 × 10⁻³ | 1 | sigmoid | 5 | 389 | 1.23 × 10⁻⁴ | 0 | tanh |
| | | ✓ | | 4 | 567 | 5.77 × 10⁻² | 0 | sigmoid | 3 | 354 | 2.14 × 10⁻¹ | 1 | sigmoid |

*Inputs to the classifier are indicated by check marks. M: pseudo-absolute magnitude; m: magnitude; f: normalized flux.
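Because each case has its own trained network, a pipeline has to route every SN to the model for its case. A minimal sketch, assuming a lookup keyed by epoch count (table 4) and task; it transcribes only the M + f rows of table 6 for three of the five cases, keeps the column names T, D, bn, and Type as they appear without interpreting them, and the names `HyperParams` and `select_hyperparams` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HyperParams:
    # Field names mirror the column headings of table 6; their meaning is defined
    # elsewhere in the paper and is not interpreted here.
    T: int
    D: int
    drop_rate: float
    bn: int
    type_: str


# Table 4: number of epochs -> case.
CASE_BY_EPOCHS = {42: 0, 26: 1, 19: 2, 10: 3, 4: 4}

# Table 6, M + f rows only, for Cases 0, 1, and 4: (case, task) -> hyperparameters.
MF_HYPERPARAMS = {
    (0, "two-class"): HyperParams(5, 178, 9.47e-3, 1, "sigmoid"),
    (0, "three-class"): HyperParams(4, 429, 1.20e-3, 0, "linear"),
    (1, "two-class"): HyperParams(5, 734, 8.75e-4, 0, "tanh"),
    (1, "three-class"): HyperParams(4, 915, 1.03e-2, 0, "linear"),
    (4, "two-class"): HyperParams(5, 379, 4.21e-3, 1, "sigmoid"),
    (4, "three-class"): HyperParams(3, 484, 2.13e-3, 0, "linear"),
}


def select_hyperparams(n_epochs, task):
    """Route an SN to its case by epoch count and return that case's M + f settings.

    Raises KeyError for epoch counts that match none of the five cases,
    or for cases not transcribed above.
    """
    case = CASE_BY_EPOCHS[n_epochs]
    return MF_HYPERPARAMS[(case, task)]
```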

5.1 Binary classification

Binary classification was performed with four classifiers that differ in their inputs, as for the PLAsTiCC dataset. Their performance is compared in table 7.

| Dataset | Input*: M | m | f | ROC: Case 0 | Case 1 | Case 2 | Case 3 | Case 4 | All | Precision-recall: Case 0 | Case 1 | Case 2 | Case 3 | Case 4 | All |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Validation | ✓ | | ✓ | 1.000 | 0.990 | 0.987 | 0.976 | 0.887 | 0.993 | 1.000 | 0.993 | 0.991 | 0.983 | 0.917 | 0.995 |
| | ✓ | | | 0.999 | 0.980 | 0.975 | 0.959 | 0.845 | 0.987 | 0.999 | 0.986 | 0.982 | 0.972 | 0.886 | 0.991 |
| | | ✓ | ✓ | 0.999 | 0.983 | 0.979 | 0.963 | 0.817 | 0.988 | 0.999 | 0.988 | 0.985 | 0.973 | 0.863 | 0.992 |
| | | ✓ | | 0.996 | 0.971 | 0.966 | 0.938 | 0.790 | 0.980 | 0.997 | 0.980 | 0.976 | 0.955 | 0.841 | 0.985 |
| Test (light-curve verified) | ✓ | | ✓ | 0.975 | 0.978 | 0.931 | 0.844 | 1.000 | 0.966 | 0.931 | 0.955 | 0.773 | 0.761 | 1.000 | 0.909 |
| | ✓ | | | 0.986 | 0.967 | 0.942 | 0.967 | 0.964 | 0.971 | 0.965 | 0.935 | 0.849 | 0.966 | 0.944 | 0.934 |
| | | ✓ | ✓ | 0.947 | 0.926 | 0.887 | 0.756 | 1.000 | 0.923 | 0.836 | 0.860 | 0.803 | 0.632 | 1.000 | 0.820 |
| | | ✓ | | 0.945 | 0.864 | 0.854 | 0.756 | 0.643 | 0.896 | 0.826 | 0.769 | 0.752 | 0.612 | 0.687 | 0.787 |
| Test (all labeled) | ✓ | | ✓ | 0.945 | 0.922 | 0.914 | 0.863 | 0.844 | 0.925 | 0.855 | 0.840 | 0.809 | 0.788 | 0.650 | 0.832 |
| | ✓ | | | 0.957 | 0.909 | 0.879 | 0.908 | 0.864 | 0.922 | 0.901 | 0.814 | 0.714 | 0.885 | 0.702 | 0.817 |
| | | ✓ | ✓ | 0.915 | 0.889 | 0.885 | 0.718 | 0.711 | 0.885 | 0.780 | 0.778 | 0.768 | 0.543 | 0.363 | 0.749 |
| | | ✓ | | 0.911 | 0.837 | 0.855 | 0.713 | 0.656 | 0.862 | 0.773 | 0.685 | 0.712 | 0.523 | 0.385 | 0.712 |

*Inputs to the classifier are indicated by check marks. M: pseudo-absolute magnitude; m: magnitude; f: normalized flux.

For the validation dataset, which is part of the simulated dataset, increasing the number of input dimensions improved the results, and every classifier achieved a very high AUC. The best AUCs over all classified events are 0.993 for the ROC curve and 0.995 for the precision-recall curve.

For the test dataset, the classification performance was verified using 1332 HSC SNe (1256 with redshift) labeled with the SALT2 light-curve fitter (Guy et al. 2007, 2010), a conventional classification method. The verification labels follow Yasuda et al. (2019), which defines SNe Ia as SNe whose SALT2 fitting results satisfy all four of the following criteria: (1) color ($c$) and stretch ($x_1$) within the 3σ range of the Scolnic and Kessler (2016) “All G10” distribution; (2) absolute magnitude in the B band, $M_B$, brighter than −18.5 mag; (3) reduced $\chi^2$ less than 10; and (4) number of degrees of freedom (dof) of five or more. Candidates that satisfy a looser version of these conditions were labeled “Ia?”: the range in (1) was expanded to 5σ, and the thresholds in (2) and (3) were relaxed to −17.5 mag and 20, respectively. We defined non-SNe Ia as SNe that satisfy neither the “Ia” nor the “Ia?” criteria and have five or more dof. The numbers of SNe labeled Ia, Ia?, and non-Ia are 429, 251, and 908, respectively (410, 240, and 850 with redshift). In addition, 215 SNe with fewer than five dof were labeled “unclassified,” and the remaining 21 SNe could not be fitted. The performance evaluation was conducted on the 428 SNe Ia and 904 non-SNe Ia that were classified by our machine.
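The labeling rules above can be summarized as a small decision function. A minimal sketch, assuming the caller supplies how far the fitted color and stretch lie from the center of the “All G10” distribution in units of its σ (how that distance is computed is not specified here), and treating the 3σ and 5σ limits as inclusive; the function and argument names are hypothetical. The returned strings match the labels used in table 9.

```python
def salt2_label(fit_ok, dof, c_nsigma, x1_nsigma, m_b, chi2_red):
    """Assign an HSC verification label from SALT2 fit results.

    c_nsigma, x1_nsigma: distance of the fitted color and stretch from the
    "All G10" distribution in units of sigma (hypothetical inputs).
    m_b: absolute B-band magnitude; chi2_red: reduced chi-squared of the fit.
    The fit parameters are ignored when the fit failed or dof < 5.
    """
    if not fit_ok:
        return "fail"
    if dof < 5:
        return "UC"  # unclassified: fewer than five degrees of freedom

    def passes(n_sigma, mb_limit, chi2_limit):
        return (abs(c_nsigma) <= n_sigma and abs(x1_nsigma) <= n_sigma
                and m_b < mb_limit and chi2_red < chi2_limit)

    if passes(3.0, -18.5, 10.0):   # strict criteria (1)-(3)
        return "Ia"
    if passes(5.0, -17.5, 20.0):   # looser criteria
        return "Ia?"
    return "nonIa"
```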

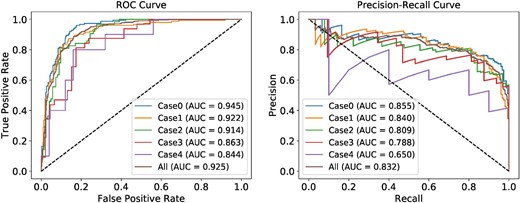

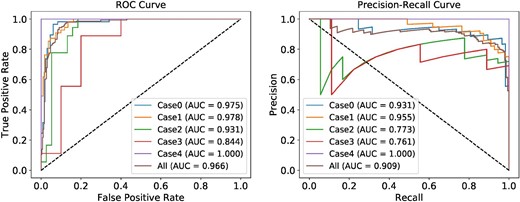

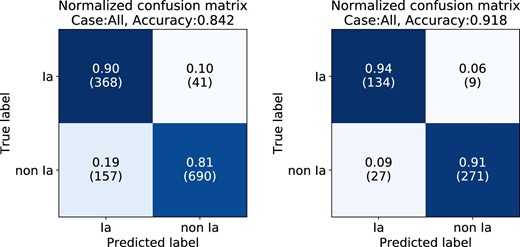

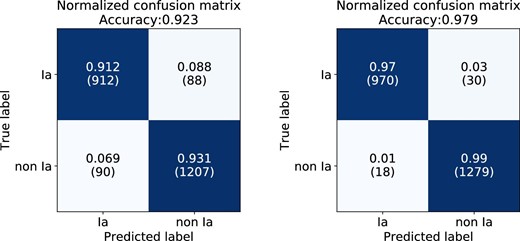

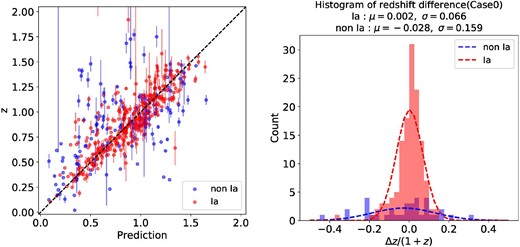

We also extracted 441 “light-curve-verified SNe” that have photometric data both before and after their peak and for which spectroscopic redshifts are available, and verified their classification results. Figures 7 and 8 show the ROC and precision-recall curves of the best classifier for all labeled HSC SNe and for the light-curve-verified HSC SNe, respectively, and figure 9 shows the corresponding confusion matrices for both samples. The best-performing classifier agrees with the conventional method for 84.2% of the 1256 labeled SNe and for 91.8% of the 441 light-curve-verified SNe.

Figure 7. ROC and precision-recall curves for the two-class classification of all labeled HSC SNe. The input to the classifier is the pseudo-absolute magnitude and normalized flux. The colored lines show the performance of each of the five classifiers for the different cases and of their combined output. (Color online)

Figure 8. As figure 7, but for the light-curve-verified HSC SNe. (Color online)

Figure 9. Normalized confusion matrices for the binary classification of the 1256 labeled HSC SNe (left) and the 441 light-curve-verified SNe (right). The inputs for both classifications are the pseudo-absolute magnitude and normalized flux. (Color online)

In the binary classification of the 1256 labeled HSC SNe, 198 were misclassified. The misclassification rate differs among the cases and tends to increase as the number of input dimensions decreases: it is 13% for Case 0 but 23% for Case 4. As with the PLAsTiCC data, incomplete events without their peak phase form the largest group of misclassified events in the HSC data, accounting for 47% (93/198) of them. The second most common cause of misclassification is an outlier value or a systematic flux offset in the photometric data, accounting for 34% (67/198). Of the remaining 38 SNe, 17 are boundary events with an SN Ia probability of 40% to 60%, and the other 21 are events for which SALT2 fitting is ineffective.
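The numbers in this paragraph are mutually consistent, as the short check below shows: 198 misclassifications among 1256 labeled SNe correspond to the 84.2% agreement rate, and the cause counts add up to 198, leaving 21 events for the SALT2 category. The category names are our shorthand.

```python
N_LABELED = 1256       # labeled HSC SNe with redshift (binary classification)
N_MISCLASSIFIED = 198

# Misclassified events by cause, as counted in the text.
causes = {
    "incomplete (no peak phase)": 93,
    "outlier or flux offset": 67,
    "boundary (P(Ia) 40-60%)": 17,
}
# The remainder is attributed to events where SALT2 fitting is ineffective.
causes["SALT2 ineffective"] = N_MISCLASSIFIED - sum(causes.values())

agreement = 1 - N_MISCLASSIFIED / N_LABELED
shares = {name: round(100 * n / N_MISCLASSIFIED) for name, n in causes.items()}
print(f"agreement: {agreement:.1%}")
for name, pct in shares.items():
    print(f"{name}: {causes[name]} events ({pct}%)")
```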

5.2 Multi-type classification

For the three-class classifier, we present the classification performance only for the validation dataset, because labels for these three types are not available for the HSC transients. The accuracies for each input in the three-class classification of the validation dataset are summarized in table 8; the best accuracy is 94.0%. Figure 10 shows the confusion matrix of the best classifier, which indicates that our classifier has a very high sensitivity to SNe Ia but is less effective at classifying SNe Ibc.

Figure 10. Normalized confusion matrix for the validation dataset in the HSC three-class classification. The input is the pseudo-absolute magnitude and normalized flux. The proportions in each row sum to 1 (within rounding error). (Color online)
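Row normalization, as used for the confusion matrices in this section, divides each row of the count matrix by the number of events of that true class, so each row sums to 1 up to rounding. A minimal pure-Python sketch; the function name and the class ordering are illustrative.

```python
def normalized_confusion(true_labels, pred_labels, classes):
    """Row-normalized confusion matrix: entry [i][j] is the fraction of class i predicted as class j."""
    index = {c: k for k, c in enumerate(classes)}
    counts = [[0] * len(classes) for _ in classes]
    for t, p in zip(true_labels, pred_labels):
        counts[index[t]][index[p]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # A class with no true members keeps a row of zeros instead of dividing by zero.
        matrix.append([n / total if total else 0.0 for n in row])
    return matrix
```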

Table 8. Accuracy of each input in the HSC three-class classification for the validation dataset.

| Input*: M | m | f | Accuracy: Case 0 | Case 1 | Case 2 | Case 3 | Case 4 | All† |
|---|---|---|---|---|---|---|---|---|
| ✓ | | ✓ | 0.985 | 0.926 | 0.920 | 0.890 | 0.774 | 0.940 |
| ✓ | | | 0.971 | 0.894 | 0.886 | 0.844 | 0.729 | 0.914 |
| | ✓ | ✓ | 0.970 | 0.907 | 0.897 | 0.860 | 0.724 | 0.921 |
| | ✓ | | 0.952 | 0.871 | 0.861 | 0.818 | 0.701 | 0.892 |

*Inputs to the classifier are indicated by check marks. M: pseudo-absolute magnitude; m: magnitude; f: normalized flux.

†Accuracy for samples drawn from each case according to the fractions in table 4.
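The † footnote implies that the “All” column weights each case by its share in table 4. A quick check, assuming the weights are the case counts of table 4, reproduces the “All” column to three decimals for all four input combinations; the dictionary keys simply label the rows of table 8.

```python
# Number of SNe per case (table 4) and per-case validation accuracies (table 8).
CASE_COUNTS = [709, 646, 271, 122, 64]
ACCURACY = {
    "M + f": [0.985, 0.926, 0.920, 0.890, 0.774],
    "M": [0.971, 0.894, 0.886, 0.844, 0.729],
    "m + f": [0.970, 0.907, 0.897, 0.860, 0.724],
    "m": [0.952, 0.871, 0.861, 0.818, 0.701],
}


def weighted_accuracy(per_case, counts=CASE_COUNTS):
    """Average the per-case accuracies with weights proportional to each case's size."""
    total = sum(counts)
    return sum(acc * n / total for acc, n in zip(per_case, counts))


for inputs, per_case in ACCURACY.items():
    print(inputs, round(weighted_accuracy(per_case), 3))
```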

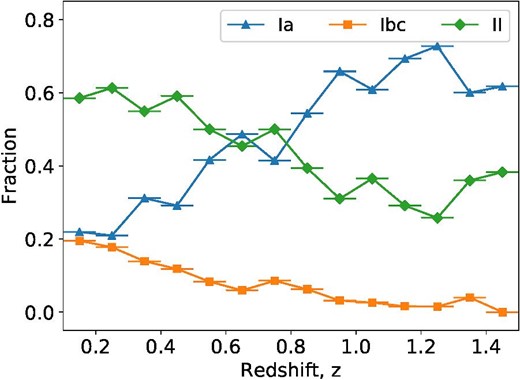

In addition, we describe the classes predicted by the three-class classifier for the actual HSC SNe. Figure 11 shows the fraction of each predicted type in redshift bins from 0.1 to 1.5; all classified HSC SNe were used to calculate these fractions. The predicted types combine the outputs of two classifiers whose inputs depend on the availability of redshift information: (1) pseudo-absolute magnitude and normalized flux; (2) magnitude and normalized flux.

Figure 11. Fractions of each predicted type as a function of redshift in the HSC three-class classification. (Color online)

5.3 Classification of HSC SNe

We report the classification results of the 1824 HSC SNe obtained with the proposed classifiers in e-table 1.⁴ Part of this classification list is provided in table 9 as an example. The list gives, for each SN, the probabilities predicted by the two-class and three-class classifiers, the redshift of the host galaxy, and the classification label assigned on the basis of the SALT2 fitting. The probabilities in this list are calculated with the classifiers whose input includes the normalized flux. The classification performance reported in subsections 5.1 and 5.2 is calculated from the probabilities in this list.

| Name | Case | z | z_src* | dof (SALT2) | Type‡ (SALT2) | F_cover§ | 2-class Ia (Input†: M + f) | 3-class Ia (M + f) | 3-class Ibc (M + f) | 3-class II (M + f) | Type (M + f) | 2-class Ia (Input†: m + f) | 3-class Ia (m + f) | 3-class Ibc (m + f) | 3-class II (m + f) | Type (m + f) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HSC16aaau | 1 | $0.370_{-0.072}^{+0.110}$ | 3 | 7 | Ia? | False | 0.556 | 0.554 | 0.022 | 0.424 | Ia | 0.517 | 0.649 | 0.008 | 0.342 | Ia |
| HSC16aaav | 1 | $3.280_{-2.423}^{+0.167}$ | 4 | 17 | nonIa | True | 0.134 | 0.049 | 0.002 | 0.949 | II | 0.279 | 0.356 | 0.048 | 0.596 | II |
| HSC16aabj | 0 | $0.361_{-0.008}^{+0.007}$ | 2 | 8 | nonIa | False | 0.630 | 0.667 | 0.001 | 0.331 | Ia | 0.574 | 0.578 | 0.018 | 0.405 | Ia |
| HSC16aabk | 1 | — | 0 | 9 | Ia? | False | — | — | — | — | — | 0.433 | 0.675 | 0.077 | 0.248 | Ia |
| HSC16aabp | 1 | $1.477_{-0.032}^{+0.037}$ | 2 | 19 | nonIa | False | 0.957 | 0.964 | 0.001 | 0.035 | Ia | 0.807 | 0.871 | 0.039 | 0.090 | Ia |
| ⋮ | | | | | | | | | | | | | | | | |
| HSC17bjrb | 1 | $0.560_{-0.036}^{+0.024}$ | 3 | 1 | UC | False | 0.003 | 0.007 | 0.004 | 0.989 | II | 0.011 | 0.002 | 0.004 | 0.994 | II |
| HSC17bjwo | 0 | $1.449_{-0.063}^{+0.080}$ | 2 | 26 | Ia | True | 0.881 | 0.915 | 0.005 | 0.080 | Ia | 0.891 | 0.935 | 0.010 | 0.055 | Ia |
| HSC17bjya | 0 | $1.128_{-0.000}^{+0.000}$ | 1 | 22 | nonIa | True | 0.130 | 0.145 | 0.039 | 0.816 | II | 0.141 | 0.109 | 0.056 | 0.835 | II |
| HSC17bjyn | 0 | $0.626_{-0.000}^{+0.000}$ | 1 | 24 | Ia | True | 0.887 | 0.891 | 0.031 | 0.078 | Ia | 0.965 | 0.918 | 0.007 | 0.075 | Ia |
| HSC17bjza | 1 | $1.350_{-0.156}^{+1.142}$ | 4 | 13 | nonIa | True | 0.016 | 0.041 | 0.016 | 0.943 | II | 0.062 | 0.039 | 0.005 | 0.957 | II |
| HSC17bkbn | 0 | $0.863_{-0.012}^{+0.036}$ | 2 | 23 | nonIa | True | 0.031 | 0.025 | 0.002 | 0.973 | II | 0.028 | 0.021 | 0.002 | 0.976 | II |
| HSC17bkcz | 0 | $0.795_{-0.000}^{+0.000}$ | 1 | 27 | Ia | True | 0.675 | 0.674 | 0.035 | 0.291 | Ia | 0.661 | 0.789 | 0.019 | 0.191 | Ia |
| HSC17bkef | 0 | $2.940_{-0.087}^{+0.119}$ | 2 | 0 | fail | – | 0.219 | 0.443 | 0.000 | 0.556 | II | 0.950 | 0.947 | 0.010 | 0.043 | Ia |
| HSC17bkem | 2 | $0.609_{-0.000}^{+0.000}$ | 1 | 17 | Ia | True | 0.889 | 0.858 | 0.001 | 0.141 | Ia | 0.901 | 0.863 | 0.023 | 0.114 | Ia |
| HSC17bkfv | 0 | $0.670_{-0.035}^{+0.035}$ | 3 | 23 | Ia | True | 0.915 | 0.906 | 0.016 | 0.078 | Ia | 0.961 | 0.926 | 0.011 | 0.063 | Ia |
| ⋮ | | | | | | | | | | | | | | | | |
| HSC17dskd | 0 | $0.630_{-0.000}^{+0.000}$ | 1 | 3 | UC | False | 0.889 | 0.863 | 0.087 | 0.050 | Ia | 0.873 | 0.873 | 0.072 | 0.054 | Ia |
| HSC17dsng | 0 | $1.331_{-0.048}^{+0.048}$ | 2 | 7 | Ia? | False | 0.951 | 0.967 | 0.006 | 0.027 | Ia | 0.935 | 0.895 | 0.011 | 0.094 | Ia |
| HSC17dsoh | 0 | $1.026_{-0.000}^{+0.000}$ | 1 | 2 | UC | False | 0.968 | 0.968 | 0.011 | 0.020 | Ia | 0.911 | 0.923 | 0.022 | 0.055 | Ia |
| HSC17dsox | 0 | $1.137_{-0.034}^{+0.041}$ | 2 | 2 | UC | False | 0.708 | 0.794 | 0.019 | 0.186 | Ia | 0.721 | 0.738 | 0.040 | 0.222 | Ia |
| HSC17dspl | 0 | $0.624_{-0.000}^{+0.000}$ | 1 | 9 | nonIa | False | 0.180 | 0.065 | 0.114 | 0.821 | II | 0.049 | 0.103 | 0.100 | 0.797 | II |


\* Code for redshift source. 1: spec-$z$; 2: COSMOS photo-$z$; 3: HSC photo-$z$ Ultra-Deep; 4: HSC photo-$z$ Deep; 0: hostless.

† Classifier input. M: pseudo-absolute magnitude; m: magnitude; f: normalized flux.

‡ SN type labeled by SALT2 fitting; UC: unclassified.

§ Flag indicating whether the photometric data cover the period from 10 d before to 20 d after the peak. SNe with this flag set to False are defined as “incomplete events.”
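To make the relation between the listed probabilities and the type columns concrete, the following minimal Python sketch uses rows transcribed from table 9 (M + f input only). It assumes that the three-class type is the class with the highest probability, which agrees with every row shown, and, as a further assumption, that the binary label is Ia when the two-class Ia probability is at least 0.5. Rows whose SALT2 label is Ia?, UC, or fail are omitted by assumption. The accuracy computed on these twelve rows is a toy figure only and is not one of the performance values reported in subsections 5.1 and 5.2.

```python
# Rows transcribed from table 9, classifier input M + f only. Rows whose
# SALT2 label is "Ia?", "UC" or "fail" are omitted (an assumption of this sketch).
# Tuple: (name, SALT2 type, 2-class P(Ia), 3-class P(Ia), P(Ibc), P(II), listed type)
ROWS = [
    ("HSC16aaav", "nonIa", 0.134, 0.049, 0.002, 0.949, "II"),
    ("HSC16aabj", "nonIa", 0.630, 0.667, 0.001, 0.331, "Ia"),
    ("HSC16aabp", "nonIa", 0.957, 0.964, 0.001, 0.035, "Ia"),
    ("HSC17bjwo", "Ia", 0.881, 0.915, 0.005, 0.080, "Ia"),
    ("HSC17bjya", "nonIa", 0.130, 0.145, 0.039, 0.816, "II"),
    ("HSC17bjyn", "Ia", 0.887, 0.891, 0.031, 0.078, "Ia"),
    ("HSC17bjza", "nonIa", 0.016, 0.041, 0.016, 0.943, "II"),
    ("HSC17bkbn", "nonIa", 0.031, 0.025, 0.002, 0.973, "II"),
    ("HSC17bkcz", "Ia", 0.675, 0.674, 0.035, 0.291, "Ia"),
    ("HSC17bkem", "Ia", 0.889, 0.858, 0.001, 0.141, "Ia"),
    ("HSC17bkfv", "Ia", 0.915, 0.906, 0.016, 0.078, "Ia"),
    ("HSC17dspl", "nonIa", 0.180, 0.065, 0.114, 0.821, "II"),
]

CLASSES = ("Ia", "Ibc", "II")


def three_class_label(p_ia: float, p_ibc: float, p_ii: float) -> str:
    """Assumed rule: pick the class with the largest predicted probability."""
    probs = (p_ia, p_ibc, p_ii)
    return CLASSES[max(range(len(CLASSES)), key=probs.__getitem__)]


def binary_label(p_ia: float, threshold: float = 0.5) -> str:
    """Assumed rule: Ia if the two-class Ia probability reaches the threshold."""
    return "Ia" if p_ia >= threshold else "nonIa"


def binary_accuracy(rows, threshold: float = 0.5) -> float:
    """Fraction of rows whose binary label matches the SALT2 label."""
    correct = sum(binary_label(r[2], threshold) == r[1] for r in rows)
    return correct / len(rows)


if __name__ == "__main__":
    for name, _, _, p_ia, p_ibc, p_ii, listed in ROWS:
        print(name, three_class_label(p_ia, p_ibc, p_ii), listed)
    print(f"toy binary accuracy: {binary_accuracy(ROWS):.3f}")
```

On these rows the argmax rule reproduces every listed three-class type, and the two mismatches in the binary case (HSC16aabj and HSC16aabp) are both incomplete events.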


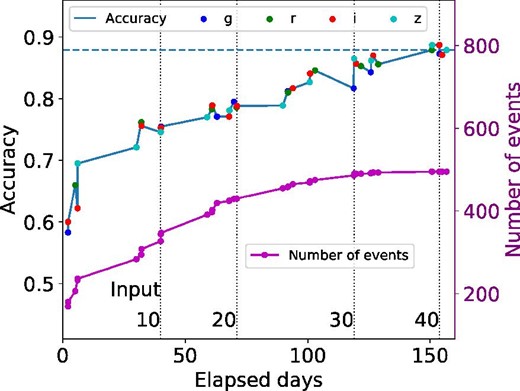

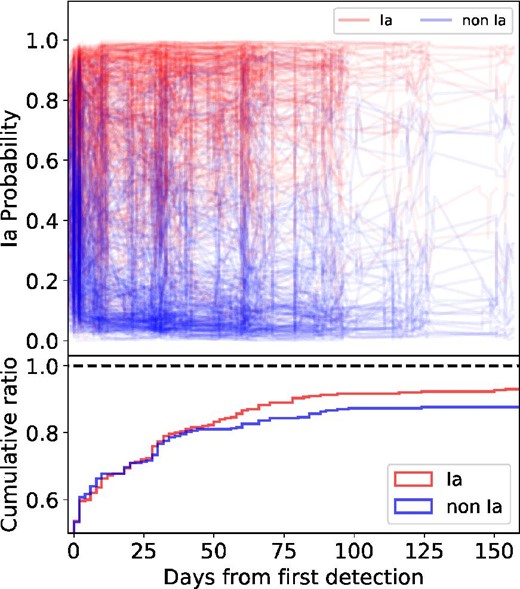

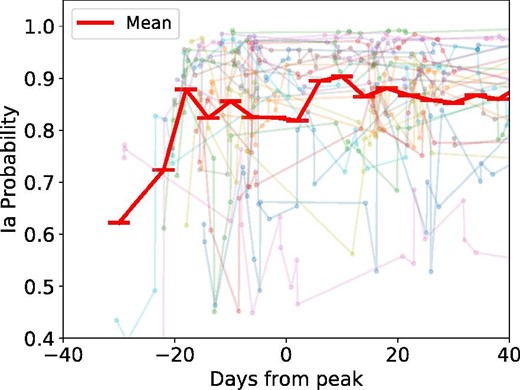

5.4 Dependence on the number of epochs

With our classification method, the number of photometric data points given to the classifier increases as the survey progresses. We therefore investigated how the classification performance changes with the number of epochs. To do so, we classified the HSC dataset repeatedly, increasing the number of input data points one at a time, and examined the relationship between the number of epochs and the classification performance. We used the binary classifiers and evaluated each run by the accuracy calculated from its confusion matrix. Figure 12 shows the classification performance for the Case 0 HSC dataset as a function of the number of epochs. Although the Ia classification accuracy is as low as 0.6 to 0.7 in the early stage of the survey, with fewer than five epochs, it exceeds 0.8 once the number of epochs reaches 22. The partial decreases in accuracy are thought to be caused by new SNe being found, and thus entering the sample, as each photometric point is added.
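The epoch-by-epoch evaluation can be sketched as follows, under one reading of the procedure: epochs are counted as survey epochs, a SN enters the sample once it has at least one detection, and at epoch n the classifier sees only the points observed up to n. The classifier is a hypothetical callable standing in for the trained binary network, and the light curves and `toy_classify` rule in the usage part are invented for illustration; they are not HSC data.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical representation: each SN is a list of (survey_epoch, flux) pairs.
LightCurve = List[Tuple[int, float]]
CLASSES = ("Ia", "nonIa")


def confusion_matrix(truth: Sequence[str], pred: Sequence[str]) -> List[List[int]]:
    """Rows: true class, columns: predicted class, both ordered as CLASSES."""
    index = {c: i for i, c in enumerate(CLASSES)}
    matrix = [[0] * len(CLASSES) for _ in CLASSES]
    for t, p in zip(truth, pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def accuracy(matrix: List[List[int]]) -> float:
    """Fraction of SNe on the diagonal of the confusion matrix."""
    total = sum(map(sum, matrix))
    return sum(matrix[i][i] for i in range(len(matrix))) / total


def accuracy_vs_epochs(
    curves: Dict[str, LightCurve],
    truth: Dict[str, str],
    classify: Callable[[LightCurve], str],
    max_epoch: int,
) -> Dict[int, float]:
    """Binary accuracy as survey epochs are added one at a time."""
    result = {}
    for n in range(1, max_epoch + 1):
        names, preds = [], []
        for name, curve in curves.items():
            visible = [pt for pt in curve if pt[0] <= n]  # data available by epoch n
            if not visible:  # SN not yet detected at this epoch
                continue
            names.append(name)
            preds.append(classify(visible))
        if names:
            result[n] = accuracy(confusion_matrix([truth[k] for k in names], preds))
    return result


def toy_classify(points: LightCurve) -> str:
    """Hypothetical stand-in classifier: Ia if the brightest visible flux exceeds 1.0."""
    return "Ia" if max(flux for _, flux in points) > 1.0 else "nonIa"


if __name__ == "__main__":
    curves = {
        "sn_a": [(1, 0.5), (2, 1.5), (3, 1.2)],
        "sn_b": [(1, 0.4), (2, 0.6), (3, 0.7)],
        "sn_c": [(3, 0.3)],  # first detected at epoch 3
    }
    truth = {"sn_a": "Ia", "sn_b": "nonIa", "sn_c": "Ia"}
    print(accuracy_vs_epochs(curves, truth, toy_classify, max_epoch=3))
```

With these invented curves the accuracy rises from 0.5 at epoch 1 to 1.0 at epoch 2 and then drops to about 0.67 at epoch 3, because sn_c enters the sample with a single faint point; this mirrors, in miniature, the explanation offered above for the partial decreases.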