
Robert W Bickley, Scott Wilkinson, Leonardo Ferreira, Sara L Ellison, Connor Bottrell, Debarpita Jyoti, The effect of image quality on galaxy merger identification with deep learning, Monthly Notices of the Royal Astronomical Society, Volume 534, Issue 3, November 2024, Pages 2533–2550, https://doi.org/10.1093/mnras/stae2246


ABSTRACT

Studies have shown that the morphologies of galaxies are substantially transformed following coalescence after a merger, but post-mergers are notoriously difficult to identify, especially in imaging that is shallow or low resolution. We train convolutional neural networks (CNNs) to identify simulated post-merger galaxies in a range of image qualities, modelled after five real surveys: the Sloan Digital Sky Survey (SDSS), the Dark Energy Camera Legacy Survey (DECaLS), the Canada–France Imaging Survey (CFIS), the Hyper Suprime-Cam Subaru Strategic Program (HSC-SSP), and the Legacy Survey of Space and Time (LSST). Holding constant all variables other than imaging quality, we present the performance of the CNNs on reserved test set data for each image quality. The success of CNNs on a given data set is found to be sensitive to both imaging depth and resolution. We find that post-merger recovery generally increases with depth, but that limiting 5|$\sigma$| point-source depths in excess of |$\sim 25$| mag, similar to what is achieved in CFIS, are only marginally beneficial. Finally, we present the results of a cross-survey inference experiment, and find that CNNs trained on a given image quality can sometimes be applied to different imaging data to good effect. The work presented here therefore represents a useful reference for the application of CNNs for merger searches in both current and future imaging surveys.

1 INTRODUCTION

After merging galaxies coalesce into a single post-merger remnant, simulations predict that they are likely to experience an epoch of rapid change. When the merger is gas-rich, morphological disturbances (e.g. as studied in Toomre & Toomre 1972; Barnes & Hernquist 1993; Lotz et al. 2008; Bignone et al. 2017; Santucci et al. 2024) in the post-merger are expected to drive gas towards the centre. The elevated gas densities in turn give rise to elevated central star formation rates (SFRs; Mihos & Hernquist 1996; Di Matteo et al. 2008; Hopkins et al. 2013; Ji, Peirani & Yi 2014; Moreno et al. 2015; Rodríguez Montero et al. 2019; Brown et al. 2023) and supermassive black hole (SMBH) accretion rates (Springel, Di Matteo & Hernquist 2005; Park, Smith & Yi 2017; Sivasankaran et al. 2022; Byrne-Mamahit et al. 2023, 2024). SMBH accretion is an energetic phenomenon, and energy injection by the SMBH, often referred to as active galactic nucleus (AGN) feedback, can lead to regulation, suppression, and even truncation of star formation in post-merger galaxies (Johansson, Naab & Burkert 2009; Barai et al. 2014; Choi et al. 2014; Davies, Pontzen & Crain 2022; Zheng et al. 2022; Quai et al. 2023).

Many of these theoretical predictions have been borne out by observations. Multiple observational studies have found evidence of elevated SFRs (Ellison et al. 2013; Guo et al. 2016; Pan et al. 2019; Thorp et al. 2019; Osborne et al. 2020; Thorp et al. 2022; Tanaka et al. 2023; Reeves & Hudson 2024), SMBH activity (Ellison et al. 2013; Satyapal et al. 2014; Weston et al. 2017; Ellison et al. 2019; Gao et al. 2020; Li et al. 2023b; Comerford et al. 2024), and eventual truncation of star formation (Ellison et al. 2022; Otter et al. 2022; Li et al. 2023a) in post-merger galaxies.

In observations, the presence and strength of the statistical connection between coalescence after a merger event and the subsequent physical metamorphosis is highly sensitive to the method by which the merger sample is selected. Indeed, a number of studies do not find a statistically significant link between elevated star formation, AGN detection, and mergers (e.g. Cisternas et al. 2011; Kocevski et al. 2012; Schawinski et al. 2012; Pearson et al. 2019; Villforth et al. 2019; Secrest et al. 2020).

A variety of methods have been used to identify post-mergers in observations, including manual inspection (e.g. Nair & Abraham 2010; Ellison et al. 2013; Li et al. 2023b), non-parametric morphological statistics (e.g. Conselice 2003; Lotz, Primack & Madau 2004; Pawlik et al. 2016; Rodriguez-Gomez et al. 2019), machine learning-based combinations of statistics (e.g. Nevin et al. 2019; Wilkinson et al. 2024), and deep learning methods (Ackermann et al. 2018; Pearson et al. 2019; Walmsley et al. 2019; Ferreira et al. 2020; Wang, Pearson & Rodriguez-Gomez 2020). Each approach has benefits and drawbacks, and each yields post-merger samples that are, to varying degrees, pure (i.e. containing relatively few non-post-merger interlopers), complete (containing a large proportion of the actual post-mergers in the parent sample being studied), and representative (containing galaxies that represent the typical demographical distribution of true post-mergers). Purity and representativeness are essential for the recovery of quantitatively accurate results about post-mergers, and completeness benefits statistical power.

Deep learning methods like convolutional neural networks (CNNs) and vision transformers have proven useful by a number of standards for post-merger identification (e.g. in Bottrell et al. 2019; Wang et al. 2020; Bickley et al. 2021; Avirett-Mackenzie et al. 2024; Ferreira et al. 2024). They can quickly make predictions of merger status for large image samples, and appear to be capable of identifying complete post-merger samples. A number of efforts to use machine learning for merger classification are summarized in Table 1. Even though they can be very accurate, single deep learning models (e.g. the approach used in Bickley et al. 2021; Bottrell et al. 2022) are severely disadvantaged by the naturally low incidence rate of true post-mergers in the low-z Universe. Generally, additional filtration of the predicted post-merger sample by visual classifiers (e.g. in Bickley et al. 2022, 2024) or the use of a multi-model classification framework (e.g. Ferreira et al. 2024; La Marca et al. 2024; Pearson et al. 2024) can improve on the purity of lone classifiers. Multi-model frameworks are nevertheless sensitive to the performance of their constituent individual classifiers. In this work, we therefore focus on the performance of single CNNs for post-merger classification.

Table 1. The performance reported by several other works for simulation-trained deep learning identification of galaxy mergers. Efforts in the literature use a wide range of training sets; some use real, pre-labelled galaxy images, while others use a simulation-based approach. The data also come from multiple surveys, or use varying implementations of observational realism. The models used for post-merger classification also vary, but custom CNNs with architectures like the one used in this work are popular.

| Publication | Training set | Survey | Model | Completeness |

|---|---|---|---|---|

| Pearson et al. (2019) | EAGLE pre- and post-mergers, matched controls | SDSS | Dieleman, Willett & Dambre (2015) | 0.65 |

| Wang et al. (2020) | TNG100–1 pre- and post-mergers, matched controls | KiDS | Simonyan & Zisserman (2014) | 0.72 |

| Bottrell et al. (2022) | TNG100–1 post-mergers, matched controls | None | ResNet38-V2 | 0.93 |

| Pearson et al. (2022) | Galaxy Zoo (Lintott et al. 2008) responses | KiDS | Custom CNN + ANN | 0.86 |

| Ferreira et al. (2022) | TNG100–1 post-mergers, matched star-forming galaxies | Hubble | Custom CNN | 0.80 |

| Walmsley et al. (2022) | Galaxy Zoo responses, multiple categories | DECaLS | Zoobot, Tan & Le (2019) | 0.88 |

| Domínguez Sánchez et al. (2023) | NewHorizon galaxies with or without visible tidal features | HSC | Custom CNN | 0.85 |

| Omori et al. (2023) | TNG50 pre- and post-mergers, matched controls | HSC | Walmsley et al. (2022) | 0.76 |

| Avirett-Mackenzie et al. (2024) | TNG100–1 post-mergers, matched controls | SDSS | Custom CNN | 0.81 |

| Margalef-Bentabol et al. (2024) | TNG100–1 pre- and post-mergers, non-mergers | HSC | Walmsley et al. (2022) | 0.78 |

| Ferreira et al. (2024) | TNG100–1 pre- and post-mergers, non-mergers | CFIS | Multimodel ensemble | 0.84 |

Several studies have adopted a simulation-based approach to post-merger searches by training machine vision tools on images of galaxies from cosmological hydrodynamical box simulations (e.g. Pearson et al. 2019; Wang et al. 2020; Bickley et al. 2021; Bottrell et al. 2022; Ferreira et al. 2022; Domínguez Sánchez et al. 2023; Omori et al. 2023; Avirett-Mackenzie et al. 2024; Margalef-Bentabol et al. 2024). In this work, we train models using galaxy stellar morphologies from the 100–1 run of the IllustrisTNG project simulation suite (Marinacci et al. 2018; Naiman et al. 2018; Nelson et al. 2018; Pillepich et al. 2018; Springel et al. 2018; Nelson et al. 2019).

The completeness and representativeness of a post-merger sample identified by a simulation-trained CNN are limited by the degree of realism of galaxies from the simulation, and the quality of observational realism created when mock observations of simulated galaxies are performed. These limiting factors have already been explored in some depth in the literature. For example, Rodriguez-Gomez et al. (2019) demonstrate |$1\sigma$| statistical agreement in the non-parametric morphological measurements taken from galaxies in the low-z Universe and galaxies from IllustrisTNG. Eisert et al. (2024), meanwhile, find that there is a 70 per cent overlap in the parameter space uncovered via contrastive learning between real low-z galaxies and IllustrisTNG galaxies. Ferreira et al. (2024) also demonstrate that Uniform Manifold Approximation and Projection (UMAP) representations of the parameter spaces occupied by Canada–France Imaging Survey (CFIS) and IllustrisTNG galaxies with CFIS realism are in good qualitative agreement. Bottrell et al. (2019) verified that observational realism is important in training CNNs that will be eventually applied to real observational data sets. While the realism of galaxy appearances in IllustrisTNG and in the training set limits the maximum potential of our approach, it is not so much a hindrance as to prevent the successful identification of mergers in the low-z Universe (see Bickley et al. 2022).

Image quality has previously been discussed as a factor in the success of morphological classification (e.g. Lotz et al. 2004; Lisker 2008), and efforts have also been made in the literature to characterize the benefits of current and next-generation imaging surveys. For example, Martin et al. (2022) investigate the potential observability of low-surface brightness features in the Legacy Survey of Space and Time (LSST; Ivezić et al. 2019) at the Vera C. Rubin Observatory, and Domínguez Sánchez et al. (2023) perform a similar assessment using CNNs to identify tidal features in Hyper Suprime-Cam (Aihara et al. 2022) imaging. The most direct literature precursor to this study, Wilkinson et al. (2024), contains a systematic analysis of merger observability on a grid of depth and resolution, using individual and machine-combined non-parametric morphological statistics. Better (i.e. deeper, higher resolution) imaging data are generally considered to be beneficial, but imaging quality must sometimes be sacrificed for quantity (i.e. extent of on-sky coverage) in pursuit of large merger samples and scientific results with good statistical significance. McElroy et al. (2022) conduct a similar investigation of the role of field of view in successful merger identification using non-parametric morphological statistics. Meanwhile, de Albernaz Ferreira & Ferrari (2018) explore the specific connection between redshift and morphology in several imaging surveys, identifying suitable z ranges for robust morphological characterization. As large, high-quality imaging data sets from multiple observatories become more widely available, survey depth and resolution are becoming factors that astronomers can choose when designing experiments. Still greater degrees of control will be available in coming years, as data from LSST become available.
In this work, we will explore the extent to which the success of low-z post-merger searches is limited by imaging, using five real surveys – SDSS, DECaLS, CFIS, the Hyper Suprime-Cam Subaru Strategic Program Wide field (HSC-W), and the projected 10-yr depth of LSST (hereafter referred to simply as LSST) – to sample the technical parameter space broadly referred to as ‘imaging quality’.

2 DATA AND METHODS

Several methodological improvements and insights have been developed since our original work presented in Bickley et al. (2021), so our approach to this comparative study is described here in detail, even though the experiments are philosophically similar (involving training CNNs on mock images of post-merger and control galaxies from TNG100-1). In this section, we will explain how post-merger and non-post-merger control galaxies are selected from the simulation, how mock observations of the galaxies are performed, and how the CNN is trained. We will also detail how predictions of merger status are made.

2.1 TNG100–1 galaxy selection

We use matched samples of post-merger galaxies and control galaxies to explore the potential of CNNs as a function of image quality. The selection for both classes is updated from that used in Bickley et al. (2021), and is designed to select a somewhat smaller number of galaxies that are more unambiguously post-mergers or non-post-mergers.

2.1.1 Post-mergers

The post-merger and control galaxy selection for this work is based on that of Ferreira et al. (2024). Ferreira et al. (2024) themselves use the Byrne-Mamahit et al. (2024) approach of searching the TNG100–1 simulation merger trees to determine merger status. As in Bickley et al. (2021), Ferreira et al. (2024) use galaxy metadata from TNG100-1 to select galaxies from snapshots between simulation redshifts |$0\lt z\lt 1$|. Ferreira et al. (2024) argue that galaxy morphologies outside of this redshift range are expected to be statistically and qualitatively different from those found at low-z.

Simulation quality control cuts are also enforced; SubhaloFlag = True ensures that galaxies have dark matter and total mass properties consistent with cosmological origin, and log|$(\mathrm{{\it M}_{\star }/{\rm M}_{\odot }})\gt 10$| ensures that each galaxy contains at least |$\sim 7000$| star particles. Departing from the selection used in Bickley et al. (2021), there is also an upper mass cut at log|$(\mathrm{{\it M}_{\star }/{\rm M}_{\odot }})\lt 11$|. The upper cut is motivated by control matching: since a disproportionate number of galaxies with high stellar masses are experiencing a merger, it is difficult to match a representative sample of non-merging control galaxies to them. From this subset Ferreira et al. (2024) select a general merger sample with stellar mass ratios |$\mu \gt $|1:10, and identify a broad post-merger class including all galaxies that have coalesced after a merger that took place in the last 1.7 Gyr. The 1.7 Gyr time window for merger selection is designed to capture the vast majority of galaxies that might exhibit post-merger-like morphologies, since mergers may be identifiable up to 2 Gyr after coalescence (see Lotz et al. 2008). Ferreira et al. (2024) again follow Byrne-Mamahit et al. (2024) and use an updated measurement of |$\mu$|, which considers the mass ratio between interacting companion galaxies at a time when they were at least 50 kpc apart. In comparing the masses of interacting galaxies while they are still well separated, one avoids the effects of ‘numerical stripping’, a simulation problem in which galaxy masses can be under-estimated when particles are wrongly associated with a nearby neighbour. For this work, we take the subset of immediate post-mergers for which coalescence took place in the simulation snapshot prior to imaging (i.e. more than one progenitor subhalo from the previous snapshot is associated with a new subhalo in the present snapshot).
Algorithmically identified post-mergers in TNG100-1 have a range of characteristics, with some examples still hosting more than one stellar nucleus, and others having the appearance of a more complete coalescence. Visual inspection of the post-merger sample indicates that cases with multiple nuclei are separated by |$\lt 10$| kpc, and share a common stellar envelope (conveniently, a common criterion for visual identification of post-mergers). The final sample of post-mergers for this work contains 1627 galaxies. The main differences between the post-merger sample used in this paper and that used in Bickley et al. (2021) are the upper limit on |$\mathrm{{\it M}_{\star }}$|, the updated |$\mu$| estimate for the simulation, and the enforcement of the SubhaloFlag.
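The selection cuts described above can be illustrated with a minimal sketch. It is not the pipeline used by Ferreira et al. (2024); the function name, the argument names, and the array layout are all hypothetical, and the treatment of the boundary values (strict versus inclusive inequalities) is an assumption.

```python
import numpy as np

def select_post_mergers(log_mstar, subhalo_flag, mass_ratio,
                        t_post_merger_gyr, immediate_only=True):
    """Return a boolean mask of galaxies passing the cuts in Section 2.1.1.

    All inputs are hypothetical per-galaxy arrays:
      log_mstar          log10 stellar mass in solar masses
      subhalo_flag       TNG SubhaloFlag (True = cosmological origin)
      mass_ratio         stellar mass ratio mu of the most recent merger
      t_post_merger_gyr  time since coalescence in Gyr (0 = previous snapshot)
    """
    log_mstar = np.asarray(log_mstar, dtype=float)
    subhalo_flag = np.asarray(subhalo_flag, dtype=bool)
    mass_ratio = np.asarray(mass_ratio, dtype=float)
    t_pm = np.asarray(t_post_merger_gyr, dtype=float)

    # Quality cuts: cosmological origin and 10 < log(M*/Msun) < 11.
    quality = subhalo_flag & (log_mstar > 10.0) & (log_mstar < 11.0)

    # Broad post-merger class: mu > 1:10 and coalescence within 1.7 Gyr.
    broad = (mass_ratio > 0.1) & (t_pm <= 1.7)

    # Immediate post-mergers: coalescence in the snapshot prior to imaging,
    # encoded here (by assumption) as a post-merger time of exactly zero.
    if immediate_only:
        broad &= (t_pm == 0.0)

    return quality & broad
```

Applied to a small catalogue, only galaxies passing every cut are retained; setting `immediate_only=False` would recover the broader 1.7 Gyr class.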

2.1.2 Controls

An equal number (1627) of control galaxies are matched to the post-mergers described in Section 2.1.1. The control pool includes two types of non-merger galaxies: ‘true’ and ‘potential’. ‘True’ non-mergers have not experienced a merger with a mass ratio of >1:10 in the last 1.7 Gyr (|$T_{\text{post-merger}}\gt 1.7$|), and do not experience a merger for at least 1.7 Gyr after the time of imaging (|$T_{\text{until-merger}}\gt 1.7$|). ‘Potential’ non-mergers have not experienced a merger with a mass ratio of >1:10 in the last 1.7 Gyr, and are unlikely to experience a merger event in the next few Gyr based on a nearest neighbour separation criterion (|$r_{1}\gt 50$| kpc). The mass ratio criterion for controls means that galaxies that have experienced mergers more minor than 1:10 can be included in the control sample. The potential non-mergers are distinct from true non-mergers because the simulation runtime came to an end before their merger trees could be fully inspected for potential future merger events. Both types of non-mergers are included in the control pool from which the matched controls in this work are drawn.

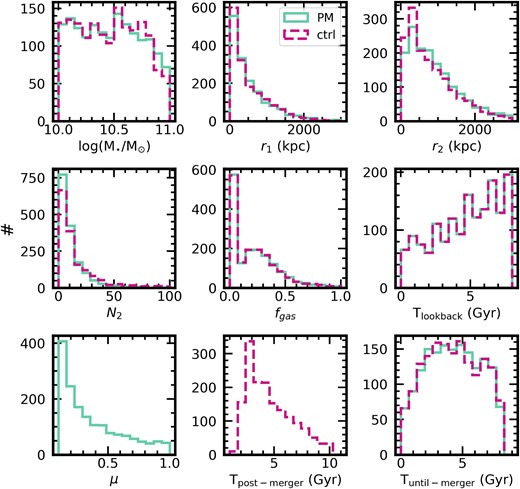

Individual control galaxies are matched to the post-mergers on three parameters: |$\mathrm{{\it M}_{\star }}$|, z, and gas fraction |$f_{\text{gas}}$|, where |$f_{\text{gas}}$| is a unitless quantity defined as the gas mass divided by the sum of the stellar and gas masses. The |$\mathrm{{\it M}_{\star }}$| matching tolerance is 0.05 dex, and the |$f_{\text{gas}}$| tolerance is 0.05. Control galaxies must also be taken from the same simulation snapshot (i.e. with identical lookback time, |$T_{\text{lookback}}$|) as their post-merger counterparts. Gas content and the availability of gas for forming new stars are known to affect the observed morphologies of post-mergers; see Wilkinson et al. (2024), Lotz et al. (2010), and Bell et al. (2006). The nearest neighbour in the |$\mathrm{{\it M}_{\star }}{\!-\!}f_{\text{gas}}$| plane is taken as the best control for each merger. Controls are matched without replacement so that the final samples of mergers and controls contain the same number of unique galaxies. Fig. 1 shows that the merger identification and control matching procedures have been effective in identifying statistically comparable galaxy samples whose primary difference is merger status. Fig. 1 also shows the sample statistics for a number of parameters not used for control matching, including second nearest neighbour distance (|$r_{2}$|) and the number of neighbour galaxies within 2 Mpc (|$N_{2}$|).
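One way to picture the matching is the following sketch, which pairs each post-merger with its nearest unused control from the same snapshot. The text does not specify how distances in the |$\mathrm{{\it M}_{\star }}{\!-\!}f_{\text{gas}}$| plane are scaled or in what order post-mergers are processed, so scaling each axis by its tolerance and matching greedily in catalogue order are assumptions; the function and dictionary keys are hypothetical.

```python
import numpy as np

def match_controls(post_mergers, pool, tol_mstar=0.05, tol_fgas=0.05):
    """Greedy nearest-neighbour control matching without replacement.

    `post_mergers` and `pool` are hypothetical dicts of equal-length arrays
    with keys 'snap' (snapshot number), 'log_mstar', and 'f_gas'.
    Returns, for each post-merger, the index of its control in `pool`
    (or -1 if no control lies within the tolerances).
    """
    pm = {k: np.asarray(v) for k, v in post_mergers.items()}
    pl = {k: np.asarray(v) for k, v in pool.items()}
    available = np.ones(len(pl["snap"]), dtype=bool)
    matches = np.full(len(pm["snap"]), -1, dtype=int)

    for i in range(len(pm["snap"])):
        d_m = np.abs(pl["log_mstar"] - pm["log_mstar"][i])
        d_f = np.abs(pl["f_gas"] - pm["f_gas"][i])
        # Candidates: unused, same snapshot, inside both tolerances.
        ok = (available & (pl["snap"] == pm["snap"][i])
              & (d_m <= tol_mstar) & (d_f <= tol_fgas))
        if not ok.any():
            continue
        # Assumption: distance is measured in tolerance-scaled units.
        dist = np.hypot(d_m / tol_mstar, d_f / tol_fgas)
        dist[~ok] = np.inf
        j = int(np.argmin(dist))
        matches[i] = j
        available[j] = False  # without replacement
    return matches
```

Because each control is removed from the pool once used, the matched samples contain equal numbers of unique galaxies whenever every post-merger finds a control.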

Figure 1. The stellar mass, lookback time, gas fraction (|$f_{\text{gas}}$|), mass ratio |$\mu$|, and environment (|$r_{1}$|, |$r_{2}$|, |$N_{2}$|) statistics for the post-mergers (teal) and non-merger controls (magenta) used to train the CNNs in this work. We also include |$T_{\text{post-merger}}$| for the controls (but not for the post-mergers because they all have |$T_{\text{post-merger}}=0$|) and |$T_{\text{until-merger}}$| for both classes.

2.2 Mock observations

The mock observation approach used in this work is similar to that in Bickley et al. (2021), with small modifications. First, redshifts are no longer chosen at random from a real population of galaxy redshifts. Instead, we identify five redshift bins between |$0\lt z\lt 0.3$| that contain equal numbers of galaxies in the seventh data release (DR7) of the Sloan Digital Sky Survey (SDSS; York et al. 2000). The redshift values in the centres of each bin are |$z={0.036,0.101,0.150,0.198,0.256}$|. At each of the five redshifts, we perform one mock observation with a fixed physical diameter of 100 kpc for each of four camera angles at the vertices of an equilateral tetrahedron with the galaxy at its centre. We therefore make a total of 20 (five redshifts times four camera angles) mock observations of each galaxy.
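The viewing geometry and image count can be checked with a short sketch. The redshifts are the bin centres quoted above; the particular orientation of the tetrahedron relative to the simulation box is an assumption, as are all function and variable names.

```python
import numpy as np

# Bin-centre redshifts quoted in the text.
REDSHIFTS = (0.036, 0.101, 0.150, 0.198, 0.256)

def tetrahedral_directions():
    """Unit vectors towards the four vertices of a regular tetrahedron
    centred on the galaxy (orientation chosen arbitrarily here)."""
    vertices = np.array([[1, 1, 1],
                         [1, -1, -1],
                         [-1, 1, -1],
                         [-1, -1, 1]], dtype=float)
    return vertices / np.linalg.norm(vertices, axis=1, keepdims=True)

def mock_observation_views():
    """All (redshift, line-of-sight) combinations for one galaxy."""
    return [(z, tuple(d)) for z in REDSHIFTS for d in tetrahedral_directions()]

views = mock_observation_views()          # 5 redshifts x 4 camera angles
n_galaxies = 1627 + 1627                  # post-mergers + matched controls
n_images_per_survey = len(views) * n_galaxies
```

Any two of the four lines of sight are separated by the tetrahedral angle (cosine of −1/3), so the camera angles sample the galaxy evenly without favouring a particular axis.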

Since real skies are not available from all of the surveys we plan to study (10-yr imaging co-adds from LSST are not yet available at the time of writing), we use synthetic image backgrounds based on the reported observational parameters for each survey. We repeat the mock observation procedure for each of the five surveys, so that the complete image data set used in this work includes 20 images for each of 1627 post-mergers and 1627 controls (a total of 65 080 images) for each of five surveys. The observational parameters for each of the surveys are outlined in Table 2, including the charge-coupled device (CCD) pixel scale (on-sky angle subtended by a single pixel), the PSF (expected or known instrumental PSF for the survey, dominated by the atmosphere), |$\sigma _{\text{sky}}$| (the standard deviation of the Gaussian sky noise in magnitudes per square arcsecond used to generate the mock observation), and the |$5\sigma$| point-source depth (a convenient quantity reported as an at-a-glance metric of the limiting depth for many surveys). The essential statistics used to determine |$\sigma _{\text{sky}}$| for each survey were found at the following sources: SDSS (footnote 1), DECaLS (Dey et al. 2019), CFIS DR5 (footnote 2), HSC-W (Aihara et al. 2022), and the LSST 10-yr co-adds (Ivezić et al. 2019).

Table 2. Essential parameters describing the image quality of r-band data from each of the surveys included in this comparison. The |$5\sigma$| point-source depths and PSFs are plotted against one another later in Fig. 10.

| Survey | CCD pixel scale (arcsec pix$^{-1}$) | PSF (arcsec) | $\sigma_{\text{sky}}$ (mag arcsec$^{-2}$) | $5\sigma$ point-source depth (mag) |

|---|---|---|---|---|

| SDSS | 0.396 | 1.4 | 24.33 | 22.7 |

| DECaLS | 0.262 | 1.18 | 24.97 | 23.54 |

| CFIS DR5 | 0.186 | 0.69 | 25.6 | 25.0 |

| HSC-W | 0.17 | 0.75 | 26.95 | 26.5 |

| LSST 10y | 0.2 | 0.7 | 28.12 | 27.5 |

A new approach for generating synthetic sky backgrounds was developed for this effort. First, the light from the synthetic galaxy is re-binned to the required CCD pixel scale and blurred with an artificial Gaussian PSF with the same angular size as reported in the literature (shown in Table 2). The implementation of PSF blurring is the same for each of the five surveys. Next, the parameter |$\sigma _{\text{sky}}$| is needed to specify the amplitude of the noise that will be added to approximate the sky. Computing |$\sigma _{\text{sky}}$| analytically without access to imaging is non-trivial, since |$\sigma _{\text{sky}}$| is sensitive to the CCD pixel scale, the PSF, the survey’s true sensitivity (approximated here by the |$5\sigma$| point source depth), and the aperture within which the signal (e.g. from a galaxy image or a point source) is compared to the noise. In order to determine |$\sigma _{\text{sky}}$| in a homogeneous way for each of the five surveys, we used the following procedure:

1. Create a point source with a brightness equal to the reported limiting |$5\sigma$| point-source depth.

2. Convolve the source with the PSF of the relevant survey.

3. Add the source to a test image containing an arbitrarily high level of Gaussian noise at the pixel scale of the survey.

4. Using SourceExtractor (Bertin & Arnouts 1996) as implemented in Python by Barbary (2016), measure the flux and flux error within some circular aperture.

5. Repeat all previous steps with incrementally less noise in steps of 0.025 mag arcsec|$^{-2}$| until the flux divided by the flux error is at least 5 (i.e. |$5\sigma$|).

6. Repeat all previous steps 500 times and take the median to ensure there is no dependence on the noise realization.

7. If the aperture used to estimate the |$5\sigma$| point-source depth is not given, repeat all previous steps with varying circular apertures until the flux (at the point of a |$5\sigma$| detection) is equal to the reported |$5\sigma$| limiting point-source depth of the survey in magnitudes.

8. Report the standard deviation used to generate the final sky background as |$\sigma _{\text{sky}}$|.
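The core loop of this procedure can be sketched as follows. This is a simplified stand-in, not the authors' code. It replaces SourceExtractor with a plain pixel sum inside a circular mask, and it assumes the tabulated PSF is a Gaussian full width at half-maximum. It also assumes |$\sigma _{\text{sky}}$| is the per-pixel noise expressed as a surface brightness, and that a single noise pattern is rescaled within each trial. The aperture search in the penultimate step is omitted, and all names and default values are hypothetical.

```python
import numpy as np

def sigma_sky_for_depth(depth_mag, fwhm_arcsec, pix_scale, aper_radius_arcsec,
                        zero_point=22.5, start_mu=20.0, step=0.025,
                        n_trials=50, size=41, seed=0):
    """Estimate the sky noise level (mag arcsec^-2) at which a point source
    of magnitude `depth_mag` is measured at 5 sigma in a circular aperture.
    The result does not depend on `zero_point`, which only sets flux units.
    """
    rng = np.random.default_rng(seed)
    yy, xx = np.mgrid[:size, :size] - (size - 1) / 2.0
    r2 = xx**2 + yy**2

    # Point source convolved with a Gaussian PSF is the PSF itself,
    # normalised to the total flux of the source.
    sigma_pix = fwhm_arcsec / pix_scale / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    psf = np.exp(-r2 / (2.0 * sigma_pix**2))
    source = 10.0 ** (-0.4 * (depth_mag - zero_point)) * psf / psf.sum()

    aperture = r2 <= (aper_radius_arcsec / pix_scale) ** 2
    n_pix = aperture.sum()

    results = []
    for _ in range(n_trials):
        unit_noise = rng.normal(0.0, 1.0, source.shape)
        mu = start_mu  # bright surface brightness = high noise
        while True:
            # Per-pixel noise (flux units) for surface brightness mu.
            noise_sigma = 10.0 ** (-0.4 * (mu - zero_point)) * pix_scale**2
            image = source + noise_sigma * unit_noise
            flux = image[aperture].sum()
            flux_err = noise_sigma * np.sqrt(n_pix)
            if flux / flux_err >= 5.0:
                break
            mu += step  # incrementally less noise
        results.append(mu)
    return float(np.median(results))
```

A call such as `sigma_sky_for_depth(22.7, 1.4, 0.396, 1.4)` uses the SDSS depth, PSF, and pixel scale from Table 2 with an assumed 1.4 arcsec aperture radius. Because of the simplifications listed above, the output should be read as illustrative rather than as a reproduction of the tabulated |$\sigma _{\text{sky}}$| values.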

Since the modified version of RealSim (Bottrell et al. 2019) used to generate mock CFIS images in Bickley et al. (2021) accepts a false sky |$\sigma$|, CCD pixel scale, and PSF as input, the availability of |$\sigma _{\text{sky}}$| means that image quality is now fully parametrized. Other than the modifications to the redshift selection and false sky generation methods, the mock observation and image normalization procedures are the same as outlined in section 2.2 of Bickley et al. (2021), in which surface brightness maps are computed from the stellar mass surface density of each galaxy and the r-band absolute magnitudes for TNG100-1 galaxies tabulated in Nelson et al. (2018).

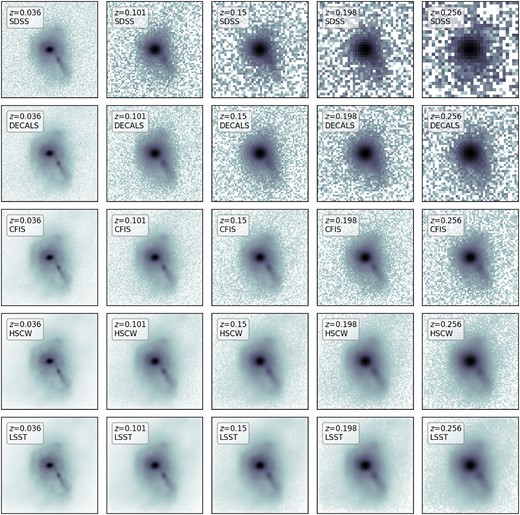

Figs 2 and 3 show a useful cross-section of the data used to train and evaluate the CNNs in this work. In each row, both figures show the five z realizations created for a single camera angle of one post-merger (for Fig. 2) and one control (for Fig. 3) in a single survey. Subsequent rows show the corresponding images from each of the five surveys considered. In SDSS, the prominent tidal tail of the post-merger galaxy is lost in the image noise by |$z=0.2$|, and the post-merger and control look indistinguishable from one another in our highest z realization. The other surveys retain the low-surface brightness features associated with the merger across the redshift range, and the deepest images, from the LSST camera (fourth row in each mosaic), allow for obvious distinction between the two classes even at the highest z studied here.

A mosaic showing all of the survey and redshift realizations for one camera angle for one galaxy from the post-merger sample. Images are 100 kpc on a side. Redshift increases from left to right, and particularly in the shallower surveys studied in this work (e.g. SDSS and DECaLS) the decrease in visibility of the merger-induced tidal tail with increasing mock observation z can be noted. The differences between rows highlight the importance of PSF, CCD scale resolution, and limiting depth in preserving the morphological signatures of a recent merger event. The galaxy images are shown with |$1\sigma$| log-normalized scaling to better highlight the low-surface brightness features.

The same as Fig. 2, but for one galaxy from the control sample. Even for an undisturbed galaxy, substructure detail becomes increasingly visible for surveys with deeper limiting magnitudes or finer resolution.

2.3 CNN architecture and training strategy

We train CNNs on image data prepared according to the realism parameters of each survey. Images for training are partitioned into training, test, and validation sets, which represent 80, 10, and 10 per cent of the data, respectively. All 20 images associated with each post-merger galaxy, and all 20 images associated with the matched control for a given post-merger galaxy, only ever appear within one partition. The aim of this strategy is to prevent any amount of cross-contamination between the partitions, which could give rise to artificially high-performance metrics. Moreover, the images corresponding to each galaxy appear within the same partition for all five surveys. In this way, each of the five CNNs is trained on nearly identical data, with the only difference being image quality. Controlling for as many nuisance parameters as possible (e.g. information leaking between data partitions, small fluctuations in performances due to differently-shuffled data) maximizes the significance of the comparison between the CNNs conducted later in Section 3.
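The partitioning rule above can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the identifier `pair_id` (shared by a post-merger and its matched control), the seed, and the toy catalogue size are all assumptions made for the example. Splitting on pairs rather than on images keeps every image of a galaxy and its control inside one partition, and reusing the same seed for every survey reproduces the same split across image qualities.

```python
import random


def partition_pairs(pair_ids, fractions=(0.8, 0.1, 0.1), seed=0):
    """Assign each post-merger/control pair to exactly one partition."""
    ids = sorted(set(pair_ids))
    # A fixed seed gives the same split for every survey realization.
    random.Random(seed).shuffle(ids)
    n_train = round(fractions[0] * len(ids))
    n_test = round(fractions[1] * len(ids))
    split = {}
    for i, pid in enumerate(ids):
        if i < n_train:
            split[pid] = "train"
        elif i < n_train + n_test:
            split[pid] = "test"
        else:
            split[pid] = "validation"
    return split


# Hypothetical catalogue: 100 pairs, each with 20 post-merger and 20 control images.
images = [(pid, label, k)
          for pid in range(100)
          for label in ("post-merger", "control")
          for k in range(20)]

split = partition_pairs(pid for pid, _, _ in images)

# Every image inherits the partition of its pair, so no galaxy straddles partitions.
assignment = {img: split[img[0]] for img in images}
```

Because the split is keyed on the pair rather than on the image, no amount of shuffling within a partition can leak a galaxy into another one.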

The CNN architecture used in this work is identical to that used in Bickley et al. (2021) except for the dimensions of the input layer. Since a |$138\times 138$|-pixel input image was chosen in Bickley et al. (2021) based on the z demographics of galaxies in CFIS, it is not strictly fair to enforce an input image size of |$138\times 138$| for all five surveys, whose selection functions will include galaxies with different z distributions than CFIS. Images in this work are instead resized to |$128\times 128$| pixels using the anti-aliasing resize algorithm from skimage (footnote 3; van der Walt et al. 2014), and the final images are large enough to capture the essential detail in the galaxy images. An image size of |$128\times 128$| pixels is scientifically arbitrary, but is a popular choice in fixed-input machine vision problems where max pooling operations are used. The resizing operation has no bearing on the pixel-wise sensitivity of the images, since the skimage resize algorithm stretches or shrinks images, rather than re-binning them. Before training, the images are normalized in linear fashion so that their faintest pixel value is fixed at zero and the brightest is fixed at one. The same on-the-fly augmentation algorithm as in Bickley et al. (2021), which applies translational, rotational, and shear transformations of at most 10 per cent during training, is used here as well. All models are allowed to train for an arbitrarily long time as long as their performance is improving – we monitor the value of the loss function (binary cross-entropy, which characterizes cross-contamination between the post-merger and control classes), and if the loss does not improve for 50 training epochs (footnote 4), training is terminated and the model weights from the epoch with the best validation performance are restored.
Training is accelerated using multiple graphics processing units (GPUs) and the ‘mirrored strategy’ approach implemented in tensorflow (footnote 5), in which the responsibilities of training are parallelized across multiple GPUs. Importantly, other than a decrease in training time by a factor proportional to the number of GPUs used, the models behave the same whether or not the mirrored strategy is used.
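The stopping rule described above (stop after 50 epochs without improvement, then restore the best weights) can be sketched in plain Python. This is a framework-agnostic illustration, not the authors' training code: the callables `run_epoch`, `get_weights`, and `set_weights` are hypothetical stand-ins for a real training loop, and the sketch assumes the monitored quantity is the validation loss.

```python
def train_with_early_stopping(run_epoch, get_weights, set_weights,
                              patience=50, max_epochs=10_000):
    """Train until the monitored loss stalls for `patience` epochs.

    run_epoch(epoch) trains for one epoch and returns the monitored loss.
    Returns the best loss and the index of the last epoch that was run.
    """
    best_loss = float("inf")
    best_weights = None
    stale = 0  # epochs since the last improvement
    epoch = -1
    for epoch in range(max_epochs):
        loss = run_epoch(epoch)
        if loss < best_loss:
            best_loss, best_weights, stale = loss, get_weights(), 0
        else:
            stale += 1
            if stale >= patience:
                break
    if best_weights is not None:
        set_weights(best_weights)  # roll back to the best epoch
    return best_loss, epoch


# Toy run: the loss improves for three epochs and then plateaus.
losses = [1.0, 0.8, 0.7] + [0.75] * 100
state = {"weights": None}


def run_epoch(epoch):
    state["weights"] = epoch  # pretend the weights are just the epoch index
    return losses[epoch]


best, last_epoch = train_with_early_stopping(
    run_epoch,
    get_weights=lambda: state["weights"],
    set_weights=lambda w: state.update(weights=w),
)
```

In the toy run the best loss occurs at epoch 2, training continues for 50 further epochs without improvement, and the weights from epoch 2 are restored.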

We tested two other architectures on the deepest and shallowest image data sets (LSST and SDSS, respectively) in order to assess whether the performance achieved in this work was limited by the data (this is the goal, as we are interested in the influence of data on the accuracy of the classifiers) or by the model. Implementations of the AlexNet (Krizhevsky, Sutskever & Hinton 2012) and EfficientNetB0 (footnote 6) architectures performed similarly (within |$\sim 2$| per cent in accuracy for both post-mergers and controls) to what is reported in Section 3. The models’ similar global performance suggests that the results of this study are data-limited, rather than model-limited.

After training, each of the five CNNs is evaluated on the 10 per cent of data reserved for testing. Since our training classes contain equal numbers of images, we consider CNN predictions of |$p(x)\gt 0.5$| to indicate a post-merger classification. Finally, we perform an exhaustive cross-survey inference effort in which each model is evaluated on the test data sets of all five surveys (e.g. the SDSS model is evaluated on the SDSS, DECaLS, CFIS, HSC-W, and LSST test data sets). The model classifications taken from each of the 25 inference sets (five models times five test sets) constitute the new material used to characterize the sensitivity of merger classifications to imaging quality.

3 RESULTS

Having trained all five models and used them to make merger predictions on the test set galaxies, we now present the results, which have been separated into two main categories: same-survey results (Sections 3.1 and 3.2, in which models are evaluated on data with the same construction as their training sets) and cross-survey results (Section 3.3, in which the potential for models to succeed outside of their training regime is explored). We will also pay particular attention to the trends of CNN performance with parameters that could influence the apparent strength of faint tidal morphologies, including redshift, depth, spatial resolution (both pixel scale and PSF), and merger mass ratio. In all cases, ‘completeness’ refers to the fraction of galaxies belonging to a given class (usually the post-mergers) that are classified correctly by the model, while ‘purity’ refers to the fraction of galaxies predicted by the model to be a given class that truly belong to that class.
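The two definitions above translate directly into counts from a confusion matrix. The snippet below is a minimal sketch with invented toy labels and scores. The function name and the label convention (1 for post-merger, 0 for control) are assumptions for illustration, and the threshold of 0.5 follows the convention stated in Section 2.3.

```python
def completeness_and_purity(true_labels, predicted_probs, threshold=0.5):
    """Return (post-merger completeness, control completeness, purity)."""
    tp = fp = fn = tn = 0
    for is_post_merger, p in zip(true_labels, predicted_probs):
        predicted_post_merger = p > threshold
        if is_post_merger and predicted_post_merger:
            tp += 1
        elif is_post_merger:
            fn += 1
        elif predicted_post_merger:
            fp += 1
        else:
            tn += 1
    nan = float("nan")
    # Completeness: fraction of a true class that is recovered.
    pm_completeness = tp / (tp + fn) if tp + fn else nan
    control_completeness = tn / (tn + fp) if tn + fp else nan
    # Purity: fraction of predicted post-mergers that are real post-mergers.
    purity = tp / (tp + fp) if tp + fp else nan
    return pm_completeness, control_completeness, purity


# Toy data: four post-mergers (1) followed by four controls (0).
labels = [1, 1, 1, 1, 0, 0, 0, 0]
probs = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1]
pm_c, ctrl_c, pur = completeness_and_purity(labels, probs)
```

In machine learning terms, completeness corresponds to recall and purity to precision.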

3.1 Same-survey results

The overall success of the CNNs in distinguishing post-mergers from controls can be summarized by conventional machine learning figures of merit for classification – class-wise completeness (i.e. the fractions of post-mergers and controls that are classified correctly), receiver operating characteristic (ROC) curves, and purity-completeness curves (PCCs). Each model is attempting to generalize over the intrinsic and observational diversity of the merger and control samples in order to correctly classify as many galaxies as possible. When models are less successful in classifying a certain subset of galaxies (e.g. one of the classes, or galaxies belonging to a certain mass range), it is because the model’s overall performance was improved even as the subset in question was compromised.

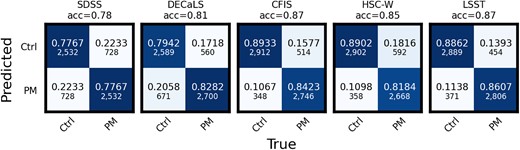

Fig. 4 shows the confusion matrices for models trained and tested on data with realism based on the same survey. The surveys are arranged in order of increasing |$5\sigma$| r-band limiting point-source depth from left to right and top to bottom. SDSS has the lowest total accuracy, as well as the lowest class-wise completenesses, for both post-mergers and controls. Based on the visual quality of the images, this is unsurprising – galaxies at the highest z studied cannot typically be separated by eye in SDSS (see Figs 2 and 3), and visual separability is often a reasonable predictor of the potential of machine vision methods for a given problem. The exact balance between the post-merger and control classes in SDSS suggests that the visual degeneracy is equally confusing in both classes. In trying to adjust itself to account for the lack of merger features in many galaxies that are labelled as mergers, the model ultimately suffers a decrease in overall accuracy.

Confusion matrices for the five trained CNN models, evaluated on test data with the same survey realism as their training data. The overall accuracy for each CNN (total fraction of galaxies labelled correctly) is shown above the panels. The completeness scores for post-mergers (bottom right corner of each confusion matrix) and for non-merger control galaxies (top left corner of each matrix) summarize the class-wise performance of each model.

In DECaLS-quality imaging (representing the next step up from SDSS in terms of depth), the completeness is improved somewhat for both classes, but especially for the post-mergers, presumably since a larger number of galaxies with post-merger labels actually exhibit merger-like morphologies in the training data. Completeness statistics for both classes are again improved in CFIS imaging, since the morphological differences between mergers and controls are more visually distinct with better depth.

Referring to Figs 2 and 3, one can begin to interpret the changes in performance for surveys with better |$5\sigma$| limiting point-source depths than CFIS. At CFIS depth and better, the tidal tail feature in the merger image is visible across the entire redshift range, suggesting that CNNs will nominally be able to distinguish between the classes unless there is a spurious effect (e.g. a chance viewing angle from which a galaxy’s tidal features are obstructed, or a merger that is intrinsically unusually faint). Even though the appearances of individual galaxies in HSC-W and LSST are distinct compared to CFIS, the relatively uniform visibility of merger-induced morphology across the three highest quality data sets is likely responsible for the similarity in performance between the CNNs trained on CFIS, HSC-W, and LSST data. The specific role of depth and resolution in determining the efficacy of each CNN is investigated in greater detail later in Figs 7−9. In interpreting the global performance results, we emphasize that all five models have converged (without improving on the validation data set for 50 epochs).

There is also an apparent ceiling (at least for the degrees of survey realism studied here) for CNN performance for any class-wise completeness or global accuracy score at |$\sim 90$| per cent. The performance limit is consistent with the findings of Bottrell et al. (2022), who report completeness scores between 89 and 90 per cent when idealized TNG100-1 stellar maps are used to train CNNs. The merger completeness of Ferreira et al. (2024) would appear to be lower, at 84 per cent, but we note that the Ferreira et al. (2024) merger class includes both pre- and post-merger galaxies in a wide temporal window. The comparison is therefore not exact. Since the galaxy morphologies are easily visible across the entire redshift range in the deepest data, we propose that this apparent limit is a consequence of intrinsic degeneracy between the stellar morphologies of galaxies belonging to the post-merger and control classes (e.g. some mergers have truly undisturbed appearances as a result of small |$\mu$| or inconvenient viewing angles, regardless of image quality). Indeed, inspection of the five models’ predictions for |$\sim 100$| individual galaxies reveals that certain galaxies are intrinsically problematic, i.e. misclassified regardless of viewing angle, z, or survey due to intrinsic morphology. We do not compare the performance of the CNN on survey-realistic images to its performance on ‘idealized’ data, since multiple z realizations are not possible in a realism-free context. As such, the CNN would need to be trained on a significantly smaller data set, and the comparison would no longer be direct. We can instead refer to Wilkinson et al. (2024), who include an idealized data set in their experiment. Even though a different method is used, Wilkinson et al. (2024) also find that excessive depth is not necessarily beneficial to merger identification. An analogous effect is therefore plausibly responsible for the results of this study.

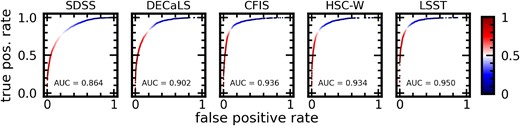

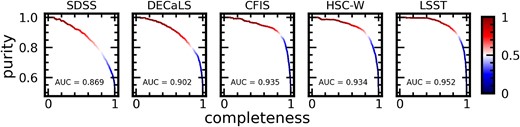

We next use ROC curves to collapse each model’s performance down to a single figure of merit, an area under the curve (AUC) score. Fig. 5 shows ROC curves for the five CNNs, with the AUC score shown in each panel. The curves generally enclose more area with increasing depth, particularly in SDSS (with AUC = 0.864), DECaLS (AUC = 0.902), CFIS (AUC = 0.936), and LSST (AUC = 0.950) imaging. The AUC score for HSC-W (AUC = 0.934) is slightly lower than for CFIS, possibly due to loss of some post-mergers at low-z (see also Fig. 11).

ROC curves, another merit diagram for CNN classifiers, for the five CNNs. ROC curves plot the true positive rate (fraction of post-mergers correctly identified) against the false positive rate (fraction of controls incorrectly classified as post-mergers) as the model decision threshold is varied. The area under the ROC curve is also a figure of merit, with 0.5 equivalent to random performance, and 1.0 indicating perfect separation between the classes. ROC AUC score also increases generally with depth, reflecting again the importance of imaging depth in merger identification.
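For readers less familiar with ROC analysis, the sketch below computes one ROC point and the AUC for a toy sample. It is illustrative only: the function names and toy scores are invented, labels are assumed to be 1 for post-mergers and 0 for controls, and the AUC is evaluated through its rank interpretation (the probability that a randomly chosen post-merger scores higher than a randomly chosen control, with ties counted as one half), which is equal to the area under the ROC curve.

```python
def roc_point(true_labels, scores, threshold):
    """Return (false positive rate, true positive rate) at one threshold."""
    tp = sum(1 for y, s in zip(true_labels, scores) if y == 1 and s > threshold)
    fp = sum(1 for y, s in zip(true_labels, scores) if y == 0 and s > threshold)
    n_pos = sum(1 for y in true_labels if y == 1)
    n_neg = len(true_labels) - n_pos
    return fp / n_neg, tp / n_pos


def roc_auc(true_labels, scores):
    """Area under the ROC curve via pairwise comparison of scores."""
    pos = [s for y, s in zip(true_labels, scores) if y == 1]
    neg = [s for y, s in zip(true_labels, scores) if y == 0]
    # Count post-merger/control pairs that are ranked correctly.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data: four post-mergers (1) followed by four controls (0).
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1]

fpr, tpr = roc_point(labels, scores, threshold=0.5)
auc = roc_auc(labels, scores)
```

The pairwise form is quadratic in sample size, which is acceptable for a sketch but would normally be replaced by a sorted-rank calculation or a library routine for large test sets.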

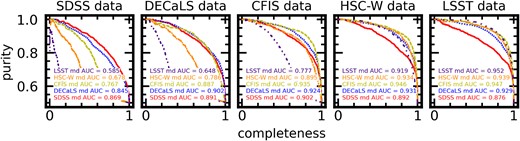

We also show the PCCs and the corresponding AUCs for all five CNNs in Fig. 6. PCCs are distinct from ROC curves in that they highlight the model’s ability to return a sample of post-mergers that is pure and complete as a function of CNN |$p(x)$|. The CFIS-trained model (AUC = 0.94) impressively achieves strong completeness for both classes at a shallower depth. The same-survey figures of merit (completeness scores on post-mergers and controls, and the areas under the ROC and PCCs) for all five models are summarized in Table 3. Since the models are evaluated on equal-sized samples of post-mergers and controls, we note that the purity statistics reported in Table 3 would be lower if the models were used to evaluate a galaxy sample with realistic proportions of mergers and non-mergers.
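The dependence of purity on class balance noted above can be made concrete with a short calculation. The sketch assumes that the class-wise completeness values measured on the balanced test set carry over unchanged to an imbalanced sample. The 1 per cent merger fraction is a purely hypothetical value chosen for illustration and does not come from this work.

```python
def expected_purity(pm_completeness, control_completeness, merger_fraction):
    """Purity of the predicted post-merger sample for a given class mix."""
    true_pos = pm_completeness * merger_fraction
    false_pos = (1.0 - control_completeness) * (1.0 - merger_fraction)
    return true_pos / (true_pos + false_pos)


# (post-merger completeness, control completeness) as listed in Table 3.
table3 = {
    "SDSS": (0.78, 0.78),
    "DECaLS": (0.83, 0.79),
    "CFIS": (0.84, 0.89),
    "HSC-W": (0.82, 0.89),
    "LSST": (0.86, 0.88),
}

# Balanced classes, as in the test sets used here.
balanced = {name: expected_purity(c, k, 0.5) for name, (c, k) in table3.items()}

# Hypothetical sample in which only 1 per cent of galaxies are post-mergers.
rare = {name: expected_purity(c, k, 0.01) for name, (c, k) in table3.items()}
```

With balanced classes the function returns values close to the purity column of Table 3 (small differences arise from rounding of the tabulated completeness values), while the hypothetical 1 per cent merger fraction pushes the purity below 10 per cent for every survey.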

Purity-completeness (or precision-recall, in machine learning parlance) curves for all five models evaluated on like data. Each panel shows the purity (or precision of the predicted merger sample) and completeness (recall of the predicted merger sample) as a function of the model’s decision threshold (shown on the colour bar). The area under the curve is also a figure of merit, with an AUC of 0.5 indicating performance consistent with random, and an AUC of 1.0 indicating perfect separation between the classes by the model. The area under the curve scales generally with the depth of the survey studied, indicating that the ability of our CNN architecture to identify samples that are both pure and complete depends on the observability of faint features in the images.

The class-wise completeness, overall accuracy, and post-merger purity statistics, as well as AUC scores for the ROC and PCCs for the CNNs trained for each survey. Figures are reported for equal-sized samples of mergers and non-mergers, so the purity statistics would be lower for all surveys if the CNNs were applied to a test set with a realistic distribution of merger stages.

| Survey | Post-merger completeness | Control completeness | Accuracy | Purity | Area under ROC | Area under PCC |
|---|---|---|---|---|---|---|
| SDSS | 0.78 | 0.78 | 0.78 | 0.78 | 0.86 | 0.87 |
| DECaLS | 0.83 | 0.79 | 0.81 | 0.80 | 0.90 | 0.90 |
| CFIS DR5 | 0.84 | 0.89 | 0.87 | 0.89 | 0.94 | 0.94 |
| HSC-W | 0.82 | 0.89 | 0.85 | 0.88 | 0.93 | 0.94 |
| LSST 10y | 0.86 | 0.88 | 0.87 | 0.88 | 0.95 | 0.95 |


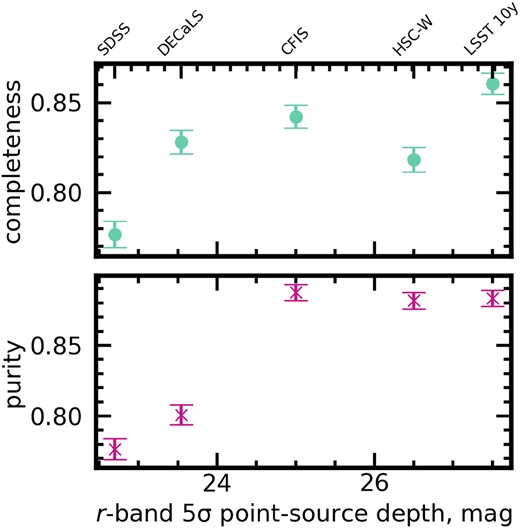

Having stated the overall figures of merit, we will present the performance of the classifiers as a function of several quantities that we expect would bear on a CNN’s ability to distinguish between mergers and controls. Fig. 7 shows the post-merger completeness (teal data series) and the purity of the predicted post-merger sample (magenta data series) for the five CNNs, plotted as a function of the reported r-band limiting |$5\sigma$| point-source depth. Viewing the performance metrics presented in Fig. 4 in the context of each survey’s limiting depth, it is clear that sufficiently deep imaging is helpful for reliable merger classifications, but that there is a diminishing return in taking observations deeper than |$\sim 25$| mag. As a result, the CNN is already finding mergers at low-z in CFIS imaging at a success rate similar to what is expected for HSC-W and LSST.

The completeness and purity scores for models trained with five different survey realism parameters as a function of the reported |$5\sigma$| limiting point-source depth for each survey. Completeness on the post-merger class is shown in teal, and the purity of the predicted post-merger sample is shown in magenta. Generally, deeper imaging is helpful to completeness and purity, but there is a diminishing return and additional complications in imaging from HSC-W, above the depth of CFIS.

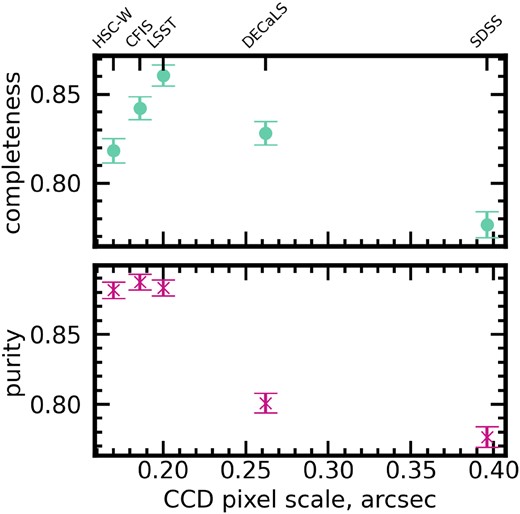

Fig. 8 has the same construction as Fig. 7, but instead plots the surveys’ performance metrics against the reported r-band seeing PSF in arcseconds. The PSFs for all of the surveys are dominated by contributions from Earth’s atmosphere. There seems to be a trend between PSF and completeness, but we argue that this is mainly because survey depth generally trends with PSF (i.e. state-of-the-art surveys are improved in both depth and resolution compared to legacy surveys; see Fig. 10). While it is difficult to estimate the specific contributions of depth and PSF to the final completeness of each model without generating a more extensive grid of mock models, we refer to Wilkinson et al. (2024), who find that depth is the main determinant of merger identification performance when combining non-parametric morphological parameters in a random forest classifier.

The same as Fig. 7 but for the typical PSF (r-band seeing) for each survey, which constrains the effective angular resolution. The completeness and purity achieved by the LSST model, HSC-W model, and CFIS model are closely grouped together on these axes, suggesting that reliable merger classifications can be completed for the z range studied even when the PSF is atmosphere-dominated.

The angular scale of the individual pixels of the camera CCD used to take images for each survey also bears on effective resolution and depth; Fig. 9 plots the same performance metrics for the five models again but as a function of CCD pixel size. The trend with CCD scale is interesting for two reasons. First, the CCD scale determines the extent to which the maximum spatial resolution (set by the PSF) is preserved in the final image; in this way, it is a more direct determinant of spatial resolution than the PSF itself. Second, it plays a role in determining the effective S/N of the image, since signal and noise photons are effectively binned on a pixel-wise basis. Completeness increases with decreasing pixel scale from SDSS to DECaLS and from DECaLS to LSST, but turns down again for smaller pixel scales. It is unlikely that CCD pixel scale is the main driver of the final completeness and purity scores for each model, but the trends with CCD scale reflect the two main conclusions suggested by the results up to this point: that increasing depth is generally good for performance, but that an excess of spatial detail at the expense of pixel-wise S/N can be a source of confusion.

The same as Fig. 7 but for the angular pixel scale of the charge-coupled device (CCD) for each survey, which bears on both effective angular resolution and the pixel-wise S/N. The post-merger completeness and purity for the LSST model, HSC-W model, and CFIS model are grouped together on these axes as well.

Since the morphological disturbances of significant (with |$\mu \gt $|1:10) mergers are generally on the scale of at least several kpc, we propose that imaging sensitivity (determined by the depth and CCD scale) plays a more important role in limiting performance than PSF, at least for the range of PSF studied here. The CCD pixel scale’s role in determining the sensitivity of images is likely more important than its bearing on spatial resolution in the context of this study, since our image preparation pipeline involves resizing all images to |$128\times 128$| pixels. At low-z, the resizing operation also reduces the resolution of the images evaluated by the CNN (i.e. the resizing operation is the limiting factor on resolution), again de-prioritizing the resolution limit set by the CCD except in the highest z realization studied here. In other words, at a given survey depth, smaller CCD scale leads to lower pixel-wise S/N. It is this effect, rather than the spatial resolution constraint set by the CCD, that is leading to variable performance as a function of CCD scale.
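The claim that a smaller CCD scale lowers the pixel-wise S/N at fixed depth can be illustrated with a back-of-the-envelope scaling. This is a sketch under assumptions that are not stated in the text: sky noise that is uncorrelated between pixels, a limiting depth measured in a fixed aperture of area |$A$|, and a diffuse feature of uniform surface brightness |$I$|. The symbols |$s$| (pixel scale), |$A$|, |$I$|, and |$\sigma _{\text{ap}}$| (noise within the aperture) are introduced here for illustration only.

```latex
\sigma_{\text{ap}} = \sigma_{\text{sky}} \sqrt{N_{\text{pix}}}
                   = \sigma_{\text{sky}} \frac{\sqrt{A}}{s}
\quad \Rightarrow \quad
\sigma_{\text{sky}} = \frac{\sigma_{\text{ap}}\, s}{\sqrt{A}},
\qquad
\left( \frac{S}{N} \right)_{\text{pix}}
  = \frac{I s^{2}}{\sigma_{\text{sky}}}
  = \frac{I \sqrt{A}}{\sigma_{\text{ap}}}\, s
  \;\propto\; s .
```

Under these assumptions, two surveys with the same limiting depth (the same |$\sigma _{\text{ap}}$|) differ in per-pixel S/N for diffuse features in direct proportion to their pixel scales, so halving the pixel scale halves the pixel-wise S/N.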

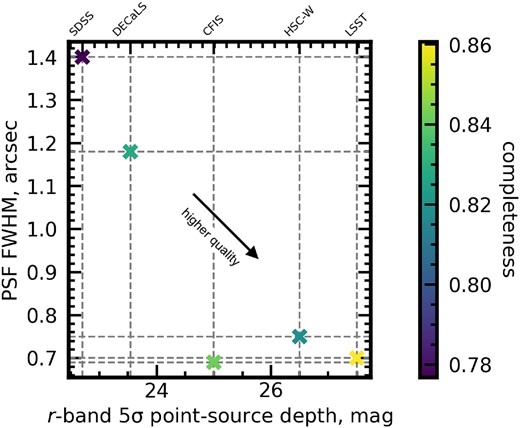

Finally, we plot the models’ post-merger completeness scores on a two-dimensional plane of depth and PSF resolution in Fig. 10. The post-merger completeness statistics for each model are represented by the blue-green colour scale for each marker on the plane. Viewed in two dimensions, the relative importances of the trends outlined in Figs 7 and 8 can be summarized. Since the SDSS- and DECaLS-trained models have the largest PSF and shallowest depth, it is difficult to determine the precise roles of depth and resolution in setting the final completeness score for each model. Still, the visual characteristics of the post-mergers in SDSS and DECaLS data (especially at high-z, see Fig. 2) suggest that the surveys’ comparatively shallow depth may set a practical upper limit on the visibility of tidal features.

The post-merger completeness scores for each of the five models (colour scale) plotted in the depth (here approximated by limiting |$5\sigma$| point-source depth) and resolution (PSF FWHM in arcseconds) plane. Performance is sensitive to both parameters, with an apparent minimum limiting depth of |$\sim 24$| to 25 mag being important to high completeness. Resolution also plays a role in setting the final completeness score for each model, but the shallowest two surveys (SDSS and DECaLS) also have the worst spatial resolution, making it somewhat difficult to disentangle the individual contributions of each parameter.

3.2 Performance trends with galaxy parameters

In Section 3.1, we demonstrated that the efficacy of CNN models for merger and non-merger classification is sensitive to survey depth and resolution, but it is also useful to investigate the representativeness of the merger samples that are identified by the CNNs in each image set.

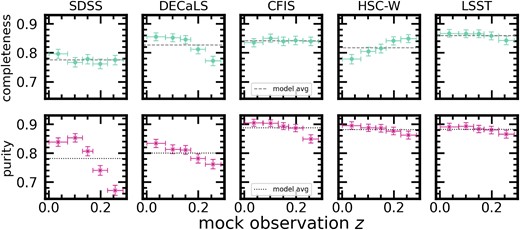

Low-surface brightness features fade with redshift as a result of cosmological dimming (as seen in Fig. 2), so it is reasonable to expect that CNNs will struggle with calibration (learning to identify the range of surface brightnesses associated with tidal features) in shallow imaging and at high-z. The post-merger completeness and predicted post-merger sample purity for each of the five CNNs are shown as a function of mock observation z in Fig. 11. The SDSS, CFIS, and LSST models retain approximately consistent completeness as a function of mock observation z. The DECaLS model identifies post-mergers more successfully at low-z, reflecting the limited visibility of merger-like morphology in the highest two redshift realizations in DECaLS imaging (see Fig. 2). The HSC-W model has the opposite behaviour, possibly due to the resolution of the HSC CCD (see Fig. 9, which reveals decreasing performance with finer CCD resolution between LSST, CFIS, and HSC-W). We emphasize that decreasing merger completeness with CCD scale is not likely related to the limits on spatial resolution imposed by the CCD. Instead, the limit in pixel-wise sensitivity at a given depth set by the CCD appears to be a more likely culprit.

The completeness of the five CNNs for post-mergers (teal), purity of the predicted post-merger samples (magenta), and the average completeness (grey dashed line) and average purity (black dotted line) for each model binned as a function of z. Statistics are reported at each of the five discrete redshifts used for mock observations (given in Section 2.2). The error regions are the binomial errors on the statistics at each z. The diversity of the trends shown in each panel suggests that generalizing over the appearances of galaxies across a given z range is difficult for CNNs.
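A binomial error on a completeness or purity fraction can be estimated as in the sketch below. The caption does not state which binomial estimator was used, so the normal-approximation standard error shown here is an assumption. The function name and the example counts are invented for illustration.

```python
import math


def binomial_error(successes, trials):
    """Return a fraction and its normal-approximation binomial standard error."""
    p = successes / trials
    return p, math.sqrt(p * (1.0 - p) / trials)


# Hypothetical redshift bin: 80 of 100 post-mergers recovered by the model.
completeness, error = binomial_error(80, 100)
```

The approximation becomes unreliable when the fraction is close to 0 or 1 or when a bin holds few galaxies. Interval estimators such as the Wilson score interval behave better in that regime.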

Fig. 11 also reveals that the purity of predicted post-merger samples decreases for all five CNNs with increasing z. This is to be expected, since at high-z, images in all five data sets contain larger random fluctuations due to sky noise. In some cases, we expect that these fluctuations and the muted appearance of some post-mergers’ morphologies together give rise to more contaminated samples. The models’ sensitivity to redshift is broadly the result of challenges inherent to the task of generalizing over a range of z.

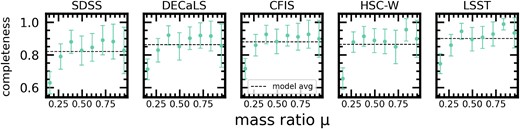

The progenitor mass ratio |$\mu$| is expected to be among the most important factors governing merger observability (see Lotz et al. 2010). Post-mergers whose progenitors were similar in mass are expected to be more dramatically disturbed compared to the remnants of more minor mergers (e.g. with |$\mu \lt $|1:10). Fig. 12 emphasizes this point, and suggests that the influence of |$\mu$| on merger observability is the most ubiquitous one presented in this work. All five CNNs experience very similar trends (albeit with their amplitudes governed by the depth and resolution of each survey) with significant misclassification for post-mergers with |$\mu \lt $|1:4. Mergers near the lower limit of |$\mu$| selection for the training set are therefore at risk of significant under-representation in the final merger sample predicted by the CNN, and the bias is likely worsened if visual classifications are used for quality control (e.g. as in Bickley et al. 2021) since more dramatic post-mergers are also more likely to be confirmed visually.

The same as Fig. 11, but with purity and completeness statistics shown for galaxies arranged in eight bins of |$\mu$|. We find very similar trends as a function of |$\mu$| across all five surveys, even though the models have shown themselves to behave very differently in other tracts of parameter space. The similarity of the trend in all five panels (increasing and stabilizing completeness for higher mass ratios) is intuitive, and suggests that merger mass ratio is one of the primary factors affecting whether a given galaxy will be selected as a post-merger. Post-mergers with |$\mu \lt $|1:4 are likely to be proportionally under-represented by some 20 per cent compared to the remnants of merger events with larger mass ratios. The data are only shown for post-mergers, since the mass ratios of long-past merger events for our control sample galaxies are not relevant.

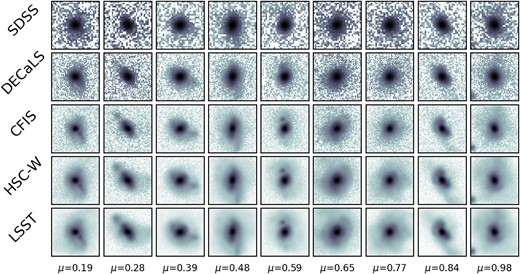

The interplay between merger severity (as approximated by mass ratio, |$\mu$|) and z is important to the results of this study, since we expect that small-|$\mu$| mergers at high-z are the most likely mergers to be overlooked by CNNs. We use Fig. 13 to investigate the relative importances of image quality and mass ratio at high-z. We select one galaxy with |$10.4\lt \log (M_{\star }/{\rm M}_{\odot })\lt 10.6$| from each of nine equal-sized mass ratio bins between |$0.1\lt \mu \lt 1$|, fix the redshift to |$z=0.256$| (the highest redshift studied here), and visualize each galaxy in all five surveys. A narrow mass criterion is used to ensure relatively uniform total brightnesses in the galaxies being compared. Images for a given survey in Fig. 13 are shown in rows, while each column only contains images of one galaxy. Mass ratio increases from left to right, and survey depth increases from top to bottom.

In each row, we investigate the appearance of cases at |$z=0.256$| (the highest z realization studied here) for galaxies with increasing mass ratio |$\mu$| from left to right. The |$\mu$| values for the galaxies shown in each column are printed at the bottom of the figure. This figure demonstrates that the image quality associated with shallowest surveys (SDSS and DECaLS, especially) can obscure essential morphological information, potentially leading to misclassification.

Fig. 13 shows that merger-induced morphological disturbances are generally visible at |$z=0.256$| in CFIS, HSC-W, and LSST, regardless of |$\mu$| (at least for the cases shown). The visibility of merger-like morphology is a reasonable predictor of a CNN’s ability to classify images correctly, but we emphasize that visibility does not translate into a correct classification by the CNN in all cases. For DECaLS and SDSS, the two shallowest surveys included in the study, Fig. 13 suggests that the combination of small |$\mu$| and high-z may play a significant role in reducing the CNN performance statistics presented in Fig. 4.

3.3 Cross-survey results

The relative performance of the five CNNs presented in Section 3.1 illustrates the potential of the ‘best-case scenario’ in which models are trained and evaluated on data from the same image quality domain. But machine vision models (and deep learning models in general) cost time and substantial quantities of energy to train, both of which are valuable resources in scientific research (Strubell, Ganesh & McCallum 2019). The practice of transfer learning (i.e. training an already-trained model on a new data set for fine tuning) shows promise in the galaxy classification domain (Ackermann et al. 2018; Domínguez Sánchez et al. 2019), but transfer learning still mandates the creation of a new data set and additional computing resources. If CNNs can be applied without re-training as a matter of course outside of their original domain without sacrificing scientific rigor, the efficiency of merger searches in the coming years could be improved substantially. Moreover, Bickley et al. (2024) already conducted a case study that indicated our CNN trained on TNG100-1 galaxies with CFIS realism could be applied to shallower and lower resolution imaging from DECaLS without incurring a prohibitive amount of loss or sample impurity. We will investigate the potential for merger searches using cross-survey classifications for the five trained CNNs described earlier in Section 2.3.

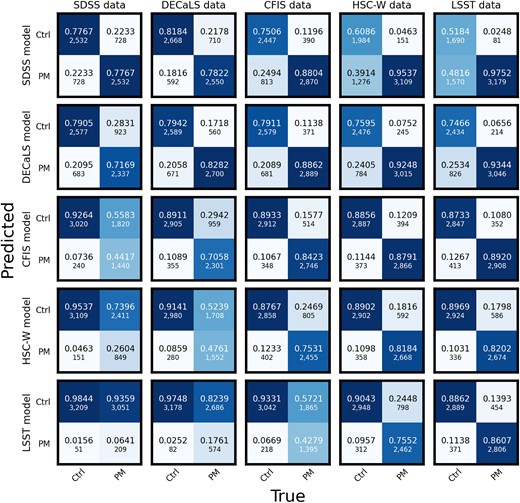

3.3.1 Overall out-of-domain performance

Fig. 14 summarizes the results of the cross-survey inference experiment, in which all five CNNs are applied (without any re-training) to the test data sets for all five surveys. The classification results achieved by a single model are shown in rows, while the results for a given data set are shown in columns. As in Fig. 4, the true labels are shown on the vertical axes, and the machine-predicted labels are shown on the horizontal axes of each of the confusion matrices. The same five confusion matrices from Fig. 4 are shown along the diagonal as well, for reference.
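The construction of such a grid can be sketched in a few lines of Python. This is a minimal illustration rather than the pipeline used in this work: the function names, the dictionary-based interface, and the decision threshold of 0.5 are all assumptions made for the example, and each "model" is represented by any callable that returns a post-merger probability per image.

```python
import numpy as np

def confusion_matrix(y_true, y_pred):
    """2x2 count matrix: rows = true class, columns = predicted class
    (0 = control, 1 = post-merger)."""
    cm = np.zeros((2, 2), dtype=int)
    np.add.at(cm, (np.asarray(y_true, dtype=int), np.asarray(y_pred, dtype=int)), 1)
    return cm

def cross_survey_grid(predict_fns, test_sets, threshold=0.5):
    """Apply every model to every test set without re-training.

    predict_fns: dict mapping a model name to a callable (hypothetical
                 interface) returning p(post-merger) for each image.
    test_sets:   dict mapping a survey name to an (images, labels) tuple.
    threshold:   assumed decision threshold on the model output.
    Returns a dict keyed by (model name, survey name).
    """
    grid = {}
    for model_name, predict in predict_fns.items():
        for survey_name, (images, labels) in test_sets.items():
            p = np.asarray(predict(images), dtype=float)
            y_pred = (p >= threshold).astype(int)
            grid[(model_name, survey_name)] = confusion_matrix(labels, y_pred)
    return grid

# Toy usage: the "images" are stand-in scalar scores, not real cutouts.
toy_models = {"A": lambda x: x, "B": lambda x: 1.0 - x}
toy_data = {"S": (np.array([0.9, 0.2, 0.1, 0.4]), np.array([1, 0, 0, 1]))}
toy_grid = cross_survey_grid(toy_models, toy_data)
```

Entries whose model and survey names match correspond to the in-domain (diagonal) matrices; all other entries are out-of-domain results.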

The results of the cross-survey inference experiment can be broadly characterized as an illustration of the importance of calibration for automated merger searches. If a model learns to identify the features of a recent merger event in a certain range of brightness, it will (perhaps intuitively) misclassify control galaxies if non-mergers as imaged by a different survey appear to have diffuse or asymmetric features with similar brightness levels. Conversely, if mergers in a different survey appear to lack tidal features within the learned range of brightness, a model will misclassify those mergers as controls. The importance of calibration is verified in the class-wise completenesses shown in the confusion matrices above and below the diagonal in Fig. 14. Above the diagonal, models are used to classify deeper imaging than their training regime. As a result, models typically misclassify a large number of controls as post-mergers; for an extreme case, refer to the top-right confusion matrix, in which the SDSS-trained model misclassifies nearly half of the controls as post-mergers when applied to the LSST-like imaging. Below the diagonal, models are applied to shallower imaging than in training. As a result, a large number of post-mergers are mistakenly labelled by the models as controls; see for example the bottom-left confusion matrix, in which the LSST-trained CNN classifies the vast majority of galaxies as non-mergers regardless of their true label when applied to SDSS imaging. For higher z images in the DECaLS and SDSS data sets, the models trained on deeper imaging suffer two distinct penalties: the first imposed by the significant loss of merger features below the noise (see again Figs 2 and 13), and the second imposed by miscalibration. Calibration is an obstacle for all of the out-of-domain classification results presented here, and can (in theory) be remedied by re-training. The loss of visibility for mergers in shallow data is more difficult to overcome, and decreases the maximum potential of any model applied to data from shallow imaging surveys.

Confusion matrices for all five trained CNNs after being applied to all five test data sets. Rows of matrices show the classification results for a single model (e.g. for the SDSS-trained model in the first row) on each of the test data sets, while columns show the classification results for each of the five trained CNNs on a given test set (e.g. for the CFIS test set in the third column). The confusion matrices on the diagonal are the same as shown in Fig. 4. Broadly, the matrices highlight the fact that calibration is essential for CNN-based merger searches. When models are applied to shallower data than their training set, they tend to under-predict mergers and over-predict controls. When models are applied to deeper data, they tend to misclassify a larger number of control galaxies as mergers.

Fig. 14 also reveals some surprises in the cross-survey inference experiment that depart from the general trend just described. The HSC-W model and CFIS model perform very well when applied to deeper domains than their training data, perhaps thanks to the plateau in the trend of performance versus |$5\sigma$| limiting point-source depth shown in Fig. 7. Since the practical visibility of merger features (see again Figs 2 and 13) does not appear to change significantly at depths better than |$\sim 25$| mag, we posit that the appearance of merger features becomes standardized after images are resized and normalized via the method described in Section 2.3. In the context of a hybrid CNN and visual inspection merger identification framework, the CFIS and HSC-W models are therefore extremely useful, since they allow for the recovery of a large proportion of post-mergers outside of their training regimes. In all cases, using visual inspection or other ensemble classification methods as a form of quality control a posteriori is advisable.

The particular combination of training and inference data used in Bickley et al. (2024) is shown in the third row, second column, where the CFIS-trained model is applied to DECaLS data. The model misclassifies an additional 14 per cent of post-mergers compared to its performance in CFIS data, a result consistent with the lower global visual agreement fractions for post-mergers reported in Bickley et al. (2024) compared to Bickley et al. (2022) for post-merger searches in DECaLS and CFIS, respectively. Still, the apparent purity of the post-merger sample from DECaLS and the results in Fig. 14 indicate that merger searches can be conducted responsibly using cross-survey inference as long as additional quality control is enforced and any physical biases in the sample are accounted for (or at least discussed) after the fact.

3.3.2 Changes in purity and completeness

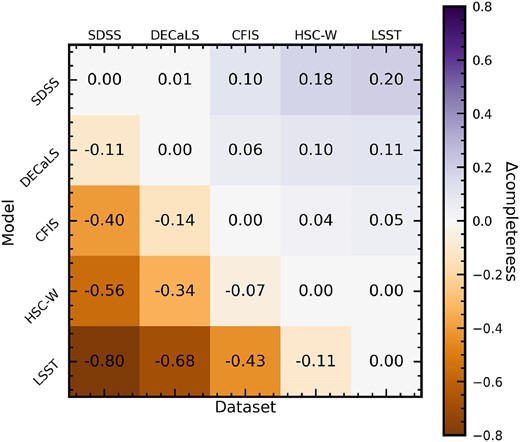

Fig. 15 directly addresses one of the central questions of the cross-survey inference experiment: how much post-merger completeness is lost when a CNN is applied outside of its training domain? In each cell of Fig. 15, we subtract the model’s baseline completeness, i.e. its completeness score when applied to the test set from its training domain, from its completeness score on a given data set. Values along the diagonal are therefore zero by definition. Fig. 15 illustrates that completeness generally increases when models are applied to deeper imaging than their training domain (note the trend of positive |$\Delta$| completeness above the diagonal, shown in purple) and decreases when they are applied to shallower imaging than their training domain (negative |$\Delta$| completeness below the diagonal, ochre).
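As a minimal sketch of this bookkeeping, assuming the completeness scores are stored in a square array with models along rows and test sets along columns (the function names and the toy values below are illustrative, not results from this work), the change relative to the in-domain baseline is obtained by subtracting each row's diagonal entry, so that positive values indicate a gain over the baseline:

```python
import numpy as np

def completeness(cm):
    """Post-merger completeness (recall), TP / (TP + FN), for a 2x2
    confusion matrix with rows = true class and columns = predicted class."""
    cm = np.asarray(cm, dtype=float)
    return cm[1, 1] / cm[1].sum()

def delta_matrix(scores):
    """scores[i, j] is the score of model i on test set j.

    Returns scores[i, j] - scores[i, i], i.e. the change relative to each
    model's in-domain baseline. The diagonal is zero by construction.
    """
    scores = np.asarray(scores, dtype=float)
    return scores - np.diag(scores)[:, None]

# Toy 2x2 example with made-up completeness values.
toy_scores = np.array([[0.8, 0.9],
                       [0.5, 0.7]])
toy_delta = delta_matrix(toy_scores)
```

The same `delta_matrix` helper applies unchanged to a matrix of purity scores.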

The same configuration as Fig. 14, but reporting the change in post-merger completeness for each model when it is applied outside of its training domain compared to its same-survey completeness score. Positive values of |$\Delta$| completeness (shown in purple) indicate that a model identifies a greater proportion of the true post-mergers in the test set, while negative values of |$\Delta$| completeness (shown in ochre) indicate that the model identifies fewer post-mergers compared to its baseline. Values along the diagonal are zero by definition.

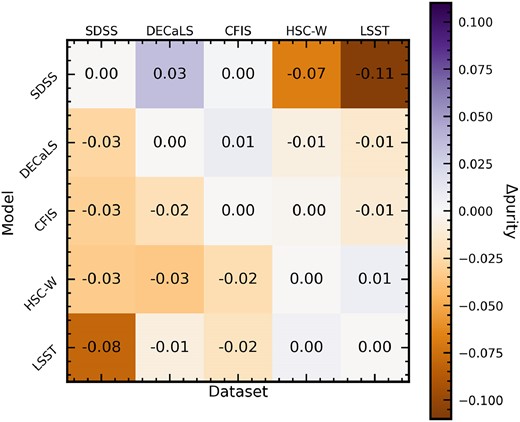

Fig. 16 has the same construction as Fig. 15, but shows the change in purity of the predicted post-merger sample when each model is applied outside of its training domain. The baseline purities for the same-survey experiment are given above in Table 3. Fig. 16 demonstrates that |$\Delta$| purity is usually smaller than |$\Delta$| completeness, though we note that this may be somewhat misleading: since we have created test data sets for each survey that include equal numbers of post-mergers and non-post-mergers, the purity scores presented throughout this work are artificially high compared to what could be achieved with a single CNN in observations.
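The dependence of purity on class balance can be made explicit with a short calculation. If a classifier recovers a fraction |$C$| of true post-mergers and misclassifies a fraction |$F$| of controls, and post-mergers make up a fraction |$f$| of the sample, then the expected purity is |$fC/[fC+(1-f)F]$|. The sketch below evaluates this expression; the input values of |$C$|, |$F$|, and |$f$| are purely illustrative assumptions and are not measurements from this work.

```python
def expected_purity(completeness, false_positive_rate, merger_fraction):
    """Expected purity of the predicted post-merger sample.

    completeness:        fraction of true post-mergers recovered (C).
    false_positive_rate: fraction of controls labelled as post-mergers (F).
    merger_fraction:     fraction of the input sample that are true
                         post-mergers (f); 0.5 for a balanced test set.
    """
    true_pos = merger_fraction * completeness
    false_pos = (1.0 - merger_fraction) * false_positive_rate
    return true_pos / (true_pos + false_pos)

# Illustrative (made-up) classifier: C = 0.8, F = 0.1.
purity_balanced = expected_purity(0.8, 0.1, merger_fraction=0.5)
purity_rare = expected_purity(0.8, 0.1, merger_fraction=0.01)
```

For a fixed classifier, the purity falls as the true post-merger fraction decreases, which is why balanced test sets give optimistic purity estimates.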

The same as Fig. 15, but reporting the change in the purity of the predicted post-merger sample for each model when it is applied outside of its training domain compared to its same-survey purity score. Values along the diagonal are zero by definition.

Fig. 16 indicates that purity is generally lost when models are applied outside of their training regime, but there are noteworthy exceptions to the rule. The SDSS-trained model improves in both purity and completeness (see Fig. 15) when it is applied to DECaLS imaging. This is somewhat intuitive, since mergers in the DECaLS data set at higher z are more likely to retain their merger-like appearance. In general, the results of the cross-survey inference experiment confirm that SDSS imaging is too shallow to conduct a holistic merger search spanning the survey’s redshift domain.

Fig. 17 shows the results of the cross-survey inference experiment in another way, folding in the utility of a cut in CNN |$p(x)$| for each model. The PCCs are shown only for the post-merger classes, as the primary class of interest in the context of a merger search. Mirroring the results in Fig. 14, the curves for models applied to shallower data are typically lacking in completeness for a given |$p(x)$|, even though the samples they identify may be very pure. The improved overall performance of each of the models on deeper data again suggests that there is an effective standardization of the appearance of merger features in deep imaging, allowing for more successful cross-survey inference. Still, the purity-completeness curves indicate that models trained specifically to identify post-mergers in a given imaging survey are optimal in most cases.
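For readers who wish to reproduce this kind of diagnostic, the following is a minimal sketch of a purity-completeness curve built by sweeping a cut in |$p(x)$|. The function names, the convention of assigning a purity of 1 to an empty selection, and the trapezoidal estimate of the area under the curve are assumptions made for this example; they are not necessarily the choices used to produce Fig. 17.

```python
import numpy as np

def purity_completeness_curve(y_true, p_merger, thresholds):
    """Purity and completeness of the post-merger class for each p(x) cut.

    y_true:     true labels (1 = post-merger, 0 = control).
    p_merger:   model output p(x) for each galaxy.
    thresholds: iterable of cuts; galaxies with p(x) >= cut are selected.
    """
    y_true = np.asarray(y_true).astype(bool)
    p = np.asarray(p_merger, dtype=float)
    purity, completeness = [], []
    for cut in thresholds:
        selected = p >= cut
        true_pos = np.sum(selected & y_true)
        n_selected = selected.sum()
        # Assumed convention: an empty selection has purity 1.
        purity.append(true_pos / n_selected if n_selected > 0 else 1.0)
        completeness.append(true_pos / y_true.sum())
    return np.array(purity), np.array(completeness)

def area_under_curve(purity, completeness):
    """Trapezoidal area under purity as a function of completeness."""
    order = np.argsort(completeness, kind="stable")
    x, y = np.asarray(completeness)[order], np.asarray(purity)[order]
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))

# Toy usage with made-up labels and scores.
toy_purity, toy_completeness = purity_completeness_curve(
    [1, 1, 0, 0], [0.9, 0.6, 0.7, 0.1], thresholds=[0.0, 0.5, 0.65, 0.8]
)
```

Raising the cut generally trades completeness for purity, which is the behaviour described above for models applied to shallower data.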

Purity-completeness (or precision-recall) curves for the cross-survey inference experiment. The series of colour-coded curves in each panel show the PCCs for each of the five models applied to one of the data sets. The annotations show the AUC scores for each curve, illustrating each model’s potential to identify samples of post-mergers that are pure and complete when applied outside of their training regimes.

4 DISCUSSION AND CONCLUSIONS

We have detailed the limits placed on low-z merger searches by image quality, using five real surveys as benchmarks, and holding all other methodological variables constant. We find the relationship between image quality and the efficacy of CNNs to be complex. Intuitively, for a merger search to be successful, images must be deep enough to detect faint tidal features across the entire redshift range studied. Perhaps unexpectedly, greater depth is not categorically beneficial: in detecting recent (within one TNG100-1 simulation snapshot, some 150 Myr) and impactful (with |$\mu \gt $|1:10) mergers, we find that the depth of CFIS imaging (with a limiting 5|$\sigma$| point-source depth of |$\sim$|25 mag in the r band) is adequate. In other words, imaging with the depth and angular resolution of surveys like CFIS or HSC-W is already near the apparent limit of what is possible for binary classification for |$\mu \gt $|1:10 merger remnants (see also Bottrell et al. 2022). Additional imaging depth is met with a diminishing return in completeness and purity. Additional detail detected in extremely deep (e.g. LSST after 10 yr of co-adds) imaging may be beneficial for more advanced merger characterization tasks that are only lately being explored – e.g. temporal estimation of merger time-scales (see Ferreira et al. 2024; Pearson et al. 2024), identification of merger remnants with smaller progenitor |$\mu$| values (i.e. ‘mini mergers’ as studied in Bottrell et al. 2024), or characterization of fainter galaxies with lower stellar masses.

The results of this work are particularly useful when considered alongside the results of Wilkinson et al. (2024), who use non-parametric morphological statistics to perform a systematic analysis of merger recovery as a function of depth, resolution, viewing angle, and a variety of galaxy properties. Wilkinson et al. (2024) used different observational realism and merger identification techniques than this work, but the trends of performance with depth and resolution still offer a useful point of comparison. We particularly refer the reader to fig. 10 of Wilkinson et al. (2024), which shows the result of an experiment very much like the one conducted in Section 3.1. Wilkinson et al. incorporate multiple non-parametric morphological statistics (asymmetry, outer asymmetry, shape asymmetry, Gini, and M20) in a random forest classifier, which is used to predict merger status. The approach of Wilkinson et al. (2024) is quite similar to the one used in this work, in that CNNs also combine a feature extraction component (although the features in the CNN are learned in the convolution layers, rather than prescribed) with a machine learning classification tool (the fully connected layer of the CNN).

Wilkinson et al. (2024) identify two main trends that are echoed in this work. First, greater depth at a given resolution is generally beneficial, but the improvement in completeness and purity with increasing 5|$\sigma$| limiting point-source depth beyond 25 mag is marginal compared to the improvement between 23 and 25 mag. Second, Wilkinson et al. (2024) report a turnover in performance as a function of PSF FWHM, with the peak completeness recovered in imaging with a PSF of 0.75 arcsec. The other results on the depth-resolution plane explored in Wilkinson et al. (2024) offer helpful context by filling in the bigger picture for our results, since the five surveys studied here represent discrete selections from a multivariate grid of depth, resolution, and CCD scale.