-

PDF

- Split View

-

Views

-

Cite

Cite

Christopher M Hirata, Masaya Yamamoto, Katherine Laliotis, Emily Macbeth, M A Troxel, Tianqing Zhang, Kaili Cao, Ami Choi, Jahmour Givans, Katrin Heitmann, Mustapha Ishak, Mike Jarvis, Eve Kovacs, Heyang Long, Rachel Mandelbaum, Andy Park, Anna Porredon, Christopher W Walter, W Michael Wood-Vasey, Simulating image coaddition with the Nancy Grace Roman Space Telescope – I. Simulation methodology and general results, Monthly Notices of the Royal Astronomical Society, Volume 528, Issue 2, February 2024, Pages 2533–2561, https://doi.org/10.1093/mnras/stae182

Close - Share Icon Share

ABSTRACT

The upcoming Nancy Grace Roman Space Telescope will carry out a wide-area survey in the near-infrared. A key science objective is the measurement of cosmic structure via weak gravitational lensing. Roman data will be undersampled, which introduces new challenges in the measurement of source galaxy shapes; a potential solution is to use linear algebra-based coaddition techniques such as imcom that combine multiple undersampled images to produce a single oversampled output mosaic with a desired ‘target’ point spread function (PSF). We present here an initial application of imcom to 0.64 square degrees of simulated Roman data, based on the Roman branch of the Legacy Survey of Space and Time (LSST) Dark Energy Science Collaboration (DESC) Data Challenge 2 (DC2) simulation. We show that imcom runs successfully on simulated data that includes features such as plate scale distortions, chip gaps, detector defects, and cosmic ray masks. We simultaneously propagate grids of injected sources and simulated noise fields as well as the full simulation. We quantify the residual deviations of the PSF from the target (the ‘leakage’), as well as noise properties of the output images; we discuss how the overall tiling pattern as well as Moiré patterns appear in the final leakage and noise maps. We include appendices on interpolation algorithms and the interaction of undersampling with image processing operations that may be of broader applicability. The companion paper (‘Paper II’) explores the implications for weak lensing analyses.

1 INTRODUCTION

Weak gravitational lensing – the distortion of the shapes of distant galaxies as their light passes through the gravitational potential of foreground structures – has emerged as one of the powerful tools for probing the growth of structure in the Universe [see Hoekstra & Jain (2008), Weinberg et al. (2013), and Mandelbaum (2018) for recent reviews]. It has now been more than two decades since the early detections of lensing by galaxies (Brainerd, Blandford & Smail 1996) and of the two-point correlations of shear (Bacon, Refregier & Ellis 2000; Kaiser, Wilson & Luppino 2000; Van Waerbeke et al. 2000; Wittman et al. 2000). In that time, both the size of cosmological surveys and the understanding of the instruments and data processing necessary to extract the weak lensing signal has advanced considerably. The current generation of weak lensing surveys – the Kilo Square Degree survey (KiDS), the Dark Energy Survey (DES), and the Hyper Suprime Cam (HSC) – have measured the amplitude of cosmic structure S8 to precision better than a few per cent (Hikage et al. 2019; Hamana et al. 2020; Asgari et al. 2021; Amon et al. 2022; Secco, Samuroff et al. 2022). With these surveys, and the detailed picture of the initial conditions for the growth of structure provided by cosmic microwave background observations (Planck Collaboration 2020), it is now possible to do detailed comparisons of low redshift structures to the predictions of general relativity in the ΛCDM (cosmological constant + cold dark matter) model and various alternatives (e.g. Lemos et al. 2021).

In the 2020s, several major surveys are expected to come online that will lead to large advances in both the statistical constraining power and control of systematic uncertainties in weak lensing measurements. These include the ground-based Vera Rubin Observatory, which will carry out a ‘Wide, Fast, Deep’ survey of the optical sky in the ugrizy bands (LSST Dark Energy Science Collaboration 2012; Ivezić et al. 2019); the Euclid satellite, which features a wide-field visible channel for galaxy shape measurements and a near-infrared (NIR) instrument to improve photometric redshifts (Laureijs et al. 2011); and the Nancy Grace Roman Space Telescope, whose Wide Field Instrument will survey the sky at high angular resolution in four NIR bands spanning 0.9–2.0 μm (in the Reference Survey design;1 Spergel et al. 2015; Akeson et al. 2019). All of these observatories bring advantages relative to current surveys in terms of systematic error control for galaxy shapes: Rubin will obtain hundreds of images of each field, thus enabling much better internal constraints of instrument systematics, while the space missions provide the high angular resolution and stability possible above the Earth’s atmosphere. Furthermore, the coverage from u band in Rubin through ∼2 μm for the space missions (with Roman data reaching NIR depths comparable to Rubin sensitivity, and Euclid data being shallower but covering a wider footprint) provides an enormous wavelength baseline for photometric redshifts (Hemmati et al. 2019; Newman & Gruen 2022).

Although space is in many ways an ideal location for a weak lensing experiment, the high angular resolution brings some challenges related to the pixel scale. A diffraction-limited telescope produces a point spread function (PSF) with a characteristic angular width of λ/D, where λ is the wavelength of observation and D is the diameter of the entrance pupil. If the pixel scale P is larger than λ/(2D), then the image is undersampled in the Nyquist sense: there are Fourier modes present in the image with more than |$\frac{1}{2}$| cycle per sample, with the consequence that the images cannot be unambiguously interpolated, and thus the image intensity |$I({\boldsymbol r})$| cannot be treated as a continuous field. It also produces biases in the moments of images, including the first moment (centroid, relevant to astrometry) and the second moments (sizes and shapes, relevant to weak lensing), which have been studied in many contexts (e.g. Lauer 1999b; Anderson & King 2000; High et al. 2007; Samsing & Kim 2011). One possible solution to the undersampling problem is to simply use small pixels – but if one has a fixed pixel count in the focal plane (often limited by available resources or technical considerations), then shrinking the pixels leads to a smaller field of view and a slower survey if we fix the survey depth and hence the required exposure time per pointing.2 An alternative, adopted for Euclid and Roman, is to accept undersampling, and use multiple dithered exposures of each field. This increases survey speed, but requires the development of algorithms for each application that take multiple undersampled images as input.

Undersampling can affect several stages of a weak lensing analysis (e.g. Kannawadi, Rosenberg & Hoekstra 2021; Finner et al. 2023). One particular step is calibration of the shear estimator – that is, determining how the measured ellipticity of a galaxy ei in a catalog responds to an applied shear γj.3 While one might hope for an ellipticity measurement algorithm that has unit shear response ∂〈ei〉/∂γj = δij, any stable ellipticity estimator has a response that depends on the galaxy population (Massey et al. 2007; Zhang & Komatsu 2011), and so modern lensing analyses contain a ‘shear calibration’ step that determines this response given the ensemble of galaxy morphologies present in that survey (at that depth, resolution, and observed wavelength). Whether the data are well-sampled or not, shear calibration must work with the fact that Fourier modes in the image are only well-measured up through some kmax, and the shear operation moves modes across the k = kmax boundary (e.g. Bernstein 2010), so one cannot take an observed image and infer what the sheared image would look like at the same resolution. In the past decade, several principled shear calibration approaches have been introduced that apply some re-smoothing (or in Fourier space, a cut kcut < kmax) before measuring galaxy shapes (or moments) and have been successful at mitigating the galaxy population-dependent shear calibration biases in simulations. These include Metacalibration, which numerically applies a shear to each object to compute the ensemble response (Huff & Mandelbaum 2017; Sheldon & Huff 2017; Zhang, Sheldon & Becker 2023); the Bayesian Fourier Domain technique, which builds the probability distribution of Fourier-space moments (Bernstein & Armstrong 2014; Bernstein et al. 2016); and approaches that analytically build shear responses (Li & Mandelbaum 2023).4 Further development of these methods is anticipated in support of final analyses of the Stage III ground-based survey data and the upcoming Vera Rubin Observatory. However, all of these techniques rely fundamentally on operations such as cuts in Fourier space that cannot be performed on undersampled data. Indeed, the first attempts to simulate Metacalibration for undersampled images resulted in per cent-level biases (Kannawadi, Rosenberg & Hoekstra 2021; Yamamoto et al. 2023) that exceed requirements for the upcoming surveys. This motivates us to develop image processing techniques to recover full sampling, as a step in the lensing analysis that precedes shape measurement (and even source selection), so that these shear calibration techniques can be applied to Roman data.

The shear response is a property not just of the shape measurement algorithm, but of the sample selection as well, since applying a shear to a galaxy could cause it to cross a selection threshold (Kaiser 2000; Hirata & Seljak 2003). The same tools that have been developed to measure the shear response can be extended to incorporate the response from source selection (e.g. Sheldon et al. 2020, 2023). Furthermore, in a tomographic weak lensing analysis, the assignment of a galaxy to a particular tomographic bin is itself a form of selection, and thus one needs to derive the shear response of the photometry in each filter used for constructing the tomographic bins (e.g. Troxel et al. 2018; Gatti et al. 2021; Myles et al. 2021). The next-generation weak lensing surveys have specified ‘shape measurement’ filters (J129+H158 + F184 in the case of Roman) as well as additional filters that are used for photometric redshifts (Y106 for Roman). This means that although the source galaxies do not need to be resolved in the photo-z-only filters, one still needs to either recover full sampling in these filters (and use the aforementioned frameworks) or develop an alternative framework for determining the statistical shear response. (This issue was not fully understood at the time the Roman Reference Survey was designed.) Therefore, this paper has investigated recovery of a fully sampled mosaic in Roman Y106 band as well.

The recent joint Roman + Rubin simulations (Troxel et al. 2023), built on top of the Legacy Survey of Space and Time (LSST) Dark Energy Science Collaboration (DESC) Data Challenge 2 (DC2) simulations (Korytov et al. 2019; LSST Dark Energy Science Collaboration. 2021a,b; Kovacs et al. 2022), provide an opportunity to test out algorithms to recover full sampling as part of an integrated simulation and processing pipeline suite, and explore the implications for Roman weak lensing analyses. This is the first in a series of papers that develops and tests an implementation of the imcom algorithm (Rowe, Hirata & Rhodes 2011) on a simulation of part of the Roman Reference Survey. In this paper (‘Paper I’), we focus on the characteristics of the simulation, relevant mathematical background, image combination machinery, and basic properties of the outputs. The companion paper (‘Paper II’) covers statistical analyses of the output images, including the ellipticities of simulated stars, correlation functions, noise power spectra, and noise-induced biases. Both papers use a 48 × 48 arcmin region from the simulations, large enough to contain ∼2 Roman fields of view and a representative portion of the tiling pattern (see Fig. 1 ).

The coverage (number of exposures) in each of the four bands in the 48 × 48 arcmin region considered in this paper. Each sub-panel shows one of the filters. The 18-chip ‘pawprint’ feature of the Roman focal plane is easily visible, as is the presence of two roll angles from the two passes in each filter.

This paper is organized as follows. The problem of combining images to create fully sampled output with uniform PSF, and the goals of this simulation effort, are described in more detail in Section 2. The input data, including ancillary information such as masks, are described in Section 3. The image coaddition simulation methodology is described in Section 4. Some of the basic results on output PSF and noise properties are described in Section 5, and we conclude in Section 6. Appendix A presents some useful results on optimal interpolation methods for functions with known oversampling rates that we derived for this work, and that may be of more general interest, while a description of the computing resources for the project can be found in Appendix B. A summary of how undersampling interacts with the operations used in modern shear calibration methods is provided in Appendix C.

2 STATEMENT OF THE PROBLEM

A variety of methods have been explored in the literature for combining multiple images of the sky into a single ‘coadded’ image. If the coadded image is to be used as the starting point for a standard weak lensing analysis, one wants it to be both oversampled and have a well-defined PSF in the sense of Mandelbaum et al. (2023): the output image should be the true astronomical scene convolved with a PSF. We will go further here and ask for an algorithm that produces a specific desired output PSF Γ that is uniform and circular – thus we choose the output PSF and design a coaddition scheme to achieve it, rather than running a coaddition code and accepting the measured PSF at the end. This is advantageous for survey uniformity and mitigation of additive biases, but as we will see this is only possible under certain circumstances.

Thus in the context of this paper, the goal of the coaddition pipeline is to take in several input images of the sky, which are at some native pixel scale sin and in general have their own rotations, distortions, PSFs, and masks; and produce a well-sampled output image at an output pixel scale sout, and with a uniform, round output PSF. Implicit in this statement of charge is that we are trying to accomplish at once several tasks that are sometimes distinct steps in an image processing pipeline:

Interpolation over masked pixels (e.g. cosmic ray hits, bad columns, or hot, dead, or unstable pixels).

Resampling onto a common grid.

Rounding and homogenization of the output PSF (for some weak lensing pipelines; this step could also be performed last or not at all).

Averaging of the intensities from each input image to yield a single output image.

For oversampled data, it may be reasonable to treat these as separate, since operations such as resampling, convolution, and (sometimes) filling in a single missing sample, can be carried out on an oversampled function without introducing biases. However, they may be viewed in a unified framework if all of the operations used are linear. In this case, each step is a matrix operation, leading to an output image that is a linear combination of input images, with the mapping described by an m × n matrix T, where m is the number of output pixels and n is the number of input pixels. These statements apply to many of the common algorithms that have been applied to ground-based weak lensing data sets (either for shapes, source selection, or photo-zs): for example, linear predictive codes for interpolating bad pixels (section 4.5 Bosch et al. 2018); Lanczos-3 (Bertin et al. 2002; Bosch et al. 2018, section 3.3) or polynomial (section 4.4 Huff et al. 2014) interpolation for resampling; and pixel-domain (section 7 of Bernstein & Jarvis 2002) or Fourier-domain (section 4.1 of Huff et al. 2014) rounding kernels, and PSF Gaussianization (Hildebrandt et al. 2012, section 3; Kuijken et al. 2015, section 4.2).

None of steps 1–3 are possible individually on undersampled data without introducing biases or making additional assumptions about the astronomical scene. However, the end goal of constructing a well-sampled output image with uniform PSF with some matrix T may still be possible, even if T cannot be factored into the individual steps. The Fourier-domain algorithm of Lauer (1999a) and the iterative algorithm of Fruchter (2011) are examples that combine some of these steps for some types of input data. The imcom technique of Rowe, Hirata & Rhodes (2011) searches for a matrix T that accomplishes all four steps with minimum noise and target PSF error (as measured with quadratic metrics).5 It is computationally expensive but can work with rolls, geometric distortions, varying input PSFs, and complex masks, and thus is a promising choice for a space-based weak lensing survey with Roman. imcom returns a residual estimate for the output PSF; this allows us to identify cases where the desired output PSF is impossible to build (e.g. due to aliasing with insufficient dithers, or contains Fourier modes not represented in the input image).

We note that the Drizzle algorithm (Fruchter & Hook 2002) that is commonly used to combine undersampled space-based images is itself a linear operation that can be described using a coaddition matrix T. In the case of Drizzle, T is sparse; the entry Tαi is determined by the overlap of output pixel α with a ‘shrunken’ version of input pixel i. The Drizzle matrix T is within the search space for imcom, and therefore by imcom’s target metrics of sum-of-squares error in the PSF and noise, imcom will always perform at least as well as Drizzle (usually much better). But by choosing a particular sparse T, the Drizzle algorithm has a lower memory footprint and much faster run time, and therefore is likely to remain useful in the weak lensing analysis as a ‘quick look’ tool (this is also how it was used in the DC2 simulation; Troxel et al. 2023) and for flagging purposes.

While Rowe, Hirata & Rhodes (2011) demonstrated their method on some test problems with ∼6 × 6 arcsec postage stamps, and the method has also been demonstrated on laboratory data (Shapiro et al. 2013), the method has not yet been applied to Roman simulations over an area large enough to measure the statistical properties of the coadded images. The availability of the Rubin Data Challenge 2 (DC2) + Roman simulation suite, and updated knowledge of Roman properties including characterization of the flight detectors, makes this an excellent time to embark on such a simulation. The qualitative and quantitative objectives for this simulation are as follows:

Wrap the algorithm in a driver that can tile the sky and pipe the appropriate (simulated) observations to the linear algebra kernel and re-assemble the output into a community standard format such as FITS.

Find an example (not necessarily final) set of parameters for the imcom algorithm that avoid basic problems such as noise amplification or ghosting across postage stamp boundaries (or reduce them to acceptable levels).

Characterize how chip gaps and cosmetic defects map into the assembled mosaics.

Use injected sources to test the output normalization, astrometry, size, shape, and higher moments of the final coadd PSF after propagation through imcom.

Measure the correlation function of the ellipticities at scales overlapping the range likely to be included in the Roman weak lensing analysis.

Measure the noise properties of the output image for both uncorrelated and correlated input noise.

Compare the noise measured on the output images to the predictions from the Exposure Time Calculator (Hirata et al. 2013) and analytical descriptions in the literature (e.g. Bernstein 2002).

Determine the as-realized computing time requirements for the coadd on a modern computing cluster (in order to inform both resource estimates and priorities for further optimization).

Compare the moments (i.e. shape and size) of bright unsaturated stars (suitable for PSF characterization) in the output images with the measurements performed on Drizzled coadd from DC2 + Roman simulations.

Most of these objectives can only be achieved by simulating >1 field of view. This paper presents the initial set of coaddition simulations and first look results; follow-on papers will go into more detail on some of the individual objectives.

3 INPUT DATA

We generate several types of input data: full and noiseless image simulations, noise fields, and grids of injected sources. All of these are common types of inputs to image processing pipelines in weak lensing data analysis. Since the coaddition process is linear, we may use the distributive property to superpose outputs and generate, e.g. an output sky image with additional 1/f noise, or an injected object in the real survey (e.g. Suchyta et al. 2016). The processing of all layers at the same time allows the T matrix to be computed only once, a major advantage since it is both the most computationally demanding step and is so large that only a few examples can be stored to disk rather than the T for the whole survey. The layers used in this analysis are shown in Table 1.

| Index . | Name . | Description . | Bands . | Reference . |

|---|---|---|---|---|

| 0 | SCI | DC2 image simulation (‘simple’ model) | All | Section 3.1 |

| 1 | truth | DC2 image simulation – noiseless images | All | Section 3.1 |

| 2 | gsstar14 | Injected point source grid (drawn by GalSim) | All | Section 3.3 |

| 3 | cstar14 | Injected point source grid (drawn by pyimcom_croutines) | All | Section 3.3 |

| 4 | whitenoise1 | Uncorrelated input noise | All | Section 3.4 |

| 5 | 1fnoise2 | 1/f noise (generated in each channel) | All | Section 3.4 |

| 6 | err | DC2 image simulation – noise realization | J129, H158 | Section 3.1 |

| 7 | labnoise | Ground test dark frames | J129, H158 | Section 3.5 |

| Index . | Name . | Description . | Bands . | Reference . |

|---|---|---|---|---|

| 0 | SCI | DC2 image simulation (‘simple’ model) | All | Section 3.1 |

| 1 | truth | DC2 image simulation – noiseless images | All | Section 3.1 |

| 2 | gsstar14 | Injected point source grid (drawn by GalSim) | All | Section 3.3 |

| 3 | cstar14 | Injected point source grid (drawn by pyimcom_croutines) | All | Section 3.3 |

| 4 | whitenoise1 | Uncorrelated input noise | All | Section 3.4 |

| 5 | 1fnoise2 | 1/f noise (generated in each channel) | All | Section 3.4 |

| 6 | err | DC2 image simulation – noise realization | J129, H158 | Section 3.1 |

| 7 | labnoise | Ground test dark frames | J129, H158 | Section 3.5 |

Data may be in ‘all’ of the bands (Y106, J129, H158, and F184), or in the indicated bands only (the decision to add the last two layers was made after processing of Y106 and F184 had started). All layers are generated as 4088 × 4088 SCA images prior to being fed into the coaddition.

| Index . | Name . | Description . | Bands . | Reference . |

|---|---|---|---|---|

| 0 | SCI | DC2 image simulation (‘simple’ model) | All | Section 3.1 |

| 1 | truth | DC2 image simulation – noiseless images | All | Section 3.1 |

| 2 | gsstar14 | Injected point source grid (drawn by GalSim) | All | Section 3.3 |

| 3 | cstar14 | Injected point source grid (drawn by pyimcom_croutines) | All | Section 3.3 |

| 4 | whitenoise1 | Uncorrelated input noise | All | Section 3.4 |

| 5 | 1fnoise2 | 1/f noise (generated in each channel) | All | Section 3.4 |

| 6 | err | DC2 image simulation – noise realization | J129, H158 | Section 3.1 |

| 7 | labnoise | Ground test dark frames | J129, H158 | Section 3.5 |

| Index . | Name . | Description . | Bands . | Reference . |

|---|---|---|---|---|

| 0 | SCI | DC2 image simulation (‘simple’ model) | All | Section 3.1 |

| 1 | truth | DC2 image simulation – noiseless images | All | Section 3.1 |

| 2 | gsstar14 | Injected point source grid (drawn by GalSim) | All | Section 3.3 |

| 3 | cstar14 | Injected point source grid (drawn by pyimcom_croutines) | All | Section 3.3 |

| 4 | whitenoise1 | Uncorrelated input noise | All | Section 3.4 |

| 5 | 1fnoise2 | 1/f noise (generated in each channel) | All | Section 3.4 |

| 6 | err | DC2 image simulation – noise realization | J129, H158 | Section 3.1 |

| 7 | labnoise | Ground test dark frames | J129, H158 | Section 3.5 |

Data may be in ‘all’ of the bands (Y106, J129, H158, and F184), or in the indicated bands only (the decision to add the last two layers was made after processing of Y106 and F184 had started). All layers are generated as 4088 × 4088 SCA images prior to being fed into the coaddition.

3.1 The Roman + Rubin simulations

The principal input data to this study is the Roman arm of the joint Roman + Rubin DC2 simulation, described in Troxel et al. (2023). The simulation begins with a cosmological N-body simulation (Heitmann et al. 2019) populated with galaxies (Benson 2012; Hearin et al. 2020). This forms the basis for the cosmoDC2 simulated extragalactic sky (Korytov et al. 2019; Kovacs et al. 2022), which includes a superset of the region simulated in this paper. This is integrated with a local Universe simulation and a telescope + instrument simulation for the Rubin Observatory (LSST Dark Energy Science Collaboration 2021a,b). The Roman arm of the image simulation observes the same sky, but with the current version of the Roman telescope + instrument simulation framework (an update of Troxel et al. 2021).

The simulation includes a portion of the Roman Reference Survey tiling strategy, which observes the sky in four bands: Y106, J129, H158, and F184. Each band has two passes over the sky at different roll angles, with small-step diagonal dithers to cover the chip gaps. The number of dither positions at each roll was based on preliminary estimates of the number of dithers required to mitigate sampling issues (Rowe, Hirata & Rhodes 2011; Spergel et al. 2015): four in J129, and three in each of H158 and F184. The Y106 band strategy was not required to achieve full sampling and so used three positions. The resulting coverage pattern is shown in Fig. 1. A more in-depth description of the sky tiling can be found in appendix A of Troxel et al. (2023).

The main input layer used for this simulation is the ‘simple’ model sky images from Troxel et al. (2023). These include the convolution of sky objects with the PSF and Poisson noise. They do not include the full detector physics model (this version was chosen so that we can test downstream processing steps, such as image combination, before corrections for the detector effects are ready). The two most important parts of the detector physics missing for our purpose are (i) that there is no pixel mask in the ‘simple’ simulation (we introduce our own in Section 3.2); and (ii) the charge diffusion is not included. Since charge diffusion smears out the effective PSF before pixelization, it actually improves sampling, so we expect that ignoring it is conservative from the perspective of a code that attempts to reconstruct a fully sampled image. This expectation is explicitly tested for a small subsample of the data in Section 5.4.

The ‘simple’ input model also comes with a PSF model (corresponding to a flat spectral energy distribution or SED in Fλ) and an astrometric solution (the World Coordinate System or WCS in the FITS header). The WCS solution in the simulation is ‘perfect’ in the sense that we are working with the same WCS used to draw the image; propagation of systematic errors in the WCS solutions will be considered in a future paper.

We have also included as additional layers the ‘truth’ (noiseless) images from the simulation, and – for J129 and H158 – the ‘err’ HDU (the realization of background Poisson noise used in the simulation).

3.2 Masks

The ‘simple’ DC2 + Roman simulations produce output for all of the pixels. However, in the real mission there will be masked pixels. These can be divided into two types: pixels that are defective (e.g. disconnected, hot, unstable) and are flagged by the calibration pipeline; and pixels affected by a cosmic ray in that particular exposure. These two classes of masks affect image combination differently, since defective pixels tend to be spatially clustered and are the same pixels when we dither, whereas the cosmic ray impacts randomly knock out small groups of pixels.

For this simulation, we have taken the ‘permanent mask’ based on the SCA files constructed from acceptance testing (appendix B Troxel et al. 2023). Out of the 18 chips initially selected for flight, an average of 3.01 per cent of the pixels were flagged (BADPIX HDU) by at least one of the steps in the pipeline. The sources of these flags in order are shown in Table 2. This should be considered only a first approximation to the likely mask used in flight, since the hot pixel populations change as the detector ages or is exposed to radiation (e.g. Sunnquist, Brammer & Baggett 2019).

| Bit . | Meaning . | Fraction . |

|---|---|---|

| 0 | Non-responsive pixel | 0.53 per cent |

| 1 | Hot pixel (did not use 2 h dark for dark estimate) | 0.20 per cent |

| 2 | Very hot pixel (used first read for dark estimate) | 0.11 per cent |

| 3 | Adjacent to pixel with flagged response | 2.47 per cent |

| 4 | Low CDS, high total noise pixel | 0.03 per cent |

| 5 | Failed non-linearity solution | 0.01 per cent |

| 6 | Failed gain solution | 0.08 per cent |

| ALL | 3.01 per cent |

| Bit . | Meaning . | Fraction . |

|---|---|---|

| 0 | Non-responsive pixel | 0.53 per cent |

| 1 | Hot pixel (did not use 2 h dark for dark estimate) | 0.20 per cent |

| 2 | Very hot pixel (used first read for dark estimate) | 0.11 per cent |

| 3 | Adjacent to pixel with flagged response | 2.47 per cent |

| 4 | Low CDS, high total noise pixel | 0.03 per cent |

| 5 | Failed non-linearity solution | 0.01 per cent |

| 6 | Failed gain solution | 0.08 per cent |

| ALL | 3.01 per cent |

The last line shows the union of these flags; since some pixels have multiple flags, the sum of the fractions is greater than ALL.

| Bit . | Meaning . | Fraction . |

|---|---|---|

| 0 | Non-responsive pixel | 0.53 per cent |

| 1 | Hot pixel (did not use 2 h dark for dark estimate) | 0.20 per cent |

| 2 | Very hot pixel (used first read for dark estimate) | 0.11 per cent |

| 3 | Adjacent to pixel with flagged response | 2.47 per cent |

| 4 | Low CDS, high total noise pixel | 0.03 per cent |

| 5 | Failed non-linearity solution | 0.01 per cent |

| 6 | Failed gain solution | 0.08 per cent |

| ALL | 3.01 per cent |

| Bit . | Meaning . | Fraction . |

|---|---|---|

| 0 | Non-responsive pixel | 0.53 per cent |

| 1 | Hot pixel (did not use 2 h dark for dark estimate) | 0.20 per cent |

| 2 | Very hot pixel (used first read for dark estimate) | 0.11 per cent |

| 3 | Adjacent to pixel with flagged response | 2.47 per cent |

| 4 | Low CDS, high total noise pixel | 0.03 per cent |

| 5 | Failed non-linearity solution | 0.01 per cent |

| 6 | Failed gain solution | 0.08 per cent |

| ALL | 3.01 per cent |

The last line shows the union of these flags; since some pixels have multiple flags, the sum of the fractions is greater than ALL.

The cosmic ray mask is based on a random number generator, with the seeds chosen based on the observation ID and SCA so that the same mask is generated for each observation even if it is called in a different block. The density of cosmic ray strikes is taken to be 7.7 × 10−4 per pixel, which is the product of the |$10^{-6}\,$| cm2 pixel area, the 140 s exposure time, and the expected rate of 5.5 events cm−2 s−1 expected for Roman (see Kruk et al. 2016, and note that at L2 we do not have the trapped electron population). While experience with similar detectors on the James Webb Space Telescope is that usually 1–2 pixels are affected (section 6.6 of Rigby et al. 2023), Roman has smaller pixels (so potentially a higher leakage into neighbours via charge diffusion) and we are aiming for higher precision. Therefore, for this simulation, we have masked a 3 × 3 pixel region surrounding each cosmic ray. The less common larger events such as ‘snowballs’ (e.g. Rieke et al. 2023, fig. 15) are not included in the current simulation; we plan to add them in the future once we understand better how they should be implemented. However for the purposes of testing how imcom responds to masked pixels, it is the more frequent events that are likely to have the greatest impact.

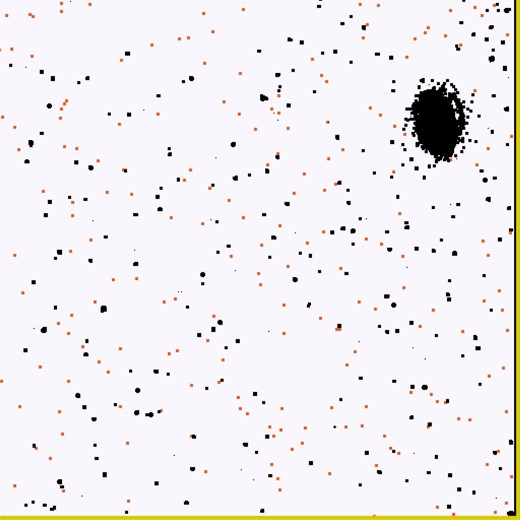

An example of a mask is shown in Fig. 2.

3.3 Injected sources

We have also created two layers that are grids of injected stars. The stars have unit flux and are injected on a healpix6 grid (Górski et al. 2005) of resolution 14 (nside|$\, =2^{14}=16384$|) in the equatorial coordinate system. The selection of grid points that land on each SCA was performed with healpy routines (Zonca et al. 2019). Two layers are generated – one with the external GalSim package, and one with the internal interpolation machinery in our pipeline.

We constructed the grid of injected sources with galsim as follows. For each PSF that is characterized by the observation and SCA that overlaps with the output region, we interpolated the PSF made by the DC2 + Roman simulation, which is oversampled by the factor of 8, using the interpolant, lanczos50. The interpolated PSF image is then convolved with the delta function of unity, and is drawn at each grid point.

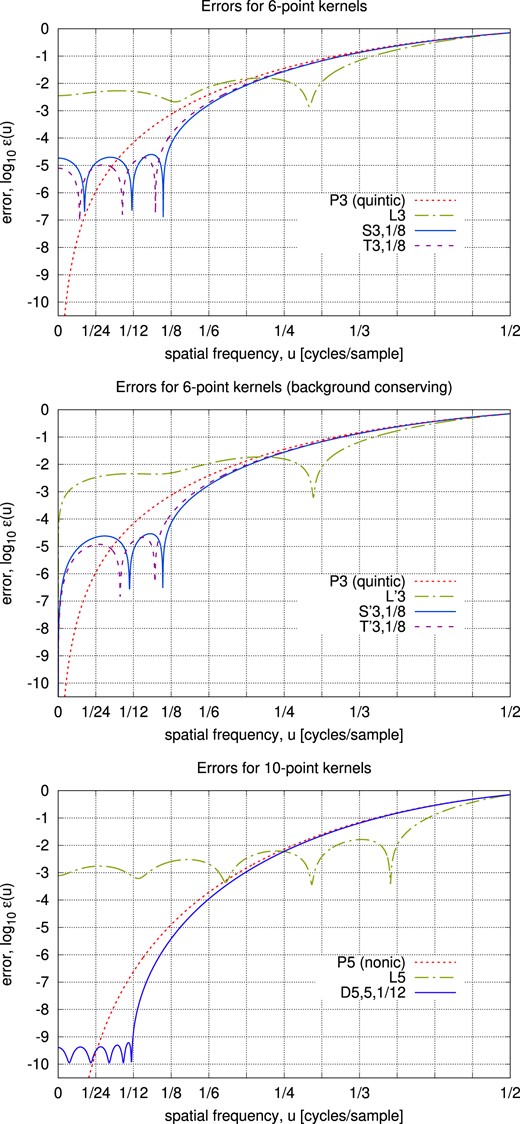

The internal simulation was generated from the same PSF, but interpolated using the ‘D5, 5, |$\tfrac{1}{12}$|’ interpolation kernel. In principle one should get the same results from the two methods aside from the choice of interpolation kernel or treatment of edge effects; however, having both serves as an important cross-check on the implementation.

3.4 Simulated noise fields

We construct two types of simulated noise fields: white noise and 1/f noise. Since the image combination is a linear process, the proper normalization of these noise fields, or the choice to include both, can be chosen in post-processing. We therefore make the simplest choices to normalize the inputs.

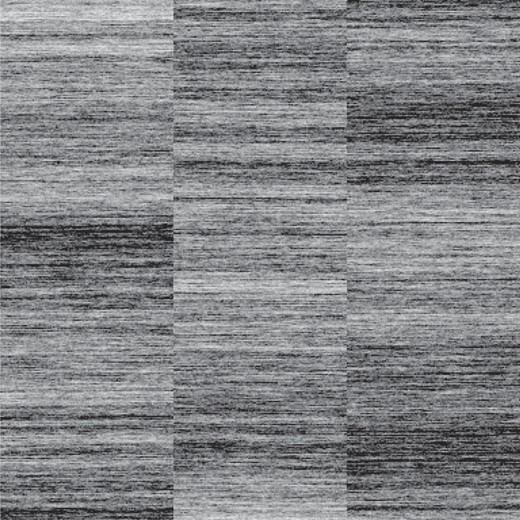

The white noise input is a 4088 × 4088 Gaussian random field with mean 0 and variance 1.

The 1/f noise input is constructed as follows. For each of the 32 readout channels, we construct a 1D Fourier domain signal of length N = 220: |$\lbrace \tilde{S}_k\rbrace _{k=-N/2}^{N/2-1}$|, where the real and imaginary components of |$\tilde{S}_k$| are Gaussians with mean 0 and variance 1/(2|fk|) (except for the constant mode |$\tilde{S}_0=0$|), where fk = k/N is the normalized frequency. This is then discrete Fourier transformed, |$S_j = \sum _{k=-N/2}^{N/2-1} \tilde{S}_k {\rm e}^{2\pi {\rm i}jk/N}$|, and we take the real part. This gives a signal whose variance per logarithmic range in frequency is 1, i.e. the variance coming from all modes between fmin and fmax is ≈ln (fmax/fmin). We take a length 219 portion of this signal (to avoid wrapping effects), reformat it into a 4096 × 128 array, and broadcast it into the appropriate portion of a 4096 × 4096 image. The even channels are flipped left-to-right (see e.g. fig. 2 of Freudenburg et al. 2020 for the ordering of the readout pattern). This does not include guide window or row overheads, but it does provide a noise field with the characteristic horizontal banding pattern of 1/f noise that we can use to assess the impact on weak lensing with coadded images. An example of a 1/f noise field generated in this way is shown in Fig. 3.

An example of a simulated mask. This is the 512 × 512 lower-right corner of SCA 11 in a H158-band observation (ID 8836). Reference pixels are shown in dark yellow (right and bottom sides); permanently masked pixels are shown in black; and pixels rejected in this observation only due to cosmic rays are shown in red-orange (small squares).

A 384 × 384 cutout of a 1/f noise field generated in Section 3.4. The greyscale is a linear stretch from −16 to +16.

Both noise inputs use the numpy.random.default_rng random number generator, with a seed chosen based on the observation ID, the SCA, and an integer specified by the user. In order to reduce storage requirements, the noise fields are generated on the fly at the same time the science data is read. The seed construction ensures that when we make a mosaic coadd, and a given SCA image contributes to more than one tile of the mosaic, that the same noise realization is generated each time.

3.5 Laboratory noise fields

In addition to simulated noise fields, we construct a layer containing noise from laboratory testing of the SCA detectors. By including detector read noise in our analysis, we are able to test in a new way how the detectors themselves might impact galaxy shape measurement. The lab detector test noise fields are intended for a separate work, and thus a more complete and detailed analysis will be forthcoming in a companion paper to this. Here we will present the basic principles of the laboratory noise fields, and refer the interested reader to this future paper for further details.

The lab noise data, taken at the Detector Characterization Laboratory, are Nt × 4096 × 4224 cubes (where Nt is the number of time slices), including dark frames and low and high level flat frames for each SCA. Data is split into 32 readout channels, plus one reference output channel and four rows of reference pixels on each side of the grid. For construction of the noise frames, lab data is averaged into six effective time bins (‘Multi-Accum’ processing; see e.g. section 7.7 of Dressel 2022), slope fitted, and reference pixel-corrected, and converted to electrons using the gain determined using solid-waffle (Freudenburg et al. 2020). We impose each SCA’s lab-tested pixel mask (appendix B Troxel et al. 2023) on each frame so that the mask is the same in all input layers. This ensures that we are still able to process all the layers at the same time and thus only compute the T matrix once. For the total focal plane, this additional masking amounted to 0.095 per cent of the pixels. Lab noise field analysis and results will be presented in detail in the forthcoming paper (Laliotis et al., in preparation).

4 IMAGE COADDITION

The image coaddition process is an updated version of the imcom framework for coaddition of postage stamps (Rowe, Hirata & Rhodes 2011). Major changes include replacing the original Fortran 90 code with a python interface and C back end; and the new driver script to produce mosaic coadds.

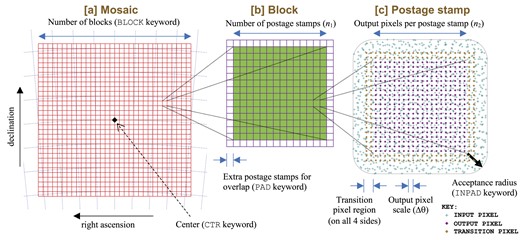

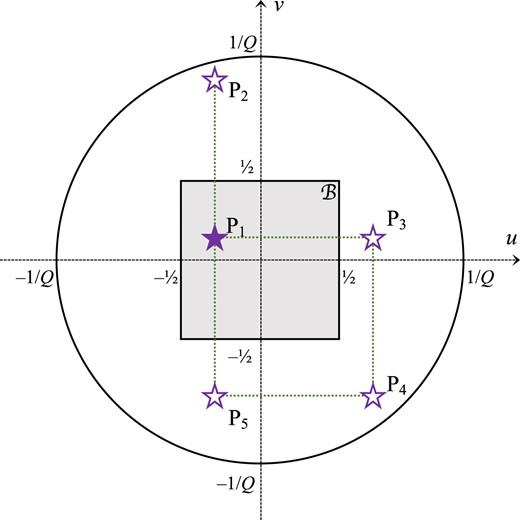

The mosaic process is organized hierarchically, controlled by keywords in the configuration file (see Fig. 4). The top level is a mosaic, consisting of a single world coordinate system over a region of sky small enough to be treated as ‘flat’ to within weak lensing requirements (i.e. the coordinate system shear and plate scale variation should be <10−4). The mosaic is divided into a square grid of blocks; the processing of each block in each filter is a single run of the python script run_coadd.py, and the resulting coadded images and metadata are stored in a single FITS file. The blocks are themselves divided into a square grid of postage stamps, and are extended by some number of postage stamps (PAD) so that there is overlap among the blocks. Each postage stamp has a grid of output pixels, and each output pixel is constructed as a linear combination of input pixels. The algorithm allows any input pixels that are un-masked and within a specified radius (INPAD arc seconds) of the square stamp. The output pixels in a postage stamp are surrounded by a ring of transition pixels that allow the weights to vary smoothly when going to the next postage stamp.

The hierarchical structure of mosaic coadds in this paper. The mosaic (panel a) is defined by a centre, a map projection, and a number of blocks (BLOCK×BLOCK). Each block (panel b) is itself composed of postage stamps; we make an n1 × n1 array, with padding of PAD postage stampps around the rim so that the blocks overlap. The postage stamps (panel c) are composed of an n2 × n2 grid of output pixels, with a transition region around the edge that is merged at the block processing level before writing to a FITS file. The postage stamp is built from all un-masked input pixels in all input images within a given acceptance radius of the stamp.

The sizes of each hierarchical layer used in this paper are shown in Table 3. These are of course subject to refinement as we prepare for the eventual Roman science analysis.

| Parameter or variable name . | Description . | Value . | Unit . |

|---|---|---|---|

| s_in | Input (native) pixel scale | 0.11 | arcsec |

| Δθ (dtheta) | Output pixel scale | 0.025 | arcsec |

| n2 | Postage stamp size in output pixels | 50 | |

| k (fade_kernel) | Transition width in pixels | 3 | |

| n2Δθ | Postage stamp angular size (excluding transition region) | 1.25 | arcsec |

| (n2 + 2k)Δθ | Postage stamp angular size (including transition region) | 1.4 | arcsec |

| INPAD | Acceptance radius for input pixels | 1.25 | arcsec |

| n1 | Block size in postage stamps (1D) | 48 | |

| PAD | Padding region of block in postage stamps | 2 | |

| n1n2Δθ | Block angular size (excluding extra postage stamps) | 1.0 | arcmin |

| |$(n_1+2{\tt PAD})n_2\Delta \theta$| | Block angular size (including extra postage stamps) | 1.08333 | arcmin |

| |$(n_1+2{\tt PAD})n_2$| | Block image side length in output pixels | 2600 | |

| BLOCK | Mosaic size in blocks (1D) | 48 | |

| BLOCK× n1n2Δθ | Mosaic angular size | 0.80 | degree |

| Parameter or variable name . | Description . | Value . | Unit . |

|---|---|---|---|

| s_in | Input (native) pixel scale | 0.11 | arcsec |

| Δθ (dtheta) | Output pixel scale | 0.025 | arcsec |

| n2 | Postage stamp size in output pixels | 50 | |

| k (fade_kernel) | Transition width in pixels | 3 | |

| n2Δθ | Postage stamp angular size (excluding transition region) | 1.25 | arcsec |

| (n2 + 2k)Δθ | Postage stamp angular size (including transition region) | 1.4 | arcsec |

| INPAD | Acceptance radius for input pixels | 1.25 | arcsec |

| n1 | Block size in postage stamps (1D) | 48 | |

| PAD | Padding region of block in postage stamps | 2 | |

| n1n2Δθ | Block angular size (excluding extra postage stamps) | 1.0 | arcmin |

| |$(n_1+2{\tt PAD})n_2\Delta \theta$| | Block angular size (including extra postage stamps) | 1.08333 | arcmin |

| |$(n_1+2{\tt PAD})n_2$| | Block image side length in output pixels | 2600 | |

| BLOCK | Mosaic size in blocks (1D) | 48 | |

| BLOCK× n1n2Δθ | Mosaic angular size | 0.80 | degree |

| Parameter or variable name . | Description . | Value . | Unit . |

|---|---|---|---|

| s_in | Input (native) pixel scale | 0.11 | arcsec |

| Δθ (dtheta) | Output pixel scale | 0.025 | arcsec |

| n2 | Postage stamp size in output pixels | 50 | |

| k (fade_kernel) | Transition width in pixels | 3 | |

| n2Δθ | Postage stamp angular size (excluding transition region) | 1.25 | arcsec |

| (n2 + 2k)Δθ | Postage stamp angular size (including transition region) | 1.4 | arcsec |

| INPAD | Acceptance radius for input pixels | 1.25 | arcsec |

| n1 | Block size in postage stamps (1D) | 48 | |

| PAD | Padding region of block in postage stamps | 2 | |

| n1n2Δθ | Block angular size (excluding extra postage stamps) | 1.0 | arcmin |

| |$(n_1+2{\tt PAD})n_2\Delta \theta$| | Block angular size (including extra postage stamps) | 1.08333 | arcmin |

| |$(n_1+2{\tt PAD})n_2$| | Block image side length in output pixels | 2600 | |

| BLOCK | Mosaic size in blocks (1D) | 48 | |

| BLOCK× n1n2Δθ | Mosaic angular size | 0.80 | degree |

| Parameter or variable name . | Description . | Value . | Unit . |

|---|---|---|---|

| s_in | Input (native) pixel scale | 0.11 | arcsec |

| Δθ (dtheta) | Output pixel scale | 0.025 | arcsec |

| n2 | Postage stamp size in output pixels | 50 | |

| k (fade_kernel) | Transition width in pixels | 3 | |

| n2Δθ | Postage stamp angular size (excluding transition region) | 1.25 | arcsec |

| (n2 + 2k)Δθ | Postage stamp angular size (including transition region) | 1.4 | arcsec |

| INPAD | Acceptance radius for input pixels | 1.25 | arcsec |

| n1 | Block size in postage stamps (1D) | 48 | |

| PAD | Padding region of block in postage stamps | 2 | |

| n1n2Δθ | Block angular size (excluding extra postage stamps) | 1.0 | arcmin |

| |$(n_1+2{\tt PAD})n_2\Delta \theta$| | Block angular size (including extra postage stamps) | 1.08333 | arcmin |

| |$(n_1+2{\tt PAD})n_2$| | Block image side length in output pixels | 2600 | |

| BLOCK | Mosaic size in blocks (1D) | 48 | |

| BLOCK× n1n2Δθ | Mosaic angular size | 0.80 | degree |

We briefly review the postage stamp coaddition problem in Section 4.1, and indicate where there have been algorithm updates. We describe the block and mosaic construction in Section 4.2. The choice of output PSFs – essentially determining the resolution of the coadded images – is discussed in Section 4.3.

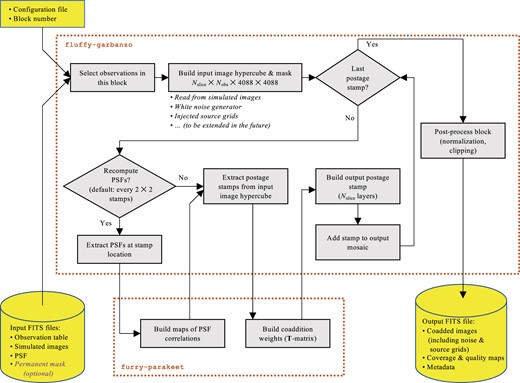

A diagram of the block coaddition script is shown in Fig. 5.

The workflow for coaddition of a block in this paper. There are two repositories: the postage stamp coaddition (furry-parakeet) and the mosaic driver with interfaces to the simulations (fluffy-garbanzo).

4.1 Postage stamp coaddition

The imcom formalism is developed in Rowe, Hirata & Rhodes (2011),7 and here we summarize only the key points that are needed here. imcom produces a coadded postage stamp starting from a set of input postage stamps: these images can be flattened and concatenated into a length n vector |$\lbrace I_i\rbrace _{i=0}^{n-1}$|.8 Masked pixels can simply be removed from this vector with a corresponding decrease in n. These are to be combined into an output image with m pixels: |$H_\alpha = \sum _{i=0}^{n-1} T_{\alpha i}I_i$|, where T is an m × n coaddition matrix. We denote the positions of each pixel centre by |$\lbrace {\boldsymbol r}_i\rbrace _{i=0}^{n-1}$| (input images) or |$\lbrace {\boldsymbol R}_\alpha \rbrace _{\alpha =0}^{m-1}$| (output image). If the input images have effective PSF Gi, then the output pixel α has an effective PSF at offset |${\boldsymbol s}$| given by9

It is a common difficulty with image combination algorithms that PSFout, α varies from pixel to pixel in the coadded image. imcom takes as input a ‘target PSF’ Γ, and attempts to find coaddition weights Tαi that make PSFout, α uniform and close to Γ. Quantitatively, we wish to minimize the leakage function,10

where the square norm is written in Fourier space with weighting |$\tilde{\Upsilon }({\boldsymbol u})$|. The numerator Uα is the square norm of the difference between the as-realized output PSF and the target, while the denominator C is the square norm of the target (thus forming a dimensionless ratio). We will refer to Uα/C itself as the ‘leakage’ (smaller is better), and to the quantity −10log10(Uα/C) as the ‘fidelity’ (larger is better: one can think of this as a suppression of PSF error in decibels). If the n × n noise covariance matrix of the input is N, then the m × m output covariance is |$\mathbf{\Sigma } = \mathbf{T}\mathbf{N}\mathbf{T}^{\rm {T}}$|. imcom also tries to minimize the output covariance Σαα for input white noise (|$\mathbf{N} = {\mathbb {I}}_{n\times n}$|).11

Having two objective functions, leakage Uα/C and noise Σαα, implies a trade-off: usually one can be improved at the expense of the other. We thus optimize row α of the T-matrix with respect to the objective function Uα + καΣαα, where κα is a Lagrange multiplier that controls the trade-off (decreasing κα decreases leakage and increases noise; increasing κα increases leakage and decreases noise). The method of solving for Tαi given κα is described in Rowe, Hirata & Rhodes (2011); the algorithm performs a bisection search in log10κα, with the user specifying the criterion for whether to increase or decrease κα.

Our postage stamp coaddition pipeline implements almost the same algorithm as in the original imcom, but with a python interface and using numpy routines for the linear algebra and Fast Fourier Transform (FFT) steps; the for loop over κα and the interpolation are coded in C and wrapped using the numpy C Application Programming Interface. Aside from changes in language, the substantive differences in the relative to the original imcom are

The construction of T in Rowe, Hirata & Rhodes (2011) requires the construction of the correlations |$[G_i\circ G_j]({\boldsymbol s})$| for every pair of input PSFs. These are now computed and saved in the PSF_Overlap class, because these correlations only need to be re-computed on the scale of the PSF variation, not for every single postage stamp. This saves time when constructing a large-area mosaic.

The interpolation of the correlations |$[G_i\circ G_j]({\boldsymbol s})$| in the original imcom was performed using bivariate polynomial interpolation. In our case, since the PSFs are band-limited, we know that their correlation is band-limited and given a grid size we know the oversampling factor (i.e. sample spacing relative to the Nyquist spacing). We have derived optimal interpolation kernels for this case in Appendix A; we used the ‘D5, 5, |$\tfrac{1}{12}$|’ kernel here.

Rather than simply specifying a target leakage Uα/C or a target noise Σαα, we specify a maximum noise (Σαα)max as our first priority and a maximum leakage (Uα/C)max as our second priority. That is, if both the leakage and noise targets can be met, the Lagrange multiplier search looks for the minimum noise that meets the leakage requirement; but if they cannot both be met, it treats the noise target as a hard constraint and finds the minimum leakage subject to that constraint. Of course in the latter case we would consider masking that pixel in a weak lensing analysis, but it avoids spectacular noise amplifications that could confuse some downstream analysis packages.

The postage stamp coaddition tools are in a separate GitHub repository (furry-parakeet) from the block driver.

4.2 Blocks and mosaics

The coaddition of a block (Fig. 5) begins with the selection of observation ID/SCA pairs that overlap with the block. Once we have these observations, a 4D numpy array is constructed to include all of the input data: in_data[i, j, y, x] contains the pixel in layer i, observation ID/SCA j, and pixel (x, y). Each layer consists of simulated images, noise fields, or grids of injected sources (Table 1). The input data are stored as 32-bit floating point numbers.

The algorithm then executes a loop over the postage stamps in that block. To save computing time, the PSFs are extracted and a PSF_Overlap object created every 4th postage stamp (it is treated as uniform in a block of 2 × 2 postage stamps, based on the PSF for the position at the common corner of the stamps). Then a suite of input postage stamps is created by mapping the centres of the output postage stamp back to each input image; these input stamps are deliberately oversized, since they can then be reduced by combining the input image mask with the logical test for whether a pixel is within the acceptance region (right panel of Fig. 4). Then we build the T-matrix (Section 4.1), and multiply to get an output cube (postage stamp for each layer).

A complication arises when the postage stamps are tiled to make a block: if the postage stamps are simply placed next to each other, there are noise discontinuities in the output image since one suddenly jumps to a different set of input pixels from which one can construct the output pixels. For some applications, these discontinuities may present no difficulties. However, some common applications such as peak finders that run at the resolution of the output image may be confused by these effects (e.g. Bertin & Arnouts 1996). Therefore, we use the transition pixels to smoothly transition from one postage stamp to the next. The overlap of the output postage stamps (including transition pixels) is 2k pixels; we define a sequence |$\lbrace a_m\rbrace _{m=1}^{2k}$|. If we have a ‘left’ postage stamp Ileft(x, y) (whose output region, excluding the transition pixels, is x < xcut) and a ‘right’ postage stamp Iright(x, y) (whose output region, again excluding the transition pixels, is x ≥ xcut), then in the transition region xcut − k ≤ x < xcut + k, we may make a merged image,

This approach does not affect the output PSF as long as the transition sequence satisfies am + a2k + 1 − m = 1. We use the truncated sine function (Li & Mandelbaum 2023),

which avoids the discontinuities of the first derivative of the noise that would occur without the second term. The merging technique in equation (3) is trivially extendable to the y direction as well.

The mosaics are built using a single map projection with an array of blocks. In principle, one could select from any of the projections in the FITS WCS standard (Calabretta & Greisen 2002). We have used the stereographic projection here since it introduces no shear distortion, has zero plate scale gradient at the projection centre, and the Roman footprint can be efficiently tiled with square regions,12 although we may revisit this choice in the future. At an angle θ from the projection centre, the stereographic projection introduces a lowest-order plate scale error of |$\frac{1}{4}\theta ^2$|, or 2.4 × 10−5 at the corner of a 0.8 degree square (|$\theta =0.8/\sqrt{2}\times \pi /180$|). Thus for this test region we only need one mosaic.

We refer to a block within a mosaic by its array position: (0,0) is in the lower-left (southeast) corner, and |$({\tt BLOCK}-1,0)$| is in the lower-right (southwest corner).

4.3 Output PSFs

The imcom formalism is different from many other coaddition algorithms in that the target output PSF Γ is specified by the user and this is used to determine the input-to-output mapping (ssT-matrix), rather than the other way around. It is therefore advantageous to choose an output PSF that is circularly symmetric, so that the image coaddition and ‘rounding kernel’ are accomplished in a single step.

A straightforward choice is the convolution of an obstructed Airy disc (e.g. Rivolta 1986) with a Gaussian (whose width determines the resolution of the coadded image):

where ξ = λ/D is the diffraction scale at the central wavelength of that filter, J1 is the Bessel function of the first kind, and ε is the linear obstruction fraction. This profile has an exact band limit at the diffraction scale, just like all of the input PSFs, and it has the usual ∝ 1/s3 diffraction wings with the same amplitude as for the input PSFs. Being circularly symmetric, it does not contain the diffraction spikes.

The choice of σ involves a trade-off. If σ is larger, then the output PSF is larger, which makes it more difficult to measure the shapes of small galaxies and increases blending. If σ is smaller, then the algorithm will attempt to reconstruct the higher spatial frequency Fourier modes in the image, which usually suffer more aliasing. This means that the output PSF leakage Uα/C is larger (worse). This effect is more significant with small numbers of dithers where not all combinations of input Fourier modes can be de-aliased. The implication is that for smaller σ (hence smaller target PSF), we may need to mask a larger portion of the final output image to control shear measurement systematics. (A possible way around this, which we have not explored in this paper, is to build several mosaics at different output resolutions, with the lowest resolution being available everywhere and the highest resolution available in select regions with more dithers.)

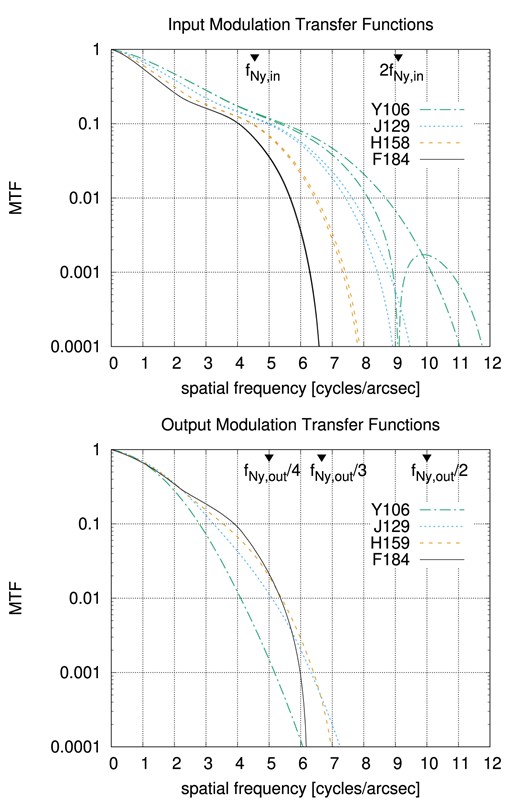

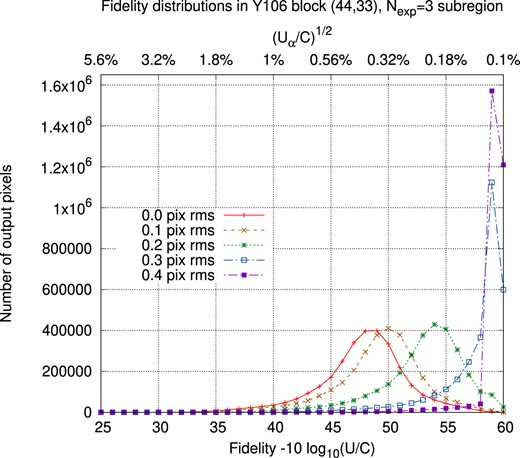

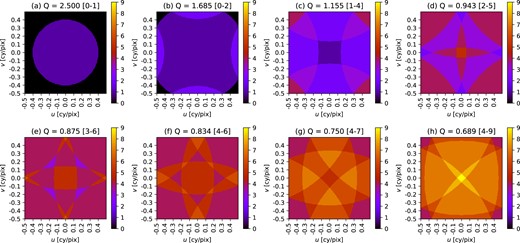

The smoothing scales chosen for each of the Roman filters in this simulation are shown in Table 4, and the modulation transfer functions are shown in Fig. 6. An example of the resolution versus output fidelity trade-off in the Roman Y106 band is shown in Fig. 7.

The input and output modulation transfer functions (MTFs; absolute value of the Fourier transform of the PSF). The upper panel shows the MTFs as computed in the Exposure Time Calculator (Hirata et al. 2013) based on the requirement aberrations. Two curves are shown for each filter, either for wave vector aligned with the pixel grid or at a 45° angle; for F184, the difference is not visible on the plot. The Nyquist frequency at the native (input) pixel scale is marked. The lower panel shows the output PSFs, with fractions of the Nyquist frequency at the output pixel scale sout marked. Note that all of the input images are undersampled (the MTF is non-zero for spatial frequencies extending past fNy, in) but the output images are well sampled, with the MTF dropping to negligible levels (<10−4) by ∼0.36fNy, out in all filters.

Examples of how output fidelity depends on the output PSF and on the number of exposures covering a given region. The first three rows show the fidelity maps, −10log10(Uα/C), for a block of the Roman Y106 band centred at right ascension 53.5142° and declination −40.3898°. On this scale, 60 corresponds to the specified leakage Uα/C = 10−6; lower values correspond to worse matching of the output PSF to the target. We show three resolutions of the target PSF (θFWHM indicated), with sharpest resolution at the top. The bottom row shows the coverage map (number of exposures). The first column shows the full 1.08 × 1.08 arcmin block; the other three columns show zoom-ins of particular regions (the tics are in units of output pixels). Note the particularly poor reconstruction when Nexp = 3 (second column), corresponding to an intersection of chip gaps. Even when Nexp = 5 (third column), if the target PSF is too small (top), one sees imprinting of the 0.11 arcsec (4.4 output pixel) input pixel grid where there is difficulty interpolating from the small number of samples to certain sub-pixel positions. We also see losses where a cosmetic defect reduces the effective number of exposures to 4. Similar behaviour can be seen in the right column, which overlaps with a four-exposure region.

| Band . | Input PSF . | Output PSF . | ||

|---|---|---|---|---|

| . | Effective area . | Sampling factor . | Output smearing . | Full width at half maximum . |

| . | |$\Omega _{\rm psf}/s_{\rm in}^2$| . | Q = λ/Dsin . | |$\sqrt{8\ln 2}\, \sigma /s_{\rm in}$| . | θFWHM [arcsec] . |

| Y106 | 7.06 | 0.834 | 2.25 | 0.279 |

| J129 | 8.60 | 1.021 | 1.75 | 0.230 |

| H158 | 10.96 | 1.250 | 1.50 | 0.210 |

| F184 | 15.28 | 1.456 | 1.25 | 0.200 |

| Band . | Input PSF . | Output PSF . | ||

|---|---|---|---|---|

| . | Effective area . | Sampling factor . | Output smearing . | Full width at half maximum . |

| . | |$\Omega _{\rm psf}/s_{\rm in}^2$| . | Q = λ/Dsin . | |$\sqrt{8\ln 2}\, \sigma /s_{\rm in}$| . | θFWHM [arcsec] . |

| Y106 | 7.06 | 0.834 | 2.25 | 0.279 |

| J129 | 8.60 | 1.021 | 1.75 | 0.230 |

| H158 | 10.96 | 1.250 | 1.50 | 0.210 |

| F184 | 15.28 | 1.456 | 1.25 | 0.200 |

The PSF effective area is defined in the square-norm sense |$\Omega _{\rm psf} = 1/\int [G({\bf s})]^2\, d^2{\bf s}$| and expressed in input pixels. The sampling factors are in the convention of λ/Dsin; this is the same convention used in Rowe, Hirata & Rhodes (2011), but is a factor of 2 larger than the convention of Kannawadi, Rosenberg & Hoekstra (2021). The output smearing is listed as the full width at half maximum of the Gaussian in units of native pixels.

| Band . | Input PSF . | Output PSF . | ||

|---|---|---|---|---|

| . | Effective area . | Sampling factor . | Output smearing . | Full width at half maximum . |

| . | |$\Omega _{\rm psf}/s_{\rm in}^2$| . | Q = λ/Dsin . | |$\sqrt{8\ln 2}\, \sigma /s_{\rm in}$| . | θFWHM [arcsec] . |

| Y106 | 7.06 | 0.834 | 2.25 | 0.279 |

| J129 | 8.60 | 1.021 | 1.75 | 0.230 |

| H158 | 10.96 | 1.250 | 1.50 | 0.210 |

| F184 | 15.28 | 1.456 | 1.25 | 0.200 |

| Band . | Input PSF . | Output PSF . | ||

|---|---|---|---|---|

| . | Effective area . | Sampling factor . | Output smearing . | Full width at half maximum . |

| . | |$\Omega _{\rm psf}/s_{\rm in}^2$| . | Q = λ/Dsin . | |$\sqrt{8\ln 2}\, \sigma /s_{\rm in}$| . | θFWHM [arcsec] . |

| Y106 | 7.06 | 0.834 | 2.25 | 0.279 |

| J129 | 8.60 | 1.021 | 1.75 | 0.230 |

| H158 | 10.96 | 1.250 | 1.50 | 0.210 |

| F184 | 15.28 | 1.456 | 1.25 | 0.200 |

The PSF effective area is defined in the square-norm sense |$\Omega _{\rm psf} = 1/\int [G({\bf s})]^2\, d^2{\bf s}$| and expressed in input pixels. The sampling factors are in the convention of λ/Dsin; this is the same convention used in Rowe, Hirata & Rhodes (2011), but is a factor of 2 larger than the convention of Kannawadi, Rosenberg & Hoekstra (2021). The output smearing is listed as the full width at half maximum of the Gaussian in units of native pixels.

It may seem strange to additionally smooth the image to mitigate sampling effects; after all, the high angular resolution is one of the reasons to build a space mission in the first place. However, it is actually quite similar to the suggestion in Kannawadi, Rosenberg & Hoekstra (2021) to use a larger weighting scale for galaxy ellipticities13 and the convolution suggested by Shen et al. (2022). And we note that the output PSFs in Table 4 are still much smaller than those obtained in ground-based surveys.

4.4 Known issues

There are several issues that arose during the production runs for this project. These were not considered significant enough to warrant re-running the simulations, nor will they affect any of our primary results. However, we do plan to address them in a future version of the simulation:

There was an indexing error that led to the wrong cosmic ray map being read in the Y106 and F184 simulations (this was fixed prior to the J129 and H158 runs). When a postage stamp was being created using the ith input image overlapping that postage stamp, the cosmic ray mask was read from the ith input image for the overall block. Since the cosmic ray maps are realizations of the same random process, this almost always has no effect. But it does mean that in a transition region between two postage stamps at the edge of a chip, there could be cases where the two postage stamps have inconsistent cosmic ray masks.

The lookup algorithm that chooses input images to be used for a block used a search radius that did not account for plate scale variations. This means that there are a few instances where the corners of a chip overlap the block but that input image was not used. In the few cases where this happened, the sense of the effect is that the chip gaps in the coaddition simulation are larger than what we will have with the real data. In addition, sometimes the PSF computation points (at the centre of every 2 × 2 group of postage stamps) went off the edge of the SCA and hence that SCA was rejected from all 4 of the surrounding postage stamps; this also has the effect of increasing the effective chip gaps.

The focal plane layout in the image simulations has some chip spacings that are different from the as-built Roman focal plane. The simulation and the WCS used to coadd the images are however internally consistent.

The output WCS is shifted two postage stamps (2.5 arcsec) from the intended output region due to an error in writing the WCS. This has no effect on the validity of the results since all downstream steps consistently used this WCS; we simply coadded a slightly different region than intended.

The weighting of Fourier modes in the leakage function was intended to be uniform, |$\tilde{\Upsilon }({\boldsymbol u})=1$| in the language of Rowe, Hirata & Rhodes (2011). An experimental feature to place more weight on the longer-wavelength modes, setting |$\tilde{\Upsilon }({\boldsymbol u}) = [1 + A_\Upsilon {\rm e}^{-2\pi ^2 {\sigma} _\Upsilon ^2(u^2+v^2)}]^2$|, was accidentally left on for the production runs with |$A_\Upsilon =1$| and |$\sigma _\Upsilon = \frac{3}{2}\times 0.11$| arcsec. This placed more weight in the optimization than intended on the lowest spatial frequencies (relative to the highest spatial frequencies) by a factor of ≈4; since the resulting T is still a valid coaddition matrix, the results on imcom performance and applicability to weak lensing shape measurement remain valid.

5 RESULTS

We present some general results, examples, and statistics of the output PSF leakage Uα/C and features in the noise here. Statistical analyses of the results in the context of weak lensing are presented in the companion Paper II.

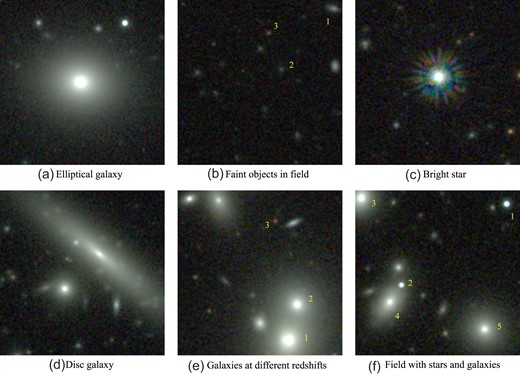

Some examples of regions in the coadded images are shown in Fig. 8.

An assortment of objects in the simulated coadded images. Each panel is a Y106 (blue) + J129 (green) + F184 (red) composite, with a field of 18 arcsec on a side, with a scale stretched from −8 to +1200 |$e/s_{\rm in}^2/$|exposure. (a) An elliptical galaxy at z = 0.18. (b) A deep field; the objects labeled are galaxies at z = 0.81 (object 1), z = 0.79 (2), and z = 0.93 (3). (c) A bright star (visible magnitude |$m_{550\, \rm nm}=16.4$|); note the square pattern imprint of the postage stamp boundaries and the diffraction spikes. (d) A disc galaxy at z = 0.22. (e) An assortment of galaxies; objects 1 and 2 are at z = 0.34, and object 3 at z = 2.81 appears red since the Balmer + 4000 Å feature is redshifted into the H158 band. (f) An assortment of stars (1 and 2) and galaxies (3–5 are in a group at z = 0.28).

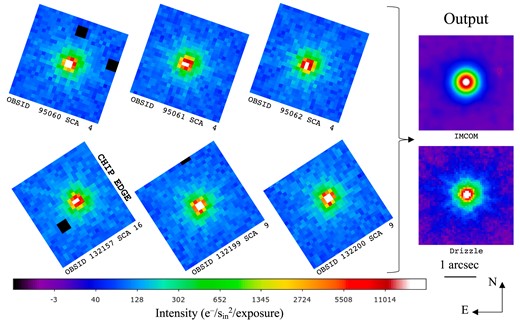

5.1 Stars

An example of a star propagating through the coadd pipeline is shown in Fig. 9. We show both the imcom coaddition as well as the same star coadded in with the commonly used ‘Drizzle’ algorithm (Fruchter & Hook 2002) as described in appendix C of (Troxel et al. 2023). The Drizzle output has pixel scale 0.0575 arcsec and pixfrac = 0.7. Note that the imcom algorithm attempts to produce a round output PSF – this means, in particular, that the diffraction spikes are suppressed to the extent possible by adjusting the T-matrix. Some imperfections at the level of a few× 10−4 of the central surface brightness remain visible. Drizzle is a more local operation, and thus the asymmetry of the original PSFs as well as the diffraction spikes are more prominent.

An example of a simulated coadded star in the Y106 band. The left part of the figure shows the six images of the star, at the as-observed roll angles in the two passes, in the ‘simple’ DC2 simulation. The right panel shows the coadded map. The imcom coadd (this project) is shown at top; the drizzled coadd is shown below. A logarithmic colour stretch is used. The sky level (61 e/p) is present in the inputs but has been removed in the outputs. The black squares in the inputs are masked pixels (for the imcom run).

5.2 Output PSF fidelity

We describe the output PSF fidelity in terms of the quantity −10log10(Uα/C). This is a measure of how well the imcom algorithm thinks it has done on matching the PSF of the output image to the target PSF. The requested fidelity on this scale is 60, which corresponds to a 0.1 per cent error (in a root-sum-square sense) of all of the moments of the PSF.

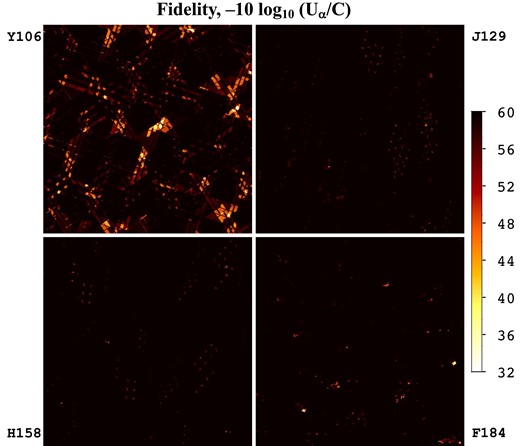

The fidelity maps over the full simulation region for this paper are shown in Fig. 10. The chip gaps are easily visible as regions of lower fidelity: in these cases, there were insufficient dither positions to break the degeneracies among the various Fourier modes in the image. Equation (C18) gives a mode-counting argument for the minimum number of dithers required to disambiguate all Fourier modes in the astronomical scene (note that this is a necessary but not sufficient condition): this argument leads us to a number of dithers of five (Y106), four (J129), three (H158), and two (F184). However, these numbers could prove insufficient due to accidental degeneracies in the dithering pattern (e.g. two dithers at the same roll have an integer pixel offset) or masked pixels; and the required number may also be lower if the high spatial frequency modes have low enough transfer function that they can be ignored even if they are formally present in the image. The imcom simulations in this paper provide a more robust determination of the needed number of dithers in each filter. The worst performance is in Y106 band, since the Reference observing strategy was not originally designed for shape measurement using Y10614 and so we accepted fewer dither positions. The trouble comes mainly from regions with four or fewer dithers in Y106; given this result, and the importance of the Y106 filter in photometric redshifts (e.g. Hemmati et al. 2019), in a future version of the tiling strategy we might consider adding a dither position in Y106 even if we have to use shorter exposure times or accept one less dither in F184. The statistics of the PSF fidelity maps are shown in Fig. 11.

The fidelity map over the 48 × 48 arcmin region simulated, in each of the four bands. The lowest values are seen in Y106, since it has the most undersampled PSF and the number of dither positions is lower than in J129. Note that the same features in the coverage map (Fig. 1) also appear here: the output PSF is not as well matched to the target in regions of intersecting chip gaps.

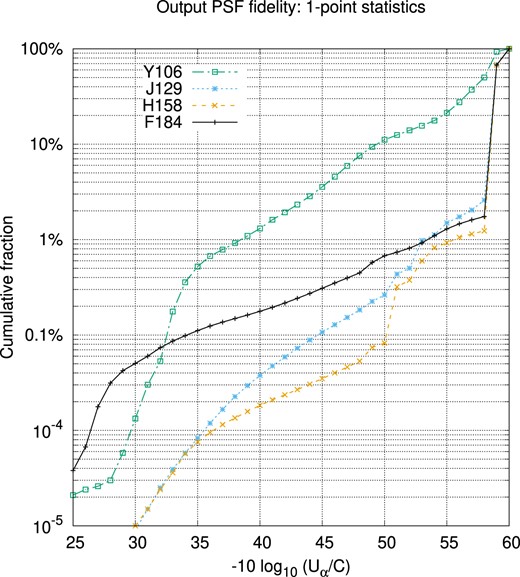

The cumulative distribution of the output PSF fidelity −log10(Uα/C) for each of the Roman filters. The vertical axis shows the fraction of the output 0.8 × 0.8 degree mosaic with PSF fidelity worse than the indicated value.

5.3 Output noise and Moiré patterns

Another aspect of working with multiple undersampled input images is the existence of Moiré patterns. If one combines two input images with no roll, but with a fractional difference ε in plate scale [so the plate scales are P and P(1 + ε)], then the images interlace to produce alternating regions where the samples land on top of each other, and where the samples are ideally interlaced (|$\tfrac{1}{2}$| pixel offset), with a wavelength of P/ε. This behaviour is shown in Fig. 12 for the simple case of two input images in one dimension. One can see that in some parts of the Moiré pattern, the image is effectively sampled at the native pixel scale, whereas in other regions the sampling rate has been improved by a factor of 2; and an obvious concern for a survey is the resulting heterogeneity of the survey data. In particular, in the ‘degenerate’ regions, reconstructing a fully sampled image at output pixel positions in between the samples may prove impossible, or at least result in a large amplification of the noise in the input images (e.g. Lauer 1999a). For Roman, the plate scale is 0.11 arcsec, and the fractional variation in plate scale over a |$\sim \frac{1}{4}$| SCA dither in the y-direction is |$\sim 1.2{{\ \rm per\ cent}}$|, leading to a predicted Moiré wavelength of ∼9 arcsec; this should be thought of as representative since the distortion gradient itself is spatially variable. An example of such a feature in the Roman image combination simulations is shown in Fig. 13. Such features are more common in regions with multiple intersecting chip gaps. While the Moiré patterns are intrinsic to the survey pattern – they represent spatial variations in what degeneracies are present in the data – the specific way they appear in higher-level products such as coadded images or ellipticity biases depends on the algorithm.

![An illustration of a Moiré pattern, with two input images in one dimension. The top row (blue + signs) represents the pixel positions in image #1, and the middle row (red × signs) represents the pixel positions in image #2. The interlaced pixel positions (from both #1 and #2) are shown in the bottom row, and one sees alternating regions of ‘degenerate’ combination (in the linear algebra sense: the two input images give information at the same locations) and ‘ideal’ combination (half pixel offsets, so effectively achieving twice the sampling rate). The wavelength of the pattern is the harmonic difference of the two input wavelengths, 1/[1/P − 1/P(1 + ε)] ≈ P/ε, where ε ≪ 1 is the fractional difference in plate scale.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/mnras/528/2/10.1093_mnras_stae182/1/m_stae182fig12.jpeg?Expires=1749162886&Signature=Mi1KXPfK3N4-VRjMPJQrnG1aS9aEXwYJkEwhU7OfpsqJmfjwfS2u68yFfhsrz~X0qve9Hw7h7VfsEwgADOkIX8skaSOST15r96RsSfszd-8bwJSMbrsbKaR~znPvljGvq9KhOwP6aUdByEu6wwzrB26MGtIt77kmxd4vGQP0P8PaUd-kYT~CnqTEE7kaN5ZjjL7mxC~zb0y1v1vSSfK3M5OceOMux~uL6Cq80VYV9cvTnLLyPZmZIbUJetb9W1LG54QZZEh7otejMOQ2j2o5IrpJe-7CpomJkXPpWmjlEcbYg~1G6Yz5XISx174F7xJ9-K9RVtN4-IPzF-5SsG3XHw__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

An illustration of a Moiré pattern, with two input images in one dimension. The top row (blue + signs) represents the pixel positions in image #1, and the middle row (red × signs) represents the pixel positions in image #2. The interlaced pixel positions (from both #1 and #2) are shown in the bottom row, and one sees alternating regions of ‘degenerate’ combination (in the linear algebra sense: the two input images give information at the same locations) and ‘ideal’ combination (half pixel offsets, so effectively achieving twice the sampling rate). The wavelength of the pattern is the harmonic difference of the two input wavelengths, 1/[1/P − 1/P(1 + ε)] ≈ P/ε, where ε ≪ 1 is the fractional difference in plate scale.

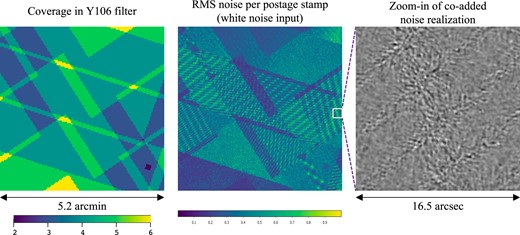

An example of Moiré patterns in the simulated coadd in the Y106 filter. The left panel is a coverage map in a region with intersecting chip gaps in the two passes. The middle panel shows the rms of the ‘whitenoise1’ noise field, as measured in 1.25 × 1.25 arcsec postage stamps. The wave-like features are Moiré patterns; note that these patterns usually start and end at a chip gap, and that a range of wave vectors are present. The right panel shows a zoom-in of a 16.5 × 16.5 arcsec (660 × 660 output pixel) region of the coadded ‘whitenoise1’ noise field. Increased variance in the form of ripples with specific wave vectors can be seen in the degeneracy bands.

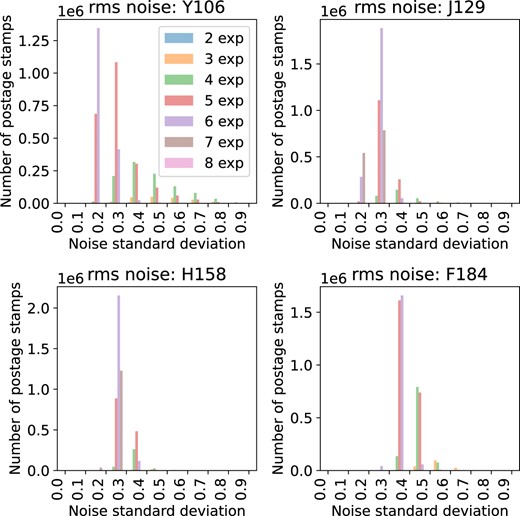

The full situation with the Moiré patterns is more complicated with N > 2 dithered images since then N(N − 1)/2 Moiré wavelengths are present and the pattern is not strictly periodic. In some regions, the dither positions will be closer to the degenerate case in Fig. 12; the fraction of the area where of all the dither positions land within, say, |$\frac{1}{4}$| pixels of each other can be expressed analytically,15 and is |$\frac{3}{16}=18.75{{\ \rm per\ cent}}$| for N = 3; |$\frac{1}{16}=6.25{{\ \rm per\ cent}}$| for N = 4; and |$\frac{5}{256}\approx 1.95{{\ \rm per\ cent}}$| for N = 5. If one goes up to 2 dimensions but with no rolls, then this argument applies in both x and y directions: for example, for N = 3, one would expect |$1-(1-\frac{3}{16})^2 \approx 34{{\ \rm per\ cent}}$| of the area to have samples clustered within |$\frac{1}{4}P$| in x, y, or both (this drops to 12 per cent for N = 4 and 4 per cent for N = 5). For the case with arbitrary rolls, we are unaware of any practical analytical results on these degeneracies, so numerical simulation remains the best approach to assessing the sampling impact of survey strategy choices for Roman. The histograms of the output noise properties from this simulation are shown in Fig. 14.

The rms noise in each output postage stamp in the ‘whitenoise1’ layer (this is normalized to unit variance in the input noise maps). Each panel shows a histogram for one of the four bands; the histograms are broken down by number of input exposures. Note the tail to larger noise values in Y106 with small numbers of exposures; this is driven by regions with strong Moiré patterns.

The banded features in Fig. 13 are visually striking and are a potential concern because coadded noise with an anisotropic power spectrum could introduce an additive noise bias (e.g. the centroid bias, Kaiser 2000; Bernstein & Jarvis 2002, although this is just one of a hierarchy of biases related to noise; Melchior & Viola 2012; Refregier et al. 2012). While the separation of the bands themselves is at the Moiré scale of order 10 arcsec, the band structures are coherent over scales of >1 arcmin (see the middle panel of Fig. 13) and one may wonder whether they introduce power at scales of interest for the weak lensing analysis. A quantitative investigation of the additive noise biases is carried out in the companion Paper II.

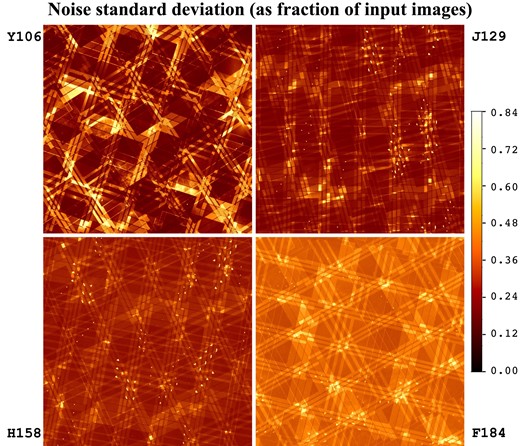

A fully zoomed-out output noise map is shown in Fig. 15.

The noise map over the 48 × 48 arcmin region simulated, in each of the four bands, measured as the rms of the ‘whitenoise1’ noise field, as measured in 1.25 × 1.25 arcsec postage stamps. (The Y106 panel is a zoom-out of the centre panel in Fig. 13.) Once again, we can see the features in the coverage map (Fig. 1). The regions of large cosmetic defects in the SCAs can be seen in the output noise maps as strings of higher-noise splotches corresponding to the dithering sequence.

5.4 Impact of charge diffusion

The ‘simple’ image simulations did not include a charge diffusion, and so we have not included charge diffusion in the main results of this paper. We intuitively expect that this is a ‘stress test’ of the application of imcom, since charge diffusion occurs before pixelization (Mosby et al. 2020) and thus a PSF including charge diffusion is better sampled than one without. However, it is important to check this expectation. We tested this by re-running one block in the most difficult filter (Y106) with several values of the charge diffusion, ranging from 0.0 to 0.4 pixels rms. As an intuitive guide to the importance of charge diffusion, the least-squares fit Gaussian to an Airy disc has width σAiry = 0.41λ/D, so by root-sum-square addition we would expect that inclusion of 0.24 pix rms charge diffusion effectively smears a diffraction-limited Y106 PSF to the resolution of J129, and 0.38 pix rms smears it to the resolution of H158; however, since the Airy disc and features induced by aberrations are not Gaussian, these should be viewed as rough estimates and the full output of the imcom should be used for planning purposes. We chose block location (44,34) because it includes some intersecting chip gaps (the right panel of Fig. 13 is in this block).