-

PDF

- Split View

-

Views

-

Cite

Cite

S Paradiso, M DiMarco, M Chen, G McGee, W J Percival, A convenient approach to characterizing model uncertainty with application to early dark energy solutions of the Hubble tension, Monthly Notices of the Royal Astronomical Society, Volume 528, Issue 2, February 2024, Pages 1531–1540, https://doi.org/10.1093/mnras/stae101

Close - Share Icon Share

ABSTRACT

Despite increasingly precise observations and sophisticated theoretical models, the discrepancy between measurements of H0 from the cosmic microwave background or from baryon acoustic oscillations combined with big bang nucleosynthesis versus those from local distance ladder probes – commonly known as the ‘H0 tension’ – continues to perplex the scientific community. To address this tension, early dark energy (EDE) models have been proposed as alternatives to Lambda cold dark matter, as they can change the observed sound horizon and the inferred Hubble constant from measurements based on this. In this paper, we investigate the use of Bayesian model averaging (BMA) to evaluate EDE as a solution to the H0 tension. BMA consists of assigning a prior to the model and deriving a posterior as for any other unknown parameter in a Bayesian analysis. BMA can be computationally challenging in that one must approximate the joint posterior of both model and parameters. Here, we present a computational strategy for BMA that exploits existing Markov chain Monte Carlo software and combines model-specific posteriors post hoc. In application to a comprehensive analysis of cosmological data sets, we quantify the impact of EDE on the H0 discrepancy. We find an EDE model probability of |${\sim} 90~{{\ \rm per\ cent}}$| whenever we include the H0 measurement from Type Ia supernovae in the analysis, whereas the other data show a strong preference for the standard cosmological model. We finally present constraints on common parameters marginalized over both cosmological models. For reasonable priors on models with and without EDE, the H0 tension is reduced by at least 20 per cent.

1 INTRODUCTION

The standard cosmological model, Lambda cold dark matter (ΛCDM), has been remarkably successful in explaining various cosmological phenomena. However, the persisting discrepancy in the determination of the Hubble constant, H0, has challenged the robustness of this model. H0, the present-day expansion rate of the Universe, plays a pivotal role in our understanding of cosmic evolution, the age of the Universe, and the formation of large-scale structures. It is typically inferred using one of two approaches: local distance ladder measurements or constraints as part of a cosmological model from more general observations such as those from the cosmic microwave background (CMB) or from the combination of baryon acoustic oscillation (BAO) and big bang nucleosynthesis (BBN) observations.

Leveraging the CMB data, the Planck satellite mission reported a value of H0 close to 68 km s−1 Mpc−1 for ΛCDM cosmologies (Planck Collaboration VI 2020b). This is supported by standard ruler observations of BAO from the Sloan Digital Sky Survey (SDSS) and BBN, which favour similar values for the same models (Alam et al. 2021). For the CMB, the absolute measurement at present day results from the photon temperature and blackbody assumption, giving the physical photon density. Density ratios giving the CMB power spectrum then give the matter and baryon densities, with the angular scales of the projected peak positions finally leading to a constraint on H0 through the angular diameter distance. For BAO + BBN, the physics is similar: BBN observations of the baryon-to-photon ratio, together with the present-day photon density from the CMB temperature, fix the present-day size of the BAO ruler. The projected scale of the observed BAO then constrains H0 in a similar way to the CMB constraints. In contrast, observations from local distance ladder studies, such as the Hubble Space Telescope (HST) observations of Cepheid variable stars, favour a higher value of H0 ∼ 74 km s−1 Mpc−1 (Riess et al. 2019). These observations can be considered a more direct route to measure the present-day expansion rate, using distance estimates for individual objects together with their redshifts to directly measure the expansion rate. The divergence in measurements, referred to as the H0 tension, has remained persistent for a number of years now and, in some cases, has intensified as more precise data become available.

One proposal to resolve the H0 tension is the introduction of early dark energy (EDE) into the cosmological model. EDE posits the existence of a scalar field in the early Universe, leading to an enhancement of the expansion rate during the epoch of matter–radiation equality (Karwal & Kamionkowski 2016; Poulin et al. 2019). This additional early acceleration can alleviate the H0 tension by modifying the early-time cosmic expansion and the position of the acoustic feature in the radiation and matter power spectra. The EDE model has gained popularity due to its capacity to reconcile the Planck and HST measurements, but the extent to which the data support this model is unclear.

To address the challenges of model selection and parameter estimation within the context of the H0 tension and EDE, we employ Bayesian model averaging (BMA) as a powerful statistical tool (Trotta 2008; Parkinson & Liddle 2013). By treating the model itself as another unknown in a Bayesian framework, it simultaneously accounts for uncertainty in parameter estimates as well as model choice. BMA offers a principled approach to statistically weight the evidence from various models, enabling a comprehensive analysis of the viability of EDE as a potential solution to the H0 tension.

Standard methods for making Bayesian inferences from cosmological data consider only a single model and then use a method such as Markov chain Monte Carlo (MCMC) to sample from the posterior (Hastings 1970; Tierney 1994) conditional on that model. Accounting for model uncertainty via BMA, however, requires the joint posterior for both models and parameters; this requires accounting for both the likelihood and the model prior, which is not readily available in model-conditional samples. We outline a method not previously applied in cosmology that collapses these model-conditional samples post hoc, thus avoiding the need for specialized software for BMA.

We use this method to apply BMA to scrutinize the implications of the EDE model on resolving the H0 tension. We carefully consider a range of observational data sets, including CMB measurements, Type Ia supernovae (SNIa), and BAOs, to constrain the relevant cosmological parameters. By employing BMA, we assess the credibility of the EDE model as a viable explanation for the H0 tension, while simultaneously exploring the possible range of cosmological scenarios.

The organization of this paper is as follows: in Section 2, we present the theoretical framework of the EDE model and its underlying physical motivations. Section 3 details the BMA methodology employed in this analysis. In Section 4, we present our results and discuss the implications of the EDE model on the resolution of the H0 tension. In Section 5, we conclude with a discussion of our findings and potential avenues for further exploration.

2 EARLY DARK ENERGY

The EDE model introduces an additional dark energy component, characterized by a fractional energy density parameter ΩEDE and associated equation of state parameter wEDE, which is significant during the epoch of recombination. The presence of EDE modifies the cosmic expansion rate, leading to a different evolution of the scale factor a(t) compared to the standard ΛCDM model. During the epoch of recombination, the Friedmann equation for the Hubble parameter H(t) can be written as

where ΩX ≡ ρX/ρcrit is the density parameter for the X component (namely matter, radiation, Λ, and EDE). We assume that Λ is constant and that the Universe is set up to have a flat geometry so that Ωk = 0 and the curvature term does not appear in equation (1).

In this paper, we focus on the theoretical EDE framework introduced by Smith, Poulin & Amin (2020) based on a quintessence scalar field ϕ with an oscillating potential

The case n = 1 represents the well-established axion potential. We will restrict our analysis to the case n = 3, for the reasons given in Hill et al. (2020). The dynamics of the scalar field are governed by the Klein–Gordon equation

where H is the Hubble parameter, the dots represent the derivative with respect to cosmic time, and Vn,ϕ = dVn/dϕ.

Heuristically speaking, in order to effectively mitigate the H0 tension, the EDE contribution is concentrated in a short period after radiation–matter equality and before recombination, when the scalar field dominates the energy density contribution of the Universe and drives the growth of the scale factor before rapidly decaying. We refer the interested reader to Turner (1983), Marsh & Ferreira (2010), Poulin et al. (2019), and references therein, for the details. It is useful to define a renormalized field variable Θ = ϕ/f, so that equation (3) reads

The evolution of the scalar field can be completely determined by the set of three parameters {m, f, Θi}, where Θi represents the initial field value in units of f. From an observational point of view, we can characterize the background evolution by means of the maximum fraction of the total energy density in the field fEDE and the redshift corresponding to that maximum zc, as heuristically illustrated in Fig. 1 for an axion-like EDE field (Poulin et al. 2018); for a given combination of {m, f}, we can always find a value of {fEDE, zc}, so that the model’s free parameter set consists of either {m, f, Θi} or {fEDE, zc, Θi}.

![Redshift evolution of the density parameter ΩX for the main components of the Universe (top) and EDE fraction (bottom). We report the evolution of radiation (dot–dashed), matter (dotted), cosmological constant (dashed), and EDE (solid) spanning different combinations of zEDE in [103, 104] and fEDE in [0.6, 1.4] with a darker to brighter colour palette (for increasing values of zEDE). The redshift evolution of the EDE component is computed for n = 3 in order to match the assumption in the main text.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/mnras/528/2/10.1093_mnras_stae101/1/m_stae101fig1.jpeg?Expires=1749151618&Signature=1xV6V1-NpCHf5moObDQOyxd8zPyR4IDNbKn3gqn5pGQvJvikWiuAUkUfFBbL6bVrsFDDft7GYftIRLMKyab531qMgIOfBfezn0hL8~OSziaZQ~d0VTKLOI6ZneUqKShCjTZd9IebtbER3jlo0S4yFzcMCVRQMTEkURHDPSctxlvnKDjs28IM7uoUwfuywjMIB3T3Ty9t3UGTPwSvnKKRE786sIUAJCeUcozhzzZVV195P6NOPJjjNVzPthLaJ9hicZhXLOOxHdBa-evwvI9nX5Tj9OJNYB3XaUFcBAS-C1SpYRwTURyfVp~OQCNNPKm3KLjvtIEwq7BG9nJx75ploA__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Redshift evolution of the density parameter ΩX for the main components of the Universe (top) and EDE fraction (bottom). We report the evolution of radiation (dot–dashed), matter (dotted), cosmological constant (dashed), and EDE (solid) spanning different combinations of zEDE in [103, 104] and fEDE in [0.6, 1.4] with a darker to brighter colour palette (for increasing values of zEDE). The redshift evolution of the EDE component is computed for n = 3 in order to match the assumption in the main text.

3 INTRODUCTION TO BAYESIAN MODEL AVERAGING

In many statistical analyses, we are faced with the task of selecting the most appropriate model from a set of competing models. Traditional methods first fit a set of candidate models, and then compare their fit according to some criteria like the Akaike Information Criterion (Akaike 1974); finally, inferences are drawn with respect to the ‘best-fitting’ model. A limitation of this approach is that inferences are typically drawn as if the model was chosen a priori – that is, it does not account for uncertainty in the choice of model.

By contrast in a Bayesian framework, model uncertainty can be handled by treating the model like any other unknown, and computing the posterior probability of each candidate model given the observed data and chosen prior. This allows for a principled approach to comparing models and quantifying their relative plausibility based on the data and prior knowledge.

In addition to making direct inferences about the probabilities of different models, this approach allows one to formally account for model uncertainty when making inferences about cosmological parameters via BMA. Intuitively, BMA computes a weighted average over the conditional posteriors for cosmological parameters under each candidate model. This marginalization occurs over the model posterior, ensuring that models with higher probabilities contribute more to the final inference.

Let M = 1, 2, ..., K be a discrete random variable indicating a choice among K different models. For convenience, we will refer to M = j as Mj. The posterior model probability for model Mi, denoted as |$P(M_{\rm {\mathit{ i}}}\vert \boldsymbol{d})$|, is computed as

where P(M i) is the prior probability of model M i, |$P(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}})$| is the marginal likelihood of the data |$\boldsymbol{d}$| given model Mi, and the denominator serves as a normalization factor to ensure that the probabilities sum to one. We assume that the prior probabilities are separable between model selection and model parameters.

The advantages of BMA are as follows:

Accounting for model uncertainty: BMA provides a comprehensive approach to handle model uncertainty, acknowledging that multiple models may be plausible given the available data (Hoeting et al. 1999).

Robustness: BMA is less sensitive to the specific choice of a single ‘best’ model, reducing the risk of overfitting or underfitting the data (Hoeting et al. 1999).

Improved predictive performance: By averaging predictions from multiple models, BMA often leads to more accurate predictions compared to relying on a single model (Madigan & Raftery 1994).

3.1 fast-mpc and application to a two model problem

Without loss of generality, consider two candidate models, M1 and M2, parametrized by |$\boldsymbol{\theta }_1$| and |$\boldsymbol{\theta }_2$|, respectively, and let |$\boldsymbol{\theta }$| be the union of |$\boldsymbol{\theta }_1$| and |$\boldsymbol{\theta }_2$|. A full Bayesian analysis would characterize the joint posterior of Mi and |$\boldsymbol{\theta }_i$| for i = 1, 2, from which we aim to (i) obtain the posterior probability of each candidate model, |$P(M_{\rm {\mathit{ i}}}\vert \boldsymbol{d})$|; and (ii) obtain the marginal posteriors of the target parameters, |$P(\boldsymbol{\theta }\vert \boldsymbol{d})$|. The former allows us to assess the extent the evidence in favour of either candidate model; the latter allows our inferences about cosmological parameters to formally account for uncertainty in the choice of model.

Following Hastie & Green (2012), characterizing the full joint posterior (for model and parameters) can be done in one of two ways: across-model approaches and within-model approaches. The most well-known method in the former category is the reversible jump-MCMC (RJ-MCMC; Green 1995), a variant of the Metropolis–Hastings algorithm (known as Metropolis–Hastings–Green) that allows for between-model steps in which the dimension of the parameter vector changes to accommodate models of different sizes. This is a general approach that allows one to explore a large space of models, but it requires somewhat bespoke software implementation (see e.g. Karnesis et al. 2023), and achieving convergence can be challenging in practice.

Instead, we adopt a within-model approach that exploits our knowledge of |$P(\boldsymbol{\theta }\vert \boldsymbol{d},M_1)$| and |$P(\boldsymbol{\theta }\vert \boldsymbol{d},M_2)$| which are easily obtained from the usual model-conditional MCMC (Green, Godsill & Heikkinen 2000; Hastie & Green 2012) using standard software. The marginal posterior of |$\boldsymbol{\theta }$| can be decomposed as follows:

Since samples of |$P(\boldsymbol{\theta }\vert \boldsymbol{d},M_1)$| and |$P(\boldsymbol{\theta }\vert \boldsymbol{d},M_2)$| are readily available from standard analyses conditional on a single model, it then only remains to obtain the two model posteriors |$P(M_{\rm {\mathit{ i}}}\vert \boldsymbol{d})$| defined in equation (5). This decomposition frames the model-averaged posterior |$P(\boldsymbol{\theta }|d)$| as weighted average of the model-conditional posteriors |$P(\boldsymbol{\theta }|\boldsymbol{d},M_i)$| as in equation (7). Note, however, that it is not sufficient to weight by the model priors P(Mi); rather the weights correspond to the model posteriors |$P(M_i|\boldsymbol{d})$| – the data themselves inform how much weight is assigned to either model.

The model posteriors |$P(M_{\rm {\mathit{ i}}}\vert \boldsymbol{d})$| in equation (5) consist of both the model priors, P(Mi), which are specified by the analyst, as well as the marginal likelihood:

for i = 1, 2. We obtain an estimate |$\hat{P}(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}})$| of |$P(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}})$| based on the model-conditional MCMC chain, as described in section 7 of Newton & Raftery (1994):

where |$\boldsymbol{\theta }_{\rm {\mathit{ i}}}^{(\rm {t})}$| is the tth sample in the ith model-conditional MCMC chain with length |$\rm {\mathit{ N}}_{\rm {\mathit{ i}}}$|, and |$\omega (\boldsymbol{\theta }_{\rm {\mathit{ i}}})=P(\boldsymbol{\theta _{\rm {\mathit{ i}}}}\vert M_{\rm {\mathit{ i}}})/\phi (\boldsymbol{\theta }_{\rm {\mathit{ i}}})$|. This is effectively importance sampling to perform a Monte Carlo integration over the prior |$P(\boldsymbol{\theta _{\rm {\mathit{ i}}}}\vert M_{\rm {\mathit{ i}}})$| with an importance sampling function |$\phi (\boldsymbol{\theta }_{\rm {\mathit{ i}}})$|, which is set to the model-conditional posterior |$P(\boldsymbol{\theta }_{\rm {\mathit{ i}}}\vert M_{\rm {\mathit{ i}}},\boldsymbol{d})$| so that we can use the readily available model-conditional chains.

To see this, by Bayes’ theorem, we can express equation (9) as

where |$P(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}},\boldsymbol{\theta }_{\rm {\mathit{ i}}})$| is the likelihood conditional on model M i. From this, we have |$\omega (\boldsymbol{\theta }_{\rm {\mathit{ i}}})=P(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}})/P(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}},\boldsymbol{\theta }_{\rm {\mathit{ i}}})$|. Substituting this into equation (10), we see that the estimator is simply the harmonic mean of the likelihood, averaging over the model-conditional chain

To compute this, one must have access to (i) the samples |$\theta _{\rm {\mathit{ i}}}^{(\rm {t})}$| from the model-conditional MCMC, as well as (ii) the full likelihoods evaluated at each of those samples |$P(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}}, \boldsymbol{\theta }_{\rm {\mathit{ i}}}^{(\rm {t})})$|, both of which are easily accessible using a cosmological MCMC code such as cobaya (Lewis & Bridle 2002; Lewis 2013; Torrado & Lewis 2021). It is shown in Newton & Raftery (1994) that this estimator converges almost surely to the true |$P(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}})$|. From this and the model priors, we can estimate |$P(M_{\rm {\mathit{ i}}}\vert \boldsymbol{d})$| via equation (5).

We have implemented this method in a publicly available software package called fast-mpc available at https://github.com/simonpara/Fast-MPC. fast-mpc implementation facilitates BMA via the following steps:

Fit candidate models: run two MCMC chains assuming M1 and M2, respectively, yielding |$\lbrace \boldsymbol{\theta }_1\rbrace _{\rm {\mathit{ N}}_1}$|, |$\lbrace \boldsymbol{\theta }_2\rbrace _{\rm {\mathit{ N}}_2}$|, |$\lbrace P(\boldsymbol{d}\vert M_{\rm {1}}, \boldsymbol{\theta }_{\rm {1}})\rbrace _{\rm {\mathit{ N}}_1}$|, and |$\lbrace P(\boldsymbol{d}\vert M_{\rm {2}}, \boldsymbol{\theta }_{\rm {2}})\rbrace _{\rm {\mathit{ N}}_2}$|.

Estimate |${P}(\boldsymbol{d}\vert M_{1})$| and |${P}(\boldsymbol{d}\vert M_{2})$|: compute estimates |$\hat{P}(\boldsymbol{d}\vert M_{1})$| and |$\hat{P}(\boldsymbol{d}\vert M_{2})$| via equation (13).

Estimate model posteriors: substitute estimates |$\hat{P}(\boldsymbol{d}\vert M_{1})$| and |$\hat{P}(\boldsymbol{d}\vert M_{2})$| into equation (5) to estimate P(M i|d).

Estimate marginal (model-averaged) posteriors of |$\boldsymbol{\theta }$|: substitute estimates of P(Mi|d) into equation (7).

Alternatively, RJ-MCMC would instead produce a single MCMC chain spanning multiple models (an example implementation is Eryn1 described in Karnesis et al. 2023). The resulting chain could easily be used to determine model probabilities as for any other parameter, and would not require us to compute a direct estimate of |$P(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}})$|. In practice, however, convergence is a challenge using RJ-MCMC, and fast-mpc allows for a faster and more convenient computation when (1) the number of candidate models is small and (2) an MCMC sample for each model is available (be it from off-the-shelf software or from publicly available data releases). We compare the two approaches in Appendix A, validating that they give consistent answers in a toy example.

4 RESULTS

For our analysis, we adopted the publicly available MCMC sampler for cosmology cobaya2 (Lewis & Bridle 2002; Lewis 2013; Torrado & Lewis 2021); we exploited theory codes camb (Lewis, Challinor & Lasenby 2000; Howlett et al. 2012) and its early quintessence model implementation (Smith, Poulin & Amin 2020). The BMA was performed through fast-mpc with our publicly available code3; it consists of a library of functions for reading-in MCMC samples and computing all the necessary quantities to estimate |$P(M_{\rm {\mathit{ i}}}\vert \boldsymbol{d})$|.

Our data sets included the following observables from CMB, CMB lensing, BAO, and SNIa:

CMB: Planck 2018 CMB TT, TE, and EE likelihood (Planck Collaboration V 2020a) in the high-ℓ multipole range 30 ≤ ℓ ≤ 2508 (TT) and 30 ≤ ℓ ≤ 1996 (TE, EE), and the low-ℓ Commander TT likelihood spanning 2 ≤ ℓ ≤ 29.

Lensing: Planck 2018 reconstructed CMB lensing potential power spectrum (Planck Collaboration VIII 2020c).

BAO: Baryonic acoustic oscillation measurements from the Sloan Digital Sky Survey (SDSS) DR7 main galaxy sample (Ross et al. 2015) at z = 0.15, the 6dF galaxy redshift survey (Beutler et al. 2012) at z = 0.106 and the Baryon Oscillation Spectroscopic Survey (BOSS) DR12 (Alam et al. 2017) at low redshift (LOWZ) and high redshift (CMASS) galaxy samples at z = 0.38, z = 0.51, and z = 0.61, the extended BOSS (eBOSS) galaxies and quasars in 0.6 ≤ z ≤ 2.2, and the Lyman-α forest samples in 1.8 ≤ z ≤ 3.5.

SNIa: 1048 luminosity distance measurements from the Pantheon SNIa light-curves catalogue (Scolnic et al. 2018) and Cepheid measurements in the Large Magellanic Cloud from Riess et al. (2019).

We considered the following data set combinations: CMB, CMB + Lensing + BAO + SN, BAO + BBN, and BAO + BBN + SN. The Planck-free cases include information from BBN in the form of a Gaussian prior of Ωbh2 = 0.022 35 ± 0.000 37 under the assumption of Neff = 3.046. This prior is derived using the empirically estimated cross-section of the deuterium described in Adelberger et al. (2011), with abundance D/H = (2.527 ± 0.030) × 10−5 from the high-resolution spectroscopic measurements of seven quasar absorption systems (Cooke, Pettini & Steidel 2018).

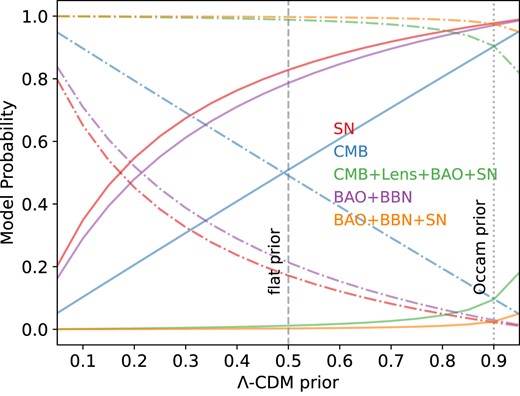

As introduced in Section 3, BMA allows us to compute the posterior probability of our model, given the data and the cosmological parameters, in a Bayesian way, and therefore relies on a prior assumption on model probability as shown in equation (5). We show in Fig. 2 the computed values of |$P(M\vert \boldsymbol{d})$| as a function of the prior assumption on the ΛCDM model [or, equivalently, 1 − P(MEDE)], after removing a conservative burn-in fraction of 0.5. The solid lines are the ΛCDM model probabilities and the dot–dashed lines are the corresponding EDE model probabilities for the different considered data sets. We identified two interesting prior configurations, represented by the grey dotted and dashed lines on the plot:

the flat prior, corresponding to a 50–50 split probability between ΛCDM and EDE, and reflecting the agnostic assumption on which model should be favoured. This is the default prior assumption throughout the paper;

the Occam’s prior, expressing an a priori preference for the ΛCDM model, as it comes with fewer parameters. Note that some observations (such as supernovae samples) are cumulative, or the sample variance is the same (such as the CMB), and hence it is not good practice to form a prior based on data that are also used in the likelihood. We therefore do not include in this prior any previous match between observations and the ΛCDM model, but we include results using this prior as a counter to the flat prior, allowing us to test the sensitivity to the prior. We choose a 90 per cent probability in favour of the ΛCDM cosmological model. As well as explicitly putting in a preference for a simpler model as we do here, we note that BMA also generally favours models with fewer parameters, because they have a smaller volume over which the likelihood is marginalized. Thus, the idea behind Occam’s razor does not only arise in the prior in BMA. For this reason, this prior choice results in strong advocacy for the ΛCDM model and its interpretation should be made carefully. However, the Occam’s prior represents an extreme case supporting the ΛCDM model, and we report results under this assumptions alongside with the standard flat prior for completeness.

Model prior dependence of model posteriors. The models being compared are the ΛCDM model (solid lines) and the EDE model (dot–dashed lines) for the considered data sets: SN, CMB, BAO + BBN, CMB + Lensing + BAO + SN, BAO + BBN + SN. We reported as a grey vertical line the flat prior (dashed) and the Occam’s prior (dotted).

We report the model probabilities under both prior assumptions in Table 1. The ΛCDM model is favoured for both priors for BAO + BBN and SN data sets when considered alone, with a ΛCDM probability of 79 per cent and 83 per cent, respectively, when we adopt a flat model prior – ΛCDM is favoured above 90 per cent under the Occam’s prior. The CMB-only case model probability closely matches the prior probabilities with 51 per cent and 91 per cent ΛCDM model probabilities in the flat prior and Occam’s prior, respectively; a straightforward explanation for this is that the data are not informative about the model choice, and all the information is coming from the prior. On the other hand, we find a strong EDE preference whenever we merge the data sets into CMB + Lensing + BAO + SN and BAO + BBN + SN, with more than 99 per cent EDE model probability under a flat model prior, and, respectively, 96 per cent and 98 per cent for the Occam’s prior. It is not a surprise that the joint data sets BAO + BBN + SN and CMB + Lensing + BAO + SN show a strong preference for EDE; that was precisely the reason EDE was introduced in the first place. These numbers should not be interpreted as one model being right or wrong from an absolute perspective, but rather the probability of one or the other to be favoured by data. That is, our averaged constraints are still conditioned on one of the two models (ΛCDM or EDE) being correct.

Model posteriors as |$[P(\rm {\Lambda CDM}\vert \boldsymbol{d}), \mathit{ P}(\rm {EDE}\vert \boldsymbol{d})]$| for two model prior assumptions: (1) the flat prior is a 50 per cent prior for each model and (2) the Occam’s prior with a 90 per cent prior on ΛCDM versus a 10 per cent prior on EDE.

| . | Model posterior (ΛCDM, EDE) in . | |

|---|---|---|

| . | |$[P(\rm {\Lambda CDM}\vert \boldsymbol{d}), \mathit{ P}(\rm {EDE}\vert \boldsymbol{d})]$| (in per cent) . | |

| Data set . | Flat prior . | Occam’s prior . |

| CMB | [50.9, 49.1] | [90.3, 9.7] |

| CMB + Lensing + BAO + SN | [1.2, 98.8] | [9.5, 90.5] |

| BAO + BBN | [78.7, 21.3] | [97.1, 2.9] |

| BAO + BBN + SN | [0.3, 99.7] | [2.5, 97.5] |

| SN | [82.8, 17.2] | [97.7, 2.3] |

| . | Model posterior (ΛCDM, EDE) in . | |

|---|---|---|

| . | |$[P(\rm {\Lambda CDM}\vert \boldsymbol{d}), \mathit{ P}(\rm {EDE}\vert \boldsymbol{d})]$| (in per cent) . | |

| Data set . | Flat prior . | Occam’s prior . |

| CMB | [50.9, 49.1] | [90.3, 9.7] |

| CMB + Lensing + BAO + SN | [1.2, 98.8] | [9.5, 90.5] |

| BAO + BBN | [78.7, 21.3] | [97.1, 2.9] |

| BAO + BBN + SN | [0.3, 99.7] | [2.5, 97.5] |

| SN | [82.8, 17.2] | [97.7, 2.3] |

Model posteriors as |$[P(\rm {\Lambda CDM}\vert \boldsymbol{d}), \mathit{ P}(\rm {EDE}\vert \boldsymbol{d})]$| for two model prior assumptions: (1) the flat prior is a 50 per cent prior for each model and (2) the Occam’s prior with a 90 per cent prior on ΛCDM versus a 10 per cent prior on EDE.

| . | Model posterior (ΛCDM, EDE) in . | |

|---|---|---|

| . | |$[P(\rm {\Lambda CDM}\vert \boldsymbol{d}), \mathit{ P}(\rm {EDE}\vert \boldsymbol{d})]$| (in per cent) . | |

| Data set . | Flat prior . | Occam’s prior . |

| CMB | [50.9, 49.1] | [90.3, 9.7] |

| CMB + Lensing + BAO + SN | [1.2, 98.8] | [9.5, 90.5] |

| BAO + BBN | [78.7, 21.3] | [97.1, 2.9] |

| BAO + BBN + SN | [0.3, 99.7] | [2.5, 97.5] |

| SN | [82.8, 17.2] | [97.7, 2.3] |

| . | Model posterior (ΛCDM, EDE) in . | |

|---|---|---|

| . | |$[P(\rm {\Lambda CDM}\vert \boldsymbol{d}), \mathit{ P}(\rm {EDE}\vert \boldsymbol{d})]$| (in per cent) . | |

| Data set . | Flat prior . | Occam’s prior . |

| CMB | [50.9, 49.1] | [90.3, 9.7] |

| CMB + Lensing + BAO + SN | [1.2, 98.8] | [9.5, 90.5] |

| BAO + BBN | [78.7, 21.3] | [97.1, 2.9] |

| BAO + BBN + SN | [0.3, 99.7] | [2.5, 97.5] |

| SN | [82.8, 17.2] | [97.7, 2.3] |

A further goal of our study is to incorporate the model uncertainty in the final cosmological parameter estimates. If there are big differences between the credible intervals for a particular parameter conditioned on different models, then we might expect the credible interval to be wider when averaged over models. Thus, BMA has the general ability to reduce tensions by providing wider credible intervals given the model uncertainty. This explains why, for the H0 tension, the marginalized uncertainty tends to mitigate the tension on cosmological parameters, especially on H0, as we find in the following section.

4.1 Model-averaged posteriors

The knowledge of |$P(M\vert \boldsymbol{d})$| allows us to compute the cosmological parameters’ posterior distributions marginalized over the model uncertainty as described in equation (7). For our analysis, we considered the following combination of the ΛCDM base parameters with their flat prior ranges, unless otherwise specified: today’s value of the Hubble parameter H0 ∈ [20, 100], the baryon density parameter |$\Omega _{\rm {b}}\rm {\mathit{ h}}^2\in [0.005,0.1]$| (and a Gaussian prior |$\mathcal {N}(0.002\,35,(0.000\,37)^2)$| when including BBN), the cold dark matter density parameter |$\Omega _{\rm {c}}\rm {\mathit{ h}}^2\in [0.001,0.99]$|, the amplitude of scalar perturbations log(1010As) ∈ [1.61, 3.91], the index of the scalar perturbations ns ∈ [0.8, 1.2], and the optical depth of reionization τ ∈ [0.01, 0.8]. In addition, we sampled the EDE parameters already introduced in Section 2 by keeping n = 3 fixed and adopting the following flat priors: Θi ∈ [0.1, 3.1], fEDE ∈ [0.01, 0.5], and log(10 zEDE) ∈ [3.2, 4.2].

We report in Table 2 the constraints for the six ΛCDM base parameters after marginalizing over the model uncertainty with a flat model prior (top row for each parameter) and the Occam’s model prior (bottom row). We report only the common parameters between the ΛCDM and the EDE models, for those are the only ones influenced by the model uncertainty. For the reader’s reference, we provide the EDE specific model parameters’ constraints in Table 3 as evaluated in our analysis.

Constraints on cosmological parameters marginalized over the model uncertainty with the flat model prior assumption (top row) and the Occam’s prior (bottom row). We report 68 per cent credible intervals for each parameter.

| Parameter . | CMB . | CMB + Lensing + BAO + SN . | BAO + BBN . | BAO + BBN + SN . |

|---|---|---|---|---|

| H0 | |$68.17^{+0.77}_{-1.4}$| | 71.1 ± 1.0 | |$70.3^{+1.4}_{-2.7}$| | 74.0 ± 1.4 |

| |$67.45^{+0.71}_{-0.89}$| | |$71.0^{+1.4}_{-1.0}$| | |$68.8^{+1.6}_{-2.4}$| | 73.9 ± 1.5 | |

| Ωbh2 | |$0.02250^{+0.00017}_{-0.00025}$| | 0.022 84 ± 0.000 22 | 0.022 36 ± 0.000 37 | 0.022 36 ± 0.000 37 |

| |$0.02239^{+0.00014}_{-0.00018}$| | 0.022 83 ± 0.000 23 | 0.022 36 ± 0.000 37 | 0.022 36 ± 0.000 37 | |

| Ωch2 | |$0.1229^{+0.0019}_{-0.0042}$| | 0.1291 ± 0.0039 | |$0.1240^{+0.0098}_{-0.013}$| | 0.1398 ± 0.0089 |

| |$0.1207^{+0.0011}_{-0.0021}$| | |$0.1284^{+0.0051}_{-0.0038}$| | |$0.118^{+0.011}_{-0.013}$| | 0.1397 ± 0.0090 | |

| log(1010As) | 3.050 ± 0.017 | 3.065 ± 0.015 | 3.12 ± 0.13 | 3.08 ± 0.11 |

| 3.046 ± 0.017 | 3.064 ± 0.015 | 3.15 ± 0.13 | 3.08 ± 0.11 | |

| ns | |$0.9700^{+0.0052}_{-0.0089}$| | 0.9865 ± 0.0070 | – | – |

| |$0.9658^{+0.0042}_{-0.0056}$| | |$0.9854^{+0.0085}_{-0.0071}$| | – | – | |

| τ | 0.0549 ± 0.0080 | 0.0579 ± 0.0072 | – | – |

| 0.0544 ± 0.0079 | 0.0580 ± 0.0073 | – | – |

| Parameter . | CMB . | CMB + Lensing + BAO + SN . | BAO + BBN . | BAO + BBN + SN . |

|---|---|---|---|---|

| H0 | |$68.17^{+0.77}_{-1.4}$| | 71.1 ± 1.0 | |$70.3^{+1.4}_{-2.7}$| | 74.0 ± 1.4 |

| |$67.45^{+0.71}_{-0.89}$| | |$71.0^{+1.4}_{-1.0}$| | |$68.8^{+1.6}_{-2.4}$| | 73.9 ± 1.5 | |

| Ωbh2 | |$0.02250^{+0.00017}_{-0.00025}$| | 0.022 84 ± 0.000 22 | 0.022 36 ± 0.000 37 | 0.022 36 ± 0.000 37 |

| |$0.02239^{+0.00014}_{-0.00018}$| | 0.022 83 ± 0.000 23 | 0.022 36 ± 0.000 37 | 0.022 36 ± 0.000 37 | |

| Ωch2 | |$0.1229^{+0.0019}_{-0.0042}$| | 0.1291 ± 0.0039 | |$0.1240^{+0.0098}_{-0.013}$| | 0.1398 ± 0.0089 |

| |$0.1207^{+0.0011}_{-0.0021}$| | |$0.1284^{+0.0051}_{-0.0038}$| | |$0.118^{+0.011}_{-0.013}$| | 0.1397 ± 0.0090 | |

| log(1010As) | 3.050 ± 0.017 | 3.065 ± 0.015 | 3.12 ± 0.13 | 3.08 ± 0.11 |

| 3.046 ± 0.017 | 3.064 ± 0.015 | 3.15 ± 0.13 | 3.08 ± 0.11 | |

| ns | |$0.9700^{+0.0052}_{-0.0089}$| | 0.9865 ± 0.0070 | – | – |

| |$0.9658^{+0.0042}_{-0.0056}$| | |$0.9854^{+0.0085}_{-0.0071}$| | – | – | |

| τ | 0.0549 ± 0.0080 | 0.0579 ± 0.0072 | – | – |

| 0.0544 ± 0.0079 | 0.0580 ± 0.0073 | – | – |

Constraints on cosmological parameters marginalized over the model uncertainty with the flat model prior assumption (top row) and the Occam’s prior (bottom row). We report 68 per cent credible intervals for each parameter.

| Parameter . | CMB . | CMB + Lensing + BAO + SN . | BAO + BBN . | BAO + BBN + SN . |

|---|---|---|---|---|

| H0 | |$68.17^{+0.77}_{-1.4}$| | 71.1 ± 1.0 | |$70.3^{+1.4}_{-2.7}$| | 74.0 ± 1.4 |

| |$67.45^{+0.71}_{-0.89}$| | |$71.0^{+1.4}_{-1.0}$| | |$68.8^{+1.6}_{-2.4}$| | 73.9 ± 1.5 | |

| Ωbh2 | |$0.02250^{+0.00017}_{-0.00025}$| | 0.022 84 ± 0.000 22 | 0.022 36 ± 0.000 37 | 0.022 36 ± 0.000 37 |

| |$0.02239^{+0.00014}_{-0.00018}$| | 0.022 83 ± 0.000 23 | 0.022 36 ± 0.000 37 | 0.022 36 ± 0.000 37 | |

| Ωch2 | |$0.1229^{+0.0019}_{-0.0042}$| | 0.1291 ± 0.0039 | |$0.1240^{+0.0098}_{-0.013}$| | 0.1398 ± 0.0089 |

| |$0.1207^{+0.0011}_{-0.0021}$| | |$0.1284^{+0.0051}_{-0.0038}$| | |$0.118^{+0.011}_{-0.013}$| | 0.1397 ± 0.0090 | |

| log(1010As) | 3.050 ± 0.017 | 3.065 ± 0.015 | 3.12 ± 0.13 | 3.08 ± 0.11 |

| 3.046 ± 0.017 | 3.064 ± 0.015 | 3.15 ± 0.13 | 3.08 ± 0.11 | |

| ns | |$0.9700^{+0.0052}_{-0.0089}$| | 0.9865 ± 0.0070 | – | – |

| |$0.9658^{+0.0042}_{-0.0056}$| | |$0.9854^{+0.0085}_{-0.0071}$| | – | – | |

| τ | 0.0549 ± 0.0080 | 0.0579 ± 0.0072 | – | – |

| 0.0544 ± 0.0079 | 0.0580 ± 0.0073 | – | – |

| Parameter . | CMB . | CMB + Lensing + BAO + SN . | BAO + BBN . | BAO + BBN + SN . |

|---|---|---|---|---|

| H0 | |$68.17^{+0.77}_{-1.4}$| | 71.1 ± 1.0 | |$70.3^{+1.4}_{-2.7}$| | 74.0 ± 1.4 |

| |$67.45^{+0.71}_{-0.89}$| | |$71.0^{+1.4}_{-1.0}$| | |$68.8^{+1.6}_{-2.4}$| | 73.9 ± 1.5 | |

| Ωbh2 | |$0.02250^{+0.00017}_{-0.00025}$| | 0.022 84 ± 0.000 22 | 0.022 36 ± 0.000 37 | 0.022 36 ± 0.000 37 |

| |$0.02239^{+0.00014}_{-0.00018}$| | 0.022 83 ± 0.000 23 | 0.022 36 ± 0.000 37 | 0.022 36 ± 0.000 37 | |

| Ωch2 | |$0.1229^{+0.0019}_{-0.0042}$| | 0.1291 ± 0.0039 | |$0.1240^{+0.0098}_{-0.013}$| | 0.1398 ± 0.0089 |

| |$0.1207^{+0.0011}_{-0.0021}$| | |$0.1284^{+0.0051}_{-0.0038}$| | |$0.118^{+0.011}_{-0.013}$| | 0.1397 ± 0.0090 | |

| log(1010As) | 3.050 ± 0.017 | 3.065 ± 0.015 | 3.12 ± 0.13 | 3.08 ± 0.11 |

| 3.046 ± 0.017 | 3.064 ± 0.015 | 3.15 ± 0.13 | 3.08 ± 0.11 | |

| ns | |$0.9700^{+0.0052}_{-0.0089}$| | 0.9865 ± 0.0070 | – | – |

| |$0.9658^{+0.0042}_{-0.0056}$| | |$0.9854^{+0.0085}_{-0.0071}$| | – | – | |

| τ | 0.0549 ± 0.0080 | 0.0579 ± 0.0072 | – | – |

| 0.0544 ± 0.0079 | 0.0580 ± 0.0073 | – | – |

Constraints on the EDE model parameters from the different data sets taken into consideration in our analysis.

| Data set . | Θi . | fEDE . | log(10 zEDE) . |

|---|---|---|---|

| CMB | |$2.15^{+0.93}_{-0.31}$| | |$0.045^{+0.010}_{-0.034}$| | 3.65 ± 0.21 |

| CMB + Lensing | |$2.58^{+0.35}_{+0.036}$| | |$0.101^{+0.035}_{-0.029}$| | |$3.62^{+0.22}_{-0.15}$| |

| + BAO + SN | |||

| BAO + BBN | |$2.601^{+0.057}_{-0.17}$| | |$0.110^{+0.077}_{-0.10}$| | 3.573 ± 0.085 |

| BAO + BBN | <2.20 | |$0.238^{+0.062}_{-0.083}$| | 3.73 ± 0.29 |

| + SN |

| Data set . | Θi . | fEDE . | log(10 zEDE) . |

|---|---|---|---|

| CMB | |$2.15^{+0.93}_{-0.31}$| | |$0.045^{+0.010}_{-0.034}$| | 3.65 ± 0.21 |

| CMB + Lensing | |$2.58^{+0.35}_{+0.036}$| | |$0.101^{+0.035}_{-0.029}$| | |$3.62^{+0.22}_{-0.15}$| |

| + BAO + SN | |||

| BAO + BBN | |$2.601^{+0.057}_{-0.17}$| | |$0.110^{+0.077}_{-0.10}$| | 3.573 ± 0.085 |

| BAO + BBN | <2.20 | |$0.238^{+0.062}_{-0.083}$| | 3.73 ± 0.29 |

| + SN |

Constraints on the EDE model parameters from the different data sets taken into consideration in our analysis.

| Data set . | Θi . | fEDE . | log(10 zEDE) . |

|---|---|---|---|

| CMB | |$2.15^{+0.93}_{-0.31}$| | |$0.045^{+0.010}_{-0.034}$| | 3.65 ± 0.21 |

| CMB + Lensing | |$2.58^{+0.35}_{+0.036}$| | |$0.101^{+0.035}_{-0.029}$| | |$3.62^{+0.22}_{-0.15}$| |

| + BAO + SN | |||

| BAO + BBN | |$2.601^{+0.057}_{-0.17}$| | |$0.110^{+0.077}_{-0.10}$| | 3.573 ± 0.085 |

| BAO + BBN | <2.20 | |$0.238^{+0.062}_{-0.083}$| | 3.73 ± 0.29 |

| + SN |

| Data set . | Θi . | fEDE . | log(10 zEDE) . |

|---|---|---|---|

| CMB | |$2.15^{+0.93}_{-0.31}$| | |$0.045^{+0.010}_{-0.034}$| | 3.65 ± 0.21 |

| CMB + Lensing | |$2.58^{+0.35}_{+0.036}$| | |$0.101^{+0.035}_{-0.029}$| | |$3.62^{+0.22}_{-0.15}$| |

| + BAO + SN | |||

| BAO + BBN | |$2.601^{+0.057}_{-0.17}$| | |$0.110^{+0.077}_{-0.10}$| | 3.573 ± 0.085 |

| BAO + BBN | <2.20 | |$0.238^{+0.062}_{-0.083}$| | 3.73 ± 0.29 |

| + SN |

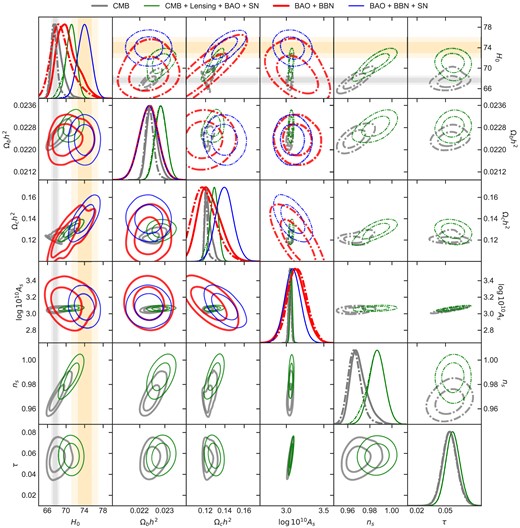

Fig. 3 shows the full posterior probability distributions, after the model uncertainty marginalization, for both the flat and the Occam’s model priors. We shall make a few observations about the impact of the BMA on cosmological parameters’ posteriors. First, the error bars increase with respect to the model-conditional parameter posterior error bars, and it is completely due to the additional model uncertainty considered in the analysis; the impact is more relevant with a flat model prior, especially for those data sets with a lower preference for one specific model, namely CMB and BAO + BBN. Because the EDE model was introduced to solve the Hubble tension, any preference for it using both SN and high-redshift data cannot be interpreted at the same level as if this was a model suggested before the tension became apparent. Ideally, we would therefore like to see a preference for the EDE model from one of other data set, rather than the combination. The results in Fig. 3 show that this is not the case, and the high-redshift data, when analysed on their own do not show a preference for either model. The effect of the Occam’s prior is mostly visible in those very data sets, but the effect is to shrink the parameters’ uncertainties towards those of the model-conditional’s parameter posteriors. Conversely, we notice the opposite behaviour for CMB + Lensing + BAO + SN and BAO + BBN + SN, where the model posterior expresses a strong preference for the EDE model with a flat model prior, whereas the Occam’s prior increases the ΛCDM model probability and therefore broadens the cosmological parameters’ constraints. In the upper-left tile, and throughout the first column/row of the plot, we included the Planck 2018 68 per cent and 95 per cent confidence levels on H0: from Planck Collaboration VI (2020b), we report the TT + EE + TE + low E + Lensing + BAO constraint of H0 = 67.66 ± 0.42, and similarly for SNIa (Riess et al. 2019) we report the value H0 = 74.03 ± 1.42. Both these constraints assume a flat ΛCDM model, and interestingly, the H0 tension is mitigated when accounting for the additional model uncertainty for most of the data set combinations, from ≈1σ in the Planck-only case to ≲1σ in the BAO + BBN case, with a flat model prior. This does not come as a surprise. In fact, we would ideally expect such posterior incompatibility to be even further reduced by accounting for more models; those may include new and different physics or different data modelling.

Comparison of the six ΛCDM parameters marginalized over the model uncertainty with a flat model prior (bottom triangle, solid lines) and the Occam’s prior (upper triangle, dot–dashed lines) from CMB, CMB + Lensing + BAO + SN, BAO + BBN, and BAO + BBN + SN; the parameters shown are the Hubble parameter today H0, the baryon density parameter Ωbh2, the cold dark matter density parameter Ωch2, the scalar perturbation amplitude log(1010As), the scalar perturbation spectral index ns, and the optical depth of reionization τ. For the BAO + BBN and BAO + BBN + SN, the last two parameters are not constrained by the data, and therefore not available in the plot. We included as vertical (horizontal) bands, the constraint (68 per cent and 95 per cent CL) on H0 from Planck 2018 (Planck Collaboration VI 2020b), and from SNIa (Riess et al. 2019), both under the ΛCDM model assumption.

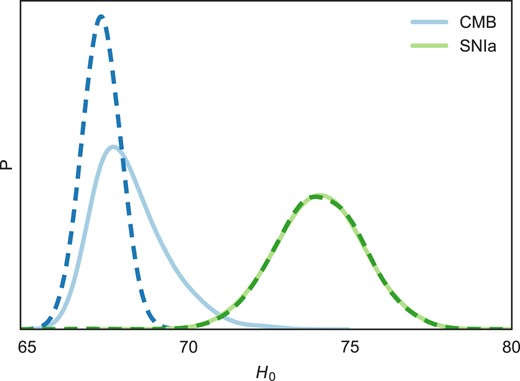

In the context of the H0 tension, we present in Fig. 4 a direct comparison of H0 from CMB and SNIa data sets independently. This is the very original argument leading to the notorious H0 tension. The dashed lines refer to the constraints obtained assuming (conditioning on, in the probabilistic context) the ΛCDM model. Solid lines show the constraints after marginalizing over the model uncertainty by taking ΛCDM and EDE into account. The H0 posterior distribution from CMB broadens, and the parameter estimate changes from 67.29 ± 0.61 in the ΛCDM model to |$68.17^{+0.77}_{-1.4}$| after the marginalization, with a relative increase in the standard deviation of

relieving the H0 tension by ≈1σ. The H0 constraint from SNIa, on the other hand, shows no difference in the final posterior between the conditional and the model marginal case. This is due to the very high ΛCDM model probability of the SN data that is mostly attributed to the insensitivity of SNIa measurements to the EDE parameters. Another way to understand this is that the model selection favours ΛCDM by virtue of the smaller number of model parameters necessary to describe the SNIa data.

Posterior distribution of H0 from CMB and SNIa under the ΛCDM model assumption (dashed line) and after marginalizing over the model uncertainty between ΛCDM and EDE (solid line), assuming a flat model prior.

4.2 Assessing convergence

With any MCMC strategy, it is important to assess convergence in order to ensure that one is sampling from the appropriate target distribution (the posterior). In the RJ-MCMC approach, where the model itself is sampled just like any other parameter, one must also consider convergence of the model choice. By contrast in the within-model strategy, the model is not sampled as part of the MCMC; rather, one would assess convergence for cosmological parameters separately for each model-conditional MCMC according to the Gelman–Rubin criterion on R − 1 (Gelman & Rubin 1992). Once these chains have converged, however, a natural question is whether one has achieved a stable estimate of the model posterior.

Consider an analogous Gelman–Rubin statistic for the collapsed chains:

where |$\sigma ^2_{\rm {BW}}$|4 and |$\sigma ^2_{\rm {WC}}$| refer to the between-chain variance and the within-chain variance of the model posterior probability |$P(M_{\rm {i}}\vert \boldsymbol{d})$|, respectively. The latter is actually to be intended, in our context, as the collapsed within-chain variance if we stack the ith chains from each MCMC conditional run in order to compute the model posterior probability. Moreover, there is a further complication with the evaluation of the within-chain variance of the model probability. Because equation (13) is based on the harmonic mean, to estimate the within-chain variance, we use a second-order Taylor expansion of |$P(M_{\rm {i}}\vert \boldsymbol{d})$| described in Appendix B.

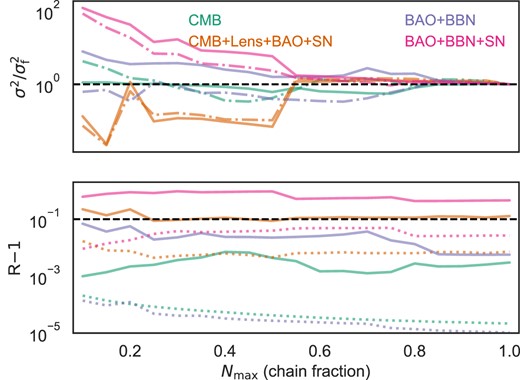

The bottom panel of Fig. 5 shows R − 1 for the considered data sets as a function of the fraction of the overall number of samples.. A good rule of thumb is to consider the chains converged if R − 1 < 0.1 (or equivalently R < 1.1; Gelman & Rubin 1992). Our analysis shows that the CMB and BAO + BBN chains achieved the required convergence already with 10 per cent of samples under the flat-prior assumption, and the CMB + Lensing + BAO + SN with |${\sim} 30~{{\ \rm per\ cent}}$|, whereas BAO + BBN + SN require longer chains, |${\sim} 50~{{\ \rm per\ cent}}$|, to get R − 1 ∼ 0.1. On the other hand, the Occam’s prior assumption shows a more robust convergence, easily understood since we are casting the ΛCDM model with a 90 per cent prior probability, and therefore most of the information is already in the model-conditional chains for which convergence has already been assessed and achieved.

In the bottom panel, R − 1 for the considered data sets as a function of the fraction of the overall number of samples. The solid line refers to the flat model prior, the dotted line the Occam’s prior; we plot R − 1 = 0.1 with black dashed lines. On the top panel, the between-chain variance (solid line) and the within-chain variance (dashed) as a function of the overall number of samples, normalized by the value computed for the full chain length |$\sigma ^2_{\rm {f}}$|. The horizontal dashed lines refer to unitary value for the variance ratio.

The top panel of Fig. 5 shows the individual variance contributions to the computation of R, namely the between-chain variance (solid lines) and the collapsed within-chain variance (dashed lines) – referring to collapsed couples of model-conditional chains – as functions of the fraction of the total chain length and normalized for the value at the full chain length. This illustration can be very helpful to understand the behaviour of the variance of the model posterior distribution that as already mentioned in this section, does not necessarily converge along with the within-model parameters’ posteriors. In fact, we notice that it becomes stable for an overall chain length fraction of ≳0.5−0.6, for which the within-model parameters are still not converged.

5 CONCLUSIONS

We have presented an efficient BMA algorithm tailored for cosmological analyses called fast-mpc. We have used this to investigate the pressing issue of the Hubble constant tension (H0 tension) within the framework of modern cosmology. Our work has demonstrated the power of BMA to decide between models in a clear and easy to understand way, while providing a robust and accurate characterization of the Universe’s fundamental properties, by providing parameter constraints that marginalize over multiple models.

Through the application of fast-mpc to a diverse set of cosmological data sets, including CMB, BAO, and SNIa observations, we have considered the EDE solution to the Hubble tension in the Bayesian framework. Any Bayesian comparison between models must include a prior, as with any other parameter, and BMA makes these priors explicit. By adopting a Bayesian perspective that incorporates model uncertainty, we have performed a more general cosmological parameter estimation, ultimately yielding more general constraints on cosmological parameters that allow EDE as a possible, but not the only, solution. We found that we cannot distinguish between the two models using CMB data alone, and therefore the additional model uncertainty leads to an |${\sim} 92~{{\ \rm per\ cent}}$| larger credible interval for H0 when a flat model prior is applied. On the other hand, BAO + BBN and SNIa data sets show a preference for the ΛCDM model, with model uncertainty contributions to the overall H0 posterior width of |${\sim} 155~{{\ \rm per\ cent}}$| for the former, and null for the latter, for a flat model prior. Similarly, we found that the combined data sets CMB + lensing + BAO + SN and BAO + BBN + SN show a |${\gtrsim} 90~{{\ \rm per\ cent}}$| EDE model probability for both flat and Occam’s priors, with an uncertainty increase in H0 of |${\sim} 210~{{\ \rm per\ cent}}$| for CMB + lensing + BAO + BBN and |${\sim} 84~{{\ \rm per\ cent}}$| for BAO + BBN + SN.

Our findings underscore the broader potential of BMA as a valuable tool for cosmological investigations. This work highlights the necessity of considering a diverse range of cosmological models and the importance of quantifying the associated uncertainties. Though the potential for BMA in cosmology has been acknowledged elsewhere (see Parkinson & Liddle 2013), uptake has been slow, possibly due to the computational challenges of between-model sampling strategies like RJ-MCMC. By contrast, the simple computational approach presented here – which involves only post-hoc computation of standard model-conditional MCMC chains – has the potential to make BMA accessible in a wide array of problems, including large-scale cosmological analyses.

In an era of precision cosmology where ever more precise measurements are continually pushing the boundaries of our understanding, the fast-mpc algorithm offers a robust and adaptable approach for model selection, accommodating the complexity of modern cosmological data sets. As we continue to refine our understanding of the Universe, BMA stands ready to contribute to more comprehensive and insightful cosmological investigations in the future.

ACKNOWLEDGEMENTS

All authors acknowledge support from the Canadian Government through a New Frontiers in Research Fund (NFRF) Exploration grant. WJP acknowledges the support of the Natural Sciences and Engineering Research Council of Canada (NSERC) (funding reference number RGPIN-2019-03908) and from the Canadian Space Agency. GM acknowledges the support of the Natural Sciences and Engineering Research Council of Canada (NSERC) (RGPIN-2022-03068 and DGECR-2022-004433). Research at Perimeter Institute was supported in part by the Government of Canada through the Department of Innovation, Science and Economic Development Canada and by the Province of Ontario through the Ministry of Colleges and Universities. This research was enabled in part by support provided by Compute Ontario (computeontario) and the Digital Research Alliance of Canada (alliancecan).

DATA AVAILABILITY

The fast-mpc code along with the plotting script used to generate the figures in this paper is publicly available at https://github.com/simonpara/Fast-MPC. All the data we used in this work are delivered within the MCMC sampler cobaya, at https://github.com/CobayaSampler/cobaya.

Footnotes

It follows the usual definition: |$\sigma ^2_{\rm {BW}}=\frac{1}{\rm {\mathit{ J}}-1}\sum _{\rm {\mathit{ i}}=1}^{\rm {J}}(\bar{x}_{\rm {\mathit{ i}}}-\langle \bar{x}_{\rm {\mathit{ i}}}\rangle)^2$|, where |$\bar{x_{\rm {i}}}$| is the parameter’s average for the ith chain, J is the number of chains, and |$\langle \bar{x}_{\rm {\mathit{ i}}}\rangle$| is the average between all the chains.

References

APPENDIX A: MODEL-CONDITIONAL CHAINS WEIGHTING VALIDATION THROUGH A TOY MODEL

As explained in Section 3, our fast model posterior computation approach (fast-mpc) should achieve comparable results to an RJ-MCMC routine. To demonstrate this agreement, we present a simple example with two models. We perform BMA both with the fast-mpc method and with an RJ-MCMC and compare the results from the two analyses, and we find that the methods agree to within 3.5 per cent.

The simple problem we choose is set up as follows: Model 1 (M1) is a horizontal line y(x) = m and Model 2 (M2) is the line y(x) = x + m. The single parameter to be sampled in the MCMC, y(0) = m, is constrained by the data set consisting of two data points {(0, 0), (1, 0)}, with independent errors distributed normally with a variance of 0.5. We consider a flat model prior where each model is initially assumed to be equally likely; that is, P(Mi) = 0.5 for i = 1, 2.

We first fit each candidate model separately, running four model-conditional chains per model via standard MCMC sampler. For each chain, a preliminary set of 1000 samples is collected in order to compute the covariance of the parameters. This covariance is used as the covariance of the multivariate normal proposal distribution inside the MCMC sampler for the main conditional chains, which runs over 10 000 samples. We then use the fast-mpc methodology outlined in Section 3 to compute the model posteriors from the set of conditional chains.

Next, we run the RJ-MCMC chains, similarly computing the covariance for each model with 1000 initial samples and running the main chains over 10 000 samples. An RJ-MCMC sampler essentially builds a single chain from parameter samples from all the models it considers. In contrast to a classical MCMC, at each link in the chain the RJ-MCMC chooses to either accept a new point within the model it is currently in, accept a new point in another model that is more probable, or remain at its current location; in other words, the model choice is itself a parameter to be sampled. In this way, the RJ-MCMC will (after converging) choose to spend its time in each model according to the posterior probability of that model. Thus, to retrieve the model posteriors from an RJ-MCMC chain, one simply counts the number of iterations the sampler spent in each model. If the number of iterations in Model 1 and Model 2 are denoted N1 and N2, respectively, the calculation for the model posteriors is straightforward:

where K is the total number of models being compared (K = 2 in this example). The resulting model posteriors from both BMA methods are reported in Table A1.

Model posteriors retrieved for the toy model by fast-mpc and the RJ-MCMC chains. The posteriors are reported as [M1, M2]. These results were retrieved using 40 per cent burn-in for the model-conditional chains.

| Methodology . | Model posteriors . |

|---|---|

| fast-mpc | |$[57\,\mathrm{ per}\,\mathrm{ cent},43\,\mathrm{ per}\,\mathrm{ cent}]$| |

| RJ-MCMC | |$[58\,\mathrm{ per}\,\mathrm{ cent},42\,\mathrm{ per}\,\mathrm{ cent}]$| |

| Methodology . | Model posteriors . |

|---|---|

| fast-mpc | |$[57\,\mathrm{ per}\,\mathrm{ cent},43\,\mathrm{ per}\,\mathrm{ cent}]$| |

| RJ-MCMC | |$[58\,\mathrm{ per}\,\mathrm{ cent},42\,\mathrm{ per}\,\mathrm{ cent}]$| |

Model posteriors retrieved for the toy model by fast-mpc and the RJ-MCMC chains. The posteriors are reported as [M1, M2]. These results were retrieved using 40 per cent burn-in for the model-conditional chains.

| Methodology . | Model posteriors . |

|---|---|

| fast-mpc | |$[57\,\mathrm{ per}\,\mathrm{ cent},43\,\mathrm{ per}\,\mathrm{ cent}]$| |

| RJ-MCMC | |$[58\,\mathrm{ per}\,\mathrm{ cent},42\,\mathrm{ per}\,\mathrm{ cent}]$| |

| Methodology . | Model posteriors . |

|---|---|

| fast-mpc | |$[57\,\mathrm{ per}\,\mathrm{ cent},43\,\mathrm{ per}\,\mathrm{ cent}]$| |

| RJ-MCMC | |$[58\,\mathrm{ per}\,\mathrm{ cent},42\,\mathrm{ per}\,\mathrm{ cent}]$| |

To assess the agreement of the model posterior results, we ran 100 model-conditional chains – 50 for each model – and calculate the average model posteriors across the 50 sets of chains with the fast-mpc method. We also compute the average model posteriors for 50 RJ-MCMC chains. Comparing these averages, we find that the average model posteriors agree with each other within their standard errors. The average values and their errors are reported in Table A2.

Average model posteriors computed over 50 chains in each method and their standard errors, showing the agreement between the results of the fast-mpc and RJ-MCMC methods for model posterior computation. The model-conditional chains were all analysed with 40 per cent burn-in removed.

| Methodology . | M1 . | M2 . |

|---|---|---|

| fast-mpc | (59 ± 1) per cent | (41 ± 1) per cent |

| RJ-MCMC | (57.9 ± 0.3) per cent | (42.1 ± 0.3) per cent |

| Methodology . | M1 . | M2 . |

|---|---|---|

| fast-mpc | (59 ± 1) per cent | (41 ± 1) per cent |

| RJ-MCMC | (57.9 ± 0.3) per cent | (42.1 ± 0.3) per cent |

Average model posteriors computed over 50 chains in each method and their standard errors, showing the agreement between the results of the fast-mpc and RJ-MCMC methods for model posterior computation. The model-conditional chains were all analysed with 40 per cent burn-in removed.

| Methodology . | M1 . | M2 . |

|---|---|---|

| fast-mpc | (59 ± 1) per cent | (41 ± 1) per cent |

| RJ-MCMC | (57.9 ± 0.3) per cent | (42.1 ± 0.3) per cent |

| Methodology . | M1 . | M2 . |

|---|---|---|

| fast-mpc | (59 ± 1) per cent | (41 ± 1) per cent |

| RJ-MCMC | (57.9 ± 0.3) per cent | (42.1 ± 0.3) per cent |

The toy model simulation successfully demonstrates that the fast-mpc method for computing model posteriors is comparable in performance to the RJ-MCMC method for models that have similar posterior probabilities, and can be used to accurately compare a small number of models without the need for a more complicated RJ-MCMC infrastructure.

APPENDIX B: MODEL POSTERIOR FOR COLLAPSED WITHIN-CHAIN VARIANCE

The Gelman–Rubin statistic is a valuable tool in Bayesian statistics and MCMC methods to assess convergence of multiple chains in MCMC simulations. Its primary purpose is to determine whether these chains have reached a stationary distribution and, thus, provide reliable estimates of posterior distributions. In effect, MCMC techniques are employed to sample from complex probability distributions, particularly when analytical solutions are not available. Multiple chains are run in parallel, each starting from different initial values. The goal is to ensure that these chains converge to the same stationary distribution, which indicates that the MCMC algorithm has sufficiently explored the parameter space. In order to assess the convergence of chains, the Gelman–Rubin statistic, expressed through the parameter R, quantifies the ratio of the between-chain variance to the within-chain variance. When chains have not yet converged, the between-chain variance is expected to overestimate the converged chain variance; conversely, the within-chain variance is expected to underestimate it. As already introduced in Section 4, we adopt an analogous Gelman–Rubin statistic to assess the stability of the model posterior estimate. The within-chain variance is computed by stacking chains from individual model-conditional MCMC runs. This means that we are actually computing the within-chain variance from a pair of collapsed chains from two different runs, and will refer to this quantity as the collapsed within-chain variance.

A general prescription to compute R is as follows:

compute the between-chain variance |$\sigma ^2_{\rm {BC}}$| using the mean of all chains and the variance of the chain means;

calculate the within-chain variance |$\sigma ^2_{\rm {WC}}$|;

plug |$\sigma ^2_{\rm {BC}}$| and |$\sigma ^2_{\rm {WC}}$| into equation (15) to compute R.

For the purposes of comparing between-to within-chain variance, we treat the data |$\boldsymbol{d}$| as fixed.

As anticipated, the computation of (ii) does not come straightforwardly in fast-mpc. We describe here how to compute the collapsed within-chain variance for the model posterior |$P(M_{\rm {\mathit{ i}}}\vert \boldsymbol{d})$| defined in equation (5). The estimator |$H = \hat{P}(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}})$| introduced in equation (13) is the harmonic mean of the conditional likelihoods |$X_{\rm {\mathit{ t}}}=P(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}}, \boldsymbol{\theta }_{\rm {\mathit{ i}}}^{(\rm {t})})$| for the model M i.

It is sometimes more convenient to consider the reciprocal of H:

where we kept the notation introduced in the main text. Let us therefore define the quantities

where R stands for reciprocal.

Using the central limit theorem, provided that in our case |$X_{\rm {t}}^{-1}$| has finite variance we can demonstrate that

and using the delta method for a function g(x) = x−1 we obtain the useful result:

which in our case gives

In our analysis, H = H(M i) for |$\rm {\mathit{ i}}=1,2$|, and we want to estimate the variance of |$P(M_{\rm {\mathit{ i}}}\vert \boldsymbol{d})$|. Without loss of generality, consider i = 1:

where we used the first-order Taylor expansion of 1/x ≈ 1 − (x − 1) applied to the definition of |$P(M_{\rm {i}}\vert \boldsymbol{d})$| given in equation (5). The first-order Taylor expansion of the distribution |$f(M_1,M_2) = 1-\frac{\hat{P}(\boldsymbol{d}\vert M_{\rm {2}})}{\hat{P}(\boldsymbol{d}\vert M_{\rm {1}})}$| around

provides an estimator of equation (B7):

where we neglected the covariance term by means of the independence of estimator |$\hat{P}(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}})$| for different i. That is, treating |$\boldsymbol{d}$| as fixed, the covariance term is equal to zero by the independence of model-conditional chains whose samples are separately used to compute |$\hat{P}(\boldsymbol{d}\vert M_{\rm {\mathit{ i}}})$|, i = 1, 2. Note that |$\boldsymbol{d}$| is treated as fixed (i.e. not random) in the calculation of between-chain variance because all chains use the same data. In order for the within-chain variance to be comparable to the between-chain variance, it too should treat |$\boldsymbol{d}$| as fixed. This means the randomness in |$\hat{P}(\boldsymbol{d}\vert M_i)$| is attributed to the parameters randomly sampled in different chains. Since each chain is run independently, the samples from different chains are also independent.

Equation (B9) can be conveniently evaluated from the quantities μi and |$\sigma ^2(\boldsymbol{d}\vert P(M_{\rm {\mathit{ i}}}))$| defined by equations (13) and (B6), respectively. Using this formula, we can determine the within-chain variance, and compare against the between-chain variance to assess estimation stability for the fast-mpc method.