-

PDF

- Split View

-

Views

-

Cite

Cite

J D Cohn, Nicholas Battaglia, Multiwavelength cluster mass estimates and machine learning, Monthly Notices of the Royal Astronomical Society, Volume 491, Issue 2, January 2020, Pages 1575–1584, https://doi.org/10.1093/mnras/stz3087

Close - Share Icon Share

ABSTRACT

One emerging application of machine learning methods is the inference of galaxy cluster masses. In this note, machine learning is used to directly combine five simulated multiwavelength measurements in order to find cluster masses. This is in contrast to finding mass estimates for each observable, normally by using a scaling relation, and then combining these scaling law based mass estimates using a likelihood. We also illustrate how the contributions of each observable to the accuracy of the resulting mass measurement can be compared via model-agnostic Importance Permutation values. Thirdly, as machine learning relies upon the accuracy of the training set in capturing observables, their correlations, and the observational selection function, and as the machine learning training set originates from simulations, two tests of whether a simulation’s correlations are consistent with observations are suggested and explored as well.

1 INTRODUCTION

Galaxy clusters are the most massive bound structures in the Universe and are often complex. This is in part due to their tendencies to be triaxial, to lie in an anisotropic environment at intersections (nodes) of the cosmic web, and to be recently formed. A cluster’s host halo can be characterized by its mass, which characterizes many properties of the gas, galaxies and other quantities within it (for a recent review, see Wechsler & Tinker 2018). Clustering with other haloes is also most affected by a cluster’s mass (Kaiser 1984; Cole & Kaiser 1988; Efstathiou et al. 1988; Mo & White 1996), although there are secondary (‘assembly bias’) effects that are also under study, both for clustering and for other cluster properties, such as number of galaxies within. There also is interest in cluster masses due to the sensitivity of the cluster mass distribution to cosmological parameters (e.g. Voit 2005; Allen et al. 2011; Borgani & Kravtsov 2011).

A particular cluster boundary definition specifies a cluster’s halo and its mass corresponding to the particular definition. In a simulation, a definition of where a cluster halo begins and where its environment ends (given the physics included in the simulation) can be precisely applied to find the mass, especially using the available three-dimensional spatial and velocity information. Observationally, it is much harder to disentangle a cluster from its surroundings and from direction-dependent effects, and to account for confounding dynamical effects. For example, cluster mass can be indicated by the number of galaxies the cluster hosts (richness), but galaxies are observed in redshift space and thus can lie several Mpc from the cluster but appear to be within it. The hot cluster gas scatters cosmic microwave background (CMB) photons en route to us via the Sunyaev–Zel’dovich effect (Sunyaev & Zel’dovich 1972), effectively leaving a shadow on the CMB, but other mass along the line of sight to the cluster can also scatter these photons and thus modify the signal. Weak lensing shear captures all the mass in the cluster, but only as part of capturing all the mass between the galaxy being sheared (located behind the cluster) and us (in front of the cluster). Although X-ray measurements tend to only track the hot gas within the cluster, the dynamical state of the gas can significantly modify estimates, and even for X-ray, projection effects can contaminate the sample (e.g. Ramon-Ceja et al. 2019). There is also a suggested dynamic cluster ‘edge’ definition based upon velocities and positions of galaxies in a cluster, via their splashback (e.g. Adhikari et al. 2014; More et al. 2015) or backsplash (e.g. Gill et al. 2005) radius. These dynamics are used in the caustics (Rines et al. 2003) method to find cluster masses, which has scatters due to interlopers and line-of-sight variations in velocity dispersions (e.g. Gifford et al. 2013).

Multiwavelength measurements of clusters can be combined to get a more complete picture of any given individual cluster or of a distribution of clusters. Often, the route for determining a cluster’s mass is to find a mass–observable relation for each cluster property, separately, e.g. galaxy richness or SZ flux. These fits (usually power-law scaling relations) convert observables into mass estimates. For multiwavelength measurements, these power-law–based mass estimates plus their estimated errors are combined in a likelihood to estimate the underlying cluster mass (see Allen et al. 2011 for a detailed review and discussion). However, as the scatter between the true and estimated mass for each observable is often due to the cluster’s triaxiality, anisotropic environment and other physical cluster properties, the mass estimate errors using different observables can be correlated. These mass error correlations should be represented faithfully in the analysis, or they can impose a bias (see e.g. Rykoff et al. 2008; Stanek et al. 2010; White et al. 2010; Allen et al. 2011; Angulo et al. 2012; Shirasaki et al. 2016 for more details and examples of correlations). Correlations are also possible between the scatter in the observable used to select the clusters and observables used to measure the cluster masses. To summarize, observables are each converted to cluster mass via an observable specific scaling relation (usually a power law), and then combined using correlations whose form is either marginalized over or estimated from simulations.

In this note, machine learning is suggested as a method to obtain multiwavelength mass estimates of a cluster directly from the observational quantities, skipping the intermediate step of matching observations to average relations for mass (of a predetermined form, i.e. scaling relations, plus estimating their correlated mass errors) and instead going straight to the mass prediction of the combined observations. One reason one might expect this approach to be successful is that scatter around the true mass for multiwavelength observations can be analysed using principal component analysis (Noh & Cohn 2012), where it can be seen that the leading contribution to the scatter for each observable tends to be occur in a certain combination with that of other observables.

This suggested approach follows the philosophy of Ho et al. (2019) and earlier papers (Ntampaka et al. 2015, 2016, 2017). There, galaxy cluster masses are estimated by using cluster galaxy velocities, but instead of turning all the cluster velocities into a single velocity dispersion and mapping the dispersion into a mass using a mean relation (approximate fit), the individual velocities are given to a machine learning algorithm to encode the more detailed relations between the different galaxy velocities, their angular positions, and the cluster final mass. See also the excellent paper by Armitage et al. (2019), which combines, with no measurement errors (but fixed length cylinders to include some projection effects), several X-ray, SZ, galaxy angular position, velocity dispersion, and count properties to obtain machine learning cluster masses. They consider a large range of features and include the gradient boost method as we do, below, but not the other two random forest related methods, which we found had better success. Amongst the methods they tested, they found the most success with ordinary linear regression and variants of ridge regression.

Here, using a simulation which has several different multiwavelength mock cluster observations, machine learning is used to

calculate the mass directly from the combination of the different multiwavelength observables, without going through a mass estimate for each,

compare subsets of multiwavelength measurements to each other to identify which combinations give the smallest scatters and biases in predicting mass (within the measurement approximations which are made in the simulation), and

test for whether a given simulation correctly captures correlations between different observables (again, within the context of a specific mass–observable relation given by the simulation).

Having all multiwavelength mock observations made on the same simulation guarantees a shared model for all the observables which are being compared. However, this suggested approach is also limited in its usefulness by the accuracy of the simulation (and how well this accuracy is understood); hence, the interest in identifying tests for correlations such as are discussed below. This accuracy is also important for the usual scaling relations method, if a simulation is used for calibrating the scaling relation.

Again, using the various observations together in a machine learning algorithm is to be contrasted to mapping each individual observable’s measurement to mass via a pre-determined scaling relation plus scatter (from a simulation) and then combining probabilities. The hope is that the simultaneous fit might include more information from the relations between the multiwavelength measurements and thus improve the accuracy. In Section 2, the simulation measurements and machine learning methods are described. In Section 3.1, mass estimates are made and errors are characterized. In Section 3.3, a few ways of testing a simulation relative to observations are suggested and explored with a simulation (relative to a simulation with shuffled observables, i.e. with artificially suppressed correlations). Section 4 concludes.

2 METHODS AND DATA

We use a dark matter only simulation in a periodic box of side 200 Mpc h−1 with 15003 particles, evolved using the TREEPM (White 2002) code, and provided by Martin White. Cluster observables are assigned as in White et al. (2010, hereafter WCS), which can be consulted for details beyond those found below. Although the sample used for analysis in WCS itself has a slightly larger box with higher resolution, its cluster measurements did not extend to low enough mass to reasonably expect completeness for some cuts considered here. Combining the two samples did not seem appropriate, given their different resolutions and cosmologies.

The simulation background cosmological parameters are h = 0.72, n = 0.97, Ωm = 0.25, and σ8 = 0.8, slightly off from the best-estimated current cosmology (Planck collaboration 2016), but close enough for the concerns of interest in this note. There are outputs at 45 times equally spaced in ln (a) from z = 10 to 0. Haloes are found using a friends-of-friends (FoF) halo finder (Davis et al. 1985), with linking length b = 0.168 times the mean interparticle spacing. Masses quoted below are FoF masses. As the box is fairly small, only z = 0.1 is considered, with 117 haloes with |$M\gt 10^{14}\, h^{-1} \, \mathrm{M}_\odot$| and 339 haloes with |$M\gt 5 \times 10^{13}\, h^{-1} \, \mathrm{M}_\odot$|. Mock observations are made of five observables. For each observation, the cluster is placed at the centre of the box. To get more statistics and to explore the effect of anisotropy, triaxiality, and their correlations, each cluster is ‘observed’ via all five methods below, from 96 different isotropically distributed directions on a sphere. The methods are as follows:

Nred: Red galaxy richness based on the older MaxBCG mass estimator Koester et al. (2007); colours are assigned using Skibba & Sheth (2009), with redshift evolution as detailed in appendix B2 of WCS.

MF: Richness from matched filter based upon Yang et al. (2007). This gives each galaxy at position (R, Δr∥) from the cluster centre a weight made up of a projected NFW halo profile (Navarro et al. 1997) in the plane of the sky, times a Gaussian in Δr∥ along the line of sight, with the galaxy kept if this weight exceeds a threshold value of 10. The details are in equations (7)–(11) of Yang et al. (2007), with redshift Δz replaced with real space Δr∥, and H0 replaced with H. The matched filter dispersion and characteristic radius are based on the true halo mass.

vel: Velocity dispersions, calculated using the method described in detail in section 5.1 in WCS.

Yint: Sunyaev–Zel’dovich decrement y (Sunyaev & Zel’dovich 1972). Each particle is given the mean temperature associated with the velocity dispersion of its host halo. For each halo centre, the integration of the SZ signal is done within a disc of radius r180b perpendicular to the line of sight, through the entire box along the line of sight [apodized as in WCS (equation 1) to avoid a sharp boundary cut-off].

WL shear: Fit to a dispersion σζ using the ζ statistic and a singular isothermal sphere profile. These are described in section 3.3 of WCS. Roughly, ζ (WCS, equation 2) corresponds to subtracting the projected surface density in an annulus from that of a disc to decrease the contributions from material uncorrelated with the cluster. Then ζ is converted to |$\sigma _\zeta ^2$| by multiplying factors drawn from assuming some particular cluster profile, equation 3 in WCS. An isothermal sphere was used; similar results were obtained, as profiles and annuli parameters were varied (see WCS and the other papers referenced above for further detail on these techniques).

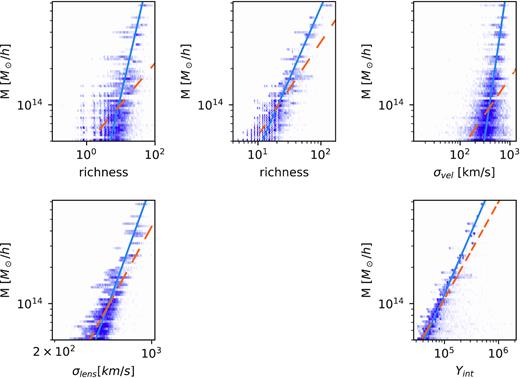

The distribution of the resulting observables as a function of mass is shown in Fig. 1. Lines are fit either using a least-squares fit (dashed line) or binning in 20 log Mtrue bins and finding the median observable in each and fitting to those, dropping bins with no clusters in them. These are meant to be mock observations, that is, relying primarily only on information available to an observer (rather than the three-dimensional velocities and positions, exact gas content, etc., which a simulator might be able to access). However, some of the mock observations have advantages over real sky observations: using true r180b and not including any survey-dependent residuals from foreground cleaning (SZ), using real space along the redshift direction and true halo mass matched filter dependence (MF), and neglecting survey dependent photometric redshift magnitude and shape errors and galaxy shape errors (WL shear). Also, the MaxBCG richness is not in standard use anymore; however, its replacement in several new and upcoming large surveys (redmapper, Rykoff et al. 2014) does not yet have a halo model or other description that would allow its richness prediction to be reliably calculated for this sample. [There are many other richness estimators possible, see Old et al. (2015) for a comparison of several of them, applied to two blinded data sets.]

Distribution of each observable for all the lines of sight of all haloes with |$M\gt 5 \times 10^{13}\, h^{-1} \, \mathrm{M}_\odot$| in the box. Solid lines in each log–log plots show scaling relations found by binning each observable in 20 bins of log mass, and then doing a least-squares fit, while dashed lines correspond to a direct least-squares fit.

For machine learning, for each mock multiwavelength observation, the input variables are the five observables (Nred, MF, vel, Yint, WL shear) for each cluster, along a single line of sight. The output is the true mass log10Mtrue for the training set, and the mass prediction log10Mpred for the testing set. The training set is a random selection of the set of cluster observations (1/10 of the sample), with testing done on the other 9/10. The machine learning programs used are GradientBoostingRegressor, ExtraTreesRegressor and RandomForestRegressor from sklearn in python.

The exact calls were as follows:

GradientBoostingRegressor(loss=’ls’, learning_rate=0.1, n_estimators=100, subsample=1.0, criterion=’friedman_mse’, min_samples_split=2, min_samples_leaf=1, min_weight_fraction_leaf=0.0, max_depth=3, min_impurity_split=None, init=None, random_state=None, max_features=None, alpha=0.9, verbose=0, max_leaf_nodes=None, warm_start=False, presort=’auto’)

ExtraTreesRegressor(n_estimators=700, min_samples_split=5, n_jobs=-1)

RandomForestRegressor(n_estimators=300, n_jobs=-1, min_samples_split=5)

The GradientBoostingRegressor uses default values for the arguments, while ExtraTreesRegressor and RandomForestRegressor follow Kamdar et al. (2016a), Kamdar et al. (2016b) for parameters in the function calls.

There are several detailed descriptions of these methods in the literature, in particular Breiman (2001) and Geurts et al. (2006) for Random Forests and Extra Trees [see also the descriptions for astronomers in Kamdar et al. (2016a), sections 2.4.2 and 2.4.3] and Friedman (2001) for Gradient Boosts. The books by Hastie et al. (2009) and Geron (2017) have pedagogical approaches, with the latter oriented around scikit.learn in particular.

Heuristically, Random Forests and Extra Trees create regression trees based upon a training sample, splitting the sample according to one feature at a time until some condition is met. Both methods choose the feature for each subsequent split by taking a random subset of the features (in the case here, the features are the five observables, Nred, MF, vel, Yint, and WL shear) and testing possible bifurcations, one for each of the features in the random feature subset. The ‘best’ bifurcation is the one which roughly has the least variance, where variance is calculated with respect to the mean in each branch, over all the elements in each branch. After applying this ‘best’ bifurcation, if the stopping condition is not met, the process repeats to create the next bifurcation of the tree. Both methods create an ensemble of trees and combine the results to reduce overfitting and improve accuracy.

The big differences between the Random Forests and Extra Trees are that the training set is used differently and the bifurcations at each step in the regression tree are chosen differently. In Random Forests, the full training set is not used for every tree in the ensemble. Instead, a bootstrap sample of the training set is used, with a different bootstrap sample for each tree that is in the combined final ensemble. Secondly, when the bifurcations are made, the ‘best’ bifurcation is found separately for each feature (with ‘best’ as above), and then the resulting best bifurcations of each feature are intercompared. The best of the best bifurcations is chosen to make the next tree bifurcation. For Extra Trees, instead, the whole training set is used to train each tree. Secondly, for bifurcations, a random bifurcation is chosen for each of the features being compared. These random bifurcations of each feature are compared to each other (rather than the best bifurcations of each feature in the Random Forest), but again, the ‘best’ one is taken. The Extra Trees method is much faster, as the ‘best’ bifurcation is not first found for each feature separately, before comparing the separate proposed feature bifurcations to each other. It is not always evident which one will do a better job on a given problem until they are both tried.1

The gradient boost method uses an ensemble of trees, as well, but instead of taking a tree ensemble with different trees having different random training samples or bifurcations, it creates a sequence of trees, where each tree fits the negative gradient of the loss function of the previous tree. The loss function chosen here is least squares.

These particular methods were chosen due to their familiarity for the authors; an exhaustive comparison of all the methods available in scikit.learn (Pedregosa et al. 2011) or elsewhere, or possibilities with custom made ML codes, was not undertaken. A more extensive comparison and exploration would be interesting to do for a machine learning study, given the initial success with these three ‘out-of-the-box’ methods.

3 RESULTS

3.1 Predicting mass

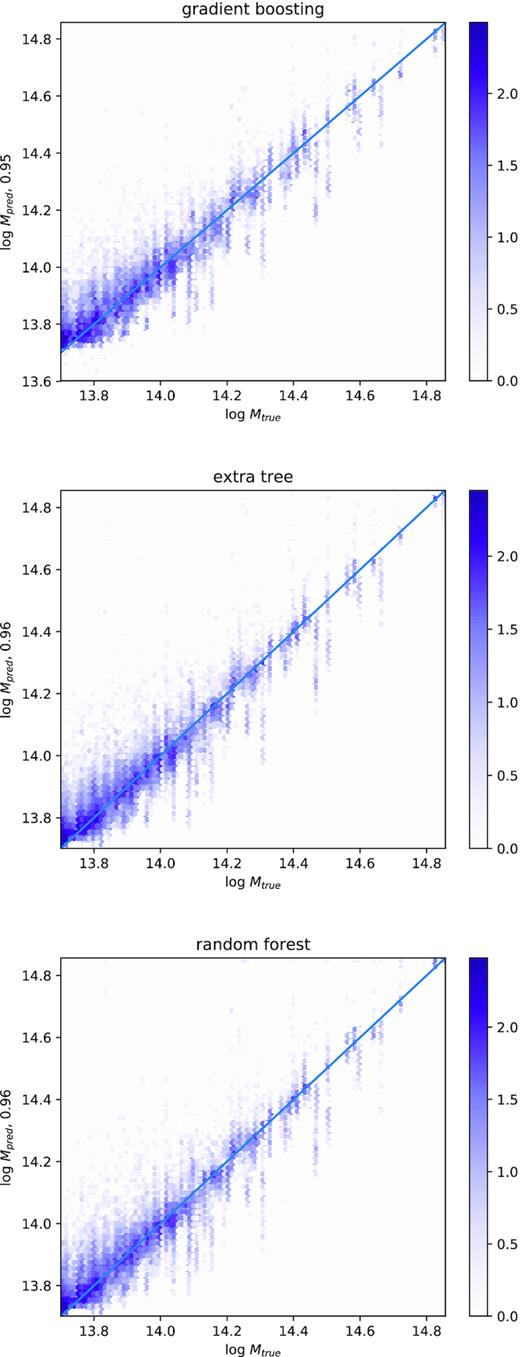

The correlation between log10Mtrue and log10Mpred for the three machine learning methods are shown in Fig. 2, and lie in the range of 95–96 per cent, listed on the y-axis for each panel.

True and predicted |$\log _{10} M/(h^{-1}\, \mathrm{M}_\odot)$| for several different machine learning algorithms, Gradient Boost, Extra Trees, and Random Forests (top to bottom). The correlation between true and predicted values is shown on the y-axis. Stripes at fixed Mtrue appear because each cluster appears 96 times, observed from 96 different angles. The colour-scale corresponds to log10Nclusters.

To estimate the scatter in true mass for a given predicted cluster mass, the distribution in the simulation must be chosen not according to the unobservable true mass, as in Fig. 2, but according to the same selection function as was used to construct the observed sample. If the simulation can be sampled in the same way as the observations, i.e. with the same cuts on SZ flux Yint, etc., and is accurate enough, the true mass distributions can be predicted by combining the observations and the simulation. One way to estimate the accuracy of Mpred for an observation, without a particular survey-specific selection function in hand, is to make a cut in Mpred, based on Fig. 2. This cut appears to be complete based upon Fig. 2, and so to the extent the simulation is accurate and thus to be trusted for the machine learning predictions, it can also be used to estimate the regime of applicability. For |$M_{\rm pred}\ge 10^{14}\, h^{-1} \, \mathrm{M}_\odot$|, it seems all the contributions from the lower mass clusters |$5\times 10^{13}\, h^{-1}\, \mathrm{M}_\odot$| and above will be captured. Ideally there would be even lower mass haloes measured with these observations in the simulation, and a larger sample, to confirm lower masses are not being missed with this cut, but these are not available in the current data set. This Mpred cut can be applied to both the simulation and observations without knowing the true mass distribution in the observations, and is just a stand-in for a specific but unknown selection function corresponding to a given survey.

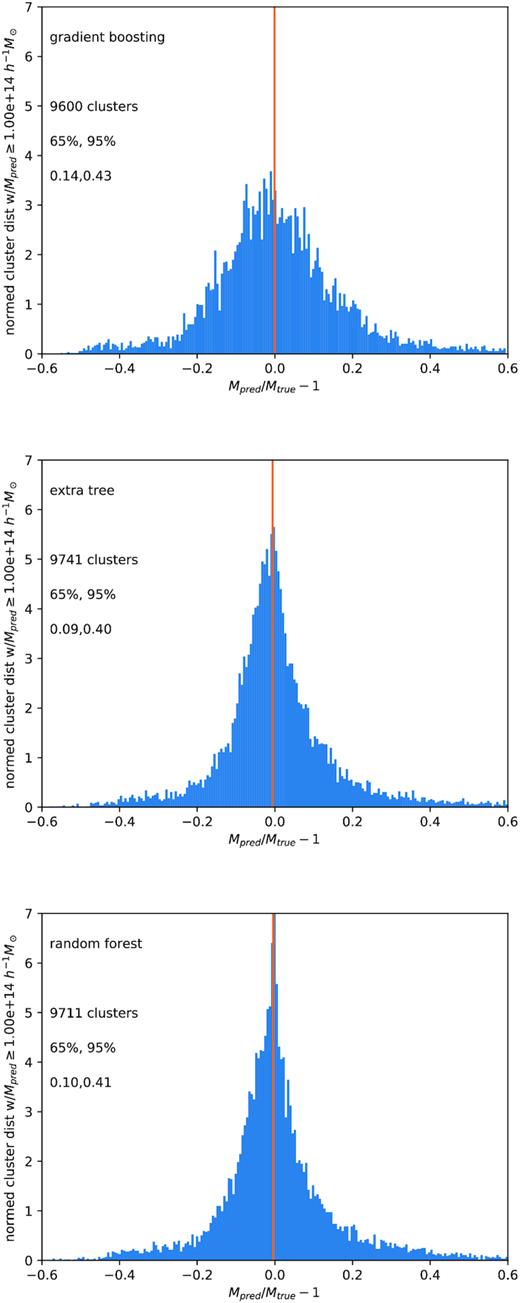

For |$M_{\rm pred}\ge 10^{14}\, h^{-1} \, \mathrm{M}_\odot$|, Mpred/Mtrue − 1 is shown in Fig. 3 for the three different ML algorithms also used in Fig. 2. The distributions are not well fit by Gaussian or lognormal distributions, so values of Mpred/Mtrue − 1 encompassing 65 per cent and 95 per cent of the galaxies around the median are shown. The vertical line is the median Mpred/Mtrue, which is slightly less than one.

Distribution of Mpred/Mtrue − 1 for clusters selected with |$M_{\rm pred} \ge 10^{14}\, h^{-1} \, \mathrm{M}_\odot$|, using Gradient Boost, Extra Trees, and Random Forests (top to bottom). The 2 per cent of clusters lying in in the tails of each distribution are omitted. The values of |Mpred/Mtrue − 1| enclosing 65 per cent and 95 per cent of the haloes around the median value (vertical line) of |Mpred/Mtrue − 1| are as listed. The median Mpred/Mtrue < 1.

Extra Trees and Random Forests have narrower scatter in Mpred/Mtrue − 1 than Gradient Boost, and all three histograms have tails to large Mpred/Mtrue − 1, comprising the 2 per cent of cluster lines of sight that are not shown. Again, the advantage of this approach is to calculate the mass from the measurements in combination, all at once. In principle, errors and scaling relations for the usual approach would also have to be calculated from a simulation, using distributions such as are in Fig. 1, with close attention to using the relevant selection function and its correlations with the multiwavelength measurements. Unfortunately, the effect of these errors on the mass function as a whole was difficult to estimate without adding many other assumptions, as the sample was only large because it was replicated 96 times using different lines of sight.

As pointed out by the referee, it might be expected that the tree methods (Extra Trees and Random Forest) would work better than the boosting method, as boosts work better with high bias data, and reduce bias, and trees work better with high variance data, and reduce variance. If one is using scaling relations, the mass error for each observable seems to be more dominated by variance than by bias.

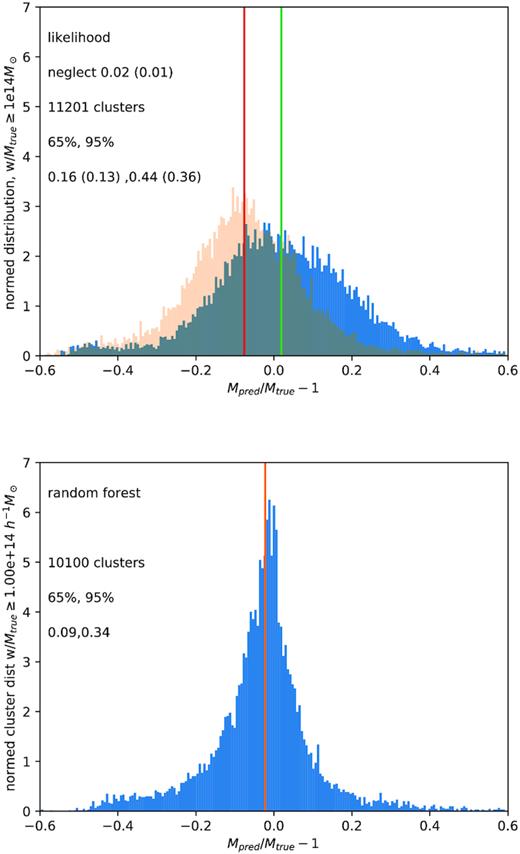

Comparison with a likelihood approach. The cluster sample is selected, for simplicity, on |$M_{\rm true} (\ge 10^{14}\, h^{-1} \, \mathrm{M}_\odot$|). At top are the distributions of Mpred/Mtrue − 1 for clusters using likelihood methods based upon the two scaling relations in Fig. 1. The solid line (dashed line) in Fig. 1 corresponds to the darker (lighter) histogram. At bottom is the same quantity for the same sample, using Random Forests to predict mass. The 2 per cent (1 per cent) (top) and <1 per cent (bottom) of clusters lying in the tails of each distribution are omitted. The values of |Mpred/Mtrue − 1| enclosing 65 per cent and 95 per cent of the haloes around the median value (vertical line) of |Mpred/Mtrue − 1| are as listed.

Comparing these two kinds of likelihood estimates against the machine learning predictions was also done for the sample used for the WCS, which has a bigger box (|$250\, \mathrm{Mpc}\, h^{-3}$|), higher resolution (20483 particles) and slightly different cosmology (Ωm = 0.274, |$\Omega _\Lambda$| = 0.726, h = 0.7,n = 0.95, σ8 = 0.8). This sample has more clusters above |$10^14\, h^{-1} \, \mathrm{M}_\odot$|, 256 to the 117 in the smaller box, but only mock measurements for clusters with true mass down to |$10^{14}\, h^{-1} \, \mathrm{M}_\odot$|. Thus, as mentioned earlier, was not used for the other measurements in this note. For this cluster sample, both the likelihood and ML fits were not as biased, as the median values of Mpred/Mtrue − 1 were usually close to zero. However, the likelihood fits for the two scaling relations shown in Fig. 1 remained broad compared to the ML fits, with half width around the median for 65 per cent of the clusters at (0.13, 0.14) and for 95 per cent at (0.38, 0.36), relative to (0.09) for 65 per cent and (0.29) for 95 per cent for the Random Forests fit. This is the main point of this note. In Section 4, several possible refinements and improvements in the machine learning approach are listed.

3.2 Subsets of multiwavelength measurements

One can also study how well mass is predicted by a given subset of the observations (within the context of the idealizations for the WL, SZ, and MF measurements mentioned earlier). In machine learning, Importance Permutation (IP; e.g. Breiman 2001; Strobl et al. 2008) is a model-agnostic way to determine the relative importance of each observable, i.e. to ask: ‘how important is each simulated observable when inferring Mpred (in these simulations)?’ The IP procedure uses the difference in the prediction accuracy before and after permuting a given predictor observable to quantify its importance. The IP is generally a reliable except for cases where the observables are highly correlated, which will result in an overestimation of the highest ranked observables (Strobl et al. 2008). However, the lowest ranking IP will not be biased as the least correlated observables will also have the lowest IP values. Given the large correlations between observables, the IP values can be used to determine which observables provide the least information. The lowest ranking observable was vel followed by Nred for all three machine learning methods , Gradient Boost, Extra Trees, and Random Forest. The IP values for vel and Nred ranged from 0.004 to 0.006 and 0.02 to 0.05, respectively, compared to roughly factors of 2–10 larger IP values for the other observables. These are the raw IP values, which have better statistical properties than the scaled (z-score) IP values (e.g. Strobl et al. 2008). Note that these two measurements are also the two with the most complete inclusion of observational contributions to mass scatter.

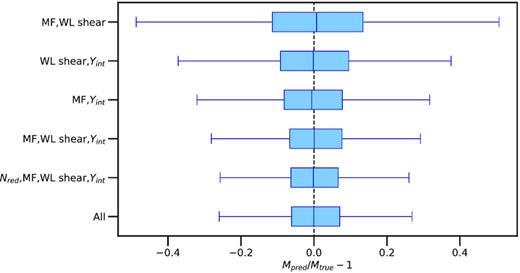

Whisker plots in Fig. 5 illustrate the impact of removing observables on the Mpred/Mtrue − 1 distributions. Only the Extra Tree distributions are shown in Fig. 5, as results are similar for the other two methods. The baseline is the inclusion of all observables, at bottom. When the lowest IP observables, vel and Nred, are dropped, the distributions show little to no change, consistent with their low IP values and the statement that they contain the least information for inferring Mpred. Hence, here the IP values can provide an efficient and effective way to explore the impact of removing observables on the Mpred/Mtrue − 1 distributions. The upper three whiskers show the distributions corresponding to pairs of the remaining three observables, MF, WL shear, and Yint. Of these three, dropping Yint causes the most spread in Mpred/Mtrue − 1. Combining the whisker plots with the IP results, one finds, for these idealized SZ, WL shear, and MF multiwavelength measurements, that Yint is the most important-simulated observable for predicting cluster masses.

Mass predictions from subsets of observations. The median, 25–75, and 0.35–99.65 percentiles of the Mpred/Mtrue − 1 distributions (see Fig. 3) for Extra Trees are illustrated with vertical line inside the box, the edges of the box, and the whiskers, respectively. The subsets of observables are listed on the y-axis including the entire set of observables (labelled all) at the bottom for reference. From the bottom, the second and third subsets have the vel and Nred observables (with the lowest Important Permutation, or IP, values) cumulatively removed. The upper three subsets show pairs of the remaining three observables: MF, WL shear, and Yint. The Mpred/Mtrue − 1 distributions upon removing the vel and Nred observables show little to no change compared to the all the observables, which is consistent with the IP results that they provide the least information within the assumptions used to create the simulated mock catalogue. Results are similar for Gradient Boost and Random Forest.

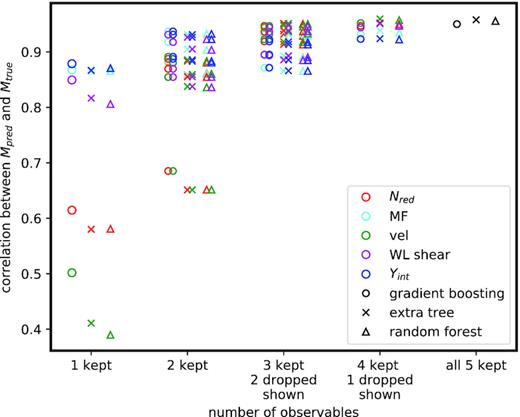

A brute force comparison of correlation functions between true and predicted masses gives complementary information. In Fig. 6, Mpred and Mtrue correlations are shown for all combinations of observables. The circle, cross, and triangle symbols denote the three different machine learning methods, and colours denote the observation or observations included (for using one or two observables) or the observations or observation dropped (when using three or four observables). These comparisons are based on a cut on true mass. A second step would be again to impose the selection function, but this is very survey dependent, and the simulated sample is very small; the idea here is just to give a qualitative sense of how much variation in Mpred–Mtrue correlations occurs with (combinations of) different methods.

Correlations between Mpred and Mtrue when a subset of the observables is used. Colours denote that observables are being used (when one or two are used to calculate Mpred) or neglected (when three or four are used to calculate Mpred). The three shapes correspond to the three machine learning methods as noted, slightly offset horizontally, for readability. For reference, the Mpred to Mtrue correlations when using all measurements, as in Fig. 2, are shown in black at far right.

It would be interesting to apply this method to different combinations of proposed observations, for instance to explore survey strategy trade-offs in the context of other observations. For such a study to be reliable, of course, the specific survey dependent systematic errors in all of the measurements should be included as much as possible, and the idealizations removed as much as possible.

3.3 Simulation validation

The accuracy of Mpred from machine learning as proposed here depends on the training simulations sufficiently capturing the observables as a function of true mass, the correlations between the observables, and the effects of the selection function. (As mentioned earlier, other mass estimates using scaling relations and correlations derived from simulations depend upon the accuracy of the simulations as well. In the case where the correlations are taken out by marginalization, the form of the relation is required, including the variables upon which it depends, rather than the actual numbers.)

Simulations and observations can be tested directly for agreement of some properties, such as number counts of clusters with a certain Yint or richness or other observational quantities. The hope is to find tests which, if passed, would suggest that the simulation is also accurate for properties that are not directly measurable, such as correlations used by ML, or mass scatter correlations, used in likelihood estimates. Both of these involve the not directly observable true mass.

One test which an accurate simulation must pass is to capture correlations between observed properties, for instance requiring a simulation (with the same selection function) validate the correlations estimated in Farahi et al. (2019). This is used regularly to test galaxy formation simulation models, e.g. in Agarwal et al. (2018).

Another angle to try to test simulated correlations between observables and the true mass is to use the machine learning encoding of joint relations between different observables, rather than only the pairwise correlation function of observables above. Specifically, one can use machine learning to predict one observable given the others, by training on all observables but one in the simulation (instead of using all observables to predict the observationally inaccessible Mtrue as in Section 3.1). If the simulation is sufficiently accurate, the machine learning relations between observables, derived from mock simulation observations, should also hold for actual observations. Here, the true and predicted (from ML) simulation observable pairs, for instance, for Yint, can be compared to the true versus predicted observation observable pairs for Yint, training and testing on the remaining four observables in the simulation, and then also testing on the observables from an observation. Crucially, predictions for both the simulation observables and the observational counterparts are based on the simulation training set.2 If the simulation is close to the observations, the relation of the predictions and true values in the mock observations should be similar to the relation of the predictions and true values in the actual observations.

As the interest is in the case where the simulation matches the number counts (a first check that a reasonable training simulation will pass) but does not match all the correlations between observables, and given that there is no simulation that agrees with all observations, shuffled line of sight variants of the original simulation are taken as ‘observation’. These shuffled ‘observations’ have the same number counts as the original simulation, but not the same correlations between observables. Two shufflings are considered:

Shuffling different lines of sight for each observable, separately for each individual cluster, and

Shuffling values of observables between lines of sight and between clusters within 0.03 dex bins in halo mass.

The latter shuffling not only mixes lines of sight but other (possibly isotropic) effects, such as local density, which might vary between clusters sharing the same mass.3 Each kind of shuffling is done eight separate times to give 16 samples for comparison with the original. To approximate a survey selection function, a minimum cut on WL shear σlens of 530 km s−1 (which seems to reject most low mass haloes, see Fig. 1) is made. Shuffling is done after the WL shear cut to preserve number counts. The selection function cuts out many more clusters than the Mpred selection in Section 3.1, however, imposing an appropriate Mpred cut for the shuffled samples seemed to require more steps and assumptions.

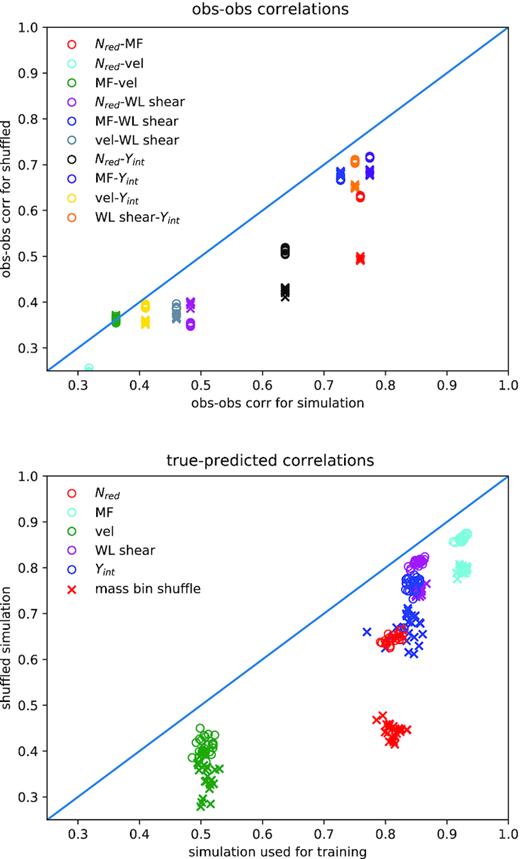

Figs 7 and 8 illustrate these two kinds of comparisons between simulations and observations: direct correlations between observables, and correlations between ML predicted and true observables, each determined by the other four observables.

Distinguishing between a data set with the same number counts for observables (as in Fig. 1), but different correlations due to shuffling. Two simulations are considered, the original one, and one where cluster properties are shuffled either between clusters within 0.03 dex bins for true mass (crosses) or only between lines of sight for each individual cluster (open circles). Top: Correlations between pairs of observables; the correlations for observables for the shuffled clusters are, as expected, lower than the unshuffled ones. Bottom: Correlations between predicted and true values of each of the five observables, separately, when predicted by the other four (x-axis), versus correlations between predictions and true values when the machine learning algorithm trained on the original simulation is tested on each of the two shuffled simulations instead. All three machine learning methods are included. The correlation relative to the original simulation degrades more strongly if cluster properties are shuffled within a mass bin, not only between lines of sight. See the text for more discussion. Clusters have observed WL shear σlens ≥ 530 km s−1 in all cases.

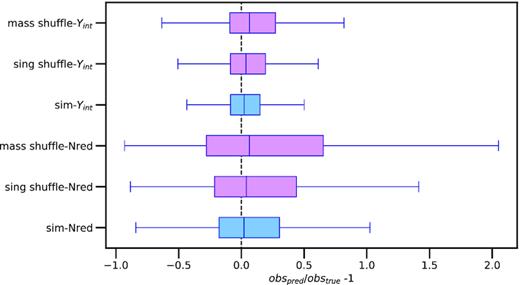

Distribution of Extra Tree pred/true Yint −1 (top 3 whiskers) and pred/true Nred richness −1 (bottom three whiskers) for all clusters in sample, predicted in each case from the other four observables. Again, as in Fig. 6, the median, 25–75, and 0.35–99.65 percentiles are illustrated with vertical central lines, the box edges, and the whiskers, respectively. The blue box refers to the training simulation, while the shuffled sightline box (middle of each triplet) and shuffled in 0.03 dex mass bins box(top of each triplet) are in plum. Note that ‘true’ means the value of Yint or Nred richness measured in the simulation or observation, respectively. The sample selection function is WL shear σlens ≥ 530 km s−1.

In the top panel of Fig. 7, correlations are shown between different simulation observables (x-axis) and their counterparts for the shuffled variants (‘observations’, y-axis); if the two agreed exactly, the points would lie on the diagonal line. Of course, the observables should always be correlated to some extent as they are both taken to be proxies for true mass; however, if the scatter in observables from true mass is also correlated, when the mass-observable scatter is modified by fixing mass and shuffling observables, the correlations will change, as is seen.

The bottom panel of Fig. 7 compares correlations between machine learning predicted single observables and true observables in the simulation (x-axis), and the predicted observables and true values in their shuffled counterparts (‘observations’, y-axis). The predicted values of each observable is obtained using the other four via machine learning, trained in both cases on 1/10 of the unshuffled simulation sample.

In both panels, crosses are mass bin shuffled variants, circles are cluster-by-cluster shuffled variants, and colours indicate which observable or observables are involved. Some pair correlations (observable-observable, top panel) or internally predicted quantities (true-pred, bottom panel) change strongly when the original simulation properties are shuffled, but not all. With an actual set of observations in hand, these shuffled simulation variants may be useful in helping to bound the expected response to changing correlations between observables (again, to the extent that the simulations are faithful).

The simulation and shuffled variants are also compared via whisker plots of machine learning predicted/true −1 values of the fifth observable for two observables in Fig. 8. Extra Trees is shown for this example, as it gave the highest correlations between true and predicted for the original simulation. Again, the mass shuffled sample is more distinguishable from the original sample than the sample with properties shuffled only cluster by cluster, possibly due to local density variations as mentioned above.

The above examples show how correlations between observables themselves, correlations between true and machine learning predicted observables, and predicted/true ratio distributions could be used to flag disagreements between simulations and observations (in practice, replacing the shuffled simulation in this example with actual sky observations, but perhaps using simulation shuffling to help bound the expected effects).

4 SUMMARY AND DISCUSSION

In this note, three ideas were tried out on a simulation:

Using machine learning plus a simulation with mock observations to predict halo masses directly from multiwavelength observations, rather than going through scaling relation mass assignments plus correlated scatter estimates. This produced fairly tight correlations between Mpred and Mtrue.

Comparing the accuracy of machine learning mass predictions for different combinations of multiwavelength measurements, which for this simulation and mock observations gave clear answers as to which combinations have the most constraining power. These were compared via IP and brute force. The specific preferred combinations found here are not directly comparable to specific surveys. Although the Nred and vel measurement errors are faithfully represented in this data set, the other three methods benefit from neglecting survey dependent systematics (SZ and WL), using true mass for some scalings (SZ, WL, MF, often a weak effect) and real space (MF). These systematics should be included, and the measurement approximations dropped to the appropriate extent, in order to do analyses for specific surveys.

Testing correlations in simulations (here used to train machine learning) by comparing relations between observables in the simulated mock observations to those in the observations. Testing is done by correlating observables within the simulated or observational data sets directly or via machine learning. In the latter case using one training sample to make predictions for both the simulations and the observations. For the example here, observations with different correlations were generated from the simulation by shuffling the different multiwavelength measurements between lines of sight, either for each cluster separately or in mass bins. Differences were seen between the simulation and its shuffled counterparts for almost all variations of these tests.

These initial forays give encouraging results. There are several ways to go further, following up on this promising exploration. More extensive simulated data sets could allow all of these ideas to be more thoroughly tested, refined and applied, and could have

larger volume and thus more clusters (there are larger simulations in the literature with several different observables, e.g. Angulo et al. 2012).

A larger sample might also allow using fewer lines of sight per cluster to test for and if need be get rid of possible effects introduced by related lines of sight in the small sample used here. In particular, the small number of distinct high-mass halo measurements mdoneakes it difficult to estimate the effect of the machine learning mass errors on the mass function. Also, a larger sample might allow a more refined comparison of correlations; for example, one could consider smaller bins in some observable, for instance, clusters which lie in a specific WL shear bin, more similar to Farahi et al. (2019), rather than just above a minimum value as done here in Section 3.3.

Lastly, the small number of distinct true masses in this sample may also have some effect on ML success. DecisionTreeRegressor was also explored, for instance, but had an unreasonably high success rate, seemingly due to matching clusters directly to the exact masses found in the training set. The small number of true masses does not seem to be an issue in the machine learning methods shown here, but a broader sampling of the space of possible clusters would be better.

more simulated rather than ‘painted on’ physical properties, for instance, using hydrodynamics to capture more of the baryonic effects during evolution (e.g. Shirasaki et al. 2016; Henson et al. 2017), and more generally, improvements of mock observations.

more cluster done, for instance, other richness estimators could be used, such as many of the galaxy-based cluster mass estimators that have been compared in Old et al. (2015). The velocity information used in Ntampaka et al. (2015, 2016, 2017) and Ho et al. (2019) could certainly be added, or X-ray, which plays an important role in many cluster studies. X-ray luminosity and X-ray temperature measurements are included in the machine learning cluster mass estimates for a 357 cluster sample by Armitage et al. (2019), along with mean, standard deviation, kurtosis and skewness of angular positions, stellar masses, velocities, and angular positions plus velocities of galaxies in a cylinder, number of galaxies, galaxy distribution axial ratio, and SZ flux within 5R200c. Some of these would complement well the observables used here.

more simulated observations of smaller mass haloes and other possible configurations which might also pass the cluster selection cuts (depending upon the cluster finding algorithm, i.e. sample selection).

For mass predictions, improved machine learning algorithms are likely possible, as these were just ‘out of the box’. Extensive comparisons of algorithms could be reasonably expected to yield even better results. Ideally, such comparisons would be on data sets that are closely tuned to specific observational surveys (such as using specific cluster selection functions, or best guess estimated errors for, for instance, photometric redshifts or other measurement ingredients). In addition, it is possible that some pre-processing of the data would give more successful results, such as using observables raised to some power, thus combining some of the physics from the scaling relations with the machine learning inferences from the simulation data.

In addition, it would be good to know if there are better ways than simply sampling, as is done here, to estimate the error on P(Mtrue|Mpred) as found by these machine learning approaches (such as using a‘prediction interval’). For the whole sample, the distribution of predictions from a simulation is a good first estimate of these probabilities, assuming the simulation is a representative sampling, but it would be very good to have a way to analyse smaller distributions of observed clusters, down to, for example, using extensive multiwavelength measurements of a single cluster to estimate the likelihood that the cluster came from the same distribution as that of a given simulation. The scatter in P(Mtrue|Mpred) in Fig. 3 seems fairly small, but in practice would have to calculated with a simulation with a better sampled population as described above, and the errors above would need to be combined with the uncertainties going into the simulation [see e.g. estimates in Shirasaki et al. (2016) for an enumeration of some of these].

In testing the simulations for accuracy, perhaps further information (such as ‘importance’) could be used to inform the simulation if it does not sufficiently match observations, and more sophisticated definitions of ‘match’ could be used. It would also be good to incorporate the observational measurement error bars into the simulation tests, perhaps by using a distribution of observational input measurement values weighted by their likelihood. As the errors are different in observations and in the simulations, it seems that training on observations might also be possible (to predict one observable from the others), but one of the two training sets is likely preferable. In addition, the shuffling test here is similar to the analysis made setting the correlations to zero in Shirasaki et al. (2016); that is, the two sorts of correlations are the ones in the simulation and their decorrelated counterpart. It is not clear how a different correlation (rather than a decorrelating shuffling) would appear in these tests.

To summarize, matching each observable to a mass and then combining mass predictions relies upon calibrating each mass prediction separately, while combining the observables first, as suggested here, relies upon a joint calibration from a simulation capturing all observed properties. This machine learning based approach requires a more detailed simulation, but as some of the underlying physics is shared between observables, this approach may have the benefit of including more of the true correlations. Another possibility is to go even further with machine learning, to cosmological parameters, skipping the calculation of cluster masses along the way.4 Both approaches are incorporating physical assumptions about clusters and their masses, but in different ways, giving a more complete understanding when considered together.

ACKNOWLEDGEMENTS

We thank M. White for the simulations and discussions, G. Evrard for suggestions on an early draft, and the referee and editor for many helpful questions and suggestions. The simulations used in this paper were performed at the National Energy Research Scientific Computing Center and the Laboratory Research Computing project at Lawrence Berkeley National Laboratory.

Footnotes

DecisionTreeRegressor is also used in Kamdar et al. (2016a). It fits to a single tree rather than an ensemble. It fails to provide reasonable scatter for the sample here, due to the small number of distinct true halo masses, especially at the high-mass end.

After submission of this paper, we learned of training on one simulation and testing on another as a test being used in simulations to recover star formation histories from spectra (Lovell et al. 2019).

We thank M. White for emphasizing this point.

We thank G. Holder for this suggestion.