-

PDF

- Split View

-

Views

-

Cite

Cite

K C Chan, M Crocce, A J Ross, S Avila, J Elvin-Poole, M Manera, W J Percival, R Rosenfeld, T M C Abbott, F B Abdalla, S Allam, E Bertin, D Brooks, D L Burke, A Carnero Rosell, M Carrasco Kind, J Carretero, F J Castander, C E Cunha, C B D’Andrea, L N da Costa, C Davis, J De Vicente, T F Eifler, J Estrada, B Flaugher, P Fosalba, J Frieman, J García-Bellido, E Gaztanaga, D W Gerdes, D Gruen, R A Gruendl, J Gschwend, G Gutierrez, W G Hartley, K Honscheid, B Hoyle, D J James, E Krause, K Kuehn, O Lahav, M Lima, M March, F Menanteau, C J Miller, R Miquel, A A Plazas, K Reil, A Roodman, E Sanchez, V Scarpine, I Sevilla-Noarbe, M Smith, M Soares-Santos, F Sobreira, E Suchyta, M E C Swanson, G Tarle, D Thomas, A R Walker, DES Collaboration, BAO from angular clustering: optimization and mitigation of theoretical systematics, Monthly Notices of the Royal Astronomical Society, Volume 480, Issue 3, November 2018, Pages 3031–3051, https://doi.org/10.1093/mnras/sty2036

Close - Share Icon Share

ABSTRACT

We study the methodology and potential theoretical systematics of measuring baryon acoustic oscillations (BAO) using the angular correlation functions in tomographic bins. We calibrate and optimize the pipeline for the Dark Energy Survey Year 1 data set using 1800 mocks. We compare the BAO fitting results obtained with three estimators: the Maximum Likelihood Estimator (MLE), Profile Likelihood, and Markov Chain Monte Carlo. The fit results from the MLE are the least biased and their derived 1σ error bar are closest to the Gaussian distribution value after removing the extreme mocks with non-detected BAO signal. We show that incorrect assumptions in constructing the template, such as mismatches from the cosmology of the mocks or the underlying photo-|$z$| errors, can lead to BAO angular shifts. We find that MLE is the method that best traces this systematic biases, allowing to recover the true angular distance values. In a real survey analysis, it may happen that the final data sample properties are slightly different from those of the mock catalogue. We show that the effect on the mock covariance due to the sample differences can be corrected with the help of the Gaussian covariance matrix or more effectively using the eigenmode expansion of the mock covariance. In the eigenmode expansion, the eigenmodes are provided by some proxy covariance matrix. The eigenmode expansion is significantly less susceptible to statistical fluctuations relative to the direct measurements of the covariance matrix because of the number of free parameters is substantially reduced.

1 INTRODUCTION

Baryon acoustic oscillations (BAO), generated in the early universe, leave their imprint in the distribution of galaxies (Peebles & Yu 1970; Sunyaev & Zeldovich 1970). At early times (|$z$| ≳ 1100), photons and baryons form a tightly coupled plasma, and sound waves propagate in this plasma. After the recombination of hydrogen, photons can free stream in the universe. The acoustic wave pattern remains frozen in the baryon distribution. The sound horizon at the drag epoch is close to 150 Mpc. The formation of the BAO in the early universe is governed by the well-understood linear physics, see Bond & Efstathiou (1984), Bond & Efstathiou (1987), Hu & Sugiyama (1996), Hu, Sugiyama & Silk (1997), and Dodelson (2003) for the details of the cosmic microwave background physics. Given that this scale is relatively large, it is less susceptible to astrophysical contamination and other non-linear effects. Another reason for its robustness is that it exhibits sharp features in two-point correlations, while non-linearity tends to produce changes in the broad-band power. The BAO can serve as a standard ruler (Eisenstein & Hu 1998; Meiksin, White & Peacock 1999; Eisenstein, Seo & White 2007). Observations of the BAO feature in the distribution of galaxies has been recognized as one of the most important cosmological probes that enables us to measure the Hubble parameter and the equation of state of the dark energy [see e.g. Weinberg et al. (2013); Aubourg et al. (2015)]. The potential distortions of BAO due to non-linear evolution and galaxy bias are less than 0.5 per cent (Crocce & Scoccimarro 2008; Padmanabhan & White 2009).

Using spectroscopic data, the BAO was first clearly detected in Sloan Digital Sky Survey (SDSS) (Eisenstein et al. 2005) and 2dF Galaxy Survey (2dFGS) (Cole et al. 2005), and subsequently in numerous studies, e.g. Gaztanaga, Cabre & Hui (2009), Percival et al. (2010), Beutler et al. (2011), Kazin et al. (2014), Ross et al. (2015), Alam et al. (2017), Bautista et al. (2017), and Ata et al. (2017). Spectroscopic data give precise redshift information, but such surveys are relatively expensive, as they require spectroscopic observations of galaxies targeted from existing imaging surveys. On the other hand, multiband imaging surveys relying on the use of photometric redshift (photo-|$z$|) for radial information (Koo 1985) can cheaply survey large volumes. There are several (generally weaker) detections of the BAO feature in the galaxy distribution using photometric data (Padmanabhan et al. 2007; Estrada, Sefusatti & Frieman 2009; Hütsi 2010; Seo et al. 2012; Carnero et al. 2012; de Simoni et al. 2013). The Dark Energy Survey (DES) and future surveys such as The Large Synoptic Survey Telescope (LSST) (LSST Science Collaboration et al. 2009) will deliver an enormous amount of photometric data with well calibrated photo-|$z$|’s, thus we expect that accurate BAO measurements will be achieved from these surveys.

In this work, we investigate the BAO detection using the angular correlation function of a galaxy sample optimally selected from the first year of DES data (DES Y1, Crocce et al. 2017). DES is one of the largest ongoing galaxy surveys, and its goal is to reveal the nature of the dark energy. One of the routes to achieve this goal is to accurately measure the BAO scale in the distribution of galaxies as a function of redshift. As it is a photometric survey, its redshift information is not so precise, but it can cover a large volume. This is advantageous to the BAO measurement: the sound horizon scale is large, it requires large survey volume to get good statistics. The BAO sample (Crocce et al. 2017) derived from the first year DES data (Drlica-Wagner et al. 2017) already consists of about 1.3 million galaxies covering more than 1318 deg2. This is only 1 per cent of the total number of galaxies identified in DES Y1, which can be used for science analyses such as Abbott et al. (2017a).

A measurement of the BAO at the effective redshift (or mean redshift) of the survey, |$z$|eff = 0.8 was presented in Abbott et al. (2017b) using this sample. This effective redshift is less explored by other existing surveys. In Abbott et al. (2017b), three statistics: the angular correlation function |$w$|, the angular power spectrum Cℓ, and the 3D correlation function ξ were used. See Camacho et al. (2018) and Ross et al. (2017b) for the details on the Cℓ and ξ analyses. These statistics are sensitive to different systematics and they provide important cross checks for the analyses. By measuring angular correlation |$w$| from the data divided into a number of redshift bins (or tomography), no precise redshift information is required, thus this statistics is well suited for extracting BAO information from the photometric sample. In the current paper, we present the details of the calibrations and optimization applied when using |$w$| to measure the BAO. Although the fiducial setup is tailored to DES Y1, the analysis and apparatus developed here will be useful for upcoming DES data and other large-scale imaging surveys.

This paper is organized as follows. In Section 2, we introduce the theory for the BAO modelling and for the Gaussian covariance matrix. We also describe the mock catalogues used to test the pipeline. In Section 3, we discuss the extraction of the angular diameter distance using BAO template-fitting methods, and we present three different estimators for such procedure: Maximum Likelihood Estimator (MLE), Profile Likelihood (PL), and Markov Chain Monte Carlo (MCMC). Potential systematics errors in the angular diameter distance scale due to the assumed template used for BAO extraction are studied in Section 4, such as the BAO damping scale, the assumed cosmological model or the propagation of photo-|$z$| errors. In Section 5, we discuss various optimizations for the analysis. Section 6 is devoted to issues related to the covariance; in particular we present an eigenmode expansion that allows to adapt the covariance to changes in the underlying template or sample assumptions. We present our conclusions in Section 7. In Appendix A, we further compare the results obtained with the three estimators.

2 THEORY AND MOCK CATALOGUES

To detect the BAO signal in the data, we employ a template-fitting method. A template encodes the expected shape and amplitude of the BAO feature. It is computed using the expected properties, e.g. the survey and galaxy sample characteristics. A large set of mock catalogues are constructed to mimic those detailed characteristics. The template is then fitted to the correlation functions measured on the mock catalogues to extract the BAO distance scale, and to study different systematic and statistical effects.

In this section, we discuss how the template is constructed. We also introduce a Gaussian theory covariance that is employed at different moments in the paper. The mock catalogues used in this study are briefly described in Section 2.3, with full details given in Avila et al. (2017).

2.1 The BAO template

The MICE cosmology is the reference cosmology adopted in the DES Y1 BAO analysis (Abbott et al. 2017b), and thus is in this paper as well. It was chosen primarily because of the MICE simulation set (Fosalba et al. 2015), a large high-resolution galaxy light-cone simulation tailored in part to reproduce DES observables, and accessible to us. In particular, MICE halo catalogues were used to calibrate the Halogen mock catalogues used in DES Y1 BAO analysis (Abbott et al. 2017b) and all the supporting papers including this one. In summary, the cosmological parameters in MICE cosmology are Ωm = 0.25, ΩΛ = 0.75, Ωb = 0.044, h = 0.7, σ8 = 0.8, and ns = 0.95. Such a low matter density is no longer compatible with the current accepted value by Planck [Ωm = 0.31 (Ade et al. 2016)]. We investigate in Section 4.2 how the BAO fit is affected when there is mismatch between the template cosmology and the cosmology of the mocks.

2.2 Theory covariance matrix

Finally, we note that the survey angular geometry mask does not appear explicitly in the Gaussian covariance, only the survey area through fsky. In configuration space, the effect of the mask is less severe than in Fourier/harmonic space. None the less, the geometry of the mask, including the fact that it has holes, makes the number of random pairs as a function of separation not to simply scale with the effective area of the survey. Hence, the shot noise term will not exactly follow equation (9) but acquire a scale dependence, see Krause et al. (2017). We have checked that this effect is negligible for our BAO sample. Furthermore, Avila et al. (2017) compared the covariance matrix obtained from mocks to the Gaussian covariance matrix and found agreement to within 10 per cent. In Section 6, we study this issue in greater detail. Another effect, the supersample covariance due to the coupling of the small-scale modes inside the survey with the long mode when the window function is present and is only important for small scales (Hamilton, Rimes & Scoccimarro 2006; Takada & Hu 2013; Li, Hu & Takada 2014; Chan, Moradinezhad Dizgah & Noreña 2018).

2.3 Mock catalogues

We calibrate our methodology using a sample of 1800 Halogen mocks (Avila et al. 2017) that match the BAO sample of DES Y1. We outline the basic information here, and refer the readers to Avila et al. (2017), and Avila et al. (2015), for more details. For each mock realization, the dark matter particle distribution is created using second-order Lagrangian perturbation theory. Each mock run uses 12803 particles in a box size of |$3072 \, \mathrm{Mpc} \, h^{-1}$|. Haloes are then placed in the dark matter density field based on the prescriptions described in Avila et al. (2015). The halo abundance, bias as a function of halo mass, and velocity distribution are matched to those in the MICE simulation (Fosalba et al. 2015). Haloes are arranged in the light-cone with the observer placed at one corner of the simulation box. The light-cone is spanned by 12 snapshots from |$z$| = 0.3 to 1.3. From this octant, the full sky mock is formed by replicating it eight times with periodic boundary conditions. Galaxies are placed in the haloes using a hybrid halo occupation distribution–halo abundance matching prescription that allows for galaxy bias and number density evolution.

The mocks match to the properties of the DES Y1 BAO sample. The angular mask and sample properties including the photo-|$z$| distribution, the number density, and the galaxy bias are matched. Redshift uncertainties are accurately modelled by fitting a double skewed Gaussian curve to photo-|$z$| distribution measured from the data, and this relation is then applied to the mocks. The final mock catalogue covers an area of 1318 deg2 on the sky as in DES Y1. We consider the mock data in the photo-|$z$| redshift range [0.6,1], and there are close to 1.3 million galaxies per mock in this range. In the fiducial setting, the sample is further divided into four redshift bins of width Δ|$z$| = 0.1. In total, we produce 1800 realizations, and we use them to calibrate the pipeline and estimate the covariance matrix. Unless otherwise stated, the mock covariance is used.

3 BAO FITTING METHODS

3.1 Methods overview

For high signal-to-noise BAO data, one expects to recover Gaussian likelihoods e.g. in the case of Alam et al. (2017). For such data, many methods for the BAO fitting would be expected to yield consistent results. But this might not be our situation, as the expected signal to noise is close to 2.

Here, we compare three methods to derive the BAO angular scale from the data, testing them thoroughly with the mocks: MLE, PL, and MCMC. We define each below. These methods differ in how they define the probability distribution of the interested variables, and how the best-fitting values and errors are computed. For a review of these statistical methods, see e.g. Press et al. (2007), Hogg, Bovy & Lang (2010), and Trotta (2017).

In the BAO fitting even though the full likelihood is multidimensional (with all the parameters in the template) we are ultimately interested in only one, the BAO dilation parameter α. Here, we are mostly interested in which estimator gives the most reliable result for α.

Throughout, we use |$\bar{ \alpha }$| and σα to denote the best fit and the error obtained from the fitting method for an individual realization. We will use angular brackets to represent the ensemble average over the mocks: e.g. |$\langle \bar{\alpha }\rangle$| is the mean of the best-fitting distribution, while |$\mathrm{std} (\bar{\alpha })$| is the standard deviation of the |$\bar{ \alpha }$| distribution.

3.1.1 Maximum Likelihood Estimator

We will further take the symmetric error bar by averaging over the lower and upper bars. In the frequentist’s interpretation, because α is a parameter, we can interpret the error bars only when the experiments are repeated. Suppose N independent measurements are repeated, we expect to have 68 per cent of the time the 1σ bars enclosing the true value (α = 1 for the unbiased case) for a Gaussian distribution.

We will consider the likelihood in the range of α ∈ [0.8,1.2], and BAO is regarded as being detected only if the 1σ interval can be constructed within the interval [0.8,1.2].

3.1.2 Profile Likelihood

3.1.3 Markov Chain Monte Carlo

In the Bayesian approach, α is a random variable, we can talk about the chance that the α value lies in the 1σ interval. Strict Bayesians will stop at the posterior distributions as their final product, but to compare with other methods, we will deviate from the strict Bayesianism and use the posterior distributions to compute the summary statistics (Hogg et al. 2010). If the distribution is Gaussian, we expect 68 per cent of chance. We use the median of the MCMC chain for |$\bar{\alpha }$|, and σα is derived from 16 and 84 percentiles of the chain. Again to facilitate the comparison with other methods, we average over the left and right error bars to get a symmetric one. Alternatively, we can use the mean and the standard deviation for the best fit and its error bar. We opt for the median and the percentiles because we find that the results encloses |$\langle \bar{ \alpha } \rangle$| closer to the Gaussian expectation. The prior on α is taken to be [0.6,1.4]. Similar to PL, |$\bar{\alpha }$| and σα can always be defined.

We use the MCMC implementation emcee (Foreman-Mackey et al. 2013), in which multiple walkers are employed and the correlation among the walkers are reduced by using the information among them. We use 100 walkers, 3000 burn-in steps, and 2000 steps for the run. In this setting, the MCMC fitting code takes about 50 times longer than the MLE code does. We have conducted some convergence test on the number of steps required. We took a sample of steps in the range from 1500 to 50 000 and find that fluctuations of |$\bar{\alpha }$| are within 0.1 per cent from the convergent value (assuming convergence attained with 50 000 steps) and those of σα within 2 per cent. Thus, 2000 steps are sufficient to make sure that it does not impact the results later on. We note that the usage of the percentiles of the distribution is much less susceptible to statistical fluctuations than using the mean and the variance.

3.1.4 Comparison criteria

We will test these methods against the mock catalogues. We will check how stable the fit results are, especially how small a bias (in comparison to the known true value) that each method yields.

Note that we do not enforce the error distribution to be Gaussian, indeed they are not (see Fig. 3). We only want the 1σ error bar to enclose the true answer close to the Gaussian expectation. In principle, for MLE, we can adjust the value of Δχ2 so that it encloses the true value (e.g. α = 1) 68 per cent of the time. Similar adjustments can be done for other estimators. By doing so, the derived error bar yields the desired Gaussian probability expectation. However, the Δχ2 = 1 rule works well for us, and no adjustment is needed.

3.2 Comparison of the BAO fit results by MLE, PL, and MCMC

Following Abbott et al. (2017b), we consider those fits with their 1σ intervals of α falling outside the range [0.8,1.2] as non-detections. These non-detections are poorly fit by our template, and they cause the distribution of |$\bar{ \alpha }$| to be highly non-Gaussian. Thus, we will remove the non-detection mocks first. We will comment more on this at the end of the section.

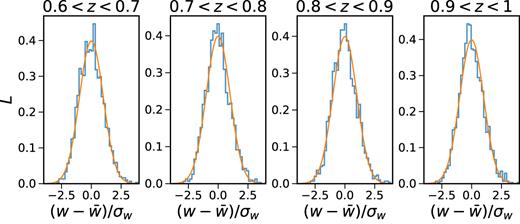

Before proceeding to the comparison, we first verify the Gaussian likelihood assumption equation (14) using the mock catalogues. The likelihood tends to be Gaussian distributed thanks to the central limit theorem. Because the Gaussian likelihood assumption is central to the analysis, we need to check it [e.g. Scoccimarro (2000); Hahn et al. (2018)]. In Fig. 1, we show the likelihood distribution of the values of |$w$| measured from the mocks. We have shown the results for the bin θ = 2°. We have used the standard normal variable |$(w - \bar{w}) / \sigma _\text{w}$|, where |$\bar{w}$| and σw are the mean and the standard deviation of the distribution of |$w$|. We find that the likelihood indeed follows the Gaussian distribution well.

The likelihood distribution of |$w$| at the bin θ = 2° (histogram). The standard normal variable is used. The Gaussian distribution with zero mean and unity variance (solid line) is plotted for comparison.

In Table 1, we show the fit with MLE, PL, and MCMC for two detection criteria. First, for MLE with error derived from Δχ2 = 1, there are 91 per cent of the mocks with their 1σ error bars fall within the interval of [0.8,1.2], while for MCMC the fraction is 84 per cent. For PL, it is also almost 100 per cent.

The BAO fit with MLE, PL, and MCMC obtained with two sets of detection criteria (left and right). For Detection Criterion 1, we only consider those mocks whose 1σ interval |$\bar{\alpha } \pm \sigma _\alpha$| fall within the interval [0.8,1.2]. For Detection Criterion 2, we use the same set of mocks for all three methods, those having 1σ interval falling within [0.8,1.2] using the MLE estimate of |$\bar{\alpha }$| and σα. For each criterion, the first fraction is normalized with respect to the total number of mocks (1800), while the second fraction is normalized with respect to the number of mocks satisfying the selection criterion.

| . | Detection Criterion 1 . | Detection Criterion 2 . | ||||

|---|---|---|---|---|---|---|

| . | |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] (Fraction selected) . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] MLE (Fraction selected) . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 0.91 | 1.001 ± 0.052 | 0.69 | 0.91 | 1.001 ± 0.052 | 0.69 |

| PL | 0.99 | 1.004 ± 0.049 | 0.77 | 0.91 | 1.003 ± 0.046 | 0.78 |

| MCMC | 0.84 | 1.007 ± 0.049 | 0.74 | 0.91 | 1.007 ± 0.059 | 0.74 |

| . | Detection Criterion 1 . | Detection Criterion 2 . | ||||

|---|---|---|---|---|---|---|

| . | |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] (Fraction selected) . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] MLE (Fraction selected) . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 0.91 | 1.001 ± 0.052 | 0.69 | 0.91 | 1.001 ± 0.052 | 0.69 |

| PL | 0.99 | 1.004 ± 0.049 | 0.77 | 0.91 | 1.003 ± 0.046 | 0.78 |

| MCMC | 0.84 | 1.007 ± 0.049 | 0.74 | 0.91 | 1.007 ± 0.059 | 0.74 |

The BAO fit with MLE, PL, and MCMC obtained with two sets of detection criteria (left and right). For Detection Criterion 1, we only consider those mocks whose 1σ interval |$\bar{\alpha } \pm \sigma _\alpha$| fall within the interval [0.8,1.2]. For Detection Criterion 2, we use the same set of mocks for all three methods, those having 1σ interval falling within [0.8,1.2] using the MLE estimate of |$\bar{\alpha }$| and σα. For each criterion, the first fraction is normalized with respect to the total number of mocks (1800), while the second fraction is normalized with respect to the number of mocks satisfying the selection criterion.

| . | Detection Criterion 1 . | Detection Criterion 2 . | ||||

|---|---|---|---|---|---|---|

| . | |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] (Fraction selected) . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] MLE (Fraction selected) . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 0.91 | 1.001 ± 0.052 | 0.69 | 0.91 | 1.001 ± 0.052 | 0.69 |

| PL | 0.99 | 1.004 ± 0.049 | 0.77 | 0.91 | 1.003 ± 0.046 | 0.78 |

| MCMC | 0.84 | 1.007 ± 0.049 | 0.74 | 0.91 | 1.007 ± 0.059 | 0.74 |

| . | Detection Criterion 1 . | Detection Criterion 2 . | ||||

|---|---|---|---|---|---|---|

| . | |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] (Fraction selected) . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] MLE (Fraction selected) . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 0.91 | 1.001 ± 0.052 | 0.69 | 0.91 | 1.001 ± 0.052 | 0.69 |

| PL | 0.99 | 1.004 ± 0.049 | 0.77 | 0.91 | 1.003 ± 0.046 | 0.78 |

| MCMC | 0.84 | 1.007 ± 0.049 | 0.74 | 0.91 | 1.007 ± 0.059 | 0.74 |

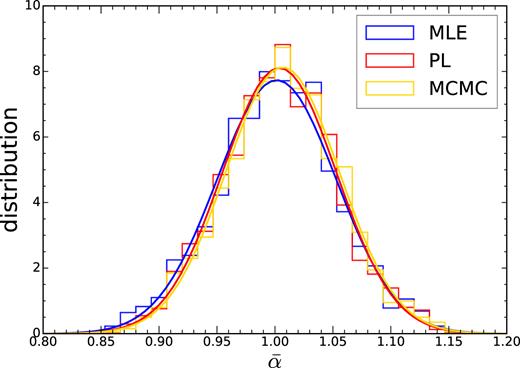

In Fig. 2, we show the distribution of the best-fitting α obtained with these three methods after pruning the non-detection mocks. As a comparison we have also plotted the Gaussian distribution with the same mean and variance, we find that |$\bar{\alpha }$| follows the Gaussian distribution well. The mean of the best fit from MLE is the least biased among the three methods. The distributions of |$\bar{\alpha }$| from PL and MCMC are quite similar. They tend to be more skewed towards |$\bar{\alpha } \gt 1$|, and this can be seen from their corresponding Gaussian distribution and the |$\langle \bar{\alpha }\rangle$| shown in Table 1. In particular for MCMC, |$\langle \bar{\alpha } \rangle$|, it is larger than 1 by 0.007. In Table 1, the fraction of mocks with the 1σ error bar enclosing the |$\langle \bar{\alpha } \rangle$| is also shown. We find that MLE with Δχ2 = 1 prescription encloses |$\langle \bar{\alpha } \rangle$| 0.69 of the time, which is very close to the Gaussian expectation, while PL and MCMC are higher than the Gaussian expectation by 9 per cent and 6 per cent, respectively.

The histograms show the distribution of the best-fitting |$\bar{\alpha }$| obtained using MLE (blue), PL (red), and MCMC (yellow). The solid lines (blue for MLE, red for PL, and yellow for MCMC) are the Gaussian distributions with the same mean and variance as the corresponding histograms.

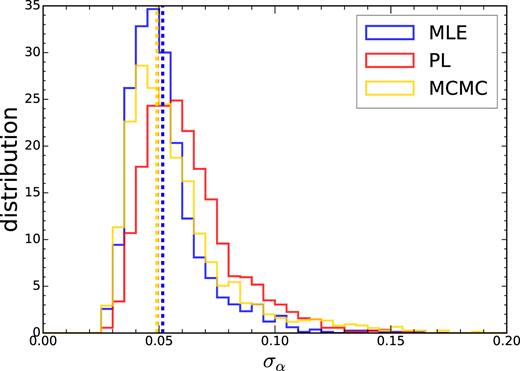

In order to see the error derived more clearly, in Fig. 3, the distribution of the errors derived from these three methods are shown. As a comparison, we also show the |$\mathrm{std}(\bar{\alpha })$|. In the caption of Fig. 3 we have given the numbers for the 〈σα〉 and |$\mathrm{std}(\bar{\alpha })$| for MLE, PL, and MCMC. The 〈σα〉 obtained from the MLE method coincides with |$\mathrm{std}(\bar{\alpha })$| well, while MCMC gives slightly larger error σα. PL tends to give the largest error with |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha }) = 1.27$|.

The distribution of the error derived from each individual mock (blue for MLE, red for PL, and yellow for MCMC). The vertical dashed lines are the standard deviations of the best-fitting |$\bar{\alpha }$| shown in Fig. 2. The 〈σα〉 and |$\mathrm{std}(\bar{\alpha })$| for MLE, PL, and MCMC are (0.053, 0.052), (0.062, 0.0492), and (0.057, 0.049) respectively. While for MLE 〈σα〉 and |$\mathrm{std}(\bar{\alpha })$| coincide, PL and MCMC yield larger 〈σα〉.

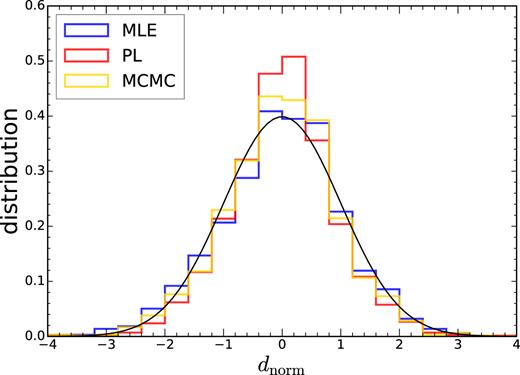

We plot the distributions of the normalized variable for these methods in Fig. 4. By comparing with the Gaussian distribution with zero mean and unity variance, we find that MLE with Δχ2 = 1, dnorm is more Gaussian, while MCMC is slightly worse, and PL is the least Gaussian.

The distribution of the normalized variable dnorm. The results obtained with MLE (blue), PL (red), and MCMC (yellow) are shown. The Gaussian distribution with zero mean and unity variance (solid, black) are shown for reference.

In the detection criterion we have applied, the non-detections are different for each of the methods. We therefore wish to check if the differences we have found in the results are simply due to that. We now apply the same selection criterion that the 1σ interval obtained from MLE falls within [0.8,1.2] to all the three methods. The results are shown in Table 1 as Detection Criterion 2. We find that the results are essentially the same as the previous one, ruling out that the differences are due to the selection criterion. Note that for MCMC, the |$\mathrm{std}(\bar{\alpha })$| has increased quite appreciably because using the MLE detection criterion, a large fraction of extreme mocks are retained and they cause a big increase in the |$\mathrm{std}(\bar{\alpha })$|. In the rest of the analysis, we will stick to the original pruning criterion.

The choice of the interval [0.8,1.2] is somewhat arbitrary, but based on past results [e.g. Anderson et al. (2014); Ross et al. (2017a)] that suggest it is a reasonable choice (though, admittedly, more a rule of thumb than anything else). To further justify this choice, in the Appendix A, we show the comparison of results obtained with a larger interval [0.6,1.4]. We find that by including the extreme mocks, the distribution of the best-fitting |$\bar{\alpha }$| exhibits strong tails, and it does not agree with the Gaussian distribution with the same mean and variance. These extreme mocks not only enlarge the mean of the error bars 〈σα〉, they also cause bias in |$\langle \bar{\alpha } \rangle$|. In particular for MLE, |$|\langle \bar{\alpha } \rangle - 1 |$| changes from 0.001 (Table 1) to 0.029 (Table A1). For MCMC, it only changes mildly from 0.007 to 0.010. Overall, we find that when the wider interval [0.6,1.4] is adopted, the MCMC is superior to the other two methods because it gets less biased results, and the derived 1σ intervals which enclose |$\langle \bar{\alpha } \rangle$| is 71 per cent of the mocks, the closest to the Gaussian expectation. Moreover, |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha })=0.92$| is close to 1.

Because the small fraction of the mocks without a BAO detection highly bias the distributions of the best-fitting parameters, and since it would be difficult to extract useful BAO information from them, we adopt a smaller interval to get rid of them. In practice, if the best fit with its 1σ interval is outside the range [0.8,1.2] in our actual data, the data would be poorly fitted (or the error bar poorly estimated) by our existing methodology and we can hardly claim to detect the BAO signals in the data. In this case, we may need to change the fiducial cosmological model or wait for additional data.

Thus, overall we find that after pruning the non-detections, MLE yields the estimate with the least bias and the error bar using Δχ2 = 1 results in the 1σ error closest to the Gaussian expectation. Therefore, if we prune the data, MLE furnishes an effective BAO fitting method. For the rest of the analysis, we always use the pruned data.

4 TEMPLATE SYSTEMATICS

In this section, we study the potential systematics associated with the template and investigate how they can affect the BAO fit results. We will first determine the physical damping scale Σ in the template by fitting to the mock catalogue. Although the damping scale does not bias the mean for the BAO fit, it can strongly affect the error bar that is derived. We then examine how the BAO fit is affected when the template does not coincide precisely with the data, i.e. the BAO scale in the template is different from that in the data. The parameter α is introduced to allow the shift in the BAO scale, it is crucial to check how successful it deals with the mismatch.

4.1 The BAO damping scale

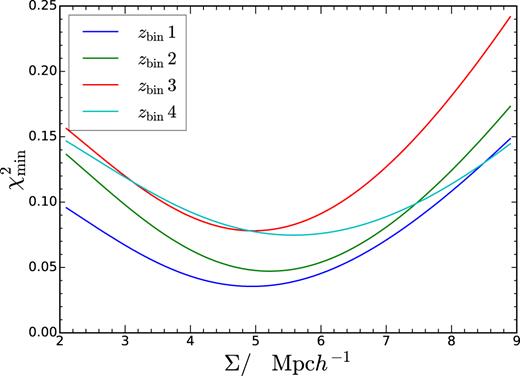

To determine the correct physical damping scale, we fit the templates with only one fitting parameter Σ to the mean |$w$| of the mocks (i.e. α = 1, B = 1, and Ai = 0). We have considered four redshift bins and fitted to each redshift bin separately. The minimum of the χ2 obtained with MLE is plotted against the damping scale in Fig. 5. The best-fitting damping scale is in between 5 and 6 |$\, \mathrm{Mpc} \, h^{-1}$| across the redshift bins. However, we note that the highest redshift bin, bin 4, requires the largest damping. This is contrary to the expectation that the damping scale should decrease as redshift increases because the non-linearity becomes weaker. This is not due to the photo-|$z$| distribution because of the following reason. Although bin 4 has the largest photo-|$z$| uncertainty, which can blur the BAO, it is taken into account in ϕ(|$z$|) already and does not affect the more fundamental 3D damping scale. Throughout this work, we simply take the mean of the best fit of the four redshift bins, which is |$\Sigma = 5.2 \, \mathrm{Mpc} \, h^{-1}$|. We have checked that the differences between using the mean damping scales and the individual best fit results in no detectable change in α.

The minimum of the χ2 against the BAO damping scale Σ for the four redshift bins used.

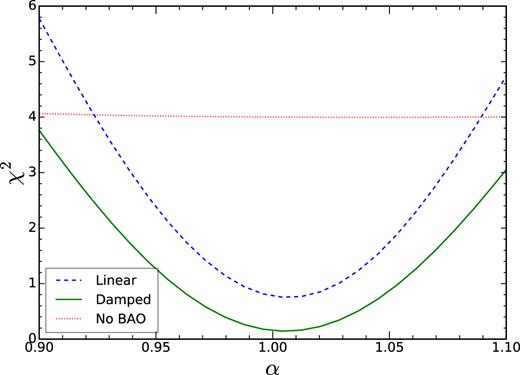

It is instructive to see how the fit is affected when the template without damping is used. To check this, we compare the fits with |$\Sigma =5.2 \, \mathrm{Mpc} \, h^{-1}$| against the one without damping i.e. |$\Sigma =0 \, \mathrm{Mpc} \, h^{-1}$|. We jointly fit to the mean of the four bins and the results are shown in Fig. 6. The linear case yields a larger overall value of χ2 than the damped one. As a comparison, we also show the fit using a template without BAO, and it is clearly disfavoured compared to the BAO model. We also see that the χ2 bound is narrower for the linear template, and hence using it we would get an artificially tighter bound on α. On the other hand, the extreme case of no BAO, there is no bound on α at all.

The χ2 as a function of α when the template without damping (dashed, blue) and with the damping scale |$\Sigma = 5.2 \, \mathrm{Mpc} \, h^{-1}$| (solid, green) are used. The fit with a no-BAO template (dotted, red) is also shown.

In Eisenstein et al. (2007) [see also Seo & Eisenstein (2007)], the BAO damping is modelled using the differential Lagrangian displacement field between two points at a separation of the sound horizon. Under the Zel’dovich approximation (Zel’dovich 1970), at |$z$|eff = 0.8 the spherically averaged damping scale is |$5.35 \, \mathrm{Mpc} \, h^{-1}$|, which is close to our recovered value. Note that the damping scale is obtained by integrating over the linear power spectrum [eq. (9) in Eisenstein et al. (2007)], and in Planck cosmology, we get |$4.96 \, \mathrm{Mpc} \, h^{-1}$| instead. Thus in principle, we can allow the damping scale to be a free parameter. In Abbott et al. (2017b), we have tested the results obtained using different damping scales (2.6 and |$7.8 \, \mathrm{Mpc} \, h^{-1}$|), consistent with the trend shown in Fig. 6, the damping scale has only small effect on the best fit, but a smaller damping scale results in a smaller error bar. Given the quality of the current data, we fix the BAO damping scale to be 5.2|$\, \mathrm{Mpc} \, h^{-1}$|.

4.2 Incorrect fiducial cosmology

The mock catalogues were created using the MICE cosmology. To test the cosmology dependence, we fit a template computed using the Planck cosmology (Ade et al. 2016). The Planck cosmology should be closer to the current cosmology and it is used in the other analyses, such as the Baryon Oscillation Spectroscopic Survey (BOSS) DR12 (Ross et al. 2017a). For the Planck cosmology, we use Ωm = 0.309, σ8 = 0.83, ns = 0.97, and h = 0.676. From camb (Lewis et al. 2000), in the MICE cosmology, the sound horizon at the drag epoch is |$153.4 \, \mathrm{Mpc}$|, while in the Planck cosmology it is |$147.8 \, \mathrm{Mpc}$|. For the effective redshift |$z$|eff = 0.8, DA in MICE cosmology is larger than that in the Planck one by 3.1 per cent. Thus, we expect the BAO in the Planck cosmology to be smaller than that in the MICE by 4.1 per cent in angular scale.

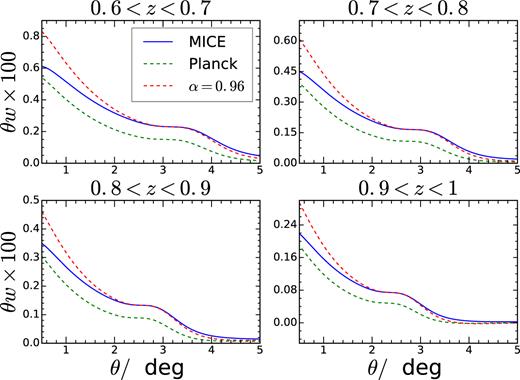

In Fig. 7, we plot the matter angular correlation function obtained using the MICE and Planck cosmology. To check how well the rescaling parameter α works, we rescale the angular correlation by |$w$|(αθ) and then match the amplitude at the BAO scale with that of the MICE one. We find that the rescaling results in a good match with the MICE one around the BAO scale. However, away from the BAO scale, the disagreement with the MICE template increases. Since we use the angular scale in the range of [0|${^{\circ}_{.}}$|5, 5°] in the fitting, it is not clear if we can recover the true cosmology.

The angular correlation obtained using the MICE (solid, blue) and Planck (dashed, green) cosmologies. The Planck correlation rescaled by α = 0.96 (red) and shifted in amplitude to match the MICE one.

In Table 2, we compare the fit obtained using the MICE template with that from the Planck cosmology one. We have displayed the fits using the mean of the mocks and the individual mocks. As the mean result is obtained by averaging over 1800 realizations, the covariance is reduced by a factor of 1800. We have shown four decimal places as the error bars are small for the mean fit. In this very high signal-to-noise setting, there is still |$\bar{\alpha } - 1 \sim 0.004$| bias in the best fit for all these estimators. It is much larger than the estimated error bar σα ∼ 0.0013, thus the bias is systematic. This can arise from the non-linear effects such as the dark matter non-linearity and galaxy bias (Crocce & Scoccimarro 2008; Padmanabhan & White 2009). When the Planck template is used, the best-fitting α is about 0.9674. Thus, the ratio of the dilation parameter is |$\bar{\alpha }_{\rm Planck} / \bar{\alpha }_{\rm MICE} = 0.9634$| and it is still smaller than the expectation 0.959 by ∼3σα. Of course this high signal-to-noise case occurs if we have 1800 times the DES Y1 volume. For the present DES volume, it is still statistical error dominated as can be seen below.

The BAO fit obtained with MLE, PL, and MCMC for the MICE and Planck cosmologies. The fits to the mean of the mocks and the individual mocks are shown.

| . | MICE . | Planck . | ||||

|---|---|---|---|---|---|---|

| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 1.0043 ± 0.0013 | 1.001 ± 0.052 | 0.69 | 0.9675 ± 0.0014 | 0.965 ± 0.050 | 0.70 |

| PL | 1.0043 ± 0.0013 | 1.004 ± 0.049 | 0.77 | 0.9673 ± 0.0014 | 0.969 ± 0.048 | 0.78 |

| MCMC | 1.0030 ± 0.0012 | 1.007 ± 0.049 | 0.74 | 0.9674 ± 0.0014 | 0.974 ± 0.047 | 0.75 |

| . | MICE . | Planck . | ||||

|---|---|---|---|---|---|---|

| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 1.0043 ± 0.0013 | 1.001 ± 0.052 | 0.69 | 0.9675 ± 0.0014 | 0.965 ± 0.050 | 0.70 |

| PL | 1.0043 ± 0.0013 | 1.004 ± 0.049 | 0.77 | 0.9673 ± 0.0014 | 0.969 ± 0.048 | 0.78 |

| MCMC | 1.0030 ± 0.0012 | 1.007 ± 0.049 | 0.74 | 0.9674 ± 0.0014 | 0.974 ± 0.047 | 0.75 |

The BAO fit obtained with MLE, PL, and MCMC for the MICE and Planck cosmologies. The fits to the mean of the mocks and the individual mocks are shown.

| . | MICE . | Planck . | ||||

|---|---|---|---|---|---|---|

| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 1.0043 ± 0.0013 | 1.001 ± 0.052 | 0.69 | 0.9675 ± 0.0014 | 0.965 ± 0.050 | 0.70 |

| PL | 1.0043 ± 0.0013 | 1.004 ± 0.049 | 0.77 | 0.9673 ± 0.0014 | 0.969 ± 0.048 | 0.78 |

| MCMC | 1.0030 ± 0.0012 | 1.007 ± 0.049 | 0.74 | 0.9674 ± 0.0014 | 0.974 ± 0.047 | 0.75 |

| . | MICE . | Planck . | ||||

|---|---|---|---|---|---|---|

| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 1.0043 ± 0.0013 | 1.001 ± 0.052 | 0.69 | 0.9675 ± 0.0014 | 0.965 ± 0.050 | 0.70 |

| PL | 1.0043 ± 0.0013 | 1.004 ± 0.049 | 0.77 | 0.9673 ± 0.0014 | 0.969 ± 0.048 | 0.78 |

| MCMC | 1.0030 ± 0.0012 | 1.007 ± 0.049 | 0.74 | 0.9674 ± 0.0014 | 0.974 ± 0.047 | 0.75 |

Now we turn to the distribution of |$\bar{\alpha }$|. Since the expected |$\bar{\alpha } \approx 0.96$| is not symmetric about the interval [0.8,1.2] for the case of Planck cosmology. This asymmetry can bias the distribution of |$\bar{ \alpha }$|. To prevent this bias, we use the detection criterion that the 1σ error bounds fall within the boundary [0.76, 1.16] for the case of Planck cosmology. For MCMC, the prior on α is changed to [0.56,1.36], although this results in no detectable significance compared to the fiducial choice [0.6,1.4]. |$\langle \bar{\alpha } \rangle$| shows larger variations than the mean fit case because the covariance is larger by a factor of 1800. The ratio of the dilation parameter |$\langle \bar{\alpha }_{\rm Planck} \rangle / \langle \bar{\alpha }_{\rm MICE} \rangle$| are 0.964, 0.965, and 0.967 for MLE, PL, and MCMC, respectively. The ratio is close to what we get for the mean fit. Note that if we keep the boundary of α to be [0.8,1.2] for the Planck case, we get |$\langle \bar{\alpha } \rangle = 0.970$|, 0.976, and 0.979, resulting in larger difference from the mean fit results. It is worth mentioning that this adjustment of the interval only affects the mocks with more extreme values of the best fit.

The small difference between the fit results and the estimation from equation (26) could be because we have used the information not just about the BAO scale, but also the shape of the correlation around it. This is related to the polynomial Ai used to absorb the broad-band power dependence. We have checked that the bias in the fit reduces with the order of polynomial in Ai. For example, for the case of mean fit, for Np = 1, 2, and 3, |$\bar{\alpha } - 1$| are −0.095, −0.054, and −0.034, respectively. For large range in the angles, the polynomial cannot perfectly remove the dependence. However for Np = 3, the bias in the best-fitting |$\bar{\alpha }$| is reduced to a basically negligible level given the signal-to-noise ratio of our data, suggesting our results should be robust at least for true cosmologies between MICE and Planck. Of course one can use a template that is closer to the currently accepted cosmology, although at the risk of the confirmation bias.

4.3 Photo-|$z$| error

Suppose that the true photo-|$z$| distribution is ϕ0, but because of some photo-|$z$| error δϕ, which can arise from systematic errors in the photo-|$z$| calibration, the photo-|$z$| distribution ϕ = ϕ0 + δϕ is used. In this section, we test how the photo-|$z$| error affects the BAO fit.

We will investigate two types of photo-|$z$| errors in the templates: in one case, the means of the photo-|$z$| distributions are systematically shifted by 3 per cent in each of the four tomographic redshift bins, for the second case, the standard deviations of the photo-|$z$| distributions are increased by 20 per cent. While the typical relative error in the mean of the redshift distributions for the DES Y1 BAO sample is about 1 per cent (Gaztañaga et al. 2018), 3 per cent is used to increase the signal-to-noise ratio of our systematic test. The 20 per cent error in the spread is the upper bound of the relative error in the width of those redshift distributions (Gaztañaga et al. 2018).

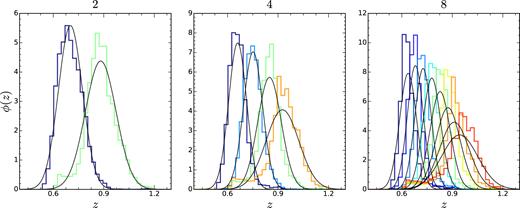

The photo-|$z$| distribution from the mock catalogue as a function of the true redshift |$z$| for a number of redshift bins N|$z$| = 2, 4, and 8 bins, respectively. The solid black lines show the Gaussian distribution with the same mean and variance as the photo-|$z$| distribution.

In Table 3, we compare the fit results obtained using the fiducial template, the template with 3 per cent increase in the mean of ϕ(|$z$|), and the one with 20 per cent increase in σ. For the fit to the mean, there is a shift of −0.0254, −0.0251, and −0.0239 for MLE, PL, and MCMC, respectively, when the mean of ϕ is systematically shifted by 3 per cent. When ϕ is systematically shifted by 3 per cent, we use the interval [0.776, 1.176] for α instead. The shift for |$\langle \bar{ \alpha } \rangle$| are −0.024 relative to the fiducial case for all the estimators. On the other hand, when the standard deviation of the distributions are increased by 20 per cent, there is no significant systematic trend for the best fits. Although not shown explicitly here, there is about 2 per cent increase in 〈σα〉 for all the estimators, signalling that the error indeed increases.

The BAO fit using the fiducial photo-|$z$| distribution, the distribution with mean shift by 3 per cent, and the distribution with standard deviation increased by 20 per cent. The fits to the mean of the mocks and the individual mocks are shown.

| . | Fiducial ϕ(|$z$|) . | 3 per cent increase in the mean of ϕ(|$z$|) . | 20 per cent increase in the std of ϕ(|$z$|) . | |||

|---|---|---|---|---|---|---|

| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . |

| MLE | 1.0043 ± 0.0013 | 1.001 ± 0.052 | 0.9789 ± 0.0014 | 0.977 ± 0.051 | 1.0043 ± 0.0012 | 1.001 ± 0.051 |

| LP | 1.0043 ± 0.0013 | 1.004 ± 0.049 | 0.9792 ± 0.0013 | 0.980 ± 0.049 | 1.0043 ± 0.0013 | 1.004 ± 0.049 |

| MCMC | 1.0030 ± 0.0012 | 1.007 ± 0.049 | 0.9791 ± 0.0013 | 0.983 ± 0.049 | 1.0042 ± 0.0013 | 1.007 ± 0.049 |

| . | Fiducial ϕ(|$z$|) . | 3 per cent increase in the mean of ϕ(|$z$|) . | 20 per cent increase in the std of ϕ(|$z$|) . | |||

|---|---|---|---|---|---|---|

| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . |

| MLE | 1.0043 ± 0.0013 | 1.001 ± 0.052 | 0.9789 ± 0.0014 | 0.977 ± 0.051 | 1.0043 ± 0.0012 | 1.001 ± 0.051 |

| LP | 1.0043 ± 0.0013 | 1.004 ± 0.049 | 0.9792 ± 0.0013 | 0.980 ± 0.049 | 1.0043 ± 0.0013 | 1.004 ± 0.049 |

| MCMC | 1.0030 ± 0.0012 | 1.007 ± 0.049 | 0.9791 ± 0.0013 | 0.983 ± 0.049 | 1.0042 ± 0.0013 | 1.007 ± 0.049 |

The BAO fit using the fiducial photo-|$z$| distribution, the distribution with mean shift by 3 per cent, and the distribution with standard deviation increased by 20 per cent. The fits to the mean of the mocks and the individual mocks are shown.

| . | Fiducial ϕ(|$z$|) . | 3 per cent increase in the mean of ϕ(|$z$|) . | 20 per cent increase in the std of ϕ(|$z$|) . | |||

|---|---|---|---|---|---|---|

| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . |

| MLE | 1.0043 ± 0.0013 | 1.001 ± 0.052 | 0.9789 ± 0.0014 | 0.977 ± 0.051 | 1.0043 ± 0.0012 | 1.001 ± 0.051 |

| LP | 1.0043 ± 0.0013 | 1.004 ± 0.049 | 0.9792 ± 0.0013 | 0.980 ± 0.049 | 1.0043 ± 0.0013 | 1.004 ± 0.049 |

| MCMC | 1.0030 ± 0.0012 | 1.007 ± 0.049 | 0.9791 ± 0.0013 | 0.983 ± 0.049 | 1.0042 ± 0.0013 | 1.007 ± 0.049 |

| . | Fiducial ϕ(|$z$|) . | 3 per cent increase in the mean of ϕ(|$z$|) . | 20 per cent increase in the std of ϕ(|$z$|) . | |||

|---|---|---|---|---|---|---|

| . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . | Best fit to mean of mocks . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| (all mocks) . |

| MLE | 1.0043 ± 0.0013 | 1.001 ± 0.052 | 0.9789 ± 0.0014 | 0.977 ± 0.051 | 1.0043 ± 0.0012 | 1.001 ± 0.051 |

| LP | 1.0043 ± 0.0013 | 1.004 ± 0.049 | 0.9792 ± 0.0013 | 0.980 ± 0.049 | 1.0043 ± 0.0013 | 1.004 ± 0.049 |

| MCMC | 1.0030 ± 0.0012 | 1.007 ± 0.049 | 0.9791 ± 0.0013 | 0.983 ± 0.049 | 1.0042 ± 0.0013 | 1.007 ± 0.049 |

Good agreement between the full fit results and the estimation by equation (29) validates the simple argument. This is useful because it provides a convenient estimate for the accuracy of the photo-|$z$| required for the BAO fit. For example, from the redshift validation (Gaztañaga et al. 2018), the mean of the photo-|$z$| error after sample variance correction is about 1 per cent, thus the shift in the BAO position is about 0.8 per cent. This is still marginal compared to other potential systematic shifts and is less than 20 per cent of the statistical uncertainty. For DES Y3, the amount of data is expected to increase roughly by a factor of 3, and so the error on α is expected to reduce by almost a factor of |$\sqrt{3}$|. Hence, the photo-|$z$| uncertainty is still expected to be subdominant for Year 3. The shift in the mean of the photo-|$z$| distribution has similar effects as the parameter α, and hence they would be degenerate with each other. It is important that the photo-|$z$| distribution is calibrated in an independent means e.g. using the external spectroscopic data.

5 OPTIMIZING THE ANALYSIS

To derive the fit, an accurate precision matrix, i.e. the inverse of the covariance matrix, is necessary. In Abbott et al. (2017b), the workhorse covariance is derived from the mock catalogues. To get an accurate precision matrix from the mocks, we want the dimension of the covariance matrix to be small relative to the number of mocks (Anderson 2003; Hartlap, Simon & Schneider 2007). Hence, it is desirable to reduce the number of data bins while preserving the information content. To this end, we consider optimizing of the number of angular bins, the number of redshift bins, and the inclusion of cross redshift bins.

5.1 The angular bin width

We use the following method to check the dependence of the BAO fit results on the angular bin size. We generate a theory template with fine angular bin width (0|${^{\circ}_{.}}$|01 here). A mock data vector with coarser bin width is created by bin averaging the theory template over the bin width. The mock data vector is then fitted using the fine template. In the analysis, we use the Gaussian covariance equation (12). As we see in equation (20), for the error analysis, the magnitude of the χ2 does not matter, and only the deviation from the best fit does. This method enables us to explore the likelihood about its maximum, and hence derive the strength of the constraint. It is similar to the often used Fisher forecast (Tegmark, Taylor & Heavens 1997; Dodelson 2003).

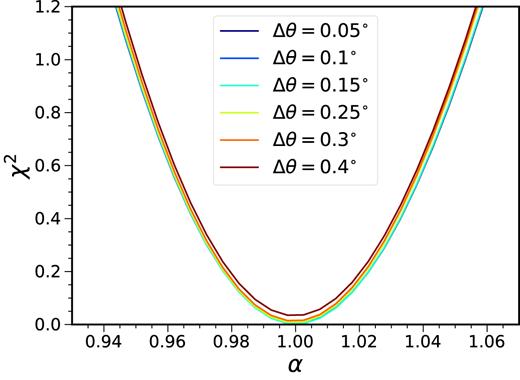

In Fig. 9, we show the distribution of χ2 as a function of the dilation parameter α for a number of angular bin widths. In this ideal noiseless setting, the best fit can fit the mock data extremely well, as manifested with χ2 ≈ 0 at α = 1. The Δχ2 = 1 rule can give the 1σ constraint on α. For the angular bin width Δθ = 0|${^{\circ}_{.}}$|05, 0|${^{\circ}_{.}}$|1, 0|${^{\circ}_{.}}$|15, 0|${^{\circ}_{.}}$|25, 0|${^{\circ}_{.}}$|3, and 0|${^{\circ}_{.}}$|4, the 1σ error bars are 0.0522, 0.0521, 0.0520, 0.0514, 0.0509, and 0.0503, respectively. The mock data with coarser bin width yields slightly smaller 1σ error bar because they give a slightly less precise representation of the underlying model. This is often the case when a poor model is used, it gives larger χ2 (the definition of a poor model) and an artificially stringent constraint.

χ2 as a function of α for different angular bin widths. The models with different bin width Δθ yield similar results.

For BAO fitting, it is preferable to have the bin width to be fine enough so that there are a few data points in the BAO dip-peak range to delineate the BAO feature. Thus for the rest of the study, we shall stick to Δθ = 0|${^{\circ}_{.}}$|15. In Abbott et al. (2017b), the covariance is derived from the mock catalogues. As we find that the differences between different bin widths results are negligible, to reduce the size of the data vector, Δθ = 0|${^{\circ}_{.}}$|3 was adopted in Abbott et al. (2017b).

5.2 Number of photo-|$z$| bins

For a sample that relies on photo-|$z$|s, there can be large overlaps among the photo-|$z$| distributions from different redshift bins, and thus substantial covariance between different redshift bins. Here, we test how the constraints depend on the number of redshift bins.

Based on the assigned photo-|$z$| in the mock, we divide the samples in the redshift range [0.6,1] into N|$z$| redshift bins, with equal width in |$z$|. For example, we have shown the photo-|$z$| distribution ϕ(|$z$|) ≡ P(|$z$|||$z$|photo) for N|$z$| = 2, 4, and 8. We see that there is indeed large overlap in the photo-|$z$| distribution. We also plotted the Gaussian distribution with the same mean and variance as the photo-|$z$| distribution. The photo-|$z$| distribution is moderately Gaussian and the deviation from Gaussianity increases with |$z$|. In fact, Avila et al. (2017) find that a double Gaussian distribution offers a better fit to ϕ.

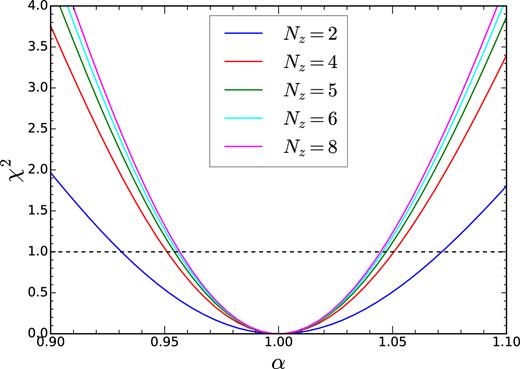

Similar to that in Section 5.1, using the photo-|$z$| distribution shown in Fig. 8, we generate templates with fine bin width (Δθ = 0|${^{\circ}_{.}}$|01), data vectors with coarser bin width (Δθ = 0|${^{\circ}_{.}}$|15), and the Gaussian covariances. The template needs to smoothly represent the theory, and Δθ = 0|${^{\circ}_{.}}$|01 is sufficient. The χ2 as a function of α for different N|$z$| are displayed in Fig. 10. We see that for N|$z$| = 2, the data are undersampled and the 1σ error bar on α is weak (at Δχ2 = 1, it is 0.07). When N|$z$| is increased to 4, the error bar is tightened to 0.05 at Δχ2 = 1. Further increasing the number of redshift bins, there is little gain, and the change in 1σ error bar is less than 0.005 for N|$z$| = 8. To strike a balance between retaining as much information as possible and keeping the size of the data vector small, we use N|$z$| = 4 for the rest of the work and in Abbott et al. (2017b).

The χ2 as a function of α for different number of redshift bins N|$z$| = 2, 4, 5, 6, and 8, respectively. The black dash line indicates the Δχ2 = 1 threshold.

In Abbott et al. (2017b), different combinations of redshift bins of width 0.1 in the range [0.6–1] are used to do the BAO fit. In Table 4, we examine how the results vary with the number of redshift bins used. We consider the fit using four redshift bins (bins 1, 2, 3, and 4), three redshift bins (bins 2, 3, and 4), and two redshift bins (bins 3 and 4). Recall that the redshift bins are in ascending order, e.g. bin 1 refers to [0.6,0.7], etc. When the number of redshift bins is reduced, the constraining power of the data is expected to decrease. The fraction of mocks with 1σ error bar in the range [0.8,1.2] is expected to decrease with the number of redshift bins. The trends for both the MLE and MCMC are consistent with this expectation. PL does not show any clear changes mainly because the mean and error of PL can be defined within any range, which is taken to be [0.8,1.2] here. The mean of the best-fitting |$\langle \bar{ \alpha } \rangle$| is essentially unchanged, but the spread |$\mathrm{std}(\bar{\alpha })$| increases mildly by 12 per cent, 6 per cent, and 10 per cent for MLE, PL, and MCMC, respectively. On the other hand, the error derived from the each realization show much larger increase. We find that 〈σα〉 increases by 21 per cent, 35 per cent, and 33 per cent for MLE, PL, and MCMC, respectively. We also note that |$\langle \sigma _\alpha \rangle / \mathrm{std} (\bar{\alpha })$| is closest to 1 among these fitting methods.

The BAO fit obtained using varying number of redshift bins: four, three, and only two bins. The results from MLE, PL and MCMC are shown.

| . | Bins 1–4 . | Bins 2–4 . | Bins 3 and 4 . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 |$/\mathrm{std}(\bar{\alpha })$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$||$/ \mathrm{std}(\bar{\alpha })$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 |$/ \mathrm{std}(\bar{\alpha })$| . |

| MLE | 1.001 ± 0.052 | 0.91 | 1.02 | 1.001 ± 0.053 | 0.87 | 1.06 | 0.998 ± 0.058 | 0.79 | 1.11 |

| PL | 1.004 ± 0.049 | 0.99 | 1.27 | 1.005 ± 0.051 | 0.99 | 1.32 | 1.003 ± 0.052 | 0.99 | 1.51 |

| MCMC | 1.007 ± 0.049 | 0.84 | 1.17 | 1.006 ± 0.051 | 0.75 | 1.22 | 1.004 ± 0.054 | 0.63 | 1.41 |

| . | Bins 1–4 . | Bins 2–4 . | Bins 3 and 4 . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 |$/\mathrm{std}(\bar{\alpha })$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$||$/ \mathrm{std}(\bar{\alpha })$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 |$/ \mathrm{std}(\bar{\alpha })$| . |

| MLE | 1.001 ± 0.052 | 0.91 | 1.02 | 1.001 ± 0.053 | 0.87 | 1.06 | 0.998 ± 0.058 | 0.79 | 1.11 |

| PL | 1.004 ± 0.049 | 0.99 | 1.27 | 1.005 ± 0.051 | 0.99 | 1.32 | 1.003 ± 0.052 | 0.99 | 1.51 |

| MCMC | 1.007 ± 0.049 | 0.84 | 1.17 | 1.006 ± 0.051 | 0.75 | 1.22 | 1.004 ± 0.054 | 0.63 | 1.41 |

The BAO fit obtained using varying number of redshift bins: four, three, and only two bins. The results from MLE, PL and MCMC are shown.

| . | Bins 1–4 . | Bins 2–4 . | Bins 3 and 4 . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 |$/\mathrm{std}(\bar{\alpha })$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$||$/ \mathrm{std}(\bar{\alpha })$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 |$/ \mathrm{std}(\bar{\alpha })$| . |

| MLE | 1.001 ± 0.052 | 0.91 | 1.02 | 1.001 ± 0.053 | 0.87 | 1.06 | 0.998 ± 0.058 | 0.79 | 1.11 |

| PL | 1.004 ± 0.049 | 0.99 | 1.27 | 1.005 ± 0.051 | 0.99 | 1.32 | 1.003 ± 0.052 | 0.99 | 1.51 |

| MCMC | 1.007 ± 0.049 | 0.84 | 1.17 | 1.006 ± 0.051 | 0.75 | 1.22 | 1.004 ± 0.054 | 0.63 | 1.41 |

| . | Bins 1–4 . | Bins 2–4 . | Bins 3 and 4 . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 |$/\mathrm{std}(\bar{\alpha })$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$||$/ \mathrm{std}(\bar{\alpha })$| . | Fraction |${\bar{\alpha }} \pm \sigma _\alpha$| in [0.8,1.2] . | 〈σα〉 |$/ \mathrm{std}(\bar{\alpha })$| . |

| MLE | 1.001 ± 0.052 | 0.91 | 1.02 | 1.001 ± 0.053 | 0.87 | 1.06 | 0.998 ± 0.058 | 0.79 | 1.11 |

| PL | 1.004 ± 0.049 | 0.99 | 1.27 | 1.005 ± 0.051 | 0.99 | 1.32 | 1.003 ± 0.052 | 0.99 | 1.51 |

| MCMC | 1.007 ± 0.049 | 0.84 | 1.17 | 1.006 ± 0.051 | 0.75 | 1.22 | 1.004 ± 0.054 | 0.63 | 1.41 |

5.3 Adding cross-correlations

So far we considered only the autocorrelation in redshift bin |$w$|ii. Here, we test the gain on the constraint in α when the cross-correlations among different redshift bins are included.

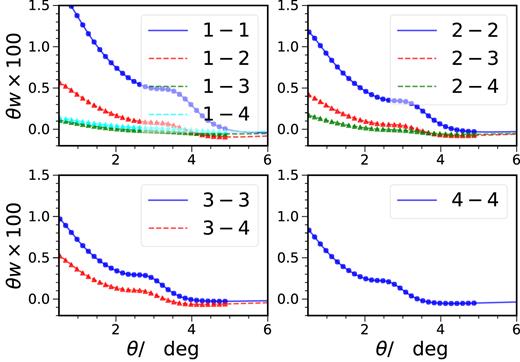

In Fig. 11, the auto and cross-correlation functions are plotted. We see that only the cross-correlation between the adjacent redshift bins show any BAO signal, while the redshift bins that are further apart have little signal in their cross-correlation. Thus, the BAO constraint improvement if any, can only come from the cross-correlation of adjacent redshift bins.

The auto (solid, circles, blue) and cross-correlation (dashed, triangles, other colours) between different redshift bins.

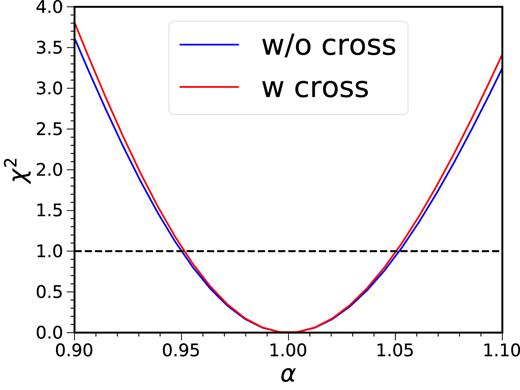

In Fig. 12, the χ2 fit using only the auto correlation and when the cross-correlations are included are compared. In the fit, for each of the |$w$|ij, a new set of parameters B and Aa are introduced. Hence for our case, there are altogether 10 × 4 + 1 = 41 free parameters. We find that the gain in the BAO constraint when the cross redshift bin correlations are included is very limited. In this estimate, Gaussian covariance is used. As it is noiseless, there is no problem of covariance matrix inversion. In practice, when the covariance is estimated from the mocks, we need to reduce the dimension of the covariance matrix. Of course, almost all the improvement on the α constraint is expect to come from the adjacent redshift bins (although this is not shown explicitly here), so in any real analysis, we should include only the nearest redshift bins. Still the dimension of the covariance matrix is increased from 4Nθ to 7Nθ, where Nθ is the number of angular bin for each redshift, when the cross-correlations between adjacent redshift bins are included. Thus, we recommend that only the autocorrelations be used.

The χ2 for the constraint on α for only using the autocorrelation (blue) and including also the cross-correlations (red).

5.4 Testing the order of the polynomial

As we mentioned after equation (6), we test the number of free parameters Ai in the template. In Table 5, we show the fit results for Np = 1, 2, and 3 in equation (6). From the limited data set, it is hard to draw a solid conclusion. Overall, there is no clear systematic trend with Np for these three methods, thus it shows that the fiducial order of polynomial adopted although somewhat arbitrary, it does not lead to systematics bias in the analysis. Assuringly, MLE with Np = 3, which is the workhorse model adopted in Abbott et al. (2017b), is the method that performs best in terms of being largely unbiased and recovering close to 68 per cent of the true answer (i.e. the Gaussian expectation).

The BAO fit with different number Np (=1, 2, 3) in the template equation (6). The results for MLE, PL, and MCMC are compared.

| . | Np = 1 . | Np = 2 . | Np = 3 . | |||

|---|---|---|---|---|---|---|

| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 0.996 ± 0.047 | 0.72 | 0.995 ± 0.051 | 0.68 | 1.001 ± 0.052 | 0.69 |

| PL | 0.993 ± 0.046 | 0.77 | 1.000 ± 0.050 | 0.79 | 1.004 ± 0.049 | 0.77 |

| MCMC | 1.005 ± 0.046 | 0.75 | 0.994 ± 0.050 | 0.71 | 1.007 ± 0.049 | 0.74 |

| . | Np = 1 . | Np = 2 . | Np = 3 . | |||

|---|---|---|---|---|---|---|

| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 0.996 ± 0.047 | 0.72 | 0.995 ± 0.051 | 0.68 | 1.001 ± 0.052 | 0.69 |

| PL | 0.993 ± 0.046 | 0.77 | 1.000 ± 0.050 | 0.79 | 1.004 ± 0.049 | 0.77 |

| MCMC | 1.005 ± 0.046 | 0.75 | 0.994 ± 0.050 | 0.71 | 1.007 ± 0.049 | 0.74 |

The BAO fit with different number Np (=1, 2, 3) in the template equation (6). The results for MLE, PL, and MCMC are compared.

| . | Np = 1 . | Np = 2 . | Np = 3 . | |||

|---|---|---|---|---|---|---|

| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 0.996 ± 0.047 | 0.72 | 0.995 ± 0.051 | 0.68 | 1.001 ± 0.052 | 0.69 |

| PL | 0.993 ± 0.046 | 0.77 | 1.000 ± 0.050 | 0.79 | 1.004 ± 0.049 | 0.77 |

| MCMC | 1.005 ± 0.046 | 0.75 | 0.994 ± 0.050 | 0.71 | 1.007 ± 0.049 | 0.74 |

| . | Np = 1 . | Np = 2 . | Np = 3 . | |||

|---|---|---|---|---|---|---|

| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle {\bar{\alpha }} \rangle \pm {\rm std}({\bar{\alpha }})$| . | Fraction with |${\bar{\alpha }} \pm \sigma _\alpha$| enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 0.996 ± 0.047 | 0.72 | 0.995 ± 0.051 | 0.68 | 1.001 ± 0.052 | 0.69 |

| PL | 0.993 ± 0.046 | 0.77 | 1.000 ± 0.050 | 0.79 | 1.004 ± 0.049 | 0.77 |

| MCMC | 1.005 ± 0.046 | 0.75 | 0.994 ± 0.050 | 0.71 | 1.007 ± 0.049 | 0.74 |

6 COVARIANCE

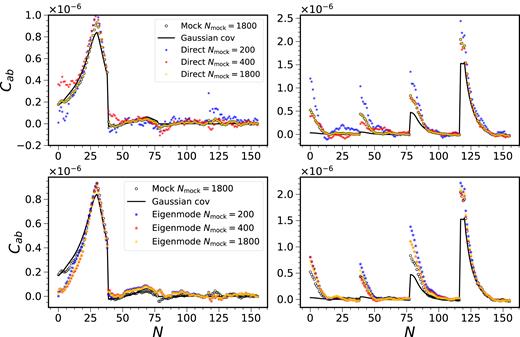

In this section, we consider the issues of covariance in more detail. We first study how the Gaussian covariance impacts the BAO fit, and then investigate how to improve the covariance derived from a set of mock catalogues. One of the potential issues that arises in the survey analysis pipeline is that sample properties such as the n(|$z$|) and galaxy bias could be different from those used to create the mocks. We show that the correction due to these property changes can be mitigated with the help of the Gaussian covariance. We also investigate expanding the covariance matrix and precision matrix using the eigenmodes from some proxy covariance matrix. We demonstrate that this approach can substantially reduce the influence of the noise because the number of free parameters are significantly reduced, and that it can effectively mimic the effect of small changes in the sample properties.

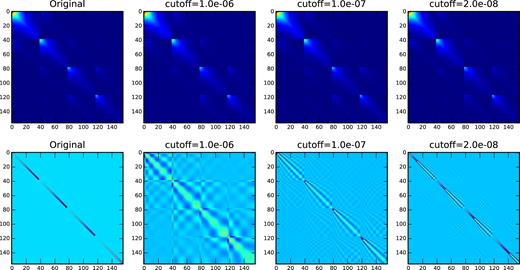

6.1 BAO fit with the Gaussian covariance

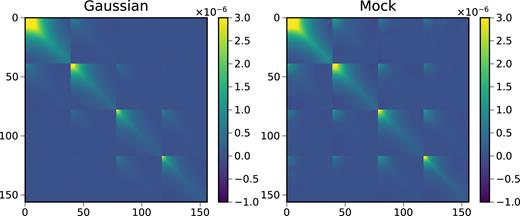

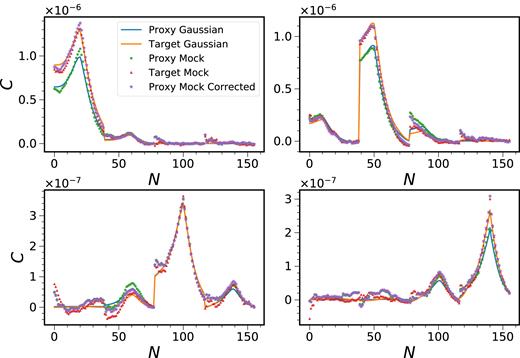

In Fig. 13, we show the covariance matrix obtained from the mock catalogue and the Gaussian theory. We arrange the data vector in ascending order in redshift. To see the difference more clearly, we plot four different rows of the covariance matrix in Fig. 14. We have shown the results for two samples (proxy and target, see below). The symbols and lines show the mock and the Gaussian covariances, respectively. From Figs 13 and 14, it is clear that the Gaussian covariance captures most of the features well. However, the Gaussian covariance exhibits only correlation between the neighbouring redshift bins, while the mock covariances show correlation beyond the neighbouring redshift bins.

The Gaussian covariance (left-hand panel) and the mock covariance (right-hand panel).

Four rows of the covariance matrix (the row number corresponds to the peak position) are shown. The results for Gaussian covariance (blue curve for the proxy and orange one for the target) and the mock covariance (green circles for the proxy and red triangles for the target) are compared. The composite covariance (violet stars) obtained by combining the proxy mock results with the Gaussian correction is also displayed.

We summarize the BAO fit results using the mock and the Gaussian covariance in Table 6. The distribution of |$\bar{\alpha }$| is similar to that from the mock and |$\mathrm{std} (\bar{\alpha })$| is only systematically larger than that from the mock by a couple of percent. However, 〈σα〉 from the Gaussian covariance is only about 80 per cent of 〈σα〉 derived from the mock.

The BAO fit results obtained using the mock and the Gaussian covariance. The results for MLE, PL, and MCMC are shown.

| . | Mock covariance . | Gaussian covariance . | ||||

|---|---|---|---|---|---|---|

| . | |$\langle \bar{\alpha }\rangle \pm \mathrm{std}(\bar{\alpha })$| . | |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha })$| . | Fraction with 1σ enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle \bar{\alpha }\rangle \pm \mathrm{std}(\bar{\alpha })$| . | |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha })$| . | Fraction with 1σ enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 1.001 ± 0.052 | 1.02 | 0.69 | 1.002 ± 0.054 | 0.84 | 0.60 |

| PL | 1.004 ± 0.049 | 1.27 | 0.77 | 1.004 ± 0.052 | 1.04 | 0.68 |

| MCMC | 1.007 ± 0.049 | 1.17 | 0.74 | 1.007 ± 0.051 | 0.96 | 0.64 |

| . | Mock covariance . | Gaussian covariance . | ||||

|---|---|---|---|---|---|---|

| . | |$\langle \bar{\alpha }\rangle \pm \mathrm{std}(\bar{\alpha })$| . | |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha })$| . | Fraction with 1σ enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle \bar{\alpha }\rangle \pm \mathrm{std}(\bar{\alpha })$| . | |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha })$| . | Fraction with 1σ enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 1.001 ± 0.052 | 1.02 | 0.69 | 1.002 ± 0.054 | 0.84 | 0.60 |

| PL | 1.004 ± 0.049 | 1.27 | 0.77 | 1.004 ± 0.052 | 1.04 | 0.68 |

| MCMC | 1.007 ± 0.049 | 1.17 | 0.74 | 1.007 ± 0.051 | 0.96 | 0.64 |

The BAO fit results obtained using the mock and the Gaussian covariance. The results for MLE, PL, and MCMC are shown.

| . | Mock covariance . | Gaussian covariance . | ||||

|---|---|---|---|---|---|---|

| . | |$\langle \bar{\alpha }\rangle \pm \mathrm{std}(\bar{\alpha })$| . | |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha })$| . | Fraction with 1σ enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle \bar{\alpha }\rangle \pm \mathrm{std}(\bar{\alpha })$| . | |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha })$| . | Fraction with 1σ enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 1.001 ± 0.052 | 1.02 | 0.69 | 1.002 ± 0.054 | 0.84 | 0.60 |

| PL | 1.004 ± 0.049 | 1.27 | 0.77 | 1.004 ± 0.052 | 1.04 | 0.68 |

| MCMC | 1.007 ± 0.049 | 1.17 | 0.74 | 1.007 ± 0.051 | 0.96 | 0.64 |

| . | Mock covariance . | Gaussian covariance . | ||||

|---|---|---|---|---|---|---|

| . | |$\langle \bar{\alpha }\rangle \pm \mathrm{std}(\bar{\alpha })$| . | |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha })$| . | Fraction with 1σ enclosing |$\langle \bar{\alpha } \rangle$| . | |$\langle \bar{\alpha }\rangle \pm \mathrm{std}(\bar{\alpha })$| . | |$\langle \sigma _\alpha \rangle / \mathrm{std}(\bar{\alpha })$| . | Fraction with 1σ enclosing |$\langle \bar{\alpha } \rangle$| . |

| MLE | 1.001 ± 0.052 | 1.02 | 0.69 | 1.002 ± 0.054 | 0.84 | 0.60 |

| PL | 1.004 ± 0.049 | 1.27 | 0.77 | 1.004 ± 0.052 | 1.04 | 0.68 |

| MCMC | 1.007 ± 0.049 | 1.17 | 0.74 | 1.007 ± 0.051 | 0.96 | 0.64 |

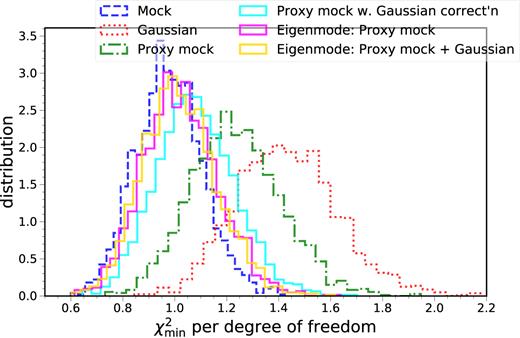

In Fig. 15, we plot the |$\chi ^2_{\rm min}$| per degree of freedom for the BAO fit using different prescriptions for the covariance. The histograms are obtained by fitting to 1800 mock data vectors, and they only differ in the covariance used in the fit. The mock covariance by construction gives the |$\chi _{\rm min}^2$| per degree of freedom ∼1, while we find that the Gaussian covariance (with the correct sample properties) gives a higher value, ∼1.4. We will use the |$\chi _{\rm min}^2$| per degree of freedom as the metric to decide which prescription of the covariance gives a better approximation to the correct mock covariance.

The distributions of the |$\chi _{\rm min}^2$| per degree of freedom for the BAO fit using various covariances. The results obtained using the target mock covariance (blue, dashed), Gaussian covariance (red, dotted), proxy mock covariance (green, dotted–dashed), the proxy mock corrected with the Gaussian covariance (cyan, solid), the direct eigenmode expansion on the proxy mocks (magenta, solid), and the eigenmode expansion combining the Gaussian covariance eigenmodes with the proxy mock ones (yellow, solid). The covariance yielding the best approximation to the mock covariance is expected to give |$\chi _{\rm min }^2$| per degree of freedom closest to that obtained using the mock results.

6.2 Correcting sample variation using Gaussian covariance

As mocks take time to produce, they are often created using some expected data properties. If these differ from the actual data properties, the mocks do not perfectly match the data. Here, we investigate correcting these changes in the mock covariance matrix using the Gaussian covariance. These sample changes can result from variation in the bias parameter and number density of the samples. To be concrete, let us call the mock that we created using the expected properties the ‘proxy mocks’, and the ones with the final correct properties the ‘target mocks’.

In Fig. 14, we have plotted the CCorrected and we find that the long-range correlation beyond the neighbouring bins are preserved. In Fig. 15, we have also plotted the |$\chi ^2_{\rm min}$| per degree of freedom for the BAO fit using the proxy mock covariance with the Gaussian correction. It results in values smaller than those obtained using the proxy mock covariance or the target Gaussian covariance, and hence it signals that the corrected covariance is closer to the true one.

Overall, by combining the proxy mock covariance with the Gaussian correction, we get better agreement with the target mock covariance than using solely the proxy or the Gaussian covariance. This composite approach is expected to work when the variation of the sample is small, e.g. the variation in n(|$z$|) is small, and the non-Gaussian contribution is weak because we have not corrected the part due to the non-Gaussian covariance. It offers a means to correct the property changes in covariance matrix without having to re-run the mocks.

6.3 Eigenmode expansion of the covariance matrix

There are methods that have been proposed to combine mocks with theory covariance to reduce the impact of the noise fluctuations (Pope & Szapudi 2008; Taylor & Joachimi 2014; Paz & Sánchez 2015; Pearson & Samushia 2016; Friedrich & Eifler 2017). Here, we consider the expansion of the covariance and precision matrices using the eigenmodes or the principal components of a given covariance matrix. The effects due to noise can be mitigated because the number of free parameters is substantially reduced when the eigenmodes are given. The study of the eigenmode of the covariance matrix is known as principal component analysis (PCA) and it is widely used in many different fields. PCA identifies the most rapidly varying direction in the data space with many variables, and hence find a more effective way to describe the data. In the cosomological covariance context, it has been used in Scoccimarro (2000), Harnois-Déraps & Pen (2012), and Mohammed, Seljak & Vlah (2017).

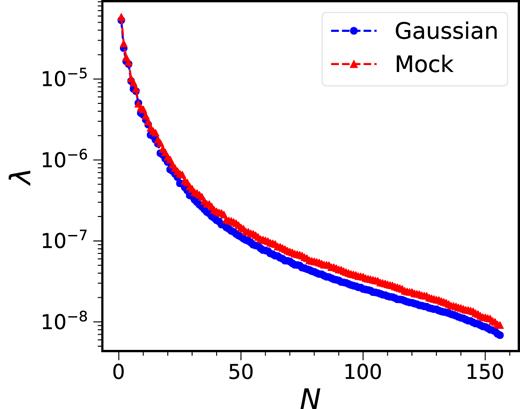

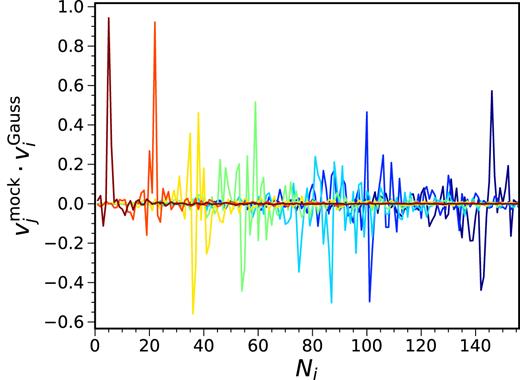

The full covariance matrix of dimension p has p(p + 1)/2 independent elements, while fitting using a given basis of eigenmodes has only p free parameters. Thus, this basis cannot allow for all variations in the covariance and they cannot fit a general symmetric matrix. The success of this method hinges on how well the given eigenmodes approximate those of the target covariance matrix. We can obtain these eigenmodes using theory or approximate methods, e.g. Second-order Lagrangian Perturbation Theory (2LPT) mock in Scoccimarro (2000) . In the following, we use both the Gaussian theory covariance and the covariance derived from mocks with slightly different sample properties. We consider two samples (proxy and target) which differ slightly in the n(|$z$|) and bias. The bias of these two samples differ by between a few per cent to 10 per cent, while the mean of the two photo-|$z$| distributions differs by up to 7 per cent. There are 1800 realizations for both samples.