Florence H Sheehan, Shannon McConnaughey, Rosario Freeman, R Eugene Zierler, Formative Assessment of Performance in Diagnostic Ultrasound Using Simulation and Quantitative and Objective Metrics, Military Medicine, Volume 184, Issue Supplement_1, March-April 2019, Pages 386–391, https://doi.org/10.1093/milmed/usy388


Abstract

We developed simulator-based tools for assessing provider competence in transthoracic echocardiography (TTE) and vascular duplex scanning.

Psychomotor (technical) skill in TTE image acquisition was calculated from the deviation angle of an acquired image from the anatomically correct view. We applied this metric for formative assessment to give feedback to learners and evaluate curricula.

Psychomotor skill in vascular ultrasound was measured in terms of dexterity and image plane location; cognitive skill was assessed from measurements of blood flow velocity, parameter settings, and diagnosis. The validity of the vascular simulator was assessed from the accuracy with which experts can measure peak systolic blood flow velocity (PSV).

In the TTE simulator, the skill metric enabled immediate feedback, formative assessment of curriculum efficacy, and comparison of curriculum outcomes. The vascular duplex ultrasound simulator also provided feedback, and experts’ measurements of PSV deviated from actual PSV in the model by <10%.

Skill in acquiring diagnostic ultrasound images of organs and vessels can be measured using simulation in an objective, quantitative, and standardized manner. Current applications are provision of feedback to learners to enable training without direct faculty oversight and formative assessment of curricula. Simulator-based metrics could also be applied for summative assessment.

INTRODUCTION

Ultrasound is a portable, low cost, and safe diagnostic imaging modality, but its utility and accuracy are highly dependent on the ability of the provider to acquire images of diagnostic quality. Deficient provider skill in ultrasound image acquisition limits diagnostic accuracy at the point of care1 and the efficacy of tele-ultrasound.2 However, there is no standardized, objective, quantitative method for assessing competence in diagnostic ultrasound. Certification and credentialing continue to be based on duration of training and number of procedures performed.3

Assessing procedural competence of physicians and sonographers is important following initial training and to monitor skill retention in the clinical setting, especially following lapses in practice. This report describes metrics that we developed for measuring psychomotor skill in ultrasound image acquisition using simulation. Both previously reported data and new findings are presented as evidence for the validity of our skill metrics,4,5 and to provide a comprehensive view of how skill metrics can be applied in medical education. The studies presented demonstrate the relationship of the skill metric with other variables reflecting learner characteristics through two comparisons: experts vs. novices, and untrained vs. trained novices. Experts were defined as faculty with expertise in the modality, senior fellows, and ultrasound technicians qualified not only to perform the imaging clinically but also to teach it to residents and fellows; novices in the first comparison lacked these credentials but had completed the curriculum.

The transthoracic echocardiography (TTE) simulator was designed to measure accuracy in image acquisition while enabling training on real patient images. The vascular duplex ultrasound simulator was designed to assess competence in measurement-based diagnosis. This report also details how we applied these metrics for formative assessment of performance and to provide feedback during training.

METHODS

Simulator-Based Competency Testing in TTE

Simulator Design

The trainee manipulates a mock transducer to “scan” a mannequin while a PC displays ultrasound images from a 3D/4D image volume in a 2D view that morphs in real-time appropriately for the transducer’s position and orientation. The transducer is tracked using a magnetic field device (Patriot, Polhemus, Colchester, VT, USA). The simulator’s curriculum followed the traditional sequence, presenting didactic material first followed by hands-on practice using an Image Library of cases generated from the image data of volunteers or patients at the University of Washington (UW) as previously described.6

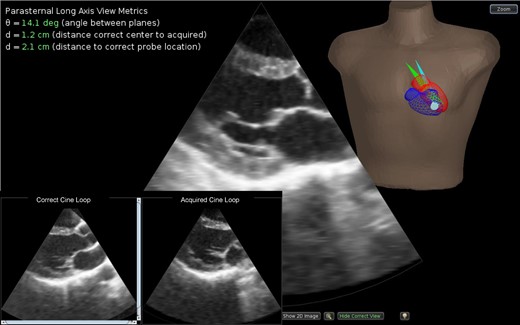

Psychomotor skill is measured as the deviation angle between the plane of a learner-acquired image and the plane of the anatomically correct image for a specified standard view (Fig. 1). The correct view plane is defined geometrically from 3D reconstruction of each case’s heart chambers and associated structures.6 The construct validity of this deviation angle as a skill metric was demonstrated by comparing the performance of experts vs. novices who had trained on the simulator by scanning seven cases.6 Cognitive skill (knowledge) in image interpretation is measured using multiple choice questions that are presented to the trainee after s/he completes image acquisition.
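As an illustration of how such a deviation angle could be computed, the following minimal Python sketch defines each view plane from three landmark points and reports the angle between the two plane normals. The function names, the landmark coordinates, and the choice to report an unfolded angle between oriented normals (0 to 180 degrees) are assumptions made for this example; the simulator's own implementation is not described in this report.

```python
import math

def _sub(a, b):
    return tuple(ai - bi for ai, bi in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _unit(v):
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        raise ValueError("landmarks are collinear or coincident")
    return tuple(c / norm for c in v)

def plane_normal(p1, p2, p3):
    """Unit normal of the plane through three 3D points (hypothetical landmarks)."""
    return _unit(_cross(_sub(p2, p1), _sub(p3, p1)))

def deviation_angle_deg(normal_acquired, normal_correct):
    """Angle in degrees between two unit plane normals."""
    dot = sum(a * b for a, b in zip(normal_acquired, normal_correct))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(dot))

# Made-up coordinates: the correct plane is the xy-plane, and the
# acquired plane is tilted 30 degrees about the x-axis.
tilt = math.radians(30.0)
correct = plane_normal((0, 0, 0), (1, 0, 0), (0, 1, 0))
acquired = plane_normal((0, 0, 0), (1, 0, 0), (0, math.cos(tilt), math.sin(tilt)))
angle = deviation_angle_deg(acquired, correct)
```

In this toy case `angle` comes out at 30 degrees, matching the tilt that was built into the acquired plane.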

Fig. 1. The transthoracic echo simulator interface. For feedback, the location and orientation of the plane of the image acquired by the trainee and the plane of the anatomically correct image are shown in the torso (blue and green sectors, respectively). The visual guidance display shows a 3D reconstruction of the heart of the patient in this study. The correct image is defined using anatomic landmarks carried from analysis of the original patient image data set to the final reconstruction. For example, the parasternal long axis view is defined from the centroid of the mitral annulus, the center of the aortic valve, and the left ventricular apex. The metrics of psychomotor skill are displayed at the upper left. For visual comparison of image quality, the correct and acquired images can be viewed side by side and played as cine loops (inset). During a test, the metrics and dual display are shown only after the trainee has completed the case and submitted his/her results.

Feedback is provided to trainees both visually and quantitatively. As the learner scans the mannequin, the anatomic location and orientation of the image sector are continuously tracked and displayed within the 3D heart reconstruction. Immediately following each image acquisition, the visual guidance display shows the plane of the acquired image alongside the plane of the correct view in the 3D heart for anatomic comparison as well as a cine loop of the acquisition in a dual display side by side with the correct image for visual comparison of image quality. For quantitative feedback, the psychomotor skill metrics are numerically reported at the time of image acquisition.

For self-assessment, the trainee can elect to first acquire the view without assistance, and then turn on visual guidance after acquisition to determine his/her accuracy in acquiring the view. The feedback enables learners to proceed through the curriculum self-paced without requiring constant faculty intervention or oversight.

For formative assessment, cases are presented to the trainee in Test Mode without visual guidance. The trainee must acquire all views and answer image interpretation questions. After s/he “submits” his/her answers, the dual display and psychomotor skill metrics are shown in addition to the correct answers to the cognitive test, so that testing is also a learning experience.

Study Design

In the following studies, the participants, both expert and novice, were recruited at the UW, and the studies were performed at the UW using the simulator developed there.

Formative Assessment of Curriculum Efficacy

We applied the deviation angle for formative assessment by employing this skill metric to gauge the efficacy of simulator-based training in TTE. During testing, the simulator served both as the “patient” and as the source of the metric for comparing performance pre- vs. post-training. The pre-test presented a single pathologic case, and the post-test comprised six cases. The initial study was performed on 22 residents.7 Statistical analysis was performed using the Wilcoxon signed-rank test to compare the deviation angle measured at the pre-test vs. post-training.
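For readers unfamiliar with the paired test named above, the following Python sketch computes the Wilcoxon signed-rank statistics and an exact two-sided p-value by enumerating sign assignments, which is practical only for small samples. The deviation angles are invented for illustration and are not study data; the function names are likewise hypothetical, and a statistics package would normally be used instead.

```python
from itertools import product

def signed_rank(pre, post):
    """Return (W+, W-, ranks) for paired samples; zero differences are dropped."""
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus, ranks

def exact_two_sided_p(ranks, w_observed):
    """Exact p-value: share of sign patterns at least as extreme as observed."""
    total = sum(ranks)
    hits = 0
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if min(w, total - w) <= w_observed:
            hits += 1
    return hits / 2 ** len(ranks)

# Invented deviation angles (degrees) for six hypothetical trainees.
pre_angles = [81, 95, 60, 110, 74, 88]
post_angles = [28, 30, 25, 41, 33, 22]
w_plus, w_minus, ranks = signed_rank(pre_angles, post_angles)
p_value = exact_two_sided_p(ranks, min(w_plus, w_minus))
```

Because every invented trainee improved, all ranks fall on the negative side, and with six pairs the smallest attainable two-sided p-value is 2/64.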

Subsequently, as demand for training increased, data were collected on other provider groups.8,9 The six-case test was considered arduous, requiring over three hours to complete,7 and has now been reduced to three cases after analyses showed a high correlation (r = 0.92) between the results of three-case and six-case tests.

Formative Assessment to Compare Curricula

The second application of the deviation angle was for formative assessment to compare the efficacy and efficiency of two curricula. The first curriculum that we developed for the simulator followed the tradition of didactic-before-hands-on-practice. The second curriculum employed scaffolding and deliberate practice with integrated didactics and experiential learning (IDEL) to break the task of learning cardiac ultrasound into discrete steps. Scaffolding is an instructional technique in which temporary support is provided to assist an early learner in reaching a level of skill that s/he would not easily reach alone. The level of support is gradually decreased as the learner progresses until s/he achieves competence.10,11 In the IDEL curriculum, focused didactic information is immediately followed by targeted simulator practice for each module.10,11 The results of this curriculum were compared to historical controls from the standard curriculum using the Mann–Whitney test.
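The comparison of two independent groups can be sketched as follows. This Python example computes the Mann–Whitney U statistic by direct pair counting and a two-sided p-value from the normal approximation without tie or continuity correction, which is only rough for groups this small. The post-training angles and all names are made up for illustration and are not the study data.

```python
import math

def u_statistic(x, y):
    """Count pairs with x_i > y_j; ties contribute one half."""
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u

def normal_approx_p(u, n1, n2):
    """Two-sided p-value from the large-sample normal approximation to U."""
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    return math.erfc(abs(z) / math.sqrt(2))

# Invented post-training deviation angles (degrees) for two hypothetical groups.
standard_group = [28, 30, 35, 26, 31]
idel_group = [22, 20, 25, 24, 29]
u_standard = u_statistic(standard_group, idel_group)
u_idel = u_statistic(idel_group, standard_group)
p_value = normal_approx_p(u_standard, len(standard_group), len(idel_group))
```

The two U values always sum to the number of pairs, here 25, which gives a quick sanity check on the counting.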

Formative Assessment to Compare Psychomotor Skill Metrics

Transducer tracking permits analysis of the distance between (a) the centers of the acquired and correct views and (b) the transducer locations for acquired and correct views, in addition to deviation angle.12 The change in each metric from pre- to post-training was assessed in 15 novices using the Wilcoxon signed-rank test. The three forms of the skill metric (deviation angle and the two distance metrics) were evaluated using logistic regression to compare their ability to distinguish the pre- vs. post-training status.

Simulator-Based Competency Testing in Vascular Duplex Scanning

Simulator Design

As for TTE, the user manipulates a mock transducer to scan the mannequin, and the PC displays B-mode ultrasound images from a 3D image volume in a 2D view that morphs in real-time appropriately for the transducer’s position and orientation. The transducer is tracked using a magnetic field device (Patriot, Polhemus, Colchester, VT, USA). An Image Library of cases was generated from images of the carotid artery, femoral artery, or upper extremity dialysis access shunt of volunteers or patients at the UW as previously described.13 Duplex scanning combines the ultrasound modalities of B-mode imaging and pulsed Doppler flow detection in a single instrument. For the Doppler simulation, the artery segment of interest is reconstructed in 3D. Computational fluid dynamics (CFD) modeling is performed on the 3D reconstruction to populate the reconstruction with time-varying velocity vectors that define the blood flow at all points within the segment.13

The velocity field is sampled along with the image data to create a spectral waveform display that responds in real-time to the control panel settings and sample volume location selected by the examiner. The CFD velocity vector fields are projected onto the Doppler beam and converted to a color map to generate a simulated color Doppler display for additional realism.
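The projection step can be illustrated with the standard pulsed Doppler relations. The Python sketch below projects a velocity vector onto the beam direction, converts the beam-aligned component to a Doppler shift using f_d = 2 f_0 v / c, applies angle correction, and checks the shift against the Nyquist limit (half the pulse repetition frequency). The function names, the 5 MHz transmit frequency, the 1540 m/s sound speed, and the sample values are assumptions chosen for the example, not parameters of the simulator.

```python
import math

SPEED_OF_SOUND_M_S = 1540.0  # conventional soft-tissue value (assumed)

def beam_component(velocity, beam_direction):
    """Component of a 3D velocity vector (m/s) along the beam direction."""
    norm = math.sqrt(sum(c * c for c in beam_direction))
    return sum(v * b for v, b in zip(velocity, beam_direction)) / norm

def doppler_shift_hz(v_beam, transmit_hz, c=SPEED_OF_SOUND_M_S):
    """Doppler shift for a scatterer moving at v_beam along the beam."""
    return 2.0 * transmit_hz * v_beam / c

def angle_corrected_speed(v_beam, doppler_angle_deg):
    """Flow speed along the vessel axis, given the beam-to-vessel angle."""
    return v_beam / math.cos(math.radians(doppler_angle_deg))

def is_aliased(shift_hz, prf_hz):
    """True if the shift exceeds the Nyquist limit of PRF / 2."""
    return abs(shift_hz) > prf_hz / 2.0

# Made-up case: 1 m/s flow along x, beam at 60 degrees to the vessel axis.
flow = (1.0, 0.0, 0.0)
beam = (math.cos(math.radians(60.0)), math.sin(math.radians(60.0)), 0.0)
v_beam = beam_component(flow, beam)
shift = doppler_shift_hz(v_beam, transmit_hz=5.0e6)
speed = angle_corrected_speed(v_beam, doppler_angle_deg=60.0)
```

With these values the beam sees half of the true speed, and the resulting shift of roughly 3.2 kHz would alias at a pulse repetition frequency of 5 kHz but not at 8 kHz.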

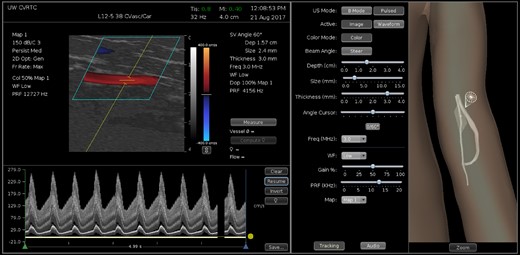

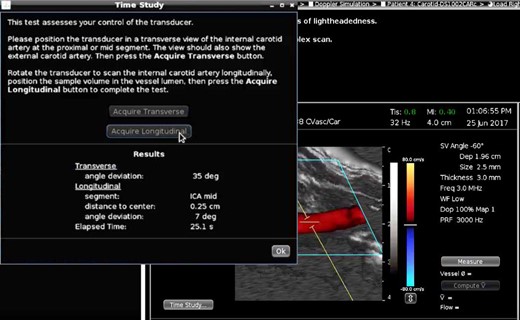

As in the TTE simulator, feedback is provided by tracking and displaying the anatomic location and orientation of the image plane within the 3D vessel reconstruction (Fig. 2). Psychomotor skill is assessed from the trainee’s accuracy in positioning the image plane along the long axis of the vessel segment and the pulsed Doppler sample volume in the center of the lumen. In addition, dexterity is assessed from the time required for the trainee to rotate the transducer from a cross-sectional view of a vessel such as the internal carotid artery (ICA) to a longitudinal view of the ICA, the orientation of the image plane relative to the ICA long axis, and the distance of the sample volume from the center of the ICA lumen (Fig. 3). Cognitive skill is assessed from the trainee’s ability to set the beam steering, select the size and depth of the Doppler sample volume within the B-mode image, specify the Doppler angle relative to the vessel axis, adjust the pulse repetition frequency to avoid aliasing, and obtain measurements such as the peak systolic velocity (PSV) of blood flow based on the spectral waveforms.

Fig. 2. Vascular Doppler simulator interface showing scan of a dialysis access shunt model. The location and orientation of the image plane are indicated in a 3D reconstruction of the shunt that is displayed in the visual guidance display (right panel). The gray sphere indicates the position of the sample volume, and the red and green curves indicate the centerlines of the artery and vein. The middle panel displays parameter settings. Volume flow has been measured from the diameter of the vein and blood flow velocity over four cardiac cycles (left panel). A measurement of peak systolic blood flow velocity is also displayed.

Fig. 3. Dexterity test. The trainee has acquired a transverse view of the internal (ICA) and external carotid arteries, rotated to a longitudinal view of the ICA, and positioned the sample volume in the center of the ICA lumen. The test results report the accuracy and speed of the trainee.

For self-assessment, measurements of PSV, end diastolic velocity, and volume flow can be submitted for immediate comparison with the true values along with a report on whether the vessel segment was correctly identified. The user can erase his/her results and repeat the case as desired. Immediately following each dexterity test, the interface reports the elapsed time, whether the correct vessel was imaged, the deviation angle between the vessel long axis and the image plane, and the distance of the sample volume from the center of the vessel (Fig. 3).
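The two geometric quantities reported after a dexterity test can be sketched as below. This Python example computes the angle between a vessel axis direction and an image plane (zero when the axis lies in the plane) and the distance from a sample volume position to a straight centerline segment. Treating the centerline as a single straight segment, and all names and coordinates, are simplifying assumptions for illustration only.

```python
import math

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _norm(a):
    return math.sqrt(_dot(a, a))

def axis_to_plane_angle_deg(axis, plane_normal):
    """Angle between a line direction and a plane; 0 means the axis lies in the plane."""
    s = abs(_dot(axis, plane_normal)) / (_norm(axis) * _norm(plane_normal))
    return math.degrees(math.asin(min(1.0, s)))

def distance_to_segment(point, seg_start, seg_end):
    """Shortest distance from a point to a straight centerline segment."""
    seg = tuple(e - s for s, e in zip(seg_start, seg_end))
    rel = tuple(p - s for s, p in zip(seg_start, point))
    t = _dot(rel, seg) / _dot(seg, seg)
    t = max(0.0, min(1.0, t))  # clamp to the ends of the segment
    closest = tuple(s + t * d for s, d in zip(seg_start, seg))
    return _norm(tuple(p - c for p, c in zip(point, closest)))

# Made-up geometry: image plane is the xy-plane (normal along z).
in_plane = axis_to_plane_angle_deg((1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
tilted = axis_to_plane_angle_deg((1.0, 0.0, 1.0), (0.0, 0.0, 1.0))
offset = distance_to_segment((0.5, 0.3, 0.4), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
```

A curved vessel would need the distance taken over every segment of a polyline centerline, with the minimum reported.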

For formative assessment, cases are presented in Test mode, without benefit of the visual guidance provided by the 3D vessel reconstruction. Feedback is still provided after each case is “submitted.”

Validation

The validity of the vascular simulator was assessed from the accuracy of blood flow velocity measurements by expert users on the simulator compared to the true velocities in the CFD models used to populate the artery models in the simulator. The deviation was expressed as a percent of the true velocity.
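A minimal Python sketch of this percent-deviation calculation follows. It assumes the deviation is taken as an absolute value before averaging, which the text does not state explicitly, and the velocity values are invented for illustration.

```python
import statistics

def percent_deviation(measured, true_value):
    """Absolute deviation of a measurement as a percent of the true value."""
    return 100.0 * abs(measured - true_value) / true_value

# Invented PSV measurements (cm/s) against a hypothetical true PSV of 100 cm/s.
true_psv = 100.0
measured_psv = [95.0, 110.0, 102.0]
deviations = [percent_deviation(m, true_psv) for m in measured_psv]
mean_deviation = statistics.mean(deviations)
sd_deviation = statistics.stdev(deviations)  # sample standard deviation
```

Summarizing the per-measurement deviations as mean ± standard deviation gives a figure in the same form as the validation results reported for the simulator.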

Subject Participation

All studies had the approval of the Human Subjects Review Committee at the UW, and all participants gave informed consent.

RESULTS

Simulator-Based Competency Testing in TTE

Formative Assessment of Curriculum Efficacy

The traditional curriculum was completed by 22 first-year residents in internal medicine, family medicine, anesthesiology, or surgery who had no prior TTE training. Curriculum efficacy was demonstrated by significant (p < 0.0001) improvements in psychomotor skill (decrease in deviation angle from a median of 81 degrees at the pre-test to 28 degrees after training) and cognitive skill (29% increase, p < 0.0001).7

Formative Assessment to Compare Curricula

The IDEL curriculum was completed by 16 nurse practitioners and resident physicians. In comparison to the previously validated, traditional didactic-before-practice curriculum, the average time to completion decreased from 8.0 ± 2.5 hours to 4.7 ± 1.9 hours (p < 0.0001). There was no difference in post-training cognitive outcome between the curricula as measured by a simulator post-test.14 However, post-training psychomotor skill was higher, as shown by a lower median deviation angle, in residents trained using the IDEL curriculum (Table I).

| | Psychomotor skill*, °: pre-training | Psychomotor skill*, °: post-training | Cognitive skill*, %: pre-training | Cognitive skill*, %: post-training |
|---|---|---|---|---|
| Standard curriculum | 81 (54) | 28 (11) | 45 (25) | 73 (15) |
| N | 22 | 22 | 22 | 22 |
| IDEL curriculum | 78 (65) | 22 (6) | 45 (20) | 67 (15) |
| N | 6 | 16 | 6 | 16 |
| p** | 0.365 | 0.015 | 0.935 | 0.129 |

*Values are presented as median (interquartile range).

**Mann–Whitney test; threshold for significance p < 0.05.

IDEL, integrated didactic and experiential learning; N, number.

Formative Assessment to Compare Psychomotor Skill Metrics

We analyzed the test results of 15 residents who were echo novices when they began training on the simulator. Deviation angle improved from pre- to post-training test, but there was no significant change in either the distance between the centers of the acquired and correct views, or the distance between the transducer locations for acquired and correct views (Table II). Deviation angle was the only one of the three metrics that was predictive of training status (pre-test vs. post-training).

| | Deviation angle, °* | Plane distance, cm* | Transducer distance, cm* |
|---|---|---|---|
| Pre-training | 71 (60) | 3.0 (0.7) | 4.3 (2.4) |
| Post-training | 23 (8) | 2.6 (0.7) | 2.7 (1.5) |
| p** | 0.003 | 0.131 | 0.062 |

*Values are presented as median (interquartile range).

**Pre- vs. post-training test results were compared using the Wilcoxon signed-rank test; threshold for significance p < 0.05.

Simulator-Based Competency Testing in Vascular Duplex Scanning

Validation

Initial validation was performed on carotid artery models. Three expert examiners made a total of 36 carotid artery PSV measurements on two simulated cases. The PSV measured by the examiners deviated from true PSV by 8 ± 5% (N = 36). The deviation in PSV did not differ significantly between artery segments, normal and stenotic arteries, or examiners.13 A second validation was performed on dialysis access shunt models. Three expert examiners made 43 PSV measurements on two cases. The mean deviation from the actual PSV was 7.7 ± 6.1% and was similar in arteries (7.1 ± 5.4%, N = 24) and veins (8.5 ± 6.9%, N = 19), and between operators (p = NS by ANOVA).15

DISCUSSION

The need to focus on competency-based medical education (CBME) was voiced in 1978 by McGaghie in a World Health Organization report, “The intended output of a competency-based programme is a health professional who can practice medicine at a defined level of proficiency, in accord with local conditions, to meet local needs.”16 For both competence, which refers to a minimally acceptable requirement such as correctly performing a procedure once, and proficiency, which refers to a higher level of mastery such as the ability to consistently perform a procedure correctly two or more times in a row, implementation of CBME requires tools for assessment.

Our research has focused on the development and validation of tools for assessing psychomotor skill in diagnostic ultrasound. Psychomotor skill in performing medical procedures has always been difficult to assess in a manner that is objective and reproducible between instructors, and cannot be measured by written and oral examinations, unlike knowledge, judgment, and process thinking.17,18 Instead, certification has traditionally been, and still is, based on faculty observation of performance. In the ACGME’s Suggested Best Methods for Competency Evaluation: Evaluation Methods for Performance of Medical Procedures, both “Checklist” and “Simulations & Models” have a rating of 1: “most desirable,” “360 Global Rating” is rated 2: “next best method,” whereas both “Global Rating” and “Procedure or Case Logs” are rated 3: “potentially applicable.”19 For example, the Objective Structured Assessment of Technical Skills (OSATS) uses a checklist detailing the component skills.20 However, each task requires a separate checklist,21 checklists may require multiple observers for reliability,22 and the relative power of checklist vs. global rating for discriminating between trainee skill levels is controversial.21,23–25 All of these assessment methods rely on subjective opinions and none are standardized, with the notable exception of simulation.

The results of the studies presented demonstrate that psychomotor skill in diagnostic cardiac and vascular ultrasound can be measured objectively using simulation-based assessment tools, starting from the first step in competently performing a diagnostic ultrasound exam – acquiring images of adequate quality – to interpretation and reporting. The metrics that we have developed and validated have value for medical education on multiple levels.

Individual learners are the first level. They benefit from receiving immediate expert feedback throughout the curriculum without requiring constant faculty oversight. The feedback is presented both visually and quantitatively to connect with different learning styles. As a mentor might, it informs learners how well they are positioning the transducer, guides them to optimize the view they are attempting to acquire, and helps them reach proficiency. The deviation angle has proven to be a powerful tool for teaching TTE and is now implemented for teaching transesophageal echo at the UW. The feedback implemented on our vascular duplex ultrasound simulator is more advanced because diagnosis is not made solely by interpreting the acquired images, but also from measurements made by the learner from acquired images. In both simulators, feedback is provided on both the psychomotor and cognitive skill components. Feedback is important because it leads to more effective learning and promotes retention.26,27 Indeed, frequent feedback is recommended as a key component of CBME.16 The metrics described can also be applied to other organs and vessels.28 An unintended but welcome consequence was that the numeric results can be motivational because trainees ask how they compare with others.

The faculty are the second level to benefit. Providing basic training on the mannequin allows faculty to reserve their time for advanced training. Program directors benefit by having access to standardized and quantitative metrics of trainee progress. Seeing that someone is not progressing may also be useful: skill metrics have shown that some trainees may never achieve competence.29 On-the-job competence assessment could also be used to assist in hiring, assess skill maintenance, and determine the need for retraining or refreshment following deployment, leave, and/or periods of reduced patient volume.

On the program level, the skill metrics that we have developed have shown their value for several types of formative assessments. Perhaps the most important is verifying that a curriculum is effective and beneficial to learners. Having skill metrics also enables comparison of versions of the curriculum and even versions of the metric itself from the perspective of the educational outcome in an evidence-based manner.

Simulation is beginning to be employed for summative assessment or certification, credentialing, and accreditation. Two specialty boards, anesthesiology and surgery, require the use of simulation for primary certification.30 There is widespread interest in applying simulation to assess skill, if judged from the size of a recent review – 417 studies.31 Although the authors wrote, “validity evidence for simulation-based assessments is sparse,” they reported that “28 studies evaluated associations between simulator-based performance and performance with real patients…Without exception, these studies showed that higher simulator scores were associated with higher performance in clinical practice”. We have had similar results, finding that providers with higher patient volume were able to acquire images on the simulator with lower deviation angle.32

CONCLUSION

This report describes objective and quantitative simulator-based metrics of psychomotor as well as cognitive skill in echocardiography and vascular duplex ultrasound. These skill metrics give immediate feedback to the individual learner and enable training without direct faculty oversight. On the program level, the skill metrics are useful for formative assessments; applications include evaluation of the efficacy of a curriculum and comparison of outcomes from two different curricula. Competence in diagnostic ultrasound of organs and vessels can be measured in an automated and standardized manner using simulation for formative and summative assessment.

Previous Presentations

Presented as a poster at the 2017 Military Health System Research Symposium.

Funding

This study was supported by grants from the Edward J. Stemmler Medical Education Research Fund (0910-050), the Wallace H. Coulter Foundation, and the National Institute of Biomedical Imaging and Bioengineering and the National Institute of Environmental Health Sciences (R41 and R42 EB018124). This supplement was sponsored by the Office of the Secretary of Defense for Health Affairs.