
Steven Furnell, Usable Cybersecurity: a Contradiction in Terms?, Interacting with Computers, Volume 36, Issue 1, 1 January 2024, Pages 3–15, https://doi.org/10.1093/iwc/iwad035


Abstract

Encounters and interactions with cybersecurity are now regular and routine experiences for information technology users across a variety of devices, systems and services. Unfortunately, however, despite long-term recognition of the importance of usability in the technology context, the user experience of cybersecurity and privacy is by no means guaranteed to address this criterion. This paper presents an outline of usability issues and challenges in the cybersecurity context, with examples of how it has (or indeed has not) evolved in some established contexts (looking in particular at web browsing and user authentication), as well as consideration of the extent to which any better attention is apparent within more recent and emerging technology contexts (considering the presentation of related features in scenarios including app stores and smart devices). Based on the evidence, cybersecurity is clearly yet to reach a stage where its mention would naturally imply usability, but at the same time the two concepts do not have to represent a contradiction in terms. The resulting requirement is for the increasing recognition of the issue to translate into a greater level of resulting attention and action.

RESEARCH HIGHLIGHTS

Highlights the challenges of achieving usability in the cyber security context.

Examines the evolution of usable cyber security features in common end-user contexts.

Assesses the additional usability issues that have emerged in more recent technologies and online services.

1. INTRODUCTION

One of the fundamental issues in delivering cybersecurity is for it to be workable and acceptable for the people that it is intended to serve and support. There are many instances of humans being characterized as the ‘weakest link’ in security, and the human element has been suggested as a contributing factor in 82% of data breaches (Verizon, 2022). However, although this may be the case, it would be misleading to assume that people are consequently to blame for the security issues. In many cases, although humans may have been involved in a security incident, the fault may be with technologies or processes they were expected to use. Providing security controls and safeguards, whether they be dedicated tools or features within wider systems and applications, is only effective if they get used appropriately. A safeguard that is ignored, disabled or misconfigured is not providing the intended protection. Whether any of these negatives occurs will often depend on how well the safeguard is received by its target users. If it proves inconvenient or disruptive, then it could well find itself being ignored or disabled. Similarly, if it is overly complicated, then it could again be dismissed, or it could end up being used wrongly (i.e. leading users to incorrect decisions and a false sense of security rather than actual protection). In short, and to quote from a 2017 keynote speech delivered by a speaker from the UK’s National Cyber Security Centre, ‘The way to make security that works is to make security that works for people’ (NCSC, 2017).

In recognizing the importance of usability, we need to start with the realization that security is not the prime reason that people are using their technology. As such, if security aspects are apparent to people at all, then it is arguably already some level of distraction from what they are really trying to do. If the experience with it then proves to be anything but straightforward, then it has the potential to be regarded as unwelcome and inconvenient—even though it is ultimately meant to be serving a positive purpose for the user, their organization, their family or whoever else they might be inclined to want to protect alongside themselves.

We also need to appreciate that secure behaviour is not the starting point for many users. In many cases, it is something that takes up their time and gets in the way of what they are really trying to do. In this context, poor usability can contribute to an overall sense of negativity in users’ relationship with security and increase fatigue (Furnell and Thomson, 2009; Stanton et al., 2016). This in turn can ultimately affect how security is perceived. On the one hand, what we want is for it to be seen as a positive, as the user’s ‘friend’. In this sense, it can be seen as a guardian angel, helping to block threats and safeguard data, and as a result it provides reassurance and acts as an enabler of activity. However, the manner in which security is encountered, presented and manifested can often prompt a more negative perception, with security potentially being seen more as the ‘enemy’ of the user, insofar as it gets in the way of what they are actually trying to do, causes tasks to take longer and sometimes even prevents them from being achieved altogether (i.e. a ‘security says no’ scenario). In this situation, it delivers the exact opposite of the intended effect: rather than being reassured and enabled, users may become worried and find that security inhibits their activity.

The notion of security ‘getting in the way’ is important in relation to the earlier point about the user’s primary task—i.e. we can assume that on many occasions when security is encountered, users are actually trying to do something else. As such, if the security experience proves to be unclear, obstructive or excessively time-consuming, it is not going to be judged to be a good experience and moreover has the potential to affect the user’s perspective about engaging with security on future occasions. In some scenarios, it will motivate the behaviours that work against security objectives, with protection being ignored, unused or disabled. In other cases, users will not have the option to work around security and are instead forced to coexist with technologies and controls that they dislike, increasing the potential to go from fatigue to burnout (Cong Pham et al., 2019). In practice, neither situation is desirable—we do not want to offer users a choice to ignore, disable or otherwise avoid security, but we equally do not want them to feel that it is working against them. What is clearly needed is security that is user-compatible, and it is necessary to recognize that this does not typically happen by chance or by default. Indeed, if usable security is to be achieved, it needs to be done by design and followed through into the implementation. It needs to consider the nature of the users, the devices, the tasks and the context in which usage will be occurring, alongside the type and level of protection that needs to be achieved.

The paper title basically poses the question of whether usable security is something we can expect or not—do the notions of security and usability fit together naturally, or are they opposing issues in practice? This discussion begins by identifying the different dimensions that contribute toward usable security, and briefly reflects on its recognition as a target issue. The focus then moves toward examining the extent to which this target has been achieved, looking at related examples from the past and the present day. What these will collectively show is that although the situation may have improved in terms of usability being recognized, this by no means guarantees that it is sufficiently considered and addressed in all contexts. Indeed, as users encounter security issues, mechanisms and choices across a broader range of contexts, the issue of making their experience usable needs to be recognized and addressed by a wider range of developers and providers.

2. DIMENSIONS OF USABLE SECURITY

In considering the issue of usable security, we need to start with an appreciation of what usability includes in the first place. International Organization for Standardization (ISO) 9241-11:2018 defines it as: ‘The effectiveness, efficiency and satisfaction with which specified users achieve specified goals in particular environments’ (ISO, 2018). The criteria by which usability is assessed are:

effectiveness: the accuracy and completeness with which specified users can achieve specified goals in particular environments;

efficiency: the resources expended in relation to the accuracy and completeness of the goals achieved;

satisfaction: the comfort and acceptability of the work system to its users and other people affected by its use.

Each of these is, of course, a rather broad heading, and none has been specified with security particularly in mind. Turning this toward a security context, Caputo et al. directly adopt the ISO vocabulary in their definition of ‘usable security’, characterizing it as ‘delivering the required levels of security and also user effectiveness, efficiency and satisfaction’ (Caputo et al., 2016), so basically highlighting that the three factors need to be maintained alongside whatever security is provided. Taking a different perspective, Zurko and Simon define ‘user-centered security’ as ‘security models, mechanisms, systems and software that have usability as a primary motivation or goal’ (Zurko and Simon, 1996). This is clearly more explicit about the aspects of security that may be encompassed, but does not specifically explain what the associated usability would look like. It is also arguably questionable whether usability needs to be the primary goal because this could imply that security then becomes secondary to the usability. To think it through a little further and to consider what usability would include from a security-specific perspective, some related requirements are summarized by the acronym CLUE (proposed here as a means of making the requirements more memorable):

Convenient: It is important to maintain balance—security should not be so visible that it becomes intrusive or impedes performance because users are likely to disable features that interfere with legitimate use.

Locatable: We need to be able to find the features we need, and if users have to spend too long looking, they may give up and remain unprotected.

Understandable: Users should be able to determine and select the protection they require, and the technology should not make unrealistic assumptions about their previous knowledge.

Evident: Users should be able to determine whether protection is being applied and to what level. Appropriate status indicators and warnings will help to remind users if safeguards are not enabled.

As can be seen, these have relevance across the set of ISO criteria. For example, a lack of convenience will affect efficiency and satisfaction and may also impact the effectiveness if the user feels unable to complete a desired action or disables protection to get it out of the way. Building the picture a bit further, we can consider a few of the issues that can be encountered in practice that users may associate with different dimensions of usability:

Confusing—Users can find themselves frustrated by facing things that they do not understand. This may be due to use of technical terminology that they do not understand or to a lack of sufficient information to explain what is going on or what the user is required to do in response. Users may consequently be left with a sense of ambiguity and a lack of confidence over the correctness of the action/decision being taken.

Distracting—This reflects situations when security gets in the way of what the user is actually trying to do. As a consequence, it can feel inconvenient and intrusive and may seem to be acting against the user’s interests. It is most commonly illustrated by things such as pop-ups, dialog boxes and the like, which attempt to draw attention to an issue that the user needs to be aware of.

Hidden—This relates to the scenario where the user knows (or at least believes) that security features are available but they cannot easily establish where to find them.

Inconsistent—The situation in which users find that the security lessons they learned in one context (application, device, etc.) cannot be directly or easily applied in others. A good example here would be password practice, where the rules enforced in one system are not the same as those applied in others.

Insufficient—This represents the situation where users want or expect to be able to do something, or for particular features to be available, but then find that related controls or options are unavailable. This can often be the case when moving from desktop operating systems to other platforms and may sometimes be for quite legitimate reasons, insofar as some of the issues that may demand security provision on a desktop system may not exist on mobile or smart devices. Nonetheless, it can leave users with the sense of having tried to engage with security, but then been prevented from doing so.

Time-consuming—Fundamentally, this concerns how long it takes to get the task done, including (where appropriate) the time taken to locate and then use the features concerned. It can also include approaches that are considered to impose a performance overhead, which can in turn relate to explicit interactions, as well as background activities (e.g. the impression that the system is slowed down by backup or malware scanning activities, even if the user is not required to give direct attention during the process).

Relating these back to the earlier ISO list, Table 1 indicates the criteria that are most likely to be affected by each of the issues at hand. A notable point here is that everything is considered to have a potential impact on the user’s satisfaction—whether they end up appropriately protected or not as a result, the user experience is the aspect that is almost guaranteed to take a hit.

| Issue | Effectiveness | Efficiency | Satisfaction |
|---|---|---|---|
| Confusing/lacking clarity/unclear |  |  |  |
| Distracting/inconvenient/intrusive |  |  |  |
| Hidden/concealed |  |  |  |
| Inconsistent/misleading |  |  |  |
| Insufficient/lacking control |  |  |  |
| Time-consuming/burdensome |  |  |  |


It is also worth noting that usability issues can relate to both the communication and control aspects of security. It is not just about how easily users can find and use security settings, but also about how clearly and effectively they receive security-related messages and notifications (which, depending on their effectiveness, may serve to affect users’ behaviour and usage in ways that reduce or amplify risk).

Another important realization is that usability is ultimately judged from a subjective perspective for each individual because it also relates to the capability of the user (e.g. information technology [IT] novices versus those with advanced capabilities). Those without a clear understanding of the technology, or with a limited level of IT literacy, will likely struggle with some aspects of security by default because these tend to be presented in terms of technical language and jargon, or at least assume that users are conversant enough with other aspects of how their system/device works to make their own connections about where and how the security fits in. As such, novices are most likely to encounter problems from the clarity and understanding perspective. By contrast, the users at the advanced end of the scale are likely to become frustrated with aspects of security that they cannot control in a manner that suits their level of understanding and way of working. Here, it is more likely to be the security elements that are time-consuming and lacking control that have the primary impacts on satisfaction. For the remaining users in the middle ground, dissatisfaction is likely to come from the combined frustrations of security getting in the way when they do not want it, and then being difficult to find and use when they do.

The appreciation of the need for usable security sits within the broader context of a wider recognition of human aspects of security. Looking for example at the treatment of the topic within the Cyber Security Body of Knowledge (CyBOK), we find a specific Knowledge Area dedicated to Human Factors (Sasse and Rashid, 2021), within which usable security is the first issue explored alongside several others:

Usable security

Human Error

Cybersecurity awareness and education

Positive Security

Stakeholder Engagement

We can see that these issues are linked, and that all of those that follow can be seen to have a relationship to usability. For example, usable technologies improve the chances of reduced human error, they make it easier for users to put awareness and education into action, they help to promote security in a more positive manner and enhance the chances of getting people to engage with it. In this sense, usability is the key to achieving good security behaviour and can represent the ultimate hurdle to overcome in enabling people to play their part (Furnell, 2010).

3. EXAMINING USABLE SECURITY EVOLUTION

As is clear from the age of the earlier definitions, usable security is not a new idea. It has been on the radar for some time now and often represents a point of intersection with research and practice from the human–computer interaction (HCI) community. In some cases, this has meant inheriting established (or at least recognized) HCI principles and ensuring that they get applied to security techniques and tools, and a good example would be to ensure that user interfaces and interactions follow classic principles such as those proposed by Shneiderman (Shneiderman, 1998). In other cases, it involves a more fundamental recognition that some approaches to security are less user-compatible than others, and looking for ways to significantly redesign, reengineer or even replace them with approaches that will work more effectively.

The usable security domain has seen its own share of landmark contributions, and two that tend to get widely cited are Whitten and Tygar’s 1999 paper ‘Why Johnny can’t encrypt’ (Whitten and Tygar, 1999) and Adams and Sasse’s paper from the same year ‘Users are not the enemy’ (Adams and Sasse, 1999). The first particularly served to spotlight difficulties caused by the presentation of security at the interface level, with technical terminology and assumptions that proved confusing for many users looking to send encrypted email messages. The second focused on the problem of landing users with a fundamentally challenging security technique—the password—the very nature of which makes it easy to use badly, but hard to use well.

Almost a quarter of a century on from Whitten and Tygar’s work, it is worth reflecting on the priorities they defined for achieving usable security. Specifically, they suggested that software is usable if those expected to use it:

are reliably made aware of the security tasks they need to perform;

are able to figure out how to successfully perform those tasks;

don’t make dangerous errors; and

are sufficiently comfortable with the interface to continue using it.

More than 2 decades later, these points clearly remain valid. However, it is interesting to consider the extent to which related problems are still in evidence. Certainly, the threat landscape has broadened in the intervening years, as has the range of devices and services through which they can be encountered. Being cybersecurity literate and maintaining cyber hygiene is therefore more challenging today than it was at the end of the 1990s. Users are faced with related issues across a wider range of technologies, and so need to be able to manage their security in a range of contexts. Of course, their ability to do this depends not only on their own knowledge and willingness, but also the usability of the security methods that have been served up to support them. However, as we shall see across the examples in the next few sections, the situation here can remain rather varied.

3.1. Usable security in web browsing

If we think about common and long-established user activities, then a standard one across multiple devices is web browsing. Unfortunately, the very fact of going online means that users are exposed to potential risk, and so the associated software—the web browser—will typically be offering security settings to protect them.

Rather than surveying the usability of existing platforms, the browser provides an opportunity to look at the ‘then and now’ context, and the extent to which the usability of security can remain a problem issue. To this end, we can consider the ‘Security Options’ interface from Microsoft’s Internet Explorer web browser. The author began using it as a reference point in 2004, when the current version was IE6 (introduced in 2001). The analysis at the time highlighted various factors that could frustrate usability, particularly from the perspective of interface-level clarity and understandability. However, thanks to some key aspects remaining basically the same in later versions, the example remained valid until the final version of the browser, IE11 (introduced in 2013 but still supported by Microsoft all the way through to 2022) (Furnell, 2016).

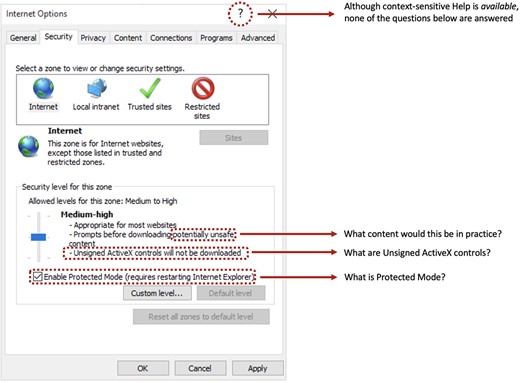

Figure 1. The main Security Options interface from Microsoft Internet Explorer 11.

Figure 1 illustrates some of the issues that were still apparent in IE11 and the resulting questions that might occur to the interested user within just the first interface. The image is depicting the default security setting for the ‘Internet’ zone, with the security level set to what is termed ‘Medium-high’ (and as shown in the image, the associated slider only has two other positions—namely ‘High’ and ‘Medium’—and so, perhaps reassuringly, the user cannot set ‘Low’ security for their Internet browsing). Despite the ostensibly simple slider control being used to set the security level required, the interface poses several usability challenges. Specifically, we see the user being presented with information that they may not understand or may misinterpret. It is worth looking at each of the highlighted questions in turn:

Part of the description of the ‘Medium-high’ security level indicates that the user will be prompted before downloading ‘potentially unsafe’ content. Although this may initially seem reasonable enough, it raises the question of what type(s) of content this relates to. For example, does it mean that the user is protected from malware (which most people would reasonably consider ‘unsafe’), or phishing websites (which would certainly be ‘unsafe’ if you visited them inadvertently), or pornographic material (which many would consider ‘unsafe’, particularly if downloaded in workplace or family contexts)? In fact, the setting would guarantee to protect against none of these (although certain aspects of malicious code behaviour might be prevented, it would certainly not safeguard against the threat of malware in a broader sense). However, the problem is that this is not made apparent and the user is basically left to judge the meaning of the setting based on their own subjective interpretation of what constitutes ‘potentially unsafe content’.

The final part of the level descriptor indicates that ‘Unsigned ActiveX controls will not be downloaded’, and so to understand what this means, the user needs to be aware of what an ActiveX control is and what it means for one to be unsigned. To answer the first question, ActiveX was a Microsoft standard for software components, and the controls were ‘small apps that allow websites to provide content such as videos and games’ (Microsoft, 2022a). However, given that the same definition goes on to explain that such apps may also include unwanted content, users would be advised to be sure of their source of any controls they download and to avoid those that have not been digitally signed to prove their origin. So, this is why it would be desirable for unsigned controls not to be downloaded, but the interface does not explain this further and even amongst a technically literate audience, there are typically few that can explain this one.

Departing from the slider, there is the checkbox to ‘Enable Protected Mode’ (which is selected in the image, but was normally disabled by default). However, this raises the question of what the mode is, and also why would someone not want to enable it if it is providing protection? To again quote from Microsoft, ‘Enhanced Protected Mode isolates untrusted web content in a restricted environment that’s known as an AppContainer. This process limits how much access malware, spyware or other potentially harmful code has to your system’ (Microsoft, 2022b). However, it only works for software that has been written to be compatible with the mode, and so by enabling it, users may find that other content does not run correctly.

Clearly, all of the settings make somewhat more sense when more fully explained, and it would of course be impractical for all this detail to have been provided as part of the interface. However, having some links or pop-ups to provide clarification (e.g. in response to users clicking on or hovering over the keywords) would have been a reasonable compromise. Similarly, further details could have been provided as part of more general Help for the interface. However, although a link to related Help seems to be available (as highlighted at the top of the image), it does not serve to answer any of the questions just discussed, and instead explains aspects such as where to find the security settings (which the user presumably knows already, having invoked the help from this interface) and how to use the slider.

As an aside, it is worth noting that although this critique has only looked at the top-level interface, there was far more for the user to face if they were to select the ‘Custom level’ setting. This would yield a further window with 50+ settings that could be individually enabled or disabled, but again without any of them being properly explained as part of the information accessible from within the interface. Moreover, when making any changes and then returning to the main interface, the slider would be replaced by a message stating that security was then set to ‘Custom’, with no indication of how this was positioned in relation to the Medium/Medium-high/High settings that might otherwise be used (i.e. the user is told they have a custom level of security, but is no longer clear on whether this is similar to, better or worse than the default).
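To make the 'Custom' problem concrete, the following is a minimal sketch of how an interface could report where a custom configuration sits relative to a named preset, rather than simply labelling it 'Custom'. It is not how Internet Explorer worked: the setting names, the 0 to 2 strictness scores and the `describe_custom` function are all hypothetical and chosen purely for illustration.

```python
from typing import Dict

# Hypothetical settings, each scored from 0 (most permissive) to 2 (most
# restrictive). Names and values are illustrative assumptions, not the
# actual Internet Explorer settings.
PRESETS: Dict[str, Dict[str, int]] = {
    "Medium": {"unsigned_controls": 1, "file_download_prompt": 0, "scripting": 0},
    "Medium-high": {"unsigned_controls": 2, "file_download_prompt": 1, "scripting": 0},
    "High": {"unsigned_controls": 2, "file_download_prompt": 2, "scripting": 2},
}


def describe_custom(custom: Dict[str, int], baseline_name: str = "Medium-high") -> str:
    """Summarize how a custom configuration differs from a named preset."""
    baseline = PRESETS[baseline_name]
    stricter = sorted(k for k in baseline if custom[k] > baseline[k])
    weaker = sorted(k for k in baseline if custom[k] < baseline[k])
    if not stricter and not weaker:
        return f"Same as {baseline_name}"
    parts = []
    if stricter:
        parts.append(f"stricter than {baseline_name} for: {', '.join(stricter)}")
    if weaker:
        parts.append(f"weaker than {baseline_name} for: {', '.join(weaker)}")
    return "Custom (" + "; ".join(parts) + ")"


if __name__ == "__main__":
    print(describe_custom(
        {"unsigned_controls": 2, "file_download_prompt": 0, "scripting": 2}
    ))
```

Even a summary of this kind would address the 'Evident' requirement, since the user could see whether their changes had strengthened or weakened protection relative to the default.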

The positive end to this story is that IE is no longer the browser that Microsoft expects users to use. In 2015, alongside the release of Windows 10, it released a complete replacement in the form of Microsoft Edge. This essentially solved the usable security problems from IE by removing many of the features that required the security choices to be there in the first place. As a result, rather than the potentially ambiguous and confusing interface from Figure 1, supplemented by its 50+ custom settings, the initial release of Edge offered a grand total of just 10 security and privacy settings, giving the user much less to understand and potentially misconfigure. Although this was clearly a good outcome, the fact that it took a ground-up redesign of the browser to enable the security to become more usable is a notable point. It illustrates the difficulty of retrofitting usability onto something that is inherently more complex by design, and conversely the potential benefit of considering the user perspective at an early stage.

3.2. Usable user authentication

Although the example of web browsing represents a common end-user activity in which security may be encountered, by far the most common security-specific activity is user authentication. This is consequently an area that is significantly important from the usability perspective. However, although it is almost certainly the aspect of security that most people see most often, the usability has proven to be extremely variable.

The traditional and still most common form of authentication is of course the password, and the approach has been long-criticized from the usability perspective. By default, passwords are difficult to choose, use and manage for many users, and the problem is amplified in modern-day use by the sheer number of systems, sites and devices on which we are expected to use them. Over time, various elements of automation have been introduced to improve the usability aspect: password management tools can create and store strong passwords and can autofill password fields rather than requiring users to type them in manually. However, these tools are not uniformly available in all contexts, and there are still many situations in which users will find themselves being asked to deal with passwords unaided. In these cases, we continue to see that users essentially get it wrong, choosing weak passwords that years of good practice guidance would have steered them away from. Indeed, year on year we see reports of the same poor password choices (e.g. password, 123456) recurring time and again (NordPass, 2022). These are rightly criticized as being predictable and therefore putting the associated accounts at greater risk, but at the same time are likely being chosen because users are seeking their own usability workarounds. The weak choices make it easier for them to choose and remember the passwords (i.e. they do not have to put tangible effort into either task), and so in terms of the ISO usability criteria, things are working out well from the user’s perspective. The problem, of course, is that the very thing the password is there to ensure (i.e. security) is being weakened in the process, which is a good example of how usability and security are often seen to be in direct conflict.

At the same time, we can note that the reason these common passwords persist is because users continue to be permitted to choose them and because many websites provide no guidance or enforcement to the contrary (Furnell, 2022). Moreover, where guidance or enforcement of password rules does occur, there is a significant lack of consistency in what is advised and/or permitted, such that the lessons learned in one site or system are by no means guaranteed to apply in others. From the users’ perspective, this can again contribute to a sense of confusion and complicates the usability of an approach that they might otherwise learn to use more effectively.
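As an illustration of the kind of guidance and enforcement that many sites omit, the sketch below checks a candidate password against a blocklist and returns feedback the user can act on. It is a minimal example under stated assumptions: the blocklist holds only the two examples named above (a real deployment would load a far larger list), and the `password_feedback` function and the 12-character minimum are hypothetical choices rather than values taken from any cited guidance.

```python
from typing import List

# Illustrative blocklist containing only the two examples named in the text.
# A real service would load a much larger list of known common passwords.
COMMON_PASSWORDS = {"password", "123456"}


def password_feedback(candidate: str, min_length: int = 12) -> List[str]:
    """Return a list of problems with the candidate; an empty list means accepted.

    min_length is an assumed policy value used purely for illustration.
    """
    problems: List[str] = []
    if candidate.lower() in COMMON_PASSWORDS:
        problems.append(
            "This password is among the most commonly used; "
            "choose something less predictable."
        )
    if len(candidate) < min_length:
        problems.append(f"Use at least {min_length} characters.")
    return problems


if __name__ == "__main__":
    for attempt in ("123456", "correct horse battery staple"):
        print(attempt, "->", password_feedback(attempt) or "accepted")
```

Returning explanatory messages rather than a bare rejection reflects the 'Understandable' requirement: the user is told what is wrong and how to fix it at the point of choosing.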

Of course, passwords are recognized as a significant route of compromise, and even without the risk of explicitly poor choices, they are still vulnerable to exposure through threats such as phishing, keylogging, and ill-advised sharing between users. As such, the current advice is to move beyond passwords or strengthen them via Multi-Factor Authentication (MFA). However, this potentially introduces usability challenges of a different type, which can potentially limit uptake unless it is mandated. For example, Microsoft have reported that in August 2021, they were seeing 50 million password attacks per day on Azure Active Directory. At the same time, however, ‘only 20% of users and 30% of global admins are using strong authentications such as multi-factor authentication (MFA)’ (Microsoft, 2022c). Although the reason for the lack of engagement is not suggested, it would not seem unreasonable to assume that usability issues (either real or perceived) are part of the issue here. It is also interesting to observe that global administrator accounts were being permitted to exist without stronger authentication being a requirement. The fact that they were given a choice in the matter is again likely to be linked to the usability/tolerability angle and illustrates that these issues remain relevant even for the more technical/advanced user community.

| Approach | Usability criteria affected |  |  |
|---|---|---|---|
|  | Effectiveness | Efficiency | Satisfaction |
| Basic password manually typed | ? | ? | ? |
| Basic password automatically entered |  |  |  |
| MFA at each login | ? | ? |  |
| MFA periodically and on new devices |  |  |  |

Indeed, MFA itself requires additional effort: it needs to be set up in the first instance (from both the system administration perspective and by the individual users), and it then changes the nature of login on an ongoing basis. This in turn can lead to a negative experience for users, particularly if they find themselves confronted with the resulting ‘confirmation requests’ across multiple platforms and applications. This leads to what has been termed ‘MFA fatigue’ or indeed MFAtigue, which has in turn been defined as ‘Extreme fatigue or frustration caused by years of using legacy Multi-Factor Authentication, amplified by the extra hardware, extra steps, and extra time of MFA’ (HYPR, 2020). This fatigue has itself become an exploitation route for attackers (Constantin, 2022). In what are variously termed ‘MFA fatigue attacks’ and ‘prompt bombing’, the attacker, having already compromised the standard login credentials (e.g. via phishing or some other means), uses them to generate repeated sign-in confirmation messages from the user’s MFA application. The intended result is that the user gets sufficiently frustrated or annoyed with the resulting notifications that they simply approve one to get them to stop (thereby giving the attacker the access they were seeking).

In practice, the potential for MFA fatigue very much depends on how it has been implemented. If users are required to use it every time they log in, then it is very likely to be seen as a noticeable overhead that was not there before. If, however, it is used in a more judicious manner (e.g. on the first access via any new device, and then periodically thereafter), then it is likely to be viewed as a more tolerable approach.
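The difference between the two implementation styles can be illustrated with a minimal sketch of the decision a login service might make. The names used here (`LoginAttempt`, `needs_mfa`) and the 30-day re-verification interval are assumptions chosen purely for illustration; they are not drawn from any particular product or from the sources cited above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Assumed re-verification interval; a real deployment would choose its own.
REVERIFY_AFTER = timedelta(days=30)


@dataclass
class LoginAttempt:
    device_known: bool               # has this device completed MFA before?
    last_mfa_at: Optional[datetime]  # last successful MFA on this device, if any
    now: datetime                    # time of the current login attempt


def needs_mfa(attempt: LoginAttempt, every_login: bool = False) -> bool:
    """Return True if this login should trigger an MFA challenge."""
    if every_login:
        # 'MFA at each login': the user is always challenged.
        return True
    if not attempt.device_known or attempt.last_mfa_at is None:
        # First access via a new device is always challenged.
        return True
    # Otherwise challenge only periodically.
    return attempt.now - attempt.last_mfa_at >= REVERIFY_AFTER
```

Under the periodic policy, a user returning on a familiar device within the interval sees no additional prompt, which is the property that makes the approach more tolerable from the usability perspective.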

Table 2 compares the potential differences between the approaches with reference to the ISO 9241-11:2018 usability criteria introduced earlier. If we think about passwords used in the traditional way, then the level of perceived usability is likely to vary from user to user depending on their underlying personality and practices (e.g. do they have issues remembering or typing their password and/or have they chosen one that makes it easier for them to do so). Having a password that is autopopulated via a password manager immediately takes away both of these concerns because the user does not have to remember or type it, and so this represents a scenario that is naturally more usable. However, the protection is still dependent on just the password, and so moving to a stronger approach is desirable from a security perspective. If MFA were to be used at each login, then regardless of how effectively and efficiently the process worked, it would still be perceived as an additional task compared with traditional login, and so would likely impact the satisfaction. However, applying MFA in a periodic manner minimizes this concern and makes it more likely that effectiveness and efficiency are viewed positively as well.

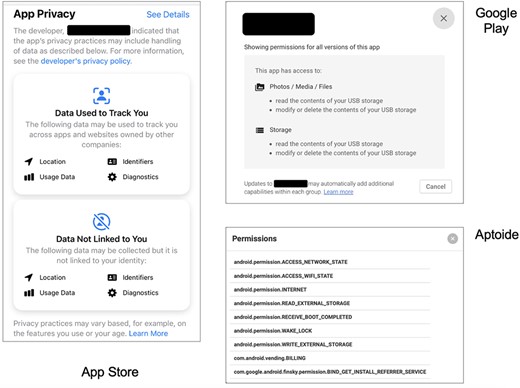

Figure 2. Varying presentation of privacy and permission details in different app stores.

An area that has seen significant uptake in recent years is the use of biometrics—the ability to automatically identify or authenticate users based on physiological or behavioural characteristics. Biometrics can be used in a range of guises, but the most commonly encountered techniques in consumer-level devices are based on face, fingerprint and voice recognition. These are now part of the day-to-day user experience for millions of users, thanks in large part to their integration within mobile devices such as smartphones and tablets, and within the space of just a few years, biometrics went from being a technology that people heard about to something that was routinely experienced in mainstream use.

Much of the drive toward biometrics is due to the desire to make security usable rather than to improve the level of security (noting that to date, biometrics have not replaced passcodes, i.e. PINs or passwords, on the target devices, but have instead provided a usability layer on top of them). This is illustrated by the following quote from Apple’s marketing back in 2013 when its Touch ID fingerprint recognition was first introduced on the iPhone 5s: ‘You check your iPhone dozens and dozens of times a day, probably more. Entering a passcode each time just slows you down’ (Apple, 2013).

The benefit of biometrics if approached correctly is that the authentication can be provided in a way that is non-intrusive and largely transparent from the user perspective. For example, using face recognition to access a smartphone is an almost seamless experience—the user holds the phone up to look at it, and in the same instant the device captures and verifies them from the facial data captured by the camera and other sensors.

The side benefit of the convenience can be a further increase in the level of security. For example, with smartphones, it leads to a situation where users have device protection permanently enabled by default, whereas they would not routinely have done so in the era when it required them to enter a passcode each time. In the past, many users did not use a passcode or configured the device to only ask for it after a period of inactivity (thereby leaving a window of vulnerability during which the device was not protected against unauthorized use if it were to be lost or left unattended). Now, the (lack of) effort required to use the biometric means that users are comfortable to have it enabled all the time, and so the device is consistently protected to the same level.

4. THE BROADENING REQUIREMENT FOR USABLE SECURITY

Things have changed considerably since the seminal critique from Whitten and Tygar. Users are now facing security considerations and decisions in a range of new guises thanks to the varied range of devices and online services they now use. To illustrate the point, this section looks at three examples that may confront today’s users in both personal and workplace contexts: namely, understanding the permissions and privacy implications of apps, dealing with cookies and managing the protection of their smart devices. Each presents them with a range of new challenges and choices that further extend the contexts in which they encounter security and privacy issues and do so in a way that may not directly relate to their earlier experiences.

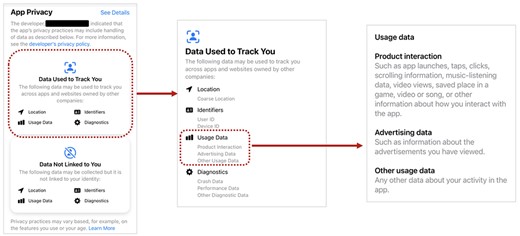

Figure 3. Exploring the levels of information available about app data usage.

4.1. App permissions and privacy

One relatively new scenario now facing millions of users is that apps downloaded to their smartphones and tablets will often be seeking (or indeed requiring) permissions to access aspects of the device and/or the data held on it. In some cases, this will be for perfectly natural and obvious reasons. For example, an app involving image capture would naturally be expected to need access to the camera to take a picture and to the user’s photo library to then store it. In other cases, however, we may find apps requesting permissions that the user would not normally expect or understand the need for. For example, imagine that the same image capture app also wants to collect the user’s location and usage data. Both could be legitimate from the developer’s perspective: they want the app to be able to store metadata for where a photo was taken, and they want to be able to track the extent to which users make use of their app. The former gives a potential value-add for the user, whereas the latter is clearly of value to the developer. However, the user may not necessarily be comfortable with either, and so may wish to know about the level of access that an app is expecting before installing it (and ideally be able to control it after the event).

The tendency of apps to overclaim permissions has led to concern about potential impacts on user privacy (Tang et al., 2017), and although app stores may make attempts to list the permissions being sought, there can be considerable variation in the ways they do so. As an illustration of this, consider the three examples in Figure 2, all of which show the permissions requested by the same app, but as conveyed in different app store contexts (namely Apple’s App Store, Google Play and the third-party Android store Aptoide). We can see that from the user perspective, we are getting three very different experiences here. In the case of the App Store, the user is getting a data-centric view, with the information panel telling them about the types of data that the app (and its developer) will collect that can be used to track the user and that which is collected but not linked to them. In the Google Play case, the information is framed around the permissions being granted to the app to access different aspects of the user’s device (in this case, indicating it can access their media and their storage). Notably, although both stores have sought to categorize the rights available to the app, they have done so in different ways: what it can collect versus what it can do. In practice, of course, both perspectives may be of interest and could leave users familiar with one approach feeling that they are not getting the other side of the story. In complete contrast to either approach, the Aptoide store simply presents the user with the set of Android permissions that the app will be granted, meaning that only those users with a sufficiently technical background to understand or interpret the meaning of the permissions will have a clear idea of what the app may be getting access to.

Notably, although all the app stores are giving an indication of what permissions are going to be granted, none of them are immediately conveying details of why, nor indicating how the data will be used, and similarly none of them offer the user any sense of control over what can be granted or shared (other than to choose not to install the app). Although it might not be feasible for the user to be offered control (i.e. the app needs/wants this level of access to function or as part of the developers’ willingness to offer it), the user may still feel that they are somehow being limited or shortchanged by not being given any options.

Looking beyond this surface level, there is also the question of whether the user is really being given sufficient information to understand what is happening. To illustrate this point, we can further examine the example from the App Store, which is ostensibly providing the clearest breakdown of the three illustrated (and was widely heralded at the time Apple introduced it as being a step forward in ensuring the transparency of data collection by app developers (Weir, 2021)). Figure 3 depicts the information available to the interested user if they attempt to go beyond the surface level from the initial interface. In this particular case, we drill down into the ‘Data Used to Track You’ category and find that we get an expansion of each of the four data types that were initially just named with accompanying icons. We now see a series of further items being listed under each heading, which reflect what this specific app is collecting within each category. However, even this only takes us so far. For example, when looking at the nature of the Usage Data that are collected, we are now told that this involves ‘Product Interactions’ and ‘Other Usage Data’, both of which are as likely to raise questions as answer them. However, attempting to go deeper to discover what data these actually are ultimately leads only to Apple’s generic descriptors of the categories (Apple, 2022) (thereby giving the user some more insight into what the collected data might be, but no definitive answers as to what is actually being collected). As such, for the user who was interested or concerned enough to look this far, it is easy to envisage that they will not have left with a strong level of satisfaction as a result. There is also potential for confusion and misunderstanding about what the category descriptions really mean and what is going to get collected as a result. Indeed, research has established that not only do users still misunderstand or misinterpret the meaning of the labels, but the developers responsible for assigning them may also make mistakes in doing so (Cranor, 2022). This in turn highlights that although the whole notion of privacy labelling has been introduced to be more informative to users, the usability of the mechanism itself can still impede the effectiveness in practice.

4.2. Handling cookies

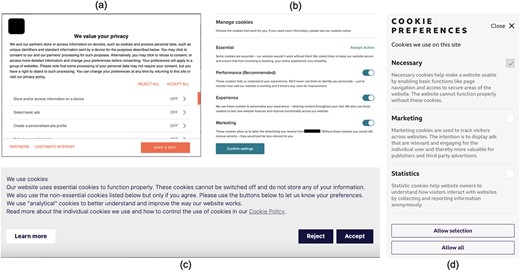

An example that has become very prominent thanks to the introduction of the European General Data Protection Regulation is the use of pop-ups to confirm user preferences in relation to cookies and other tracking technologies. Here, we find examples that often seem to be intentionally framed to make it more difficult for users to make the choice that they are likely to want to make, and at the same time make it more likely for them to make the wrong choice in error. A selection of such dialogues is presented in Figure 4, although it should be noted that numerous other variations can be encountered in practice, thus creating an immediate issue in terms of the consistency of the user experience. If we look at the choices on offer, then from the outset, it is likely that many users will not understand what they are being asked. In the interests of clarity, we can briefly outline what the different types of cookies are (noting that even at this level, different labels are used for the same thing) (Kock, 2019):

Necessary (or Essential) cookies: do not require the user’s consent because they are essential to the features and functionality of the site.

Preferences (or Experience or Functionality) cookies: enable websites to ‘remember’ the user’s previous choices (e.g. region or language preferences) and thereby to personalize their experience in future visits.

Statistics (or Performance) cookies: collect anonymized information about website use (e.g. page visits, link clicks) that can be used to improve the website’s functionality.

Marketing cookies: track users’ online activity, helping advertisers to deliver more relevant advertising or to limit how many times they see a given advert.

Figure 4. Illustrative variations of ‘manage cookies’ interfaces that users may encounter across different sites.

Although the ‘strictly necessary’ cookies are typically listed alongside the other categories, they do not offer the user an option to change the setting and will either be shown with an indicator that they are ‘Always Active’ or (perhaps less usefully) a toggle button or checkbox that is ghosted/greyed-out to show that users cannot change it (as shown in Figure 4d). Although their consent is not required, it is still relevant for users to be told what these cookies are and why they are essential to the site.

Even from the small selection of examples in the Figure, we can make a number of observations that are notable from the usability perspective:

Examples (a) and (c) have options to enable the user to Reject all optional cookies with one click, whereas the others do not.

Examples (b) and (d) provide brief explanations for each of their settings, whereas example (a) does not.

Examples (a) and (d) set all of the optional settings to ‘off’ by default, whereas example (b) defaults all of the settings to ‘on’, and has the ‘Confirm settings’ button highlighted, increasing the chance that the user will accept everything (potentially by mistake).

Example (c) conceals the detail of the individual cookie settings unless the user opts to ‘Learn more’. As such, if they were to select ‘Accept’ from the initial dialogue, they do not have a clear indication of what settings they are accepting.

Example (d) shows the ‘Necessary’ cookies as a pre-selected checkbox that the user cannot actually control.

Example (d) has an ‘Allow all’ option that would select everything, but not a corresponding ‘Reject all’ option.
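The observations above can be restated as a small checklist applied to each dialogue. The sketch below is illustrative only: the `CookieDialog` structure and the wording of the flagged concerns are assumptions introduced here, and any attribute that the observations do not state for a given example is recorded as `None` rather than guessed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CookieDialog:
    name: str
    has_reject_all: bool
    explains_settings: Optional[bool]     # None = not stated in the observations
    optional_default_off: Optional[bool]  # None = not stated in the observations
    details_hidden: bool = False          # settings concealed behind 'Learn more'


def usability_concerns(d: CookieDialog) -> List[str]:
    """List the concerns that the recorded attributes raise for one dialogue."""
    concerns = []
    if not d.has_reject_all:
        concerns.append("no one-click 'Reject all'")
    if d.explains_settings is False:
        concerns.append("settings not explained")
    if d.optional_default_off is False:
        concerns.append("optional cookies default to on")
    if d.details_hidden:
        concerns.append("individual settings hidden behind 'Learn more'")
    return concerns


# Attributes as described for examples (a) to (d) in Figure 4.
DIALOGS = [
    CookieDialog("a", True, False, True),
    CookieDialog("b", False, True, False),
    CookieDialog("c", True, None, None, details_hidden=True),
    CookieDialog("d", False, True, True),
]

for dialog in DIALOGS:
    print(dialog.name, usability_concerns(dialog))
```

Even on this coarse checklist, none of the four examples comes out clean, and each falls short in a different way, which is the inconsistency that users are left to navigate.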

The level of variation has been highlighted in other works, with (for example) Hennig et al. highlighting that many cookie disclaimers are designed in ways that are not aligned with the ideas of the GDPR, with the lack of a privacy-friendly approach serving to complicate the task for users when trying to understand and make choices (Hennig et al., 2022).

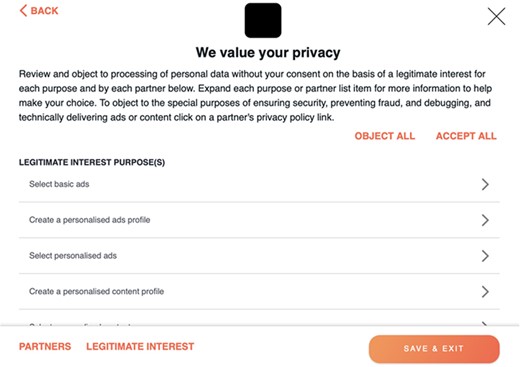

Adding further to the potential challenge and complexity for the user, it can be noted that the example in Figure 4(a) also includes a separate ‘Legitimate Interest’ link. Clicking on this reveals a completely different range of settings (Figure 5), which are based on sharing various types of data with partners (of the website) who have claimed to have what GDPR terms a legitimate interest in collecting the data concerned (Wessel-Tolvig, 2020). The implication here is that the data can be collected without requiring consent, and so these settings are essentially distinct from the cookies that users have already permitted or denied. In this example, there are nine ‘legitimate interest purposes’ in total (four are visible in the Figure) and well over 100 partners to whom they may apply. Although there is an ‘Object all’ option, it operates distinctly from the ‘Reject all’ link on the main interface. So, users are faced with a situation where they may believe that they have prevented data collection and tracking by selecting ‘Reject all’, whereas there was a further setting that they needed to select, the existence of which may not have been obvious to them unless they were already aware of the ‘legitimate interest’ issue. Moreover, although many sites operate like examples (a) and (d) and have their non-essential consent options preset to ‘off’ by default, the legitimate interest settings are almost invariably set to ‘on’, and so require the user to be aware that they need to object/reject/decline to prevent the related activities (and in many cases, they do not provide an ‘Object all’ option, thereby requiring users to manually and laboriously disable each setting individually, which one can readily interpret as using a lack of usability as a means to get the user to give up and concede whatever permissions are requested).
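The separation between the two sets of settings can be made concrete with a minimal model of the state behind such a dialogue. This is a sketch under stated assumptions: the class `ConsentState`, its method names and the three purpose names are hypothetical, chosen only to mirror the behaviour described above (consent toggles preset to off, legitimate interest toggles preset to on, and ‘Reject all’ acting on the former only).

```python
from dataclasses import dataclass, field
from typing import Dict

OPTIONAL_CATEGORIES = ("preferences", "statistics", "marketing")
# Hypothetical purpose names, used only for illustration.
LI_PURPOSES = ("measure_ad_performance", "develop_products", "market_research")


@dataclass
class ConsentState:
    # Consent-based toggles, preset to off as in examples (a) and (d).
    consent: Dict[str, bool] = field(
        default_factory=lambda: {c: False for c in OPTIONAL_CATEGORIES})
    # Legitimate interest toggles, preset to on as is typically the case.
    legitimate_interest: Dict[str, bool] = field(
        default_factory=lambda: {p: True for p in LI_PURPOSES})

    def reject_all(self) -> None:
        """'Reject all' on the main interface: affects consent toggles only."""
        for key in self.consent:
            self.consent[key] = False

    def object_all(self) -> None:
        """'Object all' on the separate legitimate interest screen."""
        for key in self.legitimate_interest:
            self.legitimate_interest[key] = False

    def anything_enabled(self) -> bool:
        return any(self.consent.values()) or any(self.legitimate_interest.values())


state = ConsentState()
state.reject_all()
print(state.anything_enabled())  # data sharing is still enabled at this point
state.object_all()
print(state.anything_enabled())
```

In this model, a user who stops after `reject_all()` has not achieved the outcome they are likely to believe they have, because the second set of toggles was never touched.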

4.3. Smart devices

Recent years have seen considerable growth in the devices forming part of the Internet-of-Things (IoT), often more colloquially known as smart devices. Users can consequently find themselves surrounded by a plethora of such devices in both home and workplace contexts. Although the ‘smart’ aspects of all the devices are clearly intended to enhance the user’s experience in some way, they are also adding to the technology estate that the user must understand and (in the case of personal devices) manage from a security and privacy perspective. However, part of the challenge here is that it introduces the issues in unfamiliar guises, and on devices that users have not previously been used to thinking about from these perspectives. As such, there is an immediate usability issue in the sense that the users need to orient themselves to thinking about these devices as having security concerns that should be addressed, and then determining how to do so. Even then, however, there will not be a clear baseline of what to expect in terms of security and how to control it.

As an illustration, we can examine a casual sample of three smart TVs, all released in the space of the last 5 years or so. They are from different manufacturers (LG, Samsung and Sony), but otherwise ostensibly similar as smart devices. All are connected to the Internet; all permit the user to download and install apps, browse the web, store media (music/photos/videos) and connect external devices; and all share a primary characteristic of being televisions operated via remote control handsets. However, despite their shared functionality, they turn out to be quite different in terms of the security and privacy features that users would encounter if they chose to explore the settings. All have options for Software Update to keep apps and system software up-to-date, but beyond this, things are rather varied:

Smart TV 1: seems to have nothing related to security; the closest it comes is a ‘Safety’ menu that permits setting a PIN to control access to applications, inputs (sources) and programmes (channels).

Smart TV 2: has a set of ‘Smart Security’ options somewhat buried away within an ‘Expert Settings’ section of the overall ‘System’ settings menu. These security settings are described as being to ‘protect your TV from hackers, spyware and viruses’, and include the options to scan the device for malware and to enable/disable Real-Time Monitoring to perform this scanning in real time. Elsewhere, there is also a PIN-based lock, which can again prevent changes to channels/tuning, as well as restrict access to the apps (but the latter is done rather differently and not accessed via the main device settings).

Smart TV 3: supports the notion of user accounts and sign-in (with sub-options for payment authentication and permitting the use of the voice assistant), and various options including Parental controls (which links to the PIN-based restrictions for channels, inputs and apps), an Apps menu (including sub-options for ‘App permissions’ and Security ‘restrictions’) and a distinct Privacy menu with sections for devices, account and app-related settings (which includes consolidating some of the options also accessible via other menu routes).

So, with just three examples, it is clear that the user experience of the same ‘type’ of smart device can be quite varied. Of course, this is arguably equally the case between computers, smartphones and tablets, but all of those are devices in which the user is more likely to expect there to be security and privacy issues to consider and related settings to look at.

Aside from the varying levels of usability (e.g. including the ease with which a user can get a sense of whether and where any security-relevant options are to be found), the difference in the functionality itself is interesting. For example, the presence of malware protection on Smart TV 2 raises questions about why it is not apparent on either of the others (both of which are still connected to the Internet and using ostensibly the same apps). Meanwhile, Smart TV 3 offers control over app permissions, determining which apps may gain access to what aspects of the device (including microphone, storage and system information). Neither of the other TVs offers this, yet both run many of the same apps over which this TV allows the permissions to be granted or denied.

Smart TV 2’s malware protection is curious in the sense that it is unclear why the ‘Real-Time Monitoring’ option should be an option at all. The user is told nothing to suggest that enabling the setting is risking a negative impact on anything else, and so what would be the rationale or benefit in leaving it switched off?

It may be that some devices are ‘smarter’ than others and need more security features accordingly, often because of the underlying platform (operating system) on which the device is based. However, many users are not going to be naturally aware that they are buying a device that even has an operating system, let alone appreciating that if they choose OS A, then they may find themselves having to deal with more security features than with OS B.

From the casual user perspective, it is easy to imagine that the following conclusions could be drawn based on the features available to them:

Smart TV 1: Security is not an issue because there are no settings to worry about.

Smart TV 2: There are some settings related to malware protection, so there is presumably a risk from this. However, there is an option to keep monitoring for it in real time, and so if that is on, we can assume we are as protected as possible.

Smart TV 3: Dealing with security and privacy seems to be something of a challenge because there is quite a confusing array of settings, and it takes a while to navigate around to (a) find them all and (b) get a sense of whether everything is set up as desired.

What we essentially see across smart TVs and the other ‘broader context’ examples is that they all bring security and privacy issues with them, but the default situation in each case still has the significant potential to leave users feeling that things have fallen short of the ISO usability principles. Indeed, we see approaches that users may not feel are effective (e.g. the lack of real insight or control with the privacy labels), efficient (e.g. the varying demands of the cookie settings) or satisfying (e.g. the varying presence or absence of features across different smart devices). As such, there is undoubtedly some way to go before usable security is something that can truly be relied on as a natural standpoint.

5. CONCLUSIONS AND RECOMMENDATIONS

The paper is not highlighting a new issue, and the importance of offering security that is usable has clearly been recognized for several decades. At the same time, from the examples presented here, usability issues still persist in various forms in various security-related contexts. As such, the short conclusion is that the issue remains recognized but unresolved, and more needs to be done.

Unless one can go right back to the root cause and influence the design of security mechanisms, then usability issues are a difficult challenge to address. As such, the first point to highlight is essentially less a recommendation and more of an appeal to the designers and implementors of security (be it in the form of specific tools or features within wider systems and services) to ensure that they give explicit recognition to the usability perspective. We now increasingly talk about security by design, but this tends to refer to the technology level. To be truly effective, it needs to extend all the way through to the user experience.

Looking at existing guidance for security design, the focus is typically very much on the technology perspective. As an example, we can consider related guidance from the UK’s National Cyber Security Centre (NCSC, 2019), which identifies five core principles for cybersecurity design, each supported by a series of sub-points. When talking about the need to ‘Understand the system “end-to-end”’, there is no direct mention that the end points are often the users, and so it potentially overlooks that understanding their perspective and capability is arguably key to keeping the system secure. Elsewhere in the guidance, there is at least a partial nod toward one aspect of usability, with another point highlighting the need to ‘Make it easy for users to do the right thing’ (recognizing the need to prevent them from developing workarounds that may circumvent security features). It ends by suggesting the need to ‘Make the most secure approach the easiest one for users’, but this is unlikely to be achieved if usability principles have not been considered more widely within the design.

The unfortunate reality is that individual users, and indeed most organizations, will not be in a position where they can directly improve the technology itself. This is something that needs to be done at source by the designers/developers concerned. For the users and adopters of the affected technology, this leaves two basic choices—swap out the current solution for something that is more usable or take alternative steps to mitigate the usability issues. Neither route will typically be ideal, insofar as replacing one technology with another may be easier said than done (and in some cases may not be viable at all, especially if the usability issue is not tied to a particular instance of a particular thing—e.g. as would be the case with the cookie controls). Meanwhile, mitigating usability issues that you cannot actually fix will essentially mean supporting users to live with them and make the best of it. For example, if a particular aspect is confusing or unclear, then a means of compensating could be to ensure targeted awareness and training to ensure that people know how to use it more effectively. However, although this may work for usability issues that stem from technologies that are badly presented, it will not do anything to address cases where they are simply inefficient or time-consuming to use.

To consider the question posed in the title, the honest answer is currently ‘neither’. The provision of security is certainly not yet at a stage where usability is implicit or guaranteed. At the same time, referring to usable security is not a fundamental contradiction in terms, and there are increasingly instances in which it is given more appropriate attention. The fact that it is possible to achieve usable approaches is obviously encouraging because it means that users do not have to find themselves in the position where it feels like security is working against them. At the same time, usable security is clearly far from the default provision in all contexts, and it requires awareness and effort to achieve it. The cause for hope is that an ever-growing proportion of the security community is moving to the realization that the latter are necessary and worth pursuing.