G. Ouillon, D. Sornette, E. Ribeiro, Multifractal Omori law for earthquake triggering: new tests on the California, Japan and worldwide catalogues, Geophysical Journal International, Volume 178, Issue 1, July 2009, Pages 215–243, https://doi.org/10.1111/j.1365-246X.2009.04079.x


Summary

The Multifractal Stress-Activated model is a statistical model of triggered seismicity based on mechanical and thermodynamic principles. It predicts that, above a triggering magnitude cut-off M0, the exponent p of the Omori law for the time decay of the rate of aftershocks is a linear increasing function p(M) = a0M+b0 of the main shock magnitude M. We previously reported empirical support for this prediction, using the Southern California Earthquake Center (SCEC) catalogue. Here, we confirm this observation using an updated, longer version of the same catalogue, as well as new methods to estimate p. One of these methods is the newly defined Scaling Function Analysis (SFA), adapted from the wavelet transform. This method is able to measure a mathematical singularity (hence a p-value), erasing the possible regular part of a time-series. The SFA also proves particularly efficient at revealing the coexistence and superposition of several types of relaxation laws (typical Omori sequences and short-lived swarm sequences) which can be mixed within the same catalogue. Another new method consists in monitoring the largest aftershock magnitude observed in successive time intervals, and thus circumvents the problem of missing events with small magnitudes in aftershock catalogues. The same methods are used on data from the worldwide Harvard Centroid Moment Tensor (CMT) catalogue and show results compatible with those of Southern California. For the Japan Meteorological Agency (JMA) catalogue, we still observe a linear dependence of p on M, but with a smaller slope. The SFA shows, however, that results for this catalogue may be biased by numerous swarm sequences, despite our efforts to remove them before the analysis.

1 Introduction

The popular concept of triggered seismicity reflects the growing consensus that earthquakes interact through a variety of fields (elastic strain, ductile and plastic strains, fluid flow, dynamic shaking and so on). This concept was first introduced from mechanical considerations, by looking at the correlations between the spatial stress change induced by a given event (generally referred to as a main shock), and the spatial location of the subsequent seismicity that appears to be temporally correlated with the main event (the so-called aftershocks, King et al. 1994; Stein 2003). In a complementary approach, purely statistical models have been introduced to take account of the fact that the main event is not the sole event that triggers others, but that aftershocks may also trigger their own aftershocks and so on. Those models, of which the Epidemic Type of Aftershock Sequences (ETAS) model (Kagan & Knopoff 1981; Ogata 1988) is a standard representative with good explanatory power (Saichev & Sornette 2006a), unfold the cascading structure of earthquake sequences. This class of models shows that real-looking seismic catalogues can be generated by using a parsimonious set of parameters specifying the Gutenberg–Richter distribution of magnitudes, the Omori–Utsu law for the time distribution of aftershocks and the productivity law of the average number of triggered events as a function of the magnitude of the triggering earthquake.

Very few efforts have been devoted to bridging these two approaches, so that a statistical mechanics of seismicity based on physical principles could be built (see Sornette 1991; Miltenberger et al. 1993; Carlson et al. 1994; Sornette et al. 1994; Ben-Zion & Rice 1995; Ben-Zion 1996; Rice & Ben-Zion 1996; Klein et al. 1997; Dahmen et al. 1998; Ben-Zion et al. 1999; Lyakhovsky et al. 2001; Zoller et al. 2004, for previous attempts). Dieterich (1994) has considered both the spatial complexity of stress increments due to a main event and one possible physical mechanism that may be the cause of the time-delay in the aftershock triggering, namely rate-and-state friction. Dieterich's model predicts that, neglecting interactions between triggered events, aftershock sequences decay with time as $t^{-p}$ with $p \simeq 1$ independently of the main shock magnitude, a value which is often observed but only for sequences with a sufficiently large number of aftershocks triggered by large earthquakes, typically for main events of magnitude 6 or larger. Dieterich's model is able to take account of a complex stress history, but it has never been grafted onto a self-consistent epidemic model of seismicity, taking account of mechanical interactions between all events.

Recently, two of us (Ouillon & Sornette 2005; Sornette & Ouillon 2005) have proposed a simple physical model of self-consistent earthquake triggering, the Multifractal Stress-Activated (MSA) model, which takes into account the whole space-time history of stress fluctuations due to past earthquakes to predict future rates of seismic activity. This model assumes that rupture at any scale is a thermally activated process in which stress modifies the energy barriers. This formulation is compatible with all known models of earthquake nucleation (see Ouillon & Sornette 2005, for a review), and indeed contains the rate-and-state friction mechanism as a particular case. The ultimate prediction of this model is that any main event of magnitude M should be followed by a sequence of aftershocks whose rate decays with time as an Omori-Utsu law with an exponent p varying linearly with M.

Ouillon & Sornette (2005) have tested the linear dependence of p on M on the SCEC catalogue over the period from 1932 to 2003. Using a superposed epoch procedure (see Section 3.1) to stack aftershocks series triggered by events within a given magnitude range, they found that the p-value increases with the magnitude M of the main event according to p(M) = a0M+b0 = a0(M−M0), where a0 = 0.10, b0 = 0.37, M0 = −3.7. Performing the same analysis on synthetic catalogues generated by the ETAS model, for which p is by construction independent of M, did not show an increasing p(M), suggesting that the results obtained on the SCEC catalogue reveal a genuine multifractality which is not biased by the method of analysis.
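As a quick numerical check of the linear law quoted above, the following sketch evaluates p(M) = a0M+b0 with a0 = 0.10 and b0 = 0.37 and recovers the cut-off M0 = −b0/a0. The function names are illustrative choices, not part of the original analysis.

```python
def omori_exponent(magnitude, a0=0.10, b0=0.37):
    """Linear law p(M) = a0*M + b0, using the values quoted in the text."""
    return a0 * magnitude + b0


def cutoff_magnitude(a0=0.10, b0=0.37):
    """Magnitude M0 at which p vanishes, i.e. a0*M0 + b0 = 0."""
    return -b0 / a0


# Example main shock magnitudes (illustrative values only).
for m in (2.0, 4.0, 6.0, 7.5):
    print(f"M = {m:.1f} -> p = {omori_exponent(m):.2f}")
print(f"M0 = {cutoff_magnitude():.1f}")
```

With these values p stays below 1 for main shocks smaller than about magnitude 6.3, and the cut-off M0 ≈ −3.7 lies far below any recorded magnitude.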

Here, we reassess the parameters a0 and b0 for Southern California, using an updated and more recent version of the catalogue, and extend the analysis to other areas in the world (the worldwide Harvard CMT catalogue and the Japanese JMA catalogue), to test the theory again and to check whether the parameters a0 and b0 are universal or, on the contrary, vary systematically from one catalogue to the other, perhaps revealing meaningful physical differences between the seismicity of different regions. We use three different methodologies to estimate p-values. The first one is a generalization of the construction of binned approximations of stacked time-series that we used in Ouillon & Sornette (2005). Here, we also introduce a new method specifically designed to take account of the possible contamination of the singular signature of the Omori-Utsu law by a regular and non-stationary background rate contribution that may arise from several different origins described in Section 3.3. This new method is coined the Scaling Function Analysis (SFA), and borrows the key ideas of the wavelet transform of multifractal signals. We also introduce another new method to circumvent the problem of missing events in aftershock sequences. Its main idea is to monitor the magnitude of the largest events as a function of time in a triggered sequence. We show that this allows us to determine the p-value, provided we know the b-value of the Gutenberg-Richter law (see Section 3.4).

Before focusing on the new statistical techniques for analysing aftershock sequences and presenting the results, we first recall the main concepts defining the MSA model. All analytical calculations have already been presented and detailed in Ouillon & Sornette (2005) and won't be repeated here. Only the relevant results will be used to compare with the empirical analysis.

In the last section of this paper, our results and theory will be discussed and compared with recent models and ideas mostly published since Ouillon & Sornette (2005). We will show in particular that most of them display data that are compatible with our observations (Peng et al. 2007), or present models that are special cases of ours (Helmstetter & Shaw 2006; Marsan 2006). Discrepancies with other works will be shown to be due either to declustering techniques incompatible with what we know about earthquake nucleation processes (Zhuang et al. 2004; Marsan & Lengliné 2008), or to physical models of a fundamentally different nature (Ziv & Rubin 2003).

2 The Multifractal Stress-Activation Model

2.1 Motivation and background

Mechanical models that try to predict the aftershock sequence characteristics from the properties of the main shock rupture are generally based on the spatial correlations between the Coulomb stress transferred from the main event to the surrounding space and the location of aftershocks. As the rupture characteristics of most of the aftershocks are unknown, and as many such events are even likely to be missing from the catalogues, the stress fluctuations due to aftershocks are neglected. While this approach aims at predicting the space-time organization of hundreds to thousands of aftershocks, it remains fundamentally a ‘one-body’ approach. Computing the stress transfer pattern resulting from the sole main shock and postulating that earthquake nucleation is activated by static stress (through mechanisms like rate-and-state friction or stress corrosion), one can predict the time dependence of the aftershock nucleation rate, which behaves as an Omori-Utsu law with exponent p ≃ 1, independent of the magnitude M of the main event (Dieterich 1994).

Early works on stress transfer (see for example, King et al. 1994) have shown that some large recent earthquakes, like the Landers event, have certainly been triggered by a sequence of previous large events. Actually, there is a priori no support for the claim that the Landers event could have been triggered solely by large, well-defined previous events. Indeed, many other smaller events, for which rupture parameters are unknown, are likely to have played a significant role in the nucleation of that main shock (see also Felzer et al. 2002, for a similar discussion on the 1999 Hector Mine earthquake). This hypothesis is supported by the recent growing recognition that small earthquakes taken as a whole are probably as important as, or even more influential than, large ones in triggering future (small and) large earthquakes (Helmstetter et al. 2005).

We thus clearly need a ‘many-body’ approach to deal with the general problem of earthquake triggering, as any event, including aftershocks, is the result of a previous, complex space-time history of the stress or strain field, most of it being beyond our observation and quantification abilities. The MSA model has been developed to combine the complex stress history with the idea that events are activated by static stress. One specific prediction has been derived from a theoretical analysis of the model, namely the time dependence of the aftershocks rate and the most probable p-value that should be observed.

2.2 Description of the main ingredients of the MSA model

One may argue that the thermal dependency of a process such as rate-and-state friction holds only at the microscopic scale, depicting asperity creep. Nevertheless, the exponential dependence of the seismicity rate on the applied stress is still conserved at larger scales (see Dieterich 1994, for example, yet in that case one has to neglect the time dependency as it doesn't take account of multiple interactions and triggering). Indeed, most theoretical and experimental works on rate-and-state friction generally agree on the conclusion that the rate dependence of friction reflects the role of thermally activated physical processes at points of contact between the sliding surfaces. These processes occur at microscopic scales, but renormalize at the macroscopic scale so that the parameters of the rate-and-state friction law depend on temperature. For example, Chester & Higgs (1992) studied experimentally the temperature dependence of parameters A and A−B as well as of the base friction coefficient μ0 of the rate-and-state friction law for wet or dry ultrafine grained quartz gouge. In the velocity weakening regime (which is the regime for which earthquakes can occur, and that we implicitly assume to hold in our MSA model), they observed that an increase in temperature induces a decrease of A−B, so that the system is more unstable. This effect is enhanced in the wet case, while the value of A doesn't depend significantly on temperature. On the other hand, they also observe that, still in the velocity weakening regime, the base frictional strength increases with temperature for wet and dry tests, which agrees well with other experiments published by Blanpied et al. (1991) on granite gouge at hydrothermal conditions. 
Chester & Higgs (1992) and Chester (1994) propose an empirical modification of the rate-and-state friction law which takes account explicitly of the temperature and which predicts that changes in temperature and slip rate have opposite effects on the steady-state friction coefficient. At temperatures corresponding to seismogenic depths (i.e. from ≃ 300 to ≃ 600 K) the increase of the base friction is less than 5 per cent (Chester & Higgs 1992) while the change of A−B is much larger. All in all, we thus expect that the time to unstable slip of a given fault will be reduced when temperature increases, thus supporting our model in terms of a thermally activated seismicity rate. For other experiments and models of thermally activated friction processes see Ben-Zion (2003), Heslot et al. (1994) or Baumberger et al. (1999), among others.

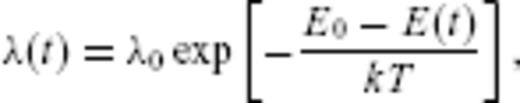

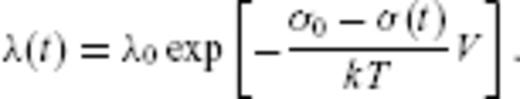

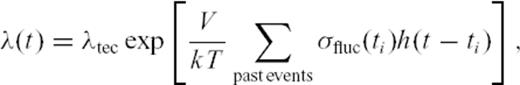

At location $\vec{r}$ and time $t$, the earthquake nucleation rate is therefore given by eq. (3), in which the stress $\sigma(\vec{r},t)$ is assumed to be a scalar quantity, such as the Coulomb stress or any combination of the components of the full stress tensor. This stress obeys eq. (4), which reads

$$\sigma(\vec{r},t) = \sigma_{\text{far field}}(\vec{r}) + \int_{-\infty}^{t}\!\int \mathrm{d}N[\mathrm{d}\vec{r}\,' \times \mathrm{d}\tau]\;\Delta\sigma(\vec{r}\,',\tau)\;g(\vec{r}-\vec{r}\,',t-\tau). \qquad (4)$$

The first term depends on $\vec{r}$ ($\vec{r}$ is the spatial location at which the earthquake rate is observed) but is constant with time. The second term is a double integral over the whole space and over all past times up to the present observation time $t$. The first term in this integral, $\mathrm{d}N[\mathrm{d}\vec{r}\,' \times \mathrm{d}\tau]$, is the number of events that occurred in an elementary space-time hypervolume $\mathrm{d}\vec{r}\,' \times \mathrm{d}\tau$. The next term, $\Delta\sigma(\vec{r}\,',\tau)$, is the stress fluctuation that occurred at the source of a past event located at spatial coordinate $\vec{r}\,'$ and time $\tau$. The last term, $g(\vec{r}-\vec{r}\,',t-\tau)$, is the Green's function (possibly non-isotropic) that mediates this stress fluctuation from the source to the observation location and time.

Starting from the basic eq. (3) of thermal activation driven by stress and the eq. (4) for the stress field evolution, we now state several hypotheses that are needed in order to describe the statistics of earthquake triggering taking account of all past events whatever their time of occurrence, location and size.

Hypothesis 1: Every shock is activated according to the rate determined by the Arrhenius law, a function of both the (possibly effective) temperature and the stress.

Hypothesis 2: Every shock of magnitude M instantaneously triggers of the order of $10^{qM}$ events, which is equivalent to the productivity law in the ETAS model, itself grounded on empirical observations (Helmstetter 2003). This hypothesis is discussed at the end of this paper.

Hypothesis 3: The stress fluctuations due to the occurrence of seismic events depend on their spatial location, their rupture geometry and the spatial dependence of the Green's function. As most of these parameters are at best poorly known, and given the fact that a significant number of events are not even recorded at all (Sornette & Werner 2005), we follow Kagan (2004) and consider that the stress fluctuations are realizations of a random variable σfluc that may be positive or negative. We assume that the probability density function of this random variable is heavy tailed, with a power-law tail characterized by an exponent μ.

2.3 Main prediction of the MSA model

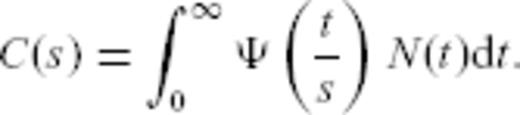

As the nucleation rate λ(t) obviously depends on the past seismicity rate (which controls the occurrence time ti of past events), eq. (8) must be solved self-consistently. The mathematical framework necessary to solve this equation has already been presented in Ouillon & Sornette (2005), so we won't detail it here again. The final result is that, if the condition μ(1 +θ) = 1 is fulfilled, then

- (i) A magnitude M event will be followed by a sequence of aftershocks which takes the form of an Omori-Utsu law with exponent p.

- (ii) This exponent p depends linearly on the magnitude M of the main event.

- (iii) There exists a lower magnitude cut-off M0 for main shocks below which they do not trigger (considering that triggering implies a positive value of p).

Our estimates below suggest that, for the Earth crust, μ(1 +θ) should lie somewhere within [0.73;1.5], thus bracketing the criterion for multifractality to hold. Ouillon & Sornette (2005) have shown that multifractality (and the main p(M) prediction discussed in this paper) also holds for a rather large set of values of μ and θ such that μ(1 +θ) = 1 is only approximately fulfilled. Saichev & Sornette (2006b) have identified that this stems from a generic approximate multifractality for processes defined with rates proportional to an exponential of a long-memory process [i.e. with power-law memory kernel such as our h(t) given by (7) for t < τM].

This model thus predicts that the exponent of the power-law relaxation rate of aftershocks increases with the size of the energy fluctuation, which is indeed the hallmark of multifractality (see for instance, Sornette 2006, for a definition and exposition of the properties associated with multifractality). This model has thus been coined the ‘MSA’ model (as used in the remainder of this paper).

In contrast with the phenomenological statistical models of earthquake aftershocks such as the ETAS model, the MSA model is based on firm mechanical and thermodynamic principles. A fundamental idea is that it integrates the physics of non-linear nucleation processes such as rate and state friction or slow crack growth. It is not possible to relate the ETAS model (Kagan & Knopoff 1981; Ogata 1988) to physically based earthquake nucleation processes. The above exposition shows that the non-linearity of the nucleation process translates necessarily into a non-linearity of the activation rates: the cumulative nucleation rate due to successive triggering events is not additive as in the ETAS model, but multiplicative. Stresses indeed add up, but not seismicity rates (which depend exponentially on the cumulative stress). Only the MSA model is compatible with the rate-and-state friction or slow crack growth process. The MSA model also takes account of negative stress fluctuations, which tend to decrease the seismicity rate, whereas, in the ETAS model, the effect of every event is to promote seismicity everywhere in space.
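The contrast between additive and multiplicative rates can be illustrated with a minimal sketch. It assumes a rate proportional to the exponential of the cumulative stress, with a hypothetical inverse stress scale `beta` and arbitrary stress steps; none of these numerical values come from the paper.

```python
import math


def msa_like_rate(base_rate, stress_steps, beta=1.0):
    """Rate proportional to exp(beta * cumulative stress).

    Stresses add up, so the contributions of successive events multiply.
    `beta` is a hypothetical inverse stress scale (assumption).
    """
    return base_rate * math.exp(beta * sum(stress_steps))


def additive_rate(base_rate, triggered_rates):
    """ETAS-like rate: background plus a sum of non-negative triggered rates."""
    return base_rate + sum(triggered_rates)


# Two positive stress steps: the combined effect is the product of the factors.
both = msa_like_rate(1.0, [0.5, 0.7])
product = msa_like_rate(1.0, [0.5]) * msa_like_rate(1.0, [0.7])

# A negative stress step lowers the rate below its base value,
# which a sum of non-negative triggered rates cannot do.
shadowed = msa_like_rate(1.0, [-0.5])
never_below_base = additive_rate(1.0, [0.2, 0.3])
```

In this toy setting `both` equals `product`, while `shadowed` falls below the base rate of 1, a behaviour that the additive form cannot reproduce.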

2.4 Possible significance of the condition μ(1 +θ) ≈ 1 ensuring the multifractality of earthquake sequences

2.4.1 Conjecture on an endogenous self-organization

Using the previous analysis of Ouillon & Sornette (2005) and anticipating the results shown below, we here discuss the possible physical interpretation of the condition μ(1 +θ) ≈ 1 found from the analysis of the MSA model for multifractality in the Omori law to hold. This condition suggests a deep relation between, on one hand, the exponent μ embodying all the spatial complexity and self-organization of the fault pattern and, on the other hand, the exponent θ encoding the temporal self-organization via earthquakes of the fault pattern. Such relations between power-law exponents associated with space and time organization are usually found only in critical phenomena. A natural interpretation rests on the deep association between faults and earthquakes, the former supporting the latter, and the latter growing and organizing the former. Indeed, one of the main results of modern seismology is that earthquakes occur on pre-existing faults. The fault network geometry thus controls the spatial location of earthquakes. In return, earthquakes are the key actors for the maturation and growth of fault networks. Such feedback loops lead to self-organization. This chicken-and-egg feedback has been proposed to lead to a critical self-organization (Sornette et al. 1990; Sornette 1991). The relationship between the exponents μ and θ encoding the space and time complexity of interactions between earthquakes is reminiscent of a similar relationship proposed by Sornette & Virieux (1992) between short- and long-term deformation processes. By solving a non-linear strain diffusion equation featuring a power-law distributed noise term encoding the Gutenberg-Richter distribution of earthquake sizes, Sornette and Virieux found, via a self-consistent argument that strain is scale invariant, that the Gutenberg-Richter b-value is determined endogenously for the largest events to the value 3/2. This value has received some confirming evidence (Pacheco et al. 1996) but it is fair to say that the determination of the shape of the distribution of earthquake sizes for very large and extreme events is still much debated (see for instance, Pisarenko & Sornette 2004, and references therein). A theory that would fix the exponents μ and θ in a similar way is still lacking. The MSA model takes these two exponents as exogenous, while a more complete theory encompassing the self-organization of earthquakes and faults at all timescales should determine them endogenously.

Let us now briefly discuss what is presently known of their possible values, both from theoretical considerations and empirical studies.

2.4.2 What is known on the exponent μ

A theoretical approach showed that the stress distribution due to a uniform distribution of equal-sized defects in an elastic medium obeys the Cauchy law (Zolotarev & Strunin 1971; Zolotarev 1986), which corresponds to μ = 1. Restricting potential events to occur on a fractal set of dimension δ embedded in a 3-D space, Kagan (1994) predicts that μ = δ/3, so that the Cauchy law should be associated with the upper bound of possible μ-values. Kagan (1994) also provides a measure of μ using static elastic stress transfer calculations from moment tensor solutions of the worldwide Harvard CMT catalogue, which allow him to estimate stress increments due to past events at the loci of future events, as well as their statistical distribution. Using pointwise double-couple approximations of event sources, he observes that the large stress tail of the distribution (which mainly characterizes stresses transferred by nearby events) behaves as a power law with μ = 1, hence as a Cauchy law. As this value is the one that corresponds to a uniformly random distribution of sources, he proposes that this may be due to earthquake location uncertainties. Those uncertainties make earthquake clusters look spatially random at short spatial ranges, so that their fractal dimension δ is close to 3, and thus μ = 1. Considering the distribution of smaller stress increments, he obtains μ = 0.74. Such a value corresponds theoretically to δ = 2.2, in excellent agreement with the spatial correlation dimension of earthquake hypocentres (Kagan 1994). Marsan (2005) tried to estimate μ directly from a natural catalogue of events (Southern California). He computed the distribution of stress fluctuations taking account only of target sites that were the loci of future pending events. The stress value is computed by taking account of the focal mechanisms of the events. This approach yields a μ-value close to 0.5.
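The correspondence between μ and δ quoted above follows directly from the relation μ = δ/3; the short worked check below only restates the arithmetic for the two cases mentioned in the text.

$$\mu = \frac{\delta}{3}: \qquad \delta = 3 \;\Rightarrow\; \mu = 1 \ \text{(Cauchy law)}, \qquad \mu = 0.74 \;\Rightarrow\; \delta = 3 \times 0.74 \approx 2.2 .$$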

While it seems clear that the distribution of source stresses is heavy tailed (power law) with a small exponent of the order of 1, all these works suffer from one or several problems.

- (i) These works neglect the fact that a huge majority of events occur without being recorded at all (the smallest magnitude events) and are thus excluded from the statistics.

- (ii) Some potential nucleation sites experienced stress fluctuations from past recorded events but didn't break during the instrumental period and are thus excluded from the statistics too.

- (iii) Marsan (2005) did not consider triggered events that occurred too close to the triggering event, so that he could use a pointwise source approximation. Using such simplified source geometries certainly influences the resulting value of μ, as the symmetry properties of the stress field are significantly altered in the vicinity of the fault.

- (iv) Moreover, the rupture pattern on the fault plane is generally quite complex, so that stress fluctuations occur on the rupture plane itself (and are thought to be the reason of the occurrence of the majority of aftershocks there). Those stress fluctuations are mediated in the surrounding medium by the Green's function, and are thus not taken into account by the studies cited above. It is thus certainly very difficult to assess a precise value of μ from a natural data set.

2.4.3 What is known on the exponent θ

Several different mechanisms of stress relaxation may contribute to the determination of the exponent θ.

A first mechanism involves linear viscous relaxation processes. If stress relaxation in the crust is assumed to be dominated by linear viscous processes, then stress relaxes with time as $t^{-3/2}$ in a 3-D medium (providing an appropriate description for stress fluctuations due to small earthquakes), and as $t^{-1}$ in a 2-D medium (which may apply to large events that break through the entire brittle crust). If such a transition exists, then θ should vary from 1/2 to 0 for magnitudes, respectively, smaller and larger than about 6–6.5.
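The two values of θ can be read off by assuming that the temporal relaxation kernel decays as $t^{-(1+\theta)}$. This form is an assumption inferred from the values quoted above, not a formula stated in this section. Under that reading,

$$1+\theta = \tfrac{3}{2} \;\Rightarrow\; \theta = \tfrac{1}{2} \ \text{(3-D)}, \qquad 1+\theta = 1 \;\Rightarrow\; \theta = 0 \ \text{(2-D)} .$$

If the condition μ(1 +θ) = 1 held exactly, these two regimes would correspond to μ = 2/3 and μ = 1, respectively.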

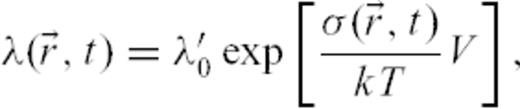

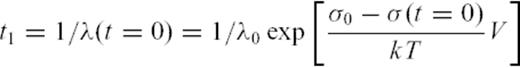

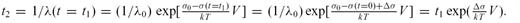

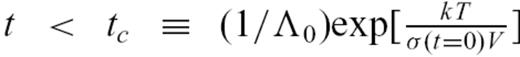

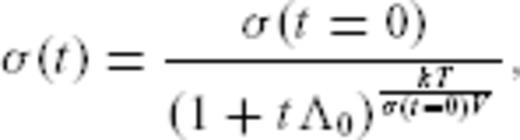

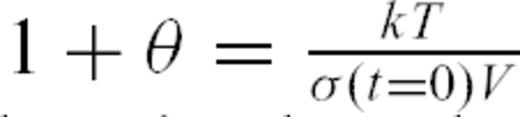

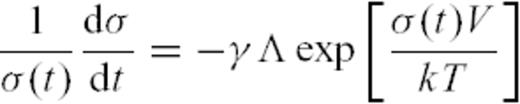

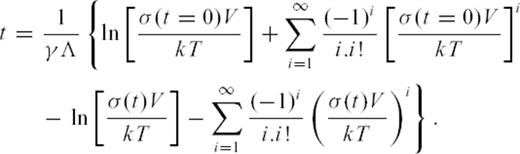

, the initial applied stress is σ(t = 0). As earthquakes are thermally activated, the average waiting time for the first event to occur is given by eq. (2)

, the initial applied stress is σ(t = 0). As earthquakes are thermally activated, the average waiting time for the first event to occur is given by eq. (2)

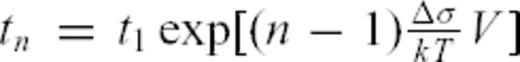

. Iterating this reasoning, we can show that tn, the average waiting time between events (n− 1) and n, is

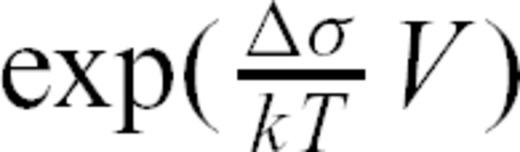

. Iterating this reasoning, we can show that tn, the average waiting time between events (n− 1) and n, is  . As waiting times between successive events define a geometrical sequence with common ratio

. As waiting times between successive events define a geometrical sequence with common ratio  , the average seismicity rate at location

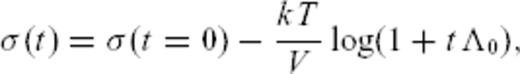

, the average seismicity rate at location  decreases as 1/t. As the cumulative stress drop is proportional to the number of events, the stress relaxes with time as

decreases as 1/t. As the cumulative stress drop is proportional to the number of events, the stress relaxes with time as

, expression (10) is undistinguishable from

, expression (10) is undistinguishable from

.

.
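The statement that geometrically growing waiting times produce a seismicity rate decaying as 1/t can be checked numerically. The sketch below is a toy illustration: the first waiting time and the common ratio are arbitrary assumed values, not quantities taken from the model.

```python
def event_times(first_wait, ratio, n_events):
    """Occurrence times when successive waiting times form a geometric sequence."""
    times, t, wait = [], 0.0, first_wait
    for _ in range(n_events):
        t += wait
        times.append(t)
        wait *= ratio
    return times


def local_rate(times, k):
    """Finite-difference rate at event k: one event per preceding waiting time."""
    return 1.0 / (times[k] - times[k - 1])


# Arbitrary illustrative values: first waiting time 1, common ratio 2.
times = event_times(first_wait=1.0, ratio=2.0, n_events=30)

# If the rate decays as 1/t, then rate * t tends to a constant.
products = [local_rate(times, k) * times[k] for k in range(10, 30)]
```

For a common ratio r > 1 the product of rate and time converges to r/(r − 1), here 2, so the rate is inversely proportional to time at large t.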

3 Methodology of the Empirical Multifractal Analysis

3.1 Step 1: selection of aftershocks

In this paper, we use a method for stacking aftershock time-series which is slightly different from the one used in Ouillon & Sornette (2005), especially in the way we take account of the time dependence of the magnitude threshold Mc(t) of completeness of earthquake catalogues.

Aftershock time-series are then sorted according to the magnitude of the main event, and stacked using a superposed epoch procedure within given main shock magnitude ranges. This means that we shift the occurrence time of each main shock to t = 0. For each main event, the associated aftershock sequence is shifted in time by the same amount. In this new time frame, all events tagged as aftershocks in the original catalogue occur within the time interval [0;T]. We choose main shock magnitude intervals to vary by half-unit magnitude steps, such a magnitude step being probably a rough upper-bound for the magnitude uncertainties (see Werner & Sornette 2008, and references therein). Shifting individual aftershock time-series to the same common origin allows us to build composite aftershock sequences that feature a large number of events, even if the magnitude of the main shock is low. For example, a single magnitude 2.0 event may trigger at most 1 or 2 detected aftershocks (and most of the time none at all), which makes it impossible to quantify any rate. But collecting and stacking 10 000 aftershock sequences triggered by as many magnitude 2.0 events allows one to build a time-series featuring several hundreds to thousands of aftershocks. An average p-value can then be estimated with a good accuracy for main shocks of such small magnitude ranges.
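The superposed epoch procedure described above can be sketched as follows. The data layout (a list of tuples) and the function name are assumptions made for illustration, and the toy catalogue is invented.

```python
def stack_aftershocks(sequences, mag_min, mag_max):
    """Superposed epoch stack of aftershock delays.

    sequences: list of (mainshock_time, mainshock_magnitude, aftershock_times).
    Each main shock with mag_min <= M < mag_max is shifted to t = 0 and its
    aftershocks are shifted by the same amount; all delays are then merged.
    """
    delays = []
    for t0, magnitude, aftershock_times in sequences:
        if mag_min <= magnitude < mag_max:
            delays.extend(t - t0 for t in aftershock_times)
    return sorted(delays)


# Invented toy catalogue: two small main shocks and one larger one.
toy_sequences = [
    (10.0, 2.1, [10.5, 12.0]),
    (100.0, 2.4, [100.25]),
    (50.0, 5.0, [51.0]),
]

# Stack for the half-unit magnitude range [2.0, 2.5).
stacked = stack_aftershocks(toy_sequences, 2.0, 2.5)
```

The stacked delays can then be binned in time to estimate a decay rate for that magnitude range, even though each individual sequence holds too few events to do so.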

This methodology to build stacked aftershock series is straightforward when the magnitude threshold Mc(t) of catalogue completeness is constant with time, which is the case for the Harvard catalogue, for example. For the SCEC and JMA catalogues, we take into account the variation of Mc(t) as follows. Individual aftershock time-series are considered in the stack only if the magnitude of the main event, occurring at time t0, is larger than Mc(t0). If this main event obeys that criterion, only its aftershocks above Mc(t0) are considered in the series. This methodology allows us to use the maximum amount of data with sufficient accuracy to build a single set of stacked time-series of aftershock decay rates. The time variation of Mc has been estimated by plotting the magnitude distribution of earthquakes year by year in each catalogue, and assuming that Mc coincides with the onset of the Gutenberg-Richter law.
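The completeness rule just described can be written as a small filter. This is a sketch under stated assumptions: the step-like completeness function, the data layout and the numerical values are all hypothetical and only serve to show the logic.

```python
def select_complete(sequences, completeness):
    """Apply the Mc(t0) rule to individual aftershock sequences.

    sequences: list of (t0, mainshock_magnitude, [(time, magnitude), ...]).
    completeness: callable returning the completeness magnitude Mc at time t.
    A sequence is kept only if the main shock magnitude exceeds Mc(t0);
    within a kept sequence, only aftershocks above Mc(t0) are retained.
    """
    kept = []
    for t0, magnitude, aftershocks in sequences:
        mc = completeness(t0)
        if magnitude > mc:
            kept.append((t0, magnitude, [(t, m) for t, m in aftershocks if m > mc]))
    return kept


def toy_completeness(t):
    """Hypothetical threshold: 3.0 before 1980, 2.0 afterwards."""
    return 3.0 if t < 1980.0 else 2.0


toy_sequences = [
    (1975.0, 3.5, [(1975.1, 2.5), (1975.2, 3.2)]),
    (1975.5, 2.8, [(1975.6, 2.9)]),
    (1990.0, 2.8, [(1990.1, 2.5), (1990.3, 1.5)]),
]
selected = select_complete(toy_sequences, toy_completeness)
```

The threshold is evaluated once, at the main shock time t0, and then applied to the whole sequence, which mirrors the rule stated in the text.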

The time variation of Mc we propose should not be considered too strictly. For example, if a catalogue is considered to be complete down to Mc = 3.0, this does not prevent it from being incomplete during the first days that follow a magnitude 7 event. Indeed, any declustering algorithm should first define a Mc(t) function that describes the detection threshold magnitude for isolated main shocks. But in return, estimating Mc(t) requires declustering the catalogue first. As our estimation of Mc(t) takes account of all events in the catalogue, including aftershocks, it thus provides an overestimate of the Mc(t) threshold for main shocks.

Ouillon & Sornette (2005) used a slightly different strategy accounting for the variation of Mc with time by dividing the SCEC catalogue into subcatalogues covering different time intervals over which the catalogue was considered as complete above a given constant magnitude threshold. This led Ouillon & Sornette (2005) to analyse four such subcatalogues separately. The robustness of the results presented below in the presence of these algorithmic variations is a factor strengthening our belief that the multifractal time dependent Omori law that we report in this paper is a genuine phenomenon and not an artefact of the data analysis or of the catalogues.

The method we used to decluster our earthquake catalogues involves a certain amount of arbitrariness, like the choice of the sizes of the spatial and temporal windows to select aftershocks. The values we chose are similar to those used in other works using the same kind of declustering technique. We should mention that two techniques have been recently introduced to remove the arbitrariness of aftershock selection rules. Each of them assumes that each event has a given probability of being triggered by any of the events that preceded it, and a probability of being a spontaneous background event. All probabilities (that are equivalent to rates) sum up to 1 for each event. The first approach (Zhuang et al. 2004) assumes that the phenomenology of the space-time organization of earthquakes can be modelled by an ETAS process with energy, space and time power-law kernels. The declustering procedure just consists in inverting the parameters controlling the shapes of the kernels, like the p-value. This is done using a maximum likelihood approach. The second approach (Marsan & Lengliné 2008) is a non-parametric one based on an expectation-maximization algorithm. In this case, the functional form of the kernels is a priori unknown and is inverted as well. This last approach is much more general than the one of Zhuang et al. (2004) and has been claimed by its authors to be completely model-independent, and thus free of any arbitrariness. We would like to moderate this view and point out that this method is certainly the best one to use when one is dealing with a data set generated by an ETAS-like algorithm, but that it is not adapted to the declustering of earthquake catalogues. As previously mentioned, the method of Marsan & Lengliné (2008) assumes that at any place and any time, the earthquake nucleation rate is the sum of individual rates triggered by each of the events that occurred in the past, plus a background rate. This assumption has the advantage of allowing easy computations, but it is fundamentally incompatible with what is known about the physics of earthquakes. If we assume that stress is elastically redistributed after any event, then the stress at any place and time is the sum of previous stress redistributions (and far-field loading). If we assume that earthquakes are exponentially activated by stress and temperature, an assumption which underlies most models of earthquake nucleation, then it follows that the resulting rate due to previous events is proportional to the product of individual triggered rates, not their sum. The approaches of Zhuang et al. (2004) and Marsan & Lengliné (2008) are thus intrinsically incompatible with all we know about earthquake nucleation physics. Indeed, introducing this kind of linear declustering methodology assumes a priori that earthquakes are governed by a linear process, which is quite a strong assumption. The fact that Marsan & Lengliné (2008) observe a rather weak magnitude-dependent p-value is likely to be the consequence of such an a priori choice, since linear models do not predict magnitude-dependent p-values by construction. We are perfectly aware that our simple method of declustering separates some main shocks from the rest of seismicity, whereas our model states that earthquake triggering involves the whole hierarchy of triggering events, down to very small and even undetected magnitudes. Unfortunately, no general non-linear declustering scheme, taking account of the triggering effect of every event, presently exists. The main difficulty in defining such a non-linear probabilistic model is that one has to know in advance the nature of the non-linearity (which in our case amounts to dealing with elastic stresses and their sign).
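
The contrast between summed and multiplied rates can be written out in a few lines. The expressions below are only a sketch: they assume a generic Arrhenius-type activation law with an activation volume V and a thermal energy kT, symbols that are introduced here for illustration and are not taken from the text above.

```latex
% Elastic stress superposition: contributions of past events add up.
\sigma(\mathbf{r},t) = \sigma_{\mathrm{far}}(\mathbf{r},t)
  + \sum_{i:\,t_i<t} \Delta\sigma_i(\mathbf{r},t)

% Exponential activation (assumed form): the sum of stresses becomes a product of factors.
\lambda(\mathbf{r},t) \propto \exp\!\left[\frac{V\,\sigma(\mathbf{r},t)}{kT}\right]
  = \exp\!\left[\frac{V\,\sigma_{\mathrm{far}}}{kT}\right]
    \prod_{i:\,t_i<t} \exp\!\left[\frac{V\,\Delta\sigma_i(\mathbf{r},t)}{kT}\right]

% Linear (ETAS-like) declustering instead posits a sum of individual rates.
\lambda_{\mathrm{lin}}(\mathbf{r},t) = \mu(\mathbf{r})
  + \sum_{i:\,t_i<t} \phi_i(\mathbf{r},t)
```

Under this assumed activation law, each past event multiplies the rate by its own factor, which may be smaller than 1 when the stress change is negative; a purely additive scheme cannot represent such a reduction.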

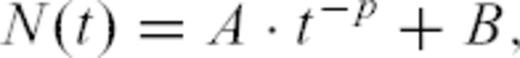

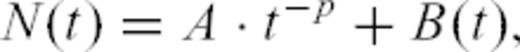

3.2 Step 2: fitting procedure of the stacked time-series

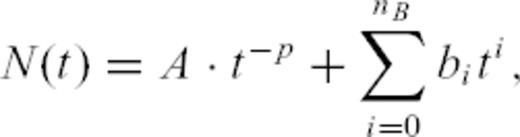

As the linear density of bins decreases as the inverse of time, each bin receives a weight proportional to time, balancing the weight of data points along the time axis. In our binning, the width of consecutive intervals increases by a factor α > 1. Since the choice of α is arbitrary, it is important to check the robustness of the results with respect to α. We thus performed fits on time-series binned with 20 different values of α, from α = 1.1 to 3 in steps of 0.1. We then checked whether the fitted parameters A, B and p were stable as a function of α. We observed that the inverted parameters do not depend significantly on α. We used the 20 estimates of A, B and p for the 20 different α's to construct their averages and standard deviations. In some rare cases, we obtained p-values departing clearly from the average (generally for the largest or smallest values of α); we excluded them and performed a new estimate of p, following here the standard procedure of robust statistical estimation (Rousseeuw & Leroy 1987). In order to provide reliable fits, we excluded the early times of the stacked series, where aftershock catalogues appear to be incomplete (Kagan 2004). Finally, an average p-value (and its uncertainty) determined within the main shock magnitude interval [M1;M2] is associated with the magnitude $(M_1 + M_2)/2$. Our approach extends that of Ouillon & Sornette (2005) who performed fits on the same kind of data using only a single value α = 1.2.
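
The binning and the robustness check over α can be sketched as follows. This is a simplified illustration, not the fitting procedure used in this paper: it replaces the full fit of A, B and p by an unweighted log-log regression that neglects the background term B, so it is only meaningful well before the rate reaches the background level. All function names are hypothetical.

```python
import numpy as np

def log_binned_rate(times, t_min, t_max, alpha):
    """Event rate in bins whose widths grow by a factor alpha."""
    n_bins = int(np.floor(np.log(t_max / t_min) / np.log(alpha)))
    edges = t_min * alpha ** np.arange(n_bins + 1)
    counts, _ = np.histogram(times, bins=edges)
    centres = np.sqrt(edges[:-1] * edges[1:])  # geometric bin centres
    rates = counts / np.diff(edges)            # events per unit time
    return centres, rates

def p_from_binned(times, t_min, t_max, alpha):
    """Omori exponent from the log-log slope (background neglected)."""
    t, r = log_binned_rate(times, t_min, t_max, alpha)
    ok = r > 0                                 # empty bins cannot be logged
    slope, _ = np.polyfit(np.log10(t[ok]), np.log10(r[ok]), 1)
    return -slope

def p_over_alphas(times, t_min, t_max):
    """Mean and standard deviation of p over alpha = 1.1, 1.2, ..., 3.0."""
    alphas = np.round(np.arange(1.1, 3.05, 0.1), 1)  # 20 values
    ps = np.array([p_from_binned(times, t_min, t_max, a) for a in alphas])
    return ps.mean(), ps.std(ddof=1)
```

A small standard deviation across the 20 values of α indicates that the estimated exponent does not depend on the arbitrary choice of binning factor.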

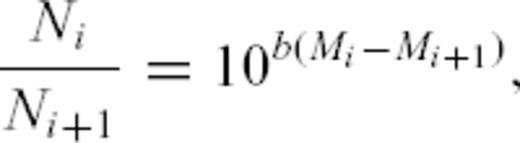

The time interval over which the fit is performed is chosen such that the event rate time-series scales as an Omori-Utsu law whatever the value of the α-parameter. For a given magnitude range, all time-series are thus fitted using the same time interval. In return, this ensures that the time interval over which a power-law scaling is observed does not depend on the choice of a unique and arbitrary α value. The end time is usually taken as t = 1 yr, except if a large burst of activity occurs near that time (as is the case for example for the largest magnitude range in Fig. 3). The beginning of the fitting interval has been chosen by visual inspection for each value of α, and we subsequently checked that the chosen common fitting interval for the least-squares approach was similar to the one used for the SFA method (see below).

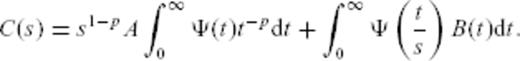

![Binned stacked series of aftershock sequences in the SCEC catalogue (after removing the events in the Brawley zone) for various magnitude ranges. Magnitude ranges are, from bottom to top: [1.5;2], [2;2.5], [2.5;3], [3;3.5], [3.5;4], [4;4.5], [4.5;5], [5;5.5], [5.5;6], [6;6.5], [6.5;7], [7;7.5], [7.5;8]. The solid lines show the fits to individual time-series with formula (15). All curves have been shifted along the vertical axis for the sake of clarity.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/gji/178/1/10.1111/j.1365-246X.2009.04079.x/3/m_178-1-215-fig001.jpeg?Expires=1749849640&Signature=QrE7cvdpP5fWyyfBDOekX1t0zlKMn-Yz-PC14zcTkaL9xI~oCSc-PPgoAtfhegCatnGPC2mkR6V80FMdezSLzxC57lpvE7RNwqwTsqVpwijm9X87ixHlIdEePVxi5xlTuoKRo52ptigElJ-oLwn4A0JQ1AcBhbWpwWyFJgMhoy~uNE5G3VueW6dm9GMy54~JUsQovOISN9OIZHEdwAXF3NU2LWo3h3SGxFgtAUdE9gpLn2lHRHzcfgCyq2DN8aoJrz~hZ4KVC5w5J-EziogteuZHiRTW7fZKeDNiKvQlMsksNoxbvkCNrRlGVq7bgWiZWr0aDFLvIl2T63EUzKtfuw__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

For each magnitude range, we thus have 20 different binned time-series corresponding to different values of α. For the sake of clarity, we only plot the binned aftershocks time-series whose p-value is the closest to the average p-value obtained over the 20 different values α for that magnitude range. Its fit using eq. (15) will be plotted as well.

Using binned time-series and OLS fits is very popular in the literature to determine p-values. The next two sections will point out that the quality of the selected aftershock time-series generally suffers from sampling biases. For low magnitude main shocks, the sequences can be contaminated by sequences triggered by previous events. Sequences triggered by large magnitude events often suffer from time-dependent undersampling of small aftershocks. The next two sections address those problems and propose new methods to solve them. They will also provide independent estimations of the p-values for the different main shock magnitude ranges.

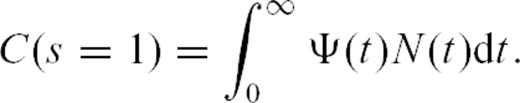

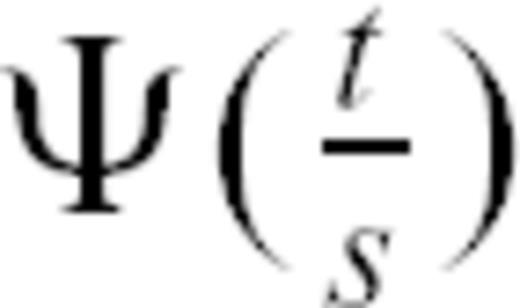

3.3 Step 3: scaling function analysis

In order to address this question, that is, to take account of the possible time-dependence of B, two strategies are possible:

- (i) The 20 different binned time-series can be fitted using eq. (17) with the unknowns being A, p, nB and the bi's,

- (ii) One can use weights in the fitting procedure of the original data so that the polynomial trend is removed. One is then left with a simple determination of A and p alone.

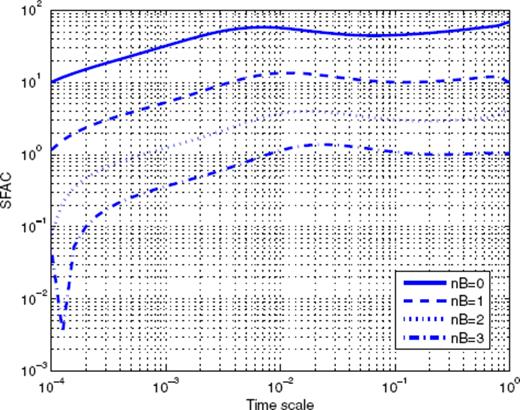

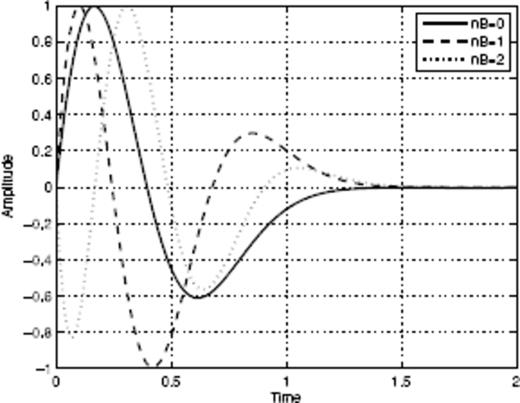

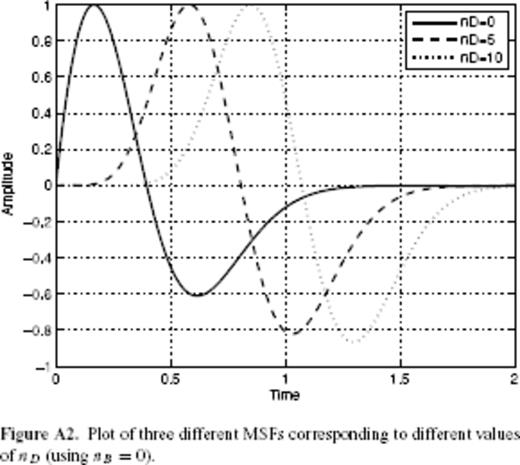

We have implemented the second strategy in the form of what we refer to as the ‘SFA’. The Appendix describes in detail this method that we have developed, inspired by the pioneering work of Bacry et al. (1993) on wavelet transforms, and presents several tests performed on synthetic time-series to illustrate its performance and the sensitivity of the results to the parameters. Those tests show that the SFA method will be very useful to process aftershock sequences triggered by small main shocks for which a power-law scaling can be observed down to very small timescales. For sequences following larger magnitude events, a breakdown of scaling properties is often observed due to magnitude-dependent sampling problems at early times. The help of the SFA method is then more limited (see the Appendix). The next section proposes yet another new method that allows one to deal with those undersampling biases. Note that the SFA method introduces another parameter nD which controls the shape of the wavelet close to t = 0. The Appendix discusses the role of this parameter as well. In their attempt to quantify diffusion processes within individual aftershock sequences of large Californian events, Helmstetter et al. (2003) performed a wavelet analysis of triggered time-series at various spatial scales. This wavelet analysis in the time domain is equivalent to the present SFA in the case nB = nD = 0.

3.4 Step 4: time variation of maximum magnitudes

Fitting of binned time-series or of the SFA coefficient as a function of timescale requires in both cases the selection of reliable aftershock catalogues. This step is by far the most difficult, especially for short timescales after a large event, as the earthquake activation rate is so large that it proves impossible to pick seismic-wave arrival times and locate every event, especially the smallest ones. This human and instrumental limitation is generally invoked to explain the observed roll-off of the aftershock nucleation rate just after a large main shock.

Helmstetter et al. (2005) use eq. (18) to correct their aftershock time sequences for the missing events using a very simple reasoning: if a main shock of magnitude M occurs at time τ = 0 then, at time t, the catalogue of aftershocks is complete above a magnitude mth given by eq. (18). Assuming that the Gutenberg-Richter law holds at any time with a constant b-value, it follows that N0(t), the theoretical total number of events triggered at time t, is proportional to $N_{obs}[m > m_{th}(M,t)]\,10^{b\,m_{th}(M,t)}$, where Nobs[m > mth(M, t)] is the observed number of aftershocks of magnitude larger than mth(M, t). Plotting N0(t) as a function of t thus allows one to estimate the rate of aftershocks as a function of time, including missing events in the time-series. Helmstetter et al. (2005) observe that this approach provides a significant increase of the temporal range available to measure the p-value of the Omori law.
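
The Gutenberg-Richter correction described above amounts to rescaling the count of events observed above the detection threshold. The sketch below is an illustration under stated assumptions: eq. (18) is not reproduced here, so the threshold m_th is passed in as a plain number, and the reference magnitude m_ref (which only fixes the proportionality constant) is a hypothetical parameter.

```python
import numpy as np

def corrected_count(mags, m_th, b=1.0, m_ref=0.0):
    """Quantity proportional to the total number of events N0.

    mags:  magnitudes observed in a given time window
    m_th:  completeness magnitude in that window (from eq. 18 or any other rule)
    b:     Gutenberg-Richter b-value, assumed constant
    m_ref: hypothetical reference magnitude setting the normalization
    """
    mags = np.asarray(mags, dtype=float)
    n_obs = np.count_nonzero(mags > m_th)      # events above the threshold
    # Gutenberg-Richter: N(>m) scales as 10**(-b*m), so extrapolate down to m_ref.
    return n_obs * 10.0 ** (b * (m_th - m_ref))
```

Applying this function window by window, with m_th decreasing as time elapses after the main shock, gives a rate estimate that includes the events missed at early times.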

While this approach seems at first sight simple and efficient, it nevertheless possesses several drawbacks. First, it requires a careful analysis of several aftershock sequences to obtain eq. (18), which is a very tedious task. It is also obvious that all coefficients in this equation will depend on the geometry of the permanent seismic station network at the time of occurrence of the main event. Thus, this equation cannot be used for all earthquakes in a given catalogue: every time a change occurs in the geometry of the seismograph network, coefficients in eq. (18) have to be updated, requiring a new analysis of a sufficient number of individual sequences spanning a large spectrum of main shock magnitudes. This also means that all coefficients have to be determined for a single given catalogue: the various parameters for Southern California are not valid for the JMA or the Harvard catalogues.

Another important problem is that mth(M, t) has a much more complex time-dependence than the one given by eq. (18). To show this, we shall consider a simple example. We assume that a main event E1 of magnitude M1 = 7.5 occurs at time τ = 0. As mentioned above, using eq. (18) predicts that all triggered events of magnitude m > 2 will be detected after a time lapse of about 3 weeks. We now suppose that, 6 months after the main event, another event E2 of magnitude M2 = 7.5 occurs within a short distance of E1. This event, as well as the majority of its aftershocks, will thus be considered as aftershocks of event E1. It is now important to understand that, after event E2 occurs, many events of magnitude m < 2 will be missed for a time span of about 3 weeks. It follows that the aftershock catalogue of event E1 will also be depleted of the same quantity of aftershocks during a time interval that spans (6 months, 6 months + 3 weeks). The simple prediction of eq. (18) is thus not verified for event E1. One can extend this simple reasoning to different values of M1 and M2 and show that, in an aftershock time sequence, every aftershock is itself followed by a time interval within which events are missing. It follows that any aftershock time sequence misses events not only at its beginning, but also all along its duration. It is a priori difficult to correct for this effect as it depends heavily on the specific time distribution of the aftershock magnitudes characterizing each sequence.

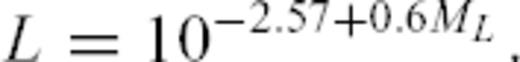

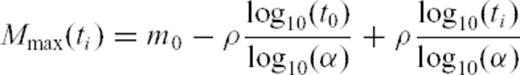

The new method we propose in this section allows one to analyse aftershock time-series without the need to define any magnitude detection threshold. It is based on two simple assumptions: the first one is that the Gutenberg-Richter law holds down to the smallest possible earthquake source magnitude Mmin and that the b-value is independent of the magnitude of the main shock and of the time elapsed since that main shock occurred. The second assumption reflects the way seismologists deal with, select and process seismic signals: for a variety of reasons, one is mostly interested in the largest events in a time-series. This stems from the fact that they are generally associated with a high signal-to-noise ratio and are detected by a large number of stations, allowing a clean inversion of the data (such as hypocentre location, focal mechanism and source dynamics). They also dominate the rate of energy and moment release in the sequence, and thus the potential damage that may be due to large aftershocks. As much interest thus focuses on the largest events, and because they are obviously easier to detect, it is reasonable to state that, in any given time interval, the event that has the smallest probability to be missed is the largest one. Indeed, even if the P- and/or S-waves arrival times prove to be unreadable due to the superposition of waves radiated by numerous other smaller events, one will anyway be able to pick up the arrival times of the maximum amplitude of the P or S waves, which shall be sufficient to provide the spatial location of the associated event and compute its magnitude with reasonable accuracy. We will thus assume that this reasoning holds true even after the occurrence of a large event, except maybe during the early part of the associated coda waves, whose amplitude may completely hide any immediate aftershock.

The number of events in time bin i is then estimated as $N_i = 10^{b(M_i - M_{min})}$, where Mi is the magnitude of the largest event that occurred within that bin. This value for Ni just states that there is on average one event of magnitude Mi in the time bin i. Writing that $N_{i+1}/N_i = \alpha^{1-p}$ for an Omori decay sampled in bins whose widths grow by the factor α, we get $M_{i+1} - M_i = \rho$, with $\rho = (1-p)\log_{10}(\alpha)/b$. If we now set Mmax(ti) = Mi, we get Mmax(ti) = m0 + iρ, where m0 is a constant. Noting that $t_i = t_0\alpha^i$, we get $i = \log_{10}(t_i/t_0)/\log_{10}(\alpha)$, yielding finally $M_{max}(t) = m_0' + \rho'\log_{10}(t)$, with slope $\rho' = (1-p)/b$ and $m_0'$ a constant. The slope is zero if p = 1: in that case, the total number of events remains constant within each logarithmic time bin, so that the typical maximum magnitude within each bin does not vary. The observation that the total number of events counted in logarithmic time bins is roughly constant has been previously advanced to support the pure Omori law ∼1/t, with p = 1 (Kagan & Knopoff 1978; Jones & Molnar 1979). Our present purpose is to refine these analyses and show that p actually deviates from 1 in a way consistent with the MSA model. If p < 1, the total number of events within each bin increases with time, and so does Mmax. The reverse holds if p > 1. This method does not require the estimation of the total number of events within each time bin, because it assumes that the magnitude of the largest event within a given bin is a reliable measure of that total number. We are presently developing a more rigorous approach based on a maximum likelihood estimation of p and K that will be presented in a forthcoming paper. The main drawback of this method is that it presently does not take into account the background seismicity rate. This method is therefore restricted to time-series where the contribution of the background rate is small relative to the Omori power-law decaying rate. This restriction is not serious for the time sequences triggered by the largest main events. For smaller main events, we will focus on time intervals where the total rate is at least 10 times larger than the background rate, based on the visualization of the binned time-series presented in Section 3.2. Similarly to the analysis of the binned time-series, we will consider 20 different possible values of α, leading us to obtain as many p-values, with their average and standard deviation for each main shock magnitude range. For all catalogues, we will consider that b = 1, so that for each fit we have p = 1 − ρ′. It is easy to show that if we assume b = 0.9 (respectively b = 1.1) for instance, our estimates of a0 have to be decreased (respectively increased) by 10 per cent. The choice of b is thus not critical to estimate the multifractal properties of a seismic catalogue.
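
The maximum-magnitude monitoring described in this section can be sketched in a few lines. The code is a minimal illustration, not the implementation used for the results below: the function name and arguments are hypothetical, the fit is an unweighted least-squares line, and the relation p = 1 − bρ′ is the general form implied by the Gutenberg-Richter assumption (it reduces to p = 1 − ρ′ for b = 1).

```python
import numpy as np

def p_from_mmax(times, mags, t_min, t_max, alpha, b=1.0):
    """Estimate p from the slope of Mmax versus log10(t) in log-spaced bins."""
    times = np.asarray(times, dtype=float)
    mags = np.asarray(mags, dtype=float)
    n_bins = int(np.floor(np.log(t_max / t_min) / np.log(alpha)))
    edges = t_min * alpha ** np.arange(n_bins + 1)
    idx = np.digitize(times, edges) - 1        # bin index of each event
    centres, m_max = [], []
    for k in range(n_bins):
        in_bin = mags[idx == k]
        if in_bin.size:                        # skip empty bins
            centres.append(np.sqrt(edges[k] * edges[k + 1]))
            m_max.append(in_bin.max())         # largest event in the bin
    rho_prime, _ = np.polyfit(np.log10(centres), m_max, 1)
    return 1.0 - b * rho_prime
```

Only the largest event of each bin enters the fit, so no completeness threshold has to be specified; the price is that a non-negligible background rate would bias the slope.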

4 Results

4.1 The Southern California catalogue

4.1.1 Selection of the data

Ouillon & Sornette (2005) have analysed the magnitude-dependence of the p-value for aftershocks sequences in Southern California. However, since we have here developed different methods to build binned stacked series and to fit those series, it is instructive to reprocess the Southern California data in order to (1) test the robustness of Ouillon & Sornette (2005)'s previous results and (2) provide a benchmark against which to compare the results obtained with the other catalogues (Japan and Harvard). This also provides a training ground for the new SFA and maximum magnitude monitoring methods.

The SCEC catalogue we use is the same as in Ouillon & Sornette (2005), except that it now spans a larger time interval (1932–2006 inclusive). The magnitude completeness threshold is taken with the same time dependence as in Ouillon & Sornette (2005): Mc = 3.0 from 1932 to 1975, Mc = 2.5 from 1975 to 1992, Mc = 2.0 from 1992 to 1994 and Mc = 1.5 since 1994. We assume a value Δ = 5 km for the spatial location accuracy (instead of 10 km in Ouillon & Sornette 2005). This parametrization allows us to decluster the whole catalogue and build a catalogue of aftershocks, as previously explained.
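
The time-dependent completeness threshold and the resulting selection rule can be expressed compactly. The sketch below rests on two assumptions that the text does not settle: each boundary year (1975, 1992, 1994) is assigned to the later period, and the threshold is treated as inclusive. The function names are hypothetical.

```python
def mc_scec(year):
    """Completeness magnitude of the SCEC catalogue for a given year.

    Assumption: each boundary year belongs to the later period.
    """
    if year < 1975:
        return 3.0
    if year < 1992:
        return 2.5
    if year < 1994:
        return 2.0
    return 1.5

def select_sequence(t0_year, main_mag, aftershock_mags):
    """Apply the completeness rule to one aftershock sequence.

    Returns the retained aftershock magnitudes, or None when the main shock
    itself falls below Mc(t0). Assumption: the threshold is inclusive.
    """
    mc = mc_scec(t0_year)
    if main_mag < mc:
        return None
    return [m for m in aftershock_mags if m >= mc]
```

The threshold is evaluated once, at the time of the main shock, and then applied to the whole sequence, which keeps each stacked series internally consistent.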

4.1.2 An anomalous zone revealed by the SFA

The obtained binned stacked series are very similar to those presented by Ouillon & Sornette (2005). However, the SFA reveals deviations from a pure power-law scaling of the aftershock sequences, which take different shapes for different magnitude ranges, as we now describe.

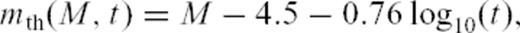

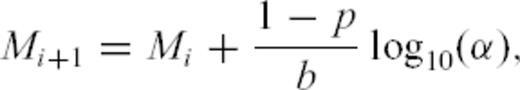

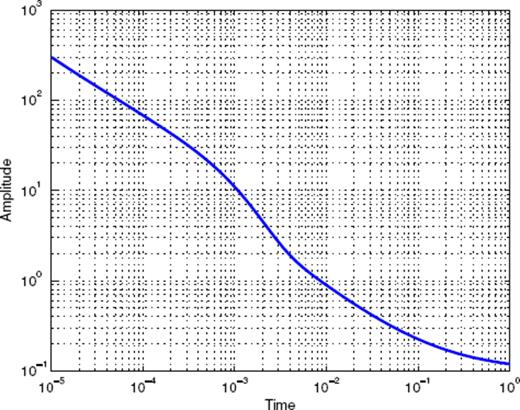

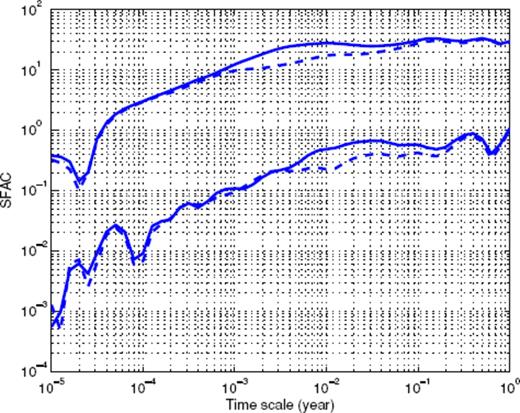

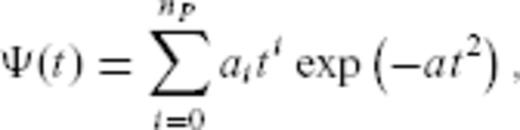

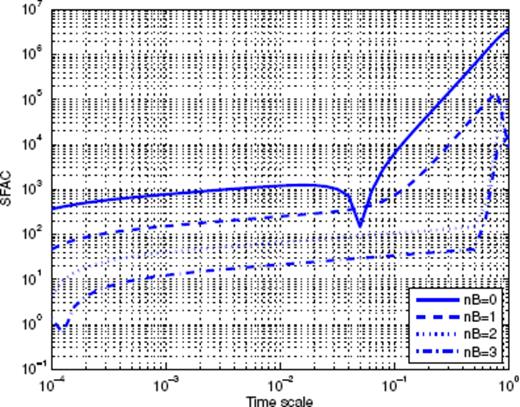

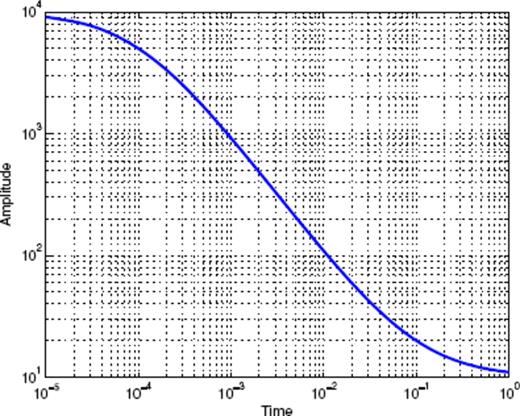

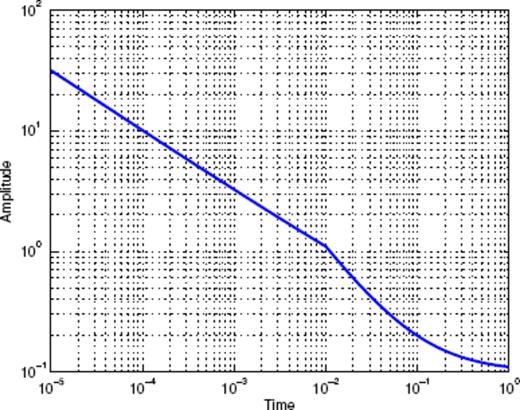

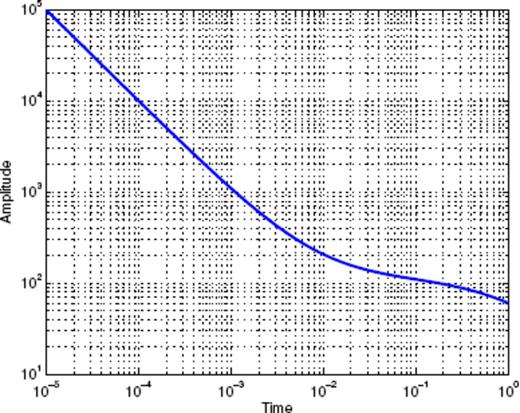

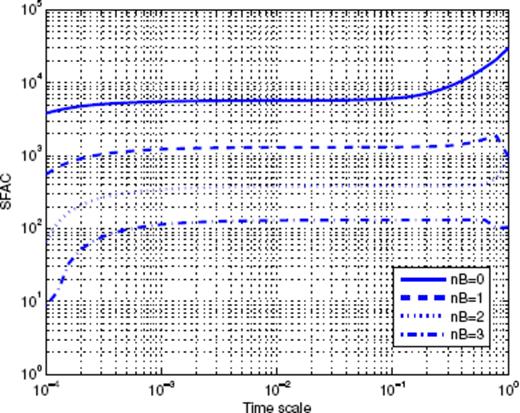

Let us first consider Fig. 1, which shows the binned stacked series obtained for main shock magnitudes in the interval [4;4.5]. The many data points represent the binned series for all values of the binning factor α. The aftershock decay rate does not appear to be a pure power law, and displays rather large fluctuations. A first scaling regime seems to hold from $10^{-5}$ to $4 \times 10^{-4}$ yr, followed by a second scaling regime up to $5 \times 10^{-3}$ yr, then a third scaling regime which progressively fades into the background rate. Note the similarity of this time-series with the synthetic one shown in Fig. A7 in the Appendix, which is the sum of three different contributions (a gamma law, a power law, and a constant background term). Fig. 2 shows the Scaling Function Analysis coefficient (SFAC) of the corresponding set of aftershocks. The two solid lines correspond, respectively (from top to bottom) to nB = 0 and 3.

![Binned stacked time-series of sequences triggered by main events with magnitudes M within the interval [4;4.5] in the SCEC catalogue, including the Brawley zone. This plot shows all binned series corresponding to all the 20 binning factors from α = 1.1 to 3.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/gji/178/1/10.1111/j.1365-246X.2009.04079.x/3/m_178-1-215-fig002.jpeg?Expires=1749849640&Signature=Y1LnBjOB513OCH~ZJ~7Q6ARW~PEpzA87f3EdQztU1Xdokm568paI-puFX7xbc13tiQkfLLrEcMmbajUtKQ2udOv8zxA8r3uOZURDsCu90fgcYviueY5HUjPVW1JZrqnrVSFzLEMDi~AddSnGyaog99dIMKG3JcgVlA8iWJzm4aqTQb8fA7u6lx31z~fgqMpWZXHP8-R-AKY7-NJs1awKYbEBzmWhFSph4pHYsnDpg7xGaGI20CI8eEUZqQD~LbHobRc0kX8sA5nqo2kyUX2C0XtZ21~fMPz-KoDXuNrw7T037lN6bMJP6hJqf3H9Ew82ygyOJWtli8q60fXOv6YNhg__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

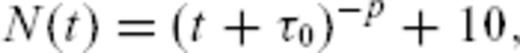

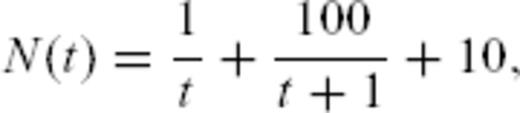

Time-series defined as the sum of a Gamma function, an Omori law and a constant background term.

Scaling function analysis coefficient (SFAC) of the time-series shown in Fig. 1. The two top curves correspond to nB = 0, the bottom curves to nB = 3. The solid curves refer to the data sets which include events in the Brawley zone. The dashed curves correspond to the data sets excluding those events.

The first important observation is that the shape of the SFAC as a function of scale is independent of nB. This means that the term B(t) in (16) is certainly quite close to a constant. Secondly, we clearly observe that a first power-law scaling regime holds for timescales within [$5 \times 10^{-5}$; $5 \times 10^{-3}$] (for nB = 0 and similarly with the same exponent for nB = 3). The exponent being ≃ 0.6, this suggests a p-value equal to p = 0.4. Each curve then goes through a maximum, followed by a decay, and then increases again. This behaviour is strikingly similar to the one shown in Fig. A8 in the Appendix. This suggests that the time-series shown in Fig. 2 may be a mixture of several different contributions, such as gamma and power laws. This simple example shows that the SFA provides clear evidence of a mixture of aftershock sequences of different nature within the same stacked series, a fact that has never been considered in previous studies of the same or of other catalogues.

Scaling function analysis of the time-series shown in Fig. A7. Each curve corresponds to a given value of nB used to build the corresponding MSF.

We thus tried to identify in the SCEC catalogue those events that may be responsible for the non-Omori behaviour revealed by the SFA. After many trials, we were able to locate a very small spatial domain in which many short-lived sequences occur. This zone is located within [−115.6°; −115.45°] in longitude and [32.8°;33.1°] in latitude. It is located in the Imperial Valley area, and extends northwest from the Imperial fault to the San Andreas fault. It corresponds to the Brawley seismic zone, and its seismicity is known to be dominated by short-lived earthquake swarms (Habermann & Wyss 1984). In the remainder of the SCEC catalogue analysis, we decided to exclude any sequence triggered by a shock in this small zone. The impact of excluding the Brawley area is illustrated in Fig. 2 with the two dashed lines, which can be compared with the two continuous lines (which correspond to the full catalogue). Excluding the Brawley area significantly changes the scaling properties, and one can now measure an exponent p = 0.80 for timescales larger than $10^{-3}$ yr.
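
The exclusion of sequences triggered inside the Brawley zone is a simple geographic filter. The sketch below uses the longitude and latitude bounds quoted above; the record layout (dictionaries with 'lon' and 'lat' keys for the triggering shock) is a hypothetical choice made for the illustration.

```python
# Bounds of the Brawley seismic zone as quoted in the text (degrees).
BRAWLEY_LON = (-115.6, -115.45)
BRAWLEY_LAT = (32.8, 33.1)

def in_brawley(lon, lat):
    """True if the epicentre lies inside the Brawley rectangle."""
    return (BRAWLEY_LON[0] <= lon <= BRAWLEY_LON[1]
            and BRAWLEY_LAT[0] <= lat <= BRAWLEY_LAT[1])

def drop_brawley_sequences(sequences):
    """Keep only sequences whose triggering shock is outside the zone.

    sequences: list of dicts with hypothetical keys 'lon' and 'lat'
               giving the location of the triggering shock.
    """
    return [s for s in sequences if not in_brawley(s["lon"], s["lat"])]
```

The filter acts on the location of the triggering shock, so a sequence is dropped as a whole rather than event by event.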

4.1.3 Results on the cleaned SCEC catalogue using the binned stacked series

Following our identification of the anomalous Brawley zone, we removed all events in the aftershock catalogue associated with this zone, and reran our analysis of the binned stacked sequences using our three independent methods of analysis.

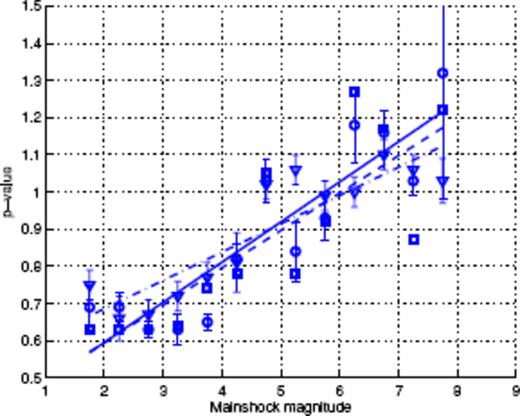

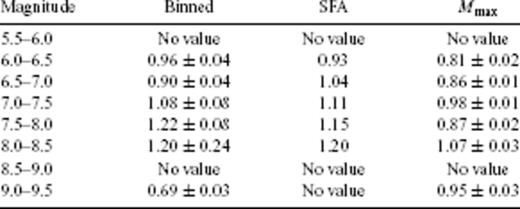

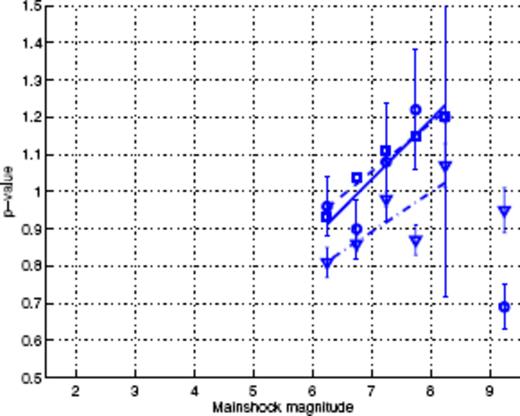

Fig. 3 shows the binned stacked series for the SCEC catalogue. Each series corresponds to a given magnitude range. For each magnitude range, for the sake of clarity, we chose to plot only one binned time-series, corresponding to a given α-value. The α-value we choose is the one for which the obtained p-value is the closest to the average p-value over all α values for that magnitude range. The solid lines show the fits of the corresponding series with formula (15). For each magnitude range, the average p-values and their standard deviations are given in Table 1. Fig. 4 shows the corresponding dependence p(M) of the p-value as a function of the magnitude of the main shocks. The dependence p(M) is well fitted by the linear law p(M) = 0.11M + 0.38. This relationship is very close to the dependence p(M) = 0.10M + 0.37, reported by Ouillon & Sornette (2005). Despite the differences in the catalogues and in the general methodology, we conclude that the results are very stable and confirm a significant dependence of the exponent of the Omori law as a function of the magnitude of the main shock.
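
To make the closeness of the two linear laws concrete, the short sketch below evaluates both fits at a few main shock magnitudes and computes the magnitude at which each fitted p(M) reaches 1. The function names are hypothetical; the coefficients are the ones quoted above.

```python
def p_this_study(mag):
    """Linear law fitted to the binned stacked series of this study."""
    return 0.11 * mag + 0.38

def p_ouillon_sornette_2005(mag):
    """Linear law reported by Ouillon & Sornette (2005)."""
    return 0.10 * mag + 0.37

def crossover_magnitude(slope, intercept):
    """Magnitude at which the fitted p(M) equals 1."""
    return (1.0 - intercept) / slope

if __name__ == "__main__":
    for mag in (2.0, 4.0, 6.0, 7.5):
        print(mag, round(p_this_study(mag), 3),
              round(p_ouillon_sornette_2005(mag), 3))
    print(round(crossover_magnitude(0.11, 0.38), 2),
          round(crossover_magnitude(0.10, 0.37), 2))
```

Over the magnitude range covered by the catalogue, the two laws differ by less than 0.1 in p, the gap growing slowly with magnitude because of the slightly different slopes.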

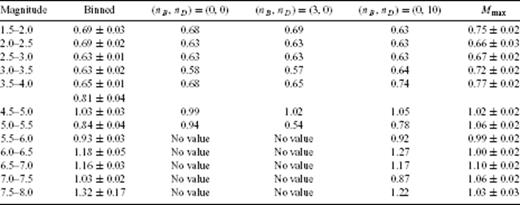

p-values for the SCEC catalogue obtained from fitting binned stacked sequences with formula (15) (second column), with the SFA (third to fifth columns) and with monitoring Mmax (last column). (nB, nD) correspond to the parameters used to define the mother scaling function (see the Appendix for definitions). p(M) values in the second, fifth and last columns are plotted in Fig. 4.

p(M) values obtained for the SCEC catalogue with fits of binned time-series (squares, second column of Table 1), Scaling Function Analysis (circles, fifth column of Table 1) and monitoring of Mmax (triangles, last column of Table 1). Continuous, dashed and dot-dashed lines stand for their respective linear fits.

The events within the SCEC catalogue we used are located within [−121.877°; −114.005°] in longitude and [31.3283°;38.4967°] in latitude. Recently, Helmstetter et al. (2007) showed that the completeness magnitude in Southern California displayed spatial variations, especially near the boundaries of that area where larger magnitude detection thresholds are observed. We thus performed a second binned time-series analysis, taking account only of events occurring within [−120;−116] in longitude and [34;38] in latitude. The results we obtained provide a relationship p(M) = 0.11M+ 0.31, which is in good agreement with the one we obtained using the whole catalogue. The other methods of analysis were thus applied to the previous, larger catalogue too.

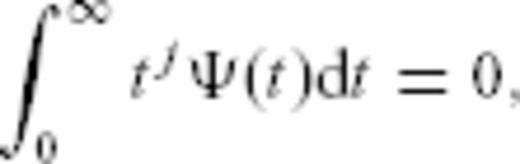

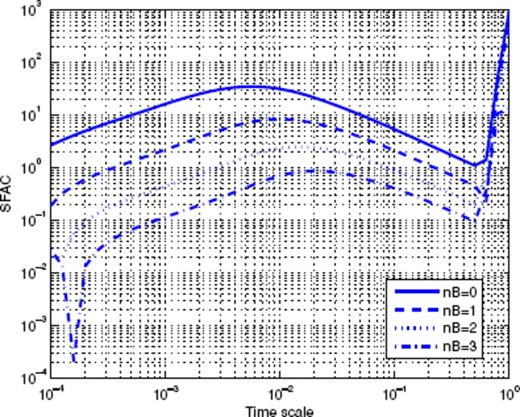

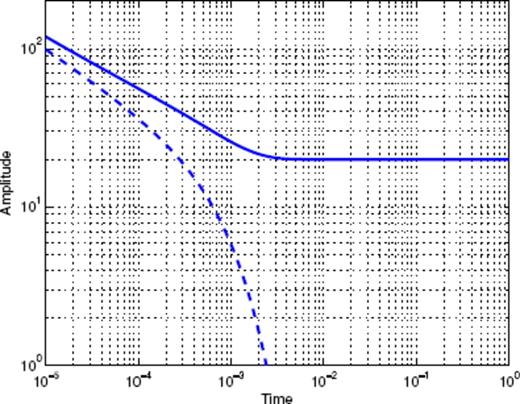

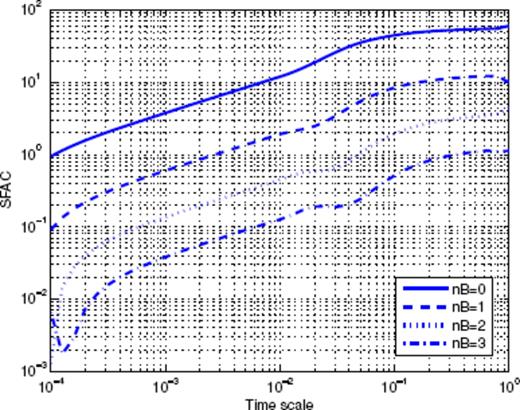

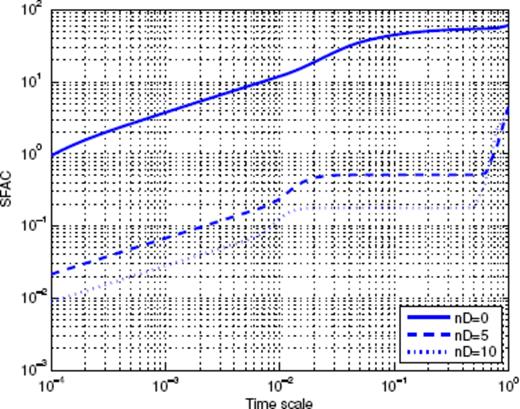

4.1.4 Results obtained with the SFA method

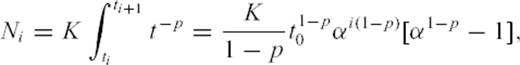

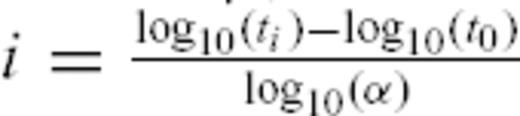

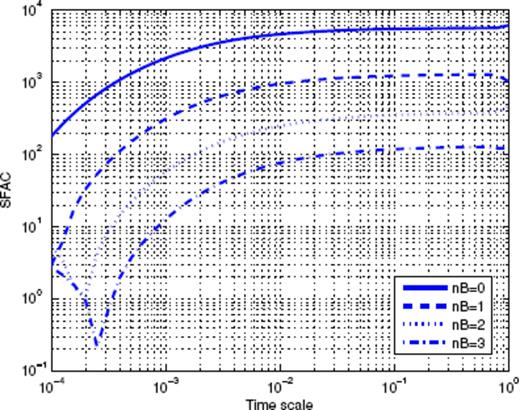

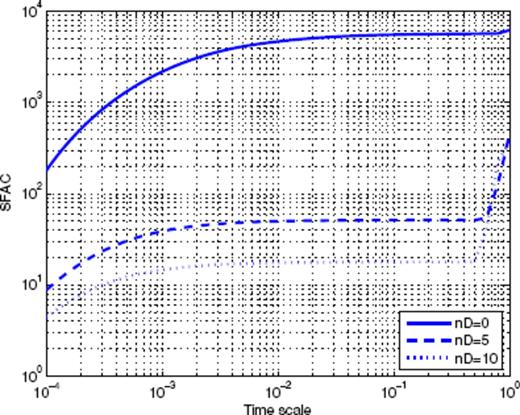

The SFAC as a function of scale are displayed in Figs 5–7. In each of these figures, we analyse the stacked aftershock time-series for main shocks in a small magnitude interval, and vary the two parameters nB (which controls the ability of the SFA to filter non-Omori dependence) and nD (which controls the weight given to early times in the stacked aftershock sequence: the larger nD, the weaker the influence of early times on the analysis). The parameter nD is defined in the Appendix as the maximum order of the derivatives of the ‘mother scaling function’ Ψ of the SFA method which vanish at t = 0. Typically, we consider the following values: nB = 0 and 3 and nD = 0 and 10. In the set of Figs 5–7, the upper solid curve corresponds to nB = 0 and nD = 0, the dashed curve corresponds to nB = 3 and nD = 0, while the lower solid curve corresponds to nB = 0 and nD = 10. One can check that the value of nB has very little influence on the shape of the curves, suggesting that the contribution of the background rate is practically constant with time. This in turn validates our aftershock selection procedure, as it shows that aftershock sequences are at most very weakly contaminated by sequences triggered by previous events. The straight dashed lines show the power-law fit of the SFAC as a function of timescale. In some cases, different scaling regimes hold over different scale intervals, so that more than one fit is proposed for the same time-series (see for instance, Figs 5e and 6a). Note that the fitting interval has a lower bound at small scales due to the roll-off effect observed in the time domain. At large timescales, several features of the time-series define the upper boundary of the fitting interval. The first feature is of course the finite size of the time-series, as discussed in the Appendix. The other is related to the occurrence of large secondary aftershock sequences that appear as localized bursts in the time-series and distort it.
For example, consider the time-series corresponding to the main shock magnitude range [1.5;2] in Fig. 3, for which one can observe the occurrence of a burst at a time of about 6 × 10⁻² yr. This corresponds to a break in the power-law scaling of the SFAC at timescales of about 10⁻¹ yr. We thus only retained the p-values measured using timescales before such bursts occur. As the magnitude of the main shocks increases, the roll-off at small timescales extends to larger and larger timescales, so that the measurement of p proves impossible when nD = 0. This is the reason why we consider the p-value measured with nD = 10 and nB = 0 as more reliable, especially for the large main shock magnitudes.
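The restriction of the power-law fit to a bounded scale interval can be illustrated with a minimal sketch. It assumes the SFAC have already been computed at a set of timescales and simply fits a straight line in log-log coordinates between a lower bound (above the roll-off) and an upper bound (below the first burst). The function name `loglog_slope` and the synthetic data are hypothetical, and the conversion from the fitted scaling exponent to p follows the definitions of the Appendix, which are not reproduced here.

```python
import math

def loglog_slope(scales, coeffs, s_min, s_max):
    """Least-squares slope of log10(coeff) versus log10(scale) on [s_min, s_max]."""
    pts = [(math.log10(s), math.log10(c))
           for s, c in zip(scales, coeffs)
           if s_min <= s <= s_max and c > 0]
    if len(pts) < 2:
        raise ValueError("need at least two points inside the fitting interval")
    n = len(pts)
    mean_x = sum(x for x, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pts)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    return sxy / sxx

# Synthetic coefficients (not real SFAC): a pure power law of exponent -0.9,
# multiplied by 5 at scales above 0.1 yr to mimic the distortion due to a burst.
scales = [10 ** (-4 + 0.25 * k) for k in range(17)]
coeffs = [s ** -0.9 * (5.0 if s > 0.1 else 1.0) for s in scales]

slope_clean = loglog_slope(scales, coeffs, 5e-5, 5e-2)  # interval stops before the burst
slope_all = loglog_slope(scales, coeffs, 5e-5, 2.0)     # interval includes the burst
print(slope_clean, slope_all)
```

In this toy case the fit restricted to scales below the burst recovers the imposed exponent, whereas including the distorted scales biases the slope, which is the reason for truncating the fitting interval.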

![SFA method applied to the SCEC catalogue: main shock magnitudes vary within [1.5;2] (plot a) up to [4;4.5] (plot f). In each plot, the upper solid curve corresponds to nB = 0 and nD = 0, the dashed curve corresponds to nB = 3 and nD = 0, while the lower solid curve corresponds to nB = 0 and nD = 10. Occurrences of large bursts in the time-series sometimes interrupt the power-law scaling (see plots a, b and e).](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/gji/178/1/10.1111/j.1365-246X.2009.04079.x/3/m_178-1-215-fig007.jpeg?Expires=1749849640&Signature=Koet-72YlkkZqUzF~UINkdHSVBcNdmJ3hTzqA0Z1dKk-Q8rBs2Mu-ohv6m2giN2qyhpnARfuWeGT8d1Qt0c2hg2otyeGD7n4ZS9rxoihqaO-ZiImbHzuFnuzn4MdVVY~HrDWZ7SlC~aMjXB98HucKKfS03-cl1jKpKTytEW8PH-Z0tZxGCkjU86tDTQF9kBNNYagSi8oec3gU52OFnmLCnFnlKFO90nsopuxls17J9RJ3u3WFdMsfdizANTQe-W5N8IoQOooHtTGmu5P0Ph98KC3TuIXSqvdMeivZRhAWYCkv9BfazomrfQ9y~wJaQrLNJsjvKTOrHT-FAC3BUx7Cg__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

SFA method applied to the SCEC catalogue: main shock magnitudes vary within [1.5;2] (plot a) up to [4;4.5] (plot f). In each plot, the upper solid curve corresponds to nB = 0 and nD = 0, the dashed curve corresponds to nB = 3 and nD = 0, while the lower solid curve corresponds to nB = 0 and nD = 10. Occurrences of large bursts in the time-series sometimes interrupt the power-law scaling (see plots a, b and e).

![SFA method applied to the SCEC catalogue: main shock magnitudes vary within [4.5;5] (plot a) up to [7;7.5] (plot f). In each plot, the upper solid curve corresponds to nB = 0 and nD = 0, the dashed curve corresponds to nB = 3 and nD = 0, while the lower solid curve corresponds to nB = 0 and nD = 10. The roll-off of aftershock time-series at small timescales breaks scaling down and imposes to choose nD = 10.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/gji/178/1/10.1111/j.1365-246X.2009.04079.x/3/m_178-1-215-fig008.jpeg?Expires=1749849640&Signature=cgWCozS6Rsps4jIjCGSNRUc~VdNfHiZO9dMypim2rWGeeAGTBC4N21mg0kFSs56KjjDb6rp8TPr5PakEQhBBKuurxnJRdR2QN9c~wc9HNqGiKZRIeyr-AQPuru4-vuKXakNgVD12jBtLAKpM4H-XCR9BVXbqmxMtfQ4GOf50uUap4UgsMcfwxkgCN8ncrAZZBDSNyT82H01Dfy5NhT12Qo0pzcMBZUo2YZZbVY-1pL5kily1UlElBkuiS5HkwhcGehlltLSZrZB7siJeZKzprwLnJDq5zgP7NB5uON6JbrO3bHUXzp9kKpgqP5JGtBtZaa6VyFri98RBStGpasZfuQ__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

SFA method applied to the SCEC catalogue: main shock magnitudes vary within [4.5;5] (plot a) up to [7;7.5] (plot f). In each plot, the upper solid curve corresponds to nB = 0 and nD = 0, the dashed curve corresponds to nB = 3 and nD = 0, while the lower solid curve corresponds to nB = 0 and nD = 10. The roll-off of aftershock time-series at small timescales breaks down the scaling and makes it necessary to choose nD = 10.

![Same as Fig. 6 for M within [7.5;8].](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/gji/178/1/10.1111/j.1365-246X.2009.04079.x/3/m_178-1-215-fig009.jpeg?Expires=1749849640&Signature=FTmwKFPw0-E8eKBc6wDpup1vFC7Dbfa3Efz6HZQXxNcXgwYhJkV9nXlM35eBBZdnPhSR6WuA1OmshUtfS~h9A-qbWVucHymwxBLEOkO9ONJD1keTfGXJhmLEaDdz4lWRidlW5iqV8vmObjNv-zGbvE97x3Si26L5MXioB-pOMqcM~889A7IKUogvfsRdpjqpbfec2Rc-fSqE3bOlJnLb-zDbrjwLfNqI2Ilhm0qi~Ij5c82MGT7ABykBhePX884tR0qSv14dCpY01OZYebZksbOVPICLlK5BAm3iv3xEs8~aey8e~pKewX4j-~HreTWwlqE7hQ3HF1VAZgYKjugpNw__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

The estimation of reliable error bars with the SFA method is not as straightforward as for the other methods, as different (nB, nD) pairs describe different scaling functions, that is, different methods to estimate p. For example, the binned stacks method previously defined indeed belongs to the general family of SFA methods. In that case, the mother scaling function is a simple square function, so that it does not filter out any background term. Using different values of the binning factor α changes the scale of analysis, but not the basic properties of the analysed time-series. In the SFA method that we defined in Section 3.3, changing nB and nD filters out different parts of the signal itself before we extract its scaling properties through a linear fit in log-log scales. Each mother scaling function Ψ thus defines a unique tool in itself. Table 1 gives an idea of the variation of p obtained with different scaling functions (hence different methods). Note that these values agree very well with those obtained with the direct binning and fitting approach.

Fig. 4 shows the p-values obtained with nB = 0 and nD = 10 as a function of M. We plot those results without error bars (for reasons given above). A linear fit gives p(M) = 0.10M + 0.40, in excellent agreement with the results obtained using the direct fit to the binned stacked series.

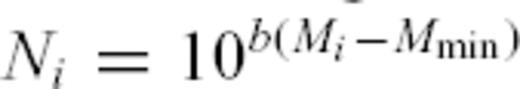

4.1.5 Results obtained with the maximum magnitudes method

We now present the results obtained with the analysis using maximum magnitudes in logarithmically binned time intervals. As this method focuses only on extreme events, the corresponding plots of the maximum magnitude as a function of time elapsed since the main shock display larger fluctuations than the ones given by the two other methods. Fig. 8 shows such plots for main shock magnitudes within [2;2.5] (square symbols) and within [7;7.5] (circles) for a common ratio α = 1.7 used to bin the time intervals. For the largest magnitude range, a clear decrease of the maximum magnitude with time is observed, with a slope of about −0.05. Assuming that b = 1, this yields p = 1.05. Note that the scaling law extends down to a very small time slightly less than 2 min, whereas the binned time-series analysis used previously allowed us to observe power-law scaling only down to about 1 month. For the smallest magnitude range, we can observe that Mmax increases with time, with a slope of about 0.41. It follows that p = 1 − 0.41 = 0.59, and the scaling range extends down to a time of about 0.3 s. We also see that there are two very clear outliers at magnitudes Mmax larger than 7. They can thus be very easily discarded when performing the fit to find p. In practice, for main shock magnitudes lower than 3, we fitted the corresponding data up to a time t = 10⁻³ yr to remove the possible influence of the constant background. The results obtained using 20 different values of α for all magnitude ranges are gathered in Table 1. Note that they compare very well with those obtained with the two other methods. They are plotted with error bars in Fig. 4. A linear fit gives p = 0.08M + 0.53, in good agreement with previous results on the same catalogue. Discarding the smallest and the largest magnitude ranges gives an even better agreement with p = 0.10M + 0.44. The significance of the largest magnitude range will be discussed later in this paper.
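The conversion from the slope of Mmax versus log-time to a p-value can be sketched as below. The sketch assumes the relation p = 1 − b × slope, which reproduces the two values quoted above for b = 1 (slope −0.05 gives p = 1.05, slope 0.41 gives p = 0.59); the general dependence on b is our reading of the method, and the function names and the input points are hypothetical.

```python
import math

def mmax_in_log_bins(times, mags, t0, alpha):
    """Largest magnitude in each bin [t0*alpha**k, t0*alpha**(k+1)).

    Returns a list of (geometric bin centre, largest magnitude), skipping empty bins.
    """
    largest = {}
    for t, m in zip(times, mags):
        if t < t0:
            continue
        k = int(math.floor(math.log(t / t0) / math.log(alpha)))
        if k not in largest or m > largest[k]:
            largest[k] = m
    return [(t0 * alpha ** (k + 0.5), largest[k]) for k in sorted(largest)]

def fit_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

def p_from_mmax(bins, b=1.0):
    """Estimate p from the slope of Mmax versus log10(time), assuming p = 1 - b*slope."""
    xs = [math.log10(t) for t, _ in bins]
    ys = [m for _, m in bins]
    return 1.0 - b * fit_slope(xs, ys)

# Idealized points lying exactly on straight lines with the two slopes quoted in the text.
decay = [(10.0 ** k, 3.0 - 0.05 * k) for k in range(-5, 1)]
growth = [(10.0 ** k, 5.0 + 0.41 * k) for k in range(-7, -2)]
print(round(p_from_mmax(decay), 2), round(p_from_mmax(growth), 2))

# Toy event list binned with alpha = 2 starting at t0 = 1.
toy_bins = mmax_in_log_bins([1.0, 1.5, 2.5, 5.0], [2.0, 3.0, 1.0, 4.0], 1.0, 2.0)
```

Because only the largest event of each bin enters the fit, events below the detection threshold do not affect the estimate, at the cost of the larger fluctuations noted above.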

![Time evolution of extreme aftershock magnitudes following main shocks with magnitudes within [2;2.5] (squares) and within [7;7.5] (circles).](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/gji/178/1/10.1111/j.1365-246X.2009.04079.x/3/m_178-1-215-fig010.jpeg?Expires=1749849640&Signature=rYzbKkHYSvsbng62lB6Ql6Zx2Af4ci-KTw7lpwsLrrFlc1nzepo4uCSBfr25lRK3G1J1ZubiiVQp1MXgVdyGmEo62liUjR4WlUO9Uvdxny5QQarCaUXVlrUl7ct-Cl0c0Io2OodKeD2-VCTkpIJt~tfXFRFMF3PTW7MURCUNQqm5u5EDhhaUuQGSFn5M~D~kMmhpqOu3rzHSUil0IwpBrM6cIspkHOr0q~9NyVzkaYGxvSHPlweaUSTdwv8Q9xUx-IPENC8o7SFmdy29jYqLUBIkG8-EiwqTFlbN89ZrQpHs9egdN5oM88RmjRDNFBeeUqmfyhtMrzRplwQeFfrybw__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Time evolution of extreme aftershock magnitudes following main shocks with magnitudes within [2;2.5] (squares) and within [7;7.5] (circles).

4.2 JMA catalogue

The JMA catalogue used here extends over a period from 1923 May to 2001 January inclusive. We restricted our analysis to the zone from 130°E to 145°E in longitude and 30°N to 45°N in latitude, so that its northern and eastern boundaries fit with those of the catalogue, while the southern and western boundaries fit with the geographic extension of the main Japanese islands. This choice selects the earthquakes with the best spatial location accuracy, close to the inland stations of the seismic network. In our analysis, the main shocks are taken from this zone and in the upper 70 km, while their aftershocks are taken into account even when they occur outside this zone, and at all depths.

Our detailed analysis of the aftershock time-series at spatial scales down to 20 km reveals a couple of zones where large as well as small main events are not followed by the standard Omori power-law relaxation of seismicity. The results concerning these zones will be presented elsewhere. Here, we simply removed the corresponding events from the analysis. The geographical boundaries of these two anomalous zones are [130.25° E; 130.375° E]×[32.625° N; 32.75° N] for the first zone, and [138.75° E; 139.5° E]×[33° N; 35° N] for the second one (the so-called Izu islands area). This last zone is well known to be the locus of earthquake swarms (see for example Toda et al. 2002), which may explain the observed anomalous aftershock relaxation. We have been conservative in the definition of this zone along the latitude dimension so as to avoid possible contamination of the data analysis, which would undermine the precise quantification of the p-values that is needed.

The completeness of the JMA catalogue is not constant in time, as the quality of the seismic network has improved over the years. We computed the distribution of event sizes year by year, and used in a standard way (Kagan 2003) the range over which the Gutenberg-Richter law is reasonably well obeyed to infer the lower magnitude of completeness. For our analysis, we smooth out the time dependence of the magnitude threshold Mc above which the JMA catalogue can be considered complete, from roughly Mc(1923–1927) = 6, to Mc(1927–1930) = 5.5, Mc(1930–1962) = 5, Mc(1962–1993) = 4.5, Mc(1993–1995) = 3.5 and Mc(1995–2001) = 2.5. This time dependence of the threshold Mc(t) will be used for the selection of main shocks and aftershocks. The assumed value of the event location uncertainty Δ has been set to 10 km.
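The stepwise threshold Mc(t) can be written as a simple lookup. This is a minimal sketch, not the procedure used to select events: the values are transcribed from the text, while treating each interval as closed on the left and open on the right, starting the table at 1923.0 rather than 1923 May, and the names `MC_STEPS`, `completeness_magnitude` and `is_above_completeness` are assumptions made for the illustration.

```python
import bisect

# (start year, Mc) steps transcribed from the text. Treating each interval as
# [start, next start) is an assumption: the text gives the ranges only "roughly".
MC_STEPS = [
    (1923.0, 6.0),
    (1927.0, 5.5),
    (1930.0, 5.0),
    (1962.0, 4.5),
    (1993.0, 3.5),
    (1995.0, 2.5),
]
# End of January 2001 expressed as a decimal year (assumption on the convention).
CATALOGUE_END = 2001.0 + 1.0 / 12.0
_STARTS = [start for start, _ in MC_STEPS]

def completeness_magnitude(year):
    """Return Mc for a decimal year inside the catalogue time span."""
    if not (_STARTS[0] <= year < CATALOGUE_END):
        raise ValueError(f"year {year} is outside the catalogue time span")
    index = bisect.bisect_right(_STARTS, year) - 1
    return MC_STEPS[index][1]

def is_above_completeness(year, magnitude):
    """True if an event of this magnitude is above Mc at that time."""
    return magnitude >= completeness_magnitude(year)

print(completeness_magnitude(1950.0), is_above_completeness(1950.0, 4.8))
```

With such a lookup, an M = 4.8 event is retained in 1970 but rejected in 1950, which is how a time-dependent threshold changes the set of usable main shocks and aftershocks.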

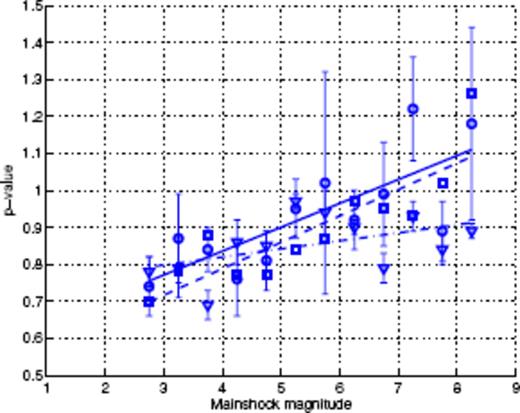

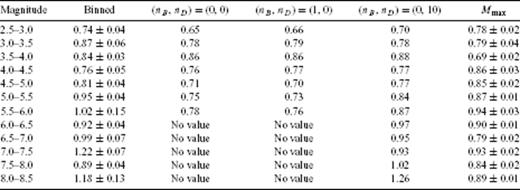

4.2.1 Binned stacked time-series

For the JMA catalogue, 12 magnitude intervals were used (from [2.5;3] to [8;8.5]). Fig. 9 shows the 12 individual stacked aftershock time-series and their fits (using a value for the binning factor α determined as described above for the SCEC catalogue). Fig. 10 plots the exponent p averaged over the 20 values of α as a function of the middle value of the corresponding magnitude interval. These values are also given in Table 2. A linear fit gives p = 0.06M + 0.58 (shown by the solid straight line in Fig. 10). The p-value thus seems less dependent on the main shock magnitude M than for the SCEC catalogue.
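To make the difference in slope concrete, the two linear fits obtained from the binned stacked series (SCEC: p = 0.11M + 0.38; JMA: p = 0.06M + 0.58) can be evaluated at two main shock magnitudes. This is plain arithmetic on the reported coefficients; the choice of M = 3 and M = 7 is arbitrary and serves only as an illustration.

$$
\begin{aligned}
p_{\mathrm{SCEC}}(3) &= 0.11 \times 3 + 0.38 = 0.71, & p_{\mathrm{JMA}}(3) &= 0.06 \times 3 + 0.58 = 0.76,\\
p_{\mathrm{SCEC}}(7) &= 0.11 \times 7 + 0.38 = 1.15, & p_{\mathrm{JMA}}(7) &= 0.06 \times 7 + 0.58 = 1.00,\\
0.11M + 0.38 &= 0.06M + 0.58 \;\Rightarrow\; M = \frac{0.20}{0.05} = 4. &&
\end{aligned}
$$

The two fitted laws thus cross at M = 4 (where both give p = 0.82). Over the range M = 3 to M = 7, p increases by 0.44 for SCEC but only by 0.24 for JMA.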

![Binned stacked series of aftershock sequences in the JMA catalogue for various magnitude ranges (from [2.5;3] at the bottom to [8;8.5] at the top by steps of 0.5). The solid lines show the fits of formula (eq. 15) to the individual time-series. All curves have been shifted along the vertical axis for the sake of clarity.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/gji/178/1/10.1111/j.1365-246X.2009.04079.x/3/m_178-1-215-fig011.jpeg?Expires=1749849640&Signature=pRzZcuST5qmvMl-2y4Qhs5b1ZqbWsYfoKq044tIUqcgLetaORnibHXKdB9aiW~fw7C8EsBtyAuKUoyuTkqP7bFDXUGA3z2afo6LyMxwUIJhSC410rSQ0IgwdmFCd1XvmkfhD3FjK8oT-Llb-ZNuzv0kHUmXz7Bp6wymsH5nqZmMjmQfx29bUMoedk19dY5uabcXs1QkUMLIV3vFAgHgnAfcOI81Gx~wy65RVBaeLpfaOcY5BbYfBpLASvMMLOwlyntkOKCaF-InsmApg21131RZkvm5XooeNrwYMzwgGOXxSMJU6cAQRt9BVGASgRCyjNXkD~TNw4LN6DGiYFuk5cA__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Binned stacked series of aftershock sequences in the JMA catalogue for various magnitude ranges (from [2.5;3] at the bottom to [8;8.5] at the top by steps of 0.5). The solid lines show the fits of formula (eq. 15) to the individual time-series. All curves have been shifted along the vertical axis for the sake of clarity.

Exponents p(M) of the Omori law obtained for the JMA catalogue with different methods (stacked binned method and SFA), with the corresponding fits: binned time-series (squares-second column of Table 2), Scaling Function Analysis (circles-fifth column of Table 2) and monitoring of Mmax (triangles-last column of Table 2). Continuous, dashed and dot-dashed lines stand for their respective linear fits.

p-values for the JMA catalogue obtained by fitting binned stacked sequences (second column), with the SFA (third to fifth columns) and with monitoring Mmax (last column). (nB, nD) correspond to the parameters used to define the mother scaling function. p(M) values in the second, fifth and last columns are plotted in Fig. 10.

4.2.2 SFA method