Max L Olender, José M de la Torre Hernández, Lambros S Athanasiou, Farhad R Nezami, Elazer R Edelman, Artificial intelligence to generate medical images: augmenting the cardiologist’s visual clinical workflow, European Heart Journal - Digital Health, Volume 2, Issue 3, September 2021, Pages 539–544, https://doi.org/10.1093/ehjdh/ztab052

Abstract

Artificial intelligence (AI) offers great promise in cardiology, and medicine broadly, for its ability to tirelessly integrate vast amounts of data. Applications in medical imaging are particularly attractive, as images are a powerful means to convey rich information and are extensively utilized in cardiology practice. Departing from other AI approaches in cardiology focused on task automation and pattern recognition, we describe a digital health platform to synthesize enhanced, yet familiar, clinical images to augment the cardiologist’s visual clinical workflow. In this article, we present the framework, technical fundamentals, and functional applications of the methodology, especially as it pertains to intravascular imaging. A conditional generative adversarial network was trained with annotated images of atherosclerotic diseased arteries to generate synthetic optical coherence tomography and intravascular ultrasound images on the basis of specified plaque morphology. Systems leveraging this unique and flexible construct, whereby a pair of neural networks is competitively trained in tandem, can rapidly generate useful images. These synthetic images replicate the style, and in several ways exceed the content and function, of normally acquired images. By using this technique and employing AI in such applications, one can ameliorate challenges in image quality, interpretability, coherence, completeness, and granularity, thereby enhancing medical education and clinical decision-making.

Medical images visualize physiological systems to differentially display constituent tissue. However, different imaging systems often have competing tradeoffs, require independent interpretation, and are all imperfect. The generation of synthetic images on the basis of tissue distribution offers exciting prospects to improve image quality, construct coherent composites from disparate sets of images, complete images with obscured regions, and more. Artificial intelligence provides a convenient and effective means to produce such medical images of tissue distributions.

We have developed such a system for intravascular imaging, a technology used in diagnosing and treating atherosclerosis and coronary artery disease. The system studies the relationship between the structure and appearance of blood vessels. It is shown many intravascular images and told what each portrays—the vessel’s geometry and distribution of constituent tissue types. Based on relationships it learns, the system creates images that would be expected for any given vessel structure. Through iterative training, the system achieves increasing accuracy and realism.

The technology has several potential uses. It can ‘translate’ between image styles, easing interpretation by cardiologists proficient with specific image types or facilitating comparison between images of the same vessel acquired by different systems. Additionally, the technology can integrate multiple information sources into a consolidated, intuitive image. Information about the vessel, derived from complementary systems and tests, can be combined and expressed in a familiar, interpretable image. Images can also be enhanced with the aid of computer algorithms or recreated with less noise, generated for educational platforms, and incorporated into datasets for research and development.

Introduction

Artificial intelligence (AI) is widely touted for its potential to transform medicine through automated diagnosis, risk-stratification, decision-making, and intervention guidance.1 However, facilitating ancillary tasks and care delivery workflows offers more immediate outlets for contribution by AI. In this enterprise, an underexplored but exciting application is medical image generation, which inverts prevailing paradigms of AI as a tool to automatically interpret images2 and instead applies AI to make images more interpretable. Through this inverted paradigm, AI is poised to contribute markedly to cardiology in particular, as cardiovascular medicine largely communicates in information-dense images that establish a common language and facilitate holistic consideration.

Imaging is relied upon by cardiologists, yet the clinical utility of such images is susceptible to variability in image quality, interpretability, and completeness. A prolific arsenal of imaging modalities generates information that can overwhelm or be squandered in the absence of specialized expertise. Difficulties can arise from the inconsistent use of differing image modalities. Images can also include artefacts and noise that limit their value and impede their use. This is especially true for intravascular imaging—optical coherence tomography (OCT) and intravascular ultrasound (IVUS) can enlighten or confound interventional cardiologists. Furthermore, scientists and engineers are stymied by limited availability of data for translational research and development. Image generation tools possess the untapped ability to address these myriad challenges.

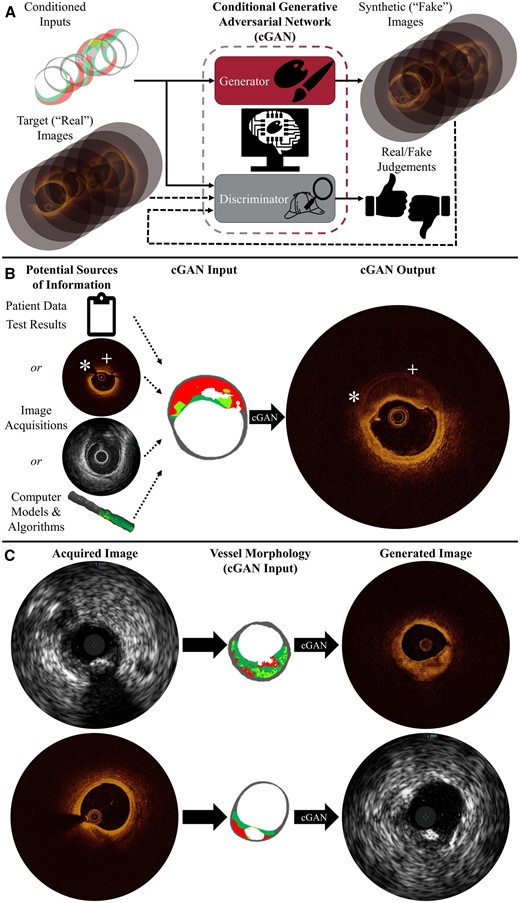

Artificial intelligence systems, which do not rely on a deep understanding of the physical principles underlying an imaging modality, are very attractive for their power and flexibility. Physics-based simulations of imaging processes can generate images for sufficiently simple systems, but such models are neither amenable to imaging systems governed by more complex physical phenomena, nor readily generalized as a modality-agnostic platform technology. However, one can generate synthetic images of complex tissue anatomy using increasingly potent and popular artificial neural networks.2,3 A special configuration called a conditional generative adversarial network (cGAN) is remarkably adept at generating images, including medical images.4 cGANs learn to transform a map of underlying features into a corresponding image depicting those features. To do so, two AI systems are trained interactively as adversaries (Figure 1A)—a generator learns to produce increasingly realistic images to deceive a discriminator, a binary classifier which learns characteristics that distinguish acquired images from synthesized ones with increasing precision.5
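The adversarial training described above can be made concrete with a small numerical sketch. The article does not state which loss functions were used, so the binary cross-entropy form below (with the commonly used non-saturating generator loss) is an assumption, and the function names and probabilities are hypothetical.

```python
import math

def bce(prob, target):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    eps = 1e-12  # guard against log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """Discriminator objective: acquired pairs should score 1, synthetic pairs 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Generator objective (assumed non-saturating form): synthetic pairs should score 1."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: the estimated probability that a
# (label map, image) pair was acquired rather than synthesized.
d_real = [0.9, 0.8]
d_fake = [0.2, 0.1]
print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

In this sketch the generator loss falls as the discriminator is fooled more often, while the discriminator loss falls as it separates acquired from synthetic pairs more reliably, which is the competitive dynamic the text describes.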

Figure 1. Synthetic image generation addresses shortcomings in medical imaging. Conditional generative adversarial networks transform maps of vascular tissue morphology into synthetic images conveying specified structure in the visual vernacular of imaging systems. (A) The generator learns to produce images, here optical coherence tomography, using feedback from the discriminator, which is trained to distinguish between acquired and synthetic images. (B) Images are generated from morphology, determined using any combination of sources. Surpassing the acquired optical coherence tomography image, the synthetic image shows the complete vessel wall with enhanced far-field feature discrimination in the presence of attenuating plaque; there is no guidewire shadow (*) or obfuscated abluminal features (+). (C) Images can be translated between intravascular ultrasound and optical coherence tomography modality renderings through the shared commonality of underlying (micro)morphology.

Uniquely leveraging the capabilities of cGANs, an AI platform can synthesize enhanced, yet familiar, clinical images to address shortcomings in cardiovascular imaging (Figure 1). These systems can be trained to learn the relationship between anatomic (micro)structure and visual appearance from annotated intravascular images. A labelled map of atherosclerotic plaques and adjacent tissue (i.e. diseased vascular morphology) serves as the input to the cGAN; its aim is to generate an intravascular image of the specified physical formation (Figure 1). To train such a system, we presented over 1000 annotated vessel cross-section morphologies (i.e. label maps), further augmented through random rotation and mirroring, alongside corresponding OCT or IVUS images. Once relationships between morphology and appearance in both modalities are learned, the AI systems can render OCT or IVUS images expected for any given lesion composition in milliseconds (Figure 1B). The trained AI system can then be applied to augment the cardiologist’s visual clinical workflow.
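The augmentation step mentioned above (random rotation and mirroring) must apply the same transform to a label map and its corresponding image so the pair stays aligned. The following is a minimal sketch under the assumption that rotations are restricted to multiples of 90 degrees and that images are small 2D lists; the article does not specify the rotation angles or the implementation, and all names are hypothetical.

```python
import random

def rot90(grid):
    """Rotate a 2D list 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]

def mirror(grid):
    """Flip a 2D list left to right."""
    return [row[::-1] for row in grid]

def augment_pair(label_map, image, rng):
    """Apply one shared random rotation and optional mirroring to both inputs."""
    quarter_turns = rng.randrange(4)   # 0, 90, 180, or 270 degrees (assumed)
    flip = rng.random() < 0.5
    for _ in range(quarter_turns):
        label_map, image = rot90(label_map), rot90(image)
    if flip:
        label_map, image = mirror(label_map), mirror(image)
    return label_map, image

# Toy 2x2 example: the label map and image share a layout.
labels = [["lumen", "fibrotic"], ["calcified", "lipid"]]
pixels = [[0.0, 0.6], [0.9, 0.3]]
aug_labels, aug_pixels = augment_pair(labels, pixels, random.Random(0))
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible, which helps when comparing training runs.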

Discussion

The unique approach to generating synthetic cardiovascular images, leveraging and capturing the heterogeneity of vascular tissue, offers exciting capabilities to augment the cardiologist’s visual clinical workflow. In particular, AI can enhance image quality, interpretability, coherence, completeness, and granularity, offering promising applications in clinical decision-making and education. Furthermore, with additional annotated data, the platform can be extended to any current or future modality depicting vascular morphology. Especially exciting is that, given the speed of this technology, integration into commercial systems could allow real-time ancillary support.

One straightforward application is conversion between imaging modalities, as images acquired with one modality can be rendered as those of another for clinician interpretation. Images can be translated between modality renderings through the shared commonality of underlying (micro)anatomy (Figure 1C). The anatomical map of various tissue types (e.g. calcified, fatty, fibrotic, etc.), on whose accuracy the validity of generated images depends, can be derived from intravascular images by manual annotation, virtual histology,6 automated image processing,7 or any combination thereof. Once underlying tissue architecture is established, these platforms can synthesize images portraying that physical structure for any trained modality to enable the use of a clinician’s preferred modal rendering, facilitate co-registration of multi-modal datasets, or ease comparison of image series acquired with different modalities by unifying visual styles.
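The translation pathway described above amounts to a two-stage pipeline: derive a tissue label map from the acquired image, then render that map with the generator trained for the target modality. The sketch below illustrates only this structure. The threshold segmenter and the lookup-table "generators" are toy stand-ins with arbitrary values, not the annotation methods or trained networks used in the article, and every name is hypothetical.

```python
def segment_by_threshold(image):
    """Toy stand-in for annotation or automated processing of an acquired image."""
    return [["calcified" if px > 0.5 else "fibrotic" for px in row] for row in image]

def make_generator(palette):
    """Toy stand-in for a trained generator: one intensity per tissue label."""
    def generate(label_map):
        return [[palette[label] for label in row] for row in label_map]
    return generate

def translate(image, segment, generators, target):
    """Translate an image by way of the shared underlying tissue map."""
    label_map = segment(image)
    if target not in generators:
        raise ValueError(f"no generator trained for modality {target!r}")
    return generators[target](label_map)

# Arbitrary illustrative palettes, one per trained modality.
generators = {
    "OCT": make_generator({"calcified": 0.2, "fibrotic": 0.8}),
    "IVUS": make_generator({"calcified": 0.9, "fibrotic": 0.4}),
}
ivus_like = [[0.9, 0.3], [0.2, 0.7]]
oct_like = translate(ivus_like, segment_by_threshold, generators, "OCT")
```

Because the label map is the only shared intermediate, any error in segmentation propagates directly to the rendered image, consistent with the dependence on map accuracy noted in the text.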

Another powerful benefit of this AI-enabled transformation is that all relevant available knowledge of tissue architecture underlying the images can be unified into a single coherent representation, thereby combining the best of each and mitigating information overload. Images can be enriched by integrating and consolidating geometric and morphological information from various imaging acquisitions, modalities, processing and analysis algorithms, or other test results (Figure 1B). The platform can fuse complementary sources of disparate data—which may be independently incomplete or limited—to rapidly assimilate comprehensive information into a single coherent, intuitive visual representation. For example, tissue morphology can be derived from several complementary sources, merging hybrid or multimodal imaging for unified visualization.
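One simple way to picture this fusion is as a merge of several incomplete label maps into one complete map, which can then be passed to a generator. The article does not specify how sources are combined, so the priority rule below (earlier sources win, later sources fill gaps) is an assumption chosen for illustration, as are the function name, the tissue labels, and the use of `None` for unresolved pixels.

```python
def fuse_label_maps(sources):
    """Merge equally sized label maps in priority order.

    None marks a pixel that a source could not resolve (for example, a region
    hidden by shadow or signal attenuation). Earlier sources take precedence.
    """
    rows, cols = len(sources[0]), len(sources[0][0])
    fused = [[None] * cols for _ in range(rows)]
    for source in sources:
        for r in range(rows):
            for c in range(cols):
                if fused[r][c] is None:
                    fused[r][c] = source[r][c]
    return fused

# Hypothetical maps of the same cross-section from two sources.
oct_map = [["lumen", "fibrotic", None],
           ["lumen", None, None]]
ivus_map = [["lumen", "calcified", "adventitia"],
            ["lumen", "lipid", "adventitia"]]
fused = fuse_label_maps([oct_map, ivus_map])
# fused keeps the first source's labels where it has them and fills the rest
# from the second source.
```

In practice the sources would first need to be co-registered to a common geometry, a step this sketch assumes has already been done.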

Furthermore, acquired images can be recreated with decreased ambiguity and noise. In training the cGAN, the system does not need to be constrained to replicating standard modalities exactly; the AI can be trained using post-processed images to aid in their interpretation. Additionally, because the generation method represents a mapping of provided morphological input, non-physiological artefacts can be excluded and obscured regions rendered by supplying a complete tissue map to the system. This prospect is illustrated in Figure 1B: whereas a highly attenuating lesion obfuscates abluminal tissue and the guidewire shadow obscures a significant arc of the originally acquired OCT image, the corresponding segments of the generated synthetic image clearly illustrate vessel wall structure in its entirety. The synthetic image may thus allow for a more complete assessment of plaque burden, remodelling, and vessel size, better informing the cardiologist.

The ability to generate synthetic clinical images also presents rich opportunities to integrate patient-specific virtual phantoms into the image-based workflows and decision-making processes of personalized medicine.8,9 Computational models—virtual representations of physical systems10—not only enable the generation of different projections and views, but, through simulation and modelling, also visualization of the system at different time points and conditions. Derived images could contribute to the emerging paradigm of computer-simulated clinical trials for regulatory evaluation11 as well.

Finally, AI-powered medical image generation offers a unique tool to facilitate education and training. Because images can be generated from a morphological template specified at will, the platform offers an unconstrained source of physiology-based images without the challenges of acquisition; even rare phenotypes, contraindicative conditions, or mild disease states not justifying invasive imaging—and therefore not already readily available—could be rendered for illustrative, educational purposes. Learners can also be presented with images of one or more relevant modalities depicting any underlying pathology to reinforce structure-appearance and inter-modality relationships, and inherent label maps provide keys for learning assessments.

Further work is needed to measure and analyse the efficacy of such approaches,12,13 and performance must be validated in any proposed application before use in clinical workflows informing diagnosis or treatment. Security must also be assessed to prevent the manipulation of patient data by malicious actors.14 Finally, experts must be educated and trained to appropriately utilize this technology and understand its limitations and sources of uncertainty.

Conclusion

As the prominence and competence of AI burgeon, the visually driven field of cardiology is amenable to AI-powered image generation. We present a platform to generate realistic yet subtly enhanced intravascular images based upon (patho)physiological tissue morphology. This digital health technology facilitates exciting applications in auxiliary and educational systems that empower cardiologists, who retain full autonomy and authority in interpretation and decision-making processes. The clinical and technical communities offer complementary insights in considering and exploring the full implications and uses of such technology. Stakeholders must work together to realize the tremendous opportunities of these advancements and drive forward exciting applications in ancillary systems empowering cardiologists to provide the best possible patient care.15

Lead author biography

Max L. Olender is a postdoctoral associate in the Harvard-MIT Biomedical Engineering Center at the Massachusetts Institute of Technology (Cambridge, MA, USA), where he completed a Ph.D. (2021) in mechanical engineering. He also holds B.S.E. (2015) and M.S.E. (2016) degrees in mechanical and biomedical engineering from the University of Michigan (Ann Arbor, MI, USA). He previously conducted research at the University of Michigan with the Biomechanics Research Laboratory and the Neuromuscular Lab. Dr. Olender is a member of the European Society of Biomechanics, Biophysical Society, Institute of Electrical and Electronics Engineers, and American Association for the Advancement of Science.

Funding

Work was funded in part by MathWorks (fellowship to M.L.O.) and the U.S. National Institutes of Health (Grant R01 49039 to E.R.E.).

Conflict of interest: J.M.dlT.H. has received unrestricted grants for research from Amgen, Abbott, Biotronik, and Bristol-Myers Squibb and advisory fees from AstraZeneca, Boston Scientific, Daiichi, and Medtronic, but there is no overlap or conflict with the work presented here. L.S.A. has an ongoing relationship with Canon USA, but there is no overlap or conflict with the work presented here. E.R.E. has research grants from Abiomed, Edwards LifeSciences, Boston Scientific, and Medtronic, but there is no overlap or conflict with the work presented here. M.L.O., L.S.A., and E.R.E. have applied for a patent on related inventions (‘Arterial Wall Characterization in Optical Coherence Tomography Imaging’, 16/415,430). M.L.O. and E.R.E. have submitted a provisional patent application on related inventions (‘Systems and Methods for Utilizing Synthetic Medical Images Generated Using a Neural Network’, 62/962,641).

Data availability

No new data were generated or analysed in support of this research.

Consent: Use of images was approved by the IDIVAL Clinical Research Ethics Committee (Santander, Spain).

References