Steven M. Bellovin, Susan Landau, Herbert S. Lin, Limiting the undesired impact of cyber weapons: technical requirements and policy implications, Journal of Cybersecurity, Volume 3, Issue 1, March 2017, Pages 59–68, https://doi.org/10.1093/cybsec/tyx001

Abstract

There has been much public rhetoric about the widespread devastation cyber weapons could cause. We show that, contrary to public perception (and to statements from some political and military leaders), cyber weapons not only can be targeted but have been used in just such a manner in recent years. We examine the technical requirements and policy implications of targeted cyberattacks, discussing which variables enable targeting and what level of situation-specific information such attacks require. We also consider the technical and policy constraints that would enable cyber weapons to be targetable.

Precise targeting requires good technical design. The weapon must be capable of precise aim, rather than affecting any computer within reach. Precise targeting also requires good intelligence: to avoid accidental damage to other machines, including machines that depend indirectly on those targeted, the attacker must have highly detailed knowledge of the target’s environment.

If imprecise targeting is defined to include other damage ultimately traceable to the initial use of a cyber weapon, proliferation becomes an issue. We consider proliferation in two forms: immediate and time-delayed (the latter could occur through repurposing of the weapon or of its techniques). The nonproliferation objective is broad: it includes not only preventing others from using code snippets and information on zero days, but also preventing the employment of useful attack techniques and new classes of attack. Preventing opponents from repurposing cyber weapons is achieved not solely through technical means, such as code obfuscation, but also through policy measures such as disclosure. As a result, while some of the nonproliferation effort falls to the attacker, some must be handled by potential victims.

Introduction

When new weaponry is first introduced, it is often accompanied by great fear over its potential for damage. Such has certainly been the case for cyber weapons. In part this is because so many Internet attacks have affected large numbers of systems. This has given rise to apprehension that cyber weapons are necessarily indiscriminate. The facts do not bear this out. Cyber weapons can be targeted; indeed, a number of them already have been. And although cyber weapons are software, and thus replicable, they can be designed to reduce proliferation risks.

Both military commanders and political leaders prefer to have a wide range of options. Decreasing the extent of a weapon’s potential damage increases the range of options for how it may be used. When policy makers decide to use weapons, they have in mind certain effects they want the weapons to cause and ancillary impacts they wish to avoid. In a military context, the intended effect is usually damage to designated targets; an undesired effect is damage to humans and/or property that are not among the designated targets. The use of a highly targetable cyber weapon may be as effective against a target as a kinetic weapon, but with significantly less risk of collateral damage.

A second reason for valuing cyber weapons that can be used in targeted cyberattacks is that excessive damage to unintended targets can be a catalyst for an undesired escalation of conflict. For example, during World War II, German bombers – intending to hit the docks of London – accidentally bombed central London, which Hitler had placed off-limits for the Luftwaffe. In response, the Royal Air Force (RAF) launched a bomber attack against armament factories north of Berlin and Tempelhof Airport in Berlin. However, this attack also hit, accidentally, some residential areas in Berlin. Shortly thereafter, Hitler launched the Blitz against London, apparently in retaliation for the (mistaken) RAF bombing of residential areas in Berlin [1].

This article seeks to examine the technical requirements necessary to ensure cyber weapons are not indiscriminate, and the policy guidelines to ensure that outcome. We begin by analyzing weapons used in recent targeted cyberattacks in order to draw out the reasons why the attacks remained targeted. We use this to illuminate the technical and policy principles that should guide the design of cyber weapons that can be targeted.

It is useful to separate unintended or undesired damage to humans and/or property into two categories. One category is the unintended or undesired damage that might arise as a direct and immediate result of an attack using a certain cyber weapon. A second category is damage to humans and/or property that might arise from another party’s use of a weapon, cyber or otherwise, that is similar in nature to the one used in the original attack. (In this context, “similar in nature” means that the weapon employs the same principles of operation to create its damaging effects as does the original weapon.) In other words, concerns often arise about whether A’s use of a weapon, cyber or not, against B might lead some adversary (e.g. B or a third party C) to use that or a similar weapon against persons or property that A does not wish to damage. These concerns are particularly salient when A’s use of that weapon is the first time the weapon has been used; they are often captured under the rubric of preventing or minimizing the likelihood of proliferation. Preventing proliferation may be a sufficiently important policy objective that, under some configurations of facts and circumstances, it takes precedence over a decision to use a given cyber weapon in any particular case.

We begin in section “Inherently indiscriminate weapons versus targetable weapons” by examining several cyber weapons and discussing the technical means that prevented their spread. We follow this in section “Technical issues in targeting” by showing what other considerations must be taken into account to ensure that a cyber weapon stays targeted. In section “Proliferation of cyber weapons,” we discuss the proliferation of cyber weapons: both the ways in which it can occur and ways that states can work to prevent it.

A note on terminology: For purposes of this article, a cyber weapon is defined to be a software-based IT artifact or tool that can cause destructive, damaging, or degrading effects on the system or network against which it is directed.1 A cyber weapon has two components: a penetration component and a payload component. The penetration component is the mechanism through which the weapon gains access to the system to be attacked. The payload component is the mechanism that actually accomplishes what the weapon is supposed to do – destroy data, interrupt communications, exfiltrate information, cause computer-controlled centrifuges to speed up, and so on.2 Penetrations can occur using well-known vulnerabilities, including those for which patches have been released; the vast majority of attacks are accomplished in this way. Some, however, including the sophisticated Stuxnet attack on the Iranian nuclear facility in Natanz, used zero-day vulnerabilities: vulnerabilities that are discovered and exploited before being disclosed to the vendor or otherwise publicly disclosed.

We use the term “target” as a noun to refer to an entity that the attacker wishes to damage, destroy, or disrupt. Any given entity (target) may have multiple computers associated with it, all of which the attacker wishes to damage, destroy, or disrupt. A target might thus be an air defense installation, a corporation, a uranium enrichment facility, or even a nation. In some instances, a given entity may have only one computer associated with it, in which case the terms “target” and “computer” can be used interchangeably.

If the payload causes data exfiltration, we call this cyber exploitation. If the payload causes damage, destruction, degradation, or denial, the use is called a cyberattack.3

Inherently indiscriminate weapons versus targetable weapons

Weapons that are “inherently indiscriminate” are prohibited as instruments of war. For example, the US Department of Defense Law of War Manual states that “inherently indiscriminate weapons” are “weapons that are incapable of being used in accordance with the principles of distinction and proportionality” [3].

US military doctrine explicitly allows the conduct of offensive operations in cyberspace for damaging or destructive purposes as long as they are conducted in accordance with the laws of war [3]. Thus, the doctrine presupposes the existence of cyber weapons that are not “inherently indiscriminate.”

Cyber weapons have nonetheless generated great fear. There are multiple reasons for this response. The recent development of computer technology as an instrument of attack readily creates fear, especially for those for whom the technology is far from second nature. The speed with which society, and in particular modern industrialized societies, have become dependent upon networking technologies has exacerbated this concern.

Early cyber incidents may have fed this belief. Early self-replicating PC worms and viruses were indeed designed to spread far and wide.4 The Morris worm of 1988 was the first Internet worm, though its public impact was limited because the Internet was largely unused by the general public at the time.5 The rapidly spreading worms of the early 2000s had much more impact. Blaster, for example, affected CSX Railroad’s signaling network and interfered with Air Canada’s reservation and check-in systems [5]. These worms were not intended to have these effects; however, they all clogged computers and network links. Had the authors intended their programs to have such effects, it would have been fair to call those programs cyber weapons and their use indiscriminate. The 1998 Chernobyl virus and the 2004 Witty worm were the first major instances of malware carrying destructive payloads [6, 7]; however, both were apparently released as acts of random vandalism rather than for any particular purpose.

That said, indiscriminate targeting is not an inherent characteristic of all cyberattacks. A number of cyberattacks we have seen to date – those on Estonia, Georgia, Iran, Sony – have used weapons that have been carefully targeted and, for various reasons, have not caused significant damage beyond the original target. In three of these cases (Estonia, Georgia, and Iran), the cyberattacks would appear to have helped accomplish the goal of the attackers – and did so without loss of life. None of the four attacks used weapons that were “inherently indiscriminate”.

These attacks mark the beginning of the use of cyber in nation-state clashes. Cyberattacks are likely to be used by many parties, including nation-states, to accomplish their goals both in circumstances of overt and acknowledged armed conflict and those short of overt and acknowledged armed conflict.

Constraints on targetable cyber weapons

As noted above, inherently indiscriminate weapons are incapable of being used in accordance with the principles of distinction and proportionality. The phrase “incapable of being used” is essential – nearly all weapons are capable of being used discriminately because their significantly harmful effects can be limited to areas in which only known enemy entities are present.

What is technically necessary for a cyber weapon to be capable of being used discriminately? Two conditions must be met:

Condition 1: The cyber weapon must be capable of being directed against explicitly designated targets; i.e. the cyber weapon must be targetable.

Condition 2: When targeted on an explicitly designated entity, the weapon must minimize the creation of significant negative effects on other entities that the attacker has not explicitly targeted.

These conditions will be discussed in more depth below. A cyber weapon capable of being used discriminately will be called targetable.

Successful targeted cyberattacks

The first publicly visible cyberattack on a country as a country, i.e. as opposed to specific targets within it, was a massive denial of service attack on Estonia in 2007.6,7 The attack – most observers either hold the Russian government responsible or believe that the Russian government either supported or tolerated it – did no permanent damage; however, Internet links and servers in Estonia were flooded by malicious traffic, leaving them unusable. The cyberattack the following year against Georgia was similar, both in attribution and effect, although in the Georgian case the cyberattack was only one part of a larger conflict that involved conventional Russian and Georgian military forces.

The attacks on Estonia and Georgia were denial of service attacks, disrupting those nations’ connections to the Internet. They did not, however, cause long-term damage to computers or data (cutting off access to the Internet during the attack was, of course, damaging).8 The attack on Iran’s centrifuges and North Korea’s attack on Sony did cause damage, and it is to these that we now turn our attention.

Stuxnet is a computer worm that spread from machine to machine but only did harm to very specific targets. Though Stuxnet was found on computers in Iran, Indonesia, India, and the USA [10], the only destruction it caused was in Natanz, where it caused centrifuges to spin too fast, breaking them. That is because of the way the weapon was constructed.

Stuxnet is a sophisticated cyber weapon that relied on several zero-day vulnerabilities to penetrate systems not connected to the Internet, which meant that it had to largely function autonomously after injection. The authors had very precise knowledge of the target environment; Stuxnet used that knowledge for very precise targeting. It also manipulated output displays to deceive the plant’s operators into believing that the centrifuge complex was operating properly. Although Stuxnet appeared both inside and outside of Iran, its destructive payload was only activated when within the Natanz centrifuge plant.9

Although Stuxnet could operate autonomously, its controllers did not treat it as “fire and forget.” Rather, they developed several versions, adding new functionality (and perhaps fixing bugs), exploiting newly learned information about the target, and adapting to changes in the operating environment.

Stuxnet carried the malware payload – but first there were intelligence activities to determine what systems Stuxnet should attack and what their characteristics were. There is no clear public evidence on how this intelligence was gathered. It could have come through a human operator (e.g. an insider), it could have been electronic (e.g. obtained by hacking into systems at Siemens, the supplier of the specific PLCs used in Natanz, whose presence signaled to Stuxnet that it had reached the target system), or it could have been some combination.

Iran apparently retaliated for Stuxnet by attacking the Saudi Aramco oil company. The cyber weapon used, named Shamoon, erased the disks of infected computers. It was triggered by a timer set to go off at 11:08 AM on 15 August 2012; the malware had apparently been installed by an insider in the corporate network, not in a separate, disconnected network that ran the oil production machinery [11]. Shamoon spread via shared network drives, which limited its spread to within the targeted corporation [12].

The North Korean attack on Sony was quite different in nature. Standard techniques were used, both for the initial penetration and for the destructive activities that followed. The initial penetration of Sony was via “spear-phishing” [13], a technique that involves sending a plausible-sounding email to a target to induce him or her to click on a link; this link will then install malware. The attackers then spent months exploring and investigating Sony’s network, learning where the interesting files were and planning how to destroy systems.

The actual destruction of the disks within Sony was performed by a worm [14]. At least some parts of the destruction software used commercial tools to bypass operating system protections.

In other words, and in contrast to Stuxnet, the attack on Sony was largely manual. The attackers allegedly exfiltrated a very large amount of information (100 terabytes), without being noticed [15]; they have publicly released 200 gigabytes of it. Carelessness on the part of the defender thus seems to have played an important role.

Distinctions between the profiled cyberattacks

There are a number of different types of cyberattack. The goal might simply be to “clog” the Internet to prevent work from getting done; it might be to destroy data on computers; or it might even be to damage computers or the physical devices attached to them. We consider each in turn.

Denial of service attacks

One common form of online harassment is to launch a distributed denial of service (DDoS) attack. Usually, it is just that – harassment – though under certain circumstances, preventing communication can have more serious consequences. Generally speaking, a denial of service attack involves exhausting some resource required by the target; these resources can include CPU capacity, network bandwidth, etc. The attacks can be direct, i.e. against the site itself, or they can be indirect, i.e. against some other site that the target requires. In a DDoS attack, the resources of many computers are dedicated to attacking a single target. A single home computer, with perhaps 10 Mbps of upstream bandwidth, cannot clog a commercial site’s link of perhaps 500 Mbps, but a few hundred attackers can do so easily. Estonia and Georgia were seriously affected by large-scale DDoS attacks.
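The arithmetic behind this example can be made explicit. Using the paragraph’s own illustrative figures, and writing C for the target’s link capacity (500 Mbps), b for one home machine’s upstream bandwidth (10 Mbps), and N_min for the number of machines needed merely to match the target’s link (notation introduced here for convenience), a rough estimate is:

```latex
N_{\min} = \frac{C}{b} = \frac{500~\text{Mbps}}{10~\text{Mbps}} = 50
```

By the same arithmetic, 300 such machines offer 3 Gbps, six times the target’s capacity, which is why a few hundred attackers suffice. The estimate ignores protocol overhead and assumes each machine can actually sustain its full upstream rate.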

Although DDoS attacks are by their nature highly targeted, the potential for collateral impact is high, especially in indirect attacks. Assume, for example, that 10 000 home machines focused their bandwidth on a single target. The aggregate bandwidth, 100 Gbps, could flood some of the ISP’s links into the router complex serving that customer and thereby affect other customers.10 Even if the ISP links are not flooded, there can still be collateral impact; most smaller websites are hosted on shared infrastructure.11
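The scale of the overflow in this example can be worked out directly. Writing N for the number of machines (10 000), b for each machine’s upstream bandwidth (10 Mbps), and C for the 500 Mbps target link mentioned earlier (notation introduced here for convenience):

```latex
B_{\text{agg}} = N \cdot b = 10\,000 \times 10~\text{Mbps} = 100\,000~\text{Mbps} = 100~\text{Gbps},
\qquad
\frac{B_{\text{agg}}}{C} = \frac{100~\text{Gbps}}{500~\text{Mbps}} = 200
```

At most 500 Mbps of that traffic can ever arrive over the target’s own link. Under these idealized assumptions, the remaining 99.5 Gbps or so must still be carried by, or dropped within, upstream ISP links and routers that are shared with other customers, which is where the collateral impact arises.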

The risk of collateral damage is especially high in indirect DDoS attacks. One common example is an attack against the Domain Name System (DNS) servers handling the target’s name.12 DNS servers normally require far less bandwidth than, say, a website, and hence the operators of such servers use lower-bandwidth (and lower-cost) links; these servers are thus much easier to clog. However, a DNS server may handle many domains other than the target’s; DDoSing such a server could take many other sites off the air as well.
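The collateral question for an indirect DNS attack can be sketched as a simple lookup, assuming intelligence has already produced a mapping from each DNS server to the domains it serves. The function collateral_domains and all server and domain names below are hypothetical illustrations (reserved .example names), not drawn from the article:

```python
from typing import Dict, Set


def collateral_domains(hosting: Dict[str, Set[str]], target_domain: str) -> Set[str]:
    """Return every other domain served by any DNS server that also serves target_domain.

    Flooding those servers would also impair name resolution for each domain
    returned here. `hosting` is a hypothetical server -> domains mapping.
    """
    affected: Set[str] = set()
    for domains in hosting.values():
        if target_domain in domains:
            affected |= domains  # everyone sharing this server is exposed
    affected.discard(target_domain)  # the intended target is not collateral
    return affected


# Hypothetical intelligence picture: two servers share the target, one does not.
hosting = {
    "ns1.provider.example": {"target.example", "clinic.example", "school.example"},
    "ns2.provider.example": {"target.example", "bakery.example"},
    "ns.other.example": {"unrelated.example"},
}

print(sorted(collateral_domains(hosting, "target.example")))
# ['bakery.example', 'clinic.example', 'school.example']
```

The hard part in practice is not the lookup but obtaining an accurate and complete mapping; a domain missing from the map is simply never counted.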

File damage

Attacks that delete or damage files on the target’s computers are arguably more significant than DDoS attacks. This sort of attack generally requires that the target computers be penetrated, i.e. that the attacker be able to run destructive code on each one. It may be possible to launch one attack that effectively penetrates multiple computers, e.g. by planting malware on a shared file server, but that will be situation-dependent.

In practice, the amount of damage actually done to files is often the least important part of the attack. In well-run sites, all important disks are backed up, so little data will be lost. Furthermore, the standard advice after even “normal” security incidents is to wipe the disk, reformat, and reinstall, regardless of what is perceived to have taken place; disinfecting a system is difficult, time-consuming, and of low assurance. Thus, whether or not files are actually damaged, the response to a penetration is the same: start over and restore important data from backup media.

That said, data manipulation can be very disorienting, especially when it occurs in the midst of a kinetic attack. Indeed, during the recent US presidential election, there was concern over data manipulation on election day.

As before, the risk of collateral damage depends on the quality of intelligence available. It is often straightforward to identify interesting target machines; however, identifying indirect dependencies can be difficult. Suppose, for example, that a mail server used by an opponent’s foreign ministry is hacked. That may destroy their ability to send and receive mail. However, the health ministry may be relying on the same server for its infrastructure.
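Identifying indirect dependencies is, at bottom, a reachability question on a dependency graph. The sketch below assumes the dependency edges are already known (which, as the paragraph notes, is the difficult part) and finds every entity that relies on a given machine directly or transitively. The graph, the entity names, and the function dependents are hypothetical illustrations of the mail-server example:

```python
from collections import deque
from typing import Dict, List, Set


def dependents(depends_on: Dict[str, List[str]], target: str) -> Set[str]:
    """Return all entities that rely on `target`, directly or through a chain."""
    # Invert the edges: for each service, record who relies on it.
    relied_on_by: Dict[str, List[str]] = {}
    for entity, deps in depends_on.items():
        for dep in deps:
            relied_on_by.setdefault(dep, []).append(entity)

    # Breadth-first search outward from the target along the inverted edges.
    seen: Set[str] = set()
    queue = deque([target])
    while queue:
        node = queue.popleft()
        for user in relied_on_by.get(node, []):
            if user not in seen:
                seen.add(user)
                queue.append(user)
    seen.discard(target)  # guard against cycles leading back to the target
    return seen


# Hypothetical dependency map: "X": ["Y"] means X relies on Y.
depends_on = {
    "foreign_ministry": ["mail_server"],
    "health_ministry": ["mail_server"],
    "hospital_scheduling": ["health_ministry"],
    "weather_service": ["dns_resolver"],
}

print(sorted(dependents(depends_on, "mail_server")))
# ['foreign_ministry', 'health_ministry', 'hospital_scheduling']
```

Here the health ministry is affected directly and a hospital scheduling system indirectly; neither would appear in a list of machines that merely sit next to the intended target.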

Physical damage

The most serious kind of attack is one that causes physical damage to computers or equipment attached to them. (That, of course, was the effect of Stuxnet.) Computers are cheap and plentiful; while having to restore files is annoying, it is unlikely to be fatal if the company has adequate backups. Consider Sony and the South Korean banks that were attacked [16], both apparently by North Korea: despite destruction of files, all are still in business, and none spent more than an inconsequential amount of time recovering.

Physical damage, however, requires repair or replacement of physical items. This is likely to be far more expensive and far more time-consuming than restoring files. The demonstration attack by DHS on an electrical generator showed that elements of the power grid could be damaged by a cyberattack [17]. This point was underscored by recent cyberattacks on three Ukrainian power distribution systems, which demonstrated that a well-planned attack on the grid could shut the systems down [18]. These three systems were restored to full functionality in a few hours, but a similar attack on US power systems might cause damage that would take significantly longer to repair. It is likely that other forms of cyber-dependent critical infrastructure – dams, pipelines, chemical plants, and more – are equally vulnerable.

The risk of collateral damage from cyberattacks on physical assets may be high. The attacker may find it difficult to make good and reliable estimates of collateral damage. For example, if a pipeline is destroyed, might there be an explosion that kills many people? Will an attack on a hydroelectric power plant damage the dam and cause massive and unwanted downstream flooding?

Why these attacks stayed targeted

Despite the differences, the attacks share a crucial similarity: none of the attacks spread significant damage past the designated targets. There was of course an overriding policy reason to avoid attacks that would spread. But how was it achieved in practice?

The attacks against Estonia and Georgia were DDoS attacks aimed directly at entities of these two nations. DDoS attacks executed in such a manner do not spread beyond their intended targets.13

There was no need for the attack against Sony to be self-spreading. To a large extent, automation simply was not part of the attack strategy. During the initial stages of setting up the attack, people actively determined which systems to penetrate and decided what information to exfiltrate.

The attack on Iran provides another interesting dimension in targeting. To achieve the strategic goal of delaying the Iranian effort to build a nuclear weapon, the Stuxnet weapon had to be used repeatedly; doing so only once could destroy some centrifuges, but not enough to seriously set back the Iranian effort. Thus, so that it could continue to destroy additional centrifuges, it was crucial that the weapon remain undetected.

Because the centrifuges at Natanz were not connected to the Internet, Stuxnet had to be introduced into the Natanz complex without the benefit of a network connection. In the absence of a cooperative insider at the Natanz complex, a method had to be found that would bridge the air gap. Stuxnet was thus designed to spread in virus-like fashion. Once inside the Natanz complex, Stuxnet could use worm-like characteristics to spread on the internal network.

At the same time, to maintain its covert nature, the program had to avoid doing damage elsewhere – damage that might be noticed and investigated. Thus, Stuxnet also had to avoid spreading too virulently from the original injection point.14

These requirements point to two important aspects of Stuxnet: very careful targeting to prevent spread (and thus discovery) and extremely precise intelligence on the configuration of the target machines. For the weapon to be successful, its designers needed to know exactly what to do and when. There may have been several iterations, with spyware gathering the necessary data (including blueprints and other design documents), or perhaps some HUMINT. The weapon had an extreme need for intelligence, an extreme need for precise characterization of how the attack should work, and an extreme need for high-quality damage-limiting software.

Technical issues in targeting

For an attack to be precisely targeted, it must not affect other computers. For this to hold, extremely precise intelligence is necessary. As we shall demonstrate, some of this intelligence-gathering must be built into the weapon.

In general, learning what will happen if a particular computer or network link is attacked is not easy. In some cases, this may not matter much; if a link serves only a military base containing the target computer, the other machines that are likely to be affected are also military and hence legitimate targets. Other situations are more difficult. A server distributing propaganda or serving as a communication node for terrorists may be located in a virtual machine in a commercial hosting facility. In such a case, civilian machines are easily affected by a weapon used against the legitimate target. In some cases, understanding the interactions with the other computers will require an initial analytic penetration. That is, the attacking force would need to conduct cyber exploitation on the target computer to detect an underlying virtual machine hypervisor, to learn which other computers communicate with or through it, etc.

In some cases, cyber weapons must operate autonomously, without real-time control by a human operator. As noted, Stuxnet was designed this way, because the target network was physically separated from the Internet.15 Autonomous operation poses particular challenges for precise targeting. To learn the limits of what it may do, the weapon itself may need to perform automated intelligence gathering and analysis. An analogy would be a camera in a smart bomb that is used not only to guide the weapon but also to detect, say, the presence of children. In other situations, the cyber weapon’s controller may need to monitor for impending collateral damage. Consider a DDoS attack that could flood other links. Simple network diagnostics could reveal whether computers “near” the target are being affected to any undue extent. Note, though, that the success of such monitoring presumes that the dependencies are understood; one cannot monitor the behavior of a connection not known to exist.
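The monitoring idea can be sketched as a comparison of current measurements against a baseline, assuming the controller already has baseline round-trip times for a known set of “nearby” hosts and can take fresh measurements during the operation (how those measurements are collected is outside the sketch). The function name, host names, and the threshold ratio of 3 are hypothetical choices, not values from the article:

```python
from typing import Dict, Optional, Set


def degraded_neighbors(
    baseline_ms: Dict[str, float],
    current_ms: Dict[str, Optional[float]],
    ratio: float = 3.0,  # hypothetical threshold: flag at more than 3x baseline
) -> Set[str]:
    """Flag known nearby hosts whose round-trip time exceeds `ratio` x baseline.

    A host with no current measurement (None or absent) is treated as
    unreachable and flagged. Hosts absent from `baseline_ms` are never
    examined: the check covers only dependencies that are already known.
    """
    flagged: Set[str] = set()
    for host, base in baseline_ms.items():
        now = current_ms.get(host)
        if now is None or now > ratio * base:
            flagged.add(host)
    return flagged


baseline = {"host-a": 10.0, "host-b": 20.0, "host-c": 5.0}
current = {"host-a": 12.0, "host-b": 80.0, "host-c": None}

print(sorted(degraded_neighbors(baseline, current)))
# ['host-b', 'host-c']
```

The loop iterates over the baseline rather than over the current measurements, which makes the paragraph’s caveat concrete: a connection that was never in the baseline can never be flagged.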

Technical considerations also affect the extent and nature of collateral damage that might be expected when a cyber weapon is used in a specific operation against a particular target; these considerations pertain both to weapons design and intelligence support for using that weapon. Issues related to collateral damage have legal, ethical, and tactical/technical dimensions.

From an ethical perspective, an attacker has an obligation to minimize the harm to noncombatants that may result from a legitimate attack against a military objective. Legally, this ethical obligation is codified under the laws of armed conflict as the proportionality requirement of jus in bello.

In the DOD formulation, the likely collateral damage from the use of a weapon is one factor that is weighed in deciding whether to employ the weapon at all. The other factor is the military advantage that would accrue should the attack be successful. If the likely collateral damage from the attack is too high given its military value, the attack is either altered in some way to reduce the likely collateral damage or else scrubbed entirely.

The tactical reason for minimizing collateral damage is that an attack that spreads far and wide is more likely to be discovered,16 which may lead the defenders to take countermeasures. Libicki has considered a related issue, limiting the size and scope of an attack for the same purpose – to reduce the likelihood that the defender will notice the attack. Some of the techniques he describes, such as opting for smaller effects (to enable a longer term attack) or exploiting a vulnerability particular to a target, apply to minimizing collateral damage [23].

Launching cyberattacks that minimize collateral damage requires a commensurate degree of precise intelligence about the target environment. Kinetic attacks have a similar requirement – most friendly fire incidents or inadvertent attacks on protected facilities are due to incorrect or incomplete information [24]. However, the volume of intelligence information needed for targeted cyberattacks is usually significantly larger.

We now discuss certain technical requirements our conditions impose on the design and use of targetable cyber weapons.

Condition 1: targeting the weapon

Targetable kinetic weapons must meet Condition 1. Article 36 of the 1977 Additional Protocol I to the Geneva Conventions requires that parties to the Protocol conduct a legal review of weapons that are intended to be used to ensure that they meet this condition.17 But how to meet Condition 1 in practice depends on specific details of the weapon in question; for cyber, as our discussion of successful targeted attacks makes clear, the details are both complex and important.

As noted above, for a cyber weapon to be usable only against intended targets, the location and technical specifications of the intended target must be determined precisely enough to identify it uniquely. For example, intelligence might provide an IP address at which a putative target is located. Thus, a cyber weapon might be provided with capabilities that enable it to be directed to a specified set of IP addresses.18

Even after the cyber weapon reaches an entity within which it can release its payload, the weapon must still be able to examine technical characteristics of the environment in which it finds itself. It must be able to determine whether it has, in fact, arrived at the correct place. Detailed information concerning the desired target (e.g. PLC type and configuration, such as was used in Stuxnet) must be available in advance so that the weapon has a baseline against which to compare its environment.

The more detailed such information is, the better. In the limiting case, intelligence may reveal the serial numbers of various components in the environment – if serial numbers are known and are machine-readable, the probability that the weapon is in the targeted environment is surely high enough to allow its use.

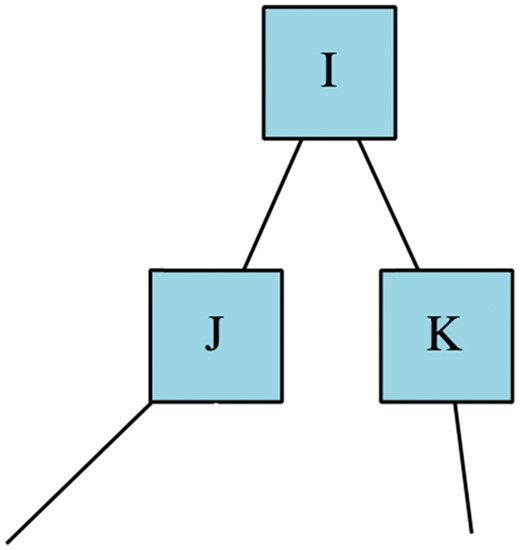

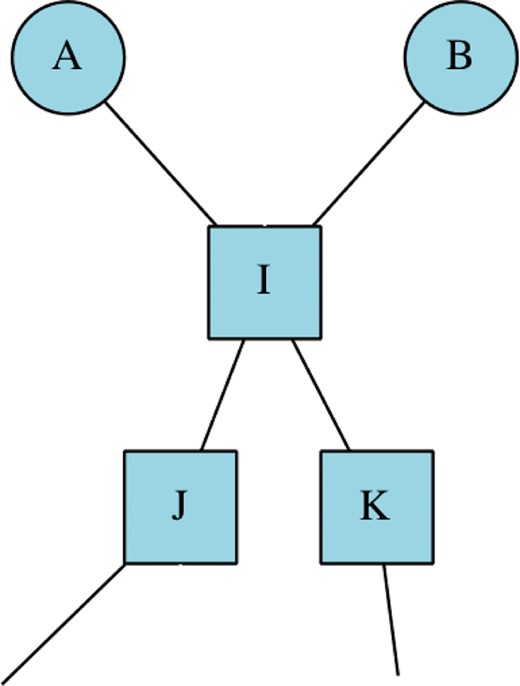

An attack on a target “I”, passing through “J” and “K”, but where “A” and “B” rely on "I" for their own functions.

Suppose that A is a legitimate military objective and B a civilian entity. Under the laws of war, the legitimacy of directing a weapon against I would then depend on whether the damage to B would be disproportionate to the military advantage gained by damaging A. This comparison is known as the proportionality test.

In certain cases, would-be targets may be protected by international law or agreement from attack. For example, hospitals are regarded as having special protection under the Geneva Conventions, though they must be clearly labeled (e.g. with a Red Cross symbol). To the extent that nations can agree that certain entities should be protected against cyberattack, cooperative measures may serve to identify these entities (Sidebar Box 1).

Sidebar Box 1: On Cooperative Measures to Differentiate Protected and Nonprotected Entities in Cyberspace

Possible cooperative measures can relate to locations in cyberspace. For example, nations might publish the IP addresses associated with the information systems of protected entities,19 and cyber weapons would then never be aimed at those IP addresses. Alternatively, systems may carry machine-readable tags (such as a special file kept in a common location) that identify them as protected. Cyber weapons could be designed to check for such tags as part of their assessment of the environment.
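A tag check of the kind contemplated in this sidebar might look like the sketch below. No such convention exists. The file name `PROTECTED_ENTITY.tag` and the directories searched are hypothetical. The sketch does nothing about the problems discussed next: the tag can be forged, and the file access itself may be observable.

```python
from pathlib import Path
from typing import Iterable

# Hypothetical convention: no such tag file or location has been agreed internationally.
TAG_NAME = "PROTECTED_ENTITY.tag"
DEFAULT_LOCATIONS = (Path("/etc"), Path("C:/ProgramData"))

def has_protection_tag(locations: Iterable[Path] = DEFAULT_LOCATIONS) -> bool:
    """Return True if a protection tag file exists in any of the given directories."""
    for directory in locations:
        try:
            if (directory / TAG_NAME).is_file():
                return True
        except OSError:
            # An unreadable location cannot confirm a tag, so it is skipped here;
            # a more cautious design might refrain from acting instead.
            continue
    return False
```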

Such cooperative measures are problematic in many ways. For example, a scheme in which tags are applied to protected entities runs a strong risk of abuse – a military computer system could easily be equipped with a tag indicating that it is used by a hospital. (Of course, on the kinetic battlefield, a Red Cross symbol could also be improperly painted on a building containing ammunition.)

The notion of tagged protected facilities raises a number of difficult research questions. For example, how can a cyber weapon query the tag when the act of querying is easily detected and thus reveals an intrusion? How can protected facilities be listed publicly when the existence of one might reveal the existence of an associated military computing complex?

Yet another tension would arise if the cyber weapon sensed that it was in the vicinity of its intended target (on the basis of intelligence gathered for this specific purpose) but also found a tag indicating that it was in a protected facility. Should the weapon release its attack payload despite the tag designating a protected entity? Or should it refrain from doing so despite intelligence indicating that it is indeed in the environment of a valid target? Note that the same problem occurs in non-cyber situations when, for example, an ambulance is being used to transport weapons.

A scheme to label nonprotected entities also creates problems. For example, a public list of the IP addresses of military computing facilities is an invitation to the outside world to target those facilities. The legal need to differentiate unprotected (military) from protected (civilian) facilities arises only under the laws of war, which themselves come into force only during armed conflict. Thus, we confront the absurdity of a situation in which lists of military entities are kept secret before the outbreak of hostilities but made public once hostilities begin.

A third issue arises once the cyber weapon has reached its set of targets: it must be designed so that if the payload exits the target’s machines, it does not cause damage elsewhere, including through denial-of-service attacks. In addition, and especially for a cyber weapon that propagates autonomously, the method of propagation must not itself become a major disruptor of nontargets.20 The Morris worm would have failed this test, while Stuxnet would have passed easily.

Condition 2: avoiding collateral damage

It is easy to avoid collateral damage if the target is not connected to other systems. Consider the possibility that causing a harmful effect on the target (call it A) might have harmful effects on other systems (B, C, etc.) connected to it. Of course, A can be destroyed by kinetic means, and B may or may not be damaged as a result. (In some scenarios, B will see the kinetic destruction of A as simply losing its connection to A, and it will handle the destruction of A in the same way.) Such scenarios are common in kinetic warfare, and a well-developed legal and policy regime has emerged to deal with such questions.

But computer systems are often interconnected in such complex ways that the situation above is much harder to analyze than it is for kinetic systems. Damaging but not destroying one computer system can have unforeseen effects on other, seemingly unrelated ones.21 Furthermore, the negative impact on connected computers B and C may be greater when A is merely damaged in some way than when it is completely destroyed. B and C may be well programmed to handle a broken connection to A, but they may not be able to recognize when data coming from A is corrupted or seriously delayed (e.g. as the result of an attack on A). In that case, B and C may continue operating, but slowly or with bad data, which may be more consequential than if the flow of data from A simply stopped.22
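The difference between a broken connection and bad data can be illustrated with a toy model of a downstream system B. The function name, the fallback value, and the sample readings are all hypothetical. The sketch shows only that code written to handle a lost feed will pass a corrupted value through unchallenged.

```python
from typing import Optional, Tuple

# Hypothetical safe value that B falls back to when the feed from A is lost.
SAFE_DEFAULT = 0.0

def consume(reading: Optional[float]) -> Tuple[str, float]:
    """Toy model of downstream system B consuming a value from upstream system A.

    B's programmer anticipated a broken connection (reading is None) and handles it,
    but B has no way to distinguish a corrupted or stale value from a good one.
    """
    if reading is None:
        return ("fallback", SAFE_DEFAULT)  # connection lost: degrade gracefully
    return ("normal", reading)             # any value that arrives is trusted as-is
```

Plausibility or freshness checks can catch some such corruption, but only the kinds the programmer anticipated.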

Proliferation of cyber weapons

For a variety of reasons, proliferation of cyber weapons is potentially much easier than proliferation of kinetic weapons. Unlike kinetic weapons, which explode and destroy themselves as well as the target, a cyber weapon does not necessarily destroy itself upon use. A weapon used to attack one target can be recovered relatively easily, and some of its components may be repurposed. Thus, unless special precautions are taken to prevent the reuse of a cyber weapon, proliferation to other parties may enable them to use the weapon for their own purposes and thereby cause damage to other entities.

In addition, cyber weapons are much cheaper and faster to manufacture than typical kinetic weapons. Once a cyber weapon has been reverse engineered and its mechanism of deployment and use recovered, making more copies is far easier than manufacturing other types of weapons.

With cyber weapons, moreover, the mere use of a weapon may supply the target with the information, technical or otherwise, needed to construct its own version if precautions are not taken to prevent such an outcome. In the cyber weapon context, the nonproliferation objective seeks to prevent others from being able to use code snippets, information on zero days, useful techniques for weapons design, or even new classes of weapon. The proliferation risk posed by each of these varies substantially: some pose little or no risk, while others pose a great deal.

These considerations also apply to prepositioned cyber weapons, which are sometimes called logic bombs. Such weapons are implanted into adversary computers in advance of hostilities and are intended to cause damage not upon implantation but at some later time when some condition has been met. The triggering condition can be the elapse of a specified amount of time, the arrival of a specific time and date, the receipt of a message from the owner or operator of the weapon, or the sensing of a particular condition in the environment in which the weapon has been prepositioned.
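The four kinds of triggering condition listed above can be expressed as predicates over what the weapon can observe. This is a modeling sketch only. `TriggerState` and the helper functions are hypothetical names, and nothing here is drawn from any real weapon.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Callable, Dict, Optional

@dataclass
class TriggerState:
    """Hypothetical snapshot of what a prepositioned implant can observe."""
    implanted_at: datetime
    now: datetime
    received_message: Optional[str] = None
    environment: Dict[str, str] = field(default_factory=dict)

# A trigger condition is simply a predicate over that snapshot.
Condition = Callable[[TriggerState], bool]

def elapsed(delay: timedelta) -> Condition:
    """Fires once a specified amount of time has passed since implantation."""
    return lambda s: s.now - s.implanted_at >= delay

def at_or_after(moment: datetime) -> Condition:
    """Fires at a specific date and time."""
    return lambda s: s.now >= moment

def on_message(expected: str) -> Condition:
    """Fires on receipt of a specific message from the owner or operator."""
    return lambda s: s.received_message == expected

def environment_has(key: str, value: str) -> Condition:
    """Fires when a particular condition is sensed in the environment."""
    return lambda s: s.environment.get(key) == value
```

Framing the conditions this way shows why discovery before triggering matters. An analyst who recovers the implant can read these predicates and learn exactly what the owner was waiting for.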

Assuming the prepositioning is executed properly, a prepositioned cyber weapon causes no damage before it is triggered. Once triggered, the damage it causes to intended or unintended targets is subject to the same considerations as discussed above. But prepositioning poses a risk that the weapon will be discovered before it is triggered and recovered intact. Once it is discovered, attempts to reverse engineer it will surely be undertaken, and proliferation of that weapon thus becomes a distinct possibility. Furthermore, the owner or operator of the weapon is unlikely to know whether the weapon has been discovered.

Mechanisms for proliferation

As we have suggested, the actual use of a cyber weapon may lead to proliferation: the target may turn around and convert the weapon used against it into its own weapon. There are several ways in which this can happen.

The simplest is the knowledge that a particular technique works. It has been said that the only real secret of the atomic bomb was that one could be built; after that, it “merely” required applied physics and engineering (and, of course, the expenditure of a large sum of money). The same is true in the cyber domain. For example, although Hoare had warned in 1981 of the dangers of not checking array indices [28], it was not until the 1988 Morris worm that most people realized that the security risk was quite real. Similarly, although using USB flash drives to jump an air gap was conceivable as an attack, few thought it could be done in practice until Stuxnet showed that it worked.

A second form of proliferation involves code that reuses the specific, low-level techniques of an existing weapon. IRONGATE is an example: it has been called a “Stuxnet copycat,” but was quite clearly not written by the same group.23 Recently leaked CIA documents describe the existence of a program to reuse malware obtained from other sources, including other nations' cyber operations [30]. If the CIA is doing this, presumably other intelligence agencies are as well.

Finally, the actual code used can be reverse engineered. This requires significant effort by skilled individuals, but it can be done. The reverse engineering of Stuxnet, the only sophisticated cyber weapon that we have described, was accomplished by a handful of researchers in private-sector firms working essentially in odd bits of time. The expertise at intelligence agencies is at least as good, and probably significantly better, because they handle sophisticated attacks from other nations. Agencies responsible for national security can be expected to be as professional in deconstructing cyber weapons as the Stuxnet teams were in constructing that weapon.

Taking steps to limit proliferation

A state wanting to limit future damage caused by a given kind of cyberattack should take proliferation into account and act to diminish its risks. From a technical standpoint, meeting the nonproliferation objective is thus a matter of either (i) obfuscating the code of the weapon so that an adversary cannot make use of it or (ii) changing the environment of those who might be harmed by successful proliferation so that they will not be harmed. Problem (i) can be solved unilaterally by the weapon’s designer; problem (ii) requires action by both the attacker and others.

It is useful to divide the issue of proliferation into the immediate aftermath of an attack and events that may occur in the longer term, perhaps days or weeks later. In the immediate aftermath, the chief concern is concealing the exploit that gained entry. The simplest way to do so is a “loader/dropper” architecture, in which a small piece of code takes advantage of a security hole to penetrate a system, downloads the actual payload, and then deletes itself. An adversary who later determines that a system is infected will find only the payload and not the code that actually penetrated the target.

Attackers can also take steps to obfuscate their code. For example, they can encrypt the important parts of the code, deriving the decryption key24 from values specific to the target machines.25 When encryption is used for obfuscation, the code becomes very difficult to decrypt without access to the target machines. The Gauss spyware employed such a scheme [31]. Obfuscation is the technique of choice for solving problem (i).
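The idea of deriving a key from target-specific values can be sketched with Python's standard `hashlib`. This is an illustrative assumption, not a description of how Gauss actually derived its key. The attribute names, the salt, and the use of PBKDF2 are all choices made for the example. The sketch stops at deriving and checking the key. Its point is that an analyst holding only the stored fingerprint must reproduce every attribute exactly to obtain the key.

```python
import hashlib
import hmac
from typing import Mapping

# Hypothetical fixed salt, chosen only for this illustration.
SALT = b"illustrative-salt"

def derive_key(attributes: Mapping[str, str]) -> bytes:
    """Derive a 256-bit key from machine-specific attribute values.

    Attributes are serialized in sorted order, so the same machine always
    yields the same key, and any differing value yields an unrelated key.
    """
    canonical = "\n".join(f"{name}={attributes[name]}" for name in sorted(attributes))
    # PBKDF2 makes each guess at a candidate environment deliberately slow.
    return hashlib.pbkdf2_hmac("sha256", canonical.encode("utf-8"), SALT, 100_000)

def key_fingerprint(key: bytes) -> str:
    """A one-way fingerprint of the key that can be stored without revealing it."""
    return hashlib.sha256(key).hexdigest()

def environment_unlocks(attributes: Mapping[str, str], expected_fingerprint: str) -> bool:
    """True only if this environment reproduces the expected key exactly."""
    return hmac.compare_digest(key_fingerprint(derive_key(attributes)), expected_fingerprint)
```

Usage: an environment with attributes such as `{"serial": "SN-0001", "mac": "00:11:22:33:44:55"}` (hypothetical values) unlocks only if every value matches. Changing a single character of the serial number produces a different key.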

Preventing proliferation over the long term is the focus of problem (ii). That problem is much harder because its solution is not entirely under the attacker’s control. One approach, perhaps the only one, to solving problem (ii) is to give potential victims the information needed to prevent the repurposed weapon from being used against them. Specifically, the attacker would disclose the mechanisms used to effect penetration (i.e. the vulnerabilities) after the weapon had served its original purpose.26

If the penetration mechanisms were disclosed entirely publicly, any potential victim could patch its systems to prevent the weapon from being used against it.27 In this case, the weapon might well be unusable in the future. Widespread disclosure could also give hostile parties information that lets attacks occur much sooner than they otherwise would have.28 Alternatively, disclosure could be limited, to the extent possible, to friendly parties, thus increasing the likelihood that the same penetration mechanisms would remain useful against other targets in the future. These issues are at the heart of the Vulnerabilities Equities Process, established by the Executive Branch in the USA to determine whether a given vulnerability should be disclosed (to enable its repair) or withheld (to enable its future use by the US government) [32].

Whether the long-term nonproliferation objective should dominate over other policy concerns is something to be determined on a case-by-case basis.

Conclusions

Contrary to a belief held in many quarters, cyber weapons as a class are not inherently indiscriminate. But designing cyber weapons so that they can be used in a targeted manner is technically demanding and requires a great deal of intelligence about the targets against which they will be directed. When the technical demands can be met and the requisite intelligence is in hand, it is possible to conduct cyberattacks that are precisely targeted to achieve a desired effect with minimal damage to entities that should remain unharmed.

A second consideration in limiting damage from using a cyber weapon is that of proliferation. Proliferation does not arise as the direct result of using a cyber weapon in an attack. Rather, it is the result of the fact that a cyber weapon, once used, may become available for others to examine, copy, and reuse for their own purposes.

Whether Stuxnet was a factor in bringing Iran to the bargaining table over the development of nuclear weapons is hard to determine. What is clear is that the USA was able to attack a site as well defended as Natanz, which undoubtedly demonstrated to the Iranians the depth of US capabilities. What is notable about the US attack on the nuclear facility is that it was accomplished without loss of life.

The scope and nature of the ability to narrowly target a cyber weapon are crucial aspects of how, if at all, it can or should be used. The laws of armed conflict require the use of weapons that are not inherently indiscriminate, and furthermore that all weapons be used in a discriminating manner; this article has shown that it is possible for some cyber weapons to be used in a discriminating manner, i.e. that such weapons can be directed against specifically designated entities. But designing and using a cyber weapon in the requisite manner requires effort – perhaps significantly more effort, both on development and on intelligence gathering – than one that might be used in a less discriminating manner.

In this article, we have sought to begin the conversation on how targetable cyber weapons can be developed from a technical standpoint and what policy issues need to be part of the considerations in both design and deployment. As far as we are aware, our article is the first in the open literature to discuss targeting of cyber weapons and ways to avoid proliferation. We hope this work will engender further discussion of these issues.

Acknowledgements

We are grateful for comments from Thomas Berson, Joseph Nye, Michael Sulmeyer, and several anonymous referees. Their comments greatly improved the paper.

Funding

This work was supported by the Cyber Policy Program of Stanford University’s Center for International Security and Cooperation and the Hoover Institution.

Cyber weapons can be instantiated in hardware as well. Tampering with hardware can have widespread or narrow effects, depending on the mechanism and how it is activated. Backdoors can be embedded in hardware [2] and installed at any point in the supply chain, from initial fabrication of commodity components to shipment of a finished system to an end user.

An analogy could be drawn to an airplane carrying a payload: the airplane is the penetration mechanism by which the attacker gains access to adversary territory. Once there, the airplane can drop high-explosive bombs, incendiaries, or nuclear weapons, or simply drop leaflets. Alternatively, the same plane could simply take pictures, which extends our analogy to cyber exploitation.

Some analysts include a third class of nation-state intrusion known as “preparing the battlefield.” Roughly speaking, it involves planting software that will be useful in event of a conflict but leaving it dormant until then. The nearest analogy is prepositioning munitions in enemy territory before a war.

This article also distinguishes between two methods by which a cyber weapon can propagate. A worm is a cyber weapon that propagates through a network. By contrast, a virus relies on nonnetwork mechanisms, such as infected files, and on human assistance, witting or unwitting, to carry the infection from an infected machine to an uninfected one. Because human assistance is needed, viruses propagate most readily and virulently within affinity groups. A given piece of malware can be designed as a worm or a virus, or it can use both mechanisms to propagate.

The Morris worm ultimately spread to a large number of systems on the Internet (at the time, about 10%, or about 6000). For an account of the Morris worm, see [4].

During NATO’s bombing campaign against Serbia, there were low-grade attacks against various NATO systems. Some of these are believed to have been launched by the Serbian military; see [8].

There were only some minor website defacements.

Stuxnet was designed to unlock its malware payload only after it had checked the target system for a specific configuration of this plant: the model of PLCs (programmable logic controllers), the type of frequency converters, the layout of the units, and so on. If this check did not indicate that Stuxnet was inside a plant matching this configuration, Stuxnet would remain on the compromised system, but its payload would not be decrypted; it could then only spread to other systems. See Zetter, 28–29.

For complex reasons involving shared resources and network dynamics, the full 100 Gbps is unlikely to be realized; still, the effect would be considerable.

This might be on a virtual machine hosted by a cloud provider such as Amazon; alternatively, it could be done as a separate website hosted on a large dedicated web server.

The DNS is a distributed database that converts user-friendly names like www.stanford.edu into the IP addresses used by network protocols.

Note, however, that other kinds of DDoS attacks can affect entities beyond those directly targeted. For example, on 21 October 2016, a large DDoS attack targeted Dyn, an Internet infrastructure company that served many large consumer-facing firms, such as the New York Times, Spotify, Twitter, and Amazon. This attack prevented many consumers from accessing their desired web pages, even though the consumer-facing firms were not directly targeted. For accounts of this attack, see [19] and [20].

Under certain conditions, attacking networks that are air-gapped from the Internet can simplify targeting. If the weapon activates only once certain conditions are met, then all other computers within its reach are part of the same isolated (and, likely, targeted) network.

For example, according to the New York Times, Stuxnet was detected and then nullified precisely because it had spread too far [22]. This analysis is somewhat controversial and is contradicted by other accounts.

Article 36 states that “In the study, development, acquisition or adoption of a new weapon, means or method of warfare, a High Contracting Party is under an obligation to determine whether its employment would, in some or all circumstances, be prohibited by this Protocol or by any other rule of international law applicable to the High Contracting Party.” This article is widely regarded as imposing legal requirements to conduct reviews of weapons before they are adopted for use, see e.g. [25].

This is not always sufficient to prevent collateral damage, especially if shared resources – network links, computers, etc. – exist.

It is necessarily a minor disruptor in the sense that the attacked system is using resources to spread the attack, and that is not normal behavior for the system under attack.

AT&T’s Internet service once suffered an outage due to an erroneous change by another ISP [26].

There are many ways in which this can occur. For example, TCP is designed to slow down drastically in the presence of packet loss [27], since under normal conditions packet loss indicates network congestion. A carefully modulated denial of service attack could induce such behavior.
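The effect described in this note can be illustrated with a deliberately simplified model of TCP's additive-increase/multiplicative-decrease (AIMD) behavior. In the model, the window grows by one segment per loss-free round trip and is halved on each loss. It ignores slow start, retransmission timeouts, and receiver windows, so it shows the direction of the effect rather than its real magnitude. `average_window` is a hypothetical helper written for this illustration.

```python
def average_window(rounds: int, loss_every: int, start: float = 1.0) -> float:
    """Simulate a simplified AIMD congestion window, one step per round trip.

    The window grows by one segment per loss-free round trip (additive increase)
    and is halved whenever a loss occurs (multiplicative decrease), never falling
    below one segment. A loss is injected every `loss_every` rounds; pass 0 for
    no induced loss. Returns the average window, a rough proxy for throughput.
    """
    cwnd = start
    total = 0.0
    for r in range(1, rounds + 1):
        if loss_every and r % loss_every == 0:
            cwnd = max(1.0, cwnd / 2)  # loss seen: back off sharply
        else:
            cwnd += 1.0                # no loss: probe for more bandwidth
        total += cwnd
    return total / rounds
```

With no induced loss, `average_window(100, 0)` returns 51.5; the window simply keeps growing because the model has no receiver limit. With a loss every five round trips, the window settles into a cycle between four and eight segments and averages roughly six. The connection stays up, but it carries a small fraction of the traffic it otherwise would.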

IRONGATE is a piece of control-system malware that surfaced after Stuxnet and seems to reuse a number of Stuxnet's techniques [29].

A cryptographic key is a long, generally random number. Files encrypted with modern cryptosystems are generally unreadable unless the key is known.

Computers contain a variety of unique, hard-to-guess numbers, such as serial numbers assigned at manufacture, network hardware addresses, etc.

This approach to solving problem (ii) is not effective in all cases. An independently developed cyber weapon based on an attack technique revealed by a proof-of-concept demonstration may not use the same vulnerabilities that the demonstration used.

Experience shows that not all potential victims install patches to known vulnerabilities. Indeed, the successful North Korean attack against Sony used well-known techniques and exploited unpatched systems. Furthermore, older systems are often not patchable: the hardware is incapable of running the newer versions of the code.

In the days immediately after a patch is announced, hostile parties reverse engineer the patch to discover the vulnerability being fixed. They then launch attacks exploiting that vulnerability against a wide range of targets, many of which will not yet have installed the patch when they are attacked.

There are also reports that Israel’s air strike on a purported North Korean-built nuclear reactor in Syria was aided by a cyber intrusion that disabled Syrian air defense radars [9]. No details have emerged nor has there been independent confirmation.

See [21] (“once the removable drive has infected three computers, the files on the removable drive will be deleted”).

Relying on third-party lists of IP addresses, e.g. a country’s national registry of protected computers, is a fragile defense. While servers’ IP addresses are much more stable than those of desktops or laptops, they can and do change, due to replacement (a new server is likely to be brought online before the old one is decommissioned), changes in network topology (for technical reasons, address assignment has to mirror the physical interconnections of different networks), etc. Currently, system administrators tend to maintain only those entries necessary for the day-to-day functioning of their computers and networks; a national registry that matters only in time of war does not qualify.

References

Computer networks in South Korea are paralyzed in cyberattacks.

http://dyn.com/blog/dyn-statement-on-10212016-ddos-attack/ (31 December 2016, date last accessed).