-

PDF

- Split View

-

Views

-

Cite

Cite

Manoj M Wagle, Siqu Long, Carissa Chen, Chunlei Liu, Pengyi Yang, Interpretable deep learning in single-cell omics, Bioinformatics, Volume 40, Issue 6, June 2024, btae374, https://doi.org/10.1093/bioinformatics/btae374

Close - Share Icon Share

Abstract

Single-cell omics technologies have enabled the quantification of molecular profiles in individual cells at an unparalleled resolution. Deep learning, a rapidly evolving sub-field of machine learning, has instilled a significant interest in single-cell omics research due to its remarkable success in analysing heterogeneous high-dimensional single-cell omics data. Nevertheless, the inherent multi-layer nonlinear architecture of deep learning models often makes them ‘black boxes’ as the reasoning behind predictions is often unknown and not transparent to the user. This has stimulated an increasing body of research for addressing the lack of interpretability in deep learning models, especially in single-cell omics data analyses, where the identification and understanding of molecular regulators are crucial for interpreting model predictions and directing downstream experimental validations.

In this work, we introduce the basics of single-cell omics technologies and the concept of interpretable deep learning. This is followed by a review of the recent interpretable deep learning models applied to various single-cell omics research. Lastly, we highlight the current limitations and discuss potential future directions.

1 Introduction

The advances in high-throughput omics technologies have transformed our ability to probe molecular programs at a large scale, providing insight into the complex mechanisms underlying various biological systems and diseases. Until recently, most early omics technologies (except profiling somatic mutation frequencies) have been typically applied to profile a population of cells, known as ‘bulk’ profiling (Hasin et al. 2017), where the heterogeneity of cells and cell types are masked by the average signal across the cell population (Wang and Bodovitz 2010). The establishment of technologies such as single-cell RNA-sequencing (scRNA-seq) (Tang et al. 2009, Shalek et al. 2013) and single-cell assay for transposase-accessible chromatin by sequencing (scATAC-seq) (Buenrostro et al. 2015) enables the dissection of cellular heterogeneity at the single-cell level based on their gene expression and chromatin accessibility profiles. Latest developments in single-cell omics technologies towards multimodality now allow for simultaneous multimodal measurements from the same cell in a single experiment, providing opportunities for understanding a broad range of cellular processes and diseases (Baysoy et al. 2023, Badia-I Mompel et al. 2023, Kim et al. 2023).

Deep learning, a rapidly evolving sub-field of machine learning, has gained considerable attention in the single-cell community for its capability to deal with heterogeneous, sparse, noisy, and high-dimensional single-cell omics data and versatility in handling a wide range of applications (Ma and Xu 2022). For example, deep learning models have been demonstrated to excel in tasks such as dimensionality reduction, batch effect removal, data imputation, cell type annotation, and inferring cellular trajectories (Arisdakessian et al. 2019, Tian et al. 2019, Li et al. 2020, Li 2023, Yu et al. 2023a). Nevertheless, deep learning models are well known for their lack of interpretability (von Eschenbach 2021). That is, predictions made by these models are often hard to interpret, especially towards understanding the underlying molecular mechanisms that drive cellular processes and phenotype. To this end, improving model interpretability has attracted increasing attention, in particular, in applications such as identifying molecular regulators and reconstructing biological networks (Fortelny and Bock 2020, Chen et al. 2023, Huang et al. 2023, Liu et al. 2023, Lotfollahi et al. 2023).

In this work, we review the basics of single-cell omics technologies and key principles behind interpretable deep learning. We next summarize the latest development of interpretable deep learning-based models specifically tailored to single-cell omics research, providing a global view of the current applications in the main interpretable deep learning taxonomy. Finally, we discuss the challenges and potential future directions in this burgeoning field.

2 Fundamentals of single-cell omics and interpretable deep learning

2.1 The advent of single-cell omics technologies

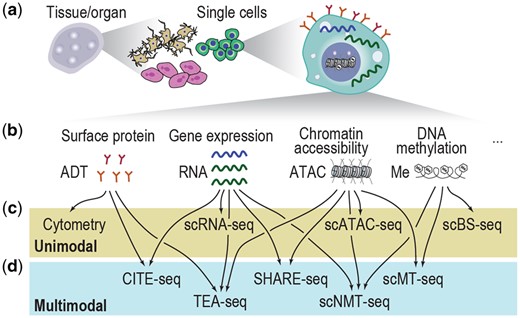

The establishment of scRNA-seq technologies that enable transcriptomic profiling at single-cell resolution (Fig. 1a) has emerged as a powerful tool for dissecting cellular composition (Tang et al. 2009). With its ability to reveal cellular variability in gene expression, the use of scRNA-seq has better facilitated biological discoveries than traditional bulk data such as in cell type annotation for model organisms (Iram et al. 2018, Jones et al. 2022) and cell lineage tracing during development and disease progression (Kester and Van Oudenaarden 2018, Wagner and Klein 2020). The development of single-cell techniques that profile other modalities, e.g. scATAC-seq (Buenrostro et al. 2015) and single-cell bisulfite sequencing (scBS-seq) of methylomes (Smallwood et al. 2014), and their combination with other single-cell techniques such as cytometry (Spitzer and Nolan 2016) have led to the generation of additional data modalities and together provided a more holistic view of the gene expression, surface proteins, chromatin accessibility, and DNA methylation in single cells (Fig. 1b and c). Nevertheless, these ‘unimodal’ single-cell omics technologies are often independently applied, and each molecular attribute is profiled separately, creating significant difficulties in data modality integration from such ‘unpaired’ data.

Summary illustration of single-cell omics. (a) A schematic of single cells from different complex tissues/organs. (b) Molecular attributes in a single cell and their corresponding modalities in single-cell omics. (c, d) Example unimodal (c) and multimodal (d) single-cell omics technologies.

The development of single-cell omics towards multimodality alleviates the difficulties in integrating data modalities from unpaired data generated by unimodal technologies by measuring multiple data modalities from each single cell (Baysoy et al. 2023). The multimodal data generated from such ‘paired’ experiments provide a more holistic view of each cell across multiple molecular attributes. For example, cellular indexing of transcriptomes and epitopes by sequencing (CITE-Seq) (Stoeckius et al. 2017), simultaneous high-throughput ATAC and RNA expression with sequencing (SHARE-seq) (Ma et al. 2020), and simultaneous single-cell methylome and transcriptome sequencing (scMT-seq) (Hu et al. 2016) each capture a different combination of two modalities in a single cell (Fig. 1d), and techniques such as TEA-seq (Swanson et al. 2021) and single-cell nucleosome, methylation, and transcription sequencing (scNMT-seq) (Clark et al. 2018) can capture a combination of three modalities in a single cell. A recent review has summarized a comprehensive list of multimodal single-cell omics technologies (Vandereyken et al. 2023).

2.2 Interpretability of deep learning models

Deep learning is motivated by designing models consisting of multiple processing layers to learn representations of data with multiple levels of abstraction (LeCun et al. 2015). Some popular deep learning models include convolutional neural networks (CNNs) (LeCun et al. 1998), variational autoencoders (VAEs) (Kingma and Welling 2013), and, more recently, transformer (Vaswani et al. 2017). Deep learning models, while incredibly successful in their application to various domains, are typically considered as ‘black boxes’ for the lack of interpretability (Rudin 2019) due to their complex multi-layer non-linear architecture, activation functions, and potentially a large number of parameters (Zhang et al. 2021). Such opacity poses significant challenges in establishing trust and ensuring accurate validation, especially in domains such as molecular biology and biomedicine, where the reasoning behind predictions is crucial for our understanding and subsequent applications (Novakovsky et al. 2023b). This has motivated research that aims to increase deep learning model interpretability. While the term ‘model interpretability’ still lacks a universal consensus of definition in the machine learning community (Lipton 2018), we adopt a working definition where it is broadly considered to be the ability of the model to generate, explain, and present in human understandable terms its decision-making process or insights of data.

Adopting popular taxonomies in machine learning (Doshi-Velez and Kim 2017, Murdoch et al. 2019, Allen et al. 2023), the interpretability of deep learning models can be largely categorized into (i) intrinsic, whether prior knowledge or interpretable designs are incorporated into the neural network structures, and post-hoc, where additional analyses are performed to extract interpretable knowledge from the trained neural networks (Murdoch et al. 2019), and (ii) model-specific, where the interpretability is tailor-made for a specific neural network design, and model-agnostic, where the techniques could be used across different neural network architectures. For example, post-hoc feature attribution techniques such as Shapley value estimation (Lundberg and Lee 2017) and LIME (Ribeiro et al. 2016) are frequently used for identifying important learning features from trained neural networks and are generally considered to be model-agnostic interpretations as they are applicable to various neural network architectures. In contrast, the design of specific model architectures for interpretable learning, such as the use of transformer networks with attention layers, is intrinsic and specific to the model (Chefer et al. 2021). In the next section, we will review the current works of interpretable deep learning applications to single-cell omics research in light of these overarching categories.

3 Harnessing the power of interpretable deep learning for single-cell omics research

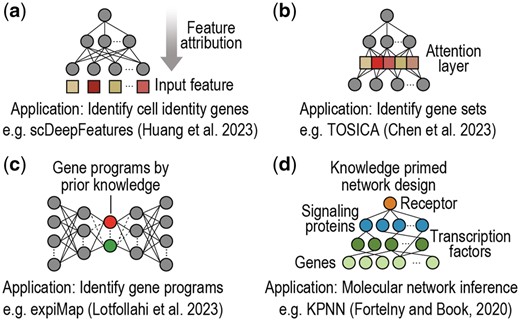

The application of deep learning models to single-cell omics data analysis has been met with remarkable success owing to their capability to deal with challenging data characteristics (e.g. heterogeneity, sparsity, noise, high-dimensionality) and versatility in handling a wide range of applications in single-cell omics research. Nonetheless, deep learning models often lack interpretability, complicating efforts towards understanding the underlying molecular mechanisms that drive cellular processes and phenotype. In recognition of this limitation, increasing research has been directed to interpretable deep learning in single-cell omics. This section summarizes the key developments based on the biological applications of model interpretability, such as identifying cell identity genes and molecular features (Fig. 2a), discovering gene sets (Fig. 2b) or gene programs (iii) (Fig. 2c) that underlie cell types, and inferring molecular networks (Fig. 2d) among other applications. A summary of various interpretable deep learning methods reviewed in this work is depicted in Fig. 3.

Schematic of example interpretable deep learning models applied to single-cell omics. (a) Using post-hoc feature attribution techniques to identify cell identity genes that distinguish cell types (Huang et al. 2023). Colours denote the estimated importance of input features: (b) Designing an intrinsic and model-specific attention layer to detect gene sets for annotating cell types (Chen et al. 2023). Colours denote the estimated importance of latent features: (c) Incorporating prior knowledge to design embeddings for detecting activated gene programs underlie cell types (Lotfollahi et al. 2023). Colours denote different gene programs. (d) Using prior knowledge to design neural network architectures for modelling molecular networks (Fortelny and Bock 2020). Colours denote different molecular species.

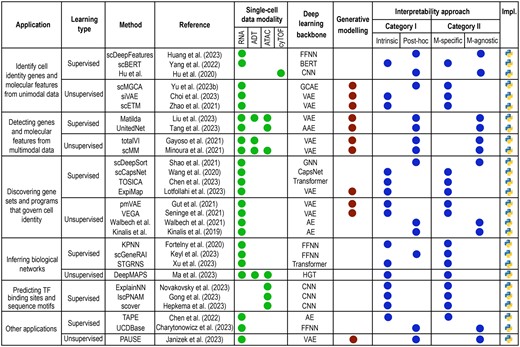

Overview of interpretable deep learning methods applied to diverse single-cell analyses. Each method is categorized based on its associated task, learning type, underlying architecture, interpretability approach, and implementation.

3.1 Identifying cell identity genes and molecular features from unimodal data

The identification of genes and other molecular features that underlie cell identity and can discriminate cell types is an essential task in single-cell omics data analysis (Yang et al. 2021). scDeepFeatures exemplifies the use of several post-hoc approaches for identifying cell identity genes from scRNA-seq data, where a simple multilayer perceptron (MLP)-based neural network is trained to classify cell types, and subsequently various feature attribution techniques (e.g. LIME, feature ablation, occlusion, DeepLIFT) are applied to identify genes that discriminate cell types (Huang et al. 2023). Similarly, Hu et al. proposed a post-hoc and model-agnostic data permutation approach to extract surface proteins from cytometry data using a CNN (Hu et al. 2020). Alternatively, scMGCA is a post-hoc and model-specific approach where a graph convolutional autoencoder is first used to learn an embeddings-by-cells matrix from the input data of a normalized count matrix and a cell graph, and a post-hoc embedding analysis procedure is used to identify key cell identity genes that separate cell types and are functionally enriched in the Gene Ontology (GO) analysis (Yu et al. 2023b). scETM serves as a good example for intrinsic and model-specific approaches and uses a variational autoencoder and a linear decoder to factorize the input data into a tri-factor of cells-by-topics, topics-by-embeddings, and embeddings-by-genes matrices. It allows the incorporation of prior pathway information and together enables the identification of interpretable gene markers and cellular signatures when integrating multiple scRNA-seq datasets (Zhao et al. 2021). scBERT is another intrinsic and model-specific approach that uses a transformer with an attention layer to encode the pre-training data into an embeddings-by-cells matrix and leverage this embedding matrix for subsequent cell-type annotation of future datasets. Cell-type discriminative genes and their long-range dependencies are captured by the attention layer in the model (Yang et al. 2022). Finally, siVAE uses cell-wise and gene-wise variational autoencoders to learn interpretable embeddings linked to important genes and co-expression networks (Choi et al. 2023).

3.2 Detecting genes and molecular features from multimodal data

Similar to gene selection from unimodal scRNA-seq data, various methods have also been developed for identifying other molecular features from multimodal single-cell omics data. To this end, several popular approaches make use of autoencoders where multiple data modalities are integrated through embedding learning. For example, Matilda uses a multi-task learning framework of a variational autoencoder and a classification head to classify cell types and a post-hoc feature attribution procedure to identify molecular features from multimodal single-cell omics data that contribute to the classification of each cell type (Liu et al. 2023). Similarly, UnitedNet uses an adversarial autoencoder (AAE) framework for integrating data modalities and subsequently identifying important molecular features using a post-hoc feature attribution technique of Shapley value estimation (Tang et al. 2023). totalVI also learns from multiple data modalities using a variational autoencoder and offers a post-hoc archetypal analysis to interpret each latent dimension by relating them to the molecular features in the input data (Gayoso et al. 2021). Lastly, scMM builds a mixture-of-experts of variational autoencoders for integrating data modalities and then performs a post-hoc step to traverse latent dimensions for identifying molecular features strongly associated with each latent dimension (Minoura et al. 2021). These four methods can be generally considered model-agnostic given that the post-hoc approaches they used for identifying molecular features, albeit different, are largely model-independent.

3.3 Discovering gene sets and programs that govern cell identity and states

Another essential task that extends to gene identification is to discover gene sets or gene programs that jointly govern cell identity, cell states, and cellular processes. scDeepSort represents one of the first methods that use GNNExplainer, a post-hoc and model-agnostic approach, for selecting gene sets that are predictive of cell types from a weighted graph neural network trained on scRNA-seq data (Shao et al. 2021). Compared to post-hoc and model-agnostic approaches, methods that rely on intrinsic and model-specific mechanisms have enjoyed more popularity. For example, scCapsNet uses a capsule network model for interpretable learning and captures gene sets that are predictive to cell types from scRNA-seq data (Wang et al. 2020). TOSICA uses a multi-head self-attention network to incorporate prior biological knowledge for identifying gene sets that belong to pathways or regulons for cell type annotation using scRNA-seq data (Chen et al. 2023). VEGA utilizes prior knowledge of gene modules for designing an interpretable latent space in variational autoencoders and subsequently detecting active modules from models trained on scRNA-seq data (Seninge et al. 2021). Likewise, ExpiMap incorporates prior knowledge of gene programs into a sparsely connected variational autoencoder during pre-training on large scRNA-seq reference atlases and subsequently identifies gene programs that are associated with cell types and cell states in query datasets (Lotfollahi et al. 2023). pmVAE trains multiple variational autoencoders, each incorporating prior information of a pathway module for detecting biological effects such as cell stimulation from scRNA-seq data (Gut et al. 2021). Walbech et al. implemented specialized training of autoencoders for scRNA-seq by enforcing a soft orthogonality constraint in the bottleneck layer of the model, and the interpretability was evaluated using gene set enrichment analysis on the saliency maps (Simonyan et al. 2013, Walbech et al. 2021). Similarly, Kinalis et al. employed a constrained autoencoder and interpreted the model by analysing saliency maps for gene signatures of biological pathways (Kinalis et al. 2019).

3.4 Inferring molecular networks from single-cell omics data

Building on the concept of gene sets and pathways, the next related task is to infer molecular networks, such as gene regulatory networks (GRNs), or capture regulatory relationships among transcription factors (TFs) and their target genes (Badia-I Mompel et al. 2023, Kim et al. 2023). One of the first methods towards achieving this aim is the knowledge-primed neural network (KPNN), an intrinsic and model-specific approach that explores the design of a sparsely connected neural network based on prior knowledge of genome-wide regulatory networks and subsequently trains the model using scRNA-seq data to learn regulatory strengths as weights of network edges between TFs and their target genes (Fortelny and Bock 2020). Alternatively, scGeneRAI attempts to infer GRNs by predicting the expression of a gene from a set of other genes using scRNA-seq data and layer-wise relevance propagation (LRP), a post-hoc and model-specific feature attribution technique (Keyl et al. 2023). Similar to scGeneRAI, STGRNS aims to reconstruct GRNs by predicting TF expressions using gene sets but relies on an intrinsic and model-specific approach where a transformer network with a multi-head attention layer is trained on scRNA-seq data (Xu et al. 2023). More recently, methods such as DeepMAPS have been developed for intrinsic and model-specific interpretable learning from multimodal single-cell omics data (Ma et al. 2023). In particular, DeepMAPS uses a graph autoencoder for integrating data modalities and then inserts the trained graph autoencoder into a heterogeneous graph transformer (HGT) with mutual attention layers for inferring cell-type-specific GRNs.

3.5 Predicting TF binding sites and sequence motifs

In GRNs, TFs regulate their target genes through binding to cis-regulatory elements (CREs) that contain specific sequence motifs. The prediction of TF binding sites and sequence motifs therefore goes further than inferring GRNs solely based on gene expression and deepens our understanding of underlying mechanisms of molecular network regulation. Several studies have demonstrated the utility of CNNs for addressing this task. In particular, ExplainNN predicts TF binding sites and sequence motifs through learning convolutional filters on DNA sequences. The model predictions are validated using scATAC-seq data and curations in databases such as JASPAR (Novakovsky et al. 2023a). IscPNAM is another CNN-based method for predicting TF binding sites but integrates DNA sequences with bulk data (e.g. ATAC-seq data) for their prediction and generates interpretations from additional attention modules in the network (Gong et al. 2023). Similar to ExplainNN, IscPNAM also uses scATAC-seq data for its prediction evaluation. Lastly, scover also uses CNN for interpretable learning of sequence motifs using convolutional filters. While scATAC-seq data are used for validation purposes in ExplainNN and IscPNAM, they are used for training CNNs in scover for discovering regulatory motifs that are cell-type-specific and reside in distal CREs and therefore can benefit from the cell-type-specific information captured by single-cell omics data (Hepkema et al. 2023). Although IscPNAM uses model intrinsic attention modules for interpretations, all three methods rely on intrinsic and model-specific mechanisms for interpretable learning.

3.6 Other applications

The above applications of interpretable deep learning mostly centre around some closely related tasks of identifying genes and gene sets and inferring their regulatory relationships from unimodal and multimodal single-cell omics data. Beyond these, a few studies have explored other potential applications, including the use of single-cell models as a foundation for signal deconvolution in other modalities. Bulk and spot-based spatial omics technologies, for instance, do not inherently provide cell type-specific estimates. Cell-type signatures derived from single-cell reference data can be used to disaggregate mixed expression profiles into individual cell type proportions, offering clearer insights into cellular compositions (Chen et al. 2018, Biancalani et al. 2021, Sang-Aram et al. 2023). Recent interpretable deep learning methods developed for such purposes include TAPE for bulk deconvolution and UCDBase for deconvoluting both bulk and spatial transcriptomic data. Specifically, TAPE implements a training stage for an autoencoder and an adaptive learning stage to extract interpretable information from the trained model, including cell-type-specific signature matrix and gene expression profiles, and predicted cell-type composition in the bulk data (Chen et al. 2022). On the other hand, UCDBase first pre-trains a densely-connected neural network model using large-scale scRNA-seq atlases and transfers the model for bulk and spatial data deconvolution. The model interpretations are generated using a post-hoc model-specific feature attribution technique of integrated gradients (Charytonowicz et al. 2023). Another example is PAUSE, which models transcriptomic variation using a biologically constrained autoencoder to attribute variations in scRNA-seq data to pathway modules (Janizek et al. 2023).

4 Current challenges and future opportunities

Interpretable deep learning has made a significant impact on the single-cell omics research field. However, the current application of interpretable deep learning techniques to single-cell omics research is still limited to a few related tasks of identifying genes and programs and inferring molecular networks they form. Other common tasks, such as trajectory inferences and cell–cell interactions, are crucial in single-cell omics data analysis (Heumos et al. 2023) but remain less explored in the context of interpretable deep learning. We anticipate that future method development and application will investigate the potential utility of interpretable deep learning in addressing these tasks.

Besides multimodality, single-cell omics research is increasingly moving towards multi-sample and multi-condition. While methods such as ExpiMap have been designed for data generated from different samples and with various perturbations and conditions in mind (Lotfollahi et al. 2023), there is still a lack of application of interpretable deep learning models to specifically address these emerging data structures that go beyond typical tasks of cell type annotation, cell identity gene selection, and GRN inference from a normal sample without experimental perturbations. Given the increasing application of single-cell omics techniques to studying human diseases and drug perturbations, we expect to see new methods developed to extract interpretable information from these more complex data structures.

Another aspect of single-cell omics research is in the field of spatial omics (Rao et al. 2021). Few interpretable deep learning methods have been specifically designed to take advantage of the extra spatial information besides the gene expression profile of cells. Yet, such information can be valuable for studying cell–cell communications and cellular microenvironments that drive normal development and diseases such as cancer. Therefore, developing deep learning models to integrate spatial information and other omic data modalities in single cells for interpretable learning may lead to a better understanding of developmental processes and improved treatments of cancer.

A significant challenge that limits a comprehensive evaluation and understanding of interpretable deep learning methods is the lack of standardized metrics for quantifying model interpretability. Recently, some attempts have been made to measure interpretability, including the proposal of a metric based on the information transfer rate during an annotation task. This metric evaluates how explanations provided by interpretability approaches can enhance the speed and accuracy with which human annotators replicate the decisions of a given machine learning model (Schmidt and Biessmann 2019). However, interpretable deep learning methods for single-cell omics research are highly task-dependent, making it particularly challenging to apply a uniform metric of interpretability. Developing more adaptable measures for quantitatively assessing interpretability is crucial and requires future research.

The choice of models can also have a large impact on interpretability. For example, generative models are often considered more interpretable because they inherently model the underlying probability distribution of the input data. By learning an interpretable data representation, these models facilitate the inference of latent variables that capture key data characteristics and provide intuitive insights into how different input features might influence predictions. Non-generative models, on the other hand, lack these intermediate probabilistic layers and typically prioritize prediction performance for specific tasks without explicitly modelling data distribution. This characteristic can make them potentially straightforward in terms of interpretability, as they often enable a clearer understanding of direct input-output mappings when the model architecture is relatively simple (Adel et al. 2018).

Recently, large foundation models trained on vast repositories of data have gained significant interest in single-cell omics research as they are generalizable and can be further fine-tuned for specific downstream analyses. Transformer models, with their self-attention architecture, continue to excel as foundation models and the attention scores can be easily analysed post-hoc for interpretability. scGPT represents one of the first single-cell foundation models, leveraging a generative pre-trained transformer with a specialized multi-head attention mask to simultaneously generate cell and gene embeddings and capture underlying gene–to–gene interactions (Cui et al. 2024). Other strategies that could potentially improve the interpretability of these frameworks include simplifying model layers or combining the knowledge from large models with linear models to produce hybrid frameworks (Singh et al. 2023).

Compared to traditional models, such as ensemble learning which has also demonstrated outstanding performance in various applications (Yang et al. 2010), deep learning models have shown competitive performance. A case study that compared deep learning and non-deep learning models, including Random Forest, on cell identity gene selection from scRNA-seq data has demonstrated superior performance of deep learning models as measured by cell type classification using selected genes (Huang et al. 2023). Nevertheless, recent research on combining ensemble learning with deep learning methodologies, referred to as ‘ensemble deep learning’ (Cao et al. 2020), has demonstrated their effectiveness in various bioinformatics applications including single-cell research (Yu et al. 2023a). We anticipate model interpretability to be an important consideration in designing these more complex architectures.

It is important to note that annotating interpretable deep learning models reviewed in this work into the four overarching categories is useful for their summarization. However, this is only intended to serve as a conceptual framework for ease of understanding the main strategies used in each method. Some methods use multiple interpretable learning techniques that can fit into more than one category, while others develop new strategies that may not precisely fit into any of these categories. Furthermore, there are additional taxonomy strategies, such as classifying model interpretability to be global and local (Allen et al. 2023), but are excluded due to their less utility in summarizing methods reviewed in this work.

Finally, the concept and definition of interpretability in machine learning are still evolving and often used interchangeably with the word ‘explainability’ (Gilpin et al. 2018). For example, methods that use simpler neural network architectures and perform linear transformation can be viewed as more interpretable. Besides improving model interpretability, methods that generate biologically meaningful results or intuitive visualization to the human eyes (Wagner et al. 2016) can also be viewed as more interpretable. For instance, in scvis, increased interpretability is defined as generating embeddings that better preserve the local and global neighbour structures in the original high-dimensional scRNA-seq data (Ding et al. 2018). While these definitions of interpretability are beyond the scope of this review, they are nonetheless important aspects that will contribute to the future development of interpretable deep learning in the single-cell omics research field.

5 Conclusion

Deep learning models, previously viewed as ‘black boxes’ for their lack of interpretability, have become increasingly interpretable due to the recent progress made in interpretable deep learning. This has stimulated a growing interest in using interpretable deep learning techniques for single-cell omics research, as the ability to identify and understand molecular regulators and networks is critical for guiding downstream experimental validations. Here, we briefly introduce the key concepts in single-cell omics technologies and interpretable deep learning techniques and then review the application of interpretable deep learning-based models to various single-cell omics data analysis tasks. Finally, we discuss current challenges and opportunities in applying interpretable deep learning to single-cell omics research.

Acknowledgements

The authors would like to thank all their colleagues, particularly those at the Sydney Precision Data Science Centre, The University of Sydney, for their intellectual engagement and constructive feedback.

Author contributions

Pengyi Yang conceptualized this work and supervised all authors to review the literature, and write and edit the manuscript. All authors read and approved the final manuscript.

Conflict of interest

None declared.

Funding

This work was supported by a National Health and Medical Research Council (NHMRC) Investigator [1173469 to P.Y.].

References

Author notes

Manoj M Wagle and Siqu Long Equal contribution