-

PDF

- Split View

-

Views

-

Cite

Cite

Jack Lanchantin, Yanjun Qi, Graph convolutional networks for epigenetic state prediction using both sequence and 3D genome data, Bioinformatics, Volume 36, Issue Supplement_2, December 2020, Pages i659–i667, https://doi.org/10.1093/bioinformatics/btaa793

Close - Share Icon Share

Abstract

Predictive models of DNA chromatin profile (i.e. epigenetic state), such as transcription factor binding, are essential for understanding regulatory processes and developing gene therapies. It is known that the 3D genome, or spatial structure of DNA, is highly influential in the chromatin profile. Deep neural networks have achieved state of the art performance on chromatin profile prediction by using short windows of DNA sequences independently. These methods, however, ignore the long-range dependencies when predicting the chromatin profiles because modeling the 3D genome is challenging.

In this work, we introduce ChromeGCN, a graph convolutional network for chromatin profile prediction by fusing both local sequence and long-range 3D genome information. By incorporating the 3D genome, we relax the independent and identically distributed assumption of local windows for a better representation of DNA. ChromeGCN explicitly incorporates known long-range interactions into the modeling, allowing us to identify and interpret those important long-range dependencies in influencing chromatin profiles. We show experimentally that by fusing sequential and 3D genome data using ChromeGCN, we get a significant improvement over the state-of-the-art deep learning methods as indicated by three metrics. Importantly, we show that ChromeGCN is particularly useful for identifying epigenetic effects in those DNA windows that have a high degree of interactions with other DNA windows.

Supplementary data are available at Bioinformatics online.

1 Introduction

The human genome includes over 3 billion base pairs (bp), each being described as A, C, G or T. Chromatin (DNA and its organizing proteins) is responsible for many regulatory processes such as controlling the expression of a certain gene. Active chromatin elements such as transcription factors (TFs) proteins binding at particular location in DNA or histone modifications (HMs) are what constitute that location’s ‘chromatin profile’ (we use the terms ‘epigenetic state' and chromatin profile interchangeably). Understanding the chromatin profile of a local region of DNA is a step toward understanding how that region influences relevant gene regulation since chromatin profile is a direct factor in regulating expression. Since biological experiments are time-consuming and expensive, computational methods that can accurately simulate and predict the chromatin profile are crucial. Modeling the chromatin profile of each bp has been a long standing challenge due to the sheer length and complexity of genome DNA. Deep neural networks have shown state-of-the-art performance in extracting useful features from segments of DNA to predict the chromatin profile (e.g. if a TF protein binds to that location or not; Alipanahi et al., 2015; Zhou and Troyanskaya, 2015). However, these methods heuristically divide DNA into local “windows” (e.g. about 1000 bp long) and predict the states of each window independently, disregarding the effects of distant windows. Due to the spatial 3D organization of chromatin in a cell, distal DNA elements (potentially over 1 million bp away) have shown to have effects on chromatin profiles (Ma et al., 2018; Mifsud et al., 2015; Rao, et al., 2014).

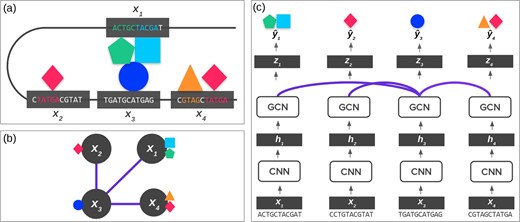

Figure 1a illustrates the importance of using both sequence and 3D genome (i.e. chromosome conformation) data. This figure shows long-range dependencies between chromatin windows, where the colored shapes represent multiple TF proteins. TFs typically bind to specific sequence patterns in DNA known as motifs (Stormo, 2000). However, a TF may also bind to a DNA window due to the presence of other TFs nearby in the 3D space because they form a protein complex (Brackley et al., 2012; Ma et al., 2018). Such a case will result in motifs far away in the 1D genome coordinate space, but nearby in the 3D space. The corresponding dependencies between the chromatin windows are illustrated by the triangle, square and circle TFs. The two segments interacting in the middle of the diagram are very far in the 1D sequence representation (represented by the gray line), but very close in the 3D representation. Similarly, a TF may not bind to a segment with its motif present due to another interfering TF nearby in the 3D space. These types of interactions are lost in data-driven prediction models that only consider local DNA segments independently.

(a) 3D Genome. The 3D shape of chromatin can lead DNA ‘windows’ (shown in gray boxes) far apart in the 1D genome space to be spatially close. These spatial interactions can influence chromatin profiles, such as TFs binding (as shown by the colored shapes). In most cases, the DNA sequence determines the chromatin profile. However, it can also be influenced by interactions, such as the formation of TF complexes shown in the middle. (b) Graph Representation of DNA. Using Hi-C data, we can represent subfigure (a) using a graph, where the lines between windows are the edges indicated by Hi-C data. (c) ChromeGCN. By using a GCN on top of convolutional outputs the model considers the known dependencies between long-range DNA windows. The lines between windows correspond to edges in Hi-C data

However, modeling these known long-range interactions between windows is difficult. Local sequence window-based prediction methods assume data samples are independent according to the commonly used independent and identically distributed (IID) assumption. Yet, the long-range dependencies existing in DNA make windows not IID.

Modeling long-range or non-local interactions, has had a long history in many areas such as natural language processing, where the label of one particular segment depends on the label of a segment far away. Recurrent neural networks (RNNs) such as Long short-term memory netoworks (LSTMs) (Hochreiter and Schmidhuber, 1997) have been used to model non-local dependencies where the model relies on the hidden state to remember the state of a token (e.g. a word) very far away. However, LSTMs are known to only remember a small number of tokens back, leading to rather ‘local’ relationship learning (Hochreiter and Schmidhuber, 1997; Vaswani et al., 2017). This drawback has lead to an increasing interest in the explicit modeling of non-local dependencies via pairwise interaction models such as transformers (Dai et al., 2019; Devlin et al., 2018; Vaswani et al., 2017).

In a related line of work, graph convolutional networks (GCNs) have been proposed to model the pairwise dependencies of nodes in graph or 3D structured data such as citation networks and point clouds (Kipf and Welling, 2016; Scarselli et al., 2008; Wang et al., 2018, Zitnik et al., 2018). This direct modeling of edges allows the network to learn non-local relationships. Although typically viewed in its 1D sequential form, DNA can be represented as 3D genome structured data via Hi-C maps, as shown in Figure 1b. Hi-C maps are matrices that give the number of contacts between two segments of DNA, and normalized Hi-C maps tell us the likelihood of two locations interacting (Ay et al., 2014). Using Hi-C data, segments of DNA can be represented as nodes on a graph, and edges are interactions between segments. Such interactions can be crucial in regulatory processes such as gene transcription (Rao et al., 2014). That is, Hi-C contacts are a direct reflection of how distant epigenetic elements interact. We hypothesize that accounting for such interactions will lead to improved chromatin profile prediction accuracy.

In this work, we propose ChromeGCN, a novel method that uses a fusion of both sequence and 3D genome data (in the form of Hi-C maps) to predict the chromatin profile of DNA segments. To the best of our knowledge, ChromeGCN is the first deep learning framework that successfully combines sequence and 3D genome data to model both local sequence features and long-range dependencies for chromatin profile prediction. ChromeGCN works by first representing DNA windows as a d-dimensional vector with a convolutional neural network (CNN) on the local window sequence. We then revise the window vector using a GCN on all window relationships from Hi-C 3D genome data. We test ChromeGCN on datasets from two cell lines where we compare against the previous state of the art chromatin profile prediction methods. We demonstrate that ChromeGCN outperforms previous methods, especially for profile labels that are highly correlated with long-range chromatin interactions.

An important aspect of ChromeGCN is that it allows us to better understand Hi-C data in the context of the chromatin profile. Hi-C maps tell us where the contacts are in the genome, but they don’t tell us important contacts for epigenetic labels. Using ChromeGCN, we propose Hi-C saliency maps to understand which Hi-C contacts are most important for chromatin profile labeling. Since ChromeGCN uses explicit long-range relationships from Hi-C data (as opposed to implicit long-range relationships using a RNN), we can easily understand the important relationships for greater interpretability.

The main contributions of this article are:

We propose ChromeGCN, a novel framework that incorporates both local sequence and long-range 3D genome data for chromatin profile prediction.

We experimentally validate the importance of ChromeGCN on two cell lines from ENCODE, showing that modeling long-range genome dependencies is critically important.

We introduce Hi-C saliency maps, a method to identify the important long-range interactions for chromatin profile prediction from Hi-C data.

2 Background and related work

2.1 Predicting chromatin profile using machine learning

Computational models for accurately predicting chromatin profile labels from DNA sequence have gained popularity in recent years due to the urgency of the task for many applications. For instance, predicting how epigenetic effects vary when variants in DNA occur. The importance of computational modeling arises from the low cost and high speed in comparison to biological lab experiments.

One class of methods for state prediction used generative models in the form of position weight matrices (PWM; Stormo, 2000). These methods construct motifs, or short contiguous sequences (often 8–20 bp in length), which are representative of a particular chromatin profile label such as a TF binding. A new sequence can then be classified according to how well it matches the motif. A significant drawback of using predefined motif features is that it is difficult to find the correct motifs for predicting unseen sequences (Alipanahi et al., 2015). Another class of methods uses string kernels (SKs; Ghandi et al., 2014; Singh et al., 2017), where some kernel function is built to capture the similarity between DNA segments according to substring patterns. However, these methods suffer from the issue of a predefined feature engineering. Moreover, these methods do not scale to a large number of sequences (Zhou and Troyanskaya 2015).

To overcome the issues of PWM and SK methods, researchers turned to automatic feature extraction using deep neural networks which have outperformed both generative PWM and SK methods (Alipanahi et al., 2015; Zhou and Troyanskaya 2015). CNNs were the first deep learning method to outperform previous methods. CNNs have been used extensively to learn features of DNA for sequence-based prediction (Alipanahi et al., 2015; Hassanzadeh and Wang, 2016; Lanchantin et al., 2016; Zhou and Troyanskaya 2015; Zhou et al., 2018). The benefit of convolutional models is that they have an inductive bias for modeling translation invariant features in DNA sequences. This allows CNNs to effectively learn the correct ‘motifs’ or kernels for chromatin labeling. There has since been several revisions to the original CNN models for marginally better feature extraction, such as adding a recurrent network on top of the CNN motif features (Lanchantin et al., 2017; Quang and Xie, 2016).

However, current state-of-the-art models only learn the features from the sequences of individual local windows and not between windows (i.e. longer-range interactions). Since DNA interacts with itself in the form of long-range 3D contacts, labeling the chromatin profiles of a window can be affected by another distant window. (Kelley et al., 2018) use longer-range dependencies (32 bp), but the dependencies are modeled implicitly using dilated convolution across 128 bp windows. Accordingly, methods that account for explicit long-range 3D chromatin contacts are needed to model the true interactions in DNA.

2.2 DNA interactions via Hi-C maps

Hi-C experiments and 3C experiments in general, are biological methods used to analyze the spatial organization of chromatin in a cell. These methods quantify the number of interactions between genomic loci. Two loci that are close in 3D space due to chromatin folding may be separated by up to millions of nucleotides in the sequential genome. Such interactions may result from biological functions, such as protein interactions (Hakim and Misteli, 2012). Supplementary Appendix Figure SA10 shows an example Hi-C map from the GM12878 cell line. The darker the lines indicate more DNA-DNA interactions.

Since the first Hi-C maps were generated, many works have been introduced to analyze the maps (Ma et al., 2018) investigated the spatial relationships of co-localized TF binding sites (TFBS) within the 3D genome. They show that for certain TFs, there is a positive correlation of occupied binding sites with their spatial proximity in the 3D space. This is especially apparent for weak TFBS and at enhancer regions (Bailey et al., 2015) identified that the ZNF143 TF motif in the promoter regions provides sequence specificity for long-range promoter–enhancer interactions (Wong, 2017) identified coupling DNA motif pairs on long-range chromatin interactions (Schreiber et al., 2018) use CNNs to predict Hi-C interactions from sequence inputs. None of the previous methods, however, use known Hi-C data to learn better feature representations of genomic sequences for chromatin profile prediction.

2.3 Graph convolutional networks

GCNs were recently introduced to model non-local or -smooth data (Dai et al., 2016; Gilmer et al., 2017; Hamilton et al., 2017; Kipf and Welling, 2016; Scarselli et al., 2008; Veličković et al., 2017). For the task of node classification, GCNs can learn useful node representations which encode both node-level features and relationships between connected nodes. Essentially, GCNs learn node representations by encoding local graph structures and node attributes, and the whole framework can be trained in an end-to-end fashion. Because of their effectiveness in learning graph representations, they achieve state-of-the-art results in node classification. The main assumption is that the input samples (in our case, individual DNA windows) are not independent. By modeling the graph dependency between samples, we can obtain a better representation of each of the samples. Non-local neural networks (Wang et al., 2018) are an instantiation of graph convolution, which was designed to model the long-range interactions in video frames.

3 Problem formulation and data processing

The objective of chromatin profile prediction (i.e. chromatin effect prediction) is to tag segments of DNA with the probability of how likely a certain chromatin effect (aka chromatin profile label) is present. In our formulation, we define chromatin profile labels to include TF binding, HMs and accessibility (DNase I). This is known as a multi-label classification task, where multiple labels can be positive at once (different from multi-class tasks where only one label can be positive). Formally, given an input DNA window (a segment of length T), we want to predict for a label l, where l ranges from 1 to L.

3.1 Sequence data

We derive epigenetic labels using ChIP-seq data from ENCODE (ENCODE Project Consortium, 2004). We use the cell lines GM12878 and K562, two of the most widely used from ENCODE and Roadmap (ENCODE Project Consortium, 2004; Kundaje et al., 2015). For each cell line, we use all windows which have at least one positive epigenetic ChIP-seq peak. We consider any peak from ENCODE to be a positive peak. We follow a similar setup as in (Zhou and Troyanskaya, 2015) where we bin the DNA into 1000 bp windows. If any ChIP-seq peak overlaps with at least 100 bp of a particular window, we consider that a positive window for that chromatin label. We then extract the 2000 bp sequence surrounding the center of each window as the input features, as done in Zhou et al. (2018), since the motif for a particular signal may not be contained fully in the 1000 bp length window. Although we use the 2000 bp sequence, we consider each window to be the original non-overlapping 1000 bp for notation purposes. An illustration of how sequences are extracted is shown in Supplementary Appendix Figure SA4a. Following Zhou and Troyanskaya (2015), we use chromosome 8 for testing and also add Chromosomes 1 and 21. Chromosomes 3, 12 and 17 are used for validation, and all other chromosomes (excluding X and Y) are used for training. The datasets are summarized in Table 1.

| Cell line . | Train windows . | Valid windows . | Test windows . | TFs . | HMs . | DNase I . |

|---|---|---|---|---|---|---|

| GM12878 | 368 082 | 89 911 | 79 731 | 90 | 11 | 2 |

| K562 | 457 609 | 106 777 | 117 815 | 150 | 12 | 2 |

| Cell line . | Train windows . | Valid windows . | Test windows . | TFs . | HMs . | DNase I . |

|---|---|---|---|---|---|---|

| GM12878 | 368 082 | 89 911 | 79 731 | 90 | 11 | 2 |

| K562 | 457 609 | 106 777 | 117 815 | 150 | 12 | 2 |

Note: GM12878 contains 103 total chromatin profile labels, and K562 contains 164 total labels. We use the same chromosomes for training, validation, and testing for both datasets.

| Cell line . | Train windows . | Valid windows . | Test windows . | TFs . | HMs . | DNase I . |

|---|---|---|---|---|---|---|

| GM12878 | 368 082 | 89 911 | 79 731 | 90 | 11 | 2 |

| K562 | 457 609 | 106 777 | 117 815 | 150 | 12 | 2 |

| Cell line . | Train windows . | Valid windows . | Test windows . | TFs . | HMs . | DNase I . |

|---|---|---|---|---|---|---|

| GM12878 | 368 082 | 89 911 | 79 731 | 90 | 11 | 2 |

| K562 | 457 609 | 106 777 | 117 815 | 150 | 12 | 2 |

Note: GM12878 contains 103 total chromatin profile labels, and K562 contains 164 total labels. We use the same chromosomes for training, validation, and testing for both datasets.

3.2 3D Genome data

We then use 3D genome, also known as chromatin conformation, data from Hi-C contact maps to extract interaction evidence between the DNA windows. We use 1000 bp resolution intra-chromosome Hi-C data from (Rao et al., 2014; for K562, the lowest resolution is 5000 bp, so we upsample to get 1000 bp resolution). We convert the Hi-C contact map for each chromosome into a graph whose nodes are 1000 bp DNA windows and whose edges represent contact between two 1000 bp windows. Since the full Hi-C contact for each chromosome is too dense, we rank each contact edge, and use the top 500 000 Hi-C contacts as edges per chromosome (each chromosome maps to a Hi-C graph). Contacts are ranked using the SQRTVC normalization from (Rao et al., 2014), which normalizes for the distance between two positions so that long-range contacts are included in the top 500 k. While we chose these specific DNA window and Hi-C resolution sizes, we want to emphasize that our model is applicable to any window and Hi-C data sizes.

4 Materials and methods

Our goal is to learn a model f which takes in a DNA subsequence window and predicts the probability of a set of epigenetic labels , where is an L dimensional vector. Our method, ChromeGCN uses three submodules for f: fCNN, fGCN and fPred. The first module, fCNN, models local sequence patterns from each window using a CNN. This module takes as input and outputs a vector representation . The second module, fGCN, models long-range 3D genome dependencies between windows using a GCN. This module takes as input all window vectors concatenated as H, as well as their Hi-C relationships represented by adjacency matrix A, and outputs refined representations of all windows . The of each window now encodes both window sequence patterns and the relationships between windows. We can then predict the epigenetic labels using a classifier function on each using . An overview of ChromeGCN is shown in Figure 1c. The following sections explain each submodule in detail.

4.1 Modeling local sequence representations using CNNs

Following the recent successes in many epigenetic label prediction tasks (Alipanahi et al., 2015; Lanchantin et al., 2017; Quang and Xie, 2016 ; Zhou and Troyanskaya, 2015; Zhou et al., 2018), we learn a representation of each genomic window sequence using a CNN. CNNs have become the de facto standard for encoding short DNA windows due to their properties, which effectively capture local sequence structure. Each learned kernel, or filter, in CNNs effectively learns a DNA ‘motif’, or short contiguous sequence representative of a particular output label (Alipanahi et al., 2015). Since many epigenetic processes are hypothesized to be dependent on motifs (Bailey et al., 2015), CNNs are a good choice for encoding DNA.

Equation (1) can then be repeated for several layers where each successive layer uses a new W and nin is replaced with nout from the previous layer. In our implementation, we use six layers of convolution where each successive layer learns higher-order motifs of the window. After the convolutional layers, the output of the last layer is flattened into a vector and then linearly transformed into a lower-dimensional vector of size d, which we denote . Succinctly, the CNN module computes the following: for each window.

4.2 Modeling long-range 3D genome relationships using GCNs

Although CNN models work well on independent local window sequences, they disregard known long-range relationships between windows that are influential in the chromatin profile. One option would be to extend the window size. However, due to the 3D shape of DNA, long-range contact dependencies may be located millions of base-pairs apart, making current convolutional models infeasible. In this section, we introduce the fGCN module, a method to explicitly and efficiently model such long-range interactions using GCNs.

4.3 Predicting label probabilities for each window

4.4 Identifying and interpreting important Hi-C edges via Hi-C saliency maps

While Hi-C contact maps tell us where the contacts are, Hi-C saliency maps show us how important each contact is for the chromatin profiles. We define Equation (7) to be over all labels, but we can easily visualize the Hi-C saliency, or important edges for one particular label. We show both the full Hi-C saliency across all labels, as well as for one specific label (YY1) in the experiments.

4.5 Model variations

To test the effectiveness of the long-range dependencies, we use the following ChromeGCN variations. Each variation uses the same model, with different edge dependencies in the form of A.

ChromeGCNconst: Instead of using Hi-C edges we use a constant set of nearby neighbors according to the 1D sequential DNA representation. We define each window ’s neighbors to be the windows surrounding (7 on each side: ) which we denote as . . This variant allows us to see whether the very long-range interactions from the normalized Hi-C maps are useful.

ChromeGCN: This variation uses the original Hi-C adjacency matrix, . .

Hi-C contacts are close neighboring contacts. However, by using top 500k contacts after the SQRT normalization for the Hi-C graph, we reduced some of this locality bias in the graph. This results in many of the edges being far away in the 1D space. This allows us to decouple the effects of local neighboring contacts (constant) and long-range (normalized Hi-C) contacts.

ChromeGCN: Last, we use a combination of the constant neighborhood around each window and the Hi-C adjacency matrix, which integrates close and far windows for each window. This variation uses the following function: .

4.6 Model details and training

To circumvent GPU memory constraints of training end-to-end, we pre-train the fCNN model by classifying each with the classification function . Once the pre-training converges on the training set, we use the trained weights for each sample as fixed inputs to fGCN. Although we pre-train fCNN, ChromeGCN is still end-to-end differentiable, making it possible to use sequence visualization methods such as DeepLIFT (Shrikumar et al., 2017) for a particular window.

For all model predictions, we run the forward and the reverse complement through simultaneously and average the output of the two. All DNA window inputs are encoded using a lookup table that maps each character A, C, G, T and N (unknown) to a d-dimensional vector. The output of the encoding is a matrix, where τ denotes sequence length (τ = 2000 in our experiments).

All of our models are trained using stochastic gradient descent with momentum of 0.9 and a learning rate of 0.25. The CNN model is trained using a batch size of 64, and the GCN and RNN models are trained using an entire chromosome as a batch (since each is modeling the between window dependencies of an entire chromosome at once). The CNN model projects each window to a vector of dimension 128. The GCN uses two layers of feature dimension 128 at each layer.

5 Experiments and results

5.1 Baselines

We compare against the state-of-the-art chromatin profile prediction model from (Zhou et al., 2018), referred to as the CNN baseline, as well as the recurrent model from (Quang and Xie, 2016). Since our model outputs labels for TFBS, HMs and accessibility, motif-based methods (Bailey et al., 2009) aren’t applicable. Since we have 368 082 training samples, kernel-based methods such as (Ghandi et al., 2014) aren’t applicable (Zhou and Troyanskaya, 2015) compared their CNN to a modified version of (Ghandi et al., 2014), which only used a small number of training samples, and the CNN model was significantly better. Our CNN baseline, (Zhou et al., 2018) is an improved version from Zhou and Troyanskaya (2015). Furthermore, the focus of our study is to show that state-of-the-art deep learning models are missing important long-range dependencies in the genome.

CNN: To illustrate the importance of the GCN, we compare against the outputs from the fCNN module: . This is the six-layer CNN model from Zhou et al. (2018; we modify the last layer in order to extract a d-dimensional feature vector output). This is the same CNN that is pre-trained for ChromeGCN to produce each .

DanQ: This model uses a RNN on top of CNN outputs within a window. It still uses local sequence window inputs, but models relationships between sequence patterns via an LSTM (Quang and Xie, 2016).

ChromeRNN: As a baseline to compare against using GCNs for long-range dependency modeling, we construct an RNN model on the window embeddings . After pre-training the CNN module fCNN, The RNN model takes in all window embeddings at once and models the sequential dependencies among windows: . As with ChromeGCN, the RNN is shared across chromosomes, but does not cross chromosomes. In other words, the embeddings are updated one chromosome at a time , where C is the total number of windows for a chromosome. We note this is different from the DanQ baseline (Quang and Xie, 2016) which uses an RNN within windows (Quang and Xie, 2016). ChromeRNN instead is for modeling dependencies between windows. In our experiments, we use an LSTM (Quang and Xie, 2016) with the same number of layers and hidden units as the GCN.

5.2 Prediction performance

To evaluate the methods, we use area under the ROC curve (AUROC), area under the precision-recall curve (AUPR), and the mean recall at 50% false discovery rate (FDR) cutoff. Table 2 shows the mean metric results across all epigenetic labels for each cell line. Modeling long-range dependencies results in significant improvements over the baseline CNN model, which does not account for such long-range interactions. For instance, with respect to AUPR for GM12878, ChromeRNN improves upon the CNN from 0.350 to 0.384 and ChromeGCN outperforms ChromeRNN to achieve a mean AUPR of 0.395. Also, we see that the ChromeRNN outperforms DanQ, indicating that using an RNN to model between window dependencies is more important than within window features. Moreover, we can see that the ChromeGCN models outperform ChromeRNN, indicating that not only the closely neighboring windows in the 1D space contribute to the improvements, but also the close neighbors in the 3D space, as indicated by the used Hi-C maps. ChromeGCN outperforms the baselines on the TF and DNase I labels, and ChromeRNN outperforms all other methods on the HM labels. This indicates that non-local modeling is particularly important for TF binding and accessibility. We provide the performance results of each label type (TF, HM and DNase I) are shown in Tables 3 and 4. We also provide detailed plots of ROC and precision-recall curves in Supplementary Appendix Figures SA5–8.

| . | GM12878 . | K562 . | ||||

|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.895 | 0.350 | 0.293 | 0.894 | 0.325 | 0.265 |

| DanQ (Quang and Xie, 2016) | 0.886 | 0.348 | 0.290 | 0.900 | 0.343 | 0.290 |

| ChromeRNN | 0.906 | 0.384 | 0.342 | 0.910 | 0.365 | 0.327 |

| ChromeGCNconst | 0.904 | 0.377 | 0.331 | 0.904 | 0.358 | 0.321 |

| ChromeGCN | 0.904 | 0.385 | 0.341 | 0.903 | 0.358 | 0.319 |

| ChromeGCN | 0.909 | 0.395 | 0.356 | 0.912 | 0.372 | 0.338 |

| . | GM12878 . | K562 . | ||||

|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.895 | 0.350 | 0.293 | 0.894 | 0.325 | 0.265 |

| DanQ (Quang and Xie, 2016) | 0.886 | 0.348 | 0.290 | 0.900 | 0.343 | 0.290 |

| ChromeRNN | 0.906 | 0.384 | 0.342 | 0.910 | 0.365 | 0.327 |

| ChromeGCNconst | 0.904 | 0.377 | 0.331 | 0.904 | 0.358 | 0.321 |

| ChromeGCN | 0.904 | 0.385 | 0.341 | 0.903 | 0.358 | 0.319 |

| ChromeGCN | 0.909 | 0.395 | 0.356 | 0.912 | 0.372 | 0.338 |

Note: For both cell lines, GM12878 and K562, we show the average across all labels for three different metrics. Our method, using a GCN to model long-range dependencies helps improve performance over the baseline CNN model which assumes all DNA segments are independent. Detailed results of all cell lines and label types are shown in the Supplementary Appendix. Best performing methods are shown in bold.

| . | GM12878 . | K562 . | ||||

|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.895 | 0.350 | 0.293 | 0.894 | 0.325 | 0.265 |

| DanQ (Quang and Xie, 2016) | 0.886 | 0.348 | 0.290 | 0.900 | 0.343 | 0.290 |

| ChromeRNN | 0.906 | 0.384 | 0.342 | 0.910 | 0.365 | 0.327 |

| ChromeGCNconst | 0.904 | 0.377 | 0.331 | 0.904 | 0.358 | 0.321 |

| ChromeGCN | 0.904 | 0.385 | 0.341 | 0.903 | 0.358 | 0.319 |

| ChromeGCN | 0.909 | 0.395 | 0.356 | 0.912 | 0.372 | 0.338 |

| . | GM12878 . | K562 . | ||||

|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.895 | 0.350 | 0.293 | 0.894 | 0.325 | 0.265 |

| DanQ (Quang and Xie, 2016) | 0.886 | 0.348 | 0.290 | 0.900 | 0.343 | 0.290 |

| ChromeRNN | 0.906 | 0.384 | 0.342 | 0.910 | 0.365 | 0.327 |

| ChromeGCNconst | 0.904 | 0.377 | 0.331 | 0.904 | 0.358 | 0.321 |

| ChromeGCN | 0.904 | 0.385 | 0.341 | 0.903 | 0.358 | 0.319 |

| ChromeGCN | 0.909 | 0.395 | 0.356 | 0.912 | 0.372 | 0.338 |

Note: For both cell lines, GM12878 and K562, we show the average across all labels for three different metrics. Our method, using a GCN to model long-range dependencies helps improve performance over the baseline CNN model which assumes all DNA segments are independent. Detailed results of all cell lines and label types are shown in the Supplementary Appendix. Best performing methods are shown in bold.

| . | TFs . | HMs . | DNase I . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.909 | 0.328 | 0.262 | 0.796 | 0.478 | 0.469 | 0.801 | 0.657 | 0.750 |

| DanQ (Quang and Xie, 2016) | 0.899 | 0.325 | 0.260 | 0.794 | 0.479 | 0.463 | 0.792 | 0.650 | 0.723 |

| ChromeRNN | 0.914 | 0.354 | 0.299 | 0.859 | 0.574 | 0.610 | 0.821 | 0.689 | 0.787 |

| ChromeGCNconst | 0.913 | 0.348 | 0.291 | 0.849 | 0.554 | 0.58 | 0.815 | 0.68 | 0.777 |

| ChromeGCN | 0.916 | 0.362 | 0.309 | 0.819 | 0.516 | 0.528 | 0.814 | 0.683 | 0.774 |

| ChromeGCN | 0.918 | 0.369 | 0.319 | 0.845 | 0.552 | 0.584 | 0.820 | 0.692 | 0.783 |

| . | TFs . | HMs . | DNase I . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.909 | 0.328 | 0.262 | 0.796 | 0.478 | 0.469 | 0.801 | 0.657 | 0.750 |

| DanQ (Quang and Xie, 2016) | 0.899 | 0.325 | 0.260 | 0.794 | 0.479 | 0.463 | 0.792 | 0.650 | 0.723 |

| ChromeRNN | 0.914 | 0.354 | 0.299 | 0.859 | 0.574 | 0.610 | 0.821 | 0.689 | 0.787 |

| ChromeGCNconst | 0.913 | 0.348 | 0.291 | 0.849 | 0.554 | 0.58 | 0.815 | 0.68 | 0.777 |

| ChromeGCN | 0.916 | 0.362 | 0.309 | 0.819 | 0.516 | 0.528 | 0.814 | 0.683 | 0.774 |

| ChromeGCN | 0.918 | 0.369 | 0.319 | 0.845 | 0.552 | 0.584 | 0.820 | 0.692 | 0.783 |

Note: Best performing methods are shown in bold.

| . | TFs . | HMs . | DNase I . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.909 | 0.328 | 0.262 | 0.796 | 0.478 | 0.469 | 0.801 | 0.657 | 0.750 |

| DanQ (Quang and Xie, 2016) | 0.899 | 0.325 | 0.260 | 0.794 | 0.479 | 0.463 | 0.792 | 0.650 | 0.723 |

| ChromeRNN | 0.914 | 0.354 | 0.299 | 0.859 | 0.574 | 0.610 | 0.821 | 0.689 | 0.787 |

| ChromeGCNconst | 0.913 | 0.348 | 0.291 | 0.849 | 0.554 | 0.58 | 0.815 | 0.68 | 0.777 |

| ChromeGCN | 0.916 | 0.362 | 0.309 | 0.819 | 0.516 | 0.528 | 0.814 | 0.683 | 0.774 |

| ChromeGCN | 0.918 | 0.369 | 0.319 | 0.845 | 0.552 | 0.584 | 0.820 | 0.692 | 0.783 |

| . | TFs . | HMs . | DNase I . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.909 | 0.328 | 0.262 | 0.796 | 0.478 | 0.469 | 0.801 | 0.657 | 0.750 |

| DanQ (Quang and Xie, 2016) | 0.899 | 0.325 | 0.260 | 0.794 | 0.479 | 0.463 | 0.792 | 0.650 | 0.723 |

| ChromeRNN | 0.914 | 0.354 | 0.299 | 0.859 | 0.574 | 0.610 | 0.821 | 0.689 | 0.787 |

| ChromeGCNconst | 0.913 | 0.348 | 0.291 | 0.849 | 0.554 | 0.58 | 0.815 | 0.68 | 0.777 |

| ChromeGCN | 0.916 | 0.362 | 0.309 | 0.819 | 0.516 | 0.528 | 0.814 | 0.683 | 0.774 |

| ChromeGCN | 0.918 | 0.369 | 0.319 | 0.845 | 0.552 | 0.584 | 0.820 | 0.692 | 0.783 |

Note: Best performing methods are shown in bold.

| . | TFs . | HMs . | DNase I . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.905 | 0.314 | 0.256 | 0.78 | 0.410 | 0.317 | 0.792 | 0.625 | 0.683 |

| DanQ (Quang and Xie, 2016) | 0.909 | 0.331 | 0.279 | 0.796 | 0.438 | 0.365 | 0.797 | 0.638 | 0.693 |

| ChromeRNN | 0.917 | 0.349 | 0.305 | 0.848 | 0.520 | 0.528 | 0.817 | 0.664 | 0.742 |

| ChromeGCNconst | 0.911 | 0.342 | 0.301 | 0.84 | 0.507 | 0.507 | 0.810 | 0.657 | 0.729 |

| ChromeGCN | 0.912 | 0.346 | 0.306 | 0.807 | 0.454 | 0.412 | 0.812 | 0.657 | 0.730 |

| ChromeGCN | 0.919 | 0.358 | 0.320 | 0.837 | 0.495 | 0.484 | 0.821 | 0.67 | 0.752 |

| . | TFs . | HMs . | DNase I . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.905 | 0.314 | 0.256 | 0.78 | 0.410 | 0.317 | 0.792 | 0.625 | 0.683 |

| DanQ (Quang and Xie, 2016) | 0.909 | 0.331 | 0.279 | 0.796 | 0.438 | 0.365 | 0.797 | 0.638 | 0.693 |

| ChromeRNN | 0.917 | 0.349 | 0.305 | 0.848 | 0.520 | 0.528 | 0.817 | 0.664 | 0.742 |

| ChromeGCNconst | 0.911 | 0.342 | 0.301 | 0.84 | 0.507 | 0.507 | 0.810 | 0.657 | 0.729 |

| ChromeGCN | 0.912 | 0.346 | 0.306 | 0.807 | 0.454 | 0.412 | 0.812 | 0.657 | 0.730 |

| ChromeGCN | 0.919 | 0.358 | 0.320 | 0.837 | 0.495 | 0.484 | 0.821 | 0.67 | 0.752 |

Note: Best performing methods are shown in bold.

| . | TFs . | HMs . | DNase I . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.905 | 0.314 | 0.256 | 0.78 | 0.410 | 0.317 | 0.792 | 0.625 | 0.683 |

| DanQ (Quang and Xie, 2016) | 0.909 | 0.331 | 0.279 | 0.796 | 0.438 | 0.365 | 0.797 | 0.638 | 0.693 |

| ChromeRNN | 0.917 | 0.349 | 0.305 | 0.848 | 0.520 | 0.528 | 0.817 | 0.664 | 0.742 |

| ChromeGCNconst | 0.911 | 0.342 | 0.301 | 0.84 | 0.507 | 0.507 | 0.810 | 0.657 | 0.729 |

| ChromeGCN | 0.912 | 0.346 | 0.306 | 0.807 | 0.454 | 0.412 | 0.812 | 0.657 | 0.730 |

| ChromeGCN | 0.919 | 0.358 | 0.320 | 0.837 | 0.495 | 0.484 | 0.821 | 0.67 | 0.752 |

| . | TFs . | HMs . | DNase I . | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | Mean AUROC . | Mean AUPR . | Mean Recall at 50% FDR . | |

| CNN (Zhou et al., 2018) | 0.905 | 0.314 | 0.256 | 0.78 | 0.410 | 0.317 | 0.792 | 0.625 | 0.683 |

| DanQ (Quang and Xie, 2016) | 0.909 | 0.331 | 0.279 | 0.796 | 0.438 | 0.365 | 0.797 | 0.638 | 0.693 |

| ChromeRNN | 0.917 | 0.349 | 0.305 | 0.848 | 0.520 | 0.528 | 0.817 | 0.664 | 0.742 |

| ChromeGCNconst | 0.911 | 0.342 | 0.301 | 0.84 | 0.507 | 0.507 | 0.810 | 0.657 | 0.729 |

| ChromeGCN | 0.912 | 0.346 | 0.306 | 0.807 | 0.454 | 0.412 | 0.812 | 0.657 | 0.730 |

| ChromeGCN | 0.919 | 0.358 | 0.320 | 0.837 | 0.495 | 0.484 | 0.821 | 0.67 | 0.752 |

Note: Best performing methods are shown in bold.

Furthermore, since ChromeGCN models the window relationships explicitly and not recurrently, we obtain a significant speedup at test time over the ChromeRNN baseline. ChromeGCN achieves a 6.3× speedup on the three GM12878 test chromosomes and 6.8× speedup at test time on the three K562 test chromosomes.

5.3 Analysis of using Hi-C data

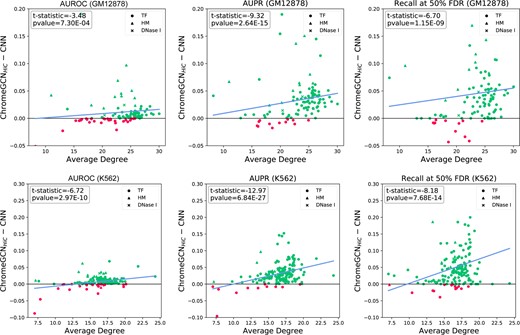

Figure 2 shows a detailed comparison of ChromeGCN versus the baseline CNN model across three different metrics for both GM12878 and K562. Each point represents a label, and the y-axis shows the absolute improvement of the ChromeGCN model over the CNN. The labels are sorted on the x-axis by the average degree of the label’s positive samples (i.e. windows where the label is positive) on the Hi-C map. We can see that for all three metrics, the improvements of the ChromeGCN over the CNN increase as the average degree of the labels increase. This indicates that the ChromeGCN is important for labels that have many neighbors in the Hi-C graph (i.e. those that are frequently in contact with other segments in the 3D space). Two of the TFs which obtain the highest performance increase (in the top 5) from using ChromeGCN over CNN, CEBPB STAT3, are validated by Ma et al. (2018), which show that these two TFs commonly co-occur with other TFs in the 3D space when binding.

Comparison of our ChromeGCN method versus the baseline CNN (Zhou et al., 2018) for three metrics. Each point represents one chromatin profile label. The labels are sorted in the x-axis by the average degree of their positive windows. The y-axis indicates absolute increase of the ChromeGCN over the CNN model. As the average degree increases, the improvement of the ChromeGCN model increases over the CNN. Green points indicate ChromeGCN performed better and red indicates the CNN performed better. The blue line shows the linear trend line. The ChromeGCN is significantly better, as demonstrated by the P values from a pairwise t-test

The P-values shown are computed by a pairwise t-test across all labels. The ChromeGCN model significantly outperforms the CNN model in all three metrics. Importantly, our results indicate that by using the long-range interactions given by Hi-C data, we can obtain improvements in modeling the chromatin state labeling, resulting in better classification accuracy.

5.4 Long-range interaction visualization

A significant merit of ChromeGCN is that by using known 3D genome relationships, we can find and visualize the critical relationships for chromatin profile prediction. To understand how important the Hi-C edges are for the predictions of ChromeGCN, we visualize the saliency map of , as explained in Section 4.4.

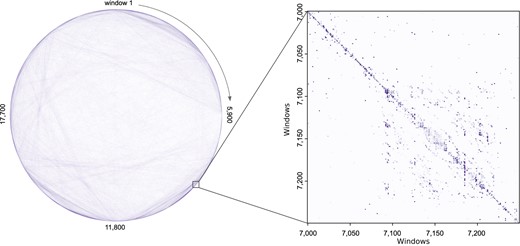

Figure 3 shows the Hi-C saliency map for Chromosome 8 in GM12878. Figure 3 (left) shows all 500k Hi-C contacts used Chromosome 8. Windows are represented as points along the circle, with a total of 23 600 windows. Lines between the windows represent Hi-C edges, and the darkness of the line represents the saliency, or importance of that edge for chromatin state prediction across all windows in Chromosome 8. Figure 3 (right) shows the saliency map for 250 windows (total of 250 bp input) in Chromosome 8. Cell (i, j) tells us the importance of window column j for the prediction of window row i labels (Fig. 4).

Hi-C Saliency Map Visualization. Left: Saliency Map for the all 500k edges in for GM12878 Chromosome 8 (total of 23 600 windows). The darker the line, the more important that edge was for predicting the correct Chromatin profile, indicating that the Hi-C data were used by the GNN for that particular interaction. Right: Fine-grained analysis of the Chromosome 8 Saliency Map. This figure shows the normalized Saliency Map values for 250 windows (total of 250 bp input) in Chromosome 8

Although Figure 3 shows the Hi-C saliency map for all chromatin profile labels, we can also visualize the Hi-C saliency map for individual labels. The inner loop of Equation (7) changes to only use the label of interest. In Supplementary Appendix Figure SA9, we show the Hi-C saliency map for the YY1 TF on GM12878 Chromosome 8. This gives us insight into the important 3D contacts for YY1 binding.

6 Conclusion

In this work, we present ChromeGCN, a novel framework that combines both local sequence and long-range 3D genome data (via Hi-C data) for chromatin profile prediction. We show experimentally that ChromeGCN outperforms previous state-of-the-art methods that only use local sequence data. Additionally, we show that we can identify and visualize the important 3D genome dependencies using the proposed Hi-C saliency maps. We plan to further investigate the value of Hi-C saliency maps in future work.

In this work, we demonstrate our ChromeGCN on two well-known cell types. However, it is important to be able to port out method to other cell types. Fortunately, our method is broadly generalizable for any cell type where there is known epigenetics and HiC data. Additionally users can use any cell type which has HiC alike data (e.g. HiChIP or ChIA-PET). This is an important distinction of our work—all the user needs is data which denotes where the long-range relationships are in the genome. Furthermore, if a cell type does not have any training labels, we can use the genomic features and graph of a related cell type and perform transfer learning to predict on the new cell type. We plan to extend our work into this domain.

Although we demonstrate the importance of ChromeGCN on the task of chromatin profile prediction, ChromeGCN is a generic model for incorporating 3D genome structure into any genome sequence prediction task. We hope that this work encourages researchers to use known long-range relationships from 3D genome data when constructing machine learning models. We plan to release our data and code for greater visibility. ChromeGCN introduces an effective and efficient framework to model such relationships for better chromatin modeling, as well as an easy way to interpret important relationships.

Financial Support: This work was partly supported by the National Science Foundation under NSF CAREER award No. 1453580. Any Opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect those of the National Science Foundation.

Conflict of Interest: none declared.

References

ENCODE Project Consortium. (

Wikipedia contributors. (