-

PDF

- Split View

-

Views

-

Cite

Cite

Xiao Cai, Hua Wang, Heng Huang, Chris Ding, Joint stage recognition and anatomical annotation of drosophila gene expression patterns, Bioinformatics, Volume 28, Issue 12, June 2012, Pages i16–i24, https://doi.org/10.1093/bioinformatics/bts220

Close - Share Icon Share

Abstract

Motivation: Staining the mRNA of a gene via in situ hybridization (ISH) during the development of a Drosophila melanogaster embryo delivers the detailed spatio-temporal patterns of the gene expression. Many related biological problems such as the detection of co-expressed genes, co-regulated genes and transcription factor binding motifs rely heavily on the analysis of these image patterns. To provide the text-based pattern searching for facilitating related biological studies, the images in the Berkeley Drosophila Genome Project (BDGP) study are annotated with developmental stage term and anatomical ontology terms manually by domain experts. Due to the rapid increase in the number of such images and the inevitable bias annotations by human curators, it is necessary to develop an automatic method to recognize the developmental stage and annotate anatomical terms.

Results: In this article, we propose a novel computational model for jointly stage classification and anatomical terms annotation of Drosophila gene expression patterns. We propose a novel Tri-Relational Graph (TG) model that comprises the data graph, anatomical term graph, developmental stage term graph, and connect them by two additional graphs induced from stage or annotation label assignments. Upon the TG model, we introduce a Preferential Random Walk (PRW) method to jointly recognize developmental stage and annotate anatomical terms by utilizing the interrelations between two tasks. The experimental results on two refined BDGP datasets demonstrate that our joint learning method can achieve superior prediction results on both tasks than the state-of-the-art methods.

Availability: http://ranger.uta.edu/%7eheng/Drosophila/

Contact: [email protected]

1 INTRODUCTION

The mRNA in situ hybridization (ISH) provides an effective way to visualize gene expression patterns. The ISH technique can precisely document the localization of gene expression at the cellular level via visualizing the probe by colorimetric or fluorescent microscopy to allow the production of high quality images recording the spatial location and intensity of the gene expression (Fowlkes et al., 2008; Hendriks et al., 2006; L'ecuyer et al., 2007; Megason and Fraser, 2007). Such spatial and temporal characterizations of expressions paved the way for inferring regulatory networks based on spatio-temporal dynamics. The raw data produced from such experiments includes digital images of the Drosophila embryo (examples are visualized in Fig. 1) showing a particular gene expression pattern revealed by a gene-specific probe (Grumbling et al., 2006; Lyne et al., 2007; Tomancak et al., 2002, 2007). The fruit fly Drosophila melanogaster is one of the most used model organisms in developmental biology.

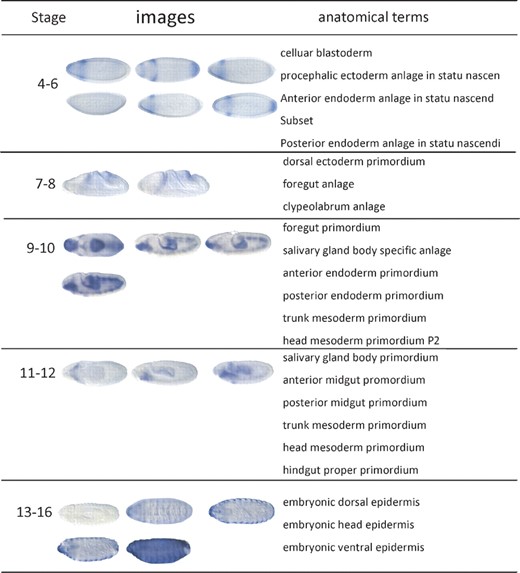

Examples of Drosophila embryo ISH images and associated anatomical annotation terms in the stages 4–6, 7–8, 9–10, 11–12 and 13–16 in the BDGP database. The darker stained region highlights the place where the gene is expressed. The darker color the region has, the higher the gene expression level is

Traditionally, such ISH images are analyzed directly by the inspection of microscope images and available from well-known databases, such as the Berkeley Drosophila Genome Project (BDGP) gene expression pattern database (Tomancak et al., 2002, 2007) and Fly-FISH (L'ecuyer et al., 2007). To facilitate spatio-temporal Drosophila gene expression pattern studies, researchers needed to solve two challenging tasks first: Drosophila gene expression pattern stage recognition (temporal descriptions) and anatomical annotation (spatial descriptions). As shown in Figure 1, Drosophila embryogenesis has been subdivided into 17 embryonic stages. These stages are defined by prominent features that are distinguishable in living Drosophila embryos (Weigmann et al., 2003). To recognize the stages of the Drosophila, embryos provide their time course patterns. On the other hand, the Drosophila gene expression patterns are often recorded by controlled vocabularies from the biologist's perspective (Tomancak et al., 2002). Such anatomical ontology terms describe the spatial biological patterns and often cross stages. What is more, because the ISH images are attached to each other collectively becoming bags of images, the corresponding stage label as well as anatomical controlled terms are the descriptions of the whole group of images instead of each individual image inside the bag. A Drosophila embryo ISH image bag belongs to only one stage, but has multiple related anatomical terms. Previously, those two tasks are tackled by domain experts. However, due to the rapid increase in the number of such images and the inevitable bias annotation by human curators, it is necessary to develop an automatic method to classify the developmental stage and annotate anatomical structure using controlled vocabulary.

Recently, a lot of research works have been proposed to solve the above two problems. They considered the stage recognition as a single-label multi-class classification problem while the anatomical annotation was treated as a multi-label multi-class classification problem. (Kumar et al., 2002) first developed an embryo enclosing algorithm to find the embryo outline and extract the binary expression patterns via adaptive thresholding. (Peng and Myers, 2004) and (Peng et al., 2007) developed new ways to represent ISH images based on Gaussian mixture models, principal component analysis and wavelet functions. Besides that, they utilized min-redundancy max-relevance to do the feature selection and automatically classify gene expression pattern developmental stages. Recently, (Puniyani et al., 2010) constructed a system (called SPEX2) and concluded that the local regression (LR) method taking advantage of the controlled term–term interactions can get the best enhanced anatomical controlled term annotation results. The LR method was proposed by Ji et al. and developed based on their previous works (Ji et al., 2008; 2010; Li et al., 2009; Shuiwang et al., 2009;). All of the above methods have provided new inspirations and insights for classifying or annotating Drosophila gene expression patterns captured by ISH. However, none of them considered doing those two tasks simultaneously. As we know, intuitively, anatomical controlled vocabulary terms provide evidence for the stage label and vice versa. For example, the early stage range is more likely annotated with the controlled terms such as ‘statu nascendi’ and ‘celluar’ than the terms ‘embryonic’ and ‘epidermis’. Therefore, besides the image–stage and image–annotation relationships which have been well studied and applied in the previous research, it is necessary to take advantage of the correlations between stage classes and annotation terms.

In this article, we propose a novel Tri-Relational Graph (TG) model that comprises the data graph, anatomical controlled terms graph, developmental stage label graph to jointly classify the stage of images and annotate anatomical terms simultaneously. Upon the TG model, we introduce a Preferential Random Walk (PRW) method to simultaneously produce image-to-stage, image-to-annotation, image-to-image, stage-to-image, stage-to-annotation, stage-to-stage, annotation-to-image, annotation-to-stage and annotation-to-annotation relevances to jointly learn the salient patterns among images that are predictive of their stage label and anatomical annotation terms. Our method achieves superior developmental stage classification performance and anatomical terms annotation results compared with the state-of-the-art methods.

We consider each image bag as a data point and extract the bag-of-word features that are widely used in computer vision research as the corresponding descriptors. Since the real object is 3D and each image can only provide 2D observation from a certain perspective, we integrate the bag-of-word features for different views. We summarize our contributions as follows:

This article is the first one to propose a novel solution to the questions ‘What is the developmental stage?’ and ‘What are the anatomical annotations’ simultaneously, given an unlabeled image bag.

Via the new TG model that we constructed, the relationships between stage label and anatomical controlled terms as well as the correlations among anatomical terms can be naturally and explicitly exploited by the graph-based semi-supervised learning methods.

We propose a new PRW method to seek the hidden annotation–annotation and annotation–stage relevances. Other than only using image-to-image relevance conducted by existing methods, we can directly predict the stage label and annotate anatomical controlled terms for unknown image bags.

2 DATA DESCRIPTORS

As we known, the Drosophila embryos are 3D objects. However, the corresponding image data can only demonstrate 2D information from a certain view. Since recent study has shown that incorporating images from different views can improve the classification performance consistently (Ji et al., 2008), we will use the images taken from multiple views instead of one perspective as the data descriptor. We only consider the lateral, dorsal and ventral images in our experiment due to the fact that the number of images taken from other views is much less than that of the above three views. All the images from BDGP database have been pre-processed, including alignment and resizing to 128×320 gray images. For the sake of simplicity, we extract the popular SIFT (Lowe, 2004) features from the regular patches with the radius as well as the spacing as 16 pixels (Shuiwang et al., 2009), which is shown in Figure 2. Specifically, we extract one SIFT descriptor with 128 dimensions on each patch and each image is represented by 133 (7×19) SIFT descriptors. Nevertheless, the above SIFT features cannot be directly used to measure similarity between data points (image bags), because the number of images in each image bag is different. In order to get a desired equal length descriptor to release the burden of later learning task, we need to build codebook for all extracted SIFT features first and then redo the data representations for each image bag based on the constructed codebook.

Demonstration of the regular patches. We extract one SIFT feature on one patch, where the radius and spacing of the regular patches are set to 16 pixels

2.1 Codebook construction

Usually the codebook is established by conducting the clustering algorithms on a subset of the local features, and the cluster centers are then chosen as the visual words of the codebook. In our study, we use K-means to do the clustering on the training image bags. Since the result of K-means depends on the initial centers, we repeat it with 10 random initializations from which the one resulting in the smallest objective function value is selected. The number of clusters is set to 1000, 500 and 250 for lateral, dorsal and ventral images, respectively, according to the total number of images for each view as shown in Table 1. (Other codebook sizes gave similar performance.)

| Stage range . | 4–6 . | 7–8 . | 9–10 . | 11–12 . | 13–16 . | Total . |

|---|---|---|---|---|---|---|

| Size of control term | 11 | 12 | 12 | 20 | 31 | 79 |

| No. of image bags | 500 | 500 | 500 | 500 | 500 | 2500 |

| No. of lateral images | 1514 | 812 | 727 | 1356 | 1004 | 5414 |

| No. of dorsal images | 226 | 324 | 431 | 447 | 724 | 2152 |

| No. of ventral images | 164 | 137 | 81 | 214 | 216 | 812 |

| Stage range . | 4–6 . | 7–8 . | 9–10 . | 11–12 . | 13–16 . | Total . |

|---|---|---|---|---|---|---|

| Size of control term | 11 | 12 | 12 | 20 | 31 | 79 |

| No. of image bags | 500 | 500 | 500 | 500 | 500 | 2500 |

| No. of lateral images | 1514 | 812 | 727 | 1356 | 1004 | 5414 |

| No. of dorsal images | 226 | 324 | 431 | 447 | 724 | 2152 |

| No. of ventral images | 164 | 137 | 81 | 214 | 216 | 812 |

| Stage range . | 4–6 . | 7–8 . | 9–10 . | 11–12 . | 13–16 . | Total . |

|---|---|---|---|---|---|---|

| Size of control term | 11 | 12 | 12 | 20 | 31 | 79 |

| No. of image bags | 500 | 500 | 500 | 500 | 500 | 2500 |

| No. of lateral images | 1514 | 812 | 727 | 1356 | 1004 | 5414 |

| No. of dorsal images | 226 | 324 | 431 | 447 | 724 | 2152 |

| No. of ventral images | 164 | 137 | 81 | 214 | 216 | 812 |

| Stage range . | 4–6 . | 7–8 . | 9–10 . | 11–12 . | 13–16 . | Total . |

|---|---|---|---|---|---|---|

| Size of control term | 11 | 12 | 12 | 20 | 31 | 79 |

| No. of image bags | 500 | 500 | 500 | 500 | 500 | 2500 |

| No. of lateral images | 1514 | 812 | 727 | 1356 | 1004 | 5414 |

| No. of dorsal images | 226 | 324 | 431 | 447 | 724 | 2152 |

| No. of ventral images | 164 | 137 | 81 | 214 | 216 | 812 |

2.2 Data (image bag) representations

After we get three codebooks, the images in each bag are quantized separately for each view. Features computed from patches on images with a certain view are compared with the visual words in the corresponding codebook and the visual word closest to the feature in terms of Euclidean distance is utilized to represent it. Therefore, if an image bag encompasses the images from three views, then it could be represented by three bags of words, one for each view. We concatenate the three vectors so that the images with different views (lateral, dorsal and ventral) in one bag can be represented by one vector. To be specific, Let xl∈ℝ1000, xd∈ℝ500 and xv∈ℝ250 denote the bag-of-words vector for images in a bag with lateral, dorsal and ventral view, respectively. The descriptor for this image bag can be represented as x=[xl; xd; xv]∈ℝ1750. Since not all the image bags enclose the images from all three views, the corresponding bag-of-words representation is a vector of zeroes if a specific view is absent. Moreover, in order to capture the variability of the number of images in each view and each bag, we normalized the bag-of-words vector to unit length. At last, each image bag is represented by a normalized vector x.

3 METHODS

In this section, we first construct a TG to model Drosophila gene expression patterns followed by proposing a novel PRW method. Using PRW on TG, we jointly make stage classification and annotate anatomical terms of Drosophila gene expression patterns.

={c1,···, cKc} represented by yic∈{0, 1}Kc, such that yic(k)=1 if xi is classified into class ck, and 0 otherwise. Meanwhile, each image bag xi is also annotated with a number of anatomical ontology terms

={c1,···, cKc} represented by yic∈{0, 1}Kc, such that yic(k)=1 if xi is classified into class ck, and 0 otherwise. Meanwhile, each image bag xi is also annotated with a number of anatomical ontology terms  ={a1,···, aKa} represented by yia∈{0, 1}Ka, such that yia(k)=1 if xi is annotated with term ak, and 0 otherwise. Also, for convenience, we write yi=[yicT, yiaT]T∈{0, 1}Kc+Ka. Without loss of generality, we assume the first l<n image bags are already labeled, which are denoted as T={xi, yi}i=1l. Our task is to learn a function f : X→{0, 1}Kc+Ka from T that is able to classify an unlabeled data point xi(l+1≤i≤n) into one stage class in

={a1,···, aKa} represented by yia∈{0, 1}Ka, such that yia(k)=1 if xi is annotated with term ak, and 0 otherwise. Also, for convenience, we write yi=[yicT, yiaT]T∈{0, 1}Kc+Ka. Without loss of generality, we assume the first l<n image bags are already labeled, which are denoted as T={xi, yi}i=1l. Our task is to learn a function f : X→{0, 1}Kc+Ka from T that is able to classify an unlabeled data point xi(l+1≤i≤n) into one stage class in  and to annotate it with a number of anatomical terms in

and to annotate it with a number of anatomical terms in  at the same time. For simplicity, we write Yc=[y1c,···, ync], Ya=[y1a,···, yna], and Y=[y1,···, yn]. As introduced in Section 1, the stage class and anatomical terms have some relations. We utilize the following affinity matrix to model their interrelations, R∈ℝKc×Ka, where R(i, j) indicates how closely class ci and term aj are related. In this work, we compute it as

at the same time. For simplicity, we write Yc=[y1c,···, ync], Ya=[y1a,···, yna], and Y=[y1,···, yn]. As introduced in Section 1, the stage class and anatomical terms have some relations. We utilize the following affinity matrix to model their interrelations, R∈ℝKc×Ka, where R(i, j) indicates how closely class ci and term aj are related. In this work, we compute it as

is the i-th row of Yc and

is the i-th row of Yc and  is the j-th row of Ya. Throughout this article, we denote a vector as a bold lowercase character and a matrix as an uppercase character. We denote the i-th entry of a vector v as v(i), and the entry at the i-th row and j-th column of a matrix M as M(i, j). ||v|| denotes the Euclidian norm of vector v. And the inner product of two vector v1 and v2 is defined as <v1, v2>=v1Tv2.

is the j-th row of Ya. Throughout this article, we denote a vector as a bold lowercase character and a matrix as an uppercase character. We denote the i-th entry of a vector v as v(i), and the entry at the i-th row and j-th column of a matrix M as M(i, j). ||v|| denotes the Euclidian norm of vector v. And the inner product of two vector v1 and v2 is defined as <v1, v2>=v1Tv2.3.1 The construction of TG

X=(

X=( X,

X,  X) can be induced, where

X) can be induced, where  X=X and

X=X and  X⊆

X⊆ X×

X× X. And we use kNN graph. To be specific, we connect xi, xj if one of them is among the other's k nearest neighbor and define the value of the edge connecting them by Equation (2). Because

X. And we use kNN graph. To be specific, we connect xi, xj if one of them is among the other's k nearest neighbor and define the value of the edge connecting them by Equation (2). Because  X characterizes the relationships between data points, it is usually called as data graph, such as the middle subgraph in Figure 3. Existing graph-based semi-supervised learning methods (Kang et al., 2006; Zha et al., 2008) only make use of the data graph, on which the class label information is propagated.

X characterizes the relationships between data points, it is usually called as data graph, such as the middle subgraph in Figure 3. Existing graph-based semi-supervised learning methods (Kang et al., 2006; Zha et al., 2008) only make use of the data graph, on which the class label information is propagated.

The TG constructed from the gene expression data. Solid lines indicate affinity between vertices within in a same subgraph, dashed lines indicates associations between vertices in two different subgraphs

A=(

A=( A,

A,  A) is induced, where

A) is induced, where  A=

A= and

and  A⊆

A⊆ A×

A× A. We call

A. We call  A as annotation label subgraph, which is shown as the right subgraph in Figure 3. Similarly, stage classification label graph shown as the left subgraph in Figure 3,

A as annotation label subgraph, which is shown as the right subgraph in Figure 3. Similarly, stage classification label graph shown as the left subgraph in Figure 3,  C=(

C=( C,

C,  C) can be constructed from stage classification labels, where

C) can be constructed from stage classification labels, where  C=

C= and

and  C⊆

C⊆ C×

C× C, where we define the value of the edge connecting two stage labels as WC(i, j)=‖Sbi−Sbj‖F, where ‖ ‖F means Frobenius norm and Sbi denotes the between class scatter matrix for stage i. Connecting

C, where we define the value of the edge connecting two stage labels as WC(i, j)=‖Sbi−Sbj‖F, where ‖ ‖F means Frobenius norm and Sbi denotes the between class scatter matrix for stage i. Connecting  X and

X and  A by the annotation associations via the green dashed lines, connecting

A by the annotation associations via the green dashed lines, connecting  X and

X and  C by the class associations via the blue dashed lines and connecting

C by the class associations via the blue dashed lines and connecting  C and

C and  A by the stage-term association via the purple dashed lines, we construct a TG as following:

A by the stage-term association via the purple dashed lines, we construct a TG as following:

AX=(

AX=( X, VA,

X, VA,  XA) connects GX and GA, whose adjacency matrix is YaT. Similarly, the adjacency matrix of

XA) connects GX and GA, whose adjacency matrix is YaT. Similarly, the adjacency matrix of  CX=(

CX=( X,

X,  C,

C,  XC) is YcT. The subgraph (

XC) is YcT. The subgraph ( C,

C,  A,

A,  CA) characterizes the associations between stage classes and anatomical terms whose adjacency matrix is R defined in Equation (1).

CA) characterizes the associations between stage classes and anatomical terms whose adjacency matrix is R defined in Equation (1). X, we aim to simultaneously classify and annotate an unlabeled data point using all the information encoded in

X, we aim to simultaneously classify and annotate an unlabeled data point using all the information encoded in  . Since all data points (gene expression image bags), stage terms and annotation terms are equally regarded as vertices on

. Since all data points (gene expression image bags), stage terms and annotation terms are equally regarded as vertices on  , our task is to measure the relevance between a class/anntotation term vertex and a data point vertex. As each class/annotation term has a set of associated training data points, which convey the same biological record information as the class/annotation term, we consider both a class/annotation term vertex and its labeled training image bag vertices as a group set,

, our task is to measure the relevance between a class/anntotation term vertex and a data point vertex. As each class/annotation term has a set of associated training data points, which convey the same biological record information as the class/annotation term, we consider both a class/annotation term vertex and its labeled training image bag vertices as a group set,

3.2 Preferential random walk

Standard random walk on a graph W can be described as a Markov process with transition probability M=D−1W, where di=∑jW(i, j) is the degree of vertex i and D=diag(d1,···, dn). Clearly, MT ≠ M and ∑jM(i, j)=1. When W is symmetric, it corresponds to an undirected graph. When W is asymmetric, it corresponds to a directed graph and di is the out degree of vertex i. Let p(t) be the distribution of the random walker at time t, the distribution at t+1 is given by p(t+1)(j)=∑ip(t)(i)M(i, j). Thus, the equilibrium (stationary) distribution of the random walk p*=p(t=∞) is determined by MTp*=p*. It is well known that the solution is simply given by p*=We/(∑idi)=d/(∑idi), where d=[d1,···, dn]T.

Similar to RWR (Tong et al., 2006), when we set the h to be a probability distribution in which all the entries are 0 except for those corresponding to  k, p*(i) measures how relevant the k-th group is to the i-th vertex on

k, p*(i) measures how relevant the k-th group is to the i-th vertex on  .

.

3.3 Preferential random walk on TG

In order to classify and annotate unlabeled data points using the equilibrium probabilities in Equation (6) of the PRW on TG, we need to construct the transition matrix M and the preferential distribution h from  .

.

Construction of the transition matrix M:

X,

X,  C and

C and  A respectively, and the rest six sub-matrices are the intersubgraph transition matrices among

A respectively, and the rest six sub-matrices are the intersubgraph transition matrices among  X,

X,  C and

C and  A. Let β1∈[0, 1] be the jumping probability, i.e. the probability that a random walker hops from

A. Let β1∈[0, 1] be the jumping probability, i.e. the probability that a random walker hops from  X to

X to  C and vice versa. And let β2∈[0, 1] be the jumping probability from

C and vice versa. And let β2∈[0, 1] be the jumping probability from  X to

X to  A or vice versa. Therefore, β1 and β2 regulates the reinforcement between

A or vice versa. Therefore, β1 and β2 regulates the reinforcement between  X and one of the other two subgraphs. When both β1=0 and β2=0, the random walk are performed independently on

X and one of the other two subgraphs. When both β1=0 and β2=0, the random walk are performed independently on  X, which is equivalent to existing graph-based semi-supervised learning methods only using the data graph

X, which is equivalent to existing graph-based semi-supervised learning methods only using the data graph  X. Similarly, we define λ as the jumping probability from

X. Similarly, we define λ as the jumping probability from  C to

C to  A or vice versa.

A or vice versa.

Let diX=∑jWX(i, j), diY=∑jY(i, j), diQa=∑jQa(i, j), diQc=∑jQc(i, j) where Qa=R+Ya and Qc=RT+Yc.

Construction of the preferential distribution H:

(k)(i)=1/∑iyi(k) if yi(k)=1 and h

(k)(i)=1/∑iyi(k) if yi(k)=1 and h (k)(i)=0, otherwise; h

(k)(i)=0, otherwise; h (k)(i)=1, if i=k, γ∈[0, 1] controls how much the random walker prefers to go to the data subgraph

(k)(i)=1, if i=k, γ∈[0, 1] controls how much the random walker prefers to go to the data subgraph

and other two subgraphs

and other two subgraphs

,

,

. It can be verified that ∑ih(k)(i)=1, i.e, h(k) is a probability distribution. Let IK be the identity matrix of size K×K, we write

. It can be verified that ∑ih(k)(i)=1, i.e, h(k) is a probability distribution. Let IK be the identity matrix of size K×K, we write

PRW on TG:

is the i-th row vector of matrix Pnc*. Therefore, we can do the stage classification and anatomical controlled term annotation simultaneously.

is the i-th row vector of matrix Pnc*. Therefore, we can do the stage classification and anatomical controlled term annotation simultaneously.4 DATA REFINEMENT

In this section, we will introduce the details of the data used in our experiment. Drosophila embryogenesis has 17 stages, which are divided into 6 major ranges, i.e. stages 1–3, 4–6, 7–8, 9–10, 11–12 and 13–16 (the stage 17 is usually studied individually), in the BDGP database (Tomancak et al., 2002). Each image bag is labeled with one stage term and many controlled vocabulary terms. The total number of anatomical controlled vocabulary terms is 303.

We used the following way to refine the dataset. First, we only keep the image bag data with lateral, dorsal and ventral view information. And then, we eliminate six common annotation terms, that is, ‘no staining, ubiquitous, strong ubiquitous, faint ubiquitous, maternal, rapidly degraded’, which can be regarded as outliers because they can neither provide stage-specific information nor record anatomical structures. After that, we remove the anatomical terms whose data sample is <50. We ignore the stage 1–3 data since the number of anatomical terms after the above procedure becomes 2, too small to be compared with other stages. And finally we get 79 anatomical annotation terms in total that we will consider to annotate the unlabeled image bag.

We refine the data mainly based on the following two reasons. On one hand, the annotation terms which appear in too few image bags are statistically too weak to be learned effectively. On the other hand, since we will use 5-fold cross-validations in our experiments, we have to guarantee there is at least one data point associated with each anatomical term in each fold. Moreover, in order to balance the number of image bags for different stages, we randomly sample 500 image bags as the data points for each stage. At last, the summary of the refined dataset is shown in Table 1.

5 EXPERIMENT

In this section, we will conduct experiments to evaluate PRW empirically on the refined dataset and compare it with other state-of-art classification methods. Since our method can do joint classification, in order to evaluate the benefit of joint learning, we compare its performance with that of the state-of-art multiclass single label or multiclass multilabel algorithms which can only handle either stage classification or anatomical term annotation problem. Our procedure is to train our model with stage labeled and anatomical term annotated image bags. All testing image bags are unlabeled with developmental stage and unannotated with anatomical controlled terms.

5.1 Experimental setup

When constructing PRW on TG, we used kNN graph setting k=9. We used ‘inverse’ 5-fold cross-validation to determine the values of the following five parameters, that is, using 1-fold for training and using the remaining 4-folds for testing to mimic the scenario in the real application where the number of training data is much less than the testing data. In our experiment, we found that the following five parameters are not sensitive in certain ranges with good performances. β1, β2 and λ controls the jumping between different subgraphs and cannot affect the result much if they are assigned in the range of (0.1, 0.45). α controls initial preference of the random walker and will get stable result if it is assigned in the range of (0, 0.1). γ controls how much the random walker prefers to go to the data subgraph or to go to two other subgraphs and it is usually in the range of (0.1, 0.3).

Besides those parameters, we also need to initialize the stage as well as anatomical controlled terms for the testing image bag xi, where i=l+1,…, n, l is the number of training image bag. In our experiment, we used k-nearest neighbor (KNN) method to do the initializations for both stage classification and anatomical term annotations tasks because of its simplicity and clear intuition. To be specific, we use k=1 and we abbreviate it as 1NN. Our joint classification framework will self-consistently amend the incorrect labels for stage and controlled terms. We perform 10 random splits of the data and report the average performance over the 10 trials. Please note that, in each trial, we still do ‘inverse’ 5-fold cross validation and record the average performance result as the result of that trial.

5.2 Image bag stage classification

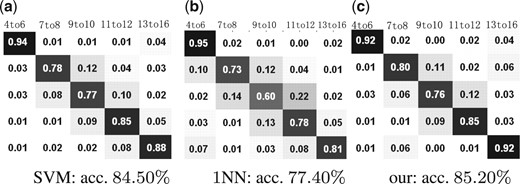

Drosophila gene expression pattern stage categorization is a single-label multi-class problem. We compare the result of our method with that of support vector machine (SVM) with radial basis function (RBF) kernel (Chang and Lin, 2001). We use the optimal parameter values for C and γ got from cross-validation as well. We also compare the classification result of 1NN that we use to do the initialization. We assess the classification in terms of the average classification accuracy and the average confusion matrices. Since the data that we used is class balanced, the mean value of the entries on the diagonal of the confusion matrix is also the average classification accuracy. From the resulting average confusion matrices shown in Figure 5, we can see that the average prediction accuracy of our method is better than that of the other two state-of-art methods, especially in the last stage 13–16, where the number of anatomical terms is greatly larger than that of the other stages.

5.3 Image bag controlled vocabulary terms annotation

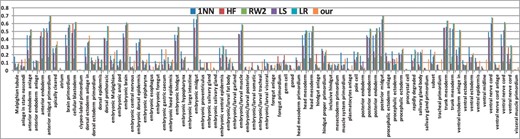

Besides the stage classification task, we also validate our method by predicting the anatomical controlled terms for the Drosophila gene expression patterns, which can be considered as a multi-class multi-label classification problem. The conventional classification performance metrics in statistical learning, precision and F1 score, are utilized to evaluate the proposed methods. For every anatomical term, the precision and F1 score are computed following the standard definition for the binary classification problem. To address the multi-label scenario, following Tsoumakas and Vlahavas (2007), macro and micro average of precision and F1 score are used to assess the overall performance across multiple labels. We compared four state of art multi-label classification methods: local shared subspace (LS) (Ji et al., 2008), local regression (LR) (Ji et al., 2009), harmonic function (HF) (Zhu et al., 2003) and random walk (RW) (Zhou and Schölkopf, 2004). All of them are proposed recently to solve the multilabel annotation problem. In addition, we compare the results of 1NN as well. For the first three methods we use the published codes posted on the corresponding author's websites. And we implement the RW method following the original work (Zhou and Schölkopf, 2004). For HF and RW methods, we follow the original work to solve the multilabel annotation only. Therefore, we only evaluate those two methods on data subgraph and annotation label subgraph without using any information derived from the classification label subgraph such as the stage–term correlation. Table 2 shows the average anatomical annotation performance of 79-term dataset. Compared to the above five stat-of-the-art methods, our method has the best results by all metrics. Figure 6 illustrates the average Micro F1 score of our method, 1NN, LS, LR, RW and HF approaches for all the anatomical terms on 79-term dataset. And again, our method consistently achieves best performance for most of the anatomical controlled terms.

| Method . | Ma Pre . | Ma F1 . | Mi Pre . | Mi F1 . |

|---|---|---|---|---|

| 1NN | 0.3455 | 0.3595 | 0.2318 | 0.2230 |

| LS | 0.5640 | 0.3778 | 0.3516 | 0.1903 |

| LR | 0.6049 | 0.4425 | 0.3953 | 0.2243 |

| RW | 0.4019 | 0.3385 | 0.2808 | 0.1835 |

| HF | 0.3727 | 0.3296 | 0.2756 | 0.1733 |

| Our method | 0.6125 | 0.4434 | 0.4057 | 0.2336 |

| Method . | Ma Pre . | Ma F1 . | Mi Pre . | Mi F1 . |

|---|---|---|---|---|

| 1NN | 0.3455 | 0.3595 | 0.2318 | 0.2230 |

| LS | 0.5640 | 0.3778 | 0.3516 | 0.1903 |

| LR | 0.6049 | 0.4425 | 0.3953 | 0.2243 |

| RW | 0.4019 | 0.3385 | 0.2808 | 0.1835 |

| HF | 0.3727 | 0.3296 | 0.2756 | 0.1733 |

| Our method | 0.6125 | 0.4434 | 0.4057 | 0.2336 |

Ma Pre, Avg. Macro Precision; Ma F1, Avg. Macro F1; Mi Pre, Avg. Micro Precision; Mi F1, Avg. Micro F1.

| Method . | Ma Pre . | Ma F1 . | Mi Pre . | Mi F1 . |

|---|---|---|---|---|

| 1NN | 0.3455 | 0.3595 | 0.2318 | 0.2230 |

| LS | 0.5640 | 0.3778 | 0.3516 | 0.1903 |

| LR | 0.6049 | 0.4425 | 0.3953 | 0.2243 |

| RW | 0.4019 | 0.3385 | 0.2808 | 0.1835 |

| HF | 0.3727 | 0.3296 | 0.2756 | 0.1733 |

| Our method | 0.6125 | 0.4434 | 0.4057 | 0.2336 |

| Method . | Ma Pre . | Ma F1 . | Mi Pre . | Mi F1 . |

|---|---|---|---|---|

| 1NN | 0.3455 | 0.3595 | 0.2318 | 0.2230 |

| LS | 0.5640 | 0.3778 | 0.3516 | 0.1903 |

| LR | 0.6049 | 0.4425 | 0.3953 | 0.2243 |

| RW | 0.4019 | 0.3385 | 0.2808 | 0.1835 |

| HF | 0.3727 | 0.3296 | 0.2756 | 0.1733 |

| Our method | 0.6125 | 0.4434 | 0.4057 | 0.2336 |

Ma Pre, Avg. Macro Precision; Ma F1, Avg. Macro F1; Mi Pre, Avg. Micro Precision; Mi F1, Avg. Micro F1.

5.4 The advantage of joint learning

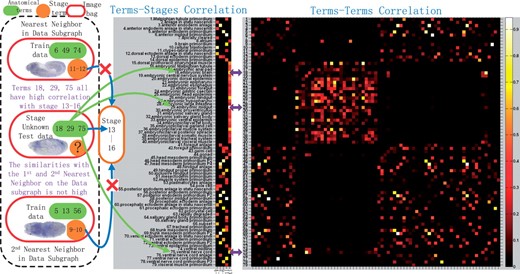

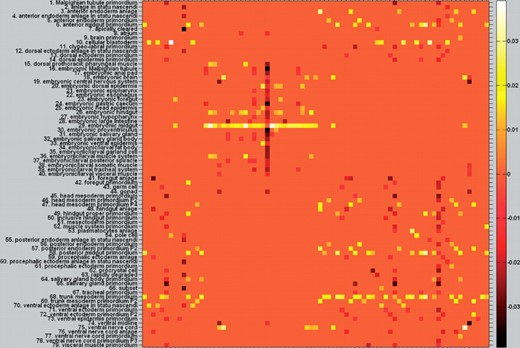

Unlike the traditional work, our proposed method can take advantage of all the information to do the stage classification and anatomical term annotation simultaneously. Therefore, when the number of training data is scare, we can resort to both intrarelations and interrelations to make the decision for stage classification and anatomical controlled term annotation simultaneously. When there are strong correlation between those two tasks, we expect that the performance of both tasks will be enhanced by joint learning work than treating them individually and independently. Figure 4 shows the pairwise label correlations of the 79 terms and stage–term correlations between 5 stages and 79 terms. As highlighted by purple arrows, we can observe that there are high pairwise correlations between the terms ‘embryonic brain’,‘ventral nerve cord’ as well as ‘embryonic/larval muscle system’. Moreover, all the above three terms have high correlations with the stage 13–16, which can provide strong evidence that the given testing image bag could belong to the last developmental stage besides the induction from the data graph only. If our joint classification framework annotates it with all those three terms, although from the data similarity we cannot get strong evidence for the stage prediction, we can take advantage of the term–term as well as term–stage high correlations to adjust its stage to stage 13–16. In other words, relevant anatomical terms could help us to predict the stage label since they provide the spatial and temporal information of local structure corresponding to a specific embryo development stage. Nevertheless, not all anatomical terms will definitely benefit stage classification, which is consistent with our stage classification result. From Figure 5, we can see that our method may have competitive result compared with SVM with respect to some certain stage. However, given more anatomical term information, the performance of our method will gradually outperform the other methods, especially for the prediction result of stage 13–16.

The middle part demonstrates the terms–stages correlation and the right part shows the terms–terms correlation of 79 terms. The stage unknown test data shown in the left part is classified correctly as Stage 13–16, because of the strong correlation between the predicted stage and its predicted anatomical terms and vice versa, NOT the similarity of its first and second nearest neighboring data induced from the data graph only

Stage classification results in terms of confusion matrices on 79-term dataset: (a) the confusion matrix calculated by SVM (b) the confusion matrix calculated by 1NN. (c) the confusion matrix calculated by our method. (a) SVM: acc. 84.50%; (b) 1NN: acc. 77.40%; (c) our: acc. 85.20%

The Avg. Micro F1 score of five methods on each term in 79-term dataset. (It is better to be viewed in colorful and zoomed in mode.)

5.5 The more meaningful asymmetric correlation matrix

The learned difference matrix. (It is better to be viewed in colorful and zoomed in mode.) In order to see the asymmetric entries more clearly, we plot Paa*−Paa*T. After PRW, the entries marked as brighter square have higher conditional probability (positive correlation) than its counterpart which is marked as darker color. This asymmetric reflects more accurate term–term correlation than the original symmetric assumption

6 CONCLUSION

In this article, we proposed a novel TG model to learn the task interrelations between stage recognition and anatomical terms annotation of Drosophila gene expression patterns. The standard bag-of-word features and three major views (lateral, dorsal and ventral) were used to describe the 3D Drosophila images. A new PRW method was introduced to simultaneously propagate the stage labels and anatomical controlled terms via TG model. Both stage classification and anatomical controlled term annotation tasks are jointly completed. We evaluated the proposed method using one refined BDGP dataset. The experimental results demonstrated in the real application, when the number of training data is scarce, our joint learning method can achieve superior prediction results on both tasks than the state-of-the-art methods. What is more, we can discovery more accurate asymmetric term–term correlation, which can potentially improve the results of both tasks even more.

ACKNOWLEDGEMENT

The author would like to thank Dr Sudhir Kumar for his help in data collection.

Funding: [This research was supported by National Science Foundation Grants CCF-0830780, CCF-0917274, DMS-0915228 and IIS-1117965].