-

PDF

- Split View

-

Views

-

Cite

Cite

Peng Liu, Yuchen Pan, Hung-Ching Chang, Wenjia Wang, Yusi Fang, Xiangning Xue, Jian Zou, Jessica M Toothaker, Oluwabunmi Olaloye, Eduardo Gonzalez Santiago, Black McCourt, Vanessa Mitsialis, Pietro Presicce, Suhas G Kallapur, Scott B Snapper, Jia-Jun Liu, George C Tseng, Liza Konnikova, Silvia Liu, Comprehensive evaluation and practical guideline of gating methods for high-dimensional cytometry data: manual gating, unsupervised clustering, and auto-gating, Briefings in Bioinformatics, Volume 26, Issue 1, January 2025, bbae633, https://doi.org/10.1093/bib/bbae633

Close - Share Icon Share

Abstract

Cytometry is an advanced technique for simultaneously identifying and quantifying many cell surface and intracellular proteins at a single-cell resolution. Analyzing high-dimensional cytometry data involves identifying and quantifying cell populations based on their marker expressions. This study provided a quantitative review and comparison of various ways to phenotype cellular populations within the cytometry data, including manual gating, unsupervised clustering, and supervised auto-gating. Six datasets from diverse species and sample types were included in the study, and manual gating with two hierarchical layers was used as the truth for evaluation. For manual gating, results from five researchers were compared to illustrate the gating consistency among different raters. For unsupervised clustering, 23 tools were quantitatively compared in terms of accuracy with the truth and computing cost. While no method outperformed all others, several tools, including PAC-MAN, CCAST, FlowSOM, flowClust, and DEPECHE, generally demonstrated strong performance. For supervised auto-gating methods, four algorithms were evaluated, where DeepCyTOF and CyTOF Linear Classifier performed the best. We further provided practical recommendations on prioritizing gating methods based on different application scenarios. This study offers comprehensive insights for biologists to understand diverse gating methods and choose the best-suited ones for their applications.

Introduction

Cytometry is a powerful single-cell assay that allows for high-dimensional profiling of diverse cell populations in suspension [1–3]. This technique has been widely applied to clinical diagnosis, immunology and cancer research, and the pharmaceutical industry [3–5]. Flow cytometry (FCM) and mass cytometry (or cytometry by time-of-flight, CyTOF) are two major techniques that employ labeled antibodies to quantify the cell surface and intracellular proteins. FCM labels the markers by fluorescence and measures the fluorescence emitted per cell as they pass individually through a laser beam. The traditional FCM technique can detect ~8–10 markers, while the recent study has developed a 43-color flow cytometry panel [6]. As an advanced technique, CyTOF utilizes antibodies chelated with heavy metal isotopes to identify cell surface and intracellular proteins. The metal isotopes are primarily from the lanthanide series of elements, making them neither biologically derived nor radioactive. Once cell suspensions are stained and introduced into the mass cytometer, they are nebulized into droplets containing individual cells. The droplets are then ionized with argon plasma to release the metal isotopes attached to the antibodies in each droplet. The ions are separated based on mass such that the lower mass biologically derived atoms are removed and those of higher mass enter a time-of-flight chamber to measure their mass-to-charge ratios, allowing for the quantification of relative isotope abundance in each droplet [7–9]. Since mass cytometry has minimal background and high specificity, CyTOF allows for simultaneous combined measurement of up to 40–50 different antibodies, enabling the identification of a large number of cellular populations from an individual sample.

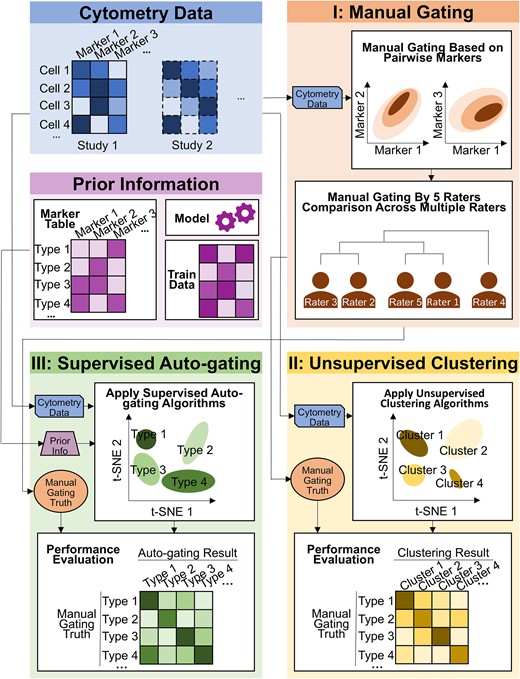

Analysis of cytometry data, although a powerful technique, brings challenges to the community. One of the major obstacles is to properly identify cell populations among thousands to millions of cells based on their high-dimensional markers [10]. In this study, we performed a comprehensive quantitative evaluation of available gating methods for high-dimensional cytometry data. Figure 1 provides a flow chart demonstrating the cytometry data format, composition of marker tables, and the evaluation pipeline of three gating categories in this study: manual gating, unsupervised clustering, and supervised auto-gating. The first manual 2D gating is the most traditional method used by biologists to identify cell populations. As illustrated in Fig. 1, in the “Manual gating” block, pairwise makers are selected based on prior knowledge (known markers of interest) and applied to identify a subset of cells. This subset of cells can be further selected and grouped by other makers to identify cell subpopulations. Eventually, hierarchical layers of cell populations are built according to different markers applied. Manual gating can be performed using FlowJo (BD Life Sciences), Cytobank (Beckman Coulter), or other software with friendly graphical user interfaces. It has advantages in identifying different cell populations of interest straightforwardly and flexibly. However, the gating process is experience-based, time-consuming, and relies on prior knowledge and arbitrary cutoffs to assign cell populations.

Workflow of the cytometry data analysis pipeline. The cell-by-marker intensity table per study was used as the input. For the (I) manual gating method, scatter plots on pairwise markers were drawn to define the cell populations. In this paper, manual gating was performed by five raters independently, and their gating results were compared. For the (II) unsupervised clustering methods, cytometry data were input into multiple clustering algorithms. Their results were compared to the manual gating and evaluated. For the (III) supervised auto-gating methods, both cytometry data and prior knowledge (such as maker table, model, and training data) were used as input. Multiple auto-gating algorithms were applied and evaluated based on the manual gating truth.

In addition to manual gating, computer-aided unbiased algorithms have been developed to identify cell populations in a more automated manner [11], including the second and third categories: unsupervised clustering and supervised auto-gating (which further includes semisupervised or supervised gating). For clustering methods, as shown in the “Unsupervised Clustering” block of Fig. 1, cells are grouped into clusters based on marker intensities without human intervention. Cell type characteristics of the identified clusters are, however, unknown and rely on researchers to further annotate. The supervised auto-gating methods (illustrated in the “Supervised Auto-gating” block of Fig. 1) not only group cells into clusters based on the marker intensities but also additionally curate the cell populations identified by assigning labels to each cell cluster based on prespecified cell-type marker tables. Compared to manual gating, computer-aided methods are faster, can simultaneously analyze multiple datasets in a highly efficient and reproducible manner, and do not rely on prior knowledge to cluster the cellular populations. However, these methods sacrifice the flexibility afforded by manual gating.

As shown in Fig. 1, three types of gating methods (manual gating, unsupervised clustering, and supervised auto-gating) have been developed with increasing automation and decreasing human intervention. With the advanced popularity and development of cytometry technology, several review and evaluation papers have been published in the last 10 years. Most review literature provides descriptive introductions of clustering and visualization tools, along with conceptual guidelines for users, but offers little to no quantitative comparisons to support the conclusion [11–20]. To our knowledge, only two papers have performed numerical evaluation. Weber et al. [21] compared 18 unsupervised clustering algorithms on four CyTOF and two FCM datasets. Liu et al. [22] evaluated seven unsupervised clustering methods and two semisupervised methods across six datasets to provide guidelines on choosing clustering algorithms for cytometry data. However, a more comprehensive evaluation of tools, especially in manual gating and supervised auto-gating, and extensive panels of evaluation criteria are lacking to conclude a solid guideline for users. In contrast to the limited scope of existing papers, we performed comprehensive investigation and evaluation in all three categories in this paper (see Supplementary Table 1 for a side-by-side comparison of existing literature and the current paper). Firstly, in manual gating, we collected gating results from five raters in three different labs and evaluated gating consistency across raters. Two hierarchical layers of gating results further served as ground truth to evaluate the gating performance of the other computer-aided algorithms. Secondly, in unsupervised clustering, we attempted 32 unsupervised clustering tools previously reviewed by Liu et al. [11] and successfully implemented and compared 23 tools across six datasets. Based on the truth from manual gating, we expanded evaluation criteria (adjusted Rand index [ARI] and F-measure) and computing benchmarks to provide an evaluation panel for prioritizing overall tool performance. We also evaluated the tools’ ability to detect rare populations, which is critical in many biological or clinical applications. Finally, in auto-gating, we successfully implemented 4 out of the 6 auto-gating (supervised or semi-supervised) methods [11] to provide guidelines on the application of automatic cell population identification.

The innovation and merits of this evaluation paper compared to existing review papers [11, 14, 15, 20–24] are highlighted below (Supplementary Table 1).

Gating methods

Manual gating (5 raters), unsupervised clustering (23 tools), and auto-gating (4 tools) methods were systematically reviewed and compared. To the best of our knowledge, such a comprehensive evaluation has not been performed before, particularly as previous assessments largely lacked evaluations of both manual gating and auto-gating.

Datasets

Tools were evaluated by both in-house and public datasets, including multiple species (human, mouse, and nonhuman primates) and cell types (peripheral blood mononuclear cells [PBMCs], placental villi, and bone marrow). For in-house data, two hierarchical layers of manual gating were used as ground truth, where the first layer included major populations, and the second layer contained a more detailed identification of subpopulations. Data for two rare populations were also used to check the tool’s ability to detect small clusters of cells.

Evaluation benchmarking

Multiple evaluation measurements were employed, including F-measure, ARI, and Cohen’s kappa index. In addition, performance in different settings for the number of clusters was compared and discussed. Computing time was evaluated on a grid of cell numbers to estimate the scalability of the tools for ultra-large cell number applications in the future.

Tool recommendation

Based on comprehensive comparisons of a wide range of computer-assisted tools, our overall recommendations align and further extend from the previous publications, suggesting tools based on criteria such as accuracy, runtime, cluster-setting capabilities, graphical visualization, and performance in detecting rare populations.

Practical guideline

All programming scripts for tool implementation and comparison were made available on GitHub (https://github.com/hung-ching-chang/GatingMethod_evalutation/), on Zenodo (https://zenodo.org/records/13851548), and attached as Supplementary Files. During our research, we were surprised to find that implementing many published tools was not straightforward; even after contacting the original authors and making multiple attempts by several co-authors, many tools proved infeasible to use. This paper thus enables future users in the field to easily apply and compare various tools on their datasets. The GitHub and Zenodo resources also provide an evaluation platform when a new gating method is developed in the future.

Materials and methods

Cytometry by time-of-flight experimental pipeline for rhesus macaque non-human primate (NHP) samples

‘Placental samples’ were collected from the California Primate Center at the University of California–Davis as described in our published study [25] and from which CyTOF experiment data were extracted. Briefly, pregnant macaques were injected with either lipopolysaccharide (LPS) or saline solution; their offspring were delivered via Cesarean section 16 h following injection, and their placental biopsies were collected following delivery. For cryogenic storage, each placental layer sample was stored and processed according to a previously published protocol [26]. Fresh tissue samples were cut to 1 mm size and stored in 1 ml of freezing media (10% dimethyl sulfoxide (DMSO, Sigma) and 90% fetal bovine serum (FBS, Gibco)) by slow-freezing in a Nalgene Mr. Frosty freezing container (Sigma). For experimental processing, fresh or cryopreserved samples were made into single-cell suspensions by digesting overnight with DNase and collagenase diluted 1:5000 in digestion media (Hank's Balanced Salt Solution (HBSS) w/o Ca++ and Mg++, containing 5 mM ethylenediaminetetraacetic acid (EDTA) and 10 mM 2-[4-(2-hydroxyethyl)piperazin-1-yl]ethanesulfonic acid (HEPES)) on an orbital shaker. Single-cell suspensions underwent staining for CyTOF as described below. Details for this dataset were described in our published manuscript [25].

‘PBMCs’ were isolated from rhesus macaque blood via a Ficoll gradient. Blood was diluted 1:1 with Phosphate-buffered saline (PBS) in a conical tube, and an equal volume of Ficoll was added below the blood layer with a Pasteur pipette. The tube underwent a 30-min spin with low acceleration and no brake to separate the PBMCs from the rest of the blood contents. The PBMC layer was pipetted out and washed with PBS twice before resuspension in freezing media and slow freezing in a Mr. Frosty for cryopreservation. They were then thawed and underwent staining for CyTOF as described below. Data for this set of samples are publicly available on Cytobank (Beckman Coulter).

Cytometry by time-of-flight experimental pipeline for human samples

‘Placental samples’ were collected through the University of Pittsburgh Biospecimen Core as described in Toothaker et al. [27] and from which CyTOF data were extracted. Human placental biopsies were separated by layers for long-term cryogenic storage or immediate experimental processing. They were stored cryogenically and prepared into single-cell suspensions in the same manner as described above for the NHP tissue samples. Single-cell suspensions underwent staining for CyTOF as described below. Details for this dataset were described in our published manuscript [27].

‘PBMCs’ were isolated from human blood draws via Ficoll gradient and cryopreserved as described above for the NHP blood samples. Human patients were recruited from Boston Children’s Hospital (BCH) under the BCH Institutional Review Board protocol number P00000529. They were then thawed and underwent staining for CyTOF as described below. CyTOF data for this dataset are publicly available on Cytobank (Beckman Coulter).

‘CyTOF’ staining was performed according to the previously published protocol in Stras et al. [28]. Briefly, single-cell suspensions were washed in cell-staining buffer (CSB) composed of PBS with 0.5% bovine serum albumin (Sigma) and 0.02% sodium azide. Viability was assessed with Rh103 (Fluidigm) DNA intercalator. After an additional wash, cells were stained with their respective surface-staining antibody cocktails. For intracellular staining, cells were washed and fixed utilizing FoxP3 fixation and permeabilization kit (Invitrogen). After fixation, cells were washed with the FoxP3 wash buffer and then incubated in their respective intracellular antibody cocktails. Cells were then washed with CSB again and fixed with 1.6% paraformaldehyde (Sigma). After storage in CSB overnight, cells were incubated with 191Ir/193Ir DNA Intercalator (Fludigm) in Maxpar Permeabilization Buffer (Standard Biotools) for cellular identification. On the day of analysis, cells were washed with MilliQ water and resuspended in normalization beads at a 1:10 dilution (Fluidigm). Data collection for the samples was done on a Fluidigm mass cytometer, and data were exported as Flow Cytometry Standard (FCS) files.

Data description

To evaluate the gating methods, we collected datasets generated by cytometry technology from both our in-house samples and publicly available sources. As shown in Table 1, six datasets were used in this study: human PBMCs, rhesus macaque PBMCs, human placental villi [27], rhesus macaque placental villi [25], human bone marrow [29], and mouse bone marrow [30]. Among these, the first four datasets were generated from our in-house libraries as described above. The human bone marrow [29] and mouse bone marrow [30] datasets were collected from the public databases from previous studies. For all six datasets, manual gating and cell population annotation were available (from the original papers for public data or by our manual gating for in-house data) that can serve as the ground truth for performance evaluation of computer-aided gating methods. Detailed descriptions of each dataset, cell population, and marker table are summarized in Table 1, Supplementary Tables 2 and 3. Down-sampled datasets to 20 000 cells were generated for tools that could not finish running the full datasets within 3 h.

| Study (citation) . | Sample ID . | Manual gating results . | Manual gating from five experts . | Clustering evaluation . | Rare population identification . | Auto-gating evaluation . | Time benchmark evaluation . | # Clusters (Layer 1, 2, rare) . | # Markers (Layer 1, 2, rare) . | # cells . | # study/sample . | Species . | Tissue . |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Human PBMC (Cytobank) | Fresh_Tcell− | Y | Y | Y | Y | Y | Y | 7, 14, 3 | 6, 9, 12 | 190 931 | 1 | Human | PBMC |

| Fresh_Tcell+ | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 171 297 | 1 | Human | PBMC | |

| Frozen_Tcell− | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 90 382 | 1 | Human | PBMC | |

| Frozen_Tcell+ | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 87 459 | 1 | Human | PBMC | |

| Rhesus PBMC (Cytobank) | Pooled 6-sample | Y | N | Y | N | Y | N | 6, 13 | 6,9 | 1252 | 6 | Rhesus | PBMC |

| Human Villi [27] | ID1042 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 8334 | 1 | Human | Villi |

| ID1130 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 6485 | 1 | Human | Villi | |

| Pooled 12-sample | Y | N | Y | N | Y | N | 7, 14 | 6,9 | 42 414 | 12 | Human | Villi | |

| Rhesus Villi [25] | ID430 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 70 170 | 1 | Rhesus | Villi |

| ID437 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 66 726 | 1 | Rhesus | Villi | |

| Pooled 14-sample | Y | N | Y | N | Y | N | 7, 14 | 6,9 | 345 886 | 14 | Rhesus | Villi | |

| Human bone marrow [29] | Set1 | Y | N | Y | N | N | N | 8, 20 | 13 | 167 039 | 1 | Human | bone marrow |

| Set2.H1 | Y | N | Y | N | N | N | 7, 10 | 32 | 72 017 | 1 | Human | bone marrow | |

| Set2.H2 | Y | N | Y | N | N | N | 7, 10 | 32 | 31 324 | 1 | Human | bone marrow | |

| Mouse bone marrow [30] | S01 | Y | N | Y | N | N | N | 7, 24 | 39 | 53 173 | 1 | Mouse | bone marrow |

| Pooled 10-sample | Y | N | Y | N | N | N | 7, 24 | 39 | 514 386 | 10 | Mouse | bone marrow |

| Study (citation) . | Sample ID . | Manual gating results . | Manual gating from five experts . | Clustering evaluation . | Rare population identification . | Auto-gating evaluation . | Time benchmark evaluation . | # Clusters (Layer 1, 2, rare) . | # Markers (Layer 1, 2, rare) . | # cells . | # study/sample . | Species . | Tissue . |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Human PBMC (Cytobank) | Fresh_Tcell− | Y | Y | Y | Y | Y | Y | 7, 14, 3 | 6, 9, 12 | 190 931 | 1 | Human | PBMC |

| Fresh_Tcell+ | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 171 297 | 1 | Human | PBMC | |

| Frozen_Tcell− | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 90 382 | 1 | Human | PBMC | |

| Frozen_Tcell+ | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 87 459 | 1 | Human | PBMC | |

| Rhesus PBMC (Cytobank) | Pooled 6-sample | Y | N | Y | N | Y | N | 6, 13 | 6,9 | 1252 | 6 | Rhesus | PBMC |

| Human Villi [27] | ID1042 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 8334 | 1 | Human | Villi |

| ID1130 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 6485 | 1 | Human | Villi | |

| Pooled 12-sample | Y | N | Y | N | Y | N | 7, 14 | 6,9 | 42 414 | 12 | Human | Villi | |

| Rhesus Villi [25] | ID430 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 70 170 | 1 | Rhesus | Villi |

| ID437 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 66 726 | 1 | Rhesus | Villi | |

| Pooled 14-sample | Y | N | Y | N | Y | N | 7, 14 | 6,9 | 345 886 | 14 | Rhesus | Villi | |

| Human bone marrow [29] | Set1 | Y | N | Y | N | N | N | 8, 20 | 13 | 167 039 | 1 | Human | bone marrow |

| Set2.H1 | Y | N | Y | N | N | N | 7, 10 | 32 | 72 017 | 1 | Human | bone marrow | |

| Set2.H2 | Y | N | Y | N | N | N | 7, 10 | 32 | 31 324 | 1 | Human | bone marrow | |

| Mouse bone marrow [30] | S01 | Y | N | Y | N | N | N | 7, 24 | 39 | 53 173 | 1 | Mouse | bone marrow |

| Pooled 10-sample | Y | N | Y | N | N | N | 7, 24 | 39 | 514 386 | 10 | Mouse | bone marrow |

| Study (citation) . | Sample ID . | Manual gating results . | Manual gating from five experts . | Clustering evaluation . | Rare population identification . | Auto-gating evaluation . | Time benchmark evaluation . | # Clusters (Layer 1, 2, rare) . | # Markers (Layer 1, 2, rare) . | # cells . | # study/sample . | Species . | Tissue . |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Human PBMC (Cytobank) | Fresh_Tcell− | Y | Y | Y | Y | Y | Y | 7, 14, 3 | 6, 9, 12 | 190 931 | 1 | Human | PBMC |

| Fresh_Tcell+ | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 171 297 | 1 | Human | PBMC | |

| Frozen_Tcell− | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 90 382 | 1 | Human | PBMC | |

| Frozen_Tcell+ | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 87 459 | 1 | Human | PBMC | |

| Rhesus PBMC (Cytobank) | Pooled 6-sample | Y | N | Y | N | Y | N | 6, 13 | 6,9 | 1252 | 6 | Rhesus | PBMC |

| Human Villi [27] | ID1042 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 8334 | 1 | Human | Villi |

| ID1130 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 6485 | 1 | Human | Villi | |

| Pooled 12-sample | Y | N | Y | N | Y | N | 7, 14 | 6,9 | 42 414 | 12 | Human | Villi | |

| Rhesus Villi [25] | ID430 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 70 170 | 1 | Rhesus | Villi |

| ID437 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 66 726 | 1 | Rhesus | Villi | |

| Pooled 14-sample | Y | N | Y | N | Y | N | 7, 14 | 6,9 | 345 886 | 14 | Rhesus | Villi | |

| Human bone marrow [29] | Set1 | Y | N | Y | N | N | N | 8, 20 | 13 | 167 039 | 1 | Human | bone marrow |

| Set2.H1 | Y | N | Y | N | N | N | 7, 10 | 32 | 72 017 | 1 | Human | bone marrow | |

| Set2.H2 | Y | N | Y | N | N | N | 7, 10 | 32 | 31 324 | 1 | Human | bone marrow | |

| Mouse bone marrow [30] | S01 | Y | N | Y | N | N | N | 7, 24 | 39 | 53 173 | 1 | Mouse | bone marrow |

| Pooled 10-sample | Y | N | Y | N | N | N | 7, 24 | 39 | 514 386 | 10 | Mouse | bone marrow |

| Study (citation) . | Sample ID . | Manual gating results . | Manual gating from five experts . | Clustering evaluation . | Rare population identification . | Auto-gating evaluation . | Time benchmark evaluation . | # Clusters (Layer 1, 2, rare) . | # Markers (Layer 1, 2, rare) . | # cells . | # study/sample . | Species . | Tissue . |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Human PBMC (Cytobank) | Fresh_Tcell− | Y | Y | Y | Y | Y | Y | 7, 14, 3 | 6, 9, 12 | 190 931 | 1 | Human | PBMC |

| Fresh_Tcell+ | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 171 297 | 1 | Human | PBMC | |

| Frozen_Tcell− | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 90 382 | 1 | Human | PBMC | |

| Frozen_Tcell+ | Y | N | Y | Y | Y | N | 7, 14, 3 | 6, 9, 12 | 87 459 | 1 | Human | PBMC | |

| Rhesus PBMC (Cytobank) | Pooled 6-sample | Y | N | Y | N | Y | N | 6, 13 | 6,9 | 1252 | 6 | Rhesus | PBMC |

| Human Villi [27] | ID1042 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 8334 | 1 | Human | Villi |

| ID1130 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 6485 | 1 | Human | Villi | |

| Pooled 12-sample | Y | N | Y | N | Y | N | 7, 14 | 6,9 | 42 414 | 12 | Human | Villi | |

| Rhesus Villi [25] | ID430 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 70 170 | 1 | Rhesus | Villi |

| ID437 | Y (pooled gating) | N | Y | N | Y | N | 7, 14 | 6,9 | 66 726 | 1 | Rhesus | Villi | |

| Pooled 14-sample | Y | N | Y | N | Y | N | 7, 14 | 6,9 | 345 886 | 14 | Rhesus | Villi | |

| Human bone marrow [29] | Set1 | Y | N | Y | N | N | N | 8, 20 | 13 | 167 039 | 1 | Human | bone marrow |

| Set2.H1 | Y | N | Y | N | N | N | 7, 10 | 32 | 72 017 | 1 | Human | bone marrow | |

| Set2.H2 | Y | N | Y | N | N | N | 7, 10 | 32 | 31 324 | 1 | Human | bone marrow | |

| Mouse bone marrow [30] | S01 | Y | N | Y | N | N | N | 7, 24 | 39 | 53 173 | 1 | Mouse | bone marrow |

| Pooled 10-sample | Y | N | Y | N | N | N | 7, 24 | 39 | 514 386 | 10 | Mouse | bone marrow |

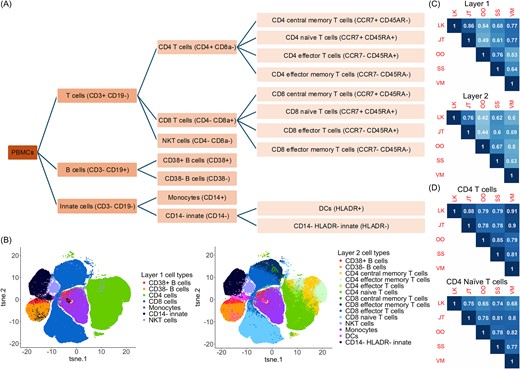

For the human PBMC study, libraries for four treatments were included: fresh unstimulated (−) T cell, fresh stimulated (+) T cell, frozen T cell −, and frozen T cell +. As shown in Fig. 2A and Supplementary Tables 2 and 3, the original datasets were based on staining for 52 markers. To serve as ground truth, manual gating was performed at two hierarchical layers (Fig. 2B). The first layer employed six markers (CD3, CD19, CD4, CD8a, CD38, and CD14) to identify seven major cell populations (CD14− innate, CD38− B cells, monocytes, Natural killer T (NKT) cells, CD4 T cells, CD8 T cells, and CD38+ B cells). The second layer further divided the major populations and eventually identified 14 cell types (CD14− HLADR− innate, CD38− B cells, CD4 central memory T cells, CD4 effector memory T cells, CD4 effector T cells, CD4 naïve T cells, CD8 central memory T cells, CD8 effector memory T cells, CD8 effector T cells, CD8 naïve T cells, Dendritic cells (DCs), monocytes, NKT, and CD38+ B cells) using nine markers (CD3, CD19, CD4, CD8a, CD38, CD14, CCR7, CD45RA, and HLA-DR) [31]. To evaluate the tool’s ability to identify rare populations, innate lymphoid cells (ILCs) and regulatory T cells (Tregs) (each with <3% of the total cell numbers) were selected by the manual gating based on 12 markers (CD3, CD19, CD4, CD8a, CD38, and CD14, CCR7, CD45RA, HLA-DR, CD25, FoxP3, and CD127).

Coherence of manual gating across five raters. (A) A hierarchical layer of the human PBMC populations gated by the selected markers. (B) t-SNE figures indicating the manual gating cell population in Layer 1 and Layer 2 by rater L.K. (C) Average pairwise kappa index among five raters with hierarchical clustering to illustrate the similarity of the raters based on their gating assignments. (D) Pairwise kappa index among five raters on CD4 T cells and CD4 naïve T cells.

In addition to the human PBMCs, the other three in-house datasets were gated into two hierarchical layers. For the NHP PBMC dataset, six samples were used and pooled together for the evaluation. Similar to the human PBMC dataset, the first layer included six major populations (CD14− innate, monocytes, NKT cells, CD8 T cells, CD4 T cells, and CD38+ B cells) using six markers (CD19, CD8a, CD14, CD38, CD4, and CD3). The second layer contained 13 clusters (DCs, monocytes, NKT, CD14- HLADR- innate, CD8 central memory T cells, CD8 effector memory T cells, CD8 effector T cells, CD8 naïve T cells, CD4 central memory T cells, CD4 effector memory T cells, CD4 effector cells, CD4 naïve T cells, and CD38+ B cells) gated by nine markers (CD19, HLA-DR, CD8a, CD14, CD45RA, CD38, CCR7_CD197, CD4, and CD3). For the human placental villi study, a total of 12 samples were analyzed. Two samples (ID1042 and ID1130) with the highest number of cells were selected as representative data. A pooled library merging all 12 samples was also used for evaluation. Similarly, for the NHP villi datasets, a total of 14 samples were analyzed. The top two samples (ID430 and ID437) and the pooled library were compared in this paper. For both human and NHP villi studies, the first layer includes 7 major populations (CD14− innate, monocytes, NKT cells, CD38- B cells, CD8 T cells, CD4 T cells, and CD38+ B cells) gated by six markers (CD18, CD8a, CD38, CD14, CD3, and CD4). The second layer contained 14 cell populations (CD4 central memory T cells, CD4 effector T cells, CD4 effector memory T cells, CD4 naïve T cells, CD8 central memory T cells, CD8 effector T cells, CD8 effector memory T cells, CD8 naïve T cells, DCs, monocytes, NKT cells, CD38- B cells, CD14- HLADR- innate, and CD38+ B cells) identified by nine markers (CD19, HLA-DR, CD8a, CD38, CD14, CD45RA, CD3, CCR7, and CD4). Supplementary Tables 2 and 3 describe the cell population and marker table in more detail.

Human and mouse bone marrow datasets were generated from previous studies and downloaded from a public database [29, 30]. For the human bone marrow study [29], one healthy donor was measured in the first dataset with 13 markers (Supplementary Table 3). Manual gating was available with 25 cell populations. To avoid rare populations, several subpopulations with fewer cells were merged or removed for our analysis. Eventually, 8 and 20 populations derived from manual gating and merging were used as the first and second layers of ground truth, respectively (Supplementary Table 2). In addition, two healthy donor samples were available for the second dataset where 32 markers were measured. On top of the original manual gating, we further removed or merged several smaller populations. Finally, we applied 7 and 10 populations as the first and second layers of underlying truth. For the mouse bone marrow datasets [30], 10 samples were available in total. We selected two mice (S01 and S02) and a pooled library of 10 samples as representative for the following study. This dataset measured 39 markers and manually gated cells into 7 and 24 populations for the first and second layers. The details of the cellular populations and markers used for gating and identification are described in Table 1 and Supplementary Tables 2 and 3.

Data format, preprocessing, and parameter setting

Cytometry data are commonly stored in FCS format, containing information on both metadata and marker expression. The associated metadata table generally describes experimental and channel information, such as marker name, marker description, and range information. The expression file is in an array or matrix format where each row represents an individual cell, and each column stands for a marker/channel. These channels correspond to fluorescent markers or heavy metals in flow or mass cytometry data, which have been described in the metadata table [13, 32, 33].

As the data preprocessing steps, all the negative intensities were trimmed at zero or very small random numbers close to zero (if the algorithms report an error when using multiple zeros as input). Cytometry data were further scaled and inverse hyperbolic sine–transformed (|${X}_{new}=\mathrm{asinh}\left(a+b\ast{X}_{old}\right)+c$|), with a = 0, b = 0.2, and c = 0) [34]. Tools were first applied to the full data with all cells. If the tool could not complete the run within 3 h, down-sampled data with 20 000 cells were used as an alternative. All the tools were run by default parameter settings except for the number of clusters. If the tool allowed for the specification of the number of clusters to be generated, the true number of clusters was used as input. For detailed scripts for preprocessing and running the tools, please refer to the script files deposited to GitHub (https://github.com/hung-ching-chang/GatingMethod_evalutation/) and Zenodo (https://zenodo.org/records/13851548).

Manual gating collected from five raters

The “fresh T-cells -” library from the human PBMC study was manually gated on the Cytobank [35] platform by five raters (L.K., J.T., O.O., S.S., and V.M.) who were asked to manually gate the immune cell populations based on their experience independently. No computational algorithm was allowed. Eventually, commonly identified cell populations by all the raters were selected for further evaluation. Based on the hierarchical gating structure in Fig. 2A, these cell populations were categorized into two layers, containing 7 and 14 populations for the first and second layers, respectively. The cell populations gated by LK in both layers were visualized by t-distributed stochastic neighbor embedding (t-SNE) [36] plots in Fig. 2B, and the gating results by the other four raters are shown in Supplementary Fig. 1. To quantitively evaluate the magnitude of agreement between the raters, both kappa index and ARI measurements were employed, as described in detail in the Methods section. A follow-up hierarchical clustering analysis was performed to group the raters with similar gating results based on cellular annotation in the two layers, respectively.

List of unsupervised clustering algorithms evaluated

Unsupervised clustering algorithms group cells into clusters based solely on their marker intensities, lacking the ability to assign the resulting clusters to known cell populations (Fig. 1). In a previous publication [11], we summarized 32 tools for unsupervised clustering, which were either specifically designed for cytometry data or for general clustering. Based on the number of input libraries, clustering methods are categorized by the tools that can work on individual samples and tools that need multiple libraries as input. This comparison study only focused on the former ones. As such, we quantitatively compared a total of 23 clustering methods (Table 2): ACCENSE [37], CCAST [38], ClusterX [32], CosTaL [39], Cytometree [40], densityCUT [41], DensVM [42], DEPECHE [43], FLOCK [44], flowClust [45], FlowGrid [46], flowMeans [47], flowPeaks [48], FlowSOM [49, 50], immunoClust [51], k-means [52], PAC-MAN [53], PhenoGraph [29], Rclusterpp [54], SamSPECTRAL [55], SPADE [56], SWIFT [57], and X-shift [30]. These tools have free publicly available software and are compatible with our parameter settings (see Data Format, Preprocessing, and Parameter Setting section). Manual gating was employed as the underlying truth to evaluate the performance of these unsupervised clustering tools.

| Data . | Major layer 1 . | Major layer 2 . | Major layer 1 . | Major layer 2 . | Rare . | Overall ranking . | Overall ranking . |

|---|---|---|---|---|---|---|---|

| Measurement | ARI | ARI | F-measure | F-measure | F-measure | Mean rank | Overall rank |

| ACCENSE | 22 | 22 | 22 | 15 | 13 | 18.8 | 21 |

| CCAST | 4 | 1 | 8 | 4 | 1 | 3.6 | 2 |

| ClusterX | 19 | 20 | 20 | 21 | 2 | 16.4 | 19 |

| CosTaL | 14 | 6 | 9 | 10 | 20 | 11.8 | 12 |

| Cytometree | 10 | 16 | 6 | 5 | 19 | 11.2 | 9 |

| densityCUT | 9 | 15 | 19 | 17 | 17 | 15.4 | 17 |

| DensVM | 13 | 7 | 10 | 10 | 11 | 10.2 | 7 |

| DEPECHE | 1 | 1 | 5 | 13 | 3 | 4.6 | 4 |

| FLOCK | 5 | 5 | 3 | 7 | 7 | 5.4 | 6 |

| flowClust | 2 | 9 | 1 | 6 | 5 | 4.6 | 4 |

| FlowGrid | 7 | 12 | 14 | 20 | 5 | 11.6 | 11 |

| flowMeans | 15 | 19 | 18 | 16 | 10 | 15.6 | 18 |

| flowPeaks | 11 | 17 | 17 | 19 | 8 | 14.4 | 15 |

| FlowSOM | 6 | 3 | 2 | 2 | 9 | 4.4 | 3 |

| immunoClust | 20 | 21 | 15 | 18 | 21 | 19 | 22 |

| k-means | 8 | 10 | 11 | 9 | 18 | 11.2 | 9 |

| PAC-MAN | 3 | 4 | 3 | 1 | 4 | 3 | 1 |

| PhenoGraph | 17 | 13 | 7 | 3 | 12 | 10.4 | 8 |

| Rclusterpp | 15 | 8 | 13 | 8 | 16 | 12 | 13 |

| SamSPECTRAL | 18 | 18 | 21 | 22 | 14 | 18.6 | 20 |

| SPADE | 21 | 10 | 15 | 14 | 15 | 15 | 16 |

| X-shift | 11 | 14 | 12 | 12 | 22 | 14.2 | 14 |

| Data . | Major layer 1 . | Major layer 2 . | Major layer 1 . | Major layer 2 . | Rare . | Overall ranking . | Overall ranking . |

|---|---|---|---|---|---|---|---|

| Measurement | ARI | ARI | F-measure | F-measure | F-measure | Mean rank | Overall rank |

| ACCENSE | 22 | 22 | 22 | 15 | 13 | 18.8 | 21 |

| CCAST | 4 | 1 | 8 | 4 | 1 | 3.6 | 2 |

| ClusterX | 19 | 20 | 20 | 21 | 2 | 16.4 | 19 |

| CosTaL | 14 | 6 | 9 | 10 | 20 | 11.8 | 12 |

| Cytometree | 10 | 16 | 6 | 5 | 19 | 11.2 | 9 |

| densityCUT | 9 | 15 | 19 | 17 | 17 | 15.4 | 17 |

| DensVM | 13 | 7 | 10 | 10 | 11 | 10.2 | 7 |

| DEPECHE | 1 | 1 | 5 | 13 | 3 | 4.6 | 4 |

| FLOCK | 5 | 5 | 3 | 7 | 7 | 5.4 | 6 |

| flowClust | 2 | 9 | 1 | 6 | 5 | 4.6 | 4 |

| FlowGrid | 7 | 12 | 14 | 20 | 5 | 11.6 | 11 |

| flowMeans | 15 | 19 | 18 | 16 | 10 | 15.6 | 18 |

| flowPeaks | 11 | 17 | 17 | 19 | 8 | 14.4 | 15 |

| FlowSOM | 6 | 3 | 2 | 2 | 9 | 4.4 | 3 |

| immunoClust | 20 | 21 | 15 | 18 | 21 | 19 | 22 |

| k-means | 8 | 10 | 11 | 9 | 18 | 11.2 | 9 |

| PAC-MAN | 3 | 4 | 3 | 1 | 4 | 3 | 1 |

| PhenoGraph | 17 | 13 | 7 | 3 | 12 | 10.4 | 8 |

| Rclusterpp | 15 | 8 | 13 | 8 | 16 | 12 | 13 |

| SamSPECTRAL | 18 | 18 | 21 | 22 | 14 | 18.6 | 20 |

| SPADE | 21 | 10 | 15 | 14 | 15 | 15 | 16 |

| X-shift | 11 | 14 | 12 | 12 | 22 | 14.2 | 14 |

Note: Tool SWIFT can only be applied to human PBMC data, so it's not included in the overall evaluation.

| Data . | Major layer 1 . | Major layer 2 . | Major layer 1 . | Major layer 2 . | Rare . | Overall ranking . | Overall ranking . |

|---|---|---|---|---|---|---|---|

| Measurement | ARI | ARI | F-measure | F-measure | F-measure | Mean rank | Overall rank |

| ACCENSE | 22 | 22 | 22 | 15 | 13 | 18.8 | 21 |

| CCAST | 4 | 1 | 8 | 4 | 1 | 3.6 | 2 |

| ClusterX | 19 | 20 | 20 | 21 | 2 | 16.4 | 19 |

| CosTaL | 14 | 6 | 9 | 10 | 20 | 11.8 | 12 |

| Cytometree | 10 | 16 | 6 | 5 | 19 | 11.2 | 9 |

| densityCUT | 9 | 15 | 19 | 17 | 17 | 15.4 | 17 |

| DensVM | 13 | 7 | 10 | 10 | 11 | 10.2 | 7 |

| DEPECHE | 1 | 1 | 5 | 13 | 3 | 4.6 | 4 |

| FLOCK | 5 | 5 | 3 | 7 | 7 | 5.4 | 6 |

| flowClust | 2 | 9 | 1 | 6 | 5 | 4.6 | 4 |

| FlowGrid | 7 | 12 | 14 | 20 | 5 | 11.6 | 11 |

| flowMeans | 15 | 19 | 18 | 16 | 10 | 15.6 | 18 |

| flowPeaks | 11 | 17 | 17 | 19 | 8 | 14.4 | 15 |

| FlowSOM | 6 | 3 | 2 | 2 | 9 | 4.4 | 3 |

| immunoClust | 20 | 21 | 15 | 18 | 21 | 19 | 22 |

| k-means | 8 | 10 | 11 | 9 | 18 | 11.2 | 9 |

| PAC-MAN | 3 | 4 | 3 | 1 | 4 | 3 | 1 |

| PhenoGraph | 17 | 13 | 7 | 3 | 12 | 10.4 | 8 |

| Rclusterpp | 15 | 8 | 13 | 8 | 16 | 12 | 13 |

| SamSPECTRAL | 18 | 18 | 21 | 22 | 14 | 18.6 | 20 |

| SPADE | 21 | 10 | 15 | 14 | 15 | 15 | 16 |

| X-shift | 11 | 14 | 12 | 12 | 22 | 14.2 | 14 |

| Data . | Major layer 1 . | Major layer 2 . | Major layer 1 . | Major layer 2 . | Rare . | Overall ranking . | Overall ranking . |

|---|---|---|---|---|---|---|---|

| Measurement | ARI | ARI | F-measure | F-measure | F-measure | Mean rank | Overall rank |

| ACCENSE | 22 | 22 | 22 | 15 | 13 | 18.8 | 21 |

| CCAST | 4 | 1 | 8 | 4 | 1 | 3.6 | 2 |

| ClusterX | 19 | 20 | 20 | 21 | 2 | 16.4 | 19 |

| CosTaL | 14 | 6 | 9 | 10 | 20 | 11.8 | 12 |

| Cytometree | 10 | 16 | 6 | 5 | 19 | 11.2 | 9 |

| densityCUT | 9 | 15 | 19 | 17 | 17 | 15.4 | 17 |

| DensVM | 13 | 7 | 10 | 10 | 11 | 10.2 | 7 |

| DEPECHE | 1 | 1 | 5 | 13 | 3 | 4.6 | 4 |

| FLOCK | 5 | 5 | 3 | 7 | 7 | 5.4 | 6 |

| flowClust | 2 | 9 | 1 | 6 | 5 | 4.6 | 4 |

| FlowGrid | 7 | 12 | 14 | 20 | 5 | 11.6 | 11 |

| flowMeans | 15 | 19 | 18 | 16 | 10 | 15.6 | 18 |

| flowPeaks | 11 | 17 | 17 | 19 | 8 | 14.4 | 15 |

| FlowSOM | 6 | 3 | 2 | 2 | 9 | 4.4 | 3 |

| immunoClust | 20 | 21 | 15 | 18 | 21 | 19 | 22 |

| k-means | 8 | 10 | 11 | 9 | 18 | 11.2 | 9 |

| PAC-MAN | 3 | 4 | 3 | 1 | 4 | 3 | 1 |

| PhenoGraph | 17 | 13 | 7 | 3 | 12 | 10.4 | 8 |

| Rclusterpp | 15 | 8 | 13 | 8 | 16 | 12 | 13 |

| SamSPECTRAL | 18 | 18 | 21 | 22 | 14 | 18.6 | 20 |

| SPADE | 21 | 10 | 15 | 14 | 15 | 15 | 16 |

| X-shift | 11 | 14 | 12 | 12 | 22 | 14.2 | 14 |

Note: Tool SWIFT can only be applied to human PBMC data, so it's not included in the overall evaluation.

List of auto-gating algorithms evaluated

Unsupervised clustering methods can identify cell clusters with distinct marker characteristics. However, without further annotation of cell populations, these clusters provide no biological insight. Manual annotation of cell clusters is time-consuming and biased. To overcome this problem, several automated annotation and cell-type identification algorithms have been developed. These auto-gating algorithms are designed to identify the resulting clusters from clustering algorithms based on either the prior knowledge of the relationship between lineage markers and the identity of cellular populations or learned from training datasets (Fig. 1). Our previous publication [11] summarized six auto-gating algorithms: DeepCyTOF [58], CyTOF linear classifier [59], ACDC [60], MP [61], OpenCyto [62], and flowLearn [63]. DeepCyTOF [58] utilizes deep learning techniques and training data to assign cells to known cell types. Likewise, the CyTOF liner classifier [59] applies training data to predict cell types based on linear discriminant analysis. In addition, ACDC [60] uses a prespecified marker matrix to guide the grouping of cells based on a semisupervised learning approach, and MP [61] employs a maker matrix to predict cell types through a Bayesian model. In this paper, we compared the performance of the above four methods, while OpenCyto and flowLearn were not evaluated since both of them are not fully automated and require user supervision. As the cell types could be further divided, the underlying truth for cell type identification is controversial and needs to be adjusted depending on the problem at hand. Therefore, to perform a comprehensive evaluation, two hierarchical layers of manually gated references were used in the manuscript.

Methods for performance evaluation

Definition

Cell population identification by manual gating is used as truth to evaluate the performance of unsupervised and supervised algorithms. For each individual or pooled library, a set of |$n$| cells |$S=\left\{{o}_1,{o}_2,\dots, {o}_n\right\}$| can be grouped into two partitions with |$r$| populations and |$c$| populations, defined as |$X=\left\{{X}_1,{X}_2,\dots, {X}_r\right\}$| and |$Y=\left\{{Y}_1,{Y}_2,\dots, {Y}_c\right\}$|. For the pairwise comparison between X and Y, the contingency table can be defined as

| |${Y}_1$| | |${Y}_2$| | … | |${Y}_c$| | Sum | |

| |${X}_1$| | |${n}_{11}$| | |${n}_{12}$| | … | |${n}_{1c}$| | |${a}_1$| |

| |${X}_2$| | |${n}_{21}$| | |${n}_{22}$| | … | |${n}_{2c}$| | |${a}_2$| |

| … | … | … | … | ||

| |${X}_r$| | |${n}_{r1}$| | |${n}_{r1}$| | … | |${n}_{rc}$| | |${a}_r$| |

| Sum | |${b}_1$| | |${b}_2$| | |${b}_c$| |

| |${Y}_1$| | |${Y}_2$| | … | |${Y}_c$| | Sum | |

| |${X}_1$| | |${n}_{11}$| | |${n}_{12}$| | … | |${n}_{1c}$| | |${a}_1$| |

| |${X}_2$| | |${n}_{21}$| | |${n}_{22}$| | … | |${n}_{2c}$| | |${a}_2$| |

| … | … | … | … | ||

| |${X}_r$| | |${n}_{r1}$| | |${n}_{r1}$| | … | |${n}_{rc}$| | |${a}_r$| |

| Sum | |${b}_1$| | |${b}_2$| | |${b}_c$| |

| |${Y}_1$| | |${Y}_2$| | … | |${Y}_c$| | Sum | |

| |${X}_1$| | |${n}_{11}$| | |${n}_{12}$| | … | |${n}_{1c}$| | |${a}_1$| |

| |${X}_2$| | |${n}_{21}$| | |${n}_{22}$| | … | |${n}_{2c}$| | |${a}_2$| |

| … | … | … | … | ||

| |${X}_r$| | |${n}_{r1}$| | |${n}_{r1}$| | … | |${n}_{rc}$| | |${a}_r$| |

| Sum | |${b}_1$| | |${b}_2$| | |${b}_c$| |

| |${Y}_1$| | |${Y}_2$| | … | |${Y}_c$| | Sum | |

| |${X}_1$| | |${n}_{11}$| | |${n}_{12}$| | … | |${n}_{1c}$| | |${a}_1$| |

| |${X}_2$| | |${n}_{21}$| | |${n}_{22}$| | … | |${n}_{2c}$| | |${a}_2$| |

| … | … | … | … | ||

| |${X}_r$| | |${n}_{r1}$| | |${n}_{r1}$| | … | |${n}_{rc}$| | |${a}_r$| |

| Sum | |${b}_1$| | |${b}_2$| | |${b}_c$| |

For |$i\in \left\{1,2,\dots, r\right\}$| and |$j\in \left\{1,2,\dots, c\right\}$|, |${n}_{ij}=\mid{X}_i\cap{Y}_j\mid$| represents the number of overlapping cells between partition |${X}_i$| and |${Y}_j$|, and |${a}_i$| and |${b}_j$|indicate |$\mid{X}_i\mid$|and |$\left|{Y}_j\right|$|, respectively.

When focusing on a certain population |$i$| from partition |$X$| and population |$j$| from partition |$Y$|, the 2-by-2 confusion matrix can be written as

| . | Prediction . | ||

|---|---|---|---|

| |${Y}_j$| . | |${}^{\neg }{Y}_j$| . | ||

| Truth | |${X}_i$| | TP (true positive) | FN (false negative) |

| |${}^{\neg }{X}_i$| | FP (false positive) | TN (true negative) | |

| . | Prediction . | ||

|---|---|---|---|

| |${Y}_j$| . | |${}^{\neg }{Y}_j$| . | ||

| Truth | |${X}_i$| | TP (true positive) | FN (false negative) |

| |${}^{\neg }{X}_i$| | FP (false positive) | TN (true negative) | |

| . | Prediction . | ||

|---|---|---|---|

| |${Y}_j$| . | |${}^{\neg }{Y}_j$| . | ||

| Truth | |${X}_i$| | TP (true positive) | FN (false negative) |

| |${}^{\neg }{X}_i$| | FP (false positive) | TN (true negative) | |

| . | Prediction . | ||

|---|---|---|---|

| |${Y}_j$| . | |${}^{\neg }{Y}_j$| . | ||

| Truth | |${X}_i$| | TP (true positive) | FN (false negative) |

| |${}^{\neg }{X}_i$| | FP (false positive) | TN (true negative) | |

In this paper, we employed the following measurements to indicate the agreement of the two partitions.

The ‘ARI’ aims to measure the agreement of two clustering partitions without cell population identification [64]. A value close to 1 indicates high consistency, while a value close to zero or even negative means decreased similarity. Assuming |$X$| represents the true populations identified by manual gating and |$Y$| the predicted groupings generated by clustering or auto-gating methods, the ARI is defined as

The ‘Kappa index’, also known as Cohen's kappa coefficient, is an indicator that measures the inter-rater reliability [65]. For a binary scenario, the kappa index can be defined as

‘F-measure’ (or F1 score) is an accuracy evaluation measurement to balance the precision and recall in binary conditions [66]. Assuming |$X$|represents the true population and |$Y$| the predicted groupings, the precision, recall, and F-measure are defined as follows:

Computing time evaluation

To benchmark the computing time, the “fresh T cells –” library from the human PBMC dataset was randomly down-sampled to 1000, 2000, 4000, 8000, 16 000, 32 000, 64 000, and 128 000 cells. Twenty-three unsupervised clustering and four supervised auto-gating methods were applied to these gradient cell numbers to evaluate both the performance and computing time. For tools where the number of clusters can be set as input, the true number of clusters was set. Otherwise, all the tools were run based on their default parameter settings (supplementary script files submitted to GitHub and Zenodo). Both clustering and auto-gating tools were run on the same Windows computer (Intel Core i7-8700 CPU @ 3.20GHz 3.19GHz, 16GB RAM, 64-bit operating system, x64-based processor) to reduce machine variability.

Results

Manual gating: consistency across multiple raters

Manual gating has been widely applied to cytometry data to group and annotate individual cells into populations of interest, where researchers have the flexibility to choose the markers and set up the cutoff to define cell populations. However, when performing manual gating, researchers often classify cells into populations based on their personal experience and preferred markers. Additionally, it’s rather arbitrary when one draws a line to split the populations. To test the consistency of manual gating across different raters, we invited five researchers from three different labs to perform manual gating independently and compare their performances on the “Fresh T cells – library” from the human PBMC dataset. When excluding the “other cells,” cells from the human PBMC study were grouped into 7 major clusters in Layer 1 and then further grouped into 14 clusters in Layer 2 (Fig. 2A). Figure 2B and Supplementary Fig. 1 illustrated the t-SNE plots of the cell populations gated by rater L.K. and all the other four researchers (J.T., O.O., S.S., and V.M.). To assess the gating similarity among the five raters, the kappa index was used as the evaluation method, where |$\kappa =1$|indicates complete agreement and |$\kappa =0$| means no agreement. As shown in Fig. 2C, the pairwise kappa index ranged from 0.44 to 0.86, showing a significant level of variation in manual gating by different experts. The highest kappa index was between LK and JT, 0.86 in Layer 1 and 0.76 in Layer 2. The lowest kappa index was the one between JT and OO in Layer 1 (0.49) and the one between LK and OO in Layer 2 (0.42). The kappa indexes in Layer 1 were higher than that in Layer 2 for the same pair, likely because the extra steps needed for the manual gating for Layer 2 introduced the variation. Furthermore, CD4 T cells and CD4 naïve T cells were selected to illustrate the agreement for individual cell populations between the raters in Fig. 2D. The same patterns were observed, though the actual numbers varied.

Given the raters were only instructed to gate the cell population by their own experience, the raters not only gated common populations differently but also focused on different sets of subpopulations. For example, LK gated 39 subpopulations in total, while OO gated 24 subpopulations, where FoxP3+ CD4 T-cells and GATA-3+ CD4 T cells were gated only by LK, while Th1 CD4 T cells and Th17 CD4 T cells were gated only by OO (Supplementary Table 4). We observed that the lines drawn to split the populations were subjective, and the raters had their own preferences in splitting the populations. These results gave rise to the need for more reproducible and less labor-intensive gating strategies, which motivated us to review the clustering and auto-gating tools in the following sections.

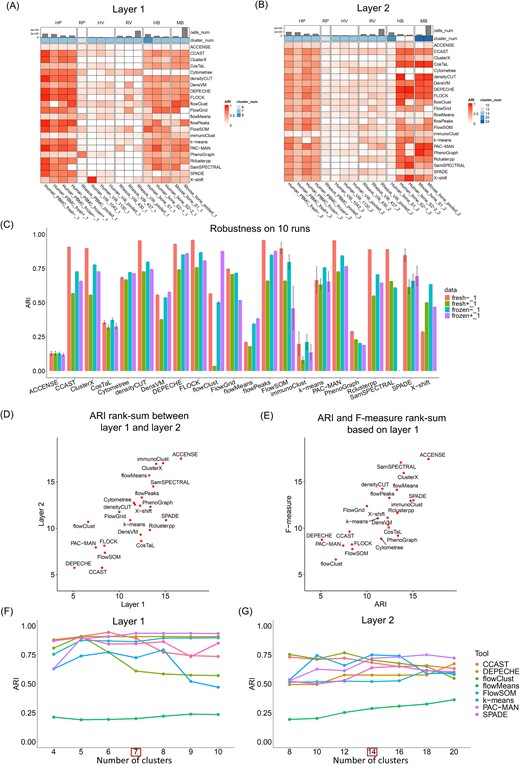

Clustering: detection of major cell populations

Unsupervised clustering refers to the computational method for cell grouping without population annotation. Thirty-two unsupervised clustering tools were reviewed and discussed in our previous study [11]. When we attempted to evaluate them comprehensively, 23 tools could be applied to individual datasets and installed successfully on our end (Table 2). To evaluate the performance of unsupervised clustering algorithms, we applied them to six datasets as described in Table 1. For each study, the major populations were identified hierarchically in two layers: Layer 1 represented top-level major populations, and Layer 2 included lower-level and detailed populations. Both the ARI and F-measure (described in the Methods section) were applied to evaluate the agreement between the clustering results for each tool and the manual gating truth. The ARI values for each dataset and method were illustrated in heatmaps with rows representing the tools and columns indicating datasets (Fig. 3A and B), where ARI = 1 indicated an exact match between the two groups, while an ARI value close to zero meant a lack of consistency. As illustrated by the heatmap color for ARI, results for both Layers 1 and 2 indicated overall high performance for the human PBMC and human bone marrow and mouse bone marrow studies, while comparatively lower performance for the rhesus PBMCs, human placental villi, and rhesus placental villi were observed. This was potentially due to human antibodies’ suboptimal staining of rhesus samples or the need for altered phenotyping in rhesus samples. Additionally, staining within the tissue (placental villi) as compared to blood resulted in less distinct marker differences than that obtained from PBMCs.

Major population detection by unsupervised clustering algorithms. (A) Performance of the clustering algorithms based on Layer 1 cell populations using the ARI measure. (B) Performance of the clustering algorithms based on Layer 2 cell populations using the ARI measure. (C) Robustness of the clustering algorithms on 10 runs using the ARI measure. (D) Rank-sum between Layer 1 and Layer 2 using the ARI measure. (E) Rank-sum between the ARI and F-measure based on Layer 1 data. (F) Performance of the clustering algorithms across different settings for the number of clusters on Layer 1 cell populations using the ARI measure. (G) Performance of the clustering algorithms across different settings for the number of clusters on Layer 2 cell populations using the ARI measure.

Many clustering algorithms include random initial steps to learn the grouping and subsequently cluster cells, which will result in distinct clustering outcomes for different runs. To check the robustness/consistency across multiple runs, all the tools were applied to the human PBMC dataset for 10 independent runs. As shown in Fig. 3C, the bar plot indicated the average and SD of the 10 repeated runs. Among these clustering algorithms, tools such as ACCENSE, CosTaL, FlowSOM, immunoClust, k-means, and SPADE presented larger variations among multiple runs, while the remaining tools generated comparatively consistent or even identical results.

When comparing the tool’s performance in detecting top-layer major populations and lower-layer detailed populations, we evaluated their performance against two hierarchical truths (described in the Methods section). For each truth layer, the rank-sum of the tool over multiple studies was calculated and averaged (Supplementary Table 5). Eventually, the average rank-sum per layer was compared (Fig. 3D), and the overall ranking was summarized in Table 2, where lower rank indicated higher consistency with the truth. When comparing tool performance between Layer 1 and Layer 2, Fig. 3D showed high agreement for most tools (close to the diagonal line), except for CCAST, CosTAL, DensVM, Rclusterpp, and SPADE. These five tools presented comparatively lower performance in Layer 1 but higher performance in Layer 2 (higher rank in Layer 1 than Layer 2). These tools tended to provide a larger number of clusters in their default parameter settings, which resulted in better detection of more detailed subcell populations than the major ones.

In addition to the ARI measure, the F-measure was also applied in our study as an alternative evaluation benchmark (see Methods section). Per tool-predicted cluster, the highest F-measure was recorded as the best match between the predicted cluster and the true cluster. Eventually, averaged F-measures were calculated to indicate the overall performance of the clustering algorithms. Supplementary Fig. 2 illustrates tool performance quantified by F-measure, and Table 2 summarizes their overall performance ranking. When comparing the performance consistency between multiple measurements, Fig. 3E showed the average rank-sum of the ARI and F-measure. The majority of the tools showed high agreement between the two measurements, while some tools showed comparatively higher performance for one measurement than the other. For example, CosTAL, PhenoGraph, Cytometree, and SPADE resulted in better performance by the F-measure but lower performance by the ARI measure. This may be due to the mapping between tool-predicted clusters with the ground truth. Generally, a tool yielding a larger number of clusters than the truth will tend to perform better by F-measure, which will choose the best cluster to match the truth. However, this tool will receive lower performance by ARI for punishing a large number of clusters. In conclusion, when considering both layer and measurement effects, PAC-MAN, FlowSOM, CCAST, flowClust, FLOCK, and DEPECHE performed overall the best for the selected datasets (Table 2 and Supplementary Table 5).

Clustering: setting the number of clusters

Setting an appropriate number of clusters is crucial for clustering cytometry data. Among the 23 tested algorithms, only eight tools allow users to directly set the number of clusters, namely, CCAST, DEPECHE, flowClust, flowMeans, FlowSOM, k-means, PAC-MAN, and SPADE. Other tools adjust clustering indirectly via parameters like resolution, settings for nearest neighbors, or other cell distance measures. To ensure a fair comparison, we only evaluated the performance of these eight tools using the “human PBMC fresh T cell –” library, with 7 and 14 manually gated clusters as the ground truth for the two hierarchical layers. For each algorithm, we set the gradient numbers of clusters close to the ground truth to evaluate their performances. As shown in Fig. 3F and G, most tools performed optimally at the true cluster number and had comparable performance under other settings. Generally, tools k-means, flowClust, and CCAST present larger variations of the performance across different numbers of clusters. Tool flowMeans presents a smaller variation in Layer 1 but a larger variation in Layer 2. As shown in Supplementary Fig. 2F and G, the F-measure was less sensitive to the cluster number than the ARI measure because it will select the optimal cluster to match the truth. In practice, without knowing the true number of cell populations, we suggest users set a cluster number close to the anticipated populations they want to detect. Alternatively, tools like INFLECT [67] have been developed specifically for FlowSOM to optimize the number of clusters. Borrowing techniques from tools designed to estimate the number of cell types in single-cell RNA-seq data [68] may also be beneficial.

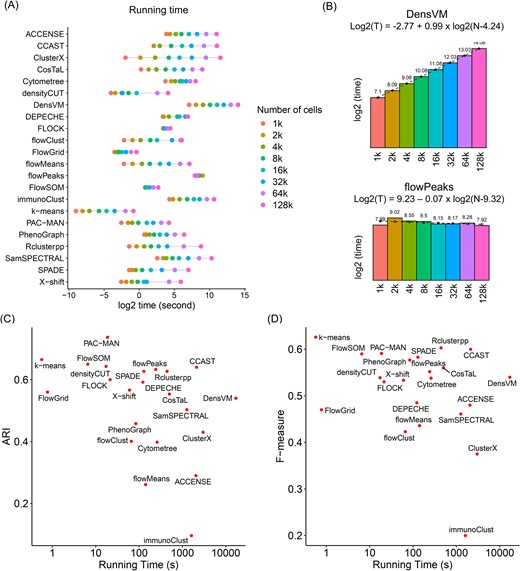

Clustering: computing time benchmarking

Besides clustering accuracy, computational cost is another factor in evaluating these tools. In this study, we selected the “human PBMC fresh T cells - library” as an example for benchmarking the computing time. Specifically, we subsampled cells from this dataset by log2 gradient numbers: 1k, 2k, 4k, 8k, 16k, 32k, 64k, and 128k. All the clustering algorithms were applied to these eight subsets based on default parameter settings to record their computing times. Figure 4A demonstrates the computing time (average of 10 runs) per tool per data subset. Among these tools, k-means, FlowGrid, and densityCUT overall consumed a shorter time for these datasets. To predict the running time for a larger number of cells, we fitted the computing time to logistic regression in terms of the number of cells (Fig. 4B and Supplementary Fig. 3). Except for flowPeaks (Fig. 4B), which consumed constant computing time across different datasets, all the other tools (for example, DensVM in Fig. 4B) resulted in longer computing time with an increasing number of cells. However, these tools yielded different increases in computing time for additional cells, which were reflected by the slope of the regression model. Tools such as FLOCK, FlowSOM, and FlowGrid achieved comparatively shorter computing costs for additional cells (with slope to be 0.12, 0.25, and 0.43 in Supplementary Fig. 3), indicating better compatibility to analyze larger datasets. In contrast, tools such as ClusterX and CCAST increased the amount of computing time fast (with slope to be 1.89 and 1.52 in Supplementary Fig. 3), which may result in much longer computing time for larger libraries.

Time benchmark for unsupervised clustering algorithms. (A) Time benchmark for clustering algorithms on gradient number of cells. (B) Computing time for two representative algorithms with linear (DensVM) and flat (flowPeaks) increasing speed. Bar graph represents the real running time, and the dashed line is for the predicted running time when fitting into the regression model. (C) The comparison between computing time and ARI performance. (D) The comparison between computing time and F-measure performance.

For an overall evaluation of the tools, Fig. 4C and D visualized the tool performance and the computing time simultaneously. In Fig. 4C, tools PAC-MAN, k-means, FlowSOM, densityCUT, FLOCK, and FlowGrid have higher ARIs with comparatively shorter computing time, while in Fig. 4D, tools k-means, FlowSOM, PAC-MAN, PhenoGraph, densityCUT, X-shift, and FLOCK yielded the best performance quantified by higher F-measure and lower computing cost.

Clustering: detection of rare populations

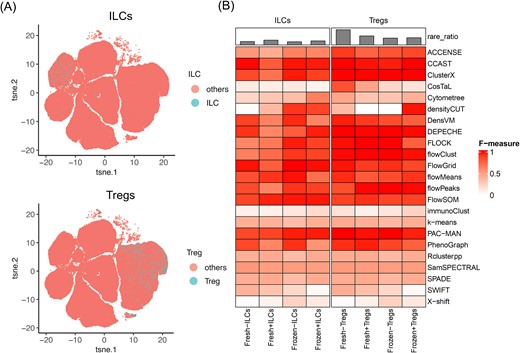

We referred to a “rare population” as a cell type with fewer cells compared with the major populations. Since each rare population only represents <3% of the whole library, it is easily missed when all the cells are clustered. To evaluate the capability of the clustering algorithms to identify rare populations, we selected two rare populations from the human PBMC dataset to illustrate (Fig. 5A): innate lymphoid cells (ILCs) and regulatory T cells (Tregs). The number of cells within each population and the markers applied are listed in Supplementary Tables 2 and 3. Their distributions to the whole libraries ae shown in the t-SNE plot in Fig. 5A. When aiming for rare population detection, parameters of the clustering tools were adjusted to detect a larger number of small clusters, rather than big clusters for the major population. The detailed settings are shown in the supplementary script files. F-measure was applied to evaluate the consistency between the clustering algorithms and the manual gating truth. As shown in Fig. 5B, the heatmap visualized the performance of 23 clustering algorithms in detecting these two rare populations across the four human PBMC libraries. In general, many tools resulted in overall high and robust performance across multiple libraries and for both of the rare populations, including ACCENSE, CCAST, ClusterX, DensVM, DEPECHE, FLOCK, flowClust, FlowGrid, flowMeans, flowPeaks, FlowSOM, PAC-MAN, and PhenoGraph.

Rare population detection by clustering algorithms. (A) Manual gating for the selection of the two rare populations: ILCs and Tregs. (B) Heatmap for the performance of the clustering algorithms on the rare population based on the F-measure.

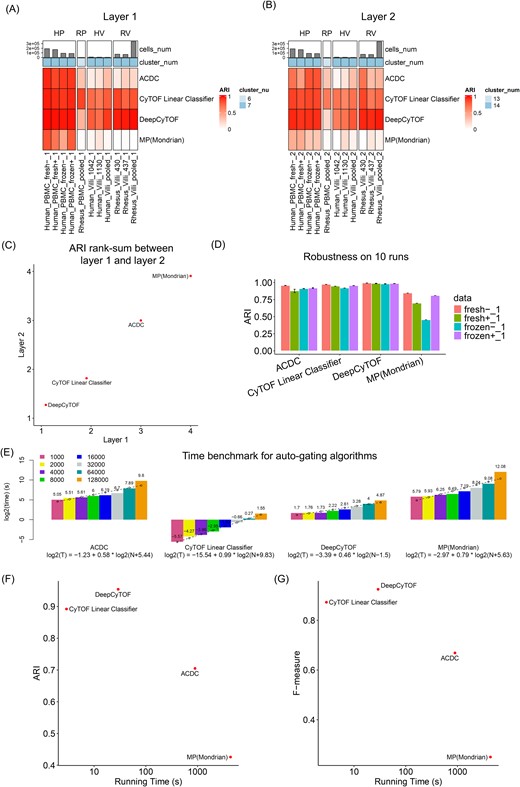

Auto-gating: prior knowledge preparation and performance evaluation

Supervised or semisupervised auto-gating methods refer to the algorithms that take in prior knowledge or training sets to train the model and then perform cell population identification based on these parameters. In this paper, we quantitatively reviewed four auto-gating methods: DeepCyTOF [58], CyTOF linear classifier [59], ACDC [60], and MP [61]. To check the agreement between these auto-gating algorithms and the manual gating truth, both ARI and F-measure were applied to evaluate the performance of these tools on the PBMC and the placental villi data. As shown in Fig. 6A, the heatmap indicated the ARI value for each tool (row) across each dataset (column) when using the Layer 1 manual gating as truth. Across all the datasets, DeepCyTOF and CyTOF Linear Classifier had an overall better performance, while ACDC and MP only performed well with the human PBMC data. The potential reason might be that the human PBMC dataset had a higher number of cells to cover a more robust set of different immune cell populations when compared with the other datasets. In addition to Layer 1, Layer 2 truth was applied and demonstrated similar patterns (Fig. 6B). The rank-sum comparison between Layers 1 and 2 is shown in Fig. 6C, where these four tools showed a high agreement of performance when applied to the two hierarchical truths, and DeepCyTOF and CyTOF Linear Classifier achieved high ARI consistently. To evaluate the robustness of these tools, we ran all the algorithms 10 times on the human PBMC data. As shown in Fig. 6D, all four auto-gating tools presented low variations across multiple runs. Besides the ARI measurement, a similar performance evaluation was measured by the F score, and the same conclusion can be drawn (Supplementary Fig. 4). Table 3 summarizes the rank-sum per measurement and their overall ranking, where DeepCyTOF and CyTOF Linear Classifier were the top two algorithms recommended based on our evaluation.

Population detection by supervised auto-gating algorithms. (A) Performance of the auto-gating algorithms based on Layer 1 cell populations using the ARI measure. (B) Performance of the auto-gating algorithms based on Layer 2 cell populations using the ARI measure. (C) Rank-sum between Layer 1 and Layer 2 using the ARI measure. (D) Robustness of the auto-gating algorithms on 10 runs using the ARI measure. (E) Time benchmark for auto-gating algorithms on gradient number of cells. Bar graph represents the real computing time, and the dashed line is for the predicted computing time when fitting into the regression model. (F) the comparison between computing time and ARI performance. (G) The comparison between computing time and F-measure performance.

| Data . | Major layer 1 . | Major layer 2 . | Major layer 1 . | Major layer 2 . | Overall ranking . | Overall ranking . |

|---|---|---|---|---|---|---|

| Measurement | ARI | ARI | F-measure | F-measure | Mean rank | Overall rank |

| ACDC | 3 | 3 | 3 | 3 | 3 | 3 |

| CyTOF Linear Classifier | 2 | 2 | 1 | 1 | 1.5 | 1 |

| DeepCyTOF | 1 | 1 | 2 | 2 | 1.5 | 1 |

| MP (Mondrian) | 4 | 4 | 4 | 4 | 4 | 4 |

| Data . | Major layer 1 . | Major layer 2 . | Major layer 1 . | Major layer 2 . | Overall ranking . | Overall ranking . |

|---|---|---|---|---|---|---|

| Measurement | ARI | ARI | F-measure | F-measure | Mean rank | Overall rank |

| ACDC | 3 | 3 | 3 | 3 | 3 | 3 |

| CyTOF Linear Classifier | 2 | 2 | 1 | 1 | 1.5 | 1 |

| DeepCyTOF | 1 | 1 | 2 | 2 | 1.5 | 1 |

| MP (Mondrian) | 4 | 4 | 4 | 4 | 4 | 4 |

| Data . | Major layer 1 . | Major layer 2 . | Major layer 1 . | Major layer 2 . | Overall ranking . | Overall ranking . |

|---|---|---|---|---|---|---|

| Measurement | ARI | ARI | F-measure | F-measure | Mean rank | Overall rank |

| ACDC | 3 | 3 | 3 | 3 | 3 | 3 |

| CyTOF Linear Classifier | 2 | 2 | 1 | 1 | 1.5 | 1 |

| DeepCyTOF | 1 | 1 | 2 | 2 | 1.5 | 1 |

| MP (Mondrian) | 4 | 4 | 4 | 4 | 4 | 4 |

| Data . | Major layer 1 . | Major layer 2 . | Major layer 1 . | Major layer 2 . | Overall ranking . | Overall ranking . |

|---|---|---|---|---|---|---|

| Measurement | ARI | ARI | F-measure | F-measure | Mean rank | Overall rank |

| ACDC | 3 | 3 | 3 | 3 | 3 | 3 |

| CyTOF Linear Classifier | 2 | 2 | 1 | 1 | 1.5 | 1 |

| DeepCyTOF | 1 | 1 | 2 | 2 | 1.5 | 1 |

| MP (Mondrian) | 4 | 4 | 4 | 4 | 4 | 4 |

In the next step, we aimed to check the computing cost of these auto-gating tools. Figure 6E indicates the computing time across a gradient number of cells for each algorithm (similar pipeline as was done for the clustering methods). DeepCYTOF achieved a low computing time and the lowest increasing slope (0.46) for the regression model among the four tools. CyTOF Linear Classifier resulted in the overall shortest computing time while maintaining a linear slope (0.99) for increasing time. When considering the tool’s computing time and performance at the same time, Fig. 6F and G illustrates that both DeepCyTOF and CyTOF Linear Classifier achieved the highest performance and shortest computing cost.

Discussion

This paper comprehensively investigated and compared three main categories of methods for analyzing cytometry data: manual gating, unsupervised clustering, and supervised auto-gating (Fig. 1). Among them, manual gating involves visually inspecting multidimensional plots of the data and drawing boundaries (gates) around populations of interest. This gating method is widely applied by expert researchers and can be operated with flexibility and transparency. However, manual gating has limitations when dealing with high-dimensional and large-scale datasets, and the results are subject to the researcher’s experience. In contrast to manual gating, unsupervised clustering and auto-gating methods are computer-assisted algorithms, which have the advantages of automation and reproducibility for large datasets, but face the limitations in transparency and parameter sensitivity. When distinguishing these two computational gating methods, clustering algorithms aim to group similar cells into clusters without predefined populations, while auto-gating tools are designed to mimic the manual gating process to identify and gate cell populations. Although many automated gating algorithms have been developed, manual gating and unsupervised clustering followed by manual annotation are the most widely used pipelines for cell population identification.

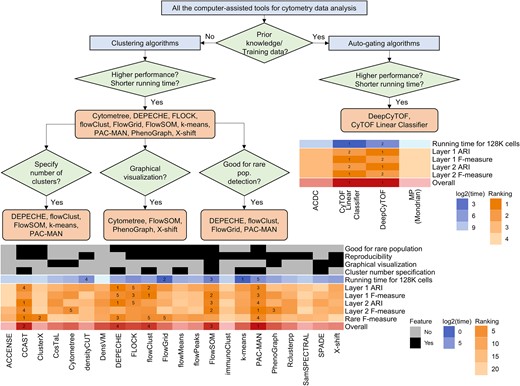

Overall recommendations

In this manuscript, we systematically evaluated 23 unsupervised clustering algorithms and 4 auto-gating tools (supervised or semisupervised). Tables 2 and 3 summarize the rank of these tools when applied to six cytometry datasets by both ARI and F-measure benchmarks and based on both Layers 1 and 2 truth. Figure 7 presents a workflow and comparison for an overall recommendation of the algorithms. Among all the computer-assisted tools, if no prior knowledge nor training data were available, unsupervised clustering tools were suggested. Among them, tools with higher performance and shorter computing time were recommended. Other specific recommendations were made as well. For example, if the users wanted to specify the number of clusters, tools such as DEPECHE, flowClust, FlowSOM, k-means, and PAC-MAN would be good options. If the researchers were interested in graphical visualization of the clustering results, tools such as Cytometree, FlowSOM, PhenoGraph, and X-shift could yield figures for hierarchical trees or gating networks. If the rare populations were the major focus of the study, we would recommend DEPECHE, flowClust, FlowGrid, and PAC-MAN. When considering all these factors and balancing accuracy and computing time, PAC-MAN, CCAST, and FlowSOM were the top three recommended tools. In addition to clustering algorithms, auto-gating methods were suggested for studies with prior knowledge of cell populations. In general, our evaluation study suggested DeepCyTOF and CyTOF Linear Classifier as they had the highest accuracy and shortest computing time.

Overall recommendation for the selection of computer-assisted algorithms. Workflow: recommendation of the cytometry tools for different applications. Heatmap for clustering tools: including tool features, rankings for computing time, accuracy evaluation, and overall accuracy ranking. Heatmap for auto-gating tools: rankings for computing time, accuracy evaluation, and overall accuracy ranking.

Depending on different research interests, some studies only focus on major cell types, while other studies aim to explore detailed subpopulations or even rare populations. In this study, we provided two hierarchical layers of truth: Layer 1 for major populations and Layer 2 for detailed types. When comparing the consistency of the gating results between the two layers, most of the tools had high agreement (Figs 3D and6C), while tools that generally yield a higher number of clusters tended to favor Layer 2 to detect more detailed populations. As an overall suggestion, if the researchers are interested in subpopulations or even rare populations, tools that can specify the number of clusters or perform well for rare populations are recommended (Figs 3 and 7). Meanwhile, selecting appropriate biomarkers is crucial for the detection of sub- and rare populations (Supplementary Table 3).

Among the six datasets, diverse samples were employed for tool comparison, including multiple tissue types (PBMCs, bone marrows, and placental villi), fresh and frozen samples, and samples from different species (human, nonhuman primate, and mouse). In general, PBMC and bone marrow samples outperformed the placental villi. This is likely due to marker expression on cells within the tissue being less distinct than in the bone marrow and the peripheral blood. As such, auto-gating for tissue samples is not recommended. In contrast, the difference between fresh and frozen PBMC samples was trivial, which indicated that this treatment wouldn’t influence the antibody capture in the cytometry experiment [26]. In addition, samples from three species were included in this study: human, rhesus, and mouse. The human samples performed the best, likely secondary to more optimized antibodies and better phenotyping.

Compared with existing review papers [11, 14, 15, 20–24] with a limited number of clustering tools and benchmarks, we provided a more systematic review and overall recommendations. For clustering, PAC-MAN ranks high in our study, but this tool was rarely discussed by the current review papers. Moreover, we evaluated the tools from the views of data types, multiple hierarchical layers, rare populations, settings for the number of clusters, and graphical visualizations. In addition, auto-gating highlights the promising future of cytometry data analysis, where our manuscript is the first attempt to comprehensively benchmark four cutting-edge tools in this field.

While this study aimed to provide a comprehensive evaluation of cytometry gating methods, several limitations remain to be addressed. First, given that clustering and auto-gating tools update quickly, we’ve tried our best to apply the newest version of the software. Tools that were no longer maintained, failed to install on our computer, or could not complete running on some datasets were not included in the final results. Second, although we attempted to include diverse datasets, the comparison of the results may differ by the specific datasets applied. In this study, both our in-house data and public datasets were employed to provide an unbiased evaluation. Third, the truth was generated by the manual gating of the most experienced research in our evaluation. As such, we only focused on the well-defined immune cell populations for the purpose of this study. Lastly, the comparison of auto-gating methods on rare populations was not performed, given the limitation of prior knowledge and training data.

Practical guidelines for computational cytometry data analysis

The experimental design for research and clinical applications of cytometry data was well summarized by previous research [4, 69, 70]. In general, the computational analysis of cytometry data involves three main steps: data preprocessing and normalization, gating and visualization, and downstream analysis. In preprocessing, tools like the R package flowClust [45] are used to import raw FCS files, followed by quality control (QC) to filter low-quality data, remove dead cells and outliers, and impute missing values. To scale the cytometry data, transformation, normalization, and batch effect correction will then be applied. All these preprocessing steps can be customized by many well-established QC and integration tools, including flowClust [45], PeacoQC [71], ANPELA [72], CATALYST [73], swiftReg [74], CytoNorm [75], CytofIn [76], and flowAI [77].

After preprocessing, gating will be performed to annotate the cell types of the single-cell cytometry data. This project has comprehensively reviewed manual-gating, 23 clustering, and 4 auto-gating approaches. In addition to the tools evaluated in this study, clustering tools such as Citrus [78] and CellCnn [79] are only compatible with multiple datasets. To optimize the clustering results, tools such as Phenograph and X-shift have the embedded algorithm to determine the optimal number of clusters. Meanwhile, tools such as INFLECT [67] are developed to identify the best number of clusters for FlowSOM. Based on QC and gating, multiple algorithms can be employed to visualize the high-dimensional cytometry data. For example, principal component analysis (PCA), t-distributed stochastic neighbor embedding (t-SNE), and Uniform Manifold Approximation and Projection (UMAP) are widely applied for dimension reduction. Tools such as CosTaL, Cytometree, FlowSOM, PhenoGraph, SPADE, and X-shift embed graphical learning and visualization algorithms.

Following this, multiple downstream analyses can be applied based on the research purpose and experimental design. For instance, differential abundance and state analysis aim to discover differentially expressed markers across different platforms, experimental conditions, or sample types within cell populations [80]. These analyses can be performed using tools Cydar [81], diffcyt [82], CytoGLMM [83], and CyEMD [80] following the single-cell clustering. Moreover, phenotypic comparisons of cell populations can be achieved by CytoCompare [84]. In addition to the tools that are focused on a specific step of cytometry data analysis, several pipelines have been well established for comprehensive analyses, including platforms such as CytoBank and FlowJo, and computational software like cytofkit [32], PICAFlow [85], CRUSTY [86], ImmunoCluster [87], Cyclone [88], CytoPipeline [89], CYANUS [80], and Cytofast [90].

Future opportunities for cytometry data analysis

Determining the number of clusters is a key challenge in unsupervised learning. In cytometry data analysis for single-cell clustering annotation, certain tools incorporate algorithms to optimize cluster numbers. For example, SPADE allows users to set a desired number of cluster k, while the tool selects the optimal number within the range of k/2 and 3 k/2 [56]. Tool INFLECT [67] is specifically designed to optimize the number of clusters for FlowSOM. However, few tools are tailored to general clustering algorithms for cytometry data. By leveraging the clustering algorithms developed for estimating the number of cell types in single-cell RNA-seq data [68], future methods specifically designed for cytometry data clustering will be developed to optimize the number of cell populations.