-

PDF

- Split View

-

Views

-

Cite

Cite

Qing Li, Zhihang Hu, Yixuan Wang, Lei Li, Yimin Fan, Irwin King, Gengjie Jia, Sheng Wang, Le Song, Yu Li, Progress and opportunities of foundation models in bioinformatics, Briefings in Bioinformatics, Volume 25, Issue 6, November 2024, bbae548, https://doi.org/10.1093/bib/bbae548

Close - Share Icon Share

Abstract

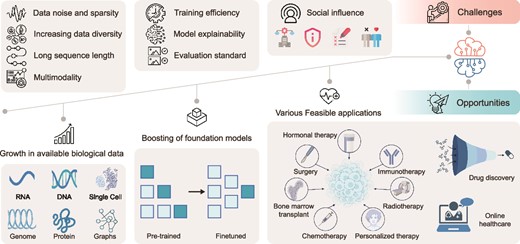

Bioinformatics has undergone a paradigm shift in artificial intelligence (AI), particularly through foundation models (FMs), which address longstanding challenges in bioinformatics such as limited annotated data and data noise. These AI techniques have demonstrated remarkable efficacy across various downstream validation tasks, effectively representing diverse biological entities and heralding a new era in computational biology. The primary goal of this survey is to conduct a general investigation and summary of FMs in bioinformatics, tracing their evolutionary trajectory, current research landscape, and methodological frameworks. Our primary focus is on elucidating the application of FMs to specific biological problems, offering insights to guide the research community in choosing appropriate FMs for tasks like sequence analysis, structure prediction, and function annotation. Each section delves into the intricacies of the targeted challenges, contrasting the architectures and advancements of FMs with conventional methods and showcasing their utility across different biological domains. Further, this review scrutinizes the hurdles and constraints encountered by FMs in biology, including issues of data noise, model interpretability, and potential biases. This analysis provides a theoretical groundwork for understanding the circumstances under which certain FMs may exhibit suboptimal performance. Lastly, we outline prospective pathways and methodologies for the future development of FMs in biological research, facilitating ongoing innovation in the field. This comprehensive examination not only serves as an academic reference but also as a roadmap for forthcoming explorations and applications of FMs in biology.

Introduction

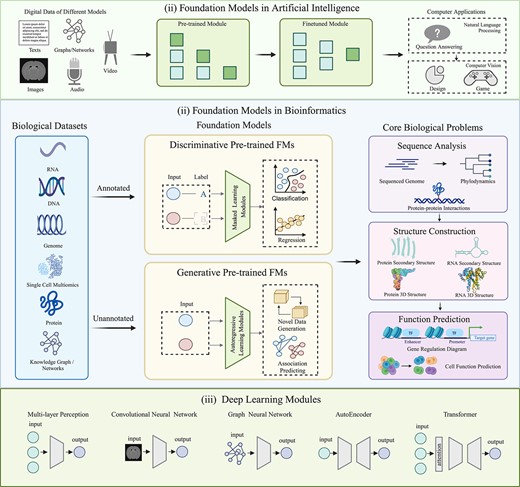

Bioinformatics endeavors to extract meaningful insights from diverse biological data sources such as amino acid sequences, protein structures, single-cell transcriptomics, biomedical text, and images. These efforts underpin critical applications including disease detection, drug design, and novel therapy discovery. However, their applicability often requires extensive customization for specific datasets over decades [1, 2]. Artificial intelligence (AI), powered by increasing data availability and computational resources, presents an alternative avenue for elucidating biological phenomena through the integration of deep learning mechanisms [3] such as multilayer perceptron (MLP) for nonlinear features [4], convolutional neural network (CNN) for image features [5], recurrent neural network for time series features [6], transformer for natural language features [7], graph neural network (GNN) for graph-represented features [8], and graph attention network for targeted graph features with distinct attention [9]. Foundation models (FMs) are inherently versatile, pretrained on a wide range of data to cater to multiple downstream tasks without requiring reinitialization of parameters. This broad pretraining, focusing on universal learning goals rather than task-specific ones, ensures adaptability in fine-tuning, few-shot, or zero-shot scenarios, significantly enhancing performance and garnering growing attention and popularity within the AI community [10]. General FMs undergo pretraining on digital data and are subsequently fine-tuned for specific computer applications of interest [Fig. 1 (i)]. They have emerged as the state-of-the-art approach for question answering [11], video games [12], AI education [13], medical AI [14], and other applications in computer science.

FMs in artificial intelligence (AI) and bioinformatics. (i) FMs in AI. General FMs are predominantly pretrained on diverse digital data and fine-tuned for various computer applications, such as question-answering systems, image design, and computer games. (ii) FMs in bioinformatics. FMs in bioinformatics primarily focus on core biological problems including biological sequence analysis, biological structure construction, and biological function prediction, encompassing both annotated and unannotated biological datasets. They can undergo pretraining on multiple phases of biological data for diverse downstream tasks. Based on the pretraining architectures of the foundation model, they can be classified into discriminative FMs, which capture complex patterns and relationships within annotated data through masking strategies for classification or regression tasks, and generative FMs, which focus on generating semantic features and context from novel data or predicting their associations. (iii) Deep learning modules. Deep learning modules are the cornerstone of building encoders and decoders in FMs. Commonly used modules include MLP, CNN, AutoEncoder (input is consistent with output), GCN (input with graph structure), and transformer (rectangle represents attention mechanism). All these deep learning modules can be trained in an end-to-end manner, enhancing computational efficiency through parallel processing mechanisms.

Recently, FMs have deciphered immense potential in bioinformatics. Compared with large language models (LLMs) that extract meaningful insights from biological texts with natural language processing (NLP) techniques, FMs provide essential groundwork for domain-specific applications, building blocks for specialized LLMs. It tailors LLMs for biological problems by fine-tuning process, narrowing the model representation gap between the general domain and the biological domain. A key strength of FMs lies in their capacity to learn accurate representations of intricate biological datasets. This is facilitated by data-intensive pretraining, a process that researchers can easily utilize for various downstream tasks with limited data through fine-tuning mechanisms (e.g. transfer learning for varying scale biological targets on a pretrained FM). This flexibility allows researchers to employ pretrained embeddings acquired from others to solve their targeting problems. In terms of reproducibility, discriminative FMs offer more robust generalizations in the translation of experimental inputs to outputs. Their flexibility and robustness surpass conventional methods, providing researchers with advantages in deciphering complex biological systems. Moreover, generative FMs can significantly improve biological performance by deeply probing a plethora of underutilized resources that are either unannotated or laden with noise [15]. The robust and reliable nature, along with the strong exploration and exploitation capacities and flexible adaptability to diverse downstream tasks, make FMs a compelling approach for addressing various biological challenges. These challenges include predicting unknown 3D structures or function annotations from sequences [16], discovering rare disease from limited data [17], and analyzing data affected by noise interference or overlap in bioinformatics.

Despite the widespread adoption of FMs across various research fields and industries, there remains a significant gap in a thorough comprehension of FMs in bioinformatics. Previous approaches have often relied on general domains of text/image/video/graph to analyze targets with the help of NLP, image processing, or graph learning methods [18]. However, these methods often fall short of meeting the needs of biological researchers. Typically, there exist at least three critical challenges that hindered the effective application of FMs in addressing biological problems. Firstly, general FMs, designed primarily for computer applications without biological expertise requirements, frequently suffer from model overfitting in bioinformatics. Secondly, most FMs heavily depend on versatile large-scale training datasets, which may not be applicable to domain-specific biological data [19]. As depicted by the futility theorem, predicted results of binding sites may not necessarily translate to functional outcomes in vivo, despite their high binding likelihood to in vitro sequences [20]. In many cases, solving a specific biological problem requires incorporating specialized insights derived from biological domain knowledge. Finally, the nonlinear deep features extracted from FMs in AI for biological tasks may face challenges regarding biological interpretability and model reliability due to their complex structures and the diverse nature of biological targets.

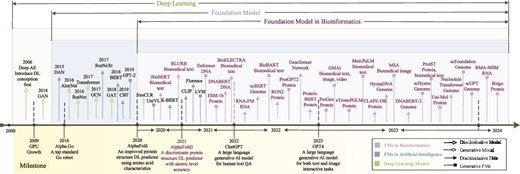

In this context, a review that encapsulates the state-of-the-art applications of FMs in bioinformatics is valuable and necessary to bridge the existing gap in a comprehensive understanding of these models, shedding light on their operational mechanisms, the contributions they have made, and the challenges and opportunities they present for future endeavors. This review specifically focuses on macromolecules rather than chemical micromolecules, addressing core biological problems such as sequence analysis, structure construction, and function prediction. In response to these challenges, we provide an extensive survey of FMs in bioinformatics. These models, trained in either a discriminative or generative manner, exhibit versatility in their applicability to downstream tasks, including biological multimedia analysis, core biological problems, scMultiomics analysis, and the integration of multimodal biological data. These challenges are intricately tied to various types of biological data, including DNA, RNA, proteins, and single-cell multi-omics data, as well as knowledge graphs/networks, and text/image data, as depicted in Table 1. Further, the evolutionary trajectory of FMs in bioinformatics, as presented in Fig. 2, underscores the development of network structures within deep learning, general FMs in AI, and FMs in bioinformatics with remarkable milestones.

Biological problems and their associated data in biological FMs. This table provides an overview of five distinct problems in bioinformatics addressed by biological FMs: multimedia analysis, core biological problems (sequence analysis, structure construction, function prediction), single-cell multi-omics (scMultiomics) analysis, and multimodal integration. These problems involve one or more categories of biological data, including DNA, RNA, proteins, scMultiomics, knowledge graphs/networks, and biological text/images. Biological multimedia analysis primarily focuses on biological text/image or video data. Core biological problems involve genes and mutations, biological phenomena data, and their relations and interactions. Multimodal integration biological problems may utilize multiple data types, such as biomedical text/images and proteins.

| Problems/Data . | Multimedia analysis . | Sequence analysis . | Construction . | Function prediction . | Multimodal integration . |

|---|---|---|---|---|---|

| DNA | √ | √ | |||

| RNA | √ | √ | |||

| Protein | √ | √ | √ | √ | |

| scMultiomics | √ | √ | √ | √ | |

| KGs/Net. | √ | √ | |||

| Text/Image | √ | √ | √ |

| Problems/Data . | Multimedia analysis . | Sequence analysis . | Construction . | Function prediction . | Multimodal integration . |

|---|---|---|---|---|---|

| DNA | √ | √ | |||

| RNA | √ | √ | |||

| Protein | √ | √ | √ | √ | |

| scMultiomics | √ | √ | √ | √ | |

| KGs/Net. | √ | √ | |||

| Text/Image | √ | √ | √ |

Biological problems and their associated data in biological FMs. This table provides an overview of five distinct problems in bioinformatics addressed by biological FMs: multimedia analysis, core biological problems (sequence analysis, structure construction, function prediction), single-cell multi-omics (scMultiomics) analysis, and multimodal integration. These problems involve one or more categories of biological data, including DNA, RNA, proteins, scMultiomics, knowledge graphs/networks, and biological text/images. Biological multimedia analysis primarily focuses on biological text/image or video data. Core biological problems involve genes and mutations, biological phenomena data, and their relations and interactions. Multimodal integration biological problems may utilize multiple data types, such as biomedical text/images and proteins.

| Problems/Data . | Multimedia analysis . | Sequence analysis . | Construction . | Function prediction . | Multimodal integration . |

|---|---|---|---|---|---|

| DNA | √ | √ | |||

| RNA | √ | √ | |||

| Protein | √ | √ | √ | √ | |

| scMultiomics | √ | √ | √ | √ | |

| KGs/Net. | √ | √ | |||

| Text/Image | √ | √ | √ |

| Problems/Data . | Multimedia analysis . | Sequence analysis . | Construction . | Function prediction . | Multimodal integration . |

|---|---|---|---|---|---|

| DNA | √ | √ | |||

| RNA | √ | √ | |||

| Protein | √ | √ | √ | √ | |

| scMultiomics | √ | √ | √ | √ | |

| KGs/Net. | √ | √ | |||

| Text/Image | √ | √ | √ |

Timeline of FMs in bioinformatics and their background in deep learning. The emergence of FMs in bioinformatics coincided with the ascent of deep learning, gaining significant momentum as these models showcased remarkable advancements in the era of big data. Landmark achievements such as Alpha Go, the first robot to meet top standards, significantly enriched the landscape of deep learning. Subsequent developments, exemplified by AlphaFold and AlphaFold2, revolutionized protein structure prediction from biological sequences. The introduction of GPT4 marked a pivotal moment, catalyzing a surge in the application of FMs. These strides propelled FMs (including discriminative FMs and generative FMs) in bioinformatics to acquire salient information for practical applications in biology.

This review delivers an in-depth exploration of recent advancements and challenges related to FMs, with a focus on cultivating a comprehensive understanding of biological issues. While the primary shift involves transitioning FMs from general domains to specialized biological multimedia domains, the review primarily concentrates on three core biological problems critical for analyzing the sequence, structure, and function of biological targets. Additionally, it highlights the significance of analyzing single-cell multi-omics and addressing multimodal integration biological problems, which involve the integration of multiple types of biological data to enhance performance further. The review concludes by deliberating on potential directions in light of current challenges and opportunities. In summary, the exploration of recent FMs in bioinformatics is structured across the following subsections: (i) FM architectures; (ii) biological FMs tailored for five types of biological problems, including the introduction of distinct problems and datasets, data preprocessing, and downstream tasks; (iii) challenges and opportunities; and (iv) conclusions.

Foundation model architectures

FMs has been primordially observed in NLP [21] and subsequently permeated into computer vision and various other domains of deep learning [22]. In bioinformatics, FMs trained on massive biological data offer unparalleled predictive capabilities through fine-tuning mechanisms. Based on pretraining modules, FMs in bioinformatics can be divided into two main categories: discriminative FMs are primarily designed to capture the semantic or biological meaning of entire sequences by constructing encoders that extract intricate patterns and relationships within annotated data through masked language modeling, resulting in meaningful embeddings. These models excel at tasks like classification and regression, where accurate predictions are derived from well-structured inputs. On the other hand, generative FMs focus on autoregressive methods to generate semantic features and contextual information from unannotated data. These models produce rich representations that are valuable for various downstream applications, particularly in generation tasks where the model must synthesize new data based on learned patterns. The complementary strengths of both discriminative and generative FMs highlight their versatility across a wide array of applications, from precise predictive modeling to creative content generation.

Discriminative pretrained foundation models

Conventional AI models are typically designed to train neural networks for specific tasks in an end-to-end manner, focusing on optimizing the model for a single task at a time. However, this approach often lacks generalizability and requires significant retraining when applied to new tasks. The advancements in NLP and word embeddings introduced a significant shift in this paradigm, exemplified by BERT (Bidirectional Encoder Representations from Transformers), which marked a breakthrough in embedding capabilities. BERT demonstrated substantial improvements in tasks like summarization, semantic understanding, and sentence classification, underscoring its ability to capture the overall semantic meaning of sequences.

As a discriminative model, BERT [23] leverages variations of masked language modeling during pretraining, where a portion of tokens is masked, and the model is trained to predict these masked tokens. The corresponding loss function is typically cross-entropy, applied to the masked tokens. This bidirectional context allows embeddings to fully capture the semantic nuances of a sequence. Discriminative pre-trained foundation models are particularly effective for classification and regression tasks, enabling a deeper understanding of complex biological processes in both normal and pathological states. These models are typically structured with encoder-only deep learning architectures that employ masking strategies on labeled inputs—such as words and characters—to extract relevant features. These features are then processed through self-attention mechanisms to effectively capture and interpret intricate relationships within the data, enhancing model performance across various applications.

Building on BERT’s success, discriminative pretrained FMs such as BERT have been adapted for specialized domains. For example, in the biomedical field, models like BioBERT [24], BLURB [25], and DNABERT [26] extend BERT’s pipeline to pretrain encoders specifically on biomedical text corpora. These models are designed to capture correlations and associations within large-scale biomedical data, supporting a wide range of downstream tasks such as entity recognition, relation extraction (RE), document classification, and question answering. ProteinBERT [27], for instance, introduces innovative strategies by replacing tokens and introducing false annotations during pretraining, compelling the model to accurately predict the correct sequence information even under challenging conditions. These advancements illustrate how discriminative pretrained FMs continue to evolve, offering robust solutions for increasingly complex and domain-specific applications.

Generative pretrained foundation models

Generative pretrained foundation models focus on generating coherent sequences by modeling the underlying distribution of the data. Unlike discriminative models, which primarily concentrate on understanding and classifying inputs, generative models are designed to predict the next token in a sequence, making them suitable for tasks that involve generating new content, such as text completion, translation, and content creation. These models are typically trained using autoregressive techniques, where each token is predicted based on previously generated tokens. A well-known example is GPT (Generative Pretrained Transformer), which generates text by predicting each word one at a time, conditioned on all the previous words. In the context of bioinformatics, generative models can be fine-tuned to produce meaningful sequences, such as protein or RNA structures, based on a given prompt. By learning from vast unannotated datasets, these models can generate outputs that are contextually rich and informative for various downstream applications. Generative pretrained FMs excel at capturing complex relationships within data, making them versatile tools for a wide array of tasks beyond classification, including synthesis, creativity, and exploratory data generation. Generative pretrained FMs usually tailor decoder-only modules, such as GPT-3 [28], and GPT-4 V [29], or encoder–decoder, such as ESM-2 [30], ESM3 [31], and T5 models [32], and are adept at simultaneously understanding and generating tasks. These tasks are achieved by optimizing an objective of bidirectional input autoregressive blank-filling, which involves a permuted language modeling approach [33]. For instance, the targeted encoder–decoder model, Enformer [34], predicts promoter–enhancer interactions directly from DNA sequences by leveraging substantial information flow within the CNN. ProtST [35] integrates mask prediction and representation alignment into the pretraining task, facilitating the modeling of paired protein sequences and textual descriptions. ESM3 demonstrates the potential of transformers to generate protein sequences and structures with new functions by training on data produced by evolution. As for the decoder-only model, ProtGPT2 [36] generates protein sequences that exhibit amino acid and disorder properties comparable to those found in natural proteins yet remain distinct from the existing protein space. Additionally, xTrimoPGLM [37] employs four distinct masking strategies to redesign the sequence of complementarity determining region 3 (CDR3).

As shown in Fig. 1 (iii), FMs consist of various neural network modules. MLP with multiple hidden layers in a feedforward neural network is suitable for regression and classification tasks. CNNs, like ResNet, excel in processing grid-like data for vision tasks. The graph convolutional network (GCN) handles graph-structured data, such as molecular graphs, by aggregating information from neighbors. AutoEncoder reduces data dimensions for their representations using encoder and decoder architectures. Transformer, initially proposed for NLP tasks, has positional encodings, self-attention, and multihead attention mechanisms. Generative pretrained FMs leverage these diverse modules for specific biological tasks. For example, the encoder–decoder model Bingo uses a transformer and the GNN for protein function prediction. Decoder-only models such as ProGen, xTrimoPGLM, and CLAPE-DB [38] combine transformer and the CNN for protein sequence, structure, and function analysis. In contrast, encoder-only models, e.g. BioBERT and HyenaDNA, use either transformer or MLP paired with the CNN to address biological text and DNA analysis tasks.

Remarkably, the choice of pretraining strategies holds significant importance in attaining optimal performance [39]. For example, CLAPE-DB combines a pretrained protein language model and constructive learning to predict DNA binding residues [40]. Similarly, HyenaDNA uses a sequence length scheduling technique to stabilize model pretraining and leverages longer context to better adapt to novel tasks [41]. Both discriminative and generative pretrained FMs possess the capability to update and pretrain neural networks via a back-propagation pipeline, leveraging the statistical outcomes of target variables and their estimated counterparts.

Tuning with foundation models

FMs, particularly in the context of LLMs and biological applications, provide several strategies for interaction depending on the specific needs of a task. These strategies range from zero-shot approaches requiring no additional training to more sophisticated methods that involve fine-tuning or conditional training [24, 25, 35, 41]. Understanding these mechanisms is key to maximizing the effectiveness of FMs across various applications, including bioinformatics.

Zero-shot learning (no-tuning)

Zero-shot learning enables FMs to perform tasks without any additional training or fine-tuning [25, 42]. In this approach, the model leverages the knowledge it has acquired during pretraining to handle entirely new tasks by relying solely on prompts or queries provided by the user. For instance, in a biological FM like Geneformer [17], BioBERT [24], or scGPT [42], zero-shot learning might be applied to infer the function of a protein sequence based solely on pre-existing general knowledge encoded in the model. This approach is particularly useful when there are limited annotated data available for fine-tuning, as it capitalizes on the model’s broad understanding of biological concepts.

Few-shot fine-tuning

Few-shot fine-tuning involves providing the model with a small number of labeled examples specific to a task [26, 27]. The model then adapts to the task through minimal additional training. In biological contexts, few-shot fine-tuning is valuable when a model needs to be specialized for niche applications, such as identifying rare genetic mutations or predicting highly specific protein–protein interactions (PPIs). Biological FMs like GMAI [14], BLURB [25], and Enformer [34] can be fine-tuned with just a few examples, making this approach both efficient and effective for tasks where obtaining large datasets is challenging.

Conditional training and prompt engineering

Conditional training involves adapting foundation models using carefully designed prompts or instructions that guide the model’s output based on task-specific conditions [35, 41]. In the realm of large language models and bioinformatics, this could mean designing prompts that instruct the model to generate hypotheses about molecular structures or to predict biological interactions based on a given sequence. Biological FMs such as ProtST [35], HyenaDNA [41], and ProGen [43] can be used in this approach, often combined with few-shot learning, to allow for more controlled outputs that are aligned with the desired application. Prompt engineering and conditional training are particularly effective when nuanced control over the model’s response is required.

Foundation models for biological problems

To implement FMs in bioinformatics appropriately, biological problems and datasets together with relevant data preprocessing and downstream tasks in biology will be elucidated at first. As this review concentrates on biological macromolecules (including DNA, RNA, and protein), single-cell genomics, knowledge graphs/networks, text/images, and FMs illustrated in this part are generally employed to solve problems in macromolecule biology. We introduce FMs as versatile tools capable of addressing practical biological problems, including multimedia, sequence analysis, structure construction, function prediction, single-cell multi-omics analysis, and multimodal integration. Each of these areas represents a unique challenge within the field of bioinformatics, and the application of FMs provides innovative approaches to these complex issues. Recent FMs in bioinformatics are summarized in Tables 2 and 3.

A summary of foundation models in bioinformatics. This table summarizes the model categories, targets, deep module types, and technical advancement of FMs for tackling biological problems (MA, multimedia analysis; SA, sequence analysis; SC, structure construction; FP, function prediction; MI, multimodal integration). FMs are categorized by their pretraining process: DM, discriminative model; GM, generative model. Target biological data types include DNA, RNA, protein, scMultiomics, biomedical text/image/video, and knowledge graph/network. Various deep modules enhance the performance or interpretability of FMs, such as MLP, multilayer perceptron; CNN, convolutional neural network; GNN, graph neural network, and transformer.

| Model name . | Biological problem . | Model category . | Targets . | Deep module type . | Technical advancement . | Author name, publication year . |

|---|---|---|---|---|---|---|

| BioBERT | MA | DM | Biomedical text | Transformer | Adapt for biomedical corpora by pretrained BERT on large-scale biomedical corpora | Lee et al., 2020 |

| BioELECTRA | MA | DM | Biomedical text | Transformer | A biomedical domain-specific language moBMAl introducing a replaced token prediction pretraining task with generator and discriminator network | Kanakarajan et al., 2021 |

| BLURB | MA | DM | Biomedical text | Transformer | Pretrain biomedical language model from scratch for a wide range of biomedical NLP tasks instead of using complex tagging schemes | Gu et al., 2021 |

| BioBART | MA | GM | Biomedical text | Transformer | A bidirectional and auto-regressive generative language model for biomedical natural language generation tasks along with corresponding data | Yuan et al., 2022 |

| Med-PaLM | MA | GM | Biomedical text | Transformer | Introduce HealthSearchQA dataset, propose a human evaluation framework, and present instruction prompt tuning for aligning LLMs to new domains using a few exemplars | Karan et al., 2023 |

| MSA | MA | GM | Biomedical graph | MLP | A medical image segmentation model that fine-tunes the pretrained SAM by integrating the medical-specific domain knowledge | Wu et al., 2023 |

| GMAI | MA | GM | Biomedical text, graph, video | Transformer | Adapt to new tasks due to the acceptance of inputs and production of outputs with varying combinations of data modalities | Moor et al., 2023 |

| DNABERT | SA, FP | DM | DNA | Transformer | Use pretrained bidirectional encoder representation to capture a global and transferrable understanding of genomic DNA sequences | Ji et al., 2021 |

| Enformer | SA | GM | DNA | Transformer | Use a larger receptive field to improve gene expression and promoter–enhancer interaction prediction | Avsec et al., 2021 |

| HyenaDNA | SA, SC | DM | DNA | MLP | CNN | Use a sequence length scheduling technique to stabilize training and leverage longer context length to adapt to novel tasks | Nguyen et al., 2023 |

| Nucleotide Transformer | SA | DM | DNA | Transformer | Build and pretrain foundational language models in genomics, across different genomic datasets and parameter sizes | Dalla-Torre et al., 2023 |

| ProteinBERT | SA, SC | DM | Protein | Transformer | Pretrain protein language model with gene ontology annotation prediction task for both local and global representations | Brandes et al., 2022 |

| ProtGPT2 | SA, SC | GM | Protein | Transformer | A generative language model trained on protein space to learn the protein language and produce sequences to sample any region | Ferruz et al., 2022 |

| DNABERT-2 | SA, FP | DM | DNA | Transformer | Adapt byte pair encoding to improve computational efficiency and employ multiple strategies to overcome input length constraints | Zhou et al., 2023 |

| ProGen | SA, SC | GM | Protein | CNN | Transformer | A protein language model trained on millions of raw protein sequences that generate artificial proteins across multiple families and functions | Madani et al., 2023 |

| xTrimoPGLM | SA, SC, FP | GM | Protein | CNN | Transformer | A pretraining framework to address protein understanding and generation tasks with joint optimization of the two types of objectives | Chen et al., 2023 |

| CLAPE-DB | SA, SC | DM | Protein | CNN | Transformer | Combines pre-trained protein language model and constructive learning to predict DNA binding residues | Liu et al., 2023 |

| Geneformer | SA, FP | DM | scMultiomics | Transformer | A context-aware, attention-based deep learning model pretrained on a large-scale corpus and can be transferred to diverse fine-tuning tasks | Theodoris et al., 2022 |

| scGPT | SA, SC, FP | GM | scMultiomics | Transformer | A single cell foundation model through generative pre-training on over 10 million cells stored by an in-memory data structure | Cui et al., 2023 |

| ESM-1b | SC, FP | GM | Protein | Transformer | Use an unsupervised deep language model to acquire protein structure and function directly from 250 million protein sequences | Rives et al., 2021 |

| AlphaFold2 | SC | DM | Protein | Transformer | Improve the AlphaFold by employing an SE(3)-equivariant transformer with an attention mechanism to represent their interactions and distances | Jumper et al., 2021 |

| RGN2 | SC | DM | Protein | Transformer | Combine a differentiable recurrent geometric network (RGN) with a transformer-based AminoBERT protein language model to generate backbone structures from unaligned proteins before refinement | Chowdhury et al., 2022 |

| Uni-Mol | SC | GM | Protein | Transformer | A 3D position predict model by a 3D molecular pre-training framework along with the candidate protein pre-training for various downstream tasks | Zhou et al., 2023 |

| RNA-FM | SC, FP | DM | RNA | Transformer | Use self-supervised learning to train 23 million non-coding RNA sequences and infer their sequential and evolutionary information | Chen et al., 2022 |

| UNI-RNA | SC, FP | DM | RNA | Transformer | A context-aware foundation model pretrained on an unprecedented scale of RNA sequences unraveling evolutionary and structural information | Wang et al., 2023 |

| RNA-MSM | SC, FP | GM | RNA | Transformer | An RNA language model effective at capturing evolutionary information from homologous sequences using a stack of MSA transformer blocks | Zhang et al., 2024 |

| Bingo | FP | GM | Protein | GNN | Transformer | A large language model and graph neural network (LLM-GNN) based adversarial training method for protein-coding genes prediction | Ma et al., 2024 |

| scFoundation | FP | GM | scMultiomics | Transformer | An extensive single-cell foundation model pre-trained on a dataset of over 50 million single-cell data points with 100 million parameters | Hao et al., 2023 |

| scHyena | FP | GM | scMultiomics | Transformer | A full-length scRNA-seq analysis in the brain by a linear adaptor layer and a bidirectional Hyena operator without losing raw data information | Oh et al., 2023 |

| scBERT | FP | DM | scMultiomics | Transformer | Use self-supervised learning on large-scale unannotated scRNA-seq data to improve the model’s generalizability and overcome the batch effect | Yang et al., 2022 |

| ProtST | FP, MI | GM | Protein, biomedical text | CNN | Transformer | A pretrained framework with three tasks of both protein and biomedical text to boost protein sequence understanding | Xu et al., 2023 |

| Model name . | Biological problem . | Model category . | Targets . | Deep module type . | Technical advancement . | Author name, publication year . |

|---|---|---|---|---|---|---|

| BioBERT | MA | DM | Biomedical text | Transformer | Adapt for biomedical corpora by pretrained BERT on large-scale biomedical corpora | Lee et al., 2020 |

| BioELECTRA | MA | DM | Biomedical text | Transformer | A biomedical domain-specific language moBMAl introducing a replaced token prediction pretraining task with generator and discriminator network | Kanakarajan et al., 2021 |

| BLURB | MA | DM | Biomedical text | Transformer | Pretrain biomedical language model from scratch for a wide range of biomedical NLP tasks instead of using complex tagging schemes | Gu et al., 2021 |

| BioBART | MA | GM | Biomedical text | Transformer | A bidirectional and auto-regressive generative language model for biomedical natural language generation tasks along with corresponding data | Yuan et al., 2022 |

| Med-PaLM | MA | GM | Biomedical text | Transformer | Introduce HealthSearchQA dataset, propose a human evaluation framework, and present instruction prompt tuning for aligning LLMs to new domains using a few exemplars | Karan et al., 2023 |

| MSA | MA | GM | Biomedical graph | MLP | A medical image segmentation model that fine-tunes the pretrained SAM by integrating the medical-specific domain knowledge | Wu et al., 2023 |

| GMAI | MA | GM | Biomedical text, graph, video | Transformer | Adapt to new tasks due to the acceptance of inputs and production of outputs with varying combinations of data modalities | Moor et al., 2023 |

| DNABERT | SA, FP | DM | DNA | Transformer | Use pretrained bidirectional encoder representation to capture a global and transferrable understanding of genomic DNA sequences | Ji et al., 2021 |

| Enformer | SA | GM | DNA | Transformer | Use a larger receptive field to improve gene expression and promoter–enhancer interaction prediction | Avsec et al., 2021 |

| HyenaDNA | SA, SC | DM | DNA | MLP | CNN | Use a sequence length scheduling technique to stabilize training and leverage longer context length to adapt to novel tasks | Nguyen et al., 2023 |

| Nucleotide Transformer | SA | DM | DNA | Transformer | Build and pretrain foundational language models in genomics, across different genomic datasets and parameter sizes | Dalla-Torre et al., 2023 |

| ProteinBERT | SA, SC | DM | Protein | Transformer | Pretrain protein language model with gene ontology annotation prediction task for both local and global representations | Brandes et al., 2022 |

| ProtGPT2 | SA, SC | GM | Protein | Transformer | A generative language model trained on protein space to learn the protein language and produce sequences to sample any region | Ferruz et al., 2022 |

| DNABERT-2 | SA, FP | DM | DNA | Transformer | Adapt byte pair encoding to improve computational efficiency and employ multiple strategies to overcome input length constraints | Zhou et al., 2023 |

| ProGen | SA, SC | GM | Protein | CNN | Transformer | A protein language model trained on millions of raw protein sequences that generate artificial proteins across multiple families and functions | Madani et al., 2023 |

| xTrimoPGLM | SA, SC, FP | GM | Protein | CNN | Transformer | A pretraining framework to address protein understanding and generation tasks with joint optimization of the two types of objectives | Chen et al., 2023 |

| CLAPE-DB | SA, SC | DM | Protein | CNN | Transformer | Combines pre-trained protein language model and constructive learning to predict DNA binding residues | Liu et al., 2023 |

| Geneformer | SA, FP | DM | scMultiomics | Transformer | A context-aware, attention-based deep learning model pretrained on a large-scale corpus and can be transferred to diverse fine-tuning tasks | Theodoris et al., 2022 |

| scGPT | SA, SC, FP | GM | scMultiomics | Transformer | A single cell foundation model through generative pre-training on over 10 million cells stored by an in-memory data structure | Cui et al., 2023 |

| ESM-1b | SC, FP | GM | Protein | Transformer | Use an unsupervised deep language model to acquire protein structure and function directly from 250 million protein sequences | Rives et al., 2021 |

| AlphaFold2 | SC | DM | Protein | Transformer | Improve the AlphaFold by employing an SE(3)-equivariant transformer with an attention mechanism to represent their interactions and distances | Jumper et al., 2021 |

| RGN2 | SC | DM | Protein | Transformer | Combine a differentiable recurrent geometric network (RGN) with a transformer-based AminoBERT protein language model to generate backbone structures from unaligned proteins before refinement | Chowdhury et al., 2022 |

| Uni-Mol | SC | GM | Protein | Transformer | A 3D position predict model by a 3D molecular pre-training framework along with the candidate protein pre-training for various downstream tasks | Zhou et al., 2023 |

| RNA-FM | SC, FP | DM | RNA | Transformer | Use self-supervised learning to train 23 million non-coding RNA sequences and infer their sequential and evolutionary information | Chen et al., 2022 |

| UNI-RNA | SC, FP | DM | RNA | Transformer | A context-aware foundation model pretrained on an unprecedented scale of RNA sequences unraveling evolutionary and structural information | Wang et al., 2023 |

| RNA-MSM | SC, FP | GM | RNA | Transformer | An RNA language model effective at capturing evolutionary information from homologous sequences using a stack of MSA transformer blocks | Zhang et al., 2024 |

| Bingo | FP | GM | Protein | GNN | Transformer | A large language model and graph neural network (LLM-GNN) based adversarial training method for protein-coding genes prediction | Ma et al., 2024 |

| scFoundation | FP | GM | scMultiomics | Transformer | An extensive single-cell foundation model pre-trained on a dataset of over 50 million single-cell data points with 100 million parameters | Hao et al., 2023 |

| scHyena | FP | GM | scMultiomics | Transformer | A full-length scRNA-seq analysis in the brain by a linear adaptor layer and a bidirectional Hyena operator without losing raw data information | Oh et al., 2023 |

| scBERT | FP | DM | scMultiomics | Transformer | Use self-supervised learning on large-scale unannotated scRNA-seq data to improve the model’s generalizability and overcome the batch effect | Yang et al., 2022 |

| ProtST | FP, MI | GM | Protein, biomedical text | CNN | Transformer | A pretrained framework with three tasks of both protein and biomedical text to boost protein sequence understanding | Xu et al., 2023 |

A summary of foundation models in bioinformatics. This table summarizes the model categories, targets, deep module types, and technical advancement of FMs for tackling biological problems (MA, multimedia analysis; SA, sequence analysis; SC, structure construction; FP, function prediction; MI, multimodal integration). FMs are categorized by their pretraining process: DM, discriminative model; GM, generative model. Target biological data types include DNA, RNA, protein, scMultiomics, biomedical text/image/video, and knowledge graph/network. Various deep modules enhance the performance or interpretability of FMs, such as MLP, multilayer perceptron; CNN, convolutional neural network; GNN, graph neural network, and transformer.

| Model name . | Biological problem . | Model category . | Targets . | Deep module type . | Technical advancement . | Author name, publication year . |

|---|---|---|---|---|---|---|

| BioBERT | MA | DM | Biomedical text | Transformer | Adapt for biomedical corpora by pretrained BERT on large-scale biomedical corpora | Lee et al., 2020 |

| BioELECTRA | MA | DM | Biomedical text | Transformer | A biomedical domain-specific language moBMAl introducing a replaced token prediction pretraining task with generator and discriminator network | Kanakarajan et al., 2021 |

| BLURB | MA | DM | Biomedical text | Transformer | Pretrain biomedical language model from scratch for a wide range of biomedical NLP tasks instead of using complex tagging schemes | Gu et al., 2021 |

| BioBART | MA | GM | Biomedical text | Transformer | A bidirectional and auto-regressive generative language model for biomedical natural language generation tasks along with corresponding data | Yuan et al., 2022 |

| Med-PaLM | MA | GM | Biomedical text | Transformer | Introduce HealthSearchQA dataset, propose a human evaluation framework, and present instruction prompt tuning for aligning LLMs to new domains using a few exemplars | Karan et al., 2023 |

| MSA | MA | GM | Biomedical graph | MLP | A medical image segmentation model that fine-tunes the pretrained SAM by integrating the medical-specific domain knowledge | Wu et al., 2023 |

| GMAI | MA | GM | Biomedical text, graph, video | Transformer | Adapt to new tasks due to the acceptance of inputs and production of outputs with varying combinations of data modalities | Moor et al., 2023 |

| DNABERT | SA, FP | DM | DNA | Transformer | Use pretrained bidirectional encoder representation to capture a global and transferrable understanding of genomic DNA sequences | Ji et al., 2021 |

| Enformer | SA | GM | DNA | Transformer | Use a larger receptive field to improve gene expression and promoter–enhancer interaction prediction | Avsec et al., 2021 |

| HyenaDNA | SA, SC | DM | DNA | MLP | CNN | Use a sequence length scheduling technique to stabilize training and leverage longer context length to adapt to novel tasks | Nguyen et al., 2023 |

| Nucleotide Transformer | SA | DM | DNA | Transformer | Build and pretrain foundational language models in genomics, across different genomic datasets and parameter sizes | Dalla-Torre et al., 2023 |

| ProteinBERT | SA, SC | DM | Protein | Transformer | Pretrain protein language model with gene ontology annotation prediction task for both local and global representations | Brandes et al., 2022 |

| ProtGPT2 | SA, SC | GM | Protein | Transformer | A generative language model trained on protein space to learn the protein language and produce sequences to sample any region | Ferruz et al., 2022 |

| DNABERT-2 | SA, FP | DM | DNA | Transformer | Adapt byte pair encoding to improve computational efficiency and employ multiple strategies to overcome input length constraints | Zhou et al., 2023 |

| ProGen | SA, SC | GM | Protein | CNN | Transformer | A protein language model trained on millions of raw protein sequences that generate artificial proteins across multiple families and functions | Madani et al., 2023 |

| xTrimoPGLM | SA, SC, FP | GM | Protein | CNN | Transformer | A pretraining framework to address protein understanding and generation tasks with joint optimization of the two types of objectives | Chen et al., 2023 |

| CLAPE-DB | SA, SC | DM | Protein | CNN | Transformer | Combines pre-trained protein language model and constructive learning to predict DNA binding residues | Liu et al., 2023 |

| Geneformer | SA, FP | DM | scMultiomics | Transformer | A context-aware, attention-based deep learning model pretrained on a large-scale corpus and can be transferred to diverse fine-tuning tasks | Theodoris et al., 2022 |

| scGPT | SA, SC, FP | GM | scMultiomics | Transformer | A single cell foundation model through generative pre-training on over 10 million cells stored by an in-memory data structure | Cui et al., 2023 |

| ESM-1b | SC, FP | GM | Protein | Transformer | Use an unsupervised deep language model to acquire protein structure and function directly from 250 million protein sequences | Rives et al., 2021 |

| AlphaFold2 | SC | DM | Protein | Transformer | Improve the AlphaFold by employing an SE(3)-equivariant transformer with an attention mechanism to represent their interactions and distances | Jumper et al., 2021 |

| RGN2 | SC | DM | Protein | Transformer | Combine a differentiable recurrent geometric network (RGN) with a transformer-based AminoBERT protein language model to generate backbone structures from unaligned proteins before refinement | Chowdhury et al., 2022 |

| Uni-Mol | SC | GM | Protein | Transformer | A 3D position predict model by a 3D molecular pre-training framework along with the candidate protein pre-training for various downstream tasks | Zhou et al., 2023 |

| RNA-FM | SC, FP | DM | RNA | Transformer | Use self-supervised learning to train 23 million non-coding RNA sequences and infer their sequential and evolutionary information | Chen et al., 2022 |

| UNI-RNA | SC, FP | DM | RNA | Transformer | A context-aware foundation model pretrained on an unprecedented scale of RNA sequences unraveling evolutionary and structural information | Wang et al., 2023 |

| RNA-MSM | SC, FP | GM | RNA | Transformer | An RNA language model effective at capturing evolutionary information from homologous sequences using a stack of MSA transformer blocks | Zhang et al., 2024 |

| Bingo | FP | GM | Protein | GNN | Transformer | A large language model and graph neural network (LLM-GNN) based adversarial training method for protein-coding genes prediction | Ma et al., 2024 |

| scFoundation | FP | GM | scMultiomics | Transformer | An extensive single-cell foundation model pre-trained on a dataset of over 50 million single-cell data points with 100 million parameters | Hao et al., 2023 |

| scHyena | FP | GM | scMultiomics | Transformer | A full-length scRNA-seq analysis in the brain by a linear adaptor layer and a bidirectional Hyena operator without losing raw data information | Oh et al., 2023 |

| scBERT | FP | DM | scMultiomics | Transformer | Use self-supervised learning on large-scale unannotated scRNA-seq data to improve the model’s generalizability and overcome the batch effect | Yang et al., 2022 |

| ProtST | FP, MI | GM | Protein, biomedical text | CNN | Transformer | A pretrained framework with three tasks of both protein and biomedical text to boost protein sequence understanding | Xu et al., 2023 |

| Model name . | Biological problem . | Model category . | Targets . | Deep module type . | Technical advancement . | Author name, publication year . |

|---|---|---|---|---|---|---|

| BioBERT | MA | DM | Biomedical text | Transformer | Adapt for biomedical corpora by pretrained BERT on large-scale biomedical corpora | Lee et al., 2020 |

| BioELECTRA | MA | DM | Biomedical text | Transformer | A biomedical domain-specific language moBMAl introducing a replaced token prediction pretraining task with generator and discriminator network | Kanakarajan et al., 2021 |

| BLURB | MA | DM | Biomedical text | Transformer | Pretrain biomedical language model from scratch for a wide range of biomedical NLP tasks instead of using complex tagging schemes | Gu et al., 2021 |

| BioBART | MA | GM | Biomedical text | Transformer | A bidirectional and auto-regressive generative language model for biomedical natural language generation tasks along with corresponding data | Yuan et al., 2022 |

| Med-PaLM | MA | GM | Biomedical text | Transformer | Introduce HealthSearchQA dataset, propose a human evaluation framework, and present instruction prompt tuning for aligning LLMs to new domains using a few exemplars | Karan et al., 2023 |

| MSA | MA | GM | Biomedical graph | MLP | A medical image segmentation model that fine-tunes the pretrained SAM by integrating the medical-specific domain knowledge | Wu et al., 2023 |

| GMAI | MA | GM | Biomedical text, graph, video | Transformer | Adapt to new tasks due to the acceptance of inputs and production of outputs with varying combinations of data modalities | Moor et al., 2023 |

| DNABERT | SA, FP | DM | DNA | Transformer | Use pretrained bidirectional encoder representation to capture a global and transferrable understanding of genomic DNA sequences | Ji et al., 2021 |

| Enformer | SA | GM | DNA | Transformer | Use a larger receptive field to improve gene expression and promoter–enhancer interaction prediction | Avsec et al., 2021 |

| HyenaDNA | SA, SC | DM | DNA | MLP | CNN | Use a sequence length scheduling technique to stabilize training and leverage longer context length to adapt to novel tasks | Nguyen et al., 2023 |

| Nucleotide Transformer | SA | DM | DNA | Transformer | Build and pretrain foundational language models in genomics, across different genomic datasets and parameter sizes | Dalla-Torre et al., 2023 |

| ProteinBERT | SA, SC | DM | Protein | Transformer | Pretrain protein language model with gene ontology annotation prediction task for both local and global representations | Brandes et al., 2022 |

| ProtGPT2 | SA, SC | GM | Protein | Transformer | A generative language model trained on protein space to learn the protein language and produce sequences to sample any region | Ferruz et al., 2022 |

| DNABERT-2 | SA, FP | DM | DNA | Transformer | Adapt byte pair encoding to improve computational efficiency and employ multiple strategies to overcome input length constraints | Zhou et al., 2023 |

| ProGen | SA, SC | GM | Protein | CNN | Transformer | A protein language model trained on millions of raw protein sequences that generate artificial proteins across multiple families and functions | Madani et al., 2023 |

| xTrimoPGLM | SA, SC, FP | GM | Protein | CNN | Transformer | A pretraining framework to address protein understanding and generation tasks with joint optimization of the two types of objectives | Chen et al., 2023 |

| CLAPE-DB | SA, SC | DM | Protein | CNN | Transformer | Combines pre-trained protein language model and constructive learning to predict DNA binding residues | Liu et al., 2023 |

| Geneformer | SA, FP | DM | scMultiomics | Transformer | A context-aware, attention-based deep learning model pretrained on a large-scale corpus and can be transferred to diverse fine-tuning tasks | Theodoris et al., 2022 |

| scGPT | SA, SC, FP | GM | scMultiomics | Transformer | A single cell foundation model through generative pre-training on over 10 million cells stored by an in-memory data structure | Cui et al., 2023 |

| ESM-1b | SC, FP | GM | Protein | Transformer | Use an unsupervised deep language model to acquire protein structure and function directly from 250 million protein sequences | Rives et al., 2021 |

| AlphaFold2 | SC | DM | Protein | Transformer | Improve the AlphaFold by employing an SE(3)-equivariant transformer with an attention mechanism to represent their interactions and distances | Jumper et al., 2021 |

| RGN2 | SC | DM | Protein | Transformer | Combine a differentiable recurrent geometric network (RGN) with a transformer-based AminoBERT protein language model to generate backbone structures from unaligned proteins before refinement | Chowdhury et al., 2022 |

| Uni-Mol | SC | GM | Protein | Transformer | A 3D position predict model by a 3D molecular pre-training framework along with the candidate protein pre-training for various downstream tasks | Zhou et al., 2023 |

| RNA-FM | SC, FP | DM | RNA | Transformer | Use self-supervised learning to train 23 million non-coding RNA sequences and infer their sequential and evolutionary information | Chen et al., 2022 |

| UNI-RNA | SC, FP | DM | RNA | Transformer | A context-aware foundation model pretrained on an unprecedented scale of RNA sequences unraveling evolutionary and structural information | Wang et al., 2023 |

| RNA-MSM | SC, FP | GM | RNA | Transformer | An RNA language model effective at capturing evolutionary information from homologous sequences using a stack of MSA transformer blocks | Zhang et al., 2024 |

| Bingo | FP | GM | Protein | GNN | Transformer | A large language model and graph neural network (LLM-GNN) based adversarial training method for protein-coding genes prediction | Ma et al., 2024 |

| scFoundation | FP | GM | scMultiomics | Transformer | An extensive single-cell foundation model pre-trained on a dataset of over 50 million single-cell data points with 100 million parameters | Hao et al., 2023 |

| scHyena | FP | GM | scMultiomics | Transformer | A full-length scRNA-seq analysis in the brain by a linear adaptor layer and a bidirectional Hyena operator without losing raw data information | Oh et al., 2023 |

| scBERT | FP | DM | scMultiomics | Transformer | Use self-supervised learning on large-scale unannotated scRNA-seq data to improve the model’s generalizability and overcome the batch effect | Yang et al., 2022 |

| ProtST | FP, MI | GM | Protein, biomedical text | CNN | Transformer | A pretrained framework with three tasks of both protein and biomedical text to boost protein sequence understanding | Xu et al., 2023 |

| Model name . | Model size . | Model task . | Model name . | Model size . | Model task . |

|---|---|---|---|---|---|

| BioBERT | 110 M/340 M | Biomedical text mining (NER, RE, QA) | ProGen | 1.2B | Stability prediction, remote homology detection, secondary structure prediction |

| BioELECTRA | 109 M | Biomedical text mining (NER, RE, QA) | ProGen2 | 6.4B | Functional sequence generation, protein fitness prediction |

| BLURB | Unknown | Biomedical NLP benchmark (QA, NER, parsing, etc.) | CLAPE-DB | Unknown | Protein–ligand-binding site prediction |

| BioBART | 139 M/400 M | Biomedical text generation (dialogue, summarization, NER) | Geneformer | 30 M | Sequence-based prediction |

| Med-PaLM | 12B/84B/562B | Medical question answering | scGPT | Unknown | Multibatch integration, multi-omic integration, cell-type annotation, genetic perturbation prediction, gene network inference |

| MSA | 30 M/100 M | Arabic NLP tasks (NER, POS tagging, sentiment analysis, etc.) | ESM-1b | 650 M | Supervised prediction of mutational effect and secondary structure |

| GMAI | Unknown | Generalist medical AI (multimodal tasks) | AlphaFold2 | 21 M | Protein structure prediction |

| DNABERT | 110 M | DNA sequence prediction (promoters, TFBSs, splice sites) | AlphaFold3 | 93 M | Protein structure prediction, structure of protein–protein interaction prediction |

| Enformer | Unknown | Gene expression prediction | RGN2 | 110 M | Protein design and analysis of allelic variation or disease mutations |

| HyenaDNA | 7 M | Genomic sequence modeling (regulatory elements, chromatin profiles) | Uni-Mol | 1.1B | 3D position recovery, masked atom prediction, molecular property prediction |

| Nucleotide Transformer | 500 M ~ 2.5B | DNA sequence analysis | RNA-FM | 99.52 M | RNA secondary structure prediction, distance regression task |

| ProteinBERT | 16 M | Bidirectional language modeling of protein sequences, Gene Ontology (GO) annotation prediction | UNI-RNA | 25 M/85 M/169 M/400 M | RNA structure and function prediction |

| ProtGPT | 1.6 M/25.2 M | Protein sequence generation | RNA-MSM | Unknown | RNA structure and function prediction |

| ProtGPT2 | 738 M | Protein sequence generation, structural similarity detection, stability prediction | Bingo | 8 ~ 15 M | Filling in randomly masked amino acids, generating residue-level feature matrix and protein contact map |

| xTrimoPGLM | 100B | Protein understanding and generation | scFoundation | 100 M | Gene expression enhancement, tissue drug response prediction, single-cell drug response classification, single-cell perturbation prediction |

| DNABERT-2 | 117 M | DNA sequence prediction | scHyena | Unknown | Cell type classification, scRNA-seq imputation |

| scBERT | Unknown | Single-cell RNA sequencing analysis | ProtST | 650 M | Unimodal mask prediction, multimodal representation alignment, multimodal mask prediction |

| Model name . | Model size . | Model task . | Model name . | Model size . | Model task . |

|---|---|---|---|---|---|

| BioBERT | 110 M/340 M | Biomedical text mining (NER, RE, QA) | ProGen | 1.2B | Stability prediction, remote homology detection, secondary structure prediction |

| BioELECTRA | 109 M | Biomedical text mining (NER, RE, QA) | ProGen2 | 6.4B | Functional sequence generation, protein fitness prediction |

| BLURB | Unknown | Biomedical NLP benchmark (QA, NER, parsing, etc.) | CLAPE-DB | Unknown | Protein–ligand-binding site prediction |

| BioBART | 139 M/400 M | Biomedical text generation (dialogue, summarization, NER) | Geneformer | 30 M | Sequence-based prediction |

| Med-PaLM | 12B/84B/562B | Medical question answering | scGPT | Unknown | Multibatch integration, multi-omic integration, cell-type annotation, genetic perturbation prediction, gene network inference |

| MSA | 30 M/100 M | Arabic NLP tasks (NER, POS tagging, sentiment analysis, etc.) | ESM-1b | 650 M | Supervised prediction of mutational effect and secondary structure |

| GMAI | Unknown | Generalist medical AI (multimodal tasks) | AlphaFold2 | 21 M | Protein structure prediction |

| DNABERT | 110 M | DNA sequence prediction (promoters, TFBSs, splice sites) | AlphaFold3 | 93 M | Protein structure prediction, structure of protein–protein interaction prediction |

| Enformer | Unknown | Gene expression prediction | RGN2 | 110 M | Protein design and analysis of allelic variation or disease mutations |

| HyenaDNA | 7 M | Genomic sequence modeling (regulatory elements, chromatin profiles) | Uni-Mol | 1.1B | 3D position recovery, masked atom prediction, molecular property prediction |

| Nucleotide Transformer | 500 M ~ 2.5B | DNA sequence analysis | RNA-FM | 99.52 M | RNA secondary structure prediction, distance regression task |

| ProteinBERT | 16 M | Bidirectional language modeling of protein sequences, Gene Ontology (GO) annotation prediction | UNI-RNA | 25 M/85 M/169 M/400 M | RNA structure and function prediction |

| ProtGPT | 1.6 M/25.2 M | Protein sequence generation | RNA-MSM | Unknown | RNA structure and function prediction |

| ProtGPT2 | 738 M | Protein sequence generation, structural similarity detection, stability prediction | Bingo | 8 ~ 15 M | Filling in randomly masked amino acids, generating residue-level feature matrix and protein contact map |

| xTrimoPGLM | 100B | Protein understanding and generation | scFoundation | 100 M | Gene expression enhancement, tissue drug response prediction, single-cell drug response classification, single-cell perturbation prediction |

| DNABERT-2 | 117 M | DNA sequence prediction | scHyena | Unknown | Cell type classification, scRNA-seq imputation |

| scBERT | Unknown | Single-cell RNA sequencing analysis | ProtST | 650 M | Unimodal mask prediction, multimodal representation alignment, multimodal mask prediction |

| Model name . | Model size . | Model task . | Model name . | Model size . | Model task . |

|---|---|---|---|---|---|

| BioBERT | 110 M/340 M | Biomedical text mining (NER, RE, QA) | ProGen | 1.2B | Stability prediction, remote homology detection, secondary structure prediction |

| BioELECTRA | 109 M | Biomedical text mining (NER, RE, QA) | ProGen2 | 6.4B | Functional sequence generation, protein fitness prediction |

| BLURB | Unknown | Biomedical NLP benchmark (QA, NER, parsing, etc.) | CLAPE-DB | Unknown | Protein–ligand-binding site prediction |

| BioBART | 139 M/400 M | Biomedical text generation (dialogue, summarization, NER) | Geneformer | 30 M | Sequence-based prediction |

| Med-PaLM | 12B/84B/562B | Medical question answering | scGPT | Unknown | Multibatch integration, multi-omic integration, cell-type annotation, genetic perturbation prediction, gene network inference |

| MSA | 30 M/100 M | Arabic NLP tasks (NER, POS tagging, sentiment analysis, etc.) | ESM-1b | 650 M | Supervised prediction of mutational effect and secondary structure |

| GMAI | Unknown | Generalist medical AI (multimodal tasks) | AlphaFold2 | 21 M | Protein structure prediction |

| DNABERT | 110 M | DNA sequence prediction (promoters, TFBSs, splice sites) | AlphaFold3 | 93 M | Protein structure prediction, structure of protein–protein interaction prediction |

| Enformer | Unknown | Gene expression prediction | RGN2 | 110 M | Protein design and analysis of allelic variation or disease mutations |

| HyenaDNA | 7 M | Genomic sequence modeling (regulatory elements, chromatin profiles) | Uni-Mol | 1.1B | 3D position recovery, masked atom prediction, molecular property prediction |

| Nucleotide Transformer | 500 M ~ 2.5B | DNA sequence analysis | RNA-FM | 99.52 M | RNA secondary structure prediction, distance regression task |

| ProteinBERT | 16 M | Bidirectional language modeling of protein sequences, Gene Ontology (GO) annotation prediction | UNI-RNA | 25 M/85 M/169 M/400 M | RNA structure and function prediction |

| ProtGPT | 1.6 M/25.2 M | Protein sequence generation | RNA-MSM | Unknown | RNA structure and function prediction |

| ProtGPT2 | 738 M | Protein sequence generation, structural similarity detection, stability prediction | Bingo | 8 ~ 15 M | Filling in randomly masked amino acids, generating residue-level feature matrix and protein contact map |

| xTrimoPGLM | 100B | Protein understanding and generation | scFoundation | 100 M | Gene expression enhancement, tissue drug response prediction, single-cell drug response classification, single-cell perturbation prediction |

| DNABERT-2 | 117 M | DNA sequence prediction | scHyena | Unknown | Cell type classification, scRNA-seq imputation |

| scBERT | Unknown | Single-cell RNA sequencing analysis | ProtST | 650 M | Unimodal mask prediction, multimodal representation alignment, multimodal mask prediction |

| Model name . | Model size . | Model task . | Model name . | Model size . | Model task . |

|---|---|---|---|---|---|

| BioBERT | 110 M/340 M | Biomedical text mining (NER, RE, QA) | ProGen | 1.2B | Stability prediction, remote homology detection, secondary structure prediction |

| BioELECTRA | 109 M | Biomedical text mining (NER, RE, QA) | ProGen2 | 6.4B | Functional sequence generation, protein fitness prediction |

| BLURB | Unknown | Biomedical NLP benchmark (QA, NER, parsing, etc.) | CLAPE-DB | Unknown | Protein–ligand-binding site prediction |

| BioBART | 139 M/400 M | Biomedical text generation (dialogue, summarization, NER) | Geneformer | 30 M | Sequence-based prediction |

| Med-PaLM | 12B/84B/562B | Medical question answering | scGPT | Unknown | Multibatch integration, multi-omic integration, cell-type annotation, genetic perturbation prediction, gene network inference |

| MSA | 30 M/100 M | Arabic NLP tasks (NER, POS tagging, sentiment analysis, etc.) | ESM-1b | 650 M | Supervised prediction of mutational effect and secondary structure |

| GMAI | Unknown | Generalist medical AI (multimodal tasks) | AlphaFold2 | 21 M | Protein structure prediction |

| DNABERT | 110 M | DNA sequence prediction (promoters, TFBSs, splice sites) | AlphaFold3 | 93 M | Protein structure prediction, structure of protein–protein interaction prediction |

| Enformer | Unknown | Gene expression prediction | RGN2 | 110 M | Protein design and analysis of allelic variation or disease mutations |

| HyenaDNA | 7 M | Genomic sequence modeling (regulatory elements, chromatin profiles) | Uni-Mol | 1.1B | 3D position recovery, masked atom prediction, molecular property prediction |

| Nucleotide Transformer | 500 M ~ 2.5B | DNA sequence analysis | RNA-FM | 99.52 M | RNA secondary structure prediction, distance regression task |

| ProteinBERT | 16 M | Bidirectional language modeling of protein sequences, Gene Ontology (GO) annotation prediction | UNI-RNA | 25 M/85 M/169 M/400 M | RNA structure and function prediction |

| ProtGPT | 1.6 M/25.2 M | Protein sequence generation | RNA-MSM | Unknown | RNA structure and function prediction |

| ProtGPT2 | 738 M | Protein sequence generation, structural similarity detection, stability prediction | Bingo | 8 ~ 15 M | Filling in randomly masked amino acids, generating residue-level feature matrix and protein contact map |

| xTrimoPGLM | 100B | Protein understanding and generation | scFoundation | 100 M | Gene expression enhancement, tissue drug response prediction, single-cell drug response classification, single-cell perturbation prediction |

| DNABERT-2 | 117 M | DNA sequence prediction | scHyena | Unknown | Cell type classification, scRNA-seq imputation |

| scBERT | Unknown | Single-cell RNA sequencing analysis | ProtST | 650 M | Unimodal mask prediction, multimodal representation alignment, multimodal mask prediction |

Biological problems and datasets

FMs can solve practical biological problems that fall within five categories: multimedia analysis, sequence analysis, structure construction, function prediction, single-cell multi-omics analysis, and multimodal integration. Core biological problems are sequence analysis, structure construction, and function prediction. Sequence analysis obtains salient gene information, such as the information of binding sites (commonly encoded as position weight matrices), PPIs, and gene expression, from gene and mutation sequence data DNA, RNA (in which each of four kinds of nucleotides is encoded as a one-hot vector like [1, 0, 0, 0]), protein, and genome (the complex of all the genetic information of an organism). Indeed, the information obtained from these models can be utilized to analyze various downstream tasks. For instance, gene expression embodies the functional regulation process of cells. The differences observed between single-cell genomics pave the way for the discovery of new cell types. Similarly, PPIs and their analogs (such as protein–nucleic acid interactions, protein–ligand interactions, and protein–small molecule interactions) encapsulate the physical binding information between them, providing valuable insights into their interactions. Sequential data are often included as annotations of higher-level data that further contain their synergistic or catalytic interactions.

Structure construction focuses on predicting the structures of proteins and RNA from the secondary structure to the quaternary structure based on the primary structure linear sequences of amino acids in a peptide or protein; the secondary structure contains α-helix, β-sheet with three strands, β-bend, Ω-loop, random coil architecture, and topology targets; the tertiary structure has hydrogen bonds, hydrophobic interactions, and tertiary contacts; and the quaternary structure represents a complex molecule structure. As these structures can be represented by statistical information among amino acid residues [44], many structure construction efforts are made on amino acids in DNA, single-cell genomics, and homologous protein families. Moreover, biological sequence data with different positions may have different functions. In this context, they can also be categorized under multiple sequence alignment (MSA) [16].

Function prediction related to biomedicine enables understanding functions of targets such as proteins and variants to predict polypharmacy side-effects, etc. Core biological data for solving this problem are proteins, individual genes, and their spatial interactions commonly encapsulated within knowledge graphs or networks. These networks represent various information indicated as gene interaction networks, disease pathways, patient networks, and networks that capture similarities between cells. Notably, the prediction of biological function is intrinsically linked to the outcomes of gene expression analysis, given that protein functionality is influenced by the degree of gene expression.

Multimedia analysis involves parsing biological problems by transforming the principles of natural language analysis and computer vision domains into biological areas. Hence, biomedical text like BioBERT [24] and 2D and 3D biomedical images such as microscopy images [19] make up major data in solving domain-specific problems. Multimodal integration biological problems can map multiple types of biological data encompassing multi-omics and morphological histology data, etc.

Data preprocessing

Data preprocessing is paramount to ensure satisfactory performance before building a model. Original biological datasets may contain multiple inconsistencies caused by varying purposes and acquisition technologies preventing them from being analyzed directly. Adoption of appropriately curated and preprocessed data without exorbitant data overlap, data deficiency, noise interference, or other unexplored data can improve model computational efficiency and representative ability with better model performance. Examples include doublet removal (without duplicate articles), cell-cycle variance removal, data imputation and denoising, dimensionality reduction (reducing the sequence similarity), representation learning, batch effect removal, normalization, background correction, etc.

Doublet removal can avoid mapping duplicate overlapping data with different identifiers or different data that share the same identifying barcode, which plays a significant role in constructing a unique set of relations between entities [45, 46]. Cell-cycle variance removal focuses on removing vain variations in gene expression between cells emerging along the cell cycle by subtracting out the cell cycle influence [47]. It is intractable for data imputation and denoising because only 6%–30% of values can be captured under different chemistry versions and depths, and to decipher “true” and “false” (called “dropout”) zeros in >70% missing values is a guarantee for further identification. To a certain degree, these data can be refined by leveraging similarities with other datasets or through the construction of multiple subneural networks for imputation [48]. Dimensionality reduction of wide gene expression profiles represented in high feature dimensions, also known as representation learning, aims at the construction of embeddings that facilitate the identification of data elements. Systematic variations specific to each batch tend to raise challenges in data integration and lead to significant data wastage. Tran et al. compared benchmarks of batch effect removal methods such as seGen (variational autoencoder neural network model and latent space), Scanorama (mutual nearest neighbor and panoramic stitching), and MND-ResNet (residual neural network for calibration) to effectively reduce the variations and batch effects in data captured with different times, types of equipment, or technologies [49]. Therefore, customized fine-tuning can correct sequencing batches from multiple datasets [42]. Protein data can be compared on a common scale by normalization to adjust the measurements [50], and background correction aims to correct for any background noise in the protein data [51].

With preprocessed biological data, the data analysis model can be efficiently employed and mitigate or even eliminate obstacles in biological tasks such as doublet detection and cell-cycle variance annotation. As a result, the judicious utilization of biological data and their corresponding embeddings can significantly enhance the performance of downstream tasks.

Downstream tasks

In bioinformatics, the analysis of downstream tasks is permitted to evolve through the application of fine-tuning strategies that are desired for accurate performance in analyzing biological problems of interest based on pretrained biological knowledge in FMs. Fine-tuning can greatly reduce computational time and barriers to their implementation and is capable of solving biological tasks related to sequence analysis, structure construction, function prediction, domain exploration, scMultiomics analysis, and multimodal integration.

For sequence analysis, besides traditional sequence alignment analysis [52], homology detection [53], and molecular evolutionary genetics analysis tasks [54], there are promoter interaction prediction, enhancer–promoter interaction prediction, variant identification, variant effect prediction, signal peptide prediction, gene dosage sensitivity predictions, genetic perturbation prediction, protein understanding, DNA replication, stability prediction, etc. Promoter prediction identifies promoter regions of motifs in transcription start sites of genome-wide sequences. Nonpromoter region samples can then be constructed by shuffling and keeping different parts of split promoter sequences with matching lengths. Enhancer–promoter interaction (EPI) prediction is essential in cell differentiation and can interpret noncoding mutation with potential pathogenicity [55]. EPIs are determined by chromatin conformation and thereby can be inferred by chromatin conformation capture-based techniques or other genetic approaches. In addition, the promoters and enhancers are also known as initial and distal regulatory elements, respectively [56].

Variant identification discloses human diseases and traits by distinguishing casual from noncasual variants [34]. Variant effect prediction focuses on determining functional important variants and giving priorities to them [57]. Signal peptide prediction is a binary protein sequence analysis that predicts their presence and locates their cleavage sites [27]. Gene dosage sensitivity predictions present genes that are sensitive to changes in their dosage interpreting copy number variants in genetic diagnosis [17]. Genetic perturbation prediction aims to forecast perturbed original values or perturbed gene expression values in certain tasks [42]. Protein understanding requires accurate representation at the residue level or protein level to understand biological information encoded within proteins [37]. The process of DNA replication is governed by specific initiation and termination sites, with the function of the origin of replication being modulated by epigenetic factors. This intricate process can be studied at a population level by leveraging nontransformed, highly proliferative, and karyotypically stable pluripotent stem cells. Stability prediction calls for statistical representations of protein informatics such as natural language–inspired representations [43].

Structure construction commonly performs secondary or tertiary structure prediction in downstream tasks. Secondary structure prediction was originally achieved by thermodynamic and alignment methods to determine the homologous sequences and their alignments [58]. 3D structures, by contrast, need further exploration due to the lack of 3D structure data, which may be constructed on the raised deep learning method. Moreover, other tasks related to DNA, RNA, protein, and genomics such as predicting DNA binding residues, protein–RNA binding preference, protein–ligand binding pose, splicing junction prediction, neuropeptide cleavage, genome structure and evolution, and gene network, underlie the discovery of their structure information as well. Predicting DNA- and RNA-binding proteins is essential for analyzing genetic variants [59]. Transcription factors (TFs) are binding proteins in regulating gene expression that can bind motifs (specific DNA sequences) to regulate transcription. Generally, pathogenic functional variants in complex neurodegenerative diseases occur with the change of TF-binding intensities [5]. PPI prediction aims at revealing bindings between proteins with transient or stable physical connections. Protein–small molecules and protein–nucleic acid interactions are significant prediction tasks that dominate organism activities [60].

Splicing junction prediction is crucial for protein synthesis and genetic disease identification, whose variant effects can be predicted with the integration of process-specific scores [61]. Neuropeptide cleavage is one of the post-translational modification binary prediction tasks where the maturation of neuropeptides occurs associated with molecule variability for behavioral and physiological states [27]. Genome structure represents genome-regulatory-element secondary structures, and evolution denotes the evolutionary trend of virus variants [58]. Gene network prediction can map networks based on learned connections between genes. Recently, a transfer learning method has been proposed to learn the connection with limited task-specific data showing a promising analysis for rare diseases [17].