-

PDF

- Split View

-

Views

-

Cite

Cite

Brighter Agyemang, Wei-Ping Wu, Daniel Addo, Michael Y Kpiebaareh, Ebenezer Nanor, Charles Roland Haruna, Deep inverse reinforcement learning for structural evolution of small molecules, Briefings in Bioinformatics, Volume 22, Issue 4, July 2021, bbaa364, https://doi.org/10.1093/bib/bbaa364

Close - Share Icon Share

Abstract

The size and quality of chemical libraries to the drug discovery pipeline are crucial for developing new drugs or repurposing existing drugs. Existing techniques such as combinatorial organic synthesis and high-throughput screening usually make the process extraordinarily tough and complicated since the search space of synthetically feasible drugs is exorbitantly huge. While reinforcement learning has been mostly exploited in the literature for generating novel compounds, the requirement of designing a reward function that succinctly represents the learning objective could prove daunting in certain complex domains. Generative adversarial network-based methods also mostly discard the discriminator after training and could be hard to train. In this study, we propose a framework for training a compound generator and learn a transferable reward function based on the entropy maximization inverse reinforcement learning (IRL) paradigm. We show from our experiments that the IRL route offers a rational alternative for generating chemical compounds in domains where reward function engineering may be less appealing or impossible while data exhibiting the desired objective is readily available.

Introduction

Identifying promising leads is crucial to the early stages of drug discovery. Combinatorial organic synthesis and high-throughput screening are well-known methods used to generate new compounds in the domain (drug and compound are used interchangeably in this study). This generation process is typically followed by an expert analysis, which focuses on the desired properties such as solubility, activity, pharmacokinetic profile, toxicity and synthetic accessibility, to ascertain the desirability of a generated compound. Compound generation and modification methods are useful for enriching chemical databases and scaffold hopping [1]. A review of the structural and analog entity evolution patent landscape estimates that the pharmaceutical industry constitutes |$70\%$| of the domain [2].

Indeed, the compound generation task is noted to be hard and complicated [3] considering that there exist |$10^{30}-10^{60}$| synthetically feasible drug-like compounds [4]. With about |$96\%$| [5] of the drug development projects failing due to unforeseen reasons, it is significant to ensure diversity in compounds that are desirable to avoid a fatal collapse of the drug discovery process. As a result of these challenges in the domain, there is a need for improved de novo compound generation methods.

In recent times, the proliferation of data, advances in computer hardware, novel algorithms for studying complex problems and other related factors have contributed significantly to the steady growth of data-driven methods such as deep learning (DL). DL-based approaches have been applied to several domains, such as natural language processing [6, 7], computer vision [8], proteochemometric modeling [9], compound and target representation learning [10, 11] and reaction analysis [12]. Consequently, there has been a growing interest in the literature to use data-driven methods to study the compound generation problem.

Deep reinforcement learning (DRL), generative adversarial networks (GANs) and transfer learning (TL) are some of the approaches that have been used to generate compounds represented using the simplified molecular-input line-entry system (SMILES) [13]. The DRL-based methods model the compound generation task as a sequential decision-making process and use reinforcement learning (RL) algorithms to design generators (agents) that estimate the statistical relationship between actions and outcomes. This statistical knowledge is then leveraged to maximize the outcome, thereby biasing the generator according to the desired chemical space. Motivated by the work in [14], GAN-based methods also model the compound generation task as a sequential decision-making problem but with a discriminator parameterizing the reward function. TL methods train a generator on a large dataset to increase the proportion of valid SMILES strings sampled from the generator before performing a fine-tuning training to bias the generator to the target chemical space.

Regarding DRL-based methods, [1] proposed a recurrent neural network (RNN)-based approach to train generative models for producing analogs of a query compound and compounds that satisfy certain chemical properties, such as activity to the dopamine receptor D2 (DRD2) target. The generator takes as input one-hot encoded representations of the canonical SMILES of a compound, and for each experiment, a corresponding reward function is specified. Also, [15] proposed a stack-augmented RNN-generative model using the REINFORCE algorithm [16] where, unlike [1], the canonical SMILES encoding of a compound is learned using backpropagation. The reward functions in [15] are parameterized by a prediction model that is trained separately from the generator. In both studies of [1] and [15], the generator was pretrained on a large SMILES dataset using a supervised learning approach before applying RL to bias the generator. Similarly, [17] proposed a SMILES- and graph-based compound generation model that adopts the supervised pretraining and subsequent RL biasing approach. Unlike [1] and [15], [17] assign intermediate rewards to valid incomplete SMILES strings. We posit that since an incomplete SMILES string could, in some cases, have meaning (e.g. moieties), assigning intermediate rewards could facilitate learning. While the DRL-based generative models can generate biased compounds, an accurate specification of the reward function is challenging and time-consuming in complex domains. In most interesting de novo compound generation scenarios, compounds meeting multiple objectives may be required, and specifying such multi-objective reward functions leads to the generator (agent) exploiting the straightforward objective and generating compounds with low variety.

In another vein, [3] trained an RNN model on a large dataset using supervised learning and then performed TL to the domain of interest to generate focused compounds. Since the supervised approach used to train the generator is different from the autoregressive sampling technique adopted at test time, such methods are not well suited for multi-step SMILES sampling [18]. This discrepancy is referred to as exposure bias. Additionally, methods such as [3] that maximize the likelihood of the underlying data are susceptible to learning distributions that place masses in low-density areas of the multivariate distribution giving rise to the underlying data.

On the other hand, [19] based on the work of [20] to propose a GAN-based generator that produces compounds matching some desirable metrics. The authors adopted an alternating approach to enable multi-objective RL optimization. As pointed out by [21], the challenges with training GAN cause a high rate of invalid SMILES strings, low diversity and reward function exploitation. In [22], a memory-based generator replaced the generator proposed by [19] in order to mitigate the problems in [19]. In these GAN-based methods, the authors adopted a Monte Carlo tree search (MCTS) method to assign intermediate rewards. However, GAN training could be unstable and the generator could get worse as a result of early saturation [23]. Additionally, the discriminator in the GAN-based models is typically discarded after training.

In this paper, we propose a novel framework for training a compound generator and learning a reward function from data using a sample-based deep inverse reinforcement learning (DIRL) objective [24]. We observe that while it may be daunting or impossible to accurately specify the reward function of some complex drug discovery campaigns to leverage DRL algorithms, sample of compounds that satisfy the desired behavior may be readily available or collated. Therefore, our proposed method offers a solution to develop in silico generators and recover reward function from compound samples. As pointed out by [25], the DIRL objective could lead to stability in training and producing effective generators. Also, unlike the typical GAN case where the discriminator is discarded, the sample-based DIRL objective is capable of training a generator and a reward function. Since the learned reward function succinctly represents the agent’s objective, it could be transferred to related domains (with a possible fine-tuning step). Moreover, since the binary cross-entropy (BCE) loss usually applied to train a discriminator does not apply in the case of the sample-based DIRL objective, this eliminates saturation problems in training the generator. The DIRL approach also mitigates the challenge of imbalance between different RL objectives.

Preliminaries

In this section, we review the concepts of RL and inverse reinforcement learning (IRL) related to this study.

Reinforcement learning

The aim of artificial intelligence (AI) is to develop autonomous agents that can sense their environment and act intelligently. Since learning through interaction is vital for developing intelligent systems, the paradigm of RL has been adopted to study several AI research problems. In RL, an agent receives an observation from its environment, reasons about the received observation to decide on an action to execute in the environment and receives a signal from the environment as to the usefulness of the action executed in the environment. This process continues in a trial-and-error manner over a finite or infinite time horizon for the agent to estimate the statistical relationship between the observations, actions and their results. This statistical relationship is then leveraged by the agent to optimize the expected signal from the environment.

Formally, an RL agent receives a state |$s_t$| from the environment and takes an action |$a_t$| in the environment leading to a scalar reward |$r_{t+1}$| in each time step |$t$|. The agent’s behavior is defined by a policy |$\pi (a_t|s_t)$|, and each action performed by the agent transitions the agent and the environment to a next state |$s_{t+1}$| or a terminal state |$s_T$|. The RL problem is modeled as a Markov decision process (MDP) consisting of

a set of states |$\mathcal{S}$|, with a distribution over starting states |$p(s_0)$|;

a set of actions |$\mathcal{A}$|;

state transition dynamics function |$\mathcal{T}(s_{t+1}|s_t, a_t)$| that maps a state-action pair at a time step |$t$| to a distribution of states at time step |$t+1$|;

a reward function |$\mathcal{R}(s_t,a_t)$| that assigns a reward to the agent after taking action |$a_t$| in state |$s_t$|;

a discount factor |$\gamma \in [0,1]$| that is used to specify a preference for immediate and long-term rewards. Also, |$\gamma < 1$| ensures that a limit is placed on the time steps considered in the infinite horizon case.

On the other hand, policy search/gradient algorithms directly learn the policy |$\pi $| of the agent instead of indirectly estimating it from value-based methods. Specifically, policy-based methods optimize the policy |$\pi (a|s,\theta )$|, where |$\theta $| is the set of parameters of the model approximating the true policy |$\pi ^*$|. We review the policy gradient (PG) methods used in this study below.

PG optimization

Proximal policy optimization

Schulman et al. [28] proposed a robust and data efficient policy gradient algorithm as an alternative to prior RL training methods such as the REINFORCE, the Q-learning [29] and the relatively complicated trust region policy optimization (TRPO) [30]. The proposed method, named proximal policy optimization (PPO), shares some similarities with the TRPO in using the ratio between the new and old policies scaled by the advantages of actions to estimate the policy gradient instead of the logarithm of action probabilities (as seen in Equation (5)). While TRPO uses a constrained optimization objective that requires the conjugate gradient algorithm to avoid large policy updates, PPO uses a clipped objective that forms a pessimistic estimate of the policy’s performance to avoid destructive policy updates. These attributes of the PPO objective enables performing multiple epochs of policy update with the data sampled from the environment. In REINFORCE, such multiple epochs of optimization often leads to destructive policy updates. Thus, PPO is also more sample efficient than the REINFORCE algorithm.

Inverse reinforcement learning

IRL is the problem of learning the reward function of an observed agent, given its policy or behavior, thereby avoiding the manual specification of a reward function [31]. The IRL class of solutions assumes the following MDP|$\setminus R_E$|:

a set of states |$\mathcal{S}$|, with a distribution over starting states |$p(s_0)$|;

a set of actions |$\mathcal{A}$|;

state transition dynamics function |$\mathcal{T}(s_{t+1}|s_t, a_t)$| that maps a state-action pair at a time step |$t$| to a distribution of states at time step |$t+1$|;

a set of demonstrated trajectories

|$\mathcal{D}=\left \{\left <(s_0^i,a_0^i),...,(s_{T-1}^i,a_{T-1}^i)\right>_{i=1}^N\right \}$| from the observed agent or expert;

a discount factor |$\gamma \in [0,1]$| may be used to discount future rewards.

The goal of IRL then is to learn the reward function |$R_E$| that best explains the expert demonstrations. It is assumed that the demonstrations are perfectly observed and that the expert follows an optimal policy.

While learning reward functions from data is appealing, the IRL problem is ill-posed since there are many reward functions under which the observed expert behavior is optimal [31, 32]. An instance is a reward function that assigns 0 (or any constant value) for all selected actions; in such a case, any policy is optimal. Other main challenges are the accurate inference, generalizability, correctness of prior knowledge and computational cost with an increase in problem complexity.

Methods

Problem statement

We consider the problem of training a model |$G_\theta (a_t|s_{0:t})$| to generate a set of compounds |$\mathbf{C}=\left \{c_1, c_2,..., c_M|M\in \mathbb{N}\right \}$|, each encoded as a valid SMILES string, such that |$\mathbf{C}$| is generally biased to satisfy a predefined criteria that could be evaluated by a function |$E$|. Considering the sequential nature of a SMILES string, we study this problem in the framework of IRL following the MDP described in Section 2.2; instead of the RL approach mostly adopted in the literature on compound generation, a parameterized reward function |$R_\psi $| has to be learned from |$\mathcal{D}$|, which is a set of SMILES that satisfy the criteria evaluated by |$E$|, to train |$G_\theta $| following the MDP in Section 2.1. Note that in the case of SMILES generation, |$\mathcal{T}(s_{t+1}|s_t, a_t)=1$|.

In this context, the action space |$\mathcal{A}$| is defined as the set of unique tokens that are combined to form a canonical SMILES string and the state space |$\mathcal{S}$| is the set of all possible combinations of these tokens to encode a valid SMILES string, each of length |$l\in [1, T], T\in \mathbb{N}$|. We set |$s_0$| to a fixed state that denotes the beginning of a SMILES string, and |$s_T$| is the terminal state.

Proposed approach

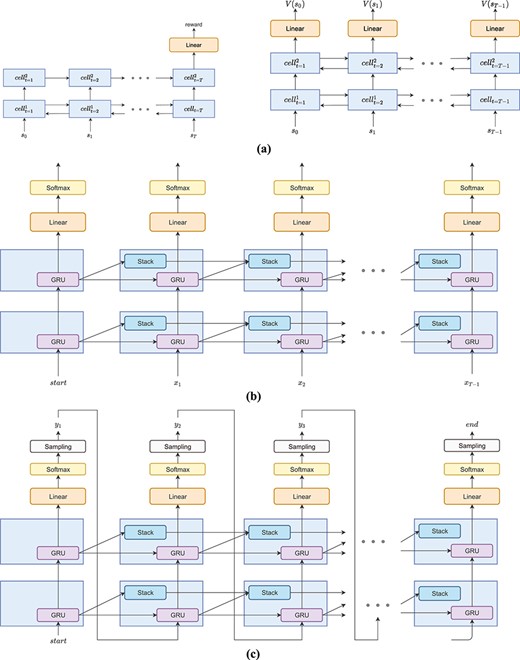

The workflow we propose in this study for the structural evolution of compounds is presented in Figure 1a. The framework is described in what follows.

![(A) Illustration of the proposed framework for training a small molecule generator and learning a reward function using IRL. The workflow begins with pretraining the generator model using large-scale compound sequence dataset, such as ChEMBL. The pretrained model is used to initialize the agent network in an IRL training scheme where a reward function is learned and the agent is biased to generate desirable compounds. The generated SMILES could be examined by an evaluation function where compounds satisfying a specified threshold could be persisted for further analysis. (B) The general structure of the models used in the study. The agent is represented as an actor–critic architecture that is trained using the PPO [28] algorithm. The actor, critic and reward functions are all RNNs that share the same SMILES encoder. The actor net becomes the SMILES generator at test time.](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/bib/22/4/10.1093_bib_bbaa364/1/m_bbaa364f1.jpeg?Expires=1750320648&Signature=HxgZJjwJOVyJ1FeW5DNJjlyyYJbqrwuN1YmVLQg0QLOOvwBCXcq316LgyvpZGMGZ74dvDHJuF-4Oenhn3iIPAby-Pf7xxcAybNzFMSmXFsAZeeFZpjVRC0gyMNwqU3Pkbg7IdV-wrxRedlI-jQeuFWsMSukG~uDhQBIpepWW8V0dUcDBLd2LsHUm2c-lEWY~iUqZAXQa8AGPLb~no-8jQ1mTkBlj5leH5L40yFHnJiuTUEEXno2DSYy748lzs4q9pH8~NfuYs6~qMXpvQhGYHiT-NJGX4jc0-NfqK2dD~eqvHPtPlTv1pZVMXwHne3-JBd4Qlw7ozXNf6vPiKIHzwg__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

(A) Illustration of the proposed framework for training a small molecule generator and learning a reward function using IRL. The workflow begins with pretraining the generator model using large-scale compound sequence dataset, such as ChEMBL. The pretrained model is used to initialize the agent network in an IRL training scheme where a reward function is learned and the agent is biased to generate desirable compounds. The generated SMILES could be examined by an evaluation function where compounds satisfying a specified threshold could be persisted for further analysis. (B) The general structure of the models used in the study. The agent is represented as an actor–critic architecture that is trained using the PPO [28] algorithm. The actor, critic and reward functions are all RNNs that share the same SMILES encoder. The actor net becomes the SMILES generator at test time.

Datasets

The first stage of the workflow is creating a SMILES dataset, similar to most existing GAN and vanilla-RL methods. Possible repositories for facilitating this dataset creation are DrugBank [36], KEGG [37], STITCH [38] and ChEMBL [39]. This dataset is used at the next stage for pretraining the generator. For this purpose, we used the curated ChEMBL dataset of [15], which consists of approximately |$1.5$| million drug-like compounds.

Also, a set of SMILES satisfying the constraints or criteria of interest are collated from an appropriate source as the demonstrations |$\mathcal{D}$| of the IRL phase (for instance, see Section 3.6). To evaluate SMILES during the training of the generator, we assume the availability of an evaluation function |$ E $| that can evaluate the extent to which a given compound satisfies the optimization constraints. Here, |$E$| could be an ML model, a robot that conducts chemical synthesis or a molecular docking program.

In this study, we avoided composing the demonstrations dataset from the data used to train the evaluation function. Since, in practice, the data, rule set or method used for developing any of the evaluation functions mentioned above could differ from the approach for constructing the demonstrations dataset, this independence provides a more realistic context to assess our proposed method.

Generative pretraining of prior model

This stage of the framework entails using the large-scale dataset of the previous step to pretrain a model that would be a prior for initializing the agent network/policy. This pretraining step aims to enable the model to learn the SMILES syntax to attain a high rate of valid generated SMILES strings.

Since the SMILES string is a sequence of characters, we represent the prior model as an RNN with gated recurrent units (GRUs). The architecture of the generator at this stage of the workflow is depicted in Figure 3b. The generator takes as input the output of an encoder that learns the embedding of SMILES characters, as shown in Figure 1b. For each given SMILES string, the generator is trained to predict the next token in the sequence using the cross-entropy loss function.

According to the findings of [40], regular RNNs cannot effectively generate sequences of a context-free language due to the lack of memory. To this end, [40] proposed the use of a Stack-RNN architecture, which equips the standard RNN cell with a stack unit to mitigate this problem. Since the SMILES encoding of a compound is a context-free language, we follow [15] to use the Stack-RNN for generating SMILES as it ensures that tasks such as noting the start and end parts of aromatic moieties and parentheses for branches in the chemical structure are well addressed. We note that while [15] used a single-layer Stack-RNN, we adopt a multi-layer Stack-RNN structure in our study. We reckon that the multi-layer Stack-RNN could facilitate learning better representations at multiple levels of abstraction akin to multi-layer RNNs.

IRL phase

As stated earlier, we frame the SMILES string generation task as an MDP problem where the reward function is unknown. Consequently, this MDP does not enable the use of RL algorithms to approximate the solution since RL methods require a reward function. Therefore, we learn the reward function using demonstrations that exhibit the desired behavior.

The architectures of the RNN models used in this study. (A) The structure of the reward net (left) and the critic net (right). (B) The structure of the generator net during pretraining to learn the SMILES syntax. The model is trained using the cross-entropy loss. (C) The structure of the generator net during the RL phase; the model autoregressively generates a SMILES string given a fixed start state. The sampling process terminates when an |$end$| token is sampled or a length limit is reached.

In [24], the authors proposed a sample-based entropy maximization IRL algorithm. The authors use a sample or background distribution to approximate the partition function and continuously adapt this sample distribution to provide a better estimation of Equation (15). Therefore, if the sample distribution represents the agent’s policy and this distribution is trained using an RL algorithm, given an approximation of |$R_\psi $|, then the RL algorithm guides the sample distribution to a space that provides a better estimation of |$Z$|. We refer to the method discussed by [24] as guided reward learning (GRL) in this study. We adopt this approach to bias a pretrained generator to the desired compound space since the GRL method produces a policy and a learned reward function that could be transferred to other related domains.

RL is used to train |$q$| to improve the background distribution used in estimating the partition function. The model architecture of |$q$| during the RL training and SMILES generation phases is shown in Figure 3c.

Since we represent |$q$| as an RNN in this study, training the sample distribution with high-variance RL algorithms such as the REINFORCE [16] objective could override the efforts of the pretraining due to the nonlinearity of the model. Therefore, we train the sample distribution using the PPO algorithm [28]. The PPO objective ensures a gradual change in the parameters of the agent policy (sample distribution). The PPO algorithm learns a value function to estimate |$A^\pi (s, a)$| making it an actor–critic method. The architecture of the critic in this study, modeled as an RNN, is illustrated in Figure 3a-right. Like the actor model, the critic takes the SMILES encoder’s outputs as input to predict |$V(s_t)$| at each time step |$t$|.

Lastly, the evaluation function |$E$| of our proposed framework (Figure 1a) is used, at designated periods of training, to evaluate a set of generated SMILES strings. Compounds deemed to satisfy the learning objective could be persisted for further examination in the drug discovery process and the training of the generator could be terminated.

Experiments setup

We performed four experiments to evaluate the approach described in this study. In each experiment, the aim is to ascertain the effectiveness of our proposed approach and how it compares with the case where the reward function is known, as found in the literature. The hardware specifications we used for our experiments are presented in Table 1. The performance of each of the evaluation functions used in the experiments are shown in Table 2.

| Model . | Number of cores . | RAM (GB) . | GPUs . |

|---|---|---|---|

| Intel Xeon CPU E5-2687W | 48 | 128 | 1 GeForce GTX 1080 |

| Intel Xeon CPU E5-2687W | 24 | 128 | 4 GeForce GTX 1080Ti |

| Model . | Number of cores . | RAM (GB) . | GPUs . |

|---|---|---|---|

| Intel Xeon CPU E5-2687W | 48 | 128 | 1 GeForce GTX 1080 |

| Intel Xeon CPU E5-2687W | 24 | 128 | 4 GeForce GTX 1080Ti |

| Model . | Number of cores . | RAM (GB) . | GPUs . |

|---|---|---|---|

| Intel Xeon CPU E5-2687W | 48 | 128 | 1 GeForce GTX 1080 |

| Intel Xeon CPU E5-2687W | 24 | 128 | 4 GeForce GTX 1080Ti |

| Model . | Number of cores . | RAM (GB) . | GPUs . |

|---|---|---|---|

| Intel Xeon CPU E5-2687W | 48 | 128 | 1 GeForce GTX 1080 |

| Intel Xeon CPU E5-2687W | 24 | 128 | 4 GeForce GTX 1080Ti |

Performance of ML models used as evaluation functions in the experiments. The reported values are averages of a 5-fold CV in each case. The standard deviation values are shown in parenthesis. The corresponding experiment(s) that used each ML model is/are specified in parenthesis in the first column

| Model . | Binary classification . | Regression . | ||||

|---|---|---|---|---|---|---|

| Precision . | Recall . | Accuracy . | AUC . | RMSE . | |$R^2$| . | |

| RNN-Bin (DRD2) | 0.971 (0.120) | 0.970 (0.120) | 0.985 (0.011) | 0.996 (0.001) | – | – |

| RNN-Reg (LogP) | – | – | – | – | 0.845 (0.508) | 0.708 (0.359) |

| XGB-Reg (JAK2 Min & Max) | – | – | – | – | 0.646 (0.037) | 0.691 (0.039) |

| Model . | Binary classification . | Regression . | ||||

|---|---|---|---|---|---|---|

| Precision . | Recall . | Accuracy . | AUC . | RMSE . | |$R^2$| . | |

| RNN-Bin (DRD2) | 0.971 (0.120) | 0.970 (0.120) | 0.985 (0.011) | 0.996 (0.001) | – | – |

| RNN-Reg (LogP) | – | – | – | – | 0.845 (0.508) | 0.708 (0.359) |

| XGB-Reg (JAK2 Min & Max) | – | – | – | – | 0.646 (0.037) | 0.691 (0.039) |

Performance of ML models used as evaluation functions in the experiments. The reported values are averages of a 5-fold CV in each case. The standard deviation values are shown in parenthesis. The corresponding experiment(s) that used each ML model is/are specified in parenthesis in the first column

| Model . | Binary classification . | Regression . | ||||

|---|---|---|---|---|---|---|

| Precision . | Recall . | Accuracy . | AUC . | RMSE . | |$R^2$| . | |

| RNN-Bin (DRD2) | 0.971 (0.120) | 0.970 (0.120) | 0.985 (0.011) | 0.996 (0.001) | – | – |

| RNN-Reg (LogP) | – | – | – | – | 0.845 (0.508) | 0.708 (0.359) |

| XGB-Reg (JAK2 Min & Max) | – | – | – | – | 0.646 (0.037) | 0.691 (0.039) |

| Model . | Binary classification . | Regression . | ||||

|---|---|---|---|---|---|---|

| Precision . | Recall . | Accuracy . | AUC . | RMSE . | |$R^2$| . | |

| RNN-Bin (DRD2) | 0.971 (0.120) | 0.970 (0.120) | 0.985 (0.011) | 0.996 (0.001) | – | – |

| RNN-Reg (LogP) | – | – | – | – | 0.845 (0.508) | 0.708 (0.359) |

| XGB-Reg (JAK2 Min & Max) | – | – | – | – | 0.646 (0.037) | 0.691 (0.039) |

DRD2 activity

This experiment’s objective was to train the generator to produce compounds that target the DRD2 protein. Hence, we retrieved a DRD2 dataset of |$351~529$| compounds from ExCAPE-DB [41] (https://git.io/JUgpt). This dataset contained |$8323$| positive compounds (binding to DRD2). We then sampled an equal number of the remaining compounds as the negatives (non-binding to DRD2) to create a balanced dataset. The balanced DRD2 dataset of |$16~646$| samples was then used to train a two-layer LSTM RNN, similar to the reward function network shown in Figure 3a-left but with an additional sigmoid endpoint, using five-fold cross-validation with the BCE loss function.

The resulting five models of the CV training then served as the evaluation function |$E$| of this experiment. This evaluation function is referred to as RNN-Bin in Table 2. At test time, the average value of the predicted DRD2 activity probability was assigned as the result of the evaluation of a given compound.

Also, to create the set of demonstrations |$\mathcal{D}$| of this experiment, we used the SVM classifier of [1] to filter the ChEMBL dataset of [15] for compounds with a probability of activity greater than |$0.8$|. This filtering resulted in a dataset of |$7732$| compounds to serve as |$\mathcal{D}$|.

LogP optimization

In this experiment, we trained a generator to produce compounds biased toward having their octanol-water partition coefficient (logP) less than five and greater than one. LogP is one of the elements of Lipinski’s rule of five.

On the evaluation function |$E$| of this experiment, we used the LogP dataset of [15], consisting of |$14~176$| compounds, to train an LSTM RNN, similar to the reward function network shown in Figure 3a-left, using five-fold cross-validation with the mean square error (MSE) loss function. Similar to the DRD2 experiment, the five models serve as the evaluation function of |$E$|. The evaluation function of this experiment is labeled RNN-Reg in Table 2.

We constructed the LogP demonstrations dataset |$\mathcal{D}$| by using the LogP-biased generator of [15] to produce |$10~000$| SMILES strings, of which |$5019$| were unique valid compounds. The |$5019$| compounds then served as the set of demonstrations for this experiment.

JAK2 inhibition

We also performed two experiments on producing compounds for JAK2 modulation. In the first JAK2 experiment, we trained a generator to produce compounds that maximize the negative logarithm of half-maximal inhibitory concentration (pIC|$_{50}$|) values for JAK2. In this instance, we used the public JAK2 dataset of [15], consisting of |$1911$| compounds, to train an XGBoost model in a five-fold cross-validation method with the MSE loss function. The five resulting models then served as the evaluation function |$E$|, similar to the LogP and DRD2 experiments. The JAK2 inhibition evaluation function is referred to as XGB-Reg in Table 2. We used the JAK2-maximization-biased generator of [15] to produce a demonstration set of |$3608$| unique valid compounds out of |$10~000$| generated SMILES.

On the other hand, we performed an experiment to bias the generator toward producing compounds that minimize the pIC|$_{50}$| values for JAK2. JAK2 minimization is useful for reducing off-target effects. While we maintained the evaluation function of the JAK2 maximization experiment in the JAK2 minimization experiment, we replaced the demonstrations set with |$285$| unique valid SMILES, out of |$10000$| generated SMILES, produced by the JAK2-minimization-biased generator of [15]. Also, we did not train a reward function for JAK2 minimization but rather transferred the reward function learned for JAK2 maximization to this experiment. However, we negated each reward obtained from the JAK2 maximization reward function for the minimization case study. This was done to examine the ability to transfer a learned reward function to a related domain.

Model types

As discussed in Section 3.4, we pretrained a two-layer Stack-RNN model with the ChEMBL dataset of [15] for one epoch. The training time was approximately 14 days. This pretrained model served as the initializing model for the following generators.

PPO-GRL: this model type follows our proposed approach. It is trained using the GRL objective at the IRL phase and the PPO algorithm at the RL phase.

PPO-Eval: this model type follows our proposed approach but without the IRL phase. The RL algorithm used is PPO. Since the problem presented in Section 3.1 assumes an evaluation function |$E$| to periodically determine the performance of the generator during training, this model enables us to evaluate an instance where |$E$| is able to serve as a reward function directly, such as ML models in our experiments. We note that other instances of |$E$|, such as molecular docking or a robot performing synthesis, may be expensive to serve as the reward function in RL training.

REINFORCE: this model type uses the proposed SMILES generation method in [15] to train a two-layer Stack-RNN generator following the method and reward functions of [15]. Thus, no IRL is performed. In the DRD2 experiment, we used the reward function of [1]. Also, for the JAK2 and LogP experiments, we used their respective reward functions in [15].

REINFORCE-GRL: likewise, the REINFORCE-GRL model type is trained using the REINFORCE algorithm at the RL phase and the GRL method to learn the reward function. This model facilitates assessment of the significance of the PPO algorithm properties to our proposed DIRL approach.

Stack-RNN-TL: this model type is trained using TL. Specifically, after the pretraining stage, the demonstrations dataset is used to fine-tune the prior model to bias the generator toward the desired chemical space. Unlike the previous model types which are either trained using RL/IRL, this generator is trained using supervised learning (cross-entropy loss function). Since this model type is a possible candidate for scenarios where the reward function is not specified but exemplar data could be provided, TL serves as a useful baseline to compare the PPO-GRL approach.

The training time of each generator in each experiment is shown in Table 3.

Results of experiments without applying threshold to generated or dataset samples. PPO-GRL follows our proposed approach, REINFORCE follows the work in [15], PPO-Eval, REINFORCE-GRL, and Stack-RNN-TL are baselines in this study

| Objective . | Algorithm/ dataset . | Number of unique canonical SMILES . | Internal diversity . | External diversity . | Solubility . | Naturalness . | Synthesizability . | Druglikeness . | Approx. training time (mins) . |

|---|---|---|---|---|---|---|---|---|---|

| Demonstrations | 7732 | 0.897 | – | 0.778 | 0.536 | 0.638 | 0.603 | – | |

| Unbiased | 2048 | 0.917 | 0.381 | 0.661 | 0.556 | 0.640 | 0.602 | – | |

| PPO-GRL | 3264 | 0.887 | 0.189 | 0.887 | 0.674 | 0.579 | 0.271 | 41 | |

| DRD2 | PPO-Eval | 4538 | 0.878 | 0.183 | 0.885 | 0.722 | 0.521 | 0.236 | 33 |

| REINFORCE | 7393 | 0.904 | 0.325 | 0.802 | 0.703 | 0.718 | 0.443 | 42 | |

| REINFORCE-GRL | 541 | 0.924 | 0.513 | 0.846 | 0.794 | 0.293 | 0.304 | 390 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 3064 | |

| Demonstrations | 5019 | 0.880 | – | 0.900 | 0.553 | 0.803 | 0.512 | – | |

| Unbiased | 2051 | 0.917 | 0.372 | 0.658 | 0.553 | 0.640 | 0.603 | - | |

| PPO-GRL | 4604 | 0.903 | 0.159 | 0.836 | 0.580 | 0.770 | 0.494 | 60 | |

| LogP | PPO-Eval | 4975 | 0.733 | 0.060 | 0.982 | 0.731 | 0.449 | 0.075 | 50 |

| REINFORCE | 7704 | 0.897 | 0.101 | 0.774 | 0.580 | 0.827 | 0.618 | 56 | |

| REINFORCE-GRL | 7225 | 0.915 | 0.347 | 0.663 | 0.551 | 0.689 | 0.627 | 62 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 1480 | |

| Demonstrations | 3608 | 0.805 | – | 0.083 | 0.485 | 0.560 | 0.483 | – | |

| Unbiased | 2050 | 0.917 | 0.379 | 0.654 | 0.554 | 0.641 | 0.604 | – | |

| PPO-GRL | 5911 | 0.928 | 0.614 | 0.587 | 0.664 | 0.468 | 0.581 | 22 | |

| JAK2 max | PPO-Eval | 6937 | 0.917 | 0.386 | 0.657 | 0.554 | 0.644 | 0.604 | 38 |

| REINFORCE | 6768 | 0.916 | 0.351 | 0.608 | 0.608 | 0.529 | 0.627 | 30 | |

| REINFORCE-GRL | 7039 | 0.917 | 0.381 | 0.658 | 0.555 | 0.644 | 0.607 | 133 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 608 | |

| Demonstrations | 285 | 0.828 | – | 0.534 | 0.548 | 0.895 | 0.604 | – | |

| Unbiased | 2050 | 0.917 | 0.379 | 0.654 | 0.554 | 0.641 | 0.604 | - | |

| PPO-GRL | 3446 | 0.907 | 0.244 | 0.488 | 0.506 | 0.776 | 0.663 | 34 | |

| JAK2 min | PPO-Eval | 1533 | 0.703 | 0.008 | 0.997 | 0.756 | 0.414 | 0.049 | 148 |

| REINFORCE | 7694 | 0.908 | 0.234 | 0.655 | 0.591 | 0.799 | 0.649 | 40 | |

| REINFORCE-GRL | 6953 | 0.917 | 0.376 | 0.662 | 0.547 | 0.657 | 0.613 | 47 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 83 |

| Objective . | Algorithm/ dataset . | Number of unique canonical SMILES . | Internal diversity . | External diversity . | Solubility . | Naturalness . | Synthesizability . | Druglikeness . | Approx. training time (mins) . |

|---|---|---|---|---|---|---|---|---|---|

| Demonstrations | 7732 | 0.897 | – | 0.778 | 0.536 | 0.638 | 0.603 | – | |

| Unbiased | 2048 | 0.917 | 0.381 | 0.661 | 0.556 | 0.640 | 0.602 | – | |

| PPO-GRL | 3264 | 0.887 | 0.189 | 0.887 | 0.674 | 0.579 | 0.271 | 41 | |

| DRD2 | PPO-Eval | 4538 | 0.878 | 0.183 | 0.885 | 0.722 | 0.521 | 0.236 | 33 |

| REINFORCE | 7393 | 0.904 | 0.325 | 0.802 | 0.703 | 0.718 | 0.443 | 42 | |

| REINFORCE-GRL | 541 | 0.924 | 0.513 | 0.846 | 0.794 | 0.293 | 0.304 | 390 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 3064 | |

| Demonstrations | 5019 | 0.880 | – | 0.900 | 0.553 | 0.803 | 0.512 | – | |

| Unbiased | 2051 | 0.917 | 0.372 | 0.658 | 0.553 | 0.640 | 0.603 | - | |

| PPO-GRL | 4604 | 0.903 | 0.159 | 0.836 | 0.580 | 0.770 | 0.494 | 60 | |

| LogP | PPO-Eval | 4975 | 0.733 | 0.060 | 0.982 | 0.731 | 0.449 | 0.075 | 50 |

| REINFORCE | 7704 | 0.897 | 0.101 | 0.774 | 0.580 | 0.827 | 0.618 | 56 | |

| REINFORCE-GRL | 7225 | 0.915 | 0.347 | 0.663 | 0.551 | 0.689 | 0.627 | 62 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 1480 | |

| Demonstrations | 3608 | 0.805 | – | 0.083 | 0.485 | 0.560 | 0.483 | – | |

| Unbiased | 2050 | 0.917 | 0.379 | 0.654 | 0.554 | 0.641 | 0.604 | – | |

| PPO-GRL | 5911 | 0.928 | 0.614 | 0.587 | 0.664 | 0.468 | 0.581 | 22 | |

| JAK2 max | PPO-Eval | 6937 | 0.917 | 0.386 | 0.657 | 0.554 | 0.644 | 0.604 | 38 |

| REINFORCE | 6768 | 0.916 | 0.351 | 0.608 | 0.608 | 0.529 | 0.627 | 30 | |

| REINFORCE-GRL | 7039 | 0.917 | 0.381 | 0.658 | 0.555 | 0.644 | 0.607 | 133 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 608 | |

| Demonstrations | 285 | 0.828 | – | 0.534 | 0.548 | 0.895 | 0.604 | – | |

| Unbiased | 2050 | 0.917 | 0.379 | 0.654 | 0.554 | 0.641 | 0.604 | - | |

| PPO-GRL | 3446 | 0.907 | 0.244 | 0.488 | 0.506 | 0.776 | 0.663 | 34 | |

| JAK2 min | PPO-Eval | 1533 | 0.703 | 0.008 | 0.997 | 0.756 | 0.414 | 0.049 | 148 |

| REINFORCE | 7694 | 0.908 | 0.234 | 0.655 | 0.591 | 0.799 | 0.649 | 40 | |

| REINFORCE-GRL | 6953 | 0.917 | 0.376 | 0.662 | 0.547 | 0.657 | 0.613 | 47 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 83 |

Results of experiments without applying threshold to generated or dataset samples. PPO-GRL follows our proposed approach, REINFORCE follows the work in [15], PPO-Eval, REINFORCE-GRL, and Stack-RNN-TL are baselines in this study

| Objective . | Algorithm/ dataset . | Number of unique canonical SMILES . | Internal diversity . | External diversity . | Solubility . | Naturalness . | Synthesizability . | Druglikeness . | Approx. training time (mins) . |

|---|---|---|---|---|---|---|---|---|---|

| Demonstrations | 7732 | 0.897 | – | 0.778 | 0.536 | 0.638 | 0.603 | – | |

| Unbiased | 2048 | 0.917 | 0.381 | 0.661 | 0.556 | 0.640 | 0.602 | – | |

| PPO-GRL | 3264 | 0.887 | 0.189 | 0.887 | 0.674 | 0.579 | 0.271 | 41 | |

| DRD2 | PPO-Eval | 4538 | 0.878 | 0.183 | 0.885 | 0.722 | 0.521 | 0.236 | 33 |

| REINFORCE | 7393 | 0.904 | 0.325 | 0.802 | 0.703 | 0.718 | 0.443 | 42 | |

| REINFORCE-GRL | 541 | 0.924 | 0.513 | 0.846 | 0.794 | 0.293 | 0.304 | 390 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 3064 | |

| Demonstrations | 5019 | 0.880 | – | 0.900 | 0.553 | 0.803 | 0.512 | – | |

| Unbiased | 2051 | 0.917 | 0.372 | 0.658 | 0.553 | 0.640 | 0.603 | - | |

| PPO-GRL | 4604 | 0.903 | 0.159 | 0.836 | 0.580 | 0.770 | 0.494 | 60 | |

| LogP | PPO-Eval | 4975 | 0.733 | 0.060 | 0.982 | 0.731 | 0.449 | 0.075 | 50 |

| REINFORCE | 7704 | 0.897 | 0.101 | 0.774 | 0.580 | 0.827 | 0.618 | 56 | |

| REINFORCE-GRL | 7225 | 0.915 | 0.347 | 0.663 | 0.551 | 0.689 | 0.627 | 62 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 1480 | |

| Demonstrations | 3608 | 0.805 | – | 0.083 | 0.485 | 0.560 | 0.483 | – | |

| Unbiased | 2050 | 0.917 | 0.379 | 0.654 | 0.554 | 0.641 | 0.604 | – | |

| PPO-GRL | 5911 | 0.928 | 0.614 | 0.587 | 0.664 | 0.468 | 0.581 | 22 | |

| JAK2 max | PPO-Eval | 6937 | 0.917 | 0.386 | 0.657 | 0.554 | 0.644 | 0.604 | 38 |

| REINFORCE | 6768 | 0.916 | 0.351 | 0.608 | 0.608 | 0.529 | 0.627 | 30 | |

| REINFORCE-GRL | 7039 | 0.917 | 0.381 | 0.658 | 0.555 | 0.644 | 0.607 | 133 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 608 | |

| Demonstrations | 285 | 0.828 | – | 0.534 | 0.548 | 0.895 | 0.604 | – | |

| Unbiased | 2050 | 0.917 | 0.379 | 0.654 | 0.554 | 0.641 | 0.604 | - | |

| PPO-GRL | 3446 | 0.907 | 0.244 | 0.488 | 0.506 | 0.776 | 0.663 | 34 | |

| JAK2 min | PPO-Eval | 1533 | 0.703 | 0.008 | 0.997 | 0.756 | 0.414 | 0.049 | 148 |

| REINFORCE | 7694 | 0.908 | 0.234 | 0.655 | 0.591 | 0.799 | 0.649 | 40 | |

| REINFORCE-GRL | 6953 | 0.917 | 0.376 | 0.662 | 0.547 | 0.657 | 0.613 | 47 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 83 |

| Objective . | Algorithm/ dataset . | Number of unique canonical SMILES . | Internal diversity . | External diversity . | Solubility . | Naturalness . | Synthesizability . | Druglikeness . | Approx. training time (mins) . |

|---|---|---|---|---|---|---|---|---|---|

| Demonstrations | 7732 | 0.897 | – | 0.778 | 0.536 | 0.638 | 0.603 | – | |

| Unbiased | 2048 | 0.917 | 0.381 | 0.661 | 0.556 | 0.640 | 0.602 | – | |

| PPO-GRL | 3264 | 0.887 | 0.189 | 0.887 | 0.674 | 0.579 | 0.271 | 41 | |

| DRD2 | PPO-Eval | 4538 | 0.878 | 0.183 | 0.885 | 0.722 | 0.521 | 0.236 | 33 |

| REINFORCE | 7393 | 0.904 | 0.325 | 0.802 | 0.703 | 0.718 | 0.443 | 42 | |

| REINFORCE-GRL | 541 | 0.924 | 0.513 | 0.846 | 0.794 | 0.293 | 0.304 | 390 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 3064 | |

| Demonstrations | 5019 | 0.880 | – | 0.900 | 0.553 | 0.803 | 0.512 | – | |

| Unbiased | 2051 | 0.917 | 0.372 | 0.658 | 0.553 | 0.640 | 0.603 | - | |

| PPO-GRL | 4604 | 0.903 | 0.159 | 0.836 | 0.580 | 0.770 | 0.494 | 60 | |

| LogP | PPO-Eval | 4975 | 0.733 | 0.060 | 0.982 | 0.731 | 0.449 | 0.075 | 50 |

| REINFORCE | 7704 | 0.897 | 0.101 | 0.774 | 0.580 | 0.827 | 0.618 | 56 | |

| REINFORCE-GRL | 7225 | 0.915 | 0.347 | 0.663 | 0.551 | 0.689 | 0.627 | 62 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 1480 | |

| Demonstrations | 3608 | 0.805 | – | 0.083 | 0.485 | 0.560 | 0.483 | – | |

| Unbiased | 2050 | 0.917 | 0.379 | 0.654 | 0.554 | 0.641 | 0.604 | – | |

| PPO-GRL | 5911 | 0.928 | 0.614 | 0.587 | 0.664 | 0.468 | 0.581 | 22 | |

| JAK2 max | PPO-Eval | 6937 | 0.917 | 0.386 | 0.657 | 0.554 | 0.644 | 0.604 | 38 |

| REINFORCE | 6768 | 0.916 | 0.351 | 0.608 | 0.608 | 0.529 | 0.627 | 30 | |

| REINFORCE-GRL | 7039 | 0.917 | 0.381 | 0.658 | 0.555 | 0.644 | 0.607 | 133 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 608 | |

| Demonstrations | 285 | 0.828 | – | 0.534 | 0.548 | 0.895 | 0.604 | – | |

| Unbiased | 2050 | 0.917 | 0.379 | 0.654 | 0.554 | 0.641 | 0.604 | - | |

| PPO-GRL | 3446 | 0.907 | 0.244 | 0.488 | 0.506 | 0.776 | 0.663 | 34 | |

| JAK2 min | PPO-Eval | 1533 | 0.703 | 0.008 | 0.997 | 0.756 | 0.414 | 0.049 | 148 |

| REINFORCE | 7694 | 0.908 | 0.234 | 0.655 | 0.591 | 0.799 | 0.649 | 40 | |

| REINFORCE-GRL | 6953 | 0.917 | 0.376 | 0.662 | 0.547 | 0.657 | 0.613 | 47 | |

| Stack-RNN-TL | 6927 | 0.918 | 0.391 | 0.655 | 0.551 | 0.647 | 0.611 | 83 |

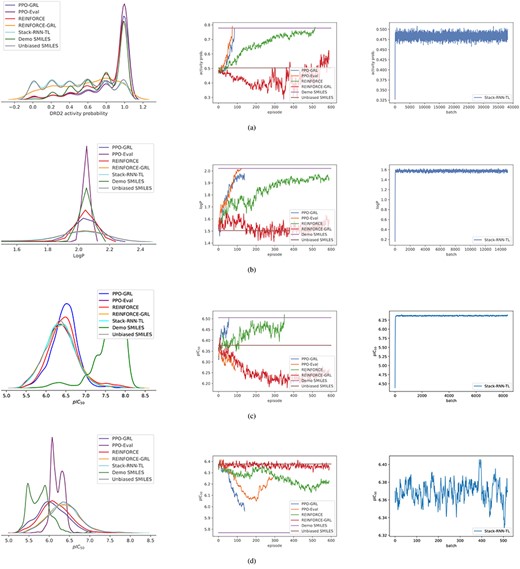

Column 1: the distribution plots of the evaluation function’s outputs for samples generated from the different model types used in the experiment. Column 2: the convergence plot of the RL and IRL-trained models during training; the y-axis represents the mean value of the experiment’s evaluation function output for samples generated at a point during training. The demo SMILES results correspond to the demonstration files of a given experiment. The unbiased SMILES results correspond to samples generated from the pretrained (unbiased or prior) model. Column 3: the convergence plot of the Stack-RNN-TL model during training. (A) The results of DRD2 experiment. (B) The results of LogP optimization experiment. (C) The results of JAK2 maximization. (D) The results of JAK2 minimization experiment.

Metrics

Apart from the Stack-RNN-TL model, all other models in each experiment were trained for a maximum of |$600$| episodes (across all trajectories), with early termination once the threshold of the experiment was reached. The Stack-RNN-TL model was trained for two epochs (due to time constraint) in each experiment. The threshold for each experiment is the average score of the evaluation function on the demonstration set. Also, only the model weights yielding the best score, as evaluated by |$E$| during training, were saved for each model type.

Furthermore, we assessed the performance of all trained generators using the metrics provided by [20] and the internal diversity metric in [21]. In each of these metrics, the best value is 1 and the worst value is 0. We give a brief introduction of the metrics below.

Diversity: the diversity metrics measure the relative diversity between the generated compounds and a reference set. Given a compound from the generated set, a value of 1 connotes that the substructures of the compound is diverse from the referenced set, whereas a value of 0 indicates that the compound shares several substructures with the compounds in the reference set. In our study, a random sample of the demonstrations dataset (1000 compounds) constitute the external diversity [20] reference set. On the other hand, all generated compounds constitute the reference set when calculating internal diversity [21]. Intuitively, the internal diversity metric indicates whether the compound generator repeats similar substructures. We used ECFP8 (extended-connectivity fingerprint with diameter 8 represented using 2048 bits) vector of each compound for calculating internal and external diversities.

Solubility: measures the likeliness for a compound to mix with water. This is also referred to as the water-octanol partition coefficient.

Naturalness: measures how similar a generated compound is to the structure space of natural products (NPs). NPs are small molecules that are synthesized by living organisms and are viewed as good starting points for drug development [42].

Synthesizability: measures how a compound lends itself to chemical synthesis (0 indicates hard to make and 1 indicates easy to make) [43].

Druglikeness: estimates the plausibility of a generated compound being a drug candidate. The synthesizability and solubility of a compound contribute to the compound’s druglikeness.

Discussion

We generated |$10~000$| samples for each trained generator and used the molecular metrics in Section 3.6.5 to assess the generator’s performance. However, during training, we generated |$200$| samples and maintained an exponential average to monitor performance. Figure 4 shows the density plots of each model’s valid SMILES string and the convergence progression of each model toward the threshold of an experiment, beginning from the score of samples generated by the pretrained model.

Also, Table 3 presents the results of each metric for the valid SMILES samples of each model. Likewise, Table 4 presents the results for a set of compounds filtered from the valid SMILES samples of each model by applying the threshold of each experiment. In the case of the logP results in Table 4, we selected compounds whose values are less than 5 and greater than 1. The added proportion in threshold column in Table 4 presents the quota of a generator’s valid SMILES that were within the threshold of the experiment; the maximum value is 1 and minimum is 0.

Results of experiments with applied optimization threshold on generated or dataset samples. PPO-GRL follows our proposed approach, REINFORCE follows the work in [15], PPO-Eval, REINFORCE-GRL, Stack-RNN-TL are baselines in this study

| Objective . | Algorithm/ dataset . | Number of unique canonical SMILES . | Proportion in threshold . | Internal diversity . | External diversity . | Solubility . | Naturalness . | Synthesizability . | Druglikeness . |

|---|---|---|---|---|---|---|---|---|---|

| Demonstrations | 4941 | 0.635 | 0.896 | – | 0.793 | 0.524 | 0.642 | 0.588 | |

| Unbiased | 528 | 0.266 | 0.919 | 0.412 | 0.691 | 0.586 | 0.588 | 0.609 | |

| PPO-GRL | 2406 | 0.737 | 0.864 | 0.104 | 0.939 | 0.709 | 0.508 | 0.181 | |

| DRD2 | PPO-Eval | 3465 | 0.764 | 0.849 | 0.092 | 0.947 | 0.756 | 0.458 | 0.144 |

| REINFORCE | 5005 | 0.677 | 0.888 | 0.222 | 0.837 | 0.715 | 0.706 | 0.392 | |

| REINFORCE-GRL | 201 | 0.372 | 0.907 | 0.223 | 0.929 | 0.853 | 0.211 | 0.194 | |

| Stack-RNN-TL | 1715 | 0.248 | 0.920 | 0.447 | 0.696 | 0.586 | 0.585 | 0.608 | |

| Demonstrations | 5007 | 1.000 | 0.880 | – | 0.898 | 0.553 | 0.803 | 0.512 | |

| Unbiased | 1903 | 0.927 | 0.915 | 0.346 | 0.687 | 0.546 | 0.652 | 0.605 | |

| PPO-GRL | 4548 | 0.988 | 0.903 | 0.149 | 0.842 | 0.579 | 0.772 | 0.493 | |

| LogP | PPO-Eval | 4969 | 0.999 | 0.733 | 0.061 | 0.983 | 0.731 | 0.449 | 0.075 |

| REINFORCE | 7638 | 0.991 | 0.897 | 0.098 | 0.777 | 0.579 | 0.829 | 0.618 | |

| REINFORCE-GRL | 6796 | 0.941 | 0.914 | 0.317 | 0.686 | 0.546 | 0.698 | 0.630 | |

| Stack-RNN-TL | 6479 | 0.935 | 0.916 | 0.364 | 0.678 | 0.545 | 0.655 | 0.614 | |

| Demonstrations | 3449 | 0.958 | 0.794 | – | 0.073 | 0.482 | 0.560 | 0.479 | |

| Unbiased | 717 | 0.354 | 0.916 | 0.360 | 0.639 | 0.572 | 0.565 | 0.572 | |

| PPO-GRL | 2768 | 0.468 | 0.925 | 0.543 | 0.578 | 0.700 | 0.376 | 0.533 | |

| JAK2 Max | PPO-Eval | 2427 | 0.350 | 0.916 | 0.369 | 0.640 | 0.564 | 0.565 | 0.576 |

| REINFORCE | 2942 | 0.434 | 0.912 | 0.281 | 0.586 | 0.609 | 0.462 | 0.605 | |

| REINFORCE-GRL | 2508 | 0.356 | 0.916 | 0.355 | 0.644 | 0.561 | 0.568 | 0.577 | |

| Stack-RNN-TL | 2376 | 0.343 | 0.917 | 0.370 | 0.650 | 0.557 | 0.578 | 0.580 | |

| Demonstrations | 145 | 0.518 | 0.785 | – | 0.539 | 0.506 | 0.908 | 0.617 | |

| Unbiased | 123 | 0.062 | 0.887 | 0.075 | 0.680 | 0.422 | 0.827 | 0.655 | |

| PPO-GRL | 693 | 0.201 | 0.88 | 0.061 | 0.534 | 0.453 | 0.852 | 0.673 | |

| JAK2 Min | PPO-Eval | 10 | 0.007 | 0.618 | 0.000 | 1.000 | 0.728 | 0.387 | 0.050 |

| REINFORCE | 1078 | 0.140 | 0.891 | 0.086 | 0.681 | 0.500 | 0.887 | 0.677 | |

| REINFORCE-GRL | 490 | 0.070 | 0.897 | 0.142 | 0.682 | 0.432 | 0.831 | 0.671 | |

| Stack-RNN-TL | 426 | 0.0.061 | 0.897 | 0.130 | 0.683 | 0.430 | 0.832 | 0.662 |

| Objective . | Algorithm/ dataset . | Number of unique canonical SMILES . | Proportion in threshold . | Internal diversity . | External diversity . | Solubility . | Naturalness . | Synthesizability . | Druglikeness . |

|---|---|---|---|---|---|---|---|---|---|

| Demonstrations | 4941 | 0.635 | 0.896 | – | 0.793 | 0.524 | 0.642 | 0.588 | |

| Unbiased | 528 | 0.266 | 0.919 | 0.412 | 0.691 | 0.586 | 0.588 | 0.609 | |

| PPO-GRL | 2406 | 0.737 | 0.864 | 0.104 | 0.939 | 0.709 | 0.508 | 0.181 | |

| DRD2 | PPO-Eval | 3465 | 0.764 | 0.849 | 0.092 | 0.947 | 0.756 | 0.458 | 0.144 |

| REINFORCE | 5005 | 0.677 | 0.888 | 0.222 | 0.837 | 0.715 | 0.706 | 0.392 | |

| REINFORCE-GRL | 201 | 0.372 | 0.907 | 0.223 | 0.929 | 0.853 | 0.211 | 0.194 | |

| Stack-RNN-TL | 1715 | 0.248 | 0.920 | 0.447 | 0.696 | 0.586 | 0.585 | 0.608 | |

| Demonstrations | 5007 | 1.000 | 0.880 | – | 0.898 | 0.553 | 0.803 | 0.512 | |

| Unbiased | 1903 | 0.927 | 0.915 | 0.346 | 0.687 | 0.546 | 0.652 | 0.605 | |

| PPO-GRL | 4548 | 0.988 | 0.903 | 0.149 | 0.842 | 0.579 | 0.772 | 0.493 | |

| LogP | PPO-Eval | 4969 | 0.999 | 0.733 | 0.061 | 0.983 | 0.731 | 0.449 | 0.075 |

| REINFORCE | 7638 | 0.991 | 0.897 | 0.098 | 0.777 | 0.579 | 0.829 | 0.618 | |

| REINFORCE-GRL | 6796 | 0.941 | 0.914 | 0.317 | 0.686 | 0.546 | 0.698 | 0.630 | |

| Stack-RNN-TL | 6479 | 0.935 | 0.916 | 0.364 | 0.678 | 0.545 | 0.655 | 0.614 | |

| Demonstrations | 3449 | 0.958 | 0.794 | – | 0.073 | 0.482 | 0.560 | 0.479 | |

| Unbiased | 717 | 0.354 | 0.916 | 0.360 | 0.639 | 0.572 | 0.565 | 0.572 | |

| PPO-GRL | 2768 | 0.468 | 0.925 | 0.543 | 0.578 | 0.700 | 0.376 | 0.533 | |

| JAK2 Max | PPO-Eval | 2427 | 0.350 | 0.916 | 0.369 | 0.640 | 0.564 | 0.565 | 0.576 |

| REINFORCE | 2942 | 0.434 | 0.912 | 0.281 | 0.586 | 0.609 | 0.462 | 0.605 | |

| REINFORCE-GRL | 2508 | 0.356 | 0.916 | 0.355 | 0.644 | 0.561 | 0.568 | 0.577 | |

| Stack-RNN-TL | 2376 | 0.343 | 0.917 | 0.370 | 0.650 | 0.557 | 0.578 | 0.580 | |

| Demonstrations | 145 | 0.518 | 0.785 | – | 0.539 | 0.506 | 0.908 | 0.617 | |

| Unbiased | 123 | 0.062 | 0.887 | 0.075 | 0.680 | 0.422 | 0.827 | 0.655 | |

| PPO-GRL | 693 | 0.201 | 0.88 | 0.061 | 0.534 | 0.453 | 0.852 | 0.673 | |

| JAK2 Min | PPO-Eval | 10 | 0.007 | 0.618 | 0.000 | 1.000 | 0.728 | 0.387 | 0.050 |

| REINFORCE | 1078 | 0.140 | 0.891 | 0.086 | 0.681 | 0.500 | 0.887 | 0.677 | |

| REINFORCE-GRL | 490 | 0.070 | 0.897 | 0.142 | 0.682 | 0.432 | 0.831 | 0.671 | |

| Stack-RNN-TL | 426 | 0.0.061 | 0.897 | 0.130 | 0.683 | 0.430 | 0.832 | 0.662 |

Results of experiments with applied optimization threshold on generated or dataset samples. PPO-GRL follows our proposed approach, REINFORCE follows the work in [15], PPO-Eval, REINFORCE-GRL, Stack-RNN-TL are baselines in this study

| Objective . | Algorithm/ dataset . | Number of unique canonical SMILES . | Proportion in threshold . | Internal diversity . | External diversity . | Solubility . | Naturalness . | Synthesizability . | Druglikeness . |

|---|---|---|---|---|---|---|---|---|---|

| Demonstrations | 4941 | 0.635 | 0.896 | – | 0.793 | 0.524 | 0.642 | 0.588 | |

| Unbiased | 528 | 0.266 | 0.919 | 0.412 | 0.691 | 0.586 | 0.588 | 0.609 | |

| PPO-GRL | 2406 | 0.737 | 0.864 | 0.104 | 0.939 | 0.709 | 0.508 | 0.181 | |

| DRD2 | PPO-Eval | 3465 | 0.764 | 0.849 | 0.092 | 0.947 | 0.756 | 0.458 | 0.144 |

| REINFORCE | 5005 | 0.677 | 0.888 | 0.222 | 0.837 | 0.715 | 0.706 | 0.392 | |

| REINFORCE-GRL | 201 | 0.372 | 0.907 | 0.223 | 0.929 | 0.853 | 0.211 | 0.194 | |

| Stack-RNN-TL | 1715 | 0.248 | 0.920 | 0.447 | 0.696 | 0.586 | 0.585 | 0.608 | |

| Demonstrations | 5007 | 1.000 | 0.880 | – | 0.898 | 0.553 | 0.803 | 0.512 | |

| Unbiased | 1903 | 0.927 | 0.915 | 0.346 | 0.687 | 0.546 | 0.652 | 0.605 | |

| PPO-GRL | 4548 | 0.988 | 0.903 | 0.149 | 0.842 | 0.579 | 0.772 | 0.493 | |

| LogP | PPO-Eval | 4969 | 0.999 | 0.733 | 0.061 | 0.983 | 0.731 | 0.449 | 0.075 |

| REINFORCE | 7638 | 0.991 | 0.897 | 0.098 | 0.777 | 0.579 | 0.829 | 0.618 | |

| REINFORCE-GRL | 6796 | 0.941 | 0.914 | 0.317 | 0.686 | 0.546 | 0.698 | 0.630 | |

| Stack-RNN-TL | 6479 | 0.935 | 0.916 | 0.364 | 0.678 | 0.545 | 0.655 | 0.614 | |

| Demonstrations | 3449 | 0.958 | 0.794 | – | 0.073 | 0.482 | 0.560 | 0.479 | |

| Unbiased | 717 | 0.354 | 0.916 | 0.360 | 0.639 | 0.572 | 0.565 | 0.572 | |

| PPO-GRL | 2768 | 0.468 | 0.925 | 0.543 | 0.578 | 0.700 | 0.376 | 0.533 | |

| JAK2 Max | PPO-Eval | 2427 | 0.350 | 0.916 | 0.369 | 0.640 | 0.564 | 0.565 | 0.576 |

| REINFORCE | 2942 | 0.434 | 0.912 | 0.281 | 0.586 | 0.609 | 0.462 | 0.605 | |

| REINFORCE-GRL | 2508 | 0.356 | 0.916 | 0.355 | 0.644 | 0.561 | 0.568 | 0.577 | |

| Stack-RNN-TL | 2376 | 0.343 | 0.917 | 0.370 | 0.650 | 0.557 | 0.578 | 0.580 | |

| Demonstrations | 145 | 0.518 | 0.785 | – | 0.539 | 0.506 | 0.908 | 0.617 | |

| Unbiased | 123 | 0.062 | 0.887 | 0.075 | 0.680 | 0.422 | 0.827 | 0.655 | |

| PPO-GRL | 693 | 0.201 | 0.88 | 0.061 | 0.534 | 0.453 | 0.852 | 0.673 | |

| JAK2 Min | PPO-Eval | 10 | 0.007 | 0.618 | 0.000 | 1.000 | 0.728 | 0.387 | 0.050 |

| REINFORCE | 1078 | 0.140 | 0.891 | 0.086 | 0.681 | 0.500 | 0.887 | 0.677 | |

| REINFORCE-GRL | 490 | 0.070 | 0.897 | 0.142 | 0.682 | 0.432 | 0.831 | 0.671 | |

| Stack-RNN-TL | 426 | 0.0.061 | 0.897 | 0.130 | 0.683 | 0.430 | 0.832 | 0.662 |

| Objective . | Algorithm/ dataset . | Number of unique canonical SMILES . | Proportion in threshold . | Internal diversity . | External diversity . | Solubility . | Naturalness . | Synthesizability . | Druglikeness . |

|---|---|---|---|---|---|---|---|---|---|

| Demonstrations | 4941 | 0.635 | 0.896 | – | 0.793 | 0.524 | 0.642 | 0.588 | |

| Unbiased | 528 | 0.266 | 0.919 | 0.412 | 0.691 | 0.586 | 0.588 | 0.609 | |

| PPO-GRL | 2406 | 0.737 | 0.864 | 0.104 | 0.939 | 0.709 | 0.508 | 0.181 | |

| DRD2 | PPO-Eval | 3465 | 0.764 | 0.849 | 0.092 | 0.947 | 0.756 | 0.458 | 0.144 |

| REINFORCE | 5005 | 0.677 | 0.888 | 0.222 | 0.837 | 0.715 | 0.706 | 0.392 | |

| REINFORCE-GRL | 201 | 0.372 | 0.907 | 0.223 | 0.929 | 0.853 | 0.211 | 0.194 | |

| Stack-RNN-TL | 1715 | 0.248 | 0.920 | 0.447 | 0.696 | 0.586 | 0.585 | 0.608 | |

| Demonstrations | 5007 | 1.000 | 0.880 | – | 0.898 | 0.553 | 0.803 | 0.512 | |

| Unbiased | 1903 | 0.927 | 0.915 | 0.346 | 0.687 | 0.546 | 0.652 | 0.605 | |

| PPO-GRL | 4548 | 0.988 | 0.903 | 0.149 | 0.842 | 0.579 | 0.772 | 0.493 | |

| LogP | PPO-Eval | 4969 | 0.999 | 0.733 | 0.061 | 0.983 | 0.731 | 0.449 | 0.075 |

| REINFORCE | 7638 | 0.991 | 0.897 | 0.098 | 0.777 | 0.579 | 0.829 | 0.618 | |

| REINFORCE-GRL | 6796 | 0.941 | 0.914 | 0.317 | 0.686 | 0.546 | 0.698 | 0.630 | |

| Stack-RNN-TL | 6479 | 0.935 | 0.916 | 0.364 | 0.678 | 0.545 | 0.655 | 0.614 | |

| Demonstrations | 3449 | 0.958 | 0.794 | – | 0.073 | 0.482 | 0.560 | 0.479 | |

| Unbiased | 717 | 0.354 | 0.916 | 0.360 | 0.639 | 0.572 | 0.565 | 0.572 | |

| PPO-GRL | 2768 | 0.468 | 0.925 | 0.543 | 0.578 | 0.700 | 0.376 | 0.533 | |

| JAK2 Max | PPO-Eval | 2427 | 0.350 | 0.916 | 0.369 | 0.640 | 0.564 | 0.565 | 0.576 |

| REINFORCE | 2942 | 0.434 | 0.912 | 0.281 | 0.586 | 0.609 | 0.462 | 0.605 | |

| REINFORCE-GRL | 2508 | 0.356 | 0.916 | 0.355 | 0.644 | 0.561 | 0.568 | 0.577 | |

| Stack-RNN-TL | 2376 | 0.343 | 0.917 | 0.370 | 0.650 | 0.557 | 0.578 | 0.580 | |

| Demonstrations | 145 | 0.518 | 0.785 | – | 0.539 | 0.506 | 0.908 | 0.617 | |

| Unbiased | 123 | 0.062 | 0.887 | 0.075 | 0.680 | 0.422 | 0.827 | 0.655 | |

| PPO-GRL | 693 | 0.201 | 0.88 | 0.061 | 0.534 | 0.453 | 0.852 | 0.673 | |

| JAK2 Min | PPO-Eval | 10 | 0.007 | 0.618 | 0.000 | 1.000 | 0.728 | 0.387 | 0.050 |

| REINFORCE | 1078 | 0.140 | 0.891 | 0.086 | 0.681 | 0.500 | 0.887 | 0.677 | |

| REINFORCE-GRL | 490 | 0.070 | 0.897 | 0.142 | 0.682 | 0.432 | 0.831 | 0.671 | |

| Stack-RNN-TL | 426 | 0.0.061 | 0.897 | 0.130 | 0.683 | 0.430 | 0.832 | 0.662 |

Firstly, we observed from the results that the PPO-GRL model focused on generating compounds that either satisfy the demonstration dataset threshold or are toward the threshold after a few episodes during training in each of the experiments. This early convergence of the PPO-GRL generator (in terms of number of episodes) connotes that an appropriate reward function has been retrieved from the demonstration dataset to facilitate the biasing optimization using RL. Also, while the evaluation function provided an appropriate signal for training the PPO-Eval in some cases, the diversity metrics of the PPO-Eval model were typically less than the PPO-GRL model. We also realized in training that generating focused compounds toward a threshold was accompanied by an increase in the number of invalid SMILES strings. This increase in invalid compounds explains the drop in the number of valid SMILES of PPO-GRL in Table 3. Although the REINFORCE model mostly generated a high number of valid SMILES samples, it was less sample efficient. We reckon that the difference in sample efficiency between PPO-GRL and REINFORCE-GRL is as a result of the variance in estimating |$\Psi $| (see Equation (5)). Thus, a stable estimation of |$\Psi $| is significant to the sample-based objective in Equation (18) since a better background distribution could be estimated. The performance of the REINFORCE-GRL, as shown in Figure 4, reifies this high-variance challenge. Also, the Stack-RNN-TL model recorded the same scores for all metrics across the experiments, as seen in Table 3. This performance of the Stack-RNN-TL model connotes that no focused compounds could be generated in each experiment after two epochs of training but mostly took longer time to train as compared with the other models. We also note that the Stack-RNN-TL model produced a higher number of valid canonical SMILES than the unbiased or prior generator after the fine-tuning step.

Concerning the DRD2 experiment, although the PPO-GRL model’s number of valid SMILES strings was more than the number of unique compounds in the unbiased dataset, it performed approximately one-third lesser than the PPO-Eval model. As shown in Figure 4a, this is observed in the mean predicted DRD2 activity of the PPO-Eval model, which reaches the threshold in fewer episodes than the PPO-GRL and REINFORCE models. The PPO-GRL model produced a higher proportion of its valid compounds in the activity threshold than the REINFORCE model, and the generated samples seem to share a relatively lesser number of substructures with the demonstrations set (external diversity) than the PPO-Eval approach as reported in Table 4. Unsurprisingly, due to the variance problem mentioned earlier, the REINFORCE-GRL performed poorly and generated the fewest number of valid compounds with more than half of its produced SMILES being less than the DRD2 activity threshold.

Regarding the logP optimization experiment, most of the compounds sampled from all the generators had logP values that fell within the range of |$1$| and |$5$|. However, while the REINFORCE-GRL and Stack-RNN-TL models recorded an average logP value of approximately |$1.6$|, the PPO-GRL, PPO-Eval and REINFORCE models recorded higher average logP values closer to the demonstration dataset’s average logP value, as shown in Figure 4b. Considering that the PPO-GRL model was trained without the reward function used to train the PPO-Eval and REINFORCE generators, our proposed approach was effective at recovering a proper reward function for biasing the generator. Interestingly, the samples of the PPO-GRL generator recorded better diversity scores and were deemed more druglike than the PPO-Eval generator’s samples, as shown in Table 3.

On the JAK2 maximization experiment, the PPO-Eval method could not reach the threshold. The PPO-GRL generator reached the threshold in less than |$100$| episodes but with fewer valid SMILES strings than the REINFORCE generator. As shown in Table 4, the proportion of compounds in the JAK2 max threshold of the PPO-GRL generated samples, when compared with the other models in the JAK2 maximization experiment, indicates that the PPO-GRL model seems to focus on the objective early in training than the other generators.

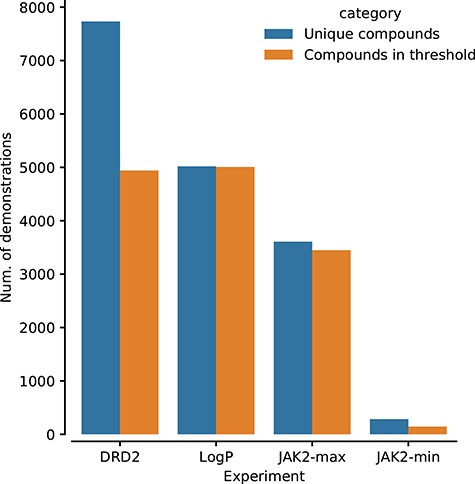

On the other hand, the JAK2 minimization experiment provides an insightful view of the PPO-GRL behavior despite its inability, as well as the other models, to reach the threshold in Figure 4d. In Figure 5, we show the size of the demonstrations dataset in each experiment and the number of compounds that satisfy the experiment’s threshold. We think of the proportion of each demonstration dataset satisfying the threshold as vital to the learning objective, and hence, fewer numbers could make learning a useful reward function more challenging. Hence, the size and quality of the demonstration dataset contribute significantly to learning a useful reward function. We suggest this explains the PPO-GRL generator’s inability to reach the JAK2 minimization threshold. Therefore, it is no surprise that the REINFORCE-GRL generator’s best mean pIC|$_{50}$| value was approximately the same as that of the pretrained model’s score, as seen in Figure 4d. We note that the number of unique PPO-GRL generated compounds was almost five times larger than the demonstrations with approximately the same internal variation. It is worth noting that while the REINFORCE approach used the JAK2 minimization reward function of [15], the PPO-GRL method used the negated rewards of the learned JAK2 maximization reward function. This ability to transfer learned reward functions could be a useful technique in drug discovery campaigns.

The size of the demonstrations dataset used in each experiment of this study.

In a nutshell, the preceding results and analysis show that our proposed framework offers a rational approach to train compound generators and learn a reward function, transferable to related domains, in situations where specifying the reward function for RL training is challenging or not possible. While the PPO-Eval model can perform well in some instances, the evaluation function may not be readily available or expensive to serve in the training loop as a reward function in some real-world scenarios such as a robot synthesis or molecular docking evaluation function.

Conclusion

This study reviewed the importance of chemical libraries to the drug discovery process and discussed some notable existing proposals in the literature for evolving chemical structures to satisfy specific objectives. We pointed out that while RL methods could facilitate this process, specifying the reward function in certain drug discovery studies could be challenging, and its absence renders even the most potent RL technique inapplicable. Our study has proposed a reward function learning and a structural evolution model development framework based on the entropy maximization IRL method. The experiments conducted have shown the promise such a direction offers in the face of the large-scale chemical data repositories that have become available in recent times. We conclude that further studies into improving the proposed method could provide a powerful technique to aid drug discovery.

Further Studies

An area for further research could be techniques for reducing the number of invalid SMILES while the generator focuses on the training objective. An approach that could be investigated is a training method that actively teaches the reward function to distinguish between valid and invalid SMILES strings. Also, a study to provide a principled understanding of developing the demonstrations dataset could be an exciting and useful direction. Additionally, considering the impact of transformer models in DL research, the gated transformer proposed by Parisotto et al. [44] to extend the capabilities of transformer models to the RL domain offers the opportunity to develop better compound generators. In particular, our work could be extended by replacing the Stack-RNN used to model the generator with a memory-based gated transformer.

The size and quality of chemical libraries to the drug discovery pipeline are crucial for drug design.

Existing reinforcement learning-based de novo drug design methods require a reward function that could be challenging to specify or design.

We propose a method for learning a transferable reward function from a given set of compounds.

The proposed training approach produces a compound generator and a reward function.

Our findings indicate that the proposed method provides a rational alternative for scenarios where the reward function design could be challenging.

Data Availability

The source code and data of this study are available at https://github.com/bbrighttaer/irelease.

Acknowledgments

We would like to thank Zhihua Lei, Kwadwo Boafo Debrah and all reviewers of this study.

Funding

This work was partly supported by SipingSoft Co. Ltd.

Conflict of interest

The authors declare that they have no competing interests.

Brighter Agyemang completed is a research assistant at SipingSoft Co. Ltd and a PhD candidate at the University of Electronic Science and Technology of China (UESTC). His current research interests are reinforcement learning, machine learning, the internet of things and cheminformatics.

Wei-Ping Wu received a PhD in metallurgy from RWTH Aachen, Germany, in 1992. He is a professor at UESTC. His research interests include the application of artificial intelligence in drug design, IoT and mechanical manufacturing.

Daniel Addo is a PhD student at UESTC. His current research interests are machine learning, internet of things and security in deep brain implants.

Michael Y. Kpiebaareh is a PhD candidate at UESTC. His current research interests include recommendation systems for configurable product design engineering and machine learning for mass customization.

Ebenezer Nanor is a research assistant at Sipingsoft Co. Ltd, China. His research interests include the development of oversampling techniques for imbalanced and high-dimensional datasets for drug design.

Charles Roland Haruna received a PhD in computer science and technology at UESTC in 2020. His research interests are distributed data, data cleaning and data privacy.

References