Georgia Galanou Luchen, Toni Fera, Scott V. Anderson, David Chen, Pharmacy Futures: Summit on Artificial Intelligence in Pharmacy Practice, American Journal of Health-System Pharmacy, Volume 81, Issue 24, 15 December 2024, Pages 1327–1343, https://doi.org/10.1093/ajhp/zxae279


Introduction

The American Society of Health-System Pharmacists (ASHP) convened pharmacists and other stakeholders for the Summit on Artificial Intelligence (AI) in Pharmacy Practice, held on June 9 and 10, 2024, as part of the inaugural ASHP Pharmacy Futures Meeting in Portland, OR. The goal of the Summit was to build the expertise of pharmacy practitioners and thought leaders in the evolving landscape of AI adoption in healthcare and to use this knowledge to advance pharmacy practice. Through interactive sessions, pharmacy practitioners, leaders, and innovators collaborated on, learned about, and helped shape the ongoing integration of AI in pharmacy practice and research. Lisa Stump, MS, RPh, FASHP, senior vice president and chief information and digital transformation officer for Yale School of Medicine and Yale New Haven Health System, opened the Summit with a call to action for pharmacists to lead AI development and implementation within their organizations to ensure that AI tools enhance the quality, safety, and efficiency of medication-related processes and patient care (Figure 1). Stump also emphasized that although AI has the potential to transform medication management processes and improve patient outcomes, it also carries the potential for unintended consequences, so careful validation is required. AI continues to be a hot topic for health systems, and its impact on the profession has been discussed in several important publications.

Lisa Stump welcomes participants to the AI Summit. Photo courtesy of ASHP.

The ASHP Statement on the Use of Artificial Intelligence in Pharmacy highlights several key areas where pharmacists can take the lead, including implementation and ongoing evaluation of AI-related applications and technologies that affect medication-use processes and tasks, such as identifying which parts of the medication-use process can benefit from AI, choosing the right AI methods, and setting standards for clinical validation of AI tools.1 Additionally, pharmacists must assist in validating AI for clinical use and identify strategies to mitigate unintended consequences. The role of AI in pharmacy practice is evolving and has important implications; AI is poised to transform pharmacy informatics, patient care, pharmacy operations, and education. As AI becomes more integrated into healthcare, pharmacy education will need to evolve, and the workforce will need training in the effective application of AI, including an understanding of its limitations and ethical considerations. This expansion of technology will also open new career paths in pharmacy informatics and data science. The 2019 ASHP Commission on Goals report Impact of Artificial Intelligence on Healthcare and Pharmacy Practice also identified trends in the use of AI in clinical practice and healthcare delivery; explored legal, regulatory, and ethical aspects of healthcare AI; and discussed potential opportunities for ASHP in healthcare AI.2 ASHP’s 2023 Commission on Goals report also addressed AI with focused attention on optimizing medication therapy through advanced analytics and data-driven healthcare.3 Key domains with implications for the profession and strategic planning included data stewardship and governance, enhancing the quality of patient care, addressing health inequity, managing population health, and workforce preparation.
The ASHP and ASHP Foundation Pharmacy Forecast 2024 dedicated a chapter to AI and data integration.4 Key points included the importance of developing an ethical framework to ensure these tools are optimally integrated in health systems and medication management, and the acknowledgment that adoption might be slow if there is a gap between what AI can technically do and what regulations in pharmacy practice allow. The Pharmacy Forecast report also highlighted that keeping patient safety at the forefront is essential to manage the risks of both the algorithms themselves and how humans use AI-generated content. In keeping with the strategic considerations outlined in this earlier work, the Summit aimed to explore more deeply the implications of AI for society and healthcare, patient care, the profession, and individual pharmacy practitioners. The Summit was designed to catalyze the future of pharmacy practice by fostering a collaborative environment where pharmacy practitioners, leaders, technologists, and innovators could explore the integration of AI in pharmacy practice and healthcare.

Summit design and Town Hall methods

An advisory council (AC) made up of ASHP members with expertise in AI and ASHP staff was convened in December 2023 to develop the Summit content and programming format. The AC identified focus topics for the meeting, faculty experts to consider, and a framework for the interactive Town Hall session. The Summit plenary sessions provided a practical overview of current AI implementations in pharmacy practice, a global understanding of AI opportunities in healthcare, and a review of ethical considerations in AI use. The Summit programming included presentations on clinical, operational, and leadership applications of AI in pharmacy, practice-based use cases, and facilitated group discussions. Because attendee engagement was a priority, and with the intent to create a “roadmap for the future,” Summit attendees were asked in a Town Hall format to contribute their perspectives on opportunities, challenges, limitations, and key considerations for AI adoption in pharmacy practice, reflecting on 4 areas of focus (ie, domains):

What are the implications of AI for patient care?

What are the AI implications for the pharmacy profession?

What are the implications of AI for pharmacy practitioners?

What are the implications of AI for society and the healthcare landscape?

The AI Town Hall was conducted over a 2½-hour period that opened with a 30-minute overview of key points from the Summit presenters. Discussion questions were developed based on input from the AC and other guiding documents.1-3,5,6 Participants were divided into 4 groups; each group discussed one domain for the first hour and a different domain for the second hour. For each domain, participants were also asked, “What would you recommend to pharmacy professionals as an action item?” This matrix process was designed to capture the broadest possible range of ideas.

The Town Hall was facilitated by faculty, ASHP staff, and key leaders from ASHP’s Sections and Forums. Participation was open to all Summit and Pharmacy Futures attendees. Town Hall participants were provided with a Qualtrics (Silver Lake; Qualtrics, Seattle, WA) survey link and asked after each discussion segment to submit their “Key Takeaways” from the discussion, either individually or as a discussion group. More than 350 Key Takeaways were submitted; these were analyzed for common themes and sorted by alignment with the core areas of focus defined by the AC (eg, pharmacy profession, patient safety, and healthcare). Summary recommendations were developed to reflect the “wisdom of the crowd.” Based on a review of those requesting continuing education credit for the Town Hall session (n = 313), participants included pharmacists and technicians and represented 43 states, the District of Columbia, and 2 other countries.

Following the initial analysis for common themes and development of recommendations, these items were placed into a Qualtrics survey instrument and emailed to all individuals who claimed continuing education credit for the ASHP Summit Town Hall, the Summit Advisory Council, the Section of Pharmacy Informatics and Technology Executive Committee, the Section of Digital and Telehealth Practitioners Executive Committee, and Summit presenters. Recipients were asked to evaluate the appropriateness of the analysis.

The AI revolution in healthcare

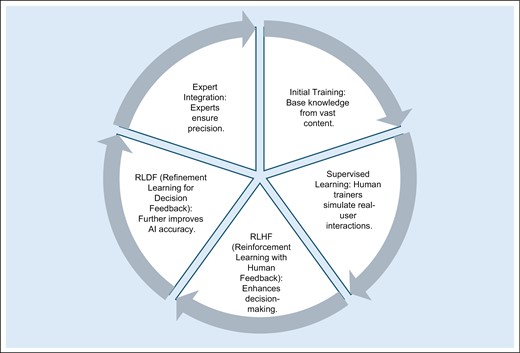

The Summit keynote presenter, Harvey Castro, MD, chief medical AI officer for Helpp AI, entrepreneur, and consultant, provided a window into the possibilities of AI and how he believes it will revolutionize healthcare (Figure 2). Castro noted that AI has already affected medicine and is being integrated into providers’ (ie, physicians, pharmacists, nurses, and other patient care professionals) routine work. Revolutionary applications that will dramatically affect direct patient care are also being developed (eg, diagnostic devices, patient care bots, and smart glasses). Other AI tools are being used or developed to enhance patient and provider education and engagement (eg, language translation technology, tailored discharge instructions in the physician’s voice) and health record documentation (eg, care alerts and automatically generated notes). AI is also being applied to research (data analysis, study design), predictive analytics (missed appointments, prediction of sepsis, prediction of stroke), drug discovery and design, and personalized medicine. AI adoption is on a steep trajectory, in part because of the evolution of large language models (LLMs) and generative AI, such as OpenAI’s ChatGPT, which can summarize, generate, and predict new text at a speed, and from data sources, far greater than the most intelligent humans could process. Castro emphasized that AI should be leveraged in healthcare to make humans more productive and efficient, but AI will not replace human judgement; reinforcement learning from human feedback (RLHF) is required (Figure 3). He urged pharmacists to embrace AI and to collaborate and use their collective knowledge to refine the technology and enhance the profession. Along with the benefits of AI come a host of concerns and challenges; awareness of its risks and limitations is key (Box 1).
Potential AI risks that practitioners need to be aware of include data security and privacy, environmental concerns, cost implications, ethical dilemmas, and liability. For example, gains in efficiency need to be weighed against the considerable energy consumed by the computing systems that run LLMs, which leaves a large carbon footprint. Castro described limitations of AI, including biases and algorithm training gaps. For example, training gaps occur when there is model “drift,” in which results are no longer as accurate as when the model was initially validated. He addressed human factors concerns such as “over trust” or fear of losing the “art” of medicine, emphasizing that the goal should be to maximize the positive impact while minimizing unintended consequences. Castro framed the need to balance the benefits and risks of AI as a call to action for pharmacists to embrace AI and shape its integration into healthcare practice. Another important consideration is how to engage patients in the use of AI, including transparency and informed consent. As AI becomes seamlessly integrated into systems and processes, it may be difficult to provide transparency, even when well intended. Finally, to stay abreast of changes, Castro urged attendees to network with others, follow industry leaders through social media, and embrace opportunities to implement new technologies in their practice setting.
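Castro’s description of drift, in which a model’s results are no longer as accurate as when it was initially validated, can be turned into a simple monitoring check. The Python sketch below is illustrative only: it assumes a binary model whose predictions can later be compared with observed outcomes, uses plain accuracy as the metric, and treats a drop of more than a hypothetical 5 percentage points below the validation baseline as a drift signal. None of these choices comes from the Summit presentation.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DriftCheck:
    baseline_accuracy: float  # accuracy recorded when the model was initially validated
    current_accuracy: float   # accuracy on a recent window of cases with known outcomes
    drifted: bool             # True when the drop exceeds the tolerance


def accuracy(predictions: Sequence[int], outcomes: Sequence[int]) -> float:
    """Fraction of predictions that match the observed outcomes."""
    if not predictions or len(predictions) != len(outcomes):
        raise ValueError("predictions and outcomes must be non-empty and the same length")
    correct = sum(p == o for p, o in zip(predictions, outcomes))
    return correct / len(predictions)


def check_drift(
    baseline_accuracy: float,
    predictions: Sequence[int],
    outcomes: Sequence[int],
    tolerance: float = 0.05,  # hypothetical threshold; a real program would set this locally
) -> DriftCheck:
    """Flag drift when recent accuracy falls more than `tolerance` below the baseline."""
    current = accuracy(predictions, outcomes)
    return DriftCheck(baseline_accuracy, current, current < baseline_accuracy - tolerance)


# Hypothetical recent window: 6 of 10 predictions match the observed outcomes.
recent_predictions = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
recent_outcomes = [1, 0, 0, 1, 0, 0, 0, 1, 1, 0]
result = check_drift(0.90, recent_predictions, recent_outcomes)
print(result)  # DriftCheck(baseline_accuracy=0.9, current_accuracy=0.6, drifted=True)
```

Accuracy alone can hide drift when the outcome is rare, because a model that almost never predicts a positive can still appear accurate; a production monitor would more likely also track measures such as sensitivity, positive predictive value, or calibration.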

ᵃUsed with permission from Harvey Castro.

ASHP Chief Executive Officer Paul Abramowitz welcomes keynote presenter Harvey Castro. Photo courtesy of ASHP.

Training AI models and fine-tuning process. Illustration used with permission from Harvey Castro.

Building the base: infrastructure needed to support AI

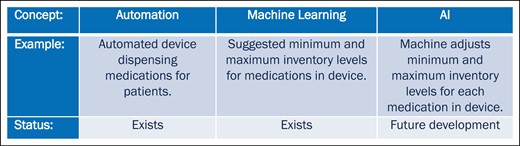

To level-set the group, Ghalib Abbasi, PharmD, MS, MBA, system director, pharmacy informatics at Houston Methodist Hospital System, and Benjamin R. Michaels, PharmD, data science pharmacist at UCSF Health, presented an overview of terms associated with AI in pharmacy practice (also see Box 2), the skills and services needed for pharmacy data team members, and a strategy for securing executive leadership buy-in for AI utilization and adoption. AI is not new; it has evolved over several decades, but expectations have often exceeded reality. This holds true today: for the most part, actual outcomes generated by LLMs lag behind their advertised potential. It is important to begin the AI conversation with a common definition of AI, which can vary even within the industry. ASHP defines AI as “the theory and development of computer systems to perform tasks normally requiring human intelligence, such as visual perception, language processing, learning, and problem solving.”1 Automation, machine learning, and AI are generally distinguished by the level of programming complexity and intervention needed to produce an outcome (Figure 4).
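The difference between automation and machine learning can be illustrated with a deliberately tiny example. In the hypothetical Python sketch below, the automated rule uses a threshold that a person hard-coded, while the machine-learning version learns its threshold from labeled examples by picking the candidate value that makes the fewest errors (a one-feature decision stump). The data, the threshold, and the function names are assumptions for illustration, not clinical values; broader AI systems add capabilities, such as language processing, that a single learned threshold does not capture.

```python
from typing import Sequence


def automated_rule(value: float, threshold: float = 10.0) -> bool:
    """Automation: a person chose the threshold and hard-coded it."""
    return value > threshold


def learn_threshold(values: Sequence[float], labels: Sequence[bool]) -> float:
    """Machine learning in its simplest form: choose the threshold that makes
    the fewest errors on labeled examples (a one-feature decision stump)."""
    if not values or len(values) != len(labels):
        raise ValueError("values and labels must be non-empty and the same length")
    # Candidate thresholds: just below the smallest value, then each observed value.
    candidates = [min(values) - 1.0] + sorted(set(values))
    best_threshold = candidates[0]
    best_errors = len(values) + 1
    for candidate in candidates:
        errors = sum((v > candidate) != label for v, label in zip(values, labels))
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


# Hypothetical labeled examples (not clinical data): True means "should be flagged".
values = [2.0, 4.0, 6.0, 8.0, 12.0, 14.0]
labels = [False, False, False, True, True, True]

learned = learn_threshold(values, labels)  # 6.0: values above 6.0 are flagged
print(automated_rule(8.0), 8.0 > learned)  # False True
```

The same input (8.0) is treated differently by the two approaches: the hand-set rule misses it, while the learned threshold reflects the pattern in the labeled data. The learned rule is only as good as the examples it was trained on, which is one reason validation and monitoring matter.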

Artificial Intelligence (AI): The use of algorithms or models to perform tasks and exhibit behaviors such as learning, making decisions, and making predictions.ᵃ

Chatbot: A computer program designed to simulate conversation with human users, especially over the internet.ᵇ

Disruptive Technology: A specific technology that can fundamentally change not only established technologies but also the rules and business models of a given market, and often business and society overall. The internet is the best-known example in recent times; the personal computer and telephone, in previous generations.ᵇ

Drift: Model drift refers to the degradation of model performance due to changes in data or changes in relationships between input and output variables. Model drift (also known as model decay) can negatively impact model performance, resulting in faulty decision-making and bad predictions.ᶜ

Generative Artificial Intelligence: A type of AI (eg, OpenAI’s ChatGPT) that uses machine learning algorithms to create new digital content.ᶜ

Hallucination: Generated content that is nonsensical or unfaithful to the provided source content. There are two main types of hallucinations: intrinsic and extrinsic. An intrinsic hallucination is a generated output that contradicts the source content; an extrinsic hallucination is a generated output that cannot be verified from the source content (ie, output can neither be supported nor contradicted by the source).ᶜ

Large Language Models (LLMs): Neural networks that perform natural language processing and are capable of summarizing, generating, and predicting new text. LLMs are trained on massive amounts of text, billions or trillions of words, often from the internet. They have been functionally described as producing text by predicting the next word in a sentence based on the preceding words.ᵃ

Machine Learning: A set of techniques that can be used to train AI algorithms to improve performance at a task based on data.ᵈ


ᵃSource: Smoke S. Artificial intelligence in pharmacy: a guide for clinicians. Am J Health-Syst Pharm. 2024;81(14):641-646.

ᵇSource: Oxford Reference. Definition [search by term]. https://www.oxfordreference.com

ᶜSource: IBM Corporation. What is model drift? Updated July 24, 2024. https://www.ibm.com/topics/model-drift

ᵈSource: Executive Order 14110. Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. October 30, 2023. https://www.federalregister.gov/documents/2023/11/01/2023-24283/safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence
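The LLM definition above describes text generation as predicting the next word from the preceding words. A count-based bigram model, sketched below in Python, is the simplest version of that idea: it predicts the next word from only the single preceding word, using how often word pairs appear in a training text. This is a toy analogy under stated assumptions (a made-up corpus and whitespace tokenization); LLMs instead use neural networks, subword tokens, and far longer contexts.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count how often each word follows each other word in the training text."""
    words = text.lower().split()
    model: Dict[str, Counter] = defaultdict(Counter)
    for previous, following in zip(words, words[1:]):
        model[previous][following] += 1
    return model


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if it was never followed."""
    counts = model.get(word.lower())  # .get does not create an empty entry
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text; a real LLM learns from billions or trillions of words.
corpus = (
    "the pharmacist reviews the order . "
    "the pharmacist verifies the dose . "
    "the pharmacist reviews the chart"
)
model = train_bigrams(corpus)
print(predict_next(model, "the"))         # pharmacist
print(predict_next(model, "pharmacist"))  # reviews
```

Because the model chooses words by frequency alone, it can produce fluent sequences that no source actually supports, a small-scale analogue of the extrinsic hallucination defined above.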


Distinguishing between automation, machine learning and AI in a pharmacy model. Illustration used with permission from Benjamin R. Michaels.

Pharmacy enterprise infrastructure to support AI.

AI initiatives are likely undergoing evaluation or deployment at most health systems, and it is essential for pharmacy to be involved, particularly when tools affect clinical care and medication-use processes (Box 3). The first step is to identify the organization’s current infrastructure for informatics support, both organization-wide and within the pharmacy enterprise. Expert skills that pharmacy data team members need to support an AI infrastructure include a deep understanding of pharmacy workflow, processes, and clinical care; the ability to access and analyze large datasets; and the ability to manage the electronic health record (EHR) pharmacy module and its integration with other systems and data. As AI models are evaluated and implemented, inter- and intraorganizational collaboration is needed, and resources must be allocated to vendor relations. While the learning curve may be steep, there is a significant opportunity for pharmacists to become involved in the development of AI models. Michaels suggested starting by selecting a project for which data are available and then learning the skills and programming languages needed to turn the data into project outcomes.

Operational applications | Clinical applications

Adapted with permission from reference 8.

When beginning an initiative, pharmacy leaders should identify the quantifiable benefits expected from a pharmacy data team and develop a strategy for securing C-suite buy-in for AI utilization and adoption. As with any solid business plan, those implementing AI should determine what problem needs to be solved, how AI will affect current workflow and the workforce, the expected return on investment, and how the new AI implementation aligns with existing AI initiatives and resources (including experts) in the organization.

To summarize, there are significant AI implications for patient care, health systems, the pharmacy profession, the pharmacy workforce, and society in general. Health systems will need to foster internal and external collaborations and build an infrastructure to support AI implementation and ongoing evaluation. There is an opportunity to embrace new technology and to implement it safely and effectively, but this requires knowledge and an understanding of how it works, including its strengths as well as its limitations.

Infrastructure for applying AI to clinical pharmacy.

AI models can rapidly discover patterns in data, including clinical data. Andrea Sikora, PharmD, MSCR, BCCCP, FCCM, FCCP, clinical associate professor, University of Georgia College of Pharmacy, noted that, with this capability, current AI models can apply novel variables (unique parameters an AI model can discover through its data processing) derived from complex data domains such as image analysis, genomics, and electronic health record modeling, but they may struggle to interpret the data, particularly medication-related data. Thus far, most AI models have either excluded medications from their datasets (focusing on laboratory values, diagnoses, etc) or included only simplistic data, such as elements within a charge description master. Currently, there is no high-quality common language for medication learning models. Sikora noted that in more complex scenarios, such as the care of patients in the intensive care unit, medication-related data within models have not typically captured patient factors that affect drug response, patient factors that affect clinical outcomes, medication safety, or medication efficacy within specific scenarios. In short, without a common language and standardization for medication learning models, data may be misinterpreted, which could decrease the validity of results. For example, a drug dosage that is appropriate in one clinical situation may be entirely inappropriate in another when multiple factors are considered. To close this gap, Sikora suggested adopting common data models for medications, medication-oriented methodology, and metrics that address clinical bias. Standardization would facilitate equity, reproducibility, and generalizability across datasets.
Sikora proposed adoption of the “FAIR” criteria (Findable, Accessible, Interoperable, and Reusable) as a foundation for a common data model to support machine readability for medication-related data.7 Sikora stressed that clinical judgement and conducting research to validate models will remain an ongoing requirement to ensure safe and reliable application of AI in clinical pharmacy.
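Sikora’s proposal can be made concrete with a minimal sketch of a single medication record in a common data model. The Python below is an assumption-laden illustration, not a published standard or the presenter’s model: the field names, the mapping of fields to the FAIR principles, and the identifier formats are hypothetical, and RxNorm is named only as an example of a standard drug vocabulary (the drug code is a placeholder). Carrying patient context (care setting, weight) alongside the dose reflects the point that the same dose can be appropriate in one situation and inappropriate in another.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass(frozen=True)
class MedicationRecord:
    # Findable: a persistent, unique identifier (format is hypothetical)
    record_id: str
    # Accessible: where an authorized user can retrieve the record (hypothetical)
    source_system: str
    # Interoperable: a named standard vocabulary plus a code from it
    code_system: str
    drug_code: str
    dose: float
    dose_unit: str
    route: str
    # Patient context that can change whether the same dose is appropriate
    care_setting: str
    weight_kg: Optional[float]
    # Reusable: terms of reuse and provenance
    license: str
    provenance: str


REQUIRED_TEXT_FIELDS = ("record_id", "source_system", "code_system", "drug_code",
                        "dose_unit", "license", "provenance")


def missing_fields(record: MedicationRecord) -> List[str]:
    """List required descriptive fields that are blank."""
    return [name for name in REQUIRED_TEXT_FIELDS if not getattr(record, name).strip()]


def to_json(record: MedicationRecord) -> str:
    """Serialize to JSON with stable key order so records can be exchanged and compared."""
    return json.dumps(asdict(record), sort_keys=True)


record = MedicationRecord(
    record_id="med-admin-0001",
    source_system="example-data-warehouse",
    code_system="RxNorm",
    drug_code="RXCUI-PLACEHOLDER",  # placeholder, not a real concept code
    dose=500.0,
    dose_unit="mg",
    route="IV",
    care_setting="ICU",
    weight_kg=70.0,
    license="internal-research-use",
    provenance="EHR medication administration record, nightly extract",
)
print(missing_fields(record))                    # []
print(json.loads(to_json(record))["dose_unit"])  # mg
```

A shared, machine-readable structure like this is what would let the same validation code run across datasets from different institutions; the hard part in practice is agreeing on the vocabulary and context fields, not the serialization.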

Key Points:

Understanding AI’s potential benefits and limitations allows the pharmacy enterprise to be more proactive and embrace new technology while minimizing potential unintended consequences.

Pharmacy team members can shape and guide the development and implementation of AI.

AI shows great potential to support evidence-based drug utilization and comprehensive medication management.

Integration of AI into patient care will fundamentally change practice and will require pharmacy practitioners to adapt and learn new skills.

Responsible use of AI requires key infrastructure including datasets meeting the FAIR criteria, common data models, methods transparency, ethical standards, and clinical implementation studies.

Ethical dimensions of AI in pharmacy practice (The Joseph A. Oddis Ethics Colloquium1)

In February 2020, the Department of Defense (DOD) adopted 5 AI ethical principles with a host of related considerations: AI should be responsible (privacy and security), equitable (bias and fairness, equitable access, diversity/equity/inclusion), traceable (transparency and explainability), reliable (accountability and liability), and governable (human oversight and control, continued monitoring and evaluation).5,8 These ethical principles and considerations for adopting AI have important implications for the pharmacy profession and patient care. Kenneth A. Richman, PhD, professor of philosophy and health care ethics at MCPHS, in collaboration with Scott D. Nelson, PharmD, MS, ACHIP, FAMIA, associate professor, Vanderbilt University Medical Center, and Casey Olsen, PharmD, informatics pharmacy manager – inpatient, Advocate Health, addressed ethical implications of AI in two presentations, one on pharmacy practice (AI Behind the Counter) and one on patient care (AI and the Patient Experience). Each presentation was followed by breakout groups and a discussion session guided by questions about case studies describing ethical dilemmas in AI. Richman described AI as a disruptive technology and explained how to approach its ethical implications for pharmacy practice, including the values relevant to choosing and implementing AI. He explained that ethical dilemmas emerge when competing values conflict (Box 4). For example, AI can improve efficiency, but it comes with a large carbon footprint; here, the conflict between the values of efficiency and sustainability creates an ethical dilemma. Richman stated that when values compete, these dilemmas must be managed and choices made, even though the dilemmas themselves will not be resolved. Understanding and applying ethical principles is necessary for ethical stewardship of AI and for making choices guided by both facts and values. Nelson shared an example of how ethical dilemmas and related choices can shape equity and bias when evaluating AI models.

Values Relevant to AI, the Patient-Provider Experience, and Ethical Dilemmasᵇ

Values applicable to AI: efficiency, validity, reliability, viability, governability, sustainability

Values specific to the patient experience: vision/vocation, transparency, responsibility, trustworthiness

Example ethical dilemmas: beneficence and respect for persons; nonmaleficence and justice; efficiency; potential for additional unintended consequences

ᵃAdapted with permission from Kenneth A. Richman.

The focus of the second presentation was how AI is poised to change the patient experience and the provider-patient relationship. When polled during the presentation, about a quarter of responding Summit attendees said that AI had already changed their interactions with patients, and nearly all said they expect AI to change how they interact with patients in the future. Richman described how, over the years, the provider-patient relationship has shifted from a directive, paternalistic model to a consumer model, in which the patient chooses a course of action from provider-suggested options, and, more recently, to a collaborative model, in which the provider and patient jointly develop a plan of care. Additional values apply when AI is used in patient care, including the vision and vocation of the profession, transparency, responsibility, and trustworthiness (Box 4). For example, if a provider relies on AI to provide a diagnosis (a “mindless” function for the technology) but does not understand and cannot articulate how the AI reached its conclusion, this will affect patient trust in the decision that informs their care. In this scenario, does the value of increased efficiency outweigh the value of transparency? Patients differ in how much they trust AI compared with provider-guided care, so transparency is key.9 The resulting conflict is an ethical dilemma that must be addressed by applying ethical principles. Olsen closed the Ethics Colloquium with a discussion of the importance of transparency for trust and the patient-provider relationship.

Key Points:

AI is a disruptive technology that has implications for the provider-patient relationship.

Implementing AI in healthcare requires balancing competing values.

Ethical analysis involves identifying relevant values and dilemmas, which allows decisions to be made based on sound reasoning.

AI applications in healthcare have significant potential benefits but can put strain on the provider-patient relationship as ethical dilemmas emerge.

Identifying relevant values and applying an ethical framework to address ethical dilemmas can help to implement AI responsibly.

Safety and efficacy: “package inserts” for AI models?

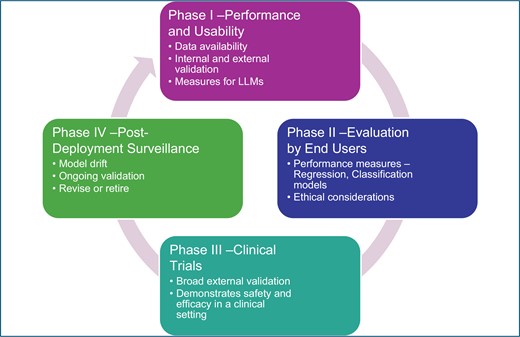

The Food and Drug Administration (FDA) has regulated software that meets the criteria to be deemed a device since guidance was first issued in 2013; the products most often approved are diagnostic radiology applications.10 Updated guidance released in September 2022 defines devices as including “software that is intended to provide decision support for the diagnosis, treatment, prevention, cure, or mitigation of diseases or other conditions (often referred to as clinical decision support software).”11 The guidance further distinguishes between nondevice software (eg, evidence-based order sets for the healthcare provider to choose from, drug-drug or drug-allergy alerts, therapeutic duplication alerts) and AI software that would be considered a device (eg, a software function that analyzes patient-specific medical information to detect a life-threatening condition such as stroke or sepsis, software that provides possible diagnoses based on image analysis, software that analyzes heart failure patient health data to predict a need for hospitalization). Risk is greater when AI is used for clinical decision-making, and that risk must be balanced against the benefit. In their presentation titled “Between the Lines: Reading Package Inserts for AI Models,” Nelson and Olsen presented an approach to AI review and validation analogous to the rigor used in drug product development and approval. Product labeling (the FDA-approved package insert for a drug) contains detailed information supporting product safety and efficacy, based on the scientific evidence presented for the intended use. A package insert for an AI model might likewise include the results of clinical trials and describe indications and the intended population for use.12,13 Olsen also described a phased approach to AI model validation similar to the phases of clinical trials used for FDA drug and device approvals (Figure 5).
The evidence gathered for validation could then be collated in a format organized like a package insert for a device or drug. For example, a model brief, or “package insert,” might include the model’s indications (intended population and outcomes), features (data structure), evidence of performance (training data description, validation strategy, and performance scores), use and directions (implementation recommendations), and cautions/warnings.12 It is important to note that the performance of an AI model may degrade significantly over time. This “drift” occurs when relationships between variables change, the underlying data changes, or clinical practice or evidence changes.14 The model must then be recalibrated; thus, as with medications, a long-term maintenance or postmarketing plan is needed, including consideration of when to remove or retire the model. In any case, a standardized approach to the model brief, including validation data, will improve the ability to evaluate the technology and increase confidence in its outputs. Regardless of the approach, pharmacists should be familiar with and educated in the elements required for a thorough review of the safety and efficacy of AI used for clinical decision support.
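The sections of such a model brief map naturally onto a simple data structure. The Python sketch below is illustrative only: the class names, field names, and completeness check are hypothetical and are not drawn from FDA labeling requirements or from the presenters' template. They simply mirror the sections listed above so that a reviewer could flag which ones a vendor left blank.

```python
from dataclasses import dataclass, field


@dataclass
class PerformanceEvidence:
    training_data: str  # description of the data used to train the model
    validation_strategy: str  # eg, temporal hold-out or external site validation
    scores: dict[str, float] = field(default_factory=dict)  # metric name -> value


@dataclass
class ModelBrief:
    """Hypothetical 'package insert' for an AI model, one field per section."""

    name: str
    indications: str  # intended population and outcomes
    features: list[str]  # input data elements (data structure)
    evidence: PerformanceEvidence  # evidence of performance
    directions: str  # use and directions (implementation recommendations)
    warnings: list[str] = field(default_factory=list)  # cautions/warnings

    def missing_sections(self) -> list[str]:
        """Return the sections left empty, as a checklist for reviewers."""
        checks = {
            "indications": bool(self.indications.strip()),
            "features": bool(self.features),
            "evidence.training_data": bool(self.evidence.training_data.strip()),
            "evidence.validation_strategy": bool(self.evidence.validation_strategy.strip()),
            "evidence.scores": bool(self.evidence.scores),
            "directions": bool(self.directions.strip()),
            "warnings": bool(self.warnings),
        }
        return [section for section, present in checks.items() if not present]


# Usage with made-up values: a brief submitted without cautions/warnings.
brief = ModelBrief(
    name="example-deterioration-model",
    indications="Adult inpatients; predicts ICU transfer within 24 hours",
    features=["heart rate", "respiratory rate", "lactate"],
    evidence=PerformanceEvidence(
        training_data="Hypothetical single-site admissions",
        validation_strategy="Temporal hold-out",
        scores={"AUROC": 0.80},
    ),
    directions="Display score to rapid response team; do not auto-page",
)
print(brief.missing_sections())  # ['warnings']
```

Keeping the brief as structured data rather than free text would let a governance committee compare briefs across vendors section by section.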

Proposed phases for AI clinical evaluation. Illustration used with permission from Scott D. Nelson.

Key Points:

New modalities of technology offer benefits but also new risks.

Opportunities exist to create common expectations for model approval and communication of evidence describing its safety, efficacy, and limitations.

The same critical evaluation performed on clinical trials applies to AI technology.

It is critical for users to understand how to interpret machine learning/AI-based studies using a method similar to that for evaluating clinical trials to establish a baseline, safely implement tools without unintended consequences, and have thoughtful explanations for the functionality of AI.

Models (including those approved by FDA) need to be regularly monitored and updated over time.
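One common way to operationalize this monitoring, for the form of drift in which the underlying data changes, is to compare the distribution of a model input in production with its distribution at validation. The sketch below uses the population stability index (PSI), a standard distribution-shift statistic. PSI was not named by the presenters, the 0.1 and 0.25 cutoffs are a widely used rule of thumb rather than a validated clinical standard, and the bins and counts are hypothetical.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as counts per bin.

    expected: counts observed when the model was validated (baseline)
    actual:   counts observed in recent production data, same bins
    """
    if len(expected) != len(actual) or not expected:
        raise ValueError("expected and actual must have the same nonzero number of bins")
    e_total, a_total = sum(expected), sum(actual)
    psi = 0.0
    for e, a in zip(expected, actual):
        # Floor proportions at eps so empty bins do not cause log(0) or division by zero.
        e_pct = max(e / e_total, eps)
        a_pct = max(a / a_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi


def drift_status(psi, warn=0.1, act=0.25):
    """Map a PSI value to a monitoring action using rule-of-thumb cutoffs."""
    if psi >= act:
        return "recalibrate"
    if psi >= warn:
        return "investigate"
    return "stable"


# Hypothetical serum creatinine bins (patient counts) at validation vs this quarter.
baseline = [400, 350, 150, 100]
recent = [250, 300, 250, 200]
psi = population_stability_index(baseline, recent)
print(round(psi, 3), drift_status(psi))  # prints: 0.199 investigate
```

Input drift is only one signal; monitoring would also track the model's accuracy against observed outcomes, since performance can degrade even when inputs look stable.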

Ready or not, the AI revolution is here

Day 2 of the Summit opened with a Pharmacy Futures Keynote Address presented by Mike Walsh, futurist, CEO of the global consultancy Tomorrow, and a leading authority on disruptive innovation and digital transformation. Walsh believes the most successful organizations in the 21st century will be those that leverage AI not just to cut costs or increase efficiency but to do something much more important: to transform experiences, not just for patients but for everyone. In his presentation, Walsh provided examples of cutting-edge technologies and described how AI is poised to turn transactions into experiences, shift products and services to platforms, and drive a move from apps to agents. He cautioned that the biggest potential mistake for today’s decision-makers is to underestimate “the true impact of what the next 10 years might look like.” Walsh offered the following advice to leaders in this time of accelerated change: (1) Automate and elevate: give staff the skills they need to elevate their work; (2) Don’t work, design work; (3) Embrace uncertainty; and (4) Be curious, not judgmental. The keynote session ended with an engaging question-and-answer discussion between Walsh and ASHP’s Daniel J. Cobaugh, PharmD, DABAT, FAACT, senior vice president, professional development and publishing office, and editor in chief of AJHP. The two discussed the legal and regulatory aspects of AI, bias and equity in the data used to train AI, education to prepare the healthcare workforce for an AI-influenced world, how to ensure AI is implemented in ways that add value to the healthcare enterprise, and how to avoid information overload in the pharmacy workplace.

Key Points:

AI is ultimately about people; the most important place to focus attention is on people: how we change, how we behave, and how we adopt new ways of thinking.

While AI will become adept at tasks traditionally performed by people, this shift opens new opportunities for the workforce.

Using people’s skills and capabilities to leverage AI and other technologies is probably going to be more important in healthcare than in any other industry.

Micromanaging technology isn’t an effective use of time; there are going to be things that we trust machines to do.

AI in pharmacy practice today: from vision to reality

Although the potential of AI has yet to be fully realized, AI applications are already being developed or deployed in health-system pharmacy, with leadership and workforce implications for both operational and clinical medication-use processes.15 A recent US Government Accountability Office report recognizes that these tools can improve patient treatment, reduce burden on providers, and increase resource efficiency at healthcare facilities, among other potential benefits.16 One concern voiced by the pharmacy workforce is that AI will replace jobs. While some tasks will likely be replaced, the number of jobs may also increase, although the required skill sets and roles may differ.17 Staff will need to be engaged through ongoing education, a clear definition of the technology’s proposed benefits, and assurance that individuals will be supported as new roles evolve. AI in healthcare is still evolving and is not likely to function, at least in the near term, without human intervention and oversight. LLMs can process vast amounts of data, but there is still potential for hallucination, drift, and bias. Even if the models are validated, the outputs may become less reliable over time as new data is absorbed into the model. For example, if an algorithm is built on patient information gathered at clinic visits, it will exclude patients who do not come for appointments and, over time, will become biased toward patients who are better able to access care. Therefore, successfully operationalizing AI in the pharmacy enterprise should include evaluating AI studies (internal and external findings), establishing processes for oversight and evaluation of AI technology (eg, an oversight committee of key stakeholders), applying core ethical principles to ensure AI and provider trustworthiness, and employing thoughtful implementation and follow-up review.
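The clinic-visit example can be checked before deployment by comparing how often each patient group appears in the training data with how often it appears in the population the model will serve. The Python sketch below is a minimal illustration under stated assumptions: the group labels, counts, and 0.8 threshold are hypothetical, and a real bias assessment would examine many more dimensions (including model performance within each group), not representation alone.

```python
def representation_ratios(training_counts, population_counts):
    """Ratio of each group's share in training data to its share in the target population.

    A ratio of 1.0 means proportional representation; below 1.0 means under-representation.
    """
    t_total = sum(training_counts.values())
    p_total = sum(population_counts.values())
    if t_total == 0 or p_total == 0:
        raise ValueError("counts must not be empty")
    ratios = {}
    for group, p_count in population_counts.items():
        if p_count == 0:
            continue  # group absent from the target population; nothing to compare
        t_share = training_counts.get(group, 0) / t_total
        ratios[group] = t_share / (p_count / p_total)
    return ratios


def underrepresented_groups(training_counts, population_counts, threshold=0.8):
    """Groups whose training share falls below threshold times their population share."""
    ratios = representation_ratios(training_counts, population_counts)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical counts: a model trained mostly on patients seen at clinic visits.
population = {"attends visits": 7000, "misses visits": 3000}
training = {"attends visits": 9000, "misses visits": 1000}
print(underrepresented_groups(training, population))  # ['misses visits']
```

Here patients who miss visits make up 30% of the population but only 10% of the training data, a ratio of one third, which is the kind of gap the oversight processes described above are meant to catch.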
AI models already developed include those that predict and detect adverse drug events, detect potential drug diversion, and provide clinical decision support. Providers are being educated using AI-generated case simulations, and patient-facing AI is being used to provide medication reminders and language translation.15 Summit presenters described their experience with AI applications currently used in their pharmacy operations to support pharmacy leadership and clinicians at the bedside.

Case studies of AI applications for pharmacy operations and leadership.

Abbasi, Michaels, and Benjamin J. Anderson, PharmD, MPH, FASHP, FMSHP, medication management informaticist, Mayo Clinic, presented 3 examples of how rules-based algorithms, machine learning, and AI are currently being developed or deployed in pharmacy operations for order verification, pharmacy spend forecasting, and patient safety enhancement. Clinical decision support for order verification trained by AI technology has the potential to affect patient care and pharmacy operations by providing consistent review of defined medications and by reducing burnout through a lower volume of orders requiring pharmacist verification. With greater efficiency, pharmacists can expand their reach as vital members of the care team and practice at the top of their demonstrated competency and scope of practice. Anderson described his organization’s experience with verification decision support (VDS) that incorporates the AI capability of electronic prospective medication order review (EPMOR), also referred to as “autoverification.” EPMOR is the prospective review of medication orders, prior to dispensing, by an automated computer system that applies predetermined VDS criteria. The proof-of-concept approach for the design and testing of an algorithm for EPMOR applying criteria-based VDS is described in a recently published study.17 The criteria are integrated into a clinically driven algorithm that determines which orders require pharmacist review and which carry minimal risk if review is automated. The technology proved highly reliable in the proof-of-concept phase. Thus far, the technology shows promise, but Anderson stressed that additional initiatives are needed to refine VDS criteria and to determine the best use cases for the technology. Michaels then described how his organization addressed a leadership need: predicting pharmacy drug spend more accurately.
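Criteria-based VDS of this kind can be pictured as a set of rules that either clears an order for automated verification or routes it to a pharmacist with the reasons attached. The Python sketch below illustrates that routing logic only. The drug names, dose ranges, alert levels, and age exclusion are invented placeholders, not the criteria used by Anderson's organization or in the published study, and they are not clinical guidance.

```python
from dataclasses import dataclass, field


@dataclass
class MedicationOrder:
    drug: str
    dose_mg: float
    patient_age_years: int
    alert_severities: list[str] = field(default_factory=list)  # alerts fired on the order


# Hypothetical VDS criteria: only these drugs are eligible, within these per-dose ranges (mg).
AUTOVERIFY_DOSE_RANGES = {
    "drug_a": (325.0, 1000.0),
    "drug_b": (50.0, 200.0),
}
BLOCKING_ALERTS = {"high", "critical"}  # hypothetical alert levels that force review
MIN_AGE_YEARS = 18  # hypothetical exclusion of pediatric patients


def route_order(order):
    """Return ('auto_verify', []) or ('pharmacist_review', [reasons])."""
    reasons = []
    dose_range = AUTOVERIFY_DOSE_RANGES.get(order.drug)
    if dose_range is None:
        reasons.append("drug not eligible for autoverification")
    elif not dose_range[0] <= order.dose_mg <= dose_range[1]:
        reasons.append("dose outside predefined range")
    if BLOCKING_ALERTS.intersection(order.alert_severities):
        reasons.append("high-severity alert present")
    if order.patient_age_years < MIN_AGE_YEARS:
        reasons.append("patient population excluded")
    return ("pharmacist_review", reasons) if reasons else ("auto_verify", [])


print(route_order(MedicationOrder("drug_a", 650.0, 54)))
# ('auto_verify', [])
print(route_order(MedicationOrder("drug_a", 2000.0, 54, ["high"])))
# ('pharmacist_review', ['dose outside predefined range', 'high-severity alert present'])
```

Returning the reasons, rather than a bare yes/no, keeps each routing decision auditable, which matters when criteria are being refined as Anderson described.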
They began by identifying all the factors that affect spend and found that it is driven by multiple variables, such as facility or service line changes, outside events (eg, COVID-19, payer changes), patient volume or disease state mix, and medication changes (eg, new drug approvals, new clinical guidelines, shortages). They recognized similarities to forecasting problems in other industries and realized they could apply the ARIMA (autoregressive integrated moving average) model, a time-series forecasting method, to predict pharmacy spend.18 Initial challenges included determining the best data to model and which inputs would produce the most reliable predictions. Through trial and error, they found that a combination of price type and therapeutic class produced the best correlation in their regression model.19 Lastly, Abbasi described two use cases in which voice-activated AI (ie, Amazon’s Echo Dot) was deployed to improve patient education and to shorten the time to bleeding reversal. To improve patient satisfaction with medication education, patient rooms are equipped with devices preloaded with drug information; if the requested information is not available, the pharmacist is notified and contacts the patient phone-to-device. The technology helps triage patient questions with the goal of improving patient satisfaction. In the second use case, aimed at improving pharmacy operations and response time, when a critical order (in this case, an order for an anticoagulant reversal agent) is entered into the EHR, the Echo Dot sends a verbal announcement to the pharmacy that an order requires urgent attention. Turnaround time improved by 75%. Given the success of these use cases, the team is exploring other uses for the Echo Dot technology. Abbasi suggested identifying opportunities for improvement and then working backward to ask how AI could provide a potential solution.
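The presenters did not share their model specification, so the following Python sketch shows only the general ARIMA idea on the smallest nontrivial case, ARIMA(1,1,0) with no constant: the spend series is differenced once (the "integrated" step), an autoregressive coefficient is fitted to the month-over-month changes by least squares, and forecasts are built by reversing the differencing. Forecasting each price type and therapeutic class segment separately and summing the results is an assumption about how the segmentation described above might be used. A production model would more likely rely on a statistics library (for example, the ARIMA class in statsmodels) with proper order selection and uncertainty intervals. The spend figures are invented.

```python
def fit_ar1_on_differences(series):
    """Estimate phi for ARIMA(1,1,0) with no constant by conditional least squares."""
    if len(series) < 3:
        raise ValueError("need at least 3 observations")
    diffs = [b - a for a, b in zip(series, series[1:])]  # first differences (d = 1)
    x, y = diffs[:-1], diffs[1:]  # regress each change on the previous change
    denom = sum(v * v for v in x)
    return sum(a * b for a, b in zip(x, y)) / denom if denom else 0.0


def forecast_arima_110(series, steps):
    """Point forecasts for the next `steps` periods."""
    phi = fit_ar1_on_differences(series)
    level = float(series[-1])
    diff = float(series[-1] - series[-2])
    forecasts = []
    for _ in range(steps):
        diff = phi * diff  # next change follows the AR(1) recursion
        level += diff  # integrate: add the change back to the last level
        forecasts.append(level)
    return forecasts


def forecast_total_spend(segments, steps):
    """Forecast each (price type, therapeutic class) segment separately, then sum."""
    totals = [0.0] * steps
    for history in segments.values():
        for i, value in enumerate(forecast_arima_110(history, steps)):
            totals[i] += value
    return totals


# Invented monthly spend (in $ thousands) for two hypothetical segments.
segments = {
    ("price type A", "class X"): [100, 110, 120, 130, 140],
    ("price type B", "class Y"): [50, 50, 50, 50],
}
print(forecast_total_spend(segments, steps=2))  # [200.0, 210.0]
```

A segment rising by a constant amount each month yields phi = 1, so its forecast extends the trend, while a flat segment yields a flat forecast. Real spend data, with shortages and new approvals, would need the richer inputs the presenters described.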

Case studies in AI applications for clinical pharmacy at the bedside.

During the Summit, Weihsuan Jenny Lo-Ciganic, PhD, MS, MSPharm, professor of medicine, University of Pittsburgh, along with Sikora and Ian Wong, MD, PhD, assistant professor, Duke University, presented a series of use-case scenarios for AI at the bedside, including a review of potential challenges and limitations for clinicians using AI technologies. Sikora stressed that AI models applied to clinical care must be critically evaluated and offered a series of questions to ask when evaluating AI studies (Box 5). She also noted that current common data models for drugs are limited and typically include only basic features (eg, drug, dose, route).20 She shared her experience studying the use of machine learning to predict adverse drug events in the highly complex intensive care unit setting and how the data taxonomy can affect the outcomes of the algorithms.21 Lo-Ciganic described her experience applying AI to precision opioid overdose prevention22,23 using predictive models among Medicare and Medicaid beneficiaries. Getting from model development to implementation involves many steps, including algorithm refinement, prototype design, mixed-methods evaluation (of acceptability, usability, and feasibility), refinement and integration, implementation (design and usability), and, lastly, external validation. Other important steps in the process include engaging stakeholders, addressing ethical concerns, assuring data quality and accuracy, communicating (risks and transparency), conducting rigorous evaluation (randomized trials and external validation), and planning for sustainability (infrastructure and interoperability). Wong emphasized that how people and processes interface with the technology is also a fundamentally important consideration. Finally, when implementing AI, it is important to consider levels of risk and the risk-benefit equation.
Generally, AI models can be grouped as low risk (ie, standard of care), medium risk (local clinical consensus), and high risk (data-derived models). Wong concluded the presentation by providing a framework for governance and examples of the importance of a collaborative, critical, and ongoing evaluation of AI models for use in patient care. He stated that the heart of governance is the assurance of technology trustworthiness. Core principles that support trust in AI include explainability, accountability and transparency, privacy and security, safety, equity, and usefulness. He emphasized the importance of a governance structure that includes relevant stakeholders and supports a thoughtful evaluation using a systematic approach that allows for moving forward while keeping everyone safe.


aUsed with permission from Andrea Sikora.


aUsed with permission from Andrea Sikora.
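Wong's three risk tiers could be written down as an explicit lookup so that every proposed model is assigned a tier, and therefore a review pathway, before implementation. In the Python sketch below, the tier assignments follow the presentation; the review steps attached to each tier are hypothetical examples of what a local governance committee might require, not Wong's framework.

```python
from enum import Enum


class EvidenceBasis(Enum):
    STANDARD_OF_CARE = "standard of care"
    LOCAL_CONSENSUS = "local clinical consensus"
    DATA_DERIVED = "data-derived model"


# Tier assignments as described in the presentation.
RISK_TIER = {
    EvidenceBasis.STANDARD_OF_CARE: "low",
    EvidenceBasis.LOCAL_CONSENSUS: "medium",
    EvidenceBasis.DATA_DERIVED: "high",
}

# Hypothetical review requirements; each tier adds to the one below it.
REVIEW_STEPS = {
    "low": ["clinical owner sign-off"],
    "medium": ["clinical owner sign-off", "interprofessional committee review"],
    "high": [
        "clinical owner sign-off",
        "interprofessional committee review",
        "local validation before go-live",
        "scheduled drift monitoring",
    ],
}


def review_plan(basis: EvidenceBasis) -> tuple[str, list[str]]:
    """Return the risk tier and required review steps for a model's evidence basis."""
    tier = RISK_TIER[basis]
    return tier, REVIEW_STEPS[tier]


tier, steps = review_plan(EvidenceBasis.DATA_DERIVED)
print(tier, len(steps))  # high 4
```

Making the mapping explicit means a model cannot reach patients without first being classified, which supports the systematic, stakeholder-driven evaluation Wong described.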

Key Points:

AI adoption is dependent on a complex combination of ethical, legal, and technological constraints that will continually evolve as AI and society progress.

There are multiple layers for thoughtful deployment and implementation of AI for safe, effective interventions.

Stakeholders and end users should be engaged from the earliest phases of implementation to maximize the clinical utility of AI tools.

The growth of AI is dependent on acceptance of and utilization by the healthcare industry and integration by the professions in practice settings.

AI has the potential to automate low-level tasks, which may change the roles of pharmacists and pharmacy technicians and require new skills.

The pharmacy workforce can shape and guide this impact through interaction with AI.

Developing a roadmap of AI for pharmacy: a Town Hall

Summit attendees from diverse practice settings took part in the Town Hall session, where they helped build a roadmap for safely incorporating AI into the medication-use process (Figures 6-9). This roadmap represents a distillation of Key Takeaways submitted by AI Summit participants, and the resulting recommendations address 6 domains: Society and Healthcare Safety, Patient Care, Health Systems, Pharmacy Profession, Pharmacy Enterprise, and Pharmacy Workforce. The adoption of AI in healthcare and by pharmacy is accelerating and will continue to evolve over time, but these actionable recommendations provide insights and direction to those in the pharmacy workforce who can influence the safe and effective integration of AI in medication-use processes. The roadmap should stimulate curiosity, discussion, and innovation about how AI can enhance the pharmacy profession and delivery of patient care.

Casey Olsen captures the discussion from Town Hall participants. Photo courtesy of ASHP.

Ben Michaels leads a breakout group discussion. Photo courtesy of ASHP.

Ben Anderson engages Town Hall participants in a breakout group discussion. Photo courtesy of ASHP.

ASHP’s Scott Anderson summarizes Key Takeaways from the Summit. Photo courtesy of ASHP.

Recommendations

Society and Healthcare Safety

Stakeholders should develop a national roadmap for AI in healthcare and a framework for implementation that places the patient at the center.

ASHP should advocate with other professional organizations and the federal government for the creation of a national advisory committee on the use of AI in patient care.

Payers should incentivize health systems to adopt AI tools that are shown to improve health outcomes.

Regulations and accreditation standards should address equitable patient access to AI innovations deemed to be the standard of care.

Regulatory bodies should require AI-based technology used in patient care to undergo a systematic, evidence-based review (with the same rigor as drug approvals) to ensure public and provider confidence in product safety and effectiveness.

Regulators and accreditors should require ongoing monitoring processes and cadence that ensure the AI technology is functioning as expected.

ASHP should develop model legislation and advocate to ensure AI tools related to medication-use processes are standardized, regulated, and equitable for health systems and patient care.

Patient Care

AI models should strive to close known gaps in patient care, with a focus on improved health outcomes.

Health systems should adopt a rigorous process for systematic review and approval of commercially available AI tools used in patient care.

AI should be leveraged to ensure that all patients, regardless of their demographics, have equal access to care (eg, language translations, tailored educational tools, vision and hearing assistance, culturally appropriate patient education).

Patient-facing AI products should address barriers to equity (eg, language, age, culture, access, vision/hearing).

Patient-facing AI should be designed to be individualized and personalized to enhance patient engagement.

ASHP should provide a forum to showcase successful adoption of AI in medication-use processes.

ASHP should advocate for patient rights related to the use of AI (eg, transparency on the use of their data, expectations for use, and informing patients of its use in their care).

Health Systems

Health systems should plan for allocation of resources required to support AI applications, including for data management infrastructure and integration, implementation, validation, and ongoing review.

Health-system governance processes need to recognize resources required for ongoing validation of AI tools, particularly for generative AI models with a high risk of drift or for high-risk applications.

Health systems should develop internal governance processes that address, at a minimum: structure and membership, patient rights, workforce education and training, and deployment of AI (evaluation, implementation, validation, and ongoing monitoring).

Health systems should train dedicated AI subject matter experts within the organization to guard against algorithm degradation (ie, data drift).

Health systems should include pharmacists on AI product design, evaluation, and implementation teams as subject matter experts to ensure data integrity and tool validation when applicable to medication-use processes.

Health-system leaders need to recognize and prepare for workforce implications in a future with AI (eg, training needs, subject matter experts, blending of workforce, shifting roles and organization structure).

Health systems should develop a standard framework to guide implementation of AI technology, including elements of internal and external validation and metrics for monitoring.

To achieve optimal outcomes, health systems should prioritize capability for integration between the electronic health record and AI solutions.

ASHP should facilitate conversations between the technology industry and health-system stakeholders to provide feedback on AI product development related to medication-use processes.

Health systems should advocate for vendor transparency regarding which data is used (and not used) to generate outputs (eg, clinical notes, treatment recommendations), as this information is critical to algorithm validation.

ASHP should advocate for collaboration between health systems and leading AI solution vendors to include pharmacists in the development of AI (prioritizing needs, informing the build, and validating outcomes), particularly for solutions related to medication use and related processes.

When health systems seek to implement vendor solutions or partner with vendors for AI development, pharmacists should be involved at all phases when medication-related data or processes are involved.

ASHP should identify best practices for validating AI used in healthcare delivery, specifically for those requiring integration of medication-related data.

Health systems should establish a peer-review process as part of AI validation.

Pharmacy Profession

Pharmacy profession leaders should participate in national collaboratives to develop guidance on the use of AI in healthcare.

The pharmacy profession should advocate for the development of AI applications that improve medication use as a priority.

With input from interprofessional stakeholders, pharmacy professional organizations should develop guidance for implementing AI into medication-use processes.

The pharmacy profession should define best practices on the use of AI to provide decision support for the diagnosis, treatment, prevention, cure, or mitigation of diseases or other conditions (often referred to as clinical decision support software).

The pharmacy profession should advocate for the development of standardized medication-related data elements and taxonomy for training AI models.

Pharmacy professional organizations should provide guidance on the responsible use of AI and management of risk, including professional liability.

ASHP should lead an effort with other pharmacy and accreditation organizations (eg, Institute for Safe Medication Practices, The Joint Commission) to create consensus guidance/standards that recognize the benefits and limitations of AI and address its safe, equitable, and ethical use in patient care.

ASHP should develop an AI task force of subject matter experts (including data scientists and prompt engineers) to inform guidelines and resource development.

ASHP should develop a toolkit on how to communicate with the C-suite about the strategic implications of AI for the health system and pharmacy enterprise.

ASHP should develop guidance and tools that assist health systems in evaluating commercially available AI tools used in pharmacy practice.

ASHP should develop guidance for health-system pharmacists and learners on the safe use of publicly available large language models (eg, ChatGPT and Microsoft’s Copilot).

To provide consistency of guidance and interpretation across states, ASHP should work with the National Association of Boards of Pharmacy to develop model regulations to safely enable the use of AI in pharmacy practice. This guidance should recognize needs for data privacy, security, and integrity; transparency; professional oversight; and ethical use in patient care.

ASHP should support research and initiatives that identify best practices and create awareness of where AI can enhance safe and effective medication use.

ASHP should support research to evaluate how AI may impact organizational leadership and human resources management.

ASHP should support the development and dissemination of strong business cases for the use of AI, including expanding the reach and impact of pharmacy services.

Pharmacy Enterprise

Pharmacy leaders and subject matter experts should collaborate to identify patient care gaps, needs, and opportunities with regard to how to best integrate AI into patient care.

Pharmacy leaders should consider AI in their strategic planning processes.

Pharmacy leaders should be proactive and involved with their health system’s AI implementation efforts and look for opportunities to align with other departments.

Pharmacy leaders should conduct a needs assessment to determine opportunities for AI within pharmacy, including where products exist and where new products would be beneficial. Examples include regulatory compliance review, staffing optimization, drug utilization projections, conducting research, processing prior authorizations, and diversion prevention.

Health-system leaders should proactively plan for the impact of AI on the workforce and identify the need for redistribution and retraining to support new and evolving roles of pharmacy practitioners that elevate care.

Pharmacy leaders should leverage AI as an opportunity to redeploy pharmacists to work at the top of their license and provide care to more patients with complex care needs or who are receiving complex therapies.

Health systems should support professional development and alternate career paths in AI for pharmacists and pharmacy technicians.

Pharmacy leaders need to be prepared to manage workforce concerns with AI implementation and the changes it may bring to traditional work processes.

Pharmacy leaders need to ensure that resources are allocated for ongoing support and evaluation of AI after implementation.

Pharmacy Workforce

Pharmacists should pursue ongoing education and training to ensure they understand how to effectively integrate AI technologies into their practice while maintaining patient safety and quality of care.

Pharmacists should be trained in evaluating AI, as with clinical trials, including methods (ie, model transparency), ethical concerns, internal and external validation results, limitations, and potential biases.

The pharmacy workforce should be stewards of AI technology by appropriately training and validating predictive models for safe and effective patient care.

Pharmacy workforce training and residency programs should address AI-related competencies including ethical considerations, implementation, validation, and application in practice.

Interprofessional education should be encouraged to accelerate the integration of AI technology in healthcare.

Pharmacists should disseminate their experiences with AI through case studies, publications, and continuing education programs.

Pharmacists should embrace AI as an opportunity to elevate clinical care and reach more patients.

Pharmacists should partner with nonpharmacist digital experts and thought leaders to bridge knowledge gaps.

State boards of pharmacy should expand regulations to enable the safe adoption of AI.

ASHP should develop a resource guide to showcase new roles for pharmacists and pharmacy technicians in AI.

ASHP should offer layered learning training programs to support pharmacy leaders involved in AI governance and use.

ASHP should provide a suite of educational opportunities (eg, webinars, meeting programming, certificate program) to build skills to ensure no member of the pharmacy workforce is left behind.

Conclusion

AI is a disruptive innovation in healthcare and has significant implications for patient care and the pharmacy profession, including ethical and equitable delivery of care, regulatory implications, and workforce transformation. Health-system leaders and pharmacy practitioners need to understand the governance and infrastructure requirements for safe, effective, and ethical deployment of AI in healthcare as well as navigate the complex combination of ethical, legal, and technological considerations that will continually evolve as AI and society progress. As the use of AI expands and becomes more integrated into medication-use processes, there will be a need for the pharmacy workforce to develop new expertise and prepare for new roles. AI is not going away, and the pharmacy profession has an opportunity and responsibility to shape the future.

Sunday, June 9

Summit Spotlight: Focus on Artificial Intelligence/The AI Revolution in Medicine: Focus on Pharmacy Practice

Harvey Castro, Chief Medical AI Officer for Helpp AI, Texas

Lisa Stump, MS, RPh, FASHP, SVP, Chief Information and Digital Transformation Officer, Yale New Haven Health System, Connecticut (Moderator)

Building the Base: Data for Yourself and Your Organization

Ghalib Abbasi, PharmD, MS, MBA, System Director, Pharmacy Informatics, Houston Methodist Hospital System, Texas

Benjamin R. Michaels, PharmD, 340B-ACE, Data Science Pharmacist, UCSF Health, California

Infrastructure Needs for Applying Artificial Intelligence to Clinical Pharmacy

Andrea Sikora, PharmD, MSCR, BCCCP, FCCM, FCCP, Clinical Associate Professor, University of Georgia College of Pharmacy, Georgia

Ethical Dimensions of AI in Pharmacy Practice, Part I: AI Behind the Counter (The Joseph A. Oddis Ethics Colloquium)

Scott D. Nelson, PharmD, MS, ACHIP, FAMIA, Associate Professor, Vanderbilt University Medical Center, Tennessee

Casey Olsen, PharmD, Informatics Pharmacy Manager – Inpatient, Advocate Health, Illinois

Kenneth A. Richman, PhD, Professor of Philosophy and Health Care Ethics, MCPHS University, Massachusetts

Ethical Dimensions of AI in Pharmacy Practice, Part II: AI and The Patient Experience (The Joseph A. Oddis Ethics Colloquium)

Scott D. Nelson, PharmD, MS, ACHIP, FAMIA, Associate Professor, Vanderbilt University Medical Center, Tennessee

Casey Olsen, PharmD, Informatics Pharmacy Manager – Inpatient, Advocate Health, Illinois

Kenneth A. Richman, PhD, Professor of Philosophy and Health Care Ethics, MCPHS University, Massachusetts

Between the Lines: Reading Package Inserts for AI Models

Scott D. Nelson, PharmD, MS, ACHIP, FAMIA, Associate Professor, Vanderbilt University Medical Center, Tennessee

Casey Olsen, PharmD, Informatics Pharmacy Manager – Inpatient, Advocate Health, Illinois

Monday, June 10

Pharmacy Futures Keynote Address: Ready or Not, the Artificial Intelligence Revolution is Here

Mike Walsh, CEO, Tomorrow, London, UK

Bottles to Bytes: Pharmacy Operations and Leadership AI Case Studies

Ghalib Abbasi, PharmD, MS, MBA, System Director, Pharmacy Informatics, Houston Methodist Hospital System, Texas

Benjamin J. Anderson, PharmD, MPH, FASHP, FMSHP, Medication Management Informaticist, Mayo Clinic, Minnesota

Benjamin R. Michaels, PharmD, 340B-ACE, Data Science Pharmacist, UCSF Health, California

Glimpses of AI at the Bedside: A Series of Use-Case Scenarios

Weihsuan Jenny Lo-Ciganic, PhD, MS, MSPharm, Professor of Medicine, University of Pittsburgh, Pennsylvania

Andrea Sikora, PharmD, MSCR, BCCCP, FCCM, FCCP, Clinical Associate Professor, University of Georgia College of Pharmacy, Georgia

Ian Wong, MD, PhD, Assistant Professor, Duke University, North Carolina

Developing a Roadmap of AI for Pharmacy: A Town Hall

Scott V. Anderson, PharmD, MS, CPHIMS, FASHP, FVSHP, Director, Member Relations, American Society of Health-System Pharmacists, Maryland

Harvey Castro, MD, Chief Medical AI Officer for Helpp AI, Texas

David Chen, RPh, MBA, Assistant Vice President for Pharmacy Leadership and Planning, American Society of Health-System Pharmacists, Maryland

Advisory Council Roster

Varintorn (Bank) Aramvareekul, BSPharm, RPh, CPEL, MHSA, MBA, DPLA, CPHIMS, CPHQ, CSSGB, PMP, SHIMSS, FACHE

VBA Consulting Group, North Bethesda, MD, USA

Benjamin R. Michaels, PharmD, ACE

University of California, San Francisco Medical Center, San Francisco, CA, USA

Scott D. Nelson, PharmD, MS, CPHIMS, FAMIA, ACHIP

Vanderbilt University Medical Center, Nashville, TN, USA

Casey Olsen, PharmD

Advocate Aurora Health, Downers Grove, IL, USA

Jared White, MS, MBA

University of California, San Francisco Medical Center, San Francisco, CA, USA

ASHP Staff

Scott V. Anderson, PharmD, MS, CPHIMS, FASHP, FVSHP

American Society of Health-System Pharmacists, Bethesda, MD, USA

David Chen, BS Pharm, MBA

American Society of Health-System Pharmacists, Bethesda, MD, USA

Daniel J. Cobaugh, PharmD, DABAT, FAACT

American Society of Health-System Pharmacists, Bethesda, MD, USA

Toni Fera, BS Pharm, PharmD

Contractor, Greater Pittsburgh Area, PA, USA

Georgia Galanou Luchen, PharmD

American Society of Health-System Pharmacists, Bethesda, MD, USA

Note: This article is based on proceedings from the Summit on Artificial Intelligence in Pharmacy Practice held as part of the inaugural ASHP Pharmacy Futures Meeting on June 9 and 10, 2024, in Portland, OR.

Acknowledgments

The authors wish to acknowledge and thank the ASHP National Meetings Education and ASHP Conference and Convention Division teams for their contribution to planning and executing the Summit.

Data availability

The data underlying this article will be shared on reasonable request to the corresponding author.

Disclosures

The authors have declared no potential conflicts of interest.

Footnotes

The ASHP Foundation is proud to sponsor The Joseph A. Oddis Ethics Colloquium. This series of case-based ethics workshops is designed to assist health-system pharmacists in addressing ethical challenges in pharmacy practice and patient care.
